>> David Wilson: So it's a pleasure to introduce... a Ph.D. student at UBC, graduated in 2008, and then...

advertisement

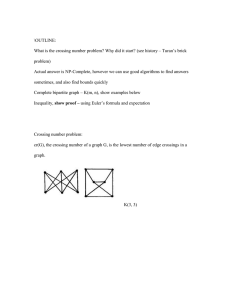

>> David Wilson: So it's a pleasure to introduce our next speaker, Ben Young. Ben was a Ph.D. student at UBC, graduated in 2008, and then went on to postdoctoral positions in various places: McGill, Stockholm and MSRI. And for the past two years he's been at the University of Oregon. He's going to speak about sampling from the Fomin-Kirillov distribution. >> Benjamin Young: Much of this is joint work with Andrew Holroyd here at Microsoft Research and Sara Billey at the University of Washington. In fact, this entire project began when I was a postdoc at MSRI and Andrew Holroyd was visiting for one of our workshops there. He asked me a good question about reduced words in the symmetric group. There are several probability distributions on reduced words in the symmetric group, one of which is the uniform distribution, which is already quite interesting. These are the random sorting networks that he's been working on for, I guess, nearly 10 years, perhaps a little longer. The second interesting distribution comes from an identity of Macdonald, a combinatorist, which came up in his study of Schubert polynomials. And then there's an interesting distribution interpolating between the two. Hopefully, at the end, I will tell you how you can draw a sample from this interpolating distribution. I'm afraid the extent to which this is a probability talk is somewhat limited; I'm a combinatorist. But I will try to highlight what probabilistic content I can find in what I have done as I go along. So let's begin. First of all, we're talking about the symmetric group, the group of permutations of N objects. Of course, it is generated by adjacent transpositions. So S1 is going to be the transposition which swaps item one and item two, and so forth, all the way down to the simple transposition S_(N-1), which swaps items N minus 1 and N. And I'm going to represent them as crosses like this. 
I just had an idea: maybe I can turn off the grid of my graph paper, and then everything will become clearer. Maybe only some things will become clearer. I'm going to leave it the way it is rather than try to be clever. Now, what you're generally used to with the symmetric group is simply knowing that any permutation can be represented as some product of such transpositions. I'm actually interested in the number of different ways in which this can be done, and furthermore in the properties of such a representation of a permutation by adjacent transpositions. Here are a couple. Here are three transpositions, S1, S2 and S1, and what they achieve is moving the first element down to the end and the last element up to the top, keeping the second element fixed. Here is another one that does the same thing; these are two different reduced words. So maybe I should actually define reduced words. I need some way of representing such a thing other than the diagram, and the usual thing to do is simply to write down, in order, the indices of the elementary transpositions you are using. So this is a list of numbers, not an element of the symmetric group: numbers from 1 to N minus 1. Now, the word "reduced" needs a little bit of explanation. These are two ways to represent the complete reversal, the permutation which swaps every pair of numbers. Of course you could also do it in many other ways, for example by adding four more copies of this transposition on the end; they are redundant because consecutive copies swap the same thing and cancel in pairs. I don't want those. I only want the ones which are reduced. In particular, the number of crossings in the picture should be the same as the number of inversions in the permutation, the number of pairs of things which are out of order. 
That's what the word reduced means here. The permutation sending 1, 2, 3 to 3, 2, 1 has three inversions, and here are three crossings in a word producing it. These are the basic data structure I'm talking about. In the particular case of the permutation which sends 1 to N, 2 to N minus 1, and so forth, all the way down to sending N to 1, the reduced words are called sorting networks. Here's a sorting network for the permutation 4, 3, 2, 1. There are 15 others. You call them sorting networks because you can view each one as a rather poor sorting algorithm: imagine putting the numbers in out of order to begin with, walking them through the wires, and at each crossing always sending the smaller element up and the larger element down. Then they'll end up in order at the other end, because every two have been compared. It's the same criterion. So the question is, how many of these are there? As a brief review, this was originally computed by Stanley, as far as I'm aware in 1984. Take the permutation of maximal length, measured in inversions, and call that length K. It's N choose 2, because everything is out of order; every pair is an inversion. Then the number of reduced words for that permutation (I've written it like this to suggest something I'm going to say later) is a famous number. It is K factorial over a certain product of odd numbers, 1^(N-1) times 3^(N-2) and so on up to (2N-3)^1. This is also particularly famous if there are representation theorists in the room: it is the number of standard Young tableaux of a certain shape, an object that comes up in representation theory. I don't know why that's happened. All right. Now what we're going to do is ask for a bijective proof of this. So put on your combinatorist hats for a moment. Here I have two different counts of things. 
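To make the count concrete, here is a small Python sketch (our own illustration, not code from the talk) that enumerates reduced words by brute force and compares the number of sorting networks with Stanley's formula K! / (1^(N-1) 3^(N-2) ... (2N-3)^1). The function names are hypothetical, and the convention that letter i swaps positions i and i+1 is an assumption; brute force is only practical for small N.

```python
from math import factorial


def reduced_words(perm):
    """All reduced words of `perm` (one-line notation, values 1..N).

    Letter i stands for the adjacent transposition swapping positions i and i+1.
    A word can end in letter i exactly when perm has a descent at position i.
    """
    perm = tuple(perm)
    if all(perm[j] < perm[j + 1] for j in range(len(perm) - 1)):
        return [[]]  # the identity has only the empty word
    words = []
    for j in range(len(perm) - 1):
        if perm[j] > perm[j + 1]:  # descent: undo the last crossing
            shorter = list(perm)
            shorter[j], shorter[j + 1] = shorter[j + 1], shorter[j]
            words.extend(w + [j + 1] for w in reduced_words(shorter))
    return words


def stanley_count(n):
    """Stanley's formula for the number of sorting networks on n wires."""
    k = n * (n - 1) // 2
    denominator = 1
    for i in range(1, n):
        denominator *= (2 * i - 1) ** (n - i)
    return factorial(k) // denominator


for n in range(2, 6):
    reversal = tuple(range(n, 0, -1))
    print(n, len(reduced_words(reversal)), stanley_count(n))
```

Both columns should agree: 16 for N = 4 (the sorting network shown plus its 15 siblings) and 768 for N = 5.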
Here I have a count of reduced words, and, trust me, this is a count of standard Young tableaux of staircase shape. You write the numbers 1 through K inside a staircase-shaped grid in such a way that 1 goes in the corner and the numbers increase in both directions. And again, there are this many ways to do that. I'm worried that I have Ks and Ns in that formula; I suspect they're all supposed to be Ks or something along those lines. I'll check that later. So there should be a way of transforming a standard Young tableau into a reduced word like this, and indeed there is. It's due to Edelman and Greene, I believe in 1987, and the proof is very similar to the Robinson-Schensted algorithm, which solves a similar problem in combinatorial representation theory. You know that for any finite group, the sum of the squares of the degrees of the irreducible characters is the size of the group. In the case of the symmetric group, this says that N factorial is the sum of the squares of the dimensions of the irreducible representations, and each of those dimensions counts the standard Young tableaux of a certain shape. So there should be a way of taking two standard Young tableaux of the same shape and turning them into a permutation, and vice versa. That's precisely what the Robinson-Schensted algorithm does. This very first bijective proof is a variant of that algorithm. It's an insertion algorithm: you take the letters of the word, the crossings, out one at a time and insert them into a tableau, where they get shunted around, and you get a tableau out. Well, you get two tableaux out, but one of them is always the same, so it can be discarded. And then there was a second bijective proof of the same fact, due to David Little. I wrote 1996; I don't actually have the faintest idea whether that date is right or whether I've just made it up. 
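A minimal sketch of Edelman-Greene insertion, assuming the standard rule as we understand it: it is Schensted row insertion, except that when x is inserted into a row already containing both x and x+1, the row is left unchanged and x+1 moves on to the next row. The helper `reduced_words` and all names here are hypothetical illustrations, not the speaker's code. For the reversal permutation the insertion tableau P should always be the same, so the recording tableau Q alone carries the information.

```python
def reduced_words(perm):
    """All reduced words of `perm` in one-line notation (letter i swaps positions i, i+1)."""
    perm = tuple(perm)
    if all(perm[j] < perm[j + 1] for j in range(len(perm) - 1)):
        return [[]]
    words = []
    for j in range(len(perm) - 1):
        if perm[j] > perm[j + 1]:
            shorter = list(perm)
            shorter[j], shorter[j + 1] = shorter[j + 1], shorter[j]
            words.extend(w + [j + 1] for w in reduced_words(shorter))
    return words


def eg_insert(P, Q, x, step):
    """Insert letter x into insertion tableau P; record `step` in Q at the new cell."""
    row = 0
    while True:
        if row == len(P):                    # ran off the bottom: start a new row
            P.append([x])
            Q.append([step])
            return
        current = P[row]
        larger = [j for j, y in enumerate(current) if y > x]
        if not larger:                       # x exceeds the whole row: append it
            current.append(x)
            Q[row].append(step)
            return
        j = larger[0]
        y = current[j]
        if y == x + 1 and x in current:      # Edelman-Greene rule: row unchanged,
            x = x + 1                        # and x+1 moves on to the next row
        else:                                # ordinary Schensted bump
            current[j], x = x, y
        row += 1


def edelman_greene(word):
    """Return (insertion tableau P, recording tableau Q) for a reduced word."""
    P, Q = [], []
    for step, x in enumerate(word, start=1):
        eg_insert(P, Q, x, step)
    return P, Q


for word in reduced_words((3, 2, 1)):
    print(word, edelman_greene(word))
```

For N = 3 both reduced words give P = [[1, 2], [2]], with recording tableaux [[1, 2], [3]] and [[1, 3], [2]], the two standard Young tableaux of the staircase shape.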
But I'll have to fact-check that as well. This is the one which I will show you, because it's going to come up in my work, and I'll show it to you in just a second. A little bit later came the 2006 paper of Omer Angel, Alexander Holroyd, Dan Romik and Balint Virag, a very careful study of large random sorting networks. They realized that the Edelman-Greene algorithm is very efficient to implement on a computer, so you can just generate some really big sorting networks and look at them. You see some remarkable patterns in these random sorting networks. Again, I'll show you a couple of pictures which I've pulled from their paper in a little bit. So that's sort of the beginning of this field. Now I want to show you David Little's bumping algorithm. I don't really have the time to prove that it works, and I've never actually tried this without a fully functioning laptop, so let's just hope this works. Here is a reduced word. David Little's algorithm is going to transform one of these into a standard Young tableau. You'll notice my program has decorated this reduced word with large red arrows. Those large red arrows mark crossings which can be deleted while still leaving a reduced word. Here, let me try to delete one. I deleted that, and this word is still reduced: the permutation 3, 4, 2, 1 has five inversions. 4, 2, for example: those elements are out of order, so that's an inversion. There are four others, five in all, and there are five crossings in this word. Now I'm going to put that crossing back. This is the part I'm not sure will work. Good. Okay. So this is a reduced word. Independently of that, we also say it is nearly reduced at each of these red places: if I delete that crossing, what is left is still a reduced word. So instead of deleting, what I can do is take this crossing and shove it over like that. 
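Here is a small Python sketch of the two checks just used: counting inversions to decide whether a word is reduced, and listing the places where it is nearly reduced. The word [2, 1, 3, 2, 3] is a made-up reduced word for 3, 4, 2, 1 (the word on the speaker's screen is not recorded), and the function names are hypothetical.

```python
def wire_arrangement(word):
    """Wire labels by height after applying every crossing (crossing a swaps heights a, a+1)."""
    lo, hi = min(word), max(word) + 1
    wires = list(range(lo, hi + 1))
    for a in word:
        wires[a - lo], wires[a - lo + 1] = wires[a - lo + 1], wires[a - lo]
    return wires


def inversions(p):
    """Number of pairs that are out of order."""
    return sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])


def is_reduced(word):
    """A word is reduced when its number of crossings equals the inversion count."""
    return not word or inversions(wire_arrangement(word)) == len(word)


def nearly_reduced_positions(word):
    """Positions t (0-indexed) where deleting crossing t leaves a reduced word."""
    return [t for t in range(len(word)) if is_reduced(word[:t] + word[t + 1:])]


word = [2, 1, 3, 2, 3]  # hypothetical reduced word for 3, 4, 2, 1
print(wire_arrangement(word), inversions(wire_arrangement(word)))  # [3, 4, 2, 1] 5
print(nearly_reduced_positions(word))  # [0, 2, 4]
```

Positions 0, 2 and 4 play the role of the red arrows: deleting either of the other two crossings produces a pair of crossings that cancel.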
Now, sometimes when you do this, you don't get a reduced word. Let me push it down there, and make this a little bit bigger. If I do get a reduced word, I stop, and I've completed the algorithm, the Little bump. If it isn't reduced, it is a theorem that there is exactly one other place, conflicting with the crossing I've just moved, at which the word is nearly reduced as well. In that case, I take that crossing and push it over in the same direction, down. Sometimes you'll push a crossing off the bottom or off the top of the word, in which case you add another wire for it to be on. So that is one instance of the Little bump. This is a reversible operation: I can bump up using exactly the same rules, starting with the crossing I stopped at, and get back to where I started. So this is going to be some sort of bijection between classes of reduced words and other classes of reduced words. If you do the bumping in a clever way, you can reach a special kind of word; I'm going to do it in an ad hoc rather than a clever way and just see if I can get there. There. Okay. So there's a certain sequence of bumps which leaves the word in the following state. For one thing, there is only one descent: the numbers go 2, 4, 6, and then there is a descent, since 1 is less than 6, and then 1, 3, 5. That characterizes this sort of word; it's called a Grassmannian word, for various esoteric reasons. Moreover, the wires are monotone. You'll notice they're either going down, these three, or going up, such as wire 6, wire 4 and wire 2. Normally I would do this on the computer, but my computer's not behaving. So here is what you do with the wires. The wires that are monotone down are going to represent rows in my standard tableau, and the wires that go up are going to represent columns. Reading this word backwards, you can translate it into a tableau as follows. These are crossings 1, 2, 3, 4, 5 and 6. 
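A minimal Python sketch of the Little bump as described: push the chosen crossing by one; if the word is not reduced, find the unique other crossing where it is nearly reduced and push that one in the same direction. The sign convention (`delta` = -1 or +1, and which of these counts as "down" on the screen) is our assumption, as are all the names. A letter below 1 means a crossing has been pushed past the top wire; `relabel` adds wires so the letters start at 1 again.

```python
def wire_arrangement(word):
    lo, hi = min(word), max(word) + 1
    wires = list(range(lo, hi + 1))
    for a in word:  # crossing a swaps the wires at heights a and a+1
        wires[a - lo], wires[a - lo + 1] = wires[a - lo + 1], wires[a - lo]
    return wires


def is_reduced(word):
    if not word:
        return True
    p = wire_arrangement(word)
    inv = sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])
    return inv == len(word)


def little_bump(word, t, delta):
    """Push crossing t by `delta` until the word is reduced.

    Assumes `word` is nearly reduced at t. Returns the new word and the
    position of the last crossing pushed (where the reverse bump starts).
    """
    word = list(word)
    while True:
        word[t] += delta
        if is_reduced(word):
            return word, t
        # Not reduced: exactly one other crossing conflicts with the moved one.
        others = [s for s in range(len(word))
                  if s != t and is_reduced(word[:s] + word[s + 1:])]
        assert len(others) == 1, "expected a unique conflicting crossing"
        t = others[0]


def relabel(word):
    """Add wires at the top if a crossing was pushed off it (letters below 1)."""
    shift = max(0, 1 - min(word))
    return [a + shift for a in word]


new, stop = little_bump([2, 1, 2], 0, -1)
print(new, stop, relabel(new))      # [1, 0, 2] 1 [2, 1, 3]
print(little_bump(new, stop, +1))   # ([2, 1, 2], 0): bumping back reverses it
```

The second call illustrates the reversibility mentioned above: starting from the crossing where the first bump stopped and pushing the other way recovers the original word.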
On the first row I see crossings 1, 2 and 3. On the second row I see crossings 4 and 6. And in the very last one I see crossing 5. You'll notice that these numbers are increasing in both directions. Conversely, if I take an array of numbers like this, I can turn it back into a Grassmannian word in a transparent way, then shunt all of the crossings back up and, if I remember to do this right, have a reduced word for 4, 3, 2, 1. That's how the Little bijection works, and that's how you see these two sets are equinumerous. The other thing to note is that it's really fast to sample one of these. There's an algorithm due to Greene, Nijenhuis and Wilf which lets you basically just write down a random standard Young tableau in your computer as fast as the computer can put numbers into the grid. So you can generate a very large sorting network this way, although I think the Edelman-Greene algorithm is even a little bit faster than this one. Okay. So I think I've managed to define the terms nearly reduced, bump and Grassmannian. I don't think I defined push, but that is simply the operation of taking one crossing and moving it over by one; it's one step in the algorithm I've described. Okay. So that's one of the combinatorial objects I want to talk about in this talk. The other one, which is going to come up, is pipe dreams, which are these things. A pipe dream looks very much like a reduced word, although it is a little bit different. For one thing, wire 1 is shorter than wire 4 here. All of the crossings are forced to be in a square grid, and in each cell I either have a plus-shaped crossing or two wires which turn away from each other like this. This pattern occurs in all of these cells, and if you like to imagine filling the rest of this quadrant with more cells, then you just have straight lines below this. Why do we care about these? It's a little bit less obvious. 
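The sampling step can be sketched with the Greene-Nijenhuis-Wilf hook walk: to place the largest remaining number, start at a uniformly random cell and keep jumping to a uniformly random other cell of the current hook until you reach a corner. This is a minimal Python sketch under our own naming; turning the sampled tableau into a sorting network would additionally need the inverse of Edelman-Greene or Little's bijection, which is not shown.

```python
import random


def hook_walk_tableau(shape, rng):
    """Uniform random standard Young tableau of `shape` (a partition) via hook walks."""
    rows = list(shape)                     # row lengths of the cells still empty
    tableau = [[0] * r for r in shape]
    for m in range(sum(shape), 0, -1):     # place the largest remaining number
        cells = [(i, j) for i, r in enumerate(rows) for j in range(r)]
        i, j = rng.choice(cells)
        while True:
            arm = rows[i] - j - 1                         # cells to the right
            leg = sum(1 for r in rows[i + 1:] if r > j)   # cells below
            if arm + leg == 0:                            # reached a corner
                break
            step = rng.randrange(arm + leg)               # uniform cell of the hook
            if step < arm:
                j += 1 + step
            else:
                i += 1 + (step - arm)
        tableau[i][j] = m
        rows[i] -= 1                       # remove the corner just filled
    return tableau


def is_standard(tableau):
    """Rows increase left to right and columns increase top to bottom."""
    rows_ok = all(r[j] < r[j + 1] for r in tableau for j in range(len(r) - 1))
    cols_ok = all(tableau[i][j] < tableau[i + 1][j]
                  for i in range(len(tableau) - 1) for j in range(len(tableau[i + 1])))
    return rows_ok and cols_ok


rng = random.Random(0)
print(hook_walk_tableau((3, 2, 1), rng))
```

The staircase shape (3, 2, 1) corresponds to sorting networks on four wires; larger staircases work the same way.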
But these are of interest in the theory of Schubert polynomials, which is inherited from the Schubert calculus of algebraic geometry. Rather than getting into why we care about these, let me just say that the Schubert polynomial is a certain sum over pipe dreams. You write down all the pipe dreams realizing a permutation. This one is -- I may have replaced something with its inverse. Let's just say that this is the permutation 1, 4, 3, 2; I think that's exactly backwards. The Schubert polynomial is what you get when you record the row in which each crossing appears, in the variable X, giving a polynomial in many variables X. I don't really care too much about the fine details of that at present, because what I want to do is state the Macdonald identity. This is what Andrew brought to my attention at MSRI in 2012. Take the following sum over reduced words for a permutation: instead of summing the number one, that is, just counting them, we do a weighted count of reduced words. The weight is the following: if these crossings appear in my reduced word, I take the product of these numbers. This isn't concatenation; this is actually multiplying numbers together. That is going to be the weight of my word. And it turns out that if you add up all of these weights, the answer is K factorial times the Schubert polynomial evaluated with every variable set to 1. You can forget the weighting with the Xs that I described; I just want to count pipe dreams, so this is the number of pipe dreams realizing a particular permutation. And actually a lot of the time this number is one: there's only one pipe dream. The long permutation is one such case, and then the right-hand side is just K factorial. >>: [Indiscernible]. >> Benjamin Young: N minus 1, N minus 2. 
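In symbols, the identity just described reads as follows, writing R(w) for the set of reduced words of w, k for its length (the number of inversions), and 𝔖_w for its Schubert polynomial (the notation is ours, not the speaker's slide):

$$\sum_{a \in R(w)} a_1 a_2 \cdots a_k \;=\; k!\,\mathfrak{S}_w(1, 1, \ldots, 1).$$

For the reversal of three elements, R(w) = {121, 212}, and the left side is 1·2·1 + 2·1·2 = 6 = 3!, matching its single pipe dream.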
All the way down to 1, for sorting networks. So what do you want to do? Ideally, you want to give a bijective proof of this. Basically by complete fluke, in some sense, I worked out a way to start doing a bijective proof of this, because I was thinking a lot about David Little's algorithm for other reasons, and I'll show you a little bit about how that goes. This is precisely the special case I just mentioned, where the Schubert polynomial evaluates to one, so it does cover the case of sorting networks. Such permutations are called dominant permutations. My preprint on the arXiv covers that case. In some ways it is the cleanest example, because it gives you not only a sampling algorithm but in fact a Markov growth rule for these reduced words under this probability distribution. I guess I haven't even said what the distribution is. Imagine that number is 1. Take the K factorial across to the other side: 1 over K factorial times the sum of these weights is equal to 1. So there is a probability distribution on reduced words in which the probability of selecting each reduced word is proportional to its weight, and the constant of proportionality is 1 over K factorial. That is the distribution from which I want to sample. I want to know what a large reduced word drawn from this distribution looks like, and so forth, and it turns out that the way to do it is by growing a reduced word. You start with the reduced word with no crossings in it, and you put the crossings in one at a time, adding inversions one at a time, until you end up with the permutation that you wanted. This is very much reminiscent of (coincidentally, I think) Andrew's way of growing his finitely dependent four-coloring by sticking in new colors one at a time and spreading things out. A similar kind of growth. 
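For small cases the Macdonald distribution can simply be written down by enumeration, which gives something to check a smarter sampler against. A brute-force Python sketch (hypothetical names; exponential in the size of the permutation, so only for a handful of wires): each reduced word gets probability equal to the product of its letters divided by K factorial, which for a dominant permutation equals the total weight.

```python
import random
from fractions import Fraction
from math import prod


def reduced_words(perm):
    """All reduced words of `perm` in one-line notation (letter i swaps positions i, i+1)."""
    perm = tuple(perm)
    if all(perm[j] < perm[j + 1] for j in range(len(perm) - 1)):
        return [[]]
    words = []
    for j in range(len(perm) - 1):
        if perm[j] > perm[j + 1]:
            shorter = list(perm)
            shorter[j], shorter[j + 1] = shorter[j + 1], shorter[j]
            words.extend(w + [j + 1] for w in reduced_words(shorter))
    return words


def macdonald_distribution(perm):
    """Exact probabilities, proportional to the product of the letters of each word."""
    words = reduced_words(perm)
    weights = [prod(w) for w in words]
    total = sum(weights)  # equals K! for a dominant permutation
    return {tuple(w): Fraction(wt, total) for w, wt in zip(words, weights)}


def sample_macdonald(perm, rng):
    """Brute-force sampler: enumerate everything, then draw one word by weight."""
    dist = macdonald_distribution(perm)
    words = list(dist)
    return rng.choices(words, weights=[float(dist[w]) for w in words])[0]


print(macdonald_distribution((3, 2, 1)))
print(sample_macdonald((4, 3, 2, 1), random.Random(1)))
```

For N = 3 the word 121 has weight 2 and 212 has weight 4, so they get probabilities 1/3 and 2/3.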
Then Andrew, Sara Billey and I came up with a bijective proof for the general case of this Macdonald identity, although it is no longer easily interpretable as a growth rule. Of course, when you have a computationally efficient bijection and can sample the things on one side of it, then you can sample the things on the other side by pushing them through the bijection. I don't actually know how to sample a pipe dream in general, but there's an interesting case in which I do, so hopefully we'll get to that by the end of the talk. I briefly want to say how the growth rule works, because it appears in the title despite being only a special case. You do the following. You pick a standard Young tableau, say that one, but this time for a different reason: it is going to tell you in what order I do the insertions, that is, along which wires. The algorithm is the following. I take the standard Young tableau and see in which row each number occurs. In this case the numbers 1, 2, 3, 4, 5, 6 occur in row 1, row 2, row 1, row 3, row 2, row 1. Those are going to be numbers of wires in my reduced word. And I'm going to repeatedly do the following: insert a crossing somewhere on that wire, and then immediately push it down until I get a reduced word. Okay, the first one is boring; there are no crossings yet. I stick a crossing in so that its feet are on top of wire 1, and I push it down. Now I have the only reduced word for the permutation 2, 1. It has one crossing, it just swaps, and that's not interesting. Now I've got a reduced word, and I find the next wire to look at. In this case it is 2. Somewhere along wire 2, I insert a crossing and push it down. Why does that make sense? 
Well, when I've just inserted a crossing, the word is nearly reduced there, because I had a reduced word before the crossing was there. It's really elementary. I can do a few instances of it. Let me just kill a bunch of these crossings. Let's say I've inserted crossings along wires 1, 2 and 1 here. Now I'm going to insert a crossing somewhere along wire 3. I'll just do this sort of at random; maybe I'll insert it in the middle here. Please work. There. Okay. So I've just inserted a crossing on wire 3. Of course, the word is now not necessarily reduced, but I immediately shove the new crossing down until I've got a reduced word. My instructions say to insert a crossing on wire 2, so I do that and shove it down, and then a crossing on wire 1 somewhere, maybe up here. This gives me a wire 0. That's okay; I just ignore that. This is reduced. Now you see that, without paying attention to it at all, I have a reduced word for 4, 3, 2, 1, which is what I was aiming for. This is a sorting network, and this process generated it. It's straightforward, if not completely elementary, to see that this process produces exactly the probability distribution I was describing. Just to convince you that it is doing the right thing, you construct a graph. I guess I can zoom out a little bit and get this whole thing on the screen. There. So here are all the ways that I could have done this insertion using this pattern of wires; I stopped about halfway through, with a different reduced word. If you count paths in this graph, you will see there are six paths which arrive at this reduced word, six which arrive there and 12 which arrive there. Moreover, these are also the Macdonald weights of these words. This one, for example, has crossings in positions 1, 3, 2 and 1, and 1 times 3 times 2 times 1 is in fact 6. 
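Here is a Python sketch of the growth rule as we read it from the demonstration (our interpretation, not the speaker's code): the new crossing for wire r goes directly above wherever wire r currently sits at the chosen time slot, and is then pushed down by a Little bump, with "down" meaning increasing letters (wire 1 drawn at the top). Names such as `insert_on_wire` and `path_counts` are hypothetical. `grow` is the simple random walk; `path_counts` enumerates the whole graph, which is only feasible for tiny examples.

```python
import random
from collections import Counter
from math import prod


def wire_arrangement(word):
    lo, hi = min(word), max(word) + 1
    wires = list(range(lo, hi + 1))
    for a in word:  # crossing a swaps the wires at heights a and a+1
        wires[a - lo], wires[a - lo + 1] = wires[a - lo + 1], wires[a - lo]
    return wires


def is_reduced(word):
    if not word:
        return True
    p = wire_arrangement(word)
    inv = sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])
    return inv == len(word)


def push_down(word, t):
    """Little bump that increases letters (moves crossings down the page)."""
    word = list(word)
    while True:
        word[t] += 1
        if is_reduced(word):
            return word
        others = [s for s in range(len(word))
                  if s != t and is_reduced(word[:s] + word[s + 1:])]
        assert len(others) == 1, "expected a unique conflicting crossing"
        t = others[0]


def insert_on_wire(word, r, p):
    """Insert a crossing just above wire r at time slot p, then push it down."""
    top = max(word + [r]) + 1
    at = list(range(top + 1))          # at[h] = label of the wire at height h
    for a in word[:p]:
        at[a], at[a + 1] = at[a + 1], at[a]
    h = at.index(r)
    return push_down(word[:p] + [h - 1] + word[p:], p)


def grow(wire_sequence, rng):
    """Markov growth: each new crossing goes into a uniformly random time slot."""
    word = []
    for r in wire_sequence:
        word = insert_on_wire(word, r, rng.randrange(len(word) + 1))
    return word


def path_counts(wire_sequence):
    """Exact number of insertion paths reaching each word."""
    level = Counter({(): 1})
    for r in wire_sequence:
        nxt = Counter()
        for w, c in level.items():
            for p in range(len(w) + 1):
                nxt[tuple(insert_on_wire(list(w), r, p))] += c
        level = nxt
    return level


print(path_counts([1, 2, 1, 3]))
print(grow([1, 2, 1, 3, 2, 1], random.Random(0)))
```

With the wire sequence 1, 2, 1, 3 read off the tableau, `path_counts` gives 6, 6 and 12 paths to the words 1321, 3121 and 3212, which are their Macdonald weights, as in the graph shown in the talk. Because every word at a given level has the same number of outgoing choices, `grow` reaches each word with probability proportional to that count.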
So the Macdonald identity that I showed you is really counting paths in this graph. Here, 12, for example: 3 times 2 times 1 times 2 is indeed 12, but it's also the number of ways I could follow red arrows and get to this diagram. And the total number of paths in this graph is K factorial, because at each level I'm inserting a new crossing into a word with, say, three crossings in it, and there are four ways to do that. It doesn't matter where the crossings are; I'm just putting the new crossing somewhere along that wire. So there is one choice here, two choices there, three choices here, four choices here, and so forth, and the number of paths is K factorial. In this case, six plus six plus 12 is 24, which is four factorial. Moreover, because of this we get the Markov growth rule, and it's really as simple as you might like. If I want to sample a reduced word from this distribution, I don't need to construct the whole graph; that would take an exponential amount of space and time. Instead, I can just do simple random walk in this graph: I randomly insert crossings. In general, you shouldn't expect simple random walk to give you the same probability measure as the uniform measure on paths in a directed graph; that's false. But in this case, the out-degree at each rank is constant, so those two measures do coincide. So this gives you a way of growing a reduced word. I have some simulations now, if I recall. These are some wires in a big random sorting network; these pictures are not the same size. This is a uniform random sorting network, screenshotted out of Andrew and Omer's paper. There are 2,000 things being permuted here. If you drew all 2,000 wires, you would get a black square, so they have not drawn all the wires; only some of them are shown. And the paths that these wires take are interesting, I think. 
They're sort of sinusoidally shaped. >>: Conjecturally. >> Benjamin Young: Conjecturally. Well, these ones certainly are, that's a fair statement, but this always seems to happen. Here is -- I visited here over the summer, and Andrew and I wrote a pretty efficient implementation of the algorithm I just described, and this is one of size 600. Once again, you see a similar phenomenon, although it's by no means the same. I don't really know what shapes these trajectories are; it's not quite as nice a situation as in the uniform case. Nonetheless, at least you can generate large ones, and it should be possible to formulate some conjectures about them. Here the black background is absent, and this shows where the actual crossings are in the sorting network. You can see that there's some sort of smooth density to which they are presumably tending, and it would be very interesting to know what that density is, but again, I don't know that yet. I haven't really tried to do that part of the analysis, or not in a serious way, so I don't know what conjecture to make. The other thing you can do, since these are sorting networks and they're for sorting things, is see what one looks like once it's halfway done: take the product of the first half of the transpositions in the sorting network. If you do this with a uniform sorting network, you get a picture like this. It looks like a uniform point cloud on a sphere, projected orthogonally onto a plane. Again, this is conjectural. We really don't know how to prove this, and there are many other such conjectures about limiting properties of these sorting networks. This is the same operation on the sorting network I just showed you. 
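The halfway picture is just a permutation matrix, so producing one from any sampled network is short. A minimal Python sketch with hypothetical names; it returns (position, wire label) points that could be handed to any plotting library.

```python
def partial_product(word, n, steps):
    """Arrangement of the n wires after the first `steps` crossings of `word`."""
    wires = list(range(1, n + 1))
    for a in word[:steps]:  # crossing a swaps the wires in positions a and a+1
        wires[a - 1], wires[a] = wires[a], wires[a - 1]
    return wires


def halfway_points(word, n):
    """Points (position, wire label) of the halfway permutation matrix."""
    half = partial_product(word, n, len(word) // 2)
    return [(pos + 1, label) for pos, label in enumerate(half)]


network = [1, 2, 1, 3, 2, 1]  # a sorting network on 4 wires
print(partial_product(network, 4, 6))  # [4, 3, 2, 1]: fully sorted in reverse
print(halfway_points(network, 4))      # [(1, 3), (2, 2), (3, 1), (4, 4)]
```

For the large networks in the pictures, `word` would come from a sampler such as the growth rule, and the points would be scatter-plotted.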
My first thought was that it looked like a parabola or an ellipse or some other conic, but that was wishful thinking: when we ran the algorithm long enough, it became clear that this was not tending to any sort of parabola or ellipse. So once again, a surprisingly shaped permutation matrix. I've certainly never seen permutation matrices that look like this come up in any other situation. I don't have a conjecture as to what that limiting law is, but you can make very large ones and look at them, which is comforting. How am I doing for time? >>: Five minutes. >> Benjamin Young: Five minutes. Okay, let me just very quickly say what our idea was for the general case. That was the special case where there is only one term in the Schubert polynomial in Macdonald's identity, so the right-hand side is just K factorial. In the general case, when your permutation is not dominant, there's actually a Schubert polynomial there. So the best you can hope for is a bijection of the following sort. Take a reduced word for pi, your permutation, together with a collection of numbers B_i, each at least 1 and bounded above by the corresponding letter of the reduced word. These pairs are what the left-hand side counts. You can replace such a pair with something of which there are K factorial, namely a permutation of K things, together with a pipe dream, which is what the Schubert polynomial is counting. I don't have time to go through it, but here's the interpretation. Think of choosing your reduced word, and then, after you've chosen it, use the numbers B_i to draw a little track above each crossing. The crossing only runs within its track. If I do a Little bump that asks a crossing to pop out of its track, I delete that crossing instead. 
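The left-hand side of the general bijection can be enumerated directly for tiny cases: pairs (a, b) with a a reduced word and 1 <= b_i <= a_i, so each word contributes the product of its letters. A Python sketch with hypothetical names. For 1, 4, 3, 2 (its own inverse, so the inverse ambiguity mentioned earlier does not matter) the Schubert polynomial has five monomials, each with coefficient 1, so the count should be 3! times 5.

```python
from itertools import product
from math import factorial


def reduced_words(perm):
    """All reduced words of `perm` in one-line notation (letter i swaps positions i, i+1)."""
    perm = tuple(perm)
    if all(perm[j] < perm[j + 1] for j in range(len(perm) - 1)):
        return [[]]
    words = []
    for j in range(len(perm) - 1):
        if perm[j] > perm[j + 1]:
            shorter = list(perm)
            shorter[j], shorter[j + 1] = shorter[j + 1], shorter[j]
            words.extend(w + [j + 1] for w in reduced_words(shorter))
    return words


def bounded_pairs(perm):
    """All (a, b): a a reduced word of perm, b with 1 <= b_i <= a_i for each i."""
    pairs = []
    for a in reduced_words(perm):
        for b in product(*(range(1, ai + 1) for ai in a)):
            pairs.append((tuple(a), b))
    return pairs


print(len(bounded_pairs((4, 3, 2, 1))), factorial(6))      # dominant: 720 720
print(len(bounded_pairs((1, 4, 3, 2))), factorial(3) * 5)  # not dominant: 30 30
```

For 1, 4, 3, 2 the reduced words are 232 and 323, with products 12 and 18, which sum to 30.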
What you do is go through the crossings in some canonical order; I think I shall omit the details of what the canonical order is. It does not seem to be terribly important. You can use other canonical orders and this does seem to go through, although we don't really understand why it works for some orders and not for others. You start doing Little bumps, and if at any time one of the crossings is asked to pop out of its track, you delete it instead of pushing it. Eventually, if you keep pushing, you'll have removed all of the crossings. Just take very careful note of what happens. You write down the permutations that occur at each stage, and if something pops out of its track, you write down the location where that happens and what wire it was on. If that doesn't happen, then the Little bump terminates normally, the way I said the original Little bump does, and you write down the last crossing which was pushed. That's probably slightly redundant information, but that doesn't matter. Then you run the whole thing backwards. First of all, the horizontal order in which the crossings popped out is going to be your permutation: K crossings popped out in some order, so just write that order down. That's the part contributing the K factorial. Everything else, you push into the left-hand side of the pipe dream. I have my pipe dream application up here somewhere; Andy wrote this. There's a version of the Little bump which runs on pipe dreams. For example, imagine all of these crossings are being pushed in from the left-hand side, so when you add a crossing, it always comes in from the left. So there I just added a crossing on wire 1. 
If my instructions say that the next thing I did was add another crossing on wire 1, I push that in from the left, it bumps this one along, and then there's a new crossing here. I might add a crossing there. And then maybe my instruction is to do a bump. It will tell me which crossing I'm supposed to push; I take that crossing and push it along, and it moves along all the other crossings in the same row as it. So you might get this; I guess that's a possibility. I haven't prepared this part of the talk; I was hoping to do it with the set of instructions and my pen on my laptop, and that's not working. But the point is that sometimes, when you're doing a push, you get a situation like this, with a conflict like the ones in the sorting network. That is not allowed in a pipe dream, so you resolve it in the same way as in the Little algorithm: you push the offending crossing over as well. In this case, it would have been this one. So there is a way to decompose the sorting network into a sequence of instructions and then recombine everything other than the horizontal positions of the crossings into a pipe dream. And again, since everything is a Little bump, it's all pretty efficient. The only way I know how to use this for sampling is the following. There is a slightly more general distribution. Again, if you have a dominant permutation, we have this identity, which is due to Fomin and Kirillov in a 1997 paper. Instead of multiplying the numbers A1 up to AK together, you multiply these shifts together: X plus A1 up to X plus AK. It turns out that when you do this, you see K factorial on the right-hand side, and this really is a Schubert polynomial here. But in this particular case, you should interpret it as a number of semistandard Young tableaux, which is just a slightly different combinatorial gadget. 
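The shifted weights are easy to explore by brute force. A Python sketch with hypothetical names: each reduced word gets probability proportional to (x + a_1)...(x + a_K). At x = 0 this is exactly the Macdonald distribution, and for very large x the probabilities flatten out toward the uniform distribution on sorting networks. This enumeration is only for checking small cases; it is not the Krattenthaler-based sampler discussed in the talk.

```python
from fractions import Fraction
from math import prod


def reduced_words(perm):
    """All reduced words of `perm` in one-line notation (letter i swaps positions i, i+1)."""
    perm = tuple(perm)
    if all(perm[j] < perm[j + 1] for j in range(len(perm) - 1)):
        return [[]]
    words = []
    for j in range(len(perm) - 1):
        if perm[j] > perm[j + 1]:
            shorter = list(perm)
            shorter[j], shorter[j + 1] = shorter[j + 1], shorter[j]
            words.extend(w + [j + 1] for w in reduced_words(shorter))
    return words


def fk_distribution(perm, x):
    """Probability of each reduced word, proportional to prod(x + a_i)."""
    words = reduced_words(perm)
    weights = [prod(x + a for a in w) for w in words]
    total = sum(weights)
    return {tuple(w): Fraction(wt, total) for w, wt in zip(words, weights)}


for x in (0, 1, 10, 1000):
    dist = fk_distribution((4, 3, 2, 1), x)
    spread = max(dist.values()) - min(dist.values())
    print(x, float(spread))  # the gap between likeliest and least likely word
```

The printed spread between the most and least likely sorting network should shrink as x grows, reflecting the interpolation between the two distributions.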
This is a very nice distribution to sample from, because if you let X get large, say X is a million, then X plus A1 is about the same as X plus anything else, so this really tends to the uniform distribution. If you put X equal to 0, you get the Macdonald distribution. So this interpolates between the two distributions as you let X vary. One way to think about it: since we're just adding the same number to everything, adding X is like shifting the sorting network down by that many wires. So it is actually quite possible to derive this equation bijectively as a corollary of ours; you just have to do one little bit of bijective combinatorics to see these semistandard Young tableaux as Grassmannian permutations. And that is just David Little's proof unmodified, the very first thing I showed you, in which you shunt crossings around until you get a Grassmannian word. The thing is, you can sample these very last things. I just wanted to highlight the name Krattenthaler: Christian Krattenthaler gave a proof of Stanley's hook-content formula which is so bijective as to provide an efficient sampling algorithm for choosing a random semistandard Young tableau. I haven't implemented this yet, but using our work, we should be able to sample from this Fomin-Kirillov distribution and then, hopefully, have a nice interpolating family of sorting networks going between the uniform ones on one side and the Macdonald ones that I showed you on the other. I don't know whether any of this will help us prove limiting properties of these networks, but I can hope so. Okay. That's all I wanted to say. >> David Wilson: Thank you very much. Are there any questions? >>: Can you also go in the other direction, negative, actually? >> Benjamin Young: I suppose you could. Like if you had -- >>: Less than one. 
>> Benjamin Young: In this formula here? >>: Plus one. I guess -- >> Benjamin Young: Well, you can, but the thing is, if you have a crossing at height 0, then since this is a multiplicative weight, that word does not contribute. >>: That's between 0 and minus 1. >> Benjamin Young: Yes, that's true. There is a sort of type B variant of all of this, in which you have reduced words which are symmetric, and it also has a Little algorithm and so on and so forth. I also worked this out with Sara Billey, Zach Hamaker and Austin Roberts. I don't know what to make of it, and I don't know how to use it to prove anything along these lines. There's also an affine version of this, in which you use the affine symmetric group instead of the symmetric group, and the crossings go around in a circle when you push them. But once again, I don't know what goes on the right-hand side. I don't know what the affine Schubert function is, or whether that is the right thing to go on the right-hand side. >> David Wilson: Let's thank Ben again.