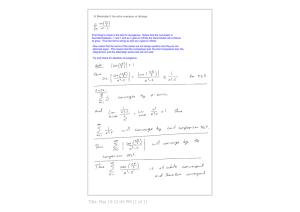

>> David Wilson: Good afternoon, everyone. We're especially delighted to welcome this year's Birnbaum Speaker. Professor Varadhan has transformed quite a number of areas in probability and, just to give a small sample, the Varadhan Theory of Local Times. In general, he's worked on large deviations, which we'll hear something about today, hydrodynamic limits, and one of my favorites is the introduction of the self-intersection local time. I could go on. But instead let's have the speaker tell us about the role of compactness in large deviations.
>> Srinivasa Varadhan: Thank you very much. It's a pleasure to be here. So there are many problems in analysis where we use compactness of the space in a routine manner. And sometimes it's not there. And when it's not there we have to work harder, and the hard work takes different forms. So I'll look at a couple of examples of various types, things that we do to avoid the issue, and then I'll give one example that leads to some interesting calculation. So in large deviation theory one of the things we want to do is to analyze the asymptotic behavior of certain integrals. These are integrals of the form exponential of a function, with a large parameter N multiplying it, integrated against a certain family of probability measures P_N. And then you want to look at the tilted measure, in which you throw in the density exponential of N F(x) [indiscernible] of P_N and normalize it by the expectation Z_N, so that Q_N is a probability distribution now, which somehow tries to maximize F. Now P_N itself has its own behavior. It's not uniform, and it decays locally exponentially, at different rates at different points. So there's a fight going on between the function F, which wants to be large, and the cost it has to pay for picking a point x, which is I(x).
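
In symbols, the setup just described might be written as follows. This is only a sketch: the names $Z_N$ and $Q_N$ for the normalizing constant and the tilted measure follow the usage later in the talk, and $X$ is an assumed label for the underlying space.

```latex
% Sketch of the setup; X is an assumed name for the underlying space.
Z_N = \int_X e^{N F(x)} \, dP_N(x),
\qquad
dQ_N(x) = \frac{1}{Z_N} \, e^{N F(x)} \, dP_N(x),
\qquad
dP_N(x) \approx e^{-N I(x)} \quad \text{(heuristically)}.
```

The last relation is the informal statement that still needs an interpretation, since the left side is a measure on sets and the right side is a function of points.
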
So this fight has to be resolved, and one can conclude that Z_N is asymptotically like the exponential of N times the supremum of F minus I, which is one of the results of large deviation theory. To prove it you first have to interpret what it means that dP_N decays like exponential of minus N I(x). You have to give a meaning to it, because the left-hand side is a measure defined for sets and the right-hand side is a function defined on points. So that has to have an interpretation. And one of the things we want to prove with the interpretation is that the measure Q_N concentrates at a single point a, provided the variational problem has a unique solution. If at some point a, F minus I has a maximum strictly larger than the other values, then we want to show that the measure Q_N concentrates at the point a. So now what do we mean by this expression that dP_N is like exponential of minus N I(x)? Well, we're interested in log of P_N, and we want log of P_N(A), normalized by 1 over N, to behave like [indiscernible] of I(x) [indiscernible]. This cannot be true for all sets, because if we look at a single-point set, I could be finite at that point, and P_N(A) is usually 0 for single-point sets. So this again has to be interpreted very carefully, and the interpretation is that you get a lower bound for open sets and an upper bound for closed sets, just like limit theorems in probability. So for open sets it's easy, because usually the results are obtained for small balls by some tilting argument: you use a law of large numbers for the tilted measure to localize at that point x, and you figure out the cost of tilting, and that gives you the exponential lower bound. The lower bound you get for a small ball, and then for an open set you can just optimize the location of the small ball, and you get the inequality for open sets.
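
The statements above correspond to the standard formulation of a large deviation principle, which might be written as below. This is a sketch in conventional notation rather than a transcription of the slides; the regularity assumptions on $F$ (for example, bounded and continuous) are assumptions added here.

```latex
% Asymptotics of the normalizing constant (F bounded and continuous, assumed):
\lim_{N\to\infty} \frac{1}{N} \log Z_N = \sup_{x} \, \bigl[ F(x) - I(x) \bigr].

% Interpretation of dP_N \approx e^{-N I(x)}:
\liminf_{N\to\infty} \frac{1}{N} \log P_N(G) \ge -\inf_{x \in G} I(x)
\quad \text{for every open set } G,

\limsup_{N\to\infty} \frac{1}{N} \log P_N(C) \le -\inf_{x \in C} I(x)
\quad \text{for every closed set } C.
```

For a single-point set $A = \{x\}$ one typically has $P_N(A) = 0$ while $I(x) < \infty$, which is why the lower bound is only asked of open sets.
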
The upper bound is for closed sets, and how do you obtain the upper bound? In probability, what you do is you use Chebyshev's inequality to get the upper bound. You use the inequality that the probability that some F is bigger than L is bounded by e to the minus NL times the expectation of the exponential of N times F, a Chernoff-type bound. Then if F is a nice function, you can find a ball around some x of radius epsilon contained in this half space, and that gives you an upper bound for a small ball, and the upper bound for the small ball is basically e to the minus N times F(x) times the expected value of the exponential. And the expected value of the exponential perhaps grows like the exponential of some constant which depends on F. So in the end the best possible upper bound you can get is a decay rate which comes from the best possible Chebyshev inequality you can dream of. But this is only a local bound for a very tiny ball. And you cannot control the size of the ball, because it's a soft argument in some sense. It depends on where the [indiscernible] is attained, what kind of bad F you have. And what you do is you use the inequality, which is simply the inequality for the probability of the union of two sets: you can bound it by the sum, and you can bound that by twice the maximum. The beauty of large deviations is that when you take the log and divide by N, the 2 goes away. Once the 2 goes away, you can cover a compact set with a finite number of these balls, so immediately you get an upper bound for compact sets. So, finite covering. So you can estimate the probabilities in question for compact sets, and the rate of convergence would be okay. As soon as it's compact, we're done. The problem is solved. Often these spaces are not compact and you need to do something else. What are the things you could do? Well, you can find a big compact set and show that what happens outside is not relevant.
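
The two inequalities used in this step can be sketched as follows. The notation ($\ell$ for the level, $B_1,\dots,B_m$ for the covering balls) is assumed for illustration.

```latex
% Exponential Chebyshev (Chernoff-type) bound: Markov's inequality applied to e^{NF}.
P\bigl( F \ge \ell \bigr)
  = P\bigl( e^{N F} \ge e^{N \ell} \bigr)
  \le e^{-N \ell} \, E\bigl[ e^{N F} \bigr].

% Finite covering: the combinatorial factor disappears on the exponential scale.
P_N\Bigl( \bigcup_{i=1}^{m} B_i \Bigr) \le m \, \max_{1 \le i \le m} P_N(B_i)
\;\Longrightarrow\;
\frac{1}{N} \log P_N\Bigl( \bigcup_{i=1}^{m} B_i \Bigr)
  \le \frac{\log m}{N} + \max_{1 \le i \le m} \frac{1}{N} \log P_N(B_i).
```

Since $m$ is fixed, $(\log m)/N \to 0$, so the local bounds on small balls combine into a bound on any set covered by finitely many of them. A compact set has such a finite cover; a general closed set need not.
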
Now, in probability theory, in order to show that something is not relevant, you have tightness estimates to ensure that the probability of the complement can be made arbitrarily small. Here we are interested in large deviations related to N, so we have to show that outside the compact set the probability decays faster than any exponential you can think of. So basically the constant of decay has to be very large outside a compact set. You can sometimes do that. And that's called an exponential tightness estimate. Or you can compactify the space. Any space can be compactified. That's a theorem in point-set topology. But then there are some problems, because once you compactify the space, it's not clear whether your function extends to the compactification or not, and then you have to get your local upper bound for all the points that were added in the compactification. So you have to have as a model a concrete compactification, not an abstract compactification, in order to do it. Or you can modify the problem. If you're interested in estimating an integral, you could simply say this integral is less than or equal to some other integral somewhere else, which happens to be on a compact space, and you can do that. And all of this has been tried, all just for Brownian motion and just for the local time. In different contexts you can do different things. The Brownian motion local time is just a random measure, which is the amount of time that the Brownian path spends in various sets A, and the space of measures is the ambient space, because you think of L_T(A) as a random probability measure. So you have a family of probability distributions on the space of measures on R^d, and this is where you want to do large deviations. And you know what the rate function is locally. There's no mystery, because it's a reversible process, and a standard computation shows that the local rate function is [indiscernible] from the square root of f.
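
For reference, the objects mentioned here might be written as follows. This is a sketch in standard notation: $\beta$ denotes the Brownian path, the $1/T$ normalization is inferred from the phrase "random probability measure", and the rate function is stated for a measure with density $f$. The factor $1/8$ matches the one quoted later in the talk.

```latex
% Normalized local time (occupation measure) of Brownian motion \beta on R^d:
L_T(A) = \frac{1}{T} \int_0^T \mathbf{1}_A\bigl( \beta(s) \bigr) \, ds .

% Local rate function at a probability density f:
I(f) = \frac{1}{8} \int_{\mathbb{R}^d} \frac{|\nabla f(x)|^2}{f(x)} \, dx
     = \frac{1}{2} \int_{\mathbb{R}^d} \bigl| \nabla \sqrt{f(x)} \bigr|^2 \, dx .
```

The two forms agree because $\nabla \sqrt{f} = \nabla f / (2\sqrt{f})$, so $|\nabla \sqrt{f}|^2 = |\nabla f|^2 / (4f)$.
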
So the question really is how do you handle the noncompactness here. The first attempt is to estimate, for example, [indiscernible] formula for integrals like this, for potentials that go to infinity as x goes to infinity. Now, the Brownian path won't go too far because of it. So it's clear in this problem you can handle the noncompactness, because the noncompact part just doesn't count. And the asymptotic rate is just the [indiscernible] of the integral. We have the supremum because of the minus I and so on trying to introduce [indiscernible]. But you can also technically compactify the space. The easiest compactification we can think of is the one-point compactification: add the point at infinity, so the space is compact. The rate function for the delta measure at infinity is 0, because the Brownian motion wants to get away to infinity. So a neighborhood of infinity really means most of the mass is very far away, so it's clear that the rate function at that point is zero. Now everything is compactified, but then the price you pay is you have to assume that the potential V goes to 0, or has a limit, as x goes to infinity. Then you can subtract the limit and it's just basically going to 0. So the class of functions that you can handle gets reduced considerably because of the compactification used. Another thing you could do is you can replace R^d by the torus. You can project the Brownian path that's wandering in R^d to a Brownian path on the torus, and if the function you're trying to estimate is larger on the torus for the projected path than for the original path, then if you estimate it on the torus it's a good estimate on the whole space. And the torus is compact, so the estimates work for the torus. So you can sort of do the problem for a large torus maybe, and at the end let the size of the torus go to infinity, with no trouble interchanging the two limits because there's actually domination in one case.
And that has been tried to -- that's been done for the problem of the Wiener sausage, because if you project the sausage, the sausage on the torus has smaller volume than the sausage on R^d. So for problems like determining the range of a random walk and things like that, projecting on the torus is a good method of compactification, because the projections give estimates. So now the problem I want to look at is the following thing on the local time. In d dimensions you look at this double integral of the potential 1 over mod x and you want to analyze this. Well, here what works is replacing Brownian motion by the [indiscernible] process. Because the [indiscernible] process is strongly recurrent, and you can get a super-exponential estimate on what happens outside a compact set. So you can do that, and you can show, because the function 1 over mod x is positive definite, that the integral is more for the [indiscernible] process than it is for Brownian motion. So comparison works here. It's not quite satisfactory, for the following reason. When you modify these problems like this, use comparison methods and so on, it is good enough to estimate the partition function Z_T and how it grows and so on. And if you want to prove that the measure Q_T defined by this tilting, the new measure, if you want to prove that it is -- I think I made a mistake. There's no 1 over T because I used the local time already. Okay. That should be T in this, clearly. So you cannot analyze this by modification, because then a double limit is involved and it's hard to control this. So what I want to display now is joint work with Mukherjee, a post-doc at Munich. Let's look at this local time. And we have a function of the local time that we want to analyze. But this function is translation invariant, because if you translate the measure mu it doesn't change. There are examples of this. The examples of such functions are a function which is a function of two variables, or you can take functions of k variables which are invariant under rigid translations.
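
The partition function and tilted measure under discussion might be written as below. This is a reconstruction from the spoken description, including the speaker's correction that the prefactor is $T$ and not $1/T$; it assumes $L_T$ is the normalized occupation measure of the Brownian path $\beta$ under Wiener measure $P$, and should not be read as a copy of the slide.

```latex
% Partition function with the Coulomb-type interaction, in terms of the local time:
Z_T = E\Bigl[ \exp\Bigl\{ T \int\!\!\int \frac{L_T(dx) \, L_T(dy)}{|x - y|} \Bigr\} \Bigr]
    = E\Bigl[ \exp\Bigl\{ \frac{1}{T} \int_0^T\!\!\int_0^T
        \frac{ds \, d\sigma}{|\beta(s) - \beta(\sigma)|} \Bigr\} \Bigr],

% Tilted measure on paths:
dQ_T = \frac{1}{Z_T} \,
       \exp\Bigl\{ T \int\!\!\int \frac{L_T(dx) \, L_T(dy)}{|x - y|} \Bigr\} \, dP .
```

The two expressions for $Z_T$ agree because $L_T$ carries a factor $1/T$ in each variable, so $T \cdot T^{-2} = 1/T$. The integrand depends only on differences $x - y$, which is the translation invariance the speaker turns to next.
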
And then you integrate with respect to the product measure of the mus, and these are functions that are invariant with respect to translations, so they have this property. And let's suppose these functions are tame, in that whenever the separation distance between a pair of points goes to infinity, these functions vanish. So they're basically supported on points x_1 through x_k which are tightly bound together. Once they drift apart, the function vanishes. The function 1 over mod x minus y has that property. And then we want to analyze the expectation of something like this. So now we have to go to the quotient space, because it's a problem if you want to -- the problem is translation invariant, so the natural space on which to do the large deviation is a quotient space of orbits of measures under translation. This space is still not compact. So the question is: what is the compactification of this space? You can't get away with the one-point compactification here because the orbits -- then all the orbits collapse, because at infinity everything meets in the compactification. So you can't use that. So you have to have some other kind of compactification. The question is how to compactify. The one-point compactification is not suitable. It's not translation invariant. So let's take a function f which is translation invariant, continuous, and has this property of decaying when the distances go to infinity. And look at all such functions, not just for one k but for all the various values of k, functions of several variables for all values of k, and I look at this linear functional Lambda(f, mu), which is the integral of this function against the product of the mus. Just elementary point-set topology here. These objects for each f are bounded, and these spaces F_k are all nice separable spaces, because a function of this type is really a function of k minus 1 variables that vanishes at infinity. So it's a nice separable Banach space. A countable number of separable Banach spaces.
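
The class of test functions and the functional just described might be written as follows. This is a sketch; the symbol $\mathcal{F}_k$ for the transcript's "F_k" and the exact form of the decay condition are assumptions based on the spoken description.

```latex
% The functional, for f in F_k (k >= 2) and a measure \mu on R^d:
\Lambda(f, \mu) = \int_{(\mathbb{R}^d)^k} f(x_1, \dots, x_k) \,
                  \mu(dx_1) \cdots \mu(dx_k).

% Membership in F_k: f is continuous, translation invariant, and decays
% when the points separate:
f(x_1 + a, \dots, x_k + a) = f(x_1, \dots, x_k)
  \quad \text{for all } a \in \mathbb{R}^d,
\qquad
f(x_1, \dots, x_k) \to 0
  \quad \text{as } \max_{i \ne j} |x_i - x_j| \to \infty .

% Consequence: \Lambda depends only on the orbit of \mu under translations.
\Lambda(f, \mu * \delta_a) = \Lambda(f, \mu) \quad \text{for all } a \in \mathbb{R}^d .
```

Translation invariance means $f$ is determined by the $k-1$ differences $x_2 - x_1, \dots, x_k - x_1$, which is the sense in which it is "really a function of $k-1$ variables that vanishes at infinity".
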
Use a countable subset that works for everything. And then by a diagonalization procedure I can choose a subsequence along which all these converge. So a countable set is enough. Now also one thing interesting to be noted is that F_{k-1} is in some sense a limit of F_k. Because I can take a function of k variables, define it as a function of k minus 1 variables multiplied by a function phi of x_1 minus x_k. Now this function phi has to vanish at infinity for this to be well defined in our class, but I can take the limit as phi goes to 1. If you take the limit as phi goes to 1, it's not a uniform limit, but it's a pointwise bounded limit, which is okay for integrals, because you can use the bounded convergence theorem. So then the integral of the function in F_k with respect to the product measure will converge to the measure of the whole space times the k minus -- the k minus 1 dimensional integrals are recoverable from the k dimensional integrals by a limiting procedure. So now what I want to do is choose a subsequence such that all these limits exist. You can do it. Now I have compactified the space in principle. But I have to know what this limiting Lambda of f is. Now, the answer is very simple. Or there is another way of thinking about it, which is you define a metric: given two probability measures mu_1 and mu_2, you look at this Lambda(f_j, mu_1), which is a k dimensional integral of f_j, where k depends on j because the different functions in the countable sequence will be in different dimensions, and you compute the integral, take the difference, and it's the usual trick you do for countable products of metric spaces: you put some weights in front so that the series converges. There's nothing special about it. And you try to complete this metric, which is really saying that anything that converges for each function should have some limiting object. And one way of choosing the weights is just the usual choice. So here's the answer. The answer is the limit consists of collections of orbits.
Mu tilde, and the total masses of all the orbits -- see, the total mass doesn't depend on which measure you choose in the orbit. So these are sub-probability measures. Each orbit will have some probability, and you add them all up and you get something that's less than or equal to 1. That's the limit. And then for the representation in terms of the limit, you compute the same integral as you did before for a member of each orbit and add them all up over the different orbits. The intuition behind this is very simple. How can a sequence mu_n fail to be compact? The whole mass can go right away to infinity. Or the mass can split into two pieces that are running to infinity in different directions. That can happen. Or you can have a Gaussian distribution with a very high variance, so the mass just goes away. So in the first case the orbit actually converges to a different orbit. The orbit -- in fact, the orbit doesn't change at all, because they have the same mu. Here in the limit I get two pieces, mu_1 and mu_2, mass one-half each, but they're the same: one-half mu, one-half mu appears twice. In the third case it just becomes dust. So the compactification consists of various pieces you can pull together, which give these sets of orbits, and then something that disappears into dust. That's why when you add up the total masses you get something that adds up to p, which is less than or equal to 1. Okay. And the same orbit can occur twice, so you have to list the orbits with the understanding that you can list the same thing twice. Of course, it's possible that your collection is empty, which means that everything went to dust. So for every function f, your linear functional goes to 0. Or you can just have a finite number of orbits, or you can have a countable number of orbits whose masses are getting smaller and smaller and adding up to something less than or equal to 1. So that's intuitively the compactification.
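
The metric and the limiting objects described over the last two paragraphs can be sketched as follows. The particular weights are one conventional choice assumed here (the speaker only says "the usual choice"), $\{f_j\}$ is the countable dense family, and $\mu_\alpha$ denotes any representative of the orbit $\tilde\mu_\alpha$.

```latex
% Recovering (k-1)-fold integrals from k-fold ones, as \varphi \to 1 boundedly
% (for a probability measure, \mu(R^d) = 1):
\int f(x_1, \dots, x_{k-1}) \, \varphi(x_1 - x_k) \, \mu(dx_1) \cdots \mu(dx_k)
  \;\longrightarrow\; \mu(\mathbb{R}^d) \, \Lambda(f, \mu).

% A metric on orbits of probability measures (weights assumed):
D(\mu_1, \mu_2) = \sum_{j \ge 1} \frac{2^{-j}}{1 + \|f_j\|_\infty} \,
  \bigl| \Lambda(f_j, \mu_1) - \Lambda(f_j, \mu_2) \bigr| .

% Points of the completion: countable collections of orbits of
% sub-probability measures, listed with repetition allowed (possibly empty),
\{ \tilde\mu_\alpha \}_{\alpha}, \qquad
\sum_{\alpha} \mu_\alpha(\mathbb{R}^d) \le 1,

% with the functional extended by summing over the collection:
\Lambda\bigl( f, \{ \tilde\mu_\alpha \} \bigr)
  = \sum_{\alpha} \int f(x_1, \dots, x_k) \,
    \mu_\alpha(dx_1) \cdots \mu_\alpha(dx_k).
```

As a check on the second example in the talk, take $\mu_n = \tfrac12 \mu * \delta_{a_n} + \tfrac12 \mu * \delta_{-a_n}$ with $|a_n| \to \infty$ and $k = 2$. The cross terms vanish in the limit because $f$ decays, leaving $\Lambda(f, \mu_n) \to \tfrac14 \Lambda(f,\mu) + \tfrac14 \Lambda(f,\mu)$, which equals the sum over the collection $\{\tfrac12 \tilde\mu, \tfrac12 \tilde\mu\}$.
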
And you have a metric there, which is: you look at Lambda(f, psi), which is the integral summed over all the orbits, and the metric is the same metric, except instead of a single measure mu now you do it for each orbit and add them up. It's clear these are metrics, but if you want to show it's really a metric, you have to show that the distance is 0 only if the two sets of orbits are identical. You have a problem here, because you have one set of orbits here and another set of orbits there, and you have some objects which are equal. But the objects are collective objects, and now you have to match orbit with orbit if you want to show that the two sets of orbits are identical. So there's a matching problem to be done. So let's note that if you take a function of 2k variables which consists of a function of k variables repeated in two blocks, with the blocks linked by something, the linking object can tend to 1, and then by the bounded convergence theorem what you converge to is the sum of squares of these integrals. So that means if you have integrals identical for two sets of orbits, then the squares are also identical, and you can repeat it for any power, so all the powers are the same. That means you can identify individual pieces. So you would think that now that you've identified individual pieces, you're done, but not quite, because all I know is that any integral that appears here appears in this list. But for different functions it may appear in different places. I have to locate them all in the same place. That requires an argument. So for each -- I will come to that in a minute. But what happens is that even if you succeed in doing it, what you know is that the multiple integral for each f is the same for two different orbits.
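
The "two blocks linked by something" step might be sketched as follows. The notation is assumed: $f \in \mathcal{F}_k$, $\varphi$ is a linking function that vanishes at infinity and is then sent to 1 boundedly, and $\{\tilde\mu_\alpha\}$ is a collection of orbits with representatives $\mu_\alpha$.

```latex
% A function of 2k variables built from two copies of f, linked by \varphi:
g_\varphi(x_1, \dots, x_{2k})
  = f(x_1, \dots, x_k) \, f(x_{k+1}, \dots, x_{2k}) \, \varphi(x_1 - x_{k+1}).

% Letting \varphi \to 1 (bounded convergence), the sum over orbits becomes
% a sum of squares:
\sum_{\alpha} \int g_\varphi \, d\mu_\alpha^{\otimes 2k}
  \;\longrightarrow\;
  \sum_{\alpha} \Bigl( \int f \, d\mu_\alpha^{\otimes k} \Bigr)^{2}
  = \sum_{\alpha} \Lambda(f, \mu_\alpha)^2 .

% Repeating with p blocks gives every power sum:
\sum_{\alpha} \Lambda(f, \mu_\alpha)^p, \qquad p = 1, 2, 3, \dots
```

If two collections have the same power sums for every $p$, the individual values $\Lambda(f, \mu_\alpha)$ agree as lists with multiplicity for that fixed $f$. What remains, as the speaker says, is to show the matching can be made the same for all $f$ at once.
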
Then you have to conclude that the two orbits are the same, the measures are -- so the real problem is to show that if there are two measures mu and nu which satisfy the relation Lambda(f, mu) equals Lambda(f, nu) for all f in F_k and for k bigger than or equal to 2, then mu is a shift of nu. That's what you have to prove. Okay. How do you do that? Well, let's take the characteristic functions. So the characteristic function is -- you can't use the characteristic function at all arguments, because you have to be shift invariant. That means you can look at the product provided the sum of the t_i's is 0. That would be a limit, a bounded limit, of functions that we admit. So you have two characteristic functions phi and psi such that the products are equal at all arguments where the sums are 0; then one is an imaginary exponential times the other. First, the easiest choice of the t's is to take t and minus t, and that gives phi(t) times phi(minus t) equals psi(t) times psi(minus t). We know that phi(minus t) is the conjugate of phi(t). So it tells you the absolute values are equal. Then you write phi(t) as psi(t) times chi(t), and you'd like to prove that chi(t) is an exponential. You took two of them; now take three, and that tells you chi(t_1 plus t_2) equals chi(t_1) chi(t_2). That's only for t's where they are not 0. Psi(t) shouldn't be 0. If it is 0 then chi is not defined. But near the origin they're not 0. And away from the origin you can prove that chi(nt) is chi(t) to the nth power, because n copies of t and one minus nt work. And that concludes that one is a shift of the other. So now we are faced with the problem of matching individual ones. That is, for each f, Lambda(f, mu) occurs somewhere on this list. So let's take one orbit, and I compute for that orbit the bunch of numbers Lambda(f, mu). All these numbers are going to occur on the other list, which is a collection of orbits, somewhere. But I want to show that they all occur in one orbit. And match these two orbits. Peel them away and repeat it again. And then I'll be able to match the two sets of orbits.
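
The characteristic-function argument can be sketched in symbols as follows. The names $\nu$ for the second measure and $\chi$ for the ratio are reconstructions from the garbled transcript, and the argument is written only near the origin, where $\psi \neq 0$.

```latex
% Characteristic functions of \mu and \nu:
\varphi(t) = \int e^{i \langle t, x \rangle} \, \mu(dx), \qquad
\psi(t)    = \int e^{i \langle t, x \rangle} \, \nu(dx).

% e^{i \sum_j \langle t_j, x_j \rangle} is translation invariant iff \sum_j t_j = 0, so
\prod_{j=1}^{k} \varphi(t_j) = \prod_{j=1}^{k} \psi(t_j)
  \quad \text{whenever } t_1 + \dots + t_k = 0 .

% k = 2 with (t, -t):
|\varphi(t)|^2 = \varphi(t) \, \overline{\varphi(t)} = |\psi(t)|^2 .

% Put \chi = \varphi / \psi where \psi \ne 0, so |\chi| = 1 and
% \chi(-t) = \overline{\chi(t)}.
% k = 3 with (t_1, t_2, -(t_1 + t_2)):
\chi(t_1) \, \chi(t_2) \, \overline{\chi(t_1 + t_2)} = 1
  \;\Longrightarrow\;
  \chi(t_1 + t_2) = \chi(t_1) \, \chi(t_2).

% A continuous unimodular solution is an imaginary exponential:
\chi(t) = e^{i \langle a, t \rangle}
  \;\Longrightarrow\;
  \varphi(t) = e^{i \langle a, t \rangle} \, \psi(t),
  \quad \text{i.e. } \mu = \nu * \delta_a .
```

The extension away from the origin uses the choice of $n$ copies of $t$ together with one $-nt$, which gives $\chi(nt) = \chi(t)^n$.
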
So the point is that the Baire category theorem helps. Now, if -- again f can tend to 1, because 1 is translation invariant and can be a limit of these things. So that means the collections of total masses are the same for the two sets of orbits. So it tells you the sizes of the orbits are the same, the total masses are the same. So in particular, if you give me the mass, there can only be a finite number of orbits with that mass. So if I know Lambda(f, mu) for all the f for one mu, I know the total mass, and there are only a finite number of things on the other side with which I could possibly match. So I consider the set of all functions in some F_k such that it coincides with that mu. So this is the set of functions in F_k for which the integrals match those that I get for a given mu on the other side. These are closed sets. And their union is all of F_k. By the Baire category theorem one of them has an interior. But if two linear functionals agree on an open set, they're the same. So that's the end of that. Okay. And then you have to worry about what happens for different k, but notice that I said that F_{k-1} is a descendant of F_k, and there's only a finite number there. I have a matching for every k. Eventually somebody has to match for infinitely many k's, and then I can come down and they'll match for all the k's. So there is one of them that matches for all kinds of very large values of k, and you can come down. Okay. Now, if you want to show that the object I have is really a compactification, then I have to show that the [indiscernible] is dense. That's not very hard, because if I look at what I have in the completion, I have a bunch of measures with masses adding up to some number less than or equal to 1. So take these measures, put them in space. Let's pick compact subsets of these, outside of which the mass is very small, so you can approximate, and spread them out as far apart as you can.
And it's clear that that's going to approximate this, because your visualization of an object that approximates this is just different pieces that are very far away from each other. So you can embed your mu_1 and mu_2 into a single measure with pieces that are very far away. So it's clear that the original space is dense here. But then it's not -- we didn't really prove that representation theorem, if I remember correctly. I just mentioned it. I didn't prove it. I mentioned some consequences of the representation. I proved the uniqueness of the representation and so on, but I haven't proved the existence of the representation. And that is the crux of the compactification. I need to show that if you give me any sequence of measures, I can somehow extract from them these pieces. And you use concentration functions to do that. So you define the concentration function of a measure to be the supremum -- how much time do I have? Plenty. I won't need that. So you use the concentration function to do -- this is different. You look at the ball of radius R, translate it around, take the maximum probability you can recover from it, and that's the concentration function at radius R. And for any probability measure, just by the tightness argument, if R goes to infinity, this goes to 1. Now, if you give me a sequence, I can choose a subsequence along which these converge. And the limit is monotone in the radius, so as the radius goes to infinity it has a limit, and that limit need not be 1. It's something less than or equal to 1. In some sense you should think of q as the biggest recoverable piece. And all these calculations depend only on the orbit. So it's done on the quotient space already. If q equals 1, that means after some translation the measures themselves are tight, so the sequence of translates converges. So the orbit converges to a single orbit. If q is 0, the probability of any ball goes to 0 no matter where the ball is.
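
The concentration function and the number $q$ can be written as follows. This is a sketch with assumed notation: $B(x, r)$ is the ball of radius $r$ centered at $x$, and the limits are taken along a suitable subsequence of $(\mu_n)$.

```latex
% Concentration function of a probability measure \mu on R^d:
q_\mu(r) = \sup_{x \in \mathbb{R}^d} \mu\bigl( B(x, r) \bigr),
\qquad
q_\mu(r) \uparrow 1 \quad \text{as } r \to \infty .

% It depends only on the orbit of \mu:
q_{\mu * \delta_a}(r) = q_\mu(r) \quad \text{for all } a \in \mathbb{R}^d .

% For a sequence (\mu_n), along a subsequence where the inner limit exists
% for every r in a countable set of radii:
q = \lim_{r \to \infty} \, \lim_{n \to \infty} q_{\mu_n}(r) \in [0, 1].
```

The three cases in the talk are then $q = 1$ (tight after translation, a single limiting orbit), $q = 0$ (everything disperses), and $0 < q < 1$ (a piece of mass close to $q$ can be extracted and the procedure repeated on the remainder).
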
That means the measures converge to real dust, and the limit corresponds to zero orbits, and the total mass is zero in that case. If q is in between, you can recover a big piece. Because the concentration function gets values close to q, so you can pull it back and you will get something with mass almost q. Well, make it at least q by 2. You can almost get q, but you'd have to work a little harder, and you don't need to do that. You can get mass at least q by 2, but grow it as big as you can. That particular collection, you bring it in here, and then you see what the limit is as you increase the radius. Okay. You have collected this biggest piece. It's not 1, it's something less than 1. And then you look at the measure that's left over. The leftover measure can't be anywhere in the vicinity of this measure, because if it was in the vicinity of this measure, by increasing your radius more you'd recover more. The fact that this is the maximum amount you could recover means that the rest of the mass is very, very far away. And then you remove this piece and work with the rest of the mass, and remove piece after piece. And then you can show that there's a subsequence -- you have to do diagonalization many times -- at the end you get a subsequence that converges, and the convergence is in the metric we have. This is the compactification that you do. Repeat and exhaust. So now we have to worry about doing large deviations in this space. Okay. First there is some -- the orbit is not compact, because it's R^d. And the neighborhood of the orbit is much bigger than the neighborhood of the original measure, because you have to include all the translations. What saves us is that a Brownian path is not going to wander too far. In time T, typically it wanders a distance root T. If I give it range T, then I'm in the large deviation range. If I give it distance T squared, then I'm beyond large deviations. So I'm willing to say my Brownian path is allowed to wander a distance T squared.
So the local time is essentially going to be limited to something of volume T squared, which is polynomial in volume, and that will change the exponential estimate by an extra polynomial factor, which I don't care about. The fact that the orbit is big doesn't matter. So I can limit myself to a definite-sized orbit. That is a way of doing it. You define a certain -- the way you compute the large deviation rate function is you need to have some integrals which you can control. The integrals you control come from the Feynman-Kac formula. The Feynman-Kac formula helps you to control integrals of exponentials. In fact, if the exponent is of the form one-half Laplacian u over u with a minus sign, then by the martingale property, the expectation of the exponential of its integral is bounded by one, basically. So there are lots of integrals you know, of exponentials of Feynman-Kac type, whose expectations are bounded by a constant. So you use all of them and look at the best you can do, and that's the large deviation upper bound. That calculation gives you the gradient-squared term with the 1 over 8. And it's a simple calculus of variations. So that's what we need to do, except we can't use one function. We have to use a function here, a function there, a function here, to capture all the bits and pieces. So you have to take the supremum over all possible locations. But a little perturbation doesn't change much, because all these functions are continuous. So there's no problem in having a little variation, and your volume is only T squared, so you can do it with a polynomial number of these things. It's a mess to write down, because there are so many parameters -- you look at this integral, the expectation of the exponential of this, with the 1 over T, is bounded by some number. And then look at the supremum of these things. That's the lower bound -- that's the upper bound you can get, and these integrals are all bounded by the Feynman-Kac formula, and small variations change very little.
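
The Feynman-Kac bound behind the upper estimate might be sketched as follows. This is the standard Donsker-Varadhan form written under assumptions added here: $u$ is smooth with $0 < c \le u \le C$ and bounded derivatives, $\beta$ is Brownian motion started at $x$, and $L_T$ is the normalized occupation measure.

```latex
% Martingale identity (Feynman-Kac):
E_x\Bigl[ \frac{u(\beta(T))}{u(x)} \,
  \exp\Bigl\{ - \int_0^T \frac{\Delta u}{2u}\bigl( \beta(s) \bigr) \, ds \Bigr\} \Bigr] = 1,

% hence, in terms of the local time:
E_x\Bigl[ \exp\Bigl\{ - T \int \frac{\Delta u}{2u}(y) \, L_T(dy) \Bigr\} \Bigr]
  \le \frac{C}{c} .

% Optimizing over u gives the rate function at a measure with density f:
I(f) = \sup_{u > 0} \int \Bigl( - \frac{\Delta u}{2u} \Bigr)(y) \, f(y) \, dy
     = \frac{1}{8} \int \frac{|\nabla f(y)|^2}{f(y)} \, dy .
```

Each such $u$ yields an exponential bound that is uniform in $T$ on the scale $e^{o(T)}$, which is why the speaker can combine polynomially many of them, one for each location of each piece, without affecting the exponential rate.
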
And you don't have to shift by more than a distance T squared. The supremum is over a polynomial number of sets A_i. And if you do the [indiscernible] it can separate out all the components, and then what you get for the rate function is just the sum of the rate functions. In the end, we haven't really discovered anything new. We've just justified the compactification, and we can pretend that the space is compact, that's all. Now you go back to the problem in question. So I want to look at this function. This function has a singularity; that's an additional headache. But 1 over mod x is not a bad singularity in [indiscernible] dimension. So the usual estimation methods tell you that you don't have to worry about the singularity. For all practical purposes you can pretend it's a bounded continuous function. So if you do the variational problem, normally you can do it in our space, and f is an L1 function; you can think of it as the square of an L2 function, and then 1 over 8 square f [indiscernible], and different components are different functions, and so the variational problem, this is what I get by taking the variational problem in the compactified space. And one can then prove that the variational problem is attained at a single phi of unit mass, which is unique up to translation, so there's a unique orbit for the variational problem on X tilde, and that tells you the mass under Q_T concentrates in the neighborhood of that orbit. The uniqueness for the variational problem was proved previously by Elliott Lieb in a different context. It's useful here. So the moral of the story is, in the end you really didn't need all this. But you needed it to be sure that you didn't need it. Thank you. [applause]
>> David Wilson: Questions or comments?
>>: But you said a minute ago, a statement: would these precautions have been so unnecessary had they not been taken?
>>: So this variational problem of your theory, the large deviations for this potential, is there some physical origin, motivation? Tell us a little bit about that.
>> Srinivasa Varadhan: I think [indiscernible] -- the polaron problem. A question of electrons sort of reacting to the field that they created themselves, some time in the past.
>>: Because I know that if you just fix one variable and just look at the potential as a function of the other variable, the concentration of that along the random walk path has been extensively used by others in studying intersection processes of random walks, closely related to what you're doing in your field.
>> David Wilson: Any other comments or questions? Let's thank -- Thank you. [applause]