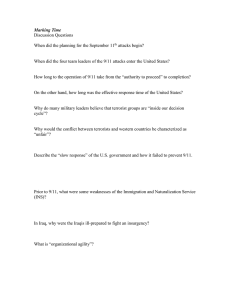

An Analysis of the 1999 DARPA/Lincoln Laboratory Evaluation Data for Network Anomaly Detection


Matthew V. Mahoney and Philip K. Chan
Data Mining for Computer Security Workshop at ICDM03
Melbourne, FL
Nov 19, 2003
www.cs.fit.edu/~pkc/dmsec03/

Outline
• DARPA/Lincoln Laboratory IDS evaluation (IDEVAL)
• Analyze IDEVAL with respect to network anomaly detection
• Propose a remedy for identified simulation artifacts
• Measure effects on anomaly detection algorithms

1999 IDEVAL
[Figure: testbed diagram showing the simulated Internet, outside sniffer, router, and inside sniffer; victim hosts running Solaris, SunOS, Linux, and NT; 201 attacks; data collected includes BSM, audit logs, and directory and file system dumps.]

Importance of 1999 IDEVAL
• Comprehensive
  – signature or anomaly
  – host or network
• Widely used (KDD cup, etc.)
• Produced at great effort
• No comparable benchmarks are available
• Scientific investigation
  – Reproducing results
  – Comparing methods

1999 IDEVAL Results
Top 4 of 18 systems at 100 false alarms

| System | Attacks detected/in spec |
|---|---|
| Expert 1 | 85/169 (50%) |
| Expert 2 | 81/173 (47%) |
| Dmine | 41/102 (40%) |
| Forensics | 15/27 (55%) |

Partially Simulated Net Traffic
• tcpdump records sniffed traffic on a testbed network
• Attacks are “real”: mostly from publicly available scripts/programs
• Normal user activities are generated based on models similar to military users

Related Work
• IDEVAL critique (McHugh, 00) mostly based on methodology of data generation and evaluation
  – Did not include “low-level” analysis of background traffic
• Anomaly detection algorithms
  – Network based: SPADE, ADAM, LERAD
  – Host based: t-stide, instance-based

Problem Statement
• Does IDEVAL have simulation artifacts?
• If so, can we “fix” IDEVAL?
• Do simulation artifacts affect the evaluation of anomaly detection algorithms?

Simulation Artifacts?
• Comparing two data sets:
  – IDEVAL: Week 3
  – FIT: 623 hours of traffic from a university departmental server
• Look for features with significant differences

# of Unique Values & % of Traffic

| Inbound client packets | IDEVAL | FIT |
|---|---|---|
| Client IP addresses | 29 | 24,924 |
| HTTP user agents | 5 | 807 |
| SSH client versions | 1 | 32 |
| TCP SYN options | 1 | 103 |
| TTL values | 9 | 177 |
| Malformed SMTP | None | 0.1% |
| TCP checksum errors | None | 0.02% |
| IP fragmentation | None | 0.45% |

Growth Rate in Feature Values
[Figure: number of values observed (vertical axis) versus time (horizontal axis), with one curve for FIT and one for IDEVAL.]

Conditions for Simulation Artifacts
1. Are attributes easier to model in simulation (fewer values, distribution fixed over time)?
  • Yes (to be shown next).
2. Do simulated attacks have idiosyncratic differences in easily modeled attributes?
  • Not examined here

Exploiting Simulation Artifacts
• SAD – Simple Anomaly Detector
• Examines only one byte of each inbound TCP SYN packet (e.g. TTL field)
• Training: record which of 256 possible values occur at least once
• Testing: any value never seen in training signals an attack (maximum 1 alarm per minute)

SAD IDEVAL Results
• Train on inside sniffer week 3 (no attacks)
• Test on weeks 4-5 (177 in-spec attacks)
• SAD is competitive with top 1999 results

| Packet Byte Examined | Attacks Detected | False Alarms |
|---|---|---|
| IP source third byte | 79/177 (45%) | 43 |
| IP source fourth byte | 71 | 16 |
| TTL | 24 | 4 |
| TCP header size | 15 | 2 |

Suspicious Detections
• Application-level attacks detected by low-level TCP anomalies (options, window size, header size)
• Detections by anomalous TTL (126 or 253 in hostile traffic, 127 or 254 in normal traffic)

Proposed Mitigation
1. Mix real background traffic into IDEVAL
2. Modify IDS or data so that real traffic cannot be modeled independently of IDEVAL traffic

Mixing Procedure
• Collect real traffic (preferably with similar protocols and traffic rate)
• Adjust timestamps to 1999 (IDEVAL) and interleave packets chronologically
• Map IP addresses of real local hosts to additional hosts on the LAN in IDEVAL (not necessary if higher-order bytes are not used in attributes)
• Caveats:
  – No internal traffic between the IDEVAL hosts and the real hosts

IDS/Data Modifications
• Necessary to prevent independent modeling of IDEVAL
  – PHAD: no modifications needed
  – ALAD: remove destination IP as a conditional attribute
  – LERAD: verify rules do not distinguish IDEVAL from FIT
  – NETAD: remove IDEVAL telnet and FTP rules
  – SPADE: disguise FIT addresses as IDEVAL

Evaluation Procedure
• 5 network anomaly detectors on IDEVAL and mixed (IDEVAL+FIT) traffic
• Training: Week 3
• Testing: Weeks 4 & 5 (177 “in-spec” attacks)
• Evaluation criteria:
  – Number of detections with at most 10 false alarms per day
  – Percentage of “legitimate” detections (anomalies correspond to the nature of attacks)

Criteria for Legitimate Detection
• Anomalies correspond to the nature of attacks
• Source address anomaly: attack must be on a password-protected service (POP3, IMAP, SSH, etc.)
• TCP/IP anomalies: attack on network or TCP/IP stack (not an application server)
• U2R and Data attacks: not legitimate

Mixed Traffic: Fewer Detections, but More are Legitimate
[Figure: bar chart of detections out of 177 at 100 false alarms, total versus legitimate, for PHAD, ALAD, LERAD, NETAD, and SPADE.]

Concluding Remarks
• Values of some IDEVAL attributes have small ranges and do not continue to grow over time. Lack of “crud” in IDEVAL.
• Artifacts can be “masked/removed” by mixing in real traffic.
• Anomaly detection models from the mixed data achieved fewer detections, but a higher percentage of legitimate detections.

Limitations
• Traffic injection requires careful analysis and possible IDS modification to prevent independent modeling of the two sources.
• Mixed traffic becomes proprietary. Evaluations cannot be independently verified.
• Protocols have evolved since 1999.
• Our results do not apply to signature detection.
• Our results may not apply to the remaining IDEVAL data (BSM, logs, file system).

Future Work
• We used only one data set of real traffic, from a university: analyze headers in publicly available data sets
• We analyzed only features that can affect the evaluated algorithms: examine more features for other AD algorithms

Final Thoughts
• Real data
  – Pros: Real behavior in real environment
  – Cons: Can’t be released because of privacy concerns (i.e., results can’t be reproduced or compared)
• Simulated data
  – Pros: Can be released as benchmarks
  – Cons: Simulating real behavior correctly is very difficult
• Mixed data
  – A way to bridge the gap

Tough Questions from John & Josh?
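
Sketch: timestamp adjustment and interleaving
The Mixing Procedure slide calls for adjusting the timestamps of the real traffic to 1999 and interleaving packets chronologically. The following is a minimal Python sketch of just those two steps, assuming each trace is already sorted by time and is held in memory as tuples. The tuple layout, the function names, and the sample values are hypothetical; IP address mapping and actual capture-file parsing are left out.

```python
import heapq
from typing import Iterable, Iterator, Tuple

# Hypothetical packet record: (timestamp in seconds, source label, raw bytes).
Packet = Tuple[float, str, bytes]


def shift_timestamps(packets: Iterable[Packet], offset: float) -> Iterator[Packet]:
    """Add a constant offset to every timestamp, preserving packet order."""
    for timestamp, label, data in packets:
        yield (timestamp + offset, label, data)


def mix_traces(ideval: Iterable[Packet], real: Iterable[Packet],
               offset: float) -> Iterator[Packet]:
    """Interleave two time-sorted traces chronologically.

    Assumption: both inputs are already sorted by timestamp.
    heapq.merge keeps the inputs lazy and, on equal timestamps,
    yields the packet from the first trace first.
    """
    shifted = shift_timestamps(real, offset)
    return heapq.merge(ideval, shifted, key=lambda packet: packet[0])


# Illustrative (made-up) traces; the offset moves the real trace into the IDEVAL time range.
ideval_trace = [(100.0, "ideval", b"a"), (105.0, "ideval", b"b")]
real_trace = [(5000.0, "fit", b"x"), (5003.0, "fit", b"y")]
mixed = list(mix_traces(ideval_trace, real_trace, offset=-4898.0))
for packet in mixed:
    print(packet)
```

A single constant offset keeps the inter-packet timing of the real trace intact, which seems the least invasive way to move it into the IDEVAL time range.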