21852

>> Nikolai Tillmann: Hello. So it's my pleasure to have Carl Friedrich Bolz here today from the University of Dusseldorf in Germany. He's been working on PyPy. He's in fact one of the developers or the core developer of the PyPy project nowadays. One of the core developers. Okay. So he will talk about the approach in PyPy and dynamic languages in tracing JIT. He's visiting us for four days. If you want to talk to him later on, tell me and we'll schedule a meeting with him.

>> Carl Friedrich Bolz: Thanks very much. As Nikolai said, I've been working on PyPy for the last pretty much six years now.

And I learned a few things about implementing dynamic languages during that time.

For this talk I sort of tried to summarize a bit what specific problems of implementing dynamic languages are. And this is very much thinking in progress.

So if you have any comments or questions, just feel free to interrupt me at any time. So the talk is about how to implement dynamic languages in a good way, with a focus on complicated dynamic languages because most of the currently interesting ones are rather complex. Also in the context where you can't just throw arbitrarily much money at the problem. Like an academic project or an open source project or even a domain-specific language.

I'm mostly going to focus on [inaudible] object-oriented languages, and I'm not going to talk at all about wafering [phonetic] issues because that's just yet another large field.

So the goals of such implementations are that you want to reconcile flexibility and maintainability of the implementation, because languages quite often evolve. You also want your implementation to be simple, because your team is going to be small. And of course you want performance.

So the talk is going to be split into three parts. In the first part I'm going to summarize and analyze the specific difficulties that you get if you have to implement a dynamic language.

In the second part, I'm going to compare and contrast various approaches that people have chosen to implement a dynamic language. And in the third part I'm going to talk about the PyPy project that I'm involved with.

So if you want to implement a dynamic language, a few things are just as if you implement any other language. Your language is going to have a syntax. So you need some kind of lexer and [inaudible] you often need some compiler component, whether it's to bytecode or something else. You'll need a good garbage collector and some way to lay out your objects in memory.

Those are often rather similar to other languages so I'm not going to discuss all of them in detail.

Your language implementation will need a way to deal with the control flow of your language. And this is trivially and slowly done in an interpreter, whether it's based on an AST or bytecode. And if you go this path, using an AST or bytecode, you have a technically well understood problem.

Sometimes the control flow of the language is going to be a bit different from the sort of standard languages, if you look at generators in Python, or some languages have really complex demands, but it's quite rare. An example for this would be Prolog, where you have backtracking and nondeterminism, but such examples are rare.

So the very specific problem that you need to address when you want to make your dynamic language efficient is the problem of late binding, because in dynamic languages all your look-ups can only be done at runtime. Just looking at the source code, you cannot bind any of your names.

And historically dynamic languages have moved to later binding times. For example, if you compare Smalltalk with, say, Python, in Smalltalk a few things are bound at compile time, like the shape of your object is fixed. And in Python the shape of the object can be completely arbitrary. And the mechanisms that exist in the various languages are greatly different. So even though on the surface the languages might look similar, the real details vary greatly between all the various languages. And the mechanisms are also very ad hoc, because the first implementations of dynamic languages were mostly interpreters, so people said, oh, let's do this, it's easy. But then, of course, if you have to think about how to compile this, then you have big problems.

Let's look at some examples. In Python all the following things are late bound. Global names, modules can be loaded dynamically at runtime. Instance variables can be completely changed, for instance. Methods are late bound. You can even change the methods of a class. You can change what class an object has. Change the class hierarchy, you can say this class now has this other base class and so on.
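A minimal Python sketch of some of the late-binding examples just listed. The class names (Duck, Robot, Base, Machine) are made up for illustration and do not come from the talk.

```python
class Duck:
    def speak(self):
        return "quack"

class Base:
    pass

class Robot(Base):
    def speak(self):
        return "beep"

class Machine:
    def power(self):
        return "on"

d = Duck()
first = d.speak()

# Methods are late bound: replacing the method on the class
# changes what an already existing instance does.
Duck.speak = lambda self: "QUACK"
second = d.speak()

# Instance variables can be added at any time.
d.volume = 11

# The class of an existing object can be changed.
d.__class__ = Robot
third = d.speak()

# The class hierarchy can be changed: Robot gets another base class.
Robot.__bases__ = (Machine,)
fourth = d.power()
```

None of these look-ups can be resolved by reading the source of `d.speak()` alone, which is the point being made above.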

A special case of late binding is dispatching. And dispatching is the question of how do I implement the operations that I want to do on my objects. And dispatching is, again like late binding, quite complex.

And the differences between the languages are very big. So usually all the operations that look like one operation on the language level are internally split up into a large number of look-ups, called dispatches, and usually that means that one single operation internally has a huge space of paths.

And most of the paths are then actually uncommon, but you still need to deal with them somehow. And just to give you an example of the complexity involved, let's look at attribute reads in Python. So the details of this are not so important. What I want to get more at is how complex things can really become.

When you read an attribute M of an instance X in Python which is also the mechanism used for method calls, the interpreter has to do or the implementation has to do the following steps.

First you have to check for the special method __getattribute__ on the object. If this special method is there, you just call it and you're done. If it's not there, the lookup proceeds as follows. First you look in the object directly, whether the object has an attribute of this name. If it's there, return it.

If it's not there, on the object you are going to look in the classes of the object. And for that you look at the method resolution order of the object and then just look in each class's dictionary for the attribute. If it's there, return it. If not, go on.

If the attribute is found in a class, you're going to call a special method on the attribute value, and if the attribute is not found, there's yet another method to override this behavior, and then in the end, finally, if it's still not found, you're going to raise an AttributeError.

Something that looks rather simple on the surface, well, just read a field out of an object.

Internally it's really a large space of different paths.
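The steps just described can be sketched roughly as follows. This is a simplified model that follows the order given in the talk, not CPython's exact algorithm (which, for instance, treats some class attributes with priority over the instance). The function name `lookup_attribute` is hypothetical; mapping the "special method on the attribute value" to `__get__` and the override hook to `__getattr__` is my reading, not something the speaker names.

```python
def lookup_attribute(obj, name):
    """Simplified model of reading obj.<name>, following the talk's steps."""
    cls = type(obj)

    # Step 0: a user-defined __getattribute__ takes over completely.
    if cls.__getattribute__ is not object.__getattribute__:
        return cls.__getattribute__(obj, name)

    # Step 1: look in the object itself.
    instance_dict = getattr(obj, "__dict__", {})
    if name in instance_dict:
        return instance_dict[name]

    # Step 2: walk the method resolution order and look in each class dict.
    for klass in cls.__mro__:
        if name in klass.__dict__:
            value = klass.__dict__[name]
            # Step 3: found in a class, so call the special method on the
            # attribute value if it has one (this is how methods get bound).
            getter = getattr(type(value), "__get__", None)
            if getter is not None:
                return getter(value, obj, cls)
            return value

    # Step 4: not found anywhere, give the fallback hook a chance.
    fallback = getattr(cls, "__getattr__", None)
    if fallback is not None:
        return fallback(obj, name)

    # Step 5: give up.
    raise AttributeError(name)


class Point:
    kind = "class-level"

    def norm1(self):
        return abs(self.x) + abs(self.y)

    def __getattr__(self, name):
        return "fallback:" + name


p = Point()
p.x, p.y = 3, -4
```

Even this reduced version has five distinct exits for what reads as a single operation in the source.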

And the same is true if you look at integer addition. This image is greatly simplified. But basically if you want to add two objects, a large number of things can happen. So basically you can add numerical objects like integers, floats, longs or complex numbers.

But you can also add strings or concatenate lists or tuples, or a user-defined class can completely override what addition should do on these objects.

And just a small part of the space like if you add -- if you add two objects A and B and A is an integer and B is an integer, you're going to get an integer, and perform integer addition.

And then, of course, there are combinations, like if you add a long to an integer you'll get a long. And if you want to read the graph, as I tried in the beginning, you need a large monitor.

So as we saw, one dispatch operation alone is rather complex. The problem is, of course, that if you do many dispatch operations in a sequence, say you have two additions, then you get a combinatorial explosion of paths.

So if you add A plus B and then add C, then the dispatch decision that the first operation takes will influence the dispatching of the second operation. Because, for example, if A and B are integers, then the path that the second addition takes is constrained by this knowledge. And so somehow, if you want to have a very efficient implementation, you need to deal with this combinatorial explosion of paths.

A further source of inefficiency is the boxing of primitive values. Because you don't know the type of any of your objects. So many implementations actually have to box all objects, including primitives like integers or floats.

And, yes, I'm not going to talk about tagging here or other tricks. And the fact that your objects are going to be boxed puts a lot of pressure on your garbage collector, because if you just do a bit of arithmetic you're going to create millions and millions of short-lived arithmetic boxes and so you need a good garbage collector which is clear anyway.

But you would also like, by your compiler or by your implementation, to optimize away some of this overhead. Because if you have arithmetic, the lifetime of your boxes is known. So if you have again A plus B plus C, the intermediate result A plus B has a limited lifetime, because after the second addition it's no longer going to be used.

So, right, if you have A plus B plus C, and A plus B is an int and C is also an int, then the box made for A plus B will die pretty quickly. And so what you would like is that the result of A plus B is not allocated.

The problem with this is that if you just look at A plus B, there are also parts where the result escapes and something arbitrarily happens to it because C could be a user defined class that overrides addition.

So it could override addition to store the left operand into a file or into a globally [inaudible] place or whatever. So you are always going to have a path where you cannot get rid of this allocation of A plus B. And now I get to the last problem, which is a bit of a side problem, but it's good to keep it in mind anyway.
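To make the escaping path of the addition example concrete, here is a small Python sketch. The class `Spy` and the list `leaked` are hypothetical names chosen for illustration; the class overrides addition so that the intermediate result of A plus B is stored in a global place.

```python
leaked = []  # stands in for "a file or some global place"

class Spy:
    """User-defined class that overrides addition from the right-hand side."""
    def __radd__(self, left):
        leaked.append(left)   # the left operand escapes here
        return self

a, b = 1, 2

# Common path: everything is an int, the box for a + b dies immediately.
total = a + b + 3

# Rare path: int addition does not know Spy, so Python falls back to
# Spy.__radd__, and the intermediate result a + b is kept alive.
result = a + b + Spy()
```

An implementation that wants to avoid allocating `a + b` on the common path still has to produce a real object on the rare one.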

Many dynamic languages have reified frame access. What that means is that you can access the frames of functions that are currently running or have been run at some point in the past, and use reflection on them.

You can say, give me the value of the local variable A in this function. And languages that do that are Python, Smalltalk and Ruby and quite a few others. And the reason why you want this is, on the one hand, you can do all sorts of interesting hacks, and on the other hand, one of the nice properties for implementations is that you can write the debugger for the language in the language itself, which, for things like Smalltalk that really have a closed approach, is highly desirable.
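A short Python illustration of "give me the value of the local variable A in this function". It uses `sys._getframe`, which is documented as an implementation detail of CPython, so treat it as a sketch rather than portable code. The function names are made up.

```python
import sys

def peek_caller_local(name):
    """Read a local variable out of the frame of whoever called us."""
    caller_frame = sys._getframe(1)      # frame object of the caller
    return caller_frame.f_locals[name]

def compute():
    a = 41
    # The callee inspects our local variable 'a' reflectively.
    return peek_caller_local("a") + 1

answer = compute()
```

A debugger written in the language itself is essentially a larger version of `peek_caller_local`.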

Implementing these reified frames is trivial in an interpreter, because the interpreter is going to need the frames anyway to store its information. But the question is how should reified frames work efficiently if a compiler is used.

I think not a lot of implementations actually solve this problem. Okay. So to summarize a bit the difficulties that we identified, you need a way to implement the control flow of your language. You need to implement the various mechanisms for late binding and dispatching.

Ideally you want ways to optimize sequences of dispatches and also to get rid of the intermediate boxing in the process, and then you should keep reified frames in the back of your mind.

Okay. So let's pause here for a moment and are there any questions about the first few?

Or comments or additions or.

If everybody is happy with this list, if somebody wants to add something. I will continue a bit.

So in the next part I want to compare various approaches that are taken for implementing dynamic languages and look at how well they fit these requirements. And of course if you implement a language, quite often you'll implement some sort of virtual machine, and the language to write virtual machines is C or C++. So a lot of these approaches are using C or C++ as an implementation language, and, well, the virtual machine can be purely interpretation-based, can have some sort of static compiler, or can have a just-in-time compiler.

And the sort of opposite approach is to reuse an existing virtual machine that has already been implemented and is highly tuned and the candidates for that are, of course, the JVM or the CLR.

So let's look at some examples. If you write your own virtual machine in C or C++, there's, for example, CPython and Ruby, which are the standard Python and Ruby implementations and which are just C-based interpreters. There's V8, which is Google's JavaScript implementation, which is a method-based JIT, and there's TraceMonkey, a tracing just-in-time compiler, and there are lots and lots of others, of course.

On the other hand you can build on top of an existing virtual machine, and for that the examples would be Jython and IronPython, which are Python implementations for Java and the CLR, and the equivalents, JRuby and IronRuby. And there's a large ecosystem of various implementations that try to target the JVM: Prologs, Lisps, Smalltalks, all kinds of things.

Let's first look at what happens when you implement your VM in C. The first problem you're going to hit if you program in C is that it's quite hard to get all of the sort of meta goals that I listed in the beginning: flexibility, maintainability, simplicity and performance.

And in the Python case, this is rather obvious.

So on the one hand there's CPython, which is a very simple, flexible bytecode virtual machine. It's evolvable. It's been maintained for many years but it's really slow.

Psyco, on the other hand, which is an extension to CPython, is a just-in-time compiler.

Extremely complex. It's hard to change. But it has excellent performance. And on the feature side of the spectrum there's Stackless, which is a fork of Python and adds a nice feature, very lightweight microthreads.

But it was never incorporated back into CPython for complexity reasons. So the CPython developers said, yes, this is very nice but it's going to make our VM much more complex so we don't want it.

Right. So if you use C or C++ to write an interpreter, well, the implementation itself is going to be mostly very easy. How to write interpreters is a quite well understood problem.

It's portable between different architectures if you don't do any crazy tricks, and it's going to be slow. So basically if we go back to our requirements, an interpreter in C is not going to help you with many of them.

Yes, you can get the semantics right and it's quite nice from the source perspective, but nearly nothing is going to be fast. The only thing that's sort of easy is that, well, you can get reified frames. You have them, but again there's no [inaudible]. So the first reflex of many people who hear, well, our interpreter is slow, is always: it's the bytecode dispatch overhead, we want to get rid of that.

And thus they write a static compiler that is reusing the object model of the interpreter.

And that gets nicely rid of the interpretation overhead, but it turns out that the interpretation overhead, like the bytecode dispatch overhead, is not really all that bad. So you get a two times speedup, which sounds very nice, but not if you look at the fact that dynamic languages are usually 60 or more times slower than C or something.

And basically in a static compiler it's very, very hard to actually do anything about dispatch late binding or boxing, because the information you get just by looking at the source code is rather minimal.

And any sort of static analysis is extremely hard and mostly doesn't work in realistic programs. And in the Python case, well, there are lots of people have had this thought.

It's all the bytecode's fault.

So there are lots and lots of projects that try it, and the most interesting one that's still going on is Cython which is basically a compiler from a large subset of Python to C. And there are lots of discontinued experiments that have failed.

So, right, in a static compiler you're not going to win much. Your control flow is going to be faster but all the other problems are not solved. Most static compilers that I know just give up on reified frames. So to fundamentally attack some of the problems you will have to use some sort of dynamic compiler.

And if you need a dynamic or just in time compiler, then you open a whole new can of worms.

Your VM is going to be significantly more complex. You will have to compile your functions to assembler. You have to do type profiling and you then have to do inlining based on that. You need general optimizations on the resulting functions and you need complex back ends per architecture that you want to support.

And basically all this is, from an engineering perspective, extremely hard to pull off for a volunteer team. It's not something that you just write together with three people on a weekend.

And so the typical examples for method-based just-in-time compilers are, well, historically the Smalltalk and the Self JITs, and they were extremely good. They took a long time to write. And the more modern examples would be V8 and partially JagerMonkey.

JagerMonkey is a bit of a strange case.

The reason why compilers are so much more complex than interpreters is that compilers are an inherently bad encoding of semantics. So basically the compiler will have to encode all the complex cases that your language has, all the strange paths, all the rare things that might conceivably happen.

And often needs a huge bag of tricks to support the common case as well. And interaction between all these tricks can be very complex and hard to foresee.

And thus this encoding of the language semantics into the compiler is quite often obscure and hard to change. And if you look at Psyco, Psyco is an excellent example for the slide. Psyco is a one man project, or rather a one genius project. It was written by a guy over the course of two years, and in the end it was extremely fast.

But then it turned out that it was so complex that on the one hand nobody else ever managed to understand it. So a few people tried to help develop it but didn't really get anywhere. And on the other hand CPython continues to be developed.

I mean CPython always grows new features and Psyco couldn't really keep up with the rapid development of CPython. So many of CPython's newer features are not supported, because even the author at one point just gave up.

And it's also not ported to anything other than 32 bit Intel machines and it never will be, because it would just mean a complete reengineering of the whole thing.

So one specific problem of sort of method-based just in time compilers that I want to point out here is the problem of dispatching dependencies.

Let's go back to my favorite example of A plus B plus C. If you think internally that you have some generic operation add that's adding two numbers, then internally what the JIT has to compile is something like this. First you do an add of A and B and store it into an intermediate result X. And then you add X and C to get R. If you just inline these operations here, in a language that, for example, just supports integers and floats, you get something quite complex.

First you have the case of A could be an integer. B could be an integer, then you perform an integer addition, otherwise you perform a float addition.

So at this point you already lost, if you just do inlining you already lost the information what X is. So at this point it could either be a float or an integer. And then if you just -- the second addition you're not making use of the fact that you already know in theory what X is. Based on which of the paths you took to get there. So to improve this, you would have to duplicate this code and move it into the specific paths, but this is a rather, in general, a rather complex optimization that you then need to implement somehow.
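The difference between plain inlining and duplicating the second addition into the specific paths can be sketched in Python as below. This is an illustration under assumptions of mine: a toy language with only ints and floats, and a helper `run_counting` (hypothetical) that counts how many type checks each variant performs.

```python
def run_counting(fn, *args):
    """Run fn and return (result, number of type checks it performed)."""
    counter = [0]

    def is_int(value):
        counter[0] += 1
        return type(value) is int

    return fn(is_int, *args), counter[0]

def add3_merged(is_int, a, b, c):
    # First add, inlined.
    if is_int(a) and is_int(b):
        x = a + b                     # integer addition
    else:
        x = float(a) + float(b)       # float addition
    # The two paths merge here, so the type of x is forgotten
    # and has to be checked again for the second add.
    if is_int(x) and is_int(c):
        return x + c
    return float(x) + float(c)

def add3_split(is_int, a, b, c):
    # The second add is duplicated into each path of the first one,
    # so each copy knows the type of x without re-checking it.
    if is_int(a) and is_int(b):
        x = a + b                     # x is known to be an int
        if is_int(c):
            return x + c
        return float(x) + float(c)
    x = float(a) + float(b)           # x is known to be a float
    return x + float(c)

merged_ints = run_counting(add3_merged, 1, 2, 3)
split_ints = run_counting(add3_split, 1, 2, 3)
merged_floats = run_counting(add3_merged, 1.5, 2, 3)
split_floats = run_counting(add3_split, 1.5, 2, 3)
```

Both variants compute the same results; the split version simply never asks about `x` again, at the price of duplicated code.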

So to look at how method-based JITs meet the requirements we get a list a bit like this.

You get good control flow support. You can handle your late binding and dispatch given enough work. Dependencies are rather hard, but it should still be possible somehow. And boxing, given enough manual work, you can probably write an optimization that tries to get rid of some of the boxing. Again reified frames are hard.

So along come tracing JITs, and how many here know how tracing JITs work and have been exposed to enough of Nikolai's talks? Okay. So, well, I'm still going to give an introduction and you can tell me at which point you disagree.

So tracing JIT compilers are a relatively recent approach to writing just-in-time compilers.

And they have been pioneered by Michael Franz and Andreas Gal, originally for Java VMs.

But then they turned out to be also well suited for dynamic languages.

And examples for tracing JITs are TraceMonkey and JIT [phonetic], as well as PyPy, sort of. And why sort of?

They're clearly tracing JITs but they're still quite different from those.

So tracing JIT compilers, they took this idea from the Dynamo project. Dynamo was a dynamic rewriter of assembler. And the nice thing about tracing JIT compilers is that it's conceptually much simpler than the type profiling that a method-based JIT has to do.

So the basic assumptions of a tracing JIT are, A, the program will spend most of its time executing a loop. Well, that's kind of clear. And, B, several iterations of a loop are somehow likely to behave similarly. So the code paths through a loop that two iterations of the loop take are similar.

And so what a tracing VM does is it's usually a mixed mode execution environment. That means in the simplest form it will contain both an interpreter and the tracer. And when you start running your program at first everything is simply interpreted.

And while you interpret your program you do some lightweight profiling after a while you find out this loop over there it seems to be executed very often. I want to do something about that.

And at this point you start generating code, specifically only for this loop. And you do this by starting to produce a trace. So what's a trace? A trace is basically just the sequential, I mean the linear list of operations. And it's produced by recording every operation that the interpreter executes. So basically the tracer observes what the interpreter is running, writes it down. And then in the end at some point it will turn this list of operations into machine code.

And tracing stops usually after you reach a position in the program that you've already seen before. So if you trace a loop, you start at the beginning, write down your operations, at some point you'll reach a position in the program you've seen before and then tracing stops and the trace thus ends with a jump to the beginning, to its own beginning.

Right? So what happens if there is control flow in the loop? So, for example, if there are if statements within the loop you cannot just ignore the fact that you just took a decision and just recorded one path. So what you do is you -- in the trace you encode all these decisions and you encode them in guards and basically guards are points where the control flow could have diverged to another path.

And then, well, the next steps are code generation and then execution, and given that the list of operations is linear, it's very easy to turn into machine code because you just need to support linear paths, your allocator can be simple and so on.

You can also just immediately start executing the loop you compiled and you'll do it until a guard fails. And a guard failure means that the assumptions that you made to produce the loop are no longer true.

So afterwards you will simply go back to interpret the program at the same point. So, of course, in practice there will be some control flow. So if a guard fails very often things are inefficient because you go back to the interpreter all the time. So after a while if a guard fails often enough you just attach a new trace to this guard that starts from there.
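The mixed-mode scheme described so far (interpret, find a hot loop, record a linear trace with guards, run the trace until a guard fails, fall back to the interpreter) can be sketched as a toy in Python. Everything here is an assumption made for illustration: the tiny instruction set, the names `PROGRAM`, `step`, `run_trace` and `run`, and the hot threshold of 3. The "compiled" trace is just replayed by a loop instead of being turned into machine code, and attaching new traces to failing guards is left out.

```python
# Toy loop computing total = 0 + 1 + ... + (n - 1).
PROGRAM = [
    ("lt", "c", "i", "n"),            # 0: c = i < n   (loop header)
    ("branch_false", "c", 5),         # 1: leave the loop when c is false
    ("add", "total", "total", "i"),   # 2
    ("add", "i", "i", "one"),         # 3
    ("jump", 0),                      # 4: back to the loop header
    ("halt",),                        # 5
]

def execute(op, env):
    """Run one straight-line operation; shared by interpreter and trace."""
    kind, dst, left, right = op
    if kind == "lt":
        env[dst] = env[left] < env[right]
    else:  # "add"
        env[dst] = env[left] + env[right]

def step(pc, env, trace):
    """Interpret one instruction; if tracing is on, write down what happened."""
    op = PROGRAM[pc]
    if op[0] == "jump":
        return op[1]
    if op[0] == "branch_false":
        cond = env[op[1]]
        if trace is not None:
            # A decision was taken here: record it as a guard, together
            # with the position where the interpreter should resume.
            trace.append(("guard", op[1], cond, pc))
        return pc + 1 if cond else op[2]
    execute(op, env)
    if trace is not None:
        trace.append(op)
    return pc + 1

def run_trace(trace, env):
    """Run the linear trace in a loop until one of its guards fails."""
    while True:  # the trace ends with an implicit jump to its own beginning
        for op in trace:
            if op[0] == "guard":
                _, var, expected, resume_pc = op
                if env[var] != expected:
                    return resume_pc      # guard failure: back to interpreter
            else:
                execute(op, env)

def run(env, hot_threshold=3):
    pc, visits, trace, compiled = 0, 0, None, None
    while PROGRAM[pc][0] != "halt":
        if pc == 0:                       # we are at the loop header
            if trace is not None:         # seen this position before: stop tracing
                compiled, trace = trace, None
            if compiled is not None:
                pc = run_trace(compiled, env)
                continue
            visits += 1                   # lightweight profiling
            if visits == hot_threshold:
                trace = []                # the loop is hot: start recording
        pc = step(pc, env, trace)
    return env, compiled

final_env, recorded = run({"i": 0, "total": 0, "n": 10, "one": 1})
```

The recorded trace contains no jump and no branch, only the comparison, one guard, and the two additions, which is what makes the later code generation step simple.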

So how does a tracing JIT deal with dispatching? The trace typically contains the bytecode operations that the interpreter performed, and the bytecode, as we saw, has complex internal semantics. For example, you would see an add, and the add internally does rather complex things.

So what the optimizer typically does is specialize this trace to the specific types that have been seen during tracing. So, for example, if you trace a loop and the specific instance of this loop execution is working with integers, you will type-specialize this trace to just contain the paths where you deal with integers.

And to do that, the optimizer needs to duplicate some of the language semantics. It needs to know that add has these paths with integers and these paths with floats.

And the nice thing about tracers is that it deals with dependencies with subsequent dispatches very well. Because the paths through your loop are split very aggressively.

Basically, in the simplest approach, control flow merging is happening only at the beginning of a loop. And that means that after a type check the rest of the trace can assume the type of that variable.

And that is not really true if you do inlining because if you do inlining your paths will merge after the inlined method and you lose the information that you gained about the types again.

And the reason why the tracer can be that aggressive about things is that it has to deal only with those paths that it has actually observed in practice. And in practice the number of paths that are actually being run in the program is small, even though the potential space of paths is rather large.

Similarly, you can deal with boxing in a tracing JIT. You can try to do escape analysis of operations only within the trace. So if you see an allocation, and the thing is used for a bit and then it stops being used, you can try to remove the allocation.

And you can do this because the trace is not containing the possibility that something very strange is happening. So you don't need -- you don't even see the paths where the object does escape.
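A rough Python sketch of removing allocations within a trace, under assumptions of mine: the trace is a list of tuples in which every name is assigned only once, and the operation names (`box`, `unbox`, `int_add`, `escape`) and the function `remove_nonescaping_boxes` are hypothetical. A box that never appears in an `escape` operation within the trace is not allocated at all; reads from it are replaced by the raw value it wrapped.

```python
def remove_nonescaping_boxes(trace):
    """Drop box/unbox pairs for boxes that never escape within the trace."""
    escaping = {op[1] for op in trace if op[0] == "escape"}
    virtual = {}   # box name -> name of the raw value it would have wrapped
    alias = {}     # unboxed name -> raw name it is known to be equal to
    optimized = []
    for op in trace:
        kind = op[0]
        if kind == "box" and op[1] not in escaping:
            # Do not allocate; just remember what the box would contain.
            virtual[op[1]] = alias.get(op[2], op[2])
        elif kind == "unbox" and op[2] in virtual:
            # Reading from a removed box yields the remembered raw value.
            alias[op[1]] = virtual[op[2]]
        else:
            # Keep the operation, rewriting its arguments through the aliases.
            args = tuple(alias.get(arg, arg) for arg in op[2:])
            optimized.append((kind, op[1]) + args)
    return optimized

# Trace of a + b + c on the all-integer path; only the final result escapes.
trace = [
    ("int_add", "t1", "a", "b"),
    ("box", "x", "t1"),        # box for the intermediate result a + b
    ("unbox", "t2", "x"),
    ("int_add", "t3", "t2", "c"),
    ("box", "r", "t3"),
    ("escape", "r"),           # the final result is stored somewhere
]
optimized = remove_nonescaping_boxes(trace)
```

Because the trace only contains the path that was actually observed, the rare path on which the intermediate box would escape simply does not appear, so no whole-program analysis is needed.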

So some more technical advantages of a tracing JIT are that they can be added to an existing interpreter unobtrusively, which is indeed what happened with TraceMonkey and JIT [phonetic], and also the interpreter does a lot of the work of the tracer.

You get automatic inlining for free, because if you trace a function call you will just trace the inner operations and inline the function in that way. And it deals very well with finding the few common paths through the large path space.

The bad points are, on the one hand, that you start with an interpreter, which is slow, and then the switching between interpretation and machine code execution is also slow.

You might have problems with really complex control flows because the sort of loop tangles, trace tangles if you have really complex control flow are really not that optimal.

And then another problem is you have granularity issues. Often the interpreter bytecode is a bit too coarse because the interpreter bytecode does not show you any of the internal dispatching that is going on within the bytecode.

So in theory you would like a bit more detailed traces. Right. And so in this case the optimizer needs to carefully read the decision tree that has been going on in the interpreter.

So if we look at tracing JITs and how they meet the requirements, well, control flow works rather well apart from really complex cases.

Late binding can be handled quite well. Dispatching can be handled. Dependencies are greatly improved because you aggressively split your paths, and unboxing optimizations are much simpler because you can focus on just the trace. And there's also a nice trick about reified frames. As soon as you try to reflectively introspect the frames you simply leave the machine code execution and go back to the interpreter. And I think indeed this is something that TraceMonkey is doing.

And that means that the reflective access is not going to be fast, but in practice that doesn't really matter. Okay. So the last common approach is implementing your language on top of an object-oriented virtual machine.

And if you do that, usually the approach that you take is you implement on top of the JVM or CLR, usually by compiling to the bytecode of that VM. You also have to implement the object model in either Java or C#. And this brings its own set of benefits and problems.

And I'm going to look specifically at Jython and IronPython in the following. The benefits of implementing on top of one of these VMs is clear.

The first very nice thing is that you work at a much higher level of implementation than in C. You don't need to deal with lots of low level details that you need in C, like the VM supplies you with a very good garbage collector. It supplies you with a JIT and you have much better interoperability than the C level provides.

In the Python case, both Jython and IronPython integrate very well with their host VM. That means you can use Java and .NET libraries from Python very nicely. As a side point, they have much better threading than all other Python implementations.

Of course, you also get a set of problems. And the biggest problem is that it's quite often very hard to map the specific concepts you have in your language to what the host virtual machine provides you with.

And due to this semantic mismatch, your performance is often not improved at all, because the object model that you implement is very untypical for the code that you usually write in Java or C#.

In particular, your object model typically has many megamorphic call sites, sites that have tons and tons of receivers, and the JIT of your underlying VM is not going to like that very much.

Also, I mean, the JVM has very good escape analysis, I don't know about the CLR but it's not really helping you with boxing at all because there's always paths where your object is escaping.

And to improve things, you can do very careful manual tuning, but since the VM also doesn't give you a lot of customization hooks or feedback, this manual tuning is extremely tedious.

And, right, in Jython and IronPython it's quite clear that they're not faster than CPython even though people hoped they would be. And IronPython is cheating in that it really doesn't implement the feature of reified frames.

And in the Python case, this is even still sort of easy, but if you look at an extreme example where the semantic mismatch of the language you want to host is much larger, like Prolog, it's much, much harder to really get performance out of that.

Most Prolog implementations in Java are impressively slow. Okay. So this slide is mostly about the JVM because I don't really know enough about the CLR to say something there.

The problems that I described, the JVM is trying to address some of them in the future.

And the JVM in particular is trying to add support for more languages by implementing tail calls and invokedynamic, but it's not really there yet in the current virtual machines.

And again I'm going to predict that good performance, you're not going to get good performance for free. You still have to do a lot of manual tuning and careful controlling of what the VM is doing with your object model.

And those VMs are not really meant for people who care about the exact shape of your assembler, which is what you do if you want to implement the language.

And JRuby felt that very much. JRuby has tried to be a really fast -- Ruby implementation which in theory should be easy because the standard Ruby implementation is really bad. And people poured a lot of effort into it.

And it seems to work. So some cases are fast. But basically things are also completely HotSpot-specific and also dependent on specific minor versions of HotSpot. So if HotSpot decides to slightly change its inlining threshold somewhere then JRuby is going to be slow because some path is not properly inlined or something -- what they did was really staring at assembler for months and deciding, maybe I can split my method here a bit like this and the JVM will inline it for me. This is not something that's easy to do or fun.

So if you're on such a VM, you get a good implementation of control flow, and late binding and dispatching can be handled if you put effort into it.

How well dependencies are handled depends on how well the optimizations like the code that you have.

Boxing is not necessarily improved because escape analysis doesn't help you, and reified frames: either you don't do them or they're going to be inefficient.

Okay. So let's pause here again. Other comments or questions? Good. So now I get to what I've been up to in the last six years. So the PyPy project was started in 2003 on the Python mailing list out of frustration with the existing Python implementations.

And in the intermediate years it has received a lot of funding both from the European Union, from Google, Nokia and a few other smaller companies.

The goal of the PyPy project, like this from the Web page, is that it aims at producing a flexible and fast Python implementation. But even though the name and sort of the goal statements only target Python, in practice the whole technology stack is also reusable for other dynamic languages.

If you look at the status of languages we have implemented with PyPy, indeed the most complete and good implementation of the language that we have is for Python and indeed the PyPy Python implementation is the fastest Python implementation in existence.

It also contains a reasonably fast Prolog. It contains sort of a full Squeak implementation, but we have not tried the JIT technology with that yet. And it contains lots and lots of smaller experiments.

So at some point somebody tried to implement JavaScript with it. There's a small Scheme interpreter. There's a very rudimentary Haskell interpreter and all sorts of small made-up languages.

So what is the approach that you take if you use PyPy to implement a language? Well the goal is of course to achieve our original goals within one implementation. And you do that by not writing your virtual machine by hand at all. But instead you want to automatically generate it from high level description of the language that you're trying to implement.

And this generation uses some metaprogramming techniques and aspects. And how do you describe a language if you want to implement it? Well, the way PyPy chose is that you write an interpreter for the language in a nice high level language.

And this interpreter is then translated or compiled to become a virtual machine that's running in various target environments. And more specifically you can translate your interpreter to run as a VM in C.

And we also have back ends for .NET and the JVM but I'm not going to talk about them.

So PyPy in particular consists of two large parts. On the one hand there's a Python interpreter written in RPython, and on the other hand there's a compiler that turns RPython programs into C code. So what is RPython?

RPython is a rather large subset of Python, and the subset is chosen in such a way that you can perform type inference on RPython code.

But RPython is quite a nice high level language. It's really close to Python except you need to think about what the types of your variables are in specific places.

And I want to differentiate it in particular against other meta-circular implementations like Squeak and Scheme 48, because Squeak is also a Smalltalk written in Smalltalk, but the restricted subset of Smalltalk they chose is really close to C. And basically it's just a thin syntax layer on top of C.
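A minimal sketch of what "thinking about the types of your variables" means, written in plain Python. The helper `infer_return_types` is a hypothetical toy that observes types at run time; the real translation tool chain infers types statically, which this sketch does not attempt to reproduce.

```python
# Plain Python used to illustrate the restriction. This is not the RPython
# tool chain; infer_return_types is a hypothetical run-time stand-in.

def type_stable(n):
    # 'total' is an int on every path, so one type can be inferred for it.
    total = 0
    for i in range(n):
        total += i
    return total


def type_unstable(flag):
    # 'result' is an int on one path and a str on the other. Full Python
    # accepts this, but no single static type describes the variable.
    if flag:
        result = 42
    else:
        result = "forty-two"
    return result


def infer_return_types(func, sample_args):
    """Toy check: call func on sample inputs and collect the result types."""
    return {type(func(*args)).__name__ for args in sample_args}
```

The first function has one result type across all sampled inputs, while the second has two, which is the kind of code a type-inferring subset has to reject.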

And that's not the case for PyPy.

How do you generate a VM? We wrote this custom translation tool chain that takes an interpreter in RPython and turns it into C.

And the main observation of this tool chain is that a lot of the aspects of the final VM are really completely orthogonal to your language semantics.

So the idea is that those aspects are inserted automatically for you during translation.

And an example of this is that you don't want your interpreter to be confused by the fact that you put your garbage collection into it everywhere. And this is definitely the case if you implement in C. Like if you look at CPython, CPython uses reference counting. So the whole language implementation is littered with all these increment-reference and decrement-reference macros, and they just completely obscure what the language is supposed to do.

So the other aspect that is inserted in a nontrivial way during translation is a tracing JIT compiler that's automatically tuned for the language that you're currently dealing with.

And I'm going to talk about that too. So the good points of the PyPy approach are simplicity, because you can separate the language semantics from all the low level details that don't really have anything to do with the language that you're trying to implement.

On the other hand, you have flexibility, because RPython is really a nice high level language. It supports extensive metaprogramming and you can do very interesting things with metaprogramming on the level of the interpreter.

And the performance, if you don't include a just-in-time compiler, is reasonable. It's not particularly fast. It's on the level of sort of a good C-based interpreter.

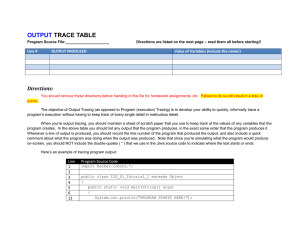

But if you include a just-in-time compiler, then it can be very fast. And well of course you cannot read this. But this is comparing the performance of CPython in blue and PyPy with a JIT in orange. And it's all normalized to CPython for a set of benchmarks; if you want to know details about which benchmarks, I can tell you.

But the main message of this slide is that it's much faster, right? So it can be up to I think it's probably 20 times faster for a few benchmarks, but it's usually at least 40 percent faster than CPython.

So how does PyPy's just-in-time compiler work? It works by a concept that we like to call meta-tracing. And the meta-tracing approach emerged by looking at the problems that a classical tracing JIT like TraceMonkey has. The biggest problem is that we cannot use it, because usually a tracer is really specific to the one language that you wrote the tracer for.

And that wouldn't work for us because we want the tracer to work with a large variety of languages. Another problem is that the bytecode that you're tracing often has the wrong granularity. So it's actually -- because in the trace you see an add but you'd really like to know which path through the add's space of paths has been taken.

So in particular, the internals of one operation are not visible in the trace. So PyPy's idea is that we write our interpreters in RPython and we don't trace the execution of the program that is running on top, but we trace the execution of the interpreter, of the RPython code. And for that we wrote one generic RPython tracer, and this tracing process can be customized by a large set of hints that the interpreter author can insert into her interpreter to tweak this tracing process. And of course the hope is then that if the meta-tracer is well written and doesn't have bugs, then adding this JIT will not give you language-specific bugs. But, of course, until you actually get all the bugs out of the meta-tracer a long time will have passed. But, right.

So there is a very immediate problem with meta-tracing, and people that know about tracing usually know this problem. If you trace something that's itself an interpreter, the main assumption of tracing JITs is violated, because one of your main assumptions was that subsequent iterations of the same loop will have the same behavior. But if you trace an interpreter that's not going to be the case, because two iterations of the interpreter will execute two different bytecodes, usually. So we solved this problem with a very, very simple trick and the details of that trick are not that important. But the idea is that you don't just trace one execution of the bytecode dispatch loop of the interpreter you're tracing, but you continue tracing many iterations of the loop, and you do that until you have covered exactly one iteration of the loop that's running in the user program on top.

And then basically you get the effect that you trace -- the length of your trace corresponds to the loop of the user program but what you trace is really the interpreter executing this program. Basically if you do that, then you solve the problem of control flow in exactly the same way as a normal tracing JIT is also doing.
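The trick can be sketched with a toy interpreter. This is only an illustration under stated assumptions: the accumulator bytecode, the operation names in the trace, and the rule of starting to record at the first backward jump are all made up here, and PyPy's actual meta-tracer works on RPython code rather than on a hand-instrumented loop like this one.

```python
# Toy bytecode for a hypothetical accumulator language: (opcode, argument).
PROGRAM = [
    ("LOAD_CONST", 0),          # pc 0: acc = 0
    ("ADD_CONST", 1),           # pc 1: acc += 1   (user-level loop header)
    ("JUMP_IF_LESS", (5, 1)),   # pc 2: if acc < 5, jump back to pc 1
    ("HALT", None),             # pc 3
]


def interpret(program):
    """Run the program; return (final acc, trace of one user-level loop)."""
    pc, acc = 0, 0
    header = None      # user-level pc where recording started, if recording
    trace = []         # interpreter-level operations recorded so far
    finished = None    # the completed trace, once the user loop has closed
    while True:        # the bytecode dispatch loop of the interpreter
        opcode, arg = program[pc]
        recording = header is not None
        if opcode == "LOAD_CONST":
            acc = arg
            if recording:
                trace.append(("set_acc", arg))
            pc += 1
        elif opcode == "ADD_CONST":
            acc += arg
            if recording:
                trace.append(("int_add", arg))
            pc += 1
        elif opcode == "JUMP_IF_LESS":
            limit, target = arg
            taken = acc < limit
            if recording:
                trace.append(("guard_less" if taken else "guard_not_less", limit))
            if taken:
                if recording and target == header:
                    # Back at the user-level loop header: one user iteration done.
                    trace.append(("jump_to_start", target))
                    finished, header = trace, None
                elif not recording and finished is None and target <= pc:
                    # First backward jump seen: start recording at its target.
                    header, trace = target, []
                pc = target
            else:
                pc += 1
        elif opcode == "HALT":
            return acc, finished
```

The recorded trace spans two iterations of the dispatch loop (one per bytecode) but exactly one iteration of the user program's loop, and it contains interpreter-level operations plus a guard rather than bytecodes.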

And to optimize late binding and dispatching we have a very, very simple idea. The object model is implementing this stuff anyway. And the object model is written in RPython, so you just trace through that. And so in that way the meta-tracer automatically records the fast path through these operations.

And as in a normal tracing JIT, the meta-tracer is very good at picking the common paths. Yes, I mean the common paths.

And this doesn't always work without manual intervention from the start. So there are a number of hints that you can use to fine-tune the process. For example, the interpreter can also say never trace into this function or always trace into this function. Or, if you encounter this function and it has constant arguments, you can constant-fold it.

There are a number of hints like that. If you use them, you can really get traces that are short and quite fast.
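A rough sketch of how such hints could steer a tracer. Everything here is hypothetical: the decorator names `never_trace_into`, `always_trace_into` and `foldable_when_constant`, the `Const` wrapper, and `record_call` are stand-ins invented for illustration and are not RPython's actual hint API.

```python
def never_trace_into(func):
    func._jit_policy = "opaque"      # hypothetical marker attribute
    return func


def always_trace_into(func):
    func._jit_policy = "inline"
    return func


def foldable_when_constant(func):
    func._jit_policy = "foldable"
    return func


class Const:
    """Marks a value the toy tracer knows to be a trace-time constant."""

    def __init__(self, value):
        self.value = value


def record_call(func, args):
    """Return the entry a toy tracer would record for calling func(args)."""
    policy = getattr(func, "_jit_policy", "inline")
    if policy == "foldable" and all(isinstance(a, Const) for a in args):
        # All arguments constant: run the call now, keep only its result.
        return ("const", func(*[a.value for a in args]))
    if policy in ("opaque", "foldable"):
        # Leave a call in the trace instead of tracing the function body.
        return ("residual_call", func.__name__)
    return ("inline", func.__name__)


@foldable_when_constant
def slot_index(class_id, name):
    return class_id + len(name)      # stand-in for a pure lookup


@never_trace_into
def write_log(message):
    return None


@always_trace_into
def add_ints(a, b):
    return a + b
```

With constant arguments the lookup disappears from the trace and only its result remains, which is how a hint like this keeps traces short.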

To optimize boxing overhead, there is a very nice and powerful general optimization that operates on traces. And what this optimization tries to do is defer the allocations that you see within the trace for as long as possible. If you see an allocation within the trace and there's no immediate reason why this object needs to be allocated now, the allocation will be pushed down the trace as far as possible, and quite often in that way it will simply disappear.

So allocations on the trace happen only in those rare paths where they are really needed.
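A minimal sketch of this deferral on a made-up trace format. The operation names (`new`, `setfield`, `getfield`, `int_add`, `escape`) and the tuple layout are assumptions for illustration, not PyPy's trace representation.

```python
def remove_allocations(trace):
    """Defer allocations until an object escapes; drop them if it never does."""
    virtuals = {}   # not-yet-allocated object -> {field: value}
    alias = {}      # variable -> value it is known to equal
    out = []

    def resolve(v):
        while v in alias:
            v = alias[v]
        return v

    def force(var):
        # The object is really needed now: emit its allocation and fields.
        fields = virtuals.pop(var)
        out.append(("new", var))
        for field, value in fields.items():
            if value in virtuals:
                force(value)
            out.append(("setfield", var, field, value))

    for op in trace:
        kind = op[0]
        if kind == "new":
            virtuals[op[1]] = {}                      # remember, emit nothing
        elif kind == "setfield":
            _, obj, field, value = op
            obj, value = resolve(obj), resolve(value)
            if obj in virtuals:
                virtuals[obj][field] = value
            else:
                if value in virtuals:
                    force(value)                      # stored into a real object
                out.append(("setfield", obj, field, value))
        elif kind == "getfield":
            _, result, obj, field = op
            obj = resolve(obj)
            if obj in virtuals:
                alias[result] = virtuals[obj][field]  # read the known value
            else:
                out.append(("getfield", result, obj, field))
        elif kind == "int_add":
            _, result, a, b = op
            out.append(("int_add", result, resolve(a), resolve(b)))
        elif kind == "escape":
            var = resolve(op[1])
            if var in virtuals:
                force(var)
            out.append(("escape", var))
    return out


# Boxed arithmetic: box i0, unbox it, add 1, box the result, let it escape.
BOXED_ADD = [
    ("new", "box1"),
    ("setfield", "box1", "intval", "i0"),
    ("getfield", "i1", "box1", "intval"),
    ("int_add", "i2", "i1", 1),
    ("new", "box2"),
    ("setfield", "box2", "intval", "i2"),
    ("escape", "box2"),
]
```

On `BOXED_ADD` the intermediate box never escapes, so its allocation and both field operations vanish; only the box that escapes is allocated, and only right before the escape.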

And the use cases for this: originally we wrote the technique mostly for arithmetic, so you can get rid of the intermediate boxes if you perform many arithmetic operations. But then it turns out that it's really quite useful for lots of other things as well.

For example, in Python every time you do a function call, the argument passing is rather complex. And there's an intermediate object that just holds all the arguments and does the argument parsing. This object doesn't live long, because when you call, you make it and then you consume it immediately and then it's gone.

And then you will see in the trace the creation of that argument holder, you will do a few things on it then it's just gone. And the optimization can remove that.

Or even more powerfully if you trace through, if you inline a function call in the trace, you will get a frame allocation, because the inner function needs a frame to run.

And then that frame also just has a limited lifetime, so the optimization can remove it too.

In general, I mean this is really just an engineering slide. There's nothing too deep about it. But in general we have quite a nice way to deal with reified frames. Because if you're writing interpreters, the interpreters need some sort of object to represent the frames anyway. So you can mark these frames with a hint to the JIT to say that these are the frames that I'm dealing with. And the JIT will then special-case them, and the attributes of these objects are stored as much as possible in CPU registers or on the CPU stack.

And on reflective access you simply leave machine code and go back to interpretation.

And while this is really nothing too advanced the only cool thing is that it's really implemented in a language independent way and you can use it for all the languages that you implement with PyPy.

Another sort of meta point is that our tracer tries to be as transparent as possible. So when you start writing an interpreter, you add some hints and then you execute your code and it's not fast. Then you need to understand why.

So we try to have a lot of tools to understand how you should improve the placement of the hints to really get better code. And for that, the most important thing is that you can -- that you actually look at the traces. You don't need to look at machine code, which is what you have to do if you try to do this tweaking on the JVM.

The traces correspond rather closely to the RPython interpreter code. So the traces are just bits of your interpreter. And to actually look at the traces, we have nice visualization and profiling tools that tell you this part of the trace has been executed that often and it connects to this other trace like this. A lot of people who don't really work on the JIT have learned to read these traces just to improve their interpreters.

Okay. So I'm nearing the end of the talk, and I'm going to summarize a bit. So first let me enumerate some drawbacks of our approach that I see. And the biggest drawback is, of course, now that we have all this machinery it's very nice. But actually to get there was a huge amount of effort and it took many years.

So if you start from scratch, it's not clear that you really want to do this sort of meta translation tool chain approach. Now that we have it it's nice.

The same thing is true about garbage collectors. Yes, it's nice that the garbage collector is completely separated from the language implementation, but writing this garbage collector inserted into the virtual machine was still quite a lot of hard work.

And again the same, the pattern repeats. The same is true about the dynamic compiler generation. We started trying to automatically get compilers from interpreters in, I don't know, 2006. And I mean this is version 5. And each version was a complete change of approach. And basically until we tried tracing all the former approaches turned out to not work.

And even the current approach is not perfect. I mean, I talked about the wrong granularity of traces in normal tracing JITs and our traces have a bit the opposite problem, which means they're too detailed because they really spell out all the steps that the interpreter did to implement the behavior.

Right. But on the positive side, I think that PyPy solves many of the problems that you have if you try to implement dynamic languages. The very nice thing from the point of view of the language implementer is that you can use a nice high level language to implement your language, which is much easier than working in C. And also it has the benefit that you can analyze RPython code rather well, which helps, for example, the JIT and other tools. It's not that easy to actually analyze C code, for example.

PyPy gives you rather good feedback if you try to implement a language, to learn and understand how you can place your hints better or otherwise improve things.

And it gives you various mechanisms to express language semantics that are rather different. So the set of tools that we have in the hints, I think, is quite nice.

Okay. So thanks very much. And I'm open for questions. [applause].

>>: Curious on the performance numbers, on the tracing JIT, did you guys find what -- I mean, these are great results. Is it boxing? Is it --

>> Carl Friedrich Bolz: You're talking about this and this?

>>: I mean 40 percent is still pretty good, right? I'm curious what was it about CPython was it interpretation overhead, function calling? Was it boxing?

>> Carl Friedrich Bolz: I think it's a bit of all of them. I mean, basically if you get 40 percent, then you really just get rid of the control flow overhead.

Because CPython really has some overhead in bytecode dispatch especially. In those cases up here, you really get rid of all the method call overhead, all the finding of the right method, boxing. And I don't think it's enough to implement just one of them very well.

>>: You don't have numbers on the individual?

>> Carl Friedrich Bolz: No, it's probably hard to attribute the speed-ups to the various -- I mean maybe it would be instructive to just turn off some things and look what you still get. But I don't really think you can clearly separate these speed-ups.

>>: Does it include overheads and tracing?

>>: They're always with -- there's no warm-up going on.

>>: Do you know how much overhead is?

>> Carl Friedrich Bolz: I can show you -- basically I can show you a small demo. The problem with demos is that they're not very instructive because you get two times the same behavior and one of them is fast. So if you use standard CPython, there's a benchmark included called pystone and you can say run this. So it takes 0.7 seconds and then just turns it into -- if you do the same thing in PyPy, you get a much smaller number. And so that means that the compile time is bounded by this, right?

Because it still also had to do the actual computation. So the overhead is in the -- I mean, it's rather small. So --

>>: What about memory consumption? Is that significant? Generate more code?

>> Carl Friedrich Bolz: It's okay. We're good at throwing away code that doesn't seem to be used anymore. But there is still a sizable overhead. So, for example, the largest program we tried, which is our own translation tool chain, we can speed that up with the JIT by a factor of 2, but it will produce something like 300 megabytes or 400 megabytes of assembler doing so.

And it's a large program. I mean, I think it's 200,000 lines of Python code.

>>: So collecting these numbers so you're only tracing loops?

>> Carl Friedrich Bolz: No. The details of this are a bit complex. We are only tracing loops. And we are tracing, if you call a function that has a loop very often, we also trace from the beginning of the function to the loop.

>>: Okay.

>> Carl Friedrich Bolz: And, right. So those are basically those things.

>>: How about nested loops [inaudible].

>> Carl Friedrich Bolz: We don't deal with recursion. So basically if you have recursion, you will just get a trace for the outer function and in the middle you will have a call that just calls the same assembler again. But it's not like -- I mean, the TraceMonkey people tried to do that, to just turn recursion also into a loop, but we never got that.

>>: Nested loops?

>> Carl Friedrich Bolz: You do the usual thing. You first trace the inner loop and attach the outer loop as a long side exit.

>>: Okay. And can you quantify, so for the execution time, how much of the time is spent [inaudible] and how much is the optimized code?

>> Carl Friedrich Bolz: I don't know. I don't know. I cannot -- I don't know numbers. It cannot be that much. Because if you run this with -- if I can somehow turn the threshold very high like this, maybe, no? Maybe.

I mean, if you run this purely in interpreted mode it's slow. So the interpreter cannot run for a large percentage of the time. I don't know actual numbers.

>>: Yes?

>>: Can you speak a bit about the experience in writing a new interpreter in this high level -- so let's say I want to come out and write a JavaScript interpreter or Python or whatever it's called, how do I encode things like type semantics and things like that. How straightforward is that experience?

>> Carl Friedrich Bolz: Basically it's a lot like implementing the same thing in Java or C#.

Basically you write classes to represent your user level objects. You write your operations on them as methods on the lower level or functions or whatever.

The problem is RPython is not -- I mean, there are advantages and disadvantages. The advantage is that the metaprogramming that you have in RPython is much better than in Java. So, for example, we have a rather high level declaration-like syntax to define all the operations that, for example, all the additions that you can do. And this is turned with metaprogramming into much more code.

So you can do this sort of generative approach and that would be much harder to do in Java. The disadvantage is that, well, there's type inference going on. And the error messages you get sometimes from the type inference are completely [inaudible] if you're unlucky. So this is one of the things that I don't like. But it's also really hard to improve on that.

But, yes, I mean basically you can write nice things in a very short amount of time. So the Haskell interpreter -- I mean, it doesn't have a parser. You have to write down the ASTs, basically. But if you do that, the core semantics of the language was mostly implemented in 200 lines. And the performance is not horrible.

Of course, Haskell is completely static and it's not clear whether our approach is helping that much there. Other questions?

>>: More questions?

>> Carl Friedrich Bolz: Yes?

>>: So what is left to do or what are your next steps with this? Is it that now you go evangelize the world and get other people to use PyPy and Ruby?

>> Carl Friedrich Bolz: Excellent question. I mean, on the one hand there's a lot of -- time-wise there's lots of work that people do on the Python implementation. So simply being bug-to-bug compatible with CPython is lots of work, because basically all the strange things that you can do in CPython have to be supported because somebody depends on them.

So that's sort of the one side of things. The other side of things is basically if you look at this slide, I mean, if you think about the fact that this end, the blue end, represents something like 60 times slower than C, or even 100 times. And we speed up by, say, 10 to 20 times in the best case. So the question is still where does the other order of magnitude come from.

And that is not clear to us at the moment. So we are considering doing a hybrid approach like JägerMonkey is doing, to think of ways to first generate a method-based JIT that just is a very fast compiler that doesn't optimize much, and later doing tracing for the other cases where it really matters.

Our tracer is not too slow but it's also not really fast. So we are thinking about ways to optimize that. But basically the completely immediate next steps are not entirely clear to us. So we don't have a clear plan what to do.

We're quite happy with some of the numbers but we don't have a completely clear plan what to do with the next order of magnitude.

More questions?

>>: Perhaps I just missed it but I haven't heard you talk about the specific types of optimizations you do on these RPython traces.

>> Carl Friedrich Bolz: Right. So it turns out that one of the most important optimizations that we really do is this object allocation removal. So if you see an allocation, you really put a lot of work into removing that.

And then we do a large batch of other things. I mean, some of them SPUR is also doing, for example what you call guard strengthening, I think; we do that as well. We remove redundant reads and writes.

So if you read from the same field twice and you know that no code in between could have written to that, you just remove the second one. The same for writes. Well, constant folding, loop-invariant code motion. Our loop-invariant code motion is not that effective but it's quite okay.
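The read and write case can be sketched as a single forward pass over a trace. The trace format, the operation names, and the conservative invalidation rules (any write to a field name forgets all cached values for that field, any call forgets everything) are assumptions for illustration, not PyPy's optimizer.

```python
def remove_redundant_heap_ops(trace):
    """Drop reads and writes whose effect is already known from the trace."""
    known = {}   # (object, field) -> value known to be stored there
    alias = {}   # removed read result -> value it is known to equal
    out = []

    def resolve(v):
        return alias.get(v, v)

    for op in trace:
        kind = op[0]
        if kind == "getfield":
            _, result, obj, field = op
            key = (resolve(obj), field)
            if key in known:
                alias[result] = known[key]        # second read: reuse value
            else:
                known[key] = result
                out.append(("getfield", result, key[0], field))
        elif kind == "setfield":
            _, obj, field, value = op
            key = (resolve(obj), field)
            value = resolve(value)
            if key in known and known[key] == value:
                continue                          # field already holds value
            # Another object might alias this one: forget this field name.
            for other in [k for k in known if k[1] == field]:
                del known[other]
            known[key] = value
            out.append(("setfield", key[0], field, value))
        elif kind == "call":
            known.clear()                         # unknown code may write
            out.append(op)
        else:
            out.append((kind,) + tuple(resolve(x) for x in op[1:]))
    return out


EXAMPLE = [
    ("getfield", "a", "p", "x"),
    ("getfield", "b", "p", "x"),      # redundant read
    ("int_add", "c", "a", "b"),
    ("setfield", "p", "x", "c"),
    ("setfield", "p", "x", "c"),      # redundant write
    ("call", "unknown_function"),
    ("getfield", "d", "p", "x"),      # must be read again after the call
]
```

The second read and the second write are removed, while the read after the call stays because intervening code could have written to the field.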

Yes?

>>: In these optimizations, just kind of a follow-up question, do you make use of symbolic peaks [inaudible] techniques?

>> Carl Friedrich Bolz: Excuse me?

>>: External tools?

>> Carl Friedrich Bolz: No, the optimizations are just written by us and some of them are a bit ad hoc. I think you can probably win a few 10 percent here and a few 10 percent there by being a bit more systematic in the optimization. Also our assembler back ends are not particularly great. So another few percent you can probably win by doing better register allocation.

>> Nikolai Tillmann: Let's thank our speaker again.

[applause]