
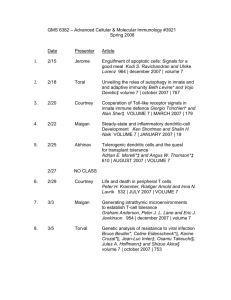


21864 >> Victor Bahl: Thank you very much for those of you who are here and thank you very much for those of you who are watching us remotely. It's a pleasure to have Abhinav Pathak. Abhinav has been my intern. This is his second year, second internship with Microsoft Research. His interests are quite diverse. He has worked on databases quite a bit, and on network data centers, measurement, modeling and other good stuff. But when I asked him, his true passion is in Smartphones and mobility, use of mobile phones, et cetera, and he's been working on some really interesting ideas related to energy management in phones, and energy management as you know is a really important area. This work just got recently accepted in [inaudible] with lots of great reviews. So I thought it would be good for him to share some of these ideas with the rest of us. So with that, Abhinav. >> Abhinav Pathak: Thanks, Victor, thanks for the introduction. Thank you everyone. I'm Abhinav Pathak. I'm here to talk about our work, fine-grained power modeling for Smartphones using system call tracing. This work has been done along with my advisor Charlie Hu from Purdue, and Ming, Victor and Yi-Min at Microsoft Research. This is the year 2011, and I don't need a motivation slide to say that Smartphones are bought, a lot of people use them. It's an accepted fact. But what I'll talk about is one of the most crucial aspects of Smartphones that people face today, which is energy. Smartphones have limited battery time, limited battery life. If you are a moderate to heavy user of a Smartphone you have to carry around a charger with you all the time. You need a car charger, a charger in your office and a charger in your home. You need to make sure it doesn't run out. We'll ask a very innocent question. Why do we want to save energy in phones? Obviously, the first thing is that a moderate to heavy user can charge once a day, go home and charge, and be happy. 
But even for light users, or people who don't depend heavily on Smartphones, you can provide a lot more functionality on the phone. You can enable continuous sensing. You can provide them a lot of applications, services, and even the dreaded background applications, antivirus, search indexing and so on. Now the first question that we need to ask if we want to save energy is: Where is the energy being spent? Which component of the phone is consuming a lot of energy? Which application? Which process, which thread? Can you go ahead and ask which function in your code is consuming a lot of energy? The reason we need fine-grained measurement is that we are targeting developers. We can give them information that this particular set of functions consumes the most energy. For this function you can probably decrease the energy. For this function you cannot decrease the energy; it is already optimal. What do we do with the measurements? One thing is you can provide app developers with a heat map. And while you're doing measurements you realize how components interact. How is energy dissipated in the phone? You can develop low level strategies like scheduling: how do you schedule devices so that you can save energy? So with this in mind, let's show the simplest way to measure power. The simplest way to measure power is with an expensive piece of equipment, the Monsoon power monitor, and you perform a 20-step surgery on your phone. Bypass the battery, connect the terminals. Connect the monitor to an external power supply. And then you run your application. It gives you a nice graph: this is the power consumed, this is the energy consumed here. Now, in this approach, apart from it being very expensive, is your mobile still mobile anymore? You're hooked to your desktop. You're sitting right there. You cannot move. Can you do the measurements in your car? It's very difficult. 
I know people who have done that. I know what the problem is there. And the second question is, does this answer which component, which process, which thread is consuming energy? This will just give you an overall estimate: this device is consuming this much energy, your phone is consuming this much energy. The silver lining in this methodology is it is absolutely fine-grained. It gives you a measurement every 200 microseconds. It gives you, at this particular instant, this much [inaudible]. We'll keep this in mind and we'll proceed to how people model energy consumption of Smartphones. Feel free to stop me at any time for questions. Power modeling on Smartphones. We'll talk about the state of the art, which is a very intuitive way to model Smartphone energy consumption. So there are several components on the phone. You have the screen, you have the SD card, you have the CPU, wireless, GPS, so on and so forth. So in this approach what do people do? There are two phases: one is the training phase, one is the prediction phase. Your ultimate goal is to predict what the energy consumption is. So what you do in the training phase is calculate, on average, the energy spent per byte of transfer, per byte of send or receive. On average, if I use the CPU at 100 percent, how much energy does the CPU consume? Similarly, if I write one byte on the disk, or some number, how much energy is consumed? And then during the prediction phase, every once in a while I'll read /proc-like counters, which give me aggregate statistics. In the past one second this much CPU was used, this many bytes of data, this many bytes were written to the disk, and so on. And then I'll multiply these by the trained numbers, usually linear estimation, and this is my predicted energy. >>: [inaudible]. >> Abhinav Pathak: Yes. It fails miserably. 
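The two-phase linear scheme described above can be illustrated with a minimal Python sketch. The counter names and the coefficient values are hypothetical placeholders, not numbers from the talk; only the structure (trained per-unit cost multiplied by an aggregate utilization counter, then summed) follows the description.

```python
# Hypothetical coefficients from a "training phase" (placeholder values,
# not measurements from the talk): joules per unit of each counter.
JOULES_PER_UNIT = {
    "cpu_percent": 0.004,   # per 1% CPU utilization in the interval
    "net_bytes": 2.0e-6,    # per byte sent or received
    "disk_bytes": 1.0e-6,   # per byte read or written
}


def predict_energy_j(counters):
    """Prediction phase: multiply each utilization counter by its coefficient and add."""
    return sum(JOULES_PER_UNIT[name] * value for name, value in counters.items())


busy = {"cpu_percent": 50, "net_bytes": 100_000, "disk_bytes": 0}
idle = {"cpu_percent": 0, "net_bytes": 0, "disk_bytes": 0}
print(predict_energy_j(busy))
print(predict_energy_j(idle))  # zero utilization always predicts zero energy
```

Note that the idle sample necessarily predicts zero energy, which is the property the rest of the talk argues against.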
>>: How did you consider the energy for send and receive, because it varies completely with functions such as [inaudible] and characteristics of the network, and varies hugely with the size of the data that you're sending and receiving. >> Abhinav Pathak: Right, absolutely. And that is what I'll show in the next slide. Even before I show you the numbers I'll convince you it's not going to work. We even tried it with numbers. I'll have it towards the end. >>: I'm curious to see -- >> Abhinav Pathak: Sure. Let's see why this strategy doesn't work. I'll read the equation: predicted energy consumption is an estimation based on how much the utilization is, and so on. Now the first fundamental yet intuitive assumption this model makes is that only active utilization implies energy consumption. That is, only if I'm sending and receiving on the network will I consume energy. Nothing else. Only if I'm reading from or writing to the disk, to the SD card, will I consume energy. Nothing else. What we found out is that on today's Smartphones, this assumption is wrong. Smartphones continue to draw a lot of energy, consume a lot of energy, even though you're not actively using the device. >>: [inaudible]. >> Abhinav Pathak: Sorry? >>: [inaudible]. >> Abhinav Pathak: So I have examples, right, on the next slide, of what I mean. A simple example could be you open a file. You consume a lot of energy. You close a file. You consume a lot of energy. You close a socket, you change the power state. >>: But the first example [inaudible] seeking and consume a lot of -- >>: It makes syncing on the device -- >>: I think your graph -- >>: Even when you close a file you still use a component. >> Abhinav Pathak: Right. So I'll show you some examples for each of the assumptions which are wrong here in the next slides. The second assumption: devices don't interact in terms of energy. And that is why I can linearly add them. 
Basically I'm saying no matter whether I'm using disk or not, network will consume the same amount of energy if I send N packets. This is also wrong. What we observed is when you run multiple devices together, they need not always add. Third assumption: energy scales linearly with the amount of work. If I send ten packets and it consumes X amount of energy, then if I send 20 packets it will consume 2X amount of energy. It's wrong. It may not always hold true. In fact, sending 20 packets could consume 4X amount of energy, and I can write an application that consumes more than 20 packets. Everybody's confused here. I'll show you examples for all three of these assumptions that fail really badly. But what I'll talk about before going to those examples is that, using such models, it is hard to go down to the process level, thread level, function level, because these counters are not readily available. How much network send is there for this particular function? Counters like these are not readily available. And there are new exotic devices that do not have quantitative utilization, like GPS, camera. I don't have "I sent ten packets per second"; I just turn on GPS, turn off GPS. Camera, I turn it on, I take a picture. >>: The point you're trying to make is the linear model is not the appropriate way to agree with you? >> Abhinav Pathak: Right. Additionally there are these problems. >>: That's not a problem with the model -- >> Abhinav Pathak: Right, this is not a stated problem, but this is -- >>: This is a side -- >> Abhinav Pathak: Sure. Let's jump to examples. Only active utilization implies energy consumption. I took a Touch Smartphone running Windows Mobile 6.5, and I did a simple experiment. I open a file, and I plot the energy consumption. On the X axis I have the timeline. On the Y axis I have the current consumed. Throughout this set of slides I'll talk about power in terms of the current consumption. Actual power will be 3.7 volts times whatever the number is here. 
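Since the slides report current rather than power, a small Python sketch may help with the conversion. It assumes the 3.7 volt figure quoted above and a fixed sampling period; the sample values and the function names are illustrative only.

```python
BATTERY_VOLTAGE_V = 3.7  # supply voltage quoted in the talk


def power_mw(current_ma):
    """Instantaneous power in milliwatts for a current reading in milliamperes."""
    return BATTERY_VOLTAGE_V * current_ma


def energy_mj(samples_ma, sample_period_s):
    """Rectangle-rule integration of current samples into energy in millijoules."""
    return sum(power_mw(i) for i in samples_ma) * sample_period_s


# Illustrative trace: three readings taken half a second apart.
trace_ma = [100.0, 100.0, 200.0]
print(energy_mj(trace_ma, 0.5))
```

With a power monitor that reports a sample every 200 microseconds, the same function would be called with `sample_period_s=200e-6`.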
So what we see here is that the moment you do a file open, there's a spike. You don't do anything else. You just sleep after this, and the component continues to draw a lot of power. There is no active utilization in this zone. And also, file open is not considered active utilization. It's not in reality a read or write. Similarly, file delete, file close, file create, all of these show similar characteristics. Next example: several components have a tail state. We just saw a tail state when we do a file open. We open a socket, we send some data on the socket. We close the socket right here, and then we see that for roughly two seconds it still stays in that high power state. It continues to draw a lot of power. This is seen on disk, Wi-Fi and GPS, on Android and Windows Mobile, all of these. But a more interesting thing we observed, when we change the handset to a Touch on Windows Mobile 6.5, is that system calls have the ability to change the power states. I start sending data on the socket. I stop sending data, and until I do a socket close, my power is not going to drop. >>: So is this a socket on Wi-Fi or -- >> Abhinav Pathak: This is Wi-Fi. This is Wi-Fi. >>: Wi-Fi is much more [inaudible]. >> Abhinav Pathak: Right. It's actually much worse where the tail lasts really long. You had a question? >>: I didn't but I have now. So I can see the last one happening because you're trying to maintain the connection, because you're not closing the socket. >> Abhinav Pathak: Right. >>: If you have to maintain the connection you have to stay on. >> Abhinav Pathak: Right. >>: Or you lose the connection. >> Abhinav Pathak: Sure. >>: But I'm puzzled by the first one. Why would the first one happen? Is it because the [inaudible] remains in a high energy state? Do you have any intuition why that is? >> Abhinav Pathak: So the driver continues, this is what we think it is. The drivers are closed source, so we don't know the answer for sure. 
The driver continues, the device continues to draw the power in the hope that you'll have more operations. >>: What do you mean by power tail, right? Is it that it remains on and doesn't go to sleep mode? >> Abhinav Pathak: The processor, all we see is that the processor is not utilized. >>: Not utilized, will go down in a few seconds. It's more likely an inefficient driver [inaudible]. >>: The 5-four doesn't have Wi-Fi. >>: Talking about that one. But that one is the SD card driver, who knows what happens. Maybe it's a compaction of the flash when you do something like that. It's very -- >> Abhinav Pathak: It could be. Right. >>: Makes sense. >> Abhinav Pathak: We don't have tools to measure, to know exactly what's going on here. Let's look at the second assumption. Devices don't interact in terms of energy. I did three simple experiments. In the first experiment I send data, sleep for some time and then do a socket close, to show there exists a tail. In the second one I run a for loop two million times, sorry, ten million times, doing some floating point computation. In the third experiment I do the same two things, but I send two megabytes of data and spin the CPU for two million iterations, and repeat this five times. Now, in the first scenario, on the X axis we have the time. On the Y axis again we have the current. What we see is that the moment we start the send, we consume an additional 180 milliamperes of current. After this send is done, we consume an additional 110 milliamperes until we call the socket close. Second experiment: the moment we start spinning the CPU we consume an additional 200 milliamperes until we complete the operation. And in the third experiment, when we do things side by side, what we observe is a power profile like this. Let's look in detail at what happens. 
When we start the send in the first iteration, we send two megabytes of data and jump to 180 milliamperes; we consume an additional 180 milliamperes, which is perfect according to this here. But what we expect after this is for the network device to consume an additional 110 milliamperes, because it will enter the tail once we stop sending at that point. And since I run the CPU on top of it, I expect we'll consume 200 plus 110 milliamperes. But what happens is I just consumed 110 milliamperes in the first loop. What happened to the tail? Where did the tail go? I go to the last iteration -- >>: Your CPU spin cycle seems to be driving -- >> Abhinav Pathak: Sorry? >>: The CPU ten million times of the CPU cycle seems to be driving over time. >> Abhinav Pathak: Right. So at this particular time, this is one-fifth of what we're doing here. What we assume here at this time is that there should be a network tail, because we just used the network device. We have sent. >>: But the power is used by the CPU, because you have to concur it. >> Abhinav Pathak: Exactly. >>: But the time below is longer. So your total time below is like overstretched to like a 16. >> Abhinav Pathak: Right. >>: The other one is only ten. >> Abhinav Pathak: Upper one is only ten. And we have a six here. >>: I guess the point is you kind of -- that's the basis of this point. You're right. He's right. But the thing, the big point, the meta point is you can't just look at numbers and add them up and say -- >> Abhinav Pathak: What we see is during the last send, after the last send is completed we have the last CPU spin. After the last CPU spin is over there's a tail, 110 milliamperes. After we do a socket close, it's vanished. A clear case of devices interacting with each other in terms of power. The last -- >>: The device, I guess you're talking about components inside -- >> Abhinav Pathak: Components. Components. >>: Devices. >> Abhinav Pathak: The third example: energy scales linearly with the amount of work that occurred. 
On the phone, Windows Mobile 6.5, I send packets at a rate of less than 50 packets per second; choose any number, choose any bytes per packet. In the second experiment I send packets at a rate of more than 50 packets per second; again choose any number. You can choose 55, 45, and this is the power consumption drawn. What we observe is that in the first case we consume 100 to 125 milliampere spikes whenever we do a send. And in the second case, 300 milliamperes. So if you're sending 45 packets per second versus 55 packets per second you're consuming three times the amount. >>: The integration of the energy, is that three times or about the same? Or smaller? >> Abhinav Pathak: That depends on a lot of factors, because the moment you're sending above 50 packets a second you're incurring tails. So you have to take that into account. >>: My point is that we have an experiment showing that if you send it faster, actually the overall energy, multiple energy consumed is usually less than -- >>: There's something missing here. In general it's much better from an energy perspective to send big chunks than sending small chunks, more big chunks once than sending small chunks -- so I don't know what the bug might have been here. But some bad things with the data in this case. >> Abhinav Pathak: So what we -- >>: What you're trying to get across is that there's no linear correlation. >> Abhinav Pathak: There's no linear correlation. So in terms of energy, when we do an integration, even then this ratio nearly holds true, because it's already a three times ratio, one is 2.5 and one is three. When you add a tail along with it you're really talking about the ratio. All the Smartphones we tested, one Android and two Windows Mobile, exhibited this. It looks like there is a particular number of packets configured somewhere that dictates when you jump to a power state. It dictates how much power you are going to consume. 
And I'll call these intelligent optimizations written in the device driver, which dictate how much power is consumed. Any questions here? What we have learned so far: the state-of-the-art power modeling assumptions are wrong. I'll show you numbers that they don't work. There exists a notion of power states here in the device drivers. What we have hinted at so far is that device drivers have intelligent power controls. System calls act as nice triggers in power consumption, and there definitely looks to be scope for energy optimizations. We can probably do something intelligent on top of whatever we have seen so far. What are the challenges in measuring fine-grained energy? It is that the device drivers are closed source. They're just binaries. We don't have the code and we assume that we cannot get any information out of them. We cannot get when the packet was sent out, we cannot get when the bytes were written to the disk, and so on. So for our modeling, the aim is very simple. We want to reverse engineer the power logic that is present in the device drivers. We'll do black box reverse engineering here, because that's closed source. We use something called a finite state machine instead of linear regression, instead of adding up everything linearly. A finite state machine is basically simple. It consists of states and transitions. A state represents an abstract power state. It shows how much power is consumed by the device if you are in that state. >>: How do you define power state? >> Abhinav Pathak: It will come. Transitions could be basically anything. It could be a timeout. It could be a system call. It could be any other condition, for example, any more than 60 packets per second. Nodes in our finite state machine represent the power states. We have two kinds here: one is productive, one is tail. Productive states are where you actually do work, and tails are the ones where you aren't doing any work and energy is still being consumed. 
Edges are the transition rules. And we make an assumption here that the device drivers implement very simple power logic, based on simple history numbers: how much was the utilization last time, do I have to open a connection, do I have to do this, do I have to do that? Based on this assumption, we'll try to reverse engineer the power model. The methodology is very simple. We model energy consumption using system calls. System calls give you a very nice interface where you can sit down and watch what all the applications are doing. System calls are the only way an application can access the hardware. And once you see the system calls, you can figure out how much the device is being used, which process is using it, which thread is using it. You can also say what the nonutilization calls are. And whatever the kernel does below, we have no idea. You just treat it as a black box. You use a very simple, I call it systematic brute force, approach. It's reverse engineering where in the first step we model each individual system call. Once we have the finite state machine models for every system call, I'll go ahead and club together the finite state machines of different system calls that access the same component, for example, the wireless card. And step three is I'll try to club together the finite state machines of different components, club CPU, disk, network, and so on, to get a giant finite state machine for the entire device. Let's look at step one. Modeling a single system call. Let's say it's a read: give a file descriptor, give a file size. This is how the power profile looks; the X axis is the time, the Y axis is the current. We do a disk read. It jumps to a high power state. It continues to consume a lot of energy until it falls down. We'll transform this into a figure like this, where we say you are at the base state, state number one. You do a system call here and you make a transition to another state called D 1. 
At state D 1 you consume some amount of power. After the work is completed at state D 1, you come down to state D 2 and you consume some power for a duration of time. >>: How do you label the states? >> Abhinav Pathak: Excuse me. >>: How do you label D 1 and D 2? >> Abhinav Pathak: How do we label? Because they consume different power. >>: But in the real trace it might give over 100 different spikes, right? >> Abhinav Pathak: Sorry. >>: In a reasonable [inaudible] might get over 100 different bar sites. Doesn't make any sense to label -- >> Abhinav Pathak: We're doing one system call at a time. We know exactly in the profile when the system call started and when the system call ended, and we are trying to analyze everything in between. Definitely there are a lot of spikes caused by other components that we are not knowingly running, for example, the phone keeps on -- >>: What I'm trying to say is if you use a system call as a black box there's no need to define states within this black box. Just integrate the whole thing as an energy and it should be done. >> Abhinav Pathak: It's not that simple, because we'll induce error there. This is just a simple system call, and we are seeing different states here. So what we do is we come back here and we make a power model, a finite state machine. We represent that when you are in the base state, you consume zero milliamperes; that's the reference. You do a file read. You go into the high disk state and consume an additional 109 milliamperes. You stay there until your file read is completed. And then you go to the disk tail, and after a period of inactivity you go back to the base state. This is a single system call model. Any questions? Another example: how do we model network? This is what [inaudible] was saying, that we have multiple spikes, and they correspond to one single system call. 
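The single-system-call model for a file read (base state, high disk state, disk tail, back to base) can be written down as a tiny state machine. This is a sketch only: the 109 figure is the one quoted in the talk, read here as milliamperes, while the tail current and the tail timeout are hypothetical placeholders because the talk does not state them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    name: str
    extra_current_ma: float  # current drawn above the base state


BASE = State("base", 0.0)
HIGH_DISK = State("high_disk", 109.0)  # figure quoted in the talk
DISK_TAIL = State("disk_tail", 50.0)   # placeholder, not from the talk
TAIL_TIMEOUT_S = 3.0                   # placeholder, not from the talk


def read_timeline(read_duration_s):
    """States visited by one file read, as (state, seconds spent) pairs."""
    return [(HIGH_DISK, read_duration_s), (DISK_TAIL, TAIL_TIMEOUT_S), (BASE, 0.0)]


def extra_charge_mas(timeline):
    """Charge above the base level, in milliampere-seconds."""
    return sum(state.extra_current_ma * seconds for state, seconds in timeline)


print(extra_charge_mas(read_timeline(2.0)))
```

The point of keeping the tail as its own state is that its cost is charged even though no read or write is happening during it.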
What we see for the send system call is simple: you're in the base state, you send less than 100 packets, you go to the low network state, a spike of 125 milliamperes, and you go back when that is done. We found out that when you increase the amount of data you're sending, the number of packets you're sending, you go to the low network state. When you cross the 50 packets per second threshold or [inaudible], you jump to a high network state of 280 milliamperes, and then you see spikes there. >>: So don't these numbers, that 285, depend on how far I am from the sector? >> Abhinav Pathak: It does. For simplicity let's say we have a good signal strength here. In the paper we have details on how these numbers change depending on what your signal strength is. There is a variation. So this 125 can become 350 depending on what your signal strength is. Similarly, in CPU modeling, the frequency at which you are running will define the actual power. I'm skipping all those details here. But we do model those. So this is one system call: one finite state machine for the entire send. Next we model multiple system calls to the same component. The observation is that a component can only have a small, finite number of power states. And the methodology is simple: you follow the programming order, since you can't do a read or write before you do an open. And then you use something called a C test application. It's parameterized with code modules which you can easily shuffle around. The idea is you want to access multiple system calls together and see if they generate a new state, and I'll show you how to do it. What you do is identify and club together similar power states. So basically your send system call has a finite state machine like this. Your close system call, socket close, has a finite state machine like this, and you see that these two states are the same, the base state is the same. You just combine them. 
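The rate-triggered transition in the network model can be sketched in Python as follows. The 50 packets per second threshold and the 125 and 280 milliampere levels are the figures mentioned in the talk; how a rate of exactly 50 is classified is an assumption, and the tail state is left out to keep the sketch short.

```python
RATE_THRESHOLD_PPS = 50  # threshold mentioned in the talk

# Extra current above base, in milliamperes (good signal strength assumed).
EXTRA_CURRENT_MA = {"base": 0, "low_network": 125, "high_network": 280}


def network_state(packets_per_second):
    """Pick the productive power state from the recent send rate."""
    if packets_per_second <= 0:
        return "base"
    if packets_per_second > RATE_THRESHOLD_PPS:
        return "high_network"
    return "low_network"  # assumption: exactly 50 pps stays in the low state


for rate in (0, 45, 55):
    state = network_state(rate)
    print(rate, state, EXTRA_CURRENT_MA[state])
```

A small change in rate (45 versus 55 packets per second) lands in a different state with a much higher current, which is the behavior a linear per-packet cost cannot express.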
You go on combining multiple system calls going to the same component, and at the end you'll reach the finite state machine for an entire component. This is a very simple brute force approach. You're trying to access different system calls in different states. That's it. Modeling multiple components. We continue this approach. We have the observation that different components interact with each other. The methodology again is you try to reach different combinations of states from different places, and you see whether there are new states or transitions. I'll show you one simple example of a system captured for a device. This is a Tytn II phone on Windows Mobile 6.5. This is how the networking finite state machine looks. You are in the base state, you go to low network in case you're sending packets, and you go to high network and have the spike here. If you have to annotate CPU on top of this, you'll try to run a program when you are in the network tail state. You run the CPU and you measure the energy consumption. You model it as another state. Similarly, you run the CPU in the high network state and realize it consumes the same amount of energy and doesn't go anywhere else. Then suppose this is the disk part. Base state. Access the disk, and you have the disk tail. If you're in the disk tail and you access the CPU, you get a new state here. It consumes 130 milliamperes. Similarly, if you access the disk when the device is in the network tail state, there's a new state. It looks like it will blow up combinatorially; you'll have a large number of states and a large number of transitions. But what we found out is that most of these states are the same, and for three devices, say disk, CPU and network, we can stay under 12 states. So the combinatorial concern, with the brute force approach, for most of the components -- >>: That was an interesting diagram, but you seem to model [inaudible] only by one phase. Can you model your state machine with more than one tail? 
>> Abhinav Pathak: We haven't seen a device that has two tails. >>: Actually a 3G device is just like that. I mean there's something called hide-and-seek [phonetic], it actually has two tails. >> Abhinav Pathak: Right. Yes. We can model multiple tail states. But for all the devices that we tested we saw at most one tail state and at most two productive states. That doesn't stop us from having that kind of modeling where you have more than two productive states or two tail states. >>: Okay. The final state, the productive state. I'm saying the tail state. >> Abhinav Pathak: There could be two. Right. We can do that. Now, one of the problems we see is that with the combinatorial approach we are trying to access different states together, and you can quickly run into a large amount of testing. Now, what we found is that most components have one or two productive states, and most of the components we tested have at most one tail state. Even if you have two tail states, you're talking about 15 to 20 combinations for three or four components. And since power modeling is a one-time effort, we can say that you have to do it once and forget about it. So spending some time on this is acceptable. What we are working on is how to automate this procedure. This procedure is currently manual. We are trying to automate it, to run applications which can decide by themselves whether it's a new state or an old state, and get the model out. Regarding the completeness of the power modeling, theoretically a device driver can implement any number of complicated optimizations. If it rains outside then use this much power, otherwise use that much power. Nobody's stopping them from doing that. See if you can detect it. But ideally device drivers are not that complicated. They're pretty simple. They're based on simple history information, and practically we saw that. With simple things we were able to do reverse engineering for most of the devices we saw. 
As far as the implementation, we implemented system call tracing on Windows Mobile 6.5 and Android. On Windows Mobile we used CeLog; we extended CeLog to log additional system calls. It's just a layer above the kernel where we set down all the C applications. We log all the system calls: which application, which thread, what the parameters are, when the return was, everything. On Android, Android sits on top of the Linux kernel. We used SystemTap, a framework to log any calls in the kernel. And Android has Dalvik running on top of it, which already does some kind of optimization for applications running on top of Dalvik. So we have to additionally log what is going on in Dalvik. Our paper contains details of why we need to do these steps and so on. Evaluation. We used three handsets: a Tytn II and a Touch running Windows Mobile 6.5, and an HTC Magic running Android. I've shown most of the finite state machine results before. What I'll show here is the accuracy numbers. So we did end-to-end energy estimation. What we did is we chose a lot of applications, grab your maps, Facebook, YouTube, chess, virus scan, photo uploader, for both Android and Windows Mobile 6. We ran the applications. Some of the applications were run for 10 seconds, some for 20 seconds, and we measured the predicted and actual energy and the error in estimation. So this graph plots the error in estimation using our model, the finite state machine model, and the state-of-the-art linear regression model that we talked about earlier in the talk. The Y axis plots the error. What we see is that with our model, we are under 4% end-to-end estimation error in all the cases. Linear regression performs fairly well in this case. Some of the errors are less than a percent. Some of the errors go as high as 20 percent. >>: You're talking [inaudible] it's more or less that the integration [inaudible]. >> Abhinav Pathak: Exactly. >>: The devices. >> Abhinav Pathak: Exactly. 
And the point I'm trying to make here is that even in common applications, it hurts when you miss these particular things. Now, let's see what happens when we look at the energy consumption under a microscope. Right now we're doing only end-to-end energy measurement. So what we do in the next set of slides is, let's say an application runs for 10 seconds. All right. I want to find out whether we predicted correctly at smaller time intervals. So I split the interval into 50 millisecond bins, and for every bin I compute the error in estimation. And I draw a CDF of all of this. We have the CDF for our model, we have the CDF for linear regression, and we have different applications running on different OSes in both plots. How do we read the graph? Let's say this is a point here. We say that 60 percent of the 50 millisecond bins had less than 10 percent error. 40 percent showed more than 10 percent error. What would be an ideal curve on this graph? An ideal curve is any curve that sticks to the Y axis, because that way you'll have as small an error in estimation as possible. What we see here is that using the FSM, using our model, the 80th percentile of errors is less than 10 percent for all applications. That means in 80 percent of these 50 millisecond time periods, for all applications, we're under 10 percent error. This number for linear regression is only 10 percent. And we see the error increases. Some of them are not at all tolerable, like photo uploader showing 50 percent error in a lot of bins. So what this indicates is that you can use our model even when you're looking at a small granularity of time. You need not look only at end-to-end. It is correct at every intermediate position. And since 50 milliseconds is on the order of the time that functions take, we can use this to predict per-function energy in your source code. Our paper contains detailed results, measurements, overhead analysis. 
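The per-bin evaluation described above can be sketched in a few lines of Python. The energy values below are made up for illustration; each list entry stands for one 50 millisecond bin, and `fraction_below` gives one point on the error CDF.

```python
def bin_errors(predicted, actual):
    """Relative estimation error (in percent) for each time bin."""
    return [abs(p - a) / a * 100.0 for p, a in zip(predicted, actual) if a > 0]


def fraction_below(errors, threshold_percent):
    """One point on the CDF: share of bins with error under the threshold."""
    return sum(e < threshold_percent for e in errors) / len(errors)


# Illustrative per-bin energies (arbitrary units), one entry per 50 ms bin.
actual = [10.0, 10.0, 10.0, 10.0, 10.0]
predicted = [10.0, 10.5, 9.2, 14.0, 10.1]

errors = bin_errors(predicted, actual)
print(fraction_below(errors, 10.0))
```

Reading the result the way the talk reads the graph: a value of 0.8 at a 10 percent threshold means 80 percent of the bins were predicted with less than 10 percent error.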
I'll throw out a number, we're under nine percent overhead which is similar to any linear regression scheme. We show why can't you improve linear regression, why can't you improve what are the problems there. I'm skipping everything over here. What are the limitations of our approach? This is noticeable that we are not solving all the problems. But one of the limitations is we're based on the assumption that power optimizations in the device driver are very simple. And this is fairly true, because when you're writing low level code, you are advised not to write really complicated optimizations based on 100 different parameters. But there's nothing stopping anybody to do that. If this assumption is not true, then everything we built will -- it will make our modeling even more hard. The second is devices with high access rates. Network is a very good device. You do send once every 100 milliseconds in the worst case you don't do a million sends a second. The higher the access rate, the higher the system calls, it requires any component requires the higher your overhead would be. For us, right now the most significant overhead is caused by CPU logging CPU system call, basically which application is running on the CPU right now. If there is a device where you require millions of accesses every second we'll have a very high overhead. And last problem we have is we don't have lower level details like exactly when did the packet get out, because that's when the device driver would consume, the device would consume the power. We don't have that information. And definitely we have that information we can incorporate it in -- we can easily write down transitions for that. >>: This is why you say it's true that you can basically have a power function because many times you just micro filing deciding if it's a function that might happen or might not happen this percent of the time. >> Abhinav Pathak: Right. I'll talk about that in three slides from now. 
So current and future work. So current work, so we did the fine-grained and [inaudible] modeling and measurement. And on top of it we have, this is half work done right now, an energy profiler. We call it Eprof. It's like the standard gprof tool, which gives how much time the function runs and so on. We're trying to build an Eprof tool that gives how much energy was consumed in every function. Then we'll try to build on top of this an energy debugger. We want to answer a question like: Can you do better? Or is it already the best? Then we're also planning multi-device energy [inaudible] scheduling. I'll talk about all this in the next few slides. Let's talk about current work. We're doing the energy profiler. So the problem is we want to measure the per-function, per-thread, per-process energy consumption of an application. The challenge is you need to correctly perform the energy accounting. And as alluded to earlier, we need to account for tail energy. We need to account for device interaction, because if you don't do that, you can go terribly wrong. I have an example in the next slide. The approach we have is we build finite state machine power models. System calls provide a very good interface for you to tell which application, which process, which thread caused it. And to answer which function caused it, we currently just map the function boundary ourselves. Later we'll use compiler techniques. And we use a methodology called pinning to handle the tail side effects. What does it mean? Let's see. We have a simple program: main calls functions F1 and F2. F1 does something. It does a network send. And then F2 does some CPU work on the mobile. The power profile looks like this. X axis is the time and Y axis is how much power has been consumed. F1 starts here. It does a network send, network send completes there. F1 ends there. Function F2 starts there. F2 ends here. And the network tail ends here. 
Now, if you use strict timelines, that is, F1 started at that point, F1 ended at that point and the energy consumed by F1 is only the area in that part of the graph, you'll see F2 consumes only the energy in this area of the graph, and then you'll have no answer to the question of who consumed this energy. F1 and F2 are over; your application is done. How do you account for this energy? Ideally, what you need to do is ask a question: how much energy would F1 have consumed if there were no F2? And that would be all the area in this part. If there were no F2, which only consumes additional CPU, the power profile would have looked like this. And what we say is this should be the entire F1 energy. And only this should be the energy of F2. So the pinning methodology is simple. We pin functions based on what devices they use. And then we attribute the tail to those functions that are pinned to that device. So if a function is pinned for network, it will be accounted for the network tail. If a function is pinned for disk, it will be accounted for the disk tail. >>: What happens when you have two functions, both access -- >> Abhinav Pathak: That's a tricky question. What happens if you have two functions. >>: That's what I'm asking. >> Abhinav Pathak: Right. The simple approach could be you can split the tail energy, that's it. But that may not be correct all the time. Because if those functions were executed differently, you may not cross that threshold. You can only send 40 packets, 40 packets but they run together. So this you can give as a hint to the programmer. Say here is a way to -- and this leads policy on top of it how do you decide. >>: I think one thing -- >>: Go ahead. >>: Sort of a new part, I guess. But the sort of thing like you don't take -- you may but you haven't talked about it, you haven't taken too much advantage of any history here, right? 
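The pinning methodology described above for the F1/F2 example can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the profiler's actual implementation: the `Segment` type, the `attribute_energy` function and every power number are hypothetical, and the sketch assumes the power trace has already been broken down into per-device segments whose energies simply add, which the talk itself notes is not always true when devices run together.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Segment(NamedTuple):
    start_s: float
    end_s: float
    device: str
    watts: float
    function: Optional[str]  # None marks a tail segment with no active caller


def attribute_energy(segments):
    """Charge each segment to the function currently pinned to its device."""
    energy = defaultdict(float)
    pinned = {}  # device -> last function that actively used it
    for seg in sorted(segments, key=lambda s: s.start_s):
        if seg.function is not None:
            pinned[seg.device] = seg.function  # an active use (re)pins the device
        owner = pinned.get(seg.device, "unattributed")
        energy[owner] += seg.watts * (seg.end_s - seg.start_s)
    return dict(energy)


# Hypothetical trace: F1 sends on the network, F2 burns CPU during the tail,
# and the network tail outlives both functions.
trace = [
    Segment(0.0, 2.0, "network", 1.0, "F1"),  # F1's network send
    Segment(2.0, 8.0, "network", 0.5, None),  # network tail, pinned to F1
    Segment(2.0, 5.0, "cpu", 0.4, "F2"),      # F2's CPU work
]

result = attribute_energy(trace)
print(result)
```

In this made-up trace the whole network tail, including the part that overlaps F2 and the part after the application's functions have returned, is charged to F1, while F2 is charged only for its CPU work. The sketch does not address the case raised in the question that follows, where two functions are pinned to the same device.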
I mean, there are certain things that regardless of the applications you're using, functions and different combinations we're using them, over a period of time I would suspect you should be able [inaudible] and that should be able to feed you back some really useful information in fine tuning these models, right? >> Abhinav Pathak: That's a great idea. We can deploy this on users' handsets and do some learning, based on what is the pattern and how is the energy consumed, and make some analysis. >>: Because you should be able to disambiguate from the question that he asked. It's always the case you always have noisy data and the way you get at noisy data is aggregate over multiple sources and you kind of know -- >> Abhinav Pathak: Right. That is a way out. Right. So what we did is took an application, an uploader application. It's simple. It has a GUI. You specify on the phone upload all the photos in this particular directory, and then upload all files calls read file one at a time, does hash calculation and then sends the file over the network. It does that for all the files. And what I've plotted here is how much percentage of time did each function consume, and how much percentage of energy was consumed by each function taking the tail side effects into account. And what we see here is send file consumes 50 percent of time. It consumes roughly 70 percent of the energy. If you don't consider -- >>: [inaudible] across the time? >> Abhinav Pathak: The entire, if the function, if the function runs 10 seconds how much time did the send file function take, how much time. And we make sure whenever the send file is completed, the file is transferred. It's not -- >>: It's not exact. >> Abhinav Pathak: It's not. It's end-to-end. So what we see here is that there's a lot of disparity here among -- >>: I'm confused now. >> Abhinav Pathak: Okay. So the blue bars, they show what is the percentage of time spent in this particular function when we use a DL clock. >>: Compared to what? 
>> Abhinav Pathak: So the whole application, let's say it consumes 100 seconds. >>: 50 percent of the whole application. >> Abhinav Pathak: 50 percent. >>: 50 percent of the CPU. But it's the way it's written -- >> Abhinav Pathak: [inaudible]. >>: Calls multiple different places. >> Abhinav Pathak: It's called how many of the files were there in the directory, that are in the same place. >>: Sorry. Just for a second there -- >> Abhinav Pathak: The thing I want to point out is if you don't consider the tail side effects, the 68 percent number, it boils down to 50, 55. And that is not correct, because you're not taking the tail into account. And another thing is though your hash calculation runs for 20 percent, it only consumes 10 percent. You can tell your programmer don't bother about this. This is already too little energy. You cannot save any more. Probably you can do something about that. >>: But isn't this like -- I'm not sure, what are the [inaudible] the learning you're providing. In a sense there's a lot of studies about they take so much energy, the display takes so much energy, such and such component takes so much energy. So we could map that learning into something for the user in some sense without actually having to do -- >> Abhinav Pathak: So the first step is you want to give the developer an insight, where is your energy being spent. And the next question, you want to tell him can you optimize here? So maybe send file consumes 70 percent of energy. But if you can't optimize it there's no point looking there. >>: I guess you're saying that you're doing it at the system call level. That's the difference? >> Abhinav Pathak: Right. >>: This particular system call takes this much? >> Abhinav Pathak: Exactly. And then using that we pin it on to the function state. >>: I'm trying to understand this, because you are looping through a file to do read file, hash calculation and send file. Send file and read file are blocking calls. 
So I am not sure if I can just separate them as read file how much time, hash calc how much time, send file how much time. That is from the user level. But you go down to the lower level before the device, some of the send file might be doing some concurrency, because they can be blocked and then go back and do some hash calculation. So it's probably not easy to have a very clear cut of how much time read file actually uses. Besides, new processors started to have multicore so that will make the concurrency even more overlapping. >> Abhinav Pathak: Right. So the moment you go to multicore, things change. And in this particular example, we made sure once the read file is done the entire data is there in the memory. Similarly, once a send file is done the entire data is gone. It's received on the server. And because the buffers on mobile are really small, they get filled up quickly. We made sure of that. There is a hard bound for that. But, yes, in general case [inaudible] so that presents an additional challenge of how do you mark the boundaries of the functions. >>: [inaudible]. >> Abhinav Pathak: Yep, just a couple of more slides. So what we can do is we're planning to integrate -- we're planning to develop a plug-in for Visual Studio where you can plot a heat map saying send file consumes a lot of time. Read file consumes the next time, once they're marked they're really hard to try to do something there. Future work, energy debugging. Once we have per-function energy consumption we want to answer the question: does the application consume reasonable energy? Or can we say what is the optimum energy? Still future work, we're not yet looking at it here. How do you decrease the energy consumption with or without compromising performance? And what are the best programming practices? Should we use a hash table? Should you use an [inaudible] want to answer questions like this so you can give programmers tools on how you save energy. 
This side, multi-device aware [inaudible] what we saw is during the course of our measurements, a lot of interactions, a lot of cut-offs we can use. And what we are saying is we'll try to use three schemes. One is inertia, one is camouflaging and one is anticipation. Inertia is basically if a device is doing some work, let it do the work. Because if you stop it doing work you'll incur tail energy. Camouflaging is when multiple devices run together, then the energies don't add up. So you can save energy. Think about a background application. If some application has run into a network tail state, you can run the background application right there, because you can cut down the application's energy by 50, 80 percent there. And then you can do something like anticipation. Stop the process; don't let it send any packet unless you have a lot of packets. So you can just pull this out. You can do that programmers these are being done in Android, coalescing, caching, request, GPS, Android framework. There's a lot of these optimizations. Then you can do tail avoidance and tail aggregation. Very simple strategies looking at this, the final -- the main contribution here is we developed fine-grained energy modeling. We predict energy consumption at really small intervals of time. Implemented this on two OSes, and we demonstrated the accuracy over the current state of the art. And that will be the last slide. Thank you. >> Victor Bahl: Great. [applause] >> Abhinav Pathak: Thank you. Take any questions. >> Victor Bahl: Any questions? >>: I think this is a major improvement over the modeling. It's very good that you are predicting 5 percent. Just wondering if you can expand a little bit in regard of the, for example, the Wi-Fi, you actually, there are many different bandwidths you actually use to talk to the AP. >> Abhinav Pathak: Exactly. >>: So the same device calls for send packets doesn't always end up using the same amount of energy, depending on the bandwidth that is used at that time. 
>> Abhinav Pathak: That is correct. And in fact some of the details I skipped here, when you are sending packets from the [inaudible] different power level they're transmitted actually at different rates. One is sending at 6.5. One is sending at 11. That in fact goes on in the packet. >>: Right. Cool. >>: One of the problems is the hardware dependence piece where you have to build the model by having the actual hardware available so you can monitor the behavior of the state machines. Have you thought at all about how big a set you need to have in order to come up with some generic, or is it even possible to come up with a generic algorithm that will work across multiple platforms at least of the same type, Smartphones, tablets, at least within each category? Or the second piece is, assuming that we can, which is my assumption, what is the minimal amount of information that hardware manufacturers need to provide in order to feed into a model successfully? >> Abhinav Pathak: So that's a good question. And right now we do modeling for a set of hardware components. You have them upon doing modeling. You can ask the component makers for some questions like give us the power transition codes. Give us -- >>: So the IP is going to prevent them from giving you lots of information. But I'm asking what's the minimum we can get from them, are the key features, can they be described in a way that would not, A, expose IP, and B, be onerous where the OEMs have to provide, especially since they may not want to go through all these steps themselves? So you need to give them a simple cookbook that says I need these five things from you so I can at least be in the ballpark. >> Abhinav Pathak: Right. So that is actually a hard question, because we are coming up against IP restrictions, because I would have asked for: give me the power states, give me the transitions. That is not useful. So we have to go back. >>: Things like more like on the order of A is twice as costly as B. 
A costs twice as much as B. And [inaudible] is break even X packets per second things like that where they can keep it at a fair fuzzy level but possibly give you enough so you can say at least application A appears to be worse than application B. >> Abhinav Pathak: That could be. >>: Sorry. You had a question. I had a second one. >>: No, I was going to ->>: That's fine. >>: I was probably going to go off. What I was trying to see if you had a product, how long would it take to deploy this and actually get some reasonable numbers, and I was going to allude to what type of information you need out of the hardware. >> Abhinav Pathak: So once we have this, the model available, you have a generic framework system calls which are running on all the phones. You just have to plug in the model and do the computation. There you can do it across all the devices. I have all the devices giving the system call information, and you can update the model whenever you get it or whenever the hardware changes. So that way you don't have to do a push all the time. >>: I guess the first question is are you thinking so one aspect of this is just to somehow random generate an app and puts it into the marketplace and the other one is such that we do ourselves here at Microsoft, right? Which one do you have in mind? >>: I was looking at the Microsoft one. >>: For us. Where we had the source code and things like that? >>: Yeah. >>: Yeah. That should be pretty straightforward. >> Abhinav Pathak: If you have the source code of the device drivers. >>: Source code would be there. You've already profiled a bunch of these. >> Abhinav Pathak: Right. >>: Whatever not, you can do it. Then it's just a matter of ->> Abhinav Pathak: Running the application. >>: Running the app. It should be ->> Abhinav Pathak: If you have source code of the application, then you can do it. >>: That's part of the paper that you're linked over there in terms of how to actually get it started? >> Abhinav Pathak: Right. 
So the paper has very tiny information, because towards the end how do you get this started. >>: It will be interesting -- we can talk about this, we can get this going. >>: So the next question is if you had the ability to instrument the operating system, again, what is the minimum set instrumentation point that you would want to have put in so that hopefully you can run this 24 by 7 and not have to do it in sampling mode but have the cost where you would always be monitoring resource utilization, what's the minimum number of instrumentation points that you would want to have then? I don't think it's necessarily every API that you'd want to do. I think you'd want to attribute disk IO, network packets, couple other, things GIS, what's the minimum instrumentation point, where we could think how we would potentially put those into an operating system running on a mobile device? >> Abhinav Pathak: So it depends on the device that was devised. For example, for the disk you only need five system calls, log in. >>: Well, file cache is one problem. So file cache it's not a disk operation so you gotta go deeper. >> Abhinav Pathak: Little deeper. Buffer cache what is going on there, is it further going on in the disk. Right. When these things come -- now we're talking about the whole kernel complexity here. >>: I understand. I want to know what's the minimum number of things you need. I have my own set. I was curious if you have a set that's different than that. We can take it off line. >> Abhinav Pathak: For devices I've seen, for the ones that we have tested removing the kernel complexity outside, five to six for most of them would be sufficient. Five to six system calls. And GPS there's only two system calls I need to track. Disk I need to track six or seven, I think. Network I need to track ->>: But there's a lot more than -- there's only sensors now that you're tracking, you have good models for it yet. >> Abhinav Pathak: So sensors, for example, let's say GPS. 
>>: Accelerometer. >> Abhinav Pathak: Accelerometer. You have one start, one end. Start accelerometer and stop, consumes nearly power. That would be a really good. Camera, for example, start the camera, hardware, close the camera, hardware. Really good. Maybe when the picture was taken that could be another trigger. But if you're talking about something like memory, we have a lot of problems there. Because capturing memory accesses at fine granularity will blow up the overhead. >>: Fortunately memory can be roughly you can call CPU and GPU, state memory is going to run linearly with that. Attributed on that side. >> Abhinav Pathak: We did something. >>: Cache manager. It's actually opposite -- the device drivers are a lot of times on these mobile things written by other parties. And so we don't even necessarily have access. Whereas, the kernel calls we do. So we actually take the opposite approach of instrumenting the kernel, knowing it's going to pass the device driver and also some frameworks that are being pulled, et cetera. So turn it on its head a bit, see what's inside the device kernel, device drivers. >> Abhinav Pathak: We did it exactly that way. I'd say per device I haven't got anything showing about six or seven system calls which are effective. For example, a seek file system call doesn't do anything. It just goes into memory. It need not go all the way to the disk. So those things we can [inaudible] we have a very, very small set needed to do this work. >>: Actually, some can be very expensive. A structure. So you can have one open cause five IOs. >> Abhinav Pathak: True. >>: Then it does one write and closes. Turns out the open source is part of that. So I would disagree with the statement that you can deal with opens and closes that are part of the meta operations. Metadata takes up one-third of the disk IO that we in the Windows operation. Dollar log file, dollar bitmap, dollar this, dollar MPI. It's one-third of the IO. 
That's just pure metadata. There's a lot of those cases where attribute other things and just the mainstream. Oh, he wrote ten bytes and he wrote ten bytes. But also have to update the last access time, reverse address structures, and change things, update some things and all of a sudden it blows up. >> Abhinav Pathak: Right, right now we're above a little. >>: That's the problem with the API level, I think you have to do it deeper in the guts and bowels of the kernel where it actually knows, oh, 16 IOs were generated as a result of that call. I know the thread ID it might be attributed back to in a lot of cases. Some cases we don't. But work on that. >> Abhinav Pathak: That is very interesting. >>: I would -- this is a great concept. I would say that universe is restricted in a way, couldn't get in the OS. But the methodology is pretty applicable. >> Abhinav Pathak: Pretty applicable. So, still, we cannot get into the Windows or Android. But even in Android we cannot get into device drivers but you can still use these -- >>: Windows source code, we give out source code licenses at the schools. Wouldn't want you to be scrumming through the cache manager on your own. >> Victor Bahl: All right. Thank you. Good job. I gotta go run back here. But thank you very much. >> Abhinav Pathak: Thanks.