
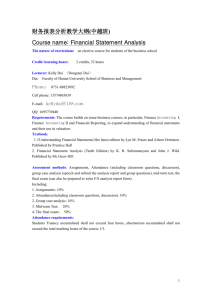

>> Eric Horvitz: It's an honor to have Peng Dai here from the University of Washington. Peng is a PhD student finishing up this spring. He's been working with a close colleague, Dan Weld, at the University of Washington, whom we've known for many years.

Dan and I talked years ago, I think it was at a banquet at a meeting, about all the crowdsourcing going on, which we considered ad hoc yet interesting, and whether we could do something to optimize it with decision making under uncertainty: to make it more efficient, to route people to problems and vice versa.

And Peng has been working in this space. It's a very exciting and promising space. Peng did his bachelor's degree in computer science at Nanjing University and master's degrees in computer science, first at the National University of Singapore and then at the University of Kentucky, where he worked with Judy Goldsmith, whom many of you know; that's what brought him to Lexington. He's been working in the areas of automated planning, decision making under uncertainty, and decision-theoretic optimization of crowdsourcing, and we'll be hearing from him today about some of the latter work. Thank you. Peng.

>> Peng Dai: Thank you, Eric. My background is in decision making under uncertainty, and my work is largely motivated by the lack of scalability of decision making under uncertainty. I'm fascinated by a new application, quality control for crowdsourcing, and that is my topic today.

So I wonder if any of you have dreamed that one day you'll be relaxing at your office while all your work is done by somebody else. If that is your dream, then this talk is right for you, because I'm going to tell you how to do that.

And the underlying technique is crowdsourcing. Crowdsourcing is a new notion: outsourcing tasks through an open call to a group of unknown people. It is really new, and it is growing very fast. For example, CrowdFlower used crowdsourcing to support the Haiti earthquake rescue. A game with a purpose called Foldit lets game players help solve large and complicated computational problems. A company called Smartsheet uses crowdsourcing to extract useful information from the Web, and Facebook uses crowdsourcing to do natural language translation.

With this rapid growth, I would argue that crowdsourcing has a large potential market. Think of outsourcing: outsourcing was introduced in the '80s and boomed in the '90s, and nowadays it has a market size of over 300 billion dollars.

Crowdsourcing was introduced only a few years ago, and it has already reached a market size of over one billion dollars. It is reasonable to estimate that one day it will be of the same order as outsourcing. Even if it only captures 10 percent of that market, it's still over 30 billion dollars.

>>: Where did that number come from, the crowdsourcing market? Do you know what Mechanical Turk is bringing in these days, for example?

>> Peng Dai: Yes, it's from a survey by Smartsheet.com.

>>: I wonder how much Mechanical Turk is bringing in. Anybody know the answer to that question?

>> Peng Dai: How many -- how many --

>>: You know, the gross revenue of Mechanical Turk; I wonder how big that is.

>>: Very little.

>>: I think it's [inaudible].

>>: And do you happen to know if these numbers are sort of net transfer numbers or gross? In other words, is it how much money a company like Smartsheet made, or is it that some people paid a billion dollars to some other people in aggregate and then a little tiny bit was taken off the top?

>> Peng Dai: Note that this number does not only take into account Mechanical Turk. There are many, many other websites for crowdsourcing. And this is only an estimate, not an exact number.

>>: But it's gross. In other words, it's like a billion dollars of --

>> Peng Dai: Essentially the total revenue for the entire crowdsourcing market.

>>: But that means that the people -- okay.

>>: [inaudible].

>>: Okay. That's fine. I was just curious. That seems a little [inaudible].

>> Peng Dai: Okay. And if we can use some sophisticated mechanisms to, for example, cut 10 percent of the cost, then we're talking about billions of dollars of savings. Amazon's Mechanical Turk is probably one of the most successful marketplaces for crowdsourcing. At any time of day there are over a hundred thousand HITs, or human intelligence tasks, available on Mechanical Turk. And there are two basic sides to Mechanical Turk.

On one side are the workers. The workers log on to Mechanical Turk, find the tasks that they're interested in, work on those tasks and earn some money.

On the other side are the requesters. The requesters post their tasks, get their jobs done and make a payment. There are over a hundred thousand registered workers on Mechanical Turk.

So even with this ample human resource, people are using Mechanical Turk in a fairly conservative way, because quality control is hard. Here are some representative tasks on Mechanical Turk. There are some simple tasks, such as survey questions and data labeling. For survey questions people don't actually need quality control, but for labeling tasks people use duplication as well as majority vote to ensure quality.

And there are some more complex tasks, such as audio transcription, document reviewing, document writing and things like that. To do quality control on these kinds of tasks, people use workflows that let workers improve and evaluate each other's work.

These quality control mechanisms tend to be very effective in practice. However, as computer scientists we ask ourselves: are those mechanisms good enough?

There are many interesting questions that we want to ask ourselves.

For duplication, how much duplication is enough? And is majority voting really the best mechanism we can use?

For the workflow, is there any way to dynamically generate and control it so that we get the best cost-quality tradeoff based on the history of answers we get? In this work we aim to answer all those questions.

So we built the first decision-theoretic quality control system for crowdsourced workflows. Our system is based on parameterized models, so we propose efficient mechanisms to learn the model of the system and plug the learned model into an end-to-end system that achieves statistically significantly better results. Notice also that our system is able to benefit from incrementally updating the model using new data.

So here is the outline of my talk today. I've talked about the motivation of this work. Next I'll move on to quality control for simple tasks; that is, quality control for boolean questions.

I'm going to propose our worker model and difficulty measure, describe our mechanisms to learn the model, and compare that with majority vote.

Then I'm going to move on to quality control for more complex tasks such as workflows, and tell you how we built a decision-theoretic quality control engine for workflows.

There is a lot of interesting related work. I want to point out that Eric has done some really interesting work on decomposing tasks into workflows and finding the best combination of human and computer resources to complete these workflows, whereas in our work we try to optimize a specific type of workflow. I'll finish by talking about future work and then conclude.

Okay. So I keep saying that quality control is hard. But why is it so hard? We have hundreds of thousands of workers on Mechanical Turk every day, and these people are from different backgrounds. They have different expertise.

They have different diligence levels, various motivations, and different backgrounds. So it is really hard to do.

Also, even a particular person might be multitasking, might be tired, or might be careless on different tasks, so there is a lot of uncertainty at the time the worker is completing those tasks.

The underlying intuition of majority voting is that even if no single worker is very accurate, as long as the workers answer independently and are all better than random, taking a majority vote over them will achieve better accuracy than individual workers.
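The benefit of majority voting under these assumptions can be checked with a short calculation (this is Condorcet's jury theorem). The sketch below assumes, for simplicity, that every worker has the same accuracy `p` and answers independently; with an odd number of voters `n`, the majority is right with the binomial tail probability computed here.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent voters, each correct
    with probability p, picks the right answer (n must be odd, so no ties)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    need = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


for n in (1, 3, 5, 11):
    print(n, round(majority_vote_accuracy(0.7, n), 3))
```

With p = 0.7, one voter is right 70 percent of the time and three voters are right 78.4 percent of the time; accuracy keeps rising with n as long as p > 0.5 and the independence assumption holds.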

And indeed, Rion Snow showed that the majority vote of, on average, four workers can achieve the same accuracy level as an expert on natural language annotation tasks.

When we approached this problem, our first intuition was that the workers are really independent. However, on second thought we felt that they are not, for the following reason. Think of a task asking which object is bigger. If I give you these two objects, then pretty much everybody is going to give me the same answer, which is the left one. However, if I give you these two objects, then the answers may diverge. The reason is that the left pair is really easy and the right pair is hard.

So the workers' answers are not independent, because of the hardness of the task itself. We model this through a parameter called the intrinsic difficulty, D --

>>: I don't understand. Why can't the workers be independent on the hard task? Let's say half of them say circle and half of them say triangle at random. Why would they be correlated?

>> Peng Dai: The answers are correlated because on a hard task people's answers diverge, whereas if you give them an easy task, maybe 90 percent or more of them will tell you the left one is the right answer.

So their answers are independent given the task, but not marginally independent.

>>: I guess, again, given the hard task, why would it not be just a coin flip?

>> Peng Dai: That's right. That's right. So that is actually our intuition. So their answers are independent given the task itself, given the difficulty of the task. But without the difficulty their answers are not independent, because the problem itself can be easy or hard. And --

>>: He's saying the opposite. Easy one is not independent. The hard one is independent. That's right, that's what you were saying?

>>: [inaudible].

>>: Yes. Okay. There we go. Okay. That makes much more sense.

>> Peng Dai: So instead we have conditional independence: the workers' answers are independent given the difficulty of the problem.
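A small numeric check of why conditional independence does not imply marginal independence. The task mix below (half easy tasks where each worker is right 95 percent of the time, half hard tasks that are coin flips) is made up for illustration. Within each kind of task the two workers answer independently, yet across tasks the chance that both are right exceeds the square of the chance that one is right, because difficulty drives both answers.

```python
# Hypothetical mix of tasks (illustrative numbers, not data from the talk):
# each entry is (probability of drawing this kind of task, per-worker accuracy on it).
TASKS = [
    (0.5, 0.95),  # an easy task: almost everyone is right
    (0.5, 0.50),  # a hard task: each worker is essentially flipping a coin
]


def p_correct(tasks):
    """Marginal probability that a single worker answers correctly."""
    return sum(weight * acc for weight, acc in tasks)


def p_both_correct(tasks):
    """Two workers answer independently *given the task*, so multiply within each task type."""
    return sum(weight * acc * acc for weight, acc in tasks)


single = p_correct(TASKS)
both = p_both_correct(TASKS)
print(f"P(one correct)^2 = {single**2:.4f}, P(both correct) = {both:.4f}")
```

Here P(both correct) is 0.57625 while the square of P(one correct) is 0.525625; the gap is the correlation that task difficulty induces.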

Now we have this difficulty, and we want to find the probability that a person answers a question correctly. Let's use a boolean question as an example. If the problem is really easy, meaning the difficulty is zero, then everybody should answer the question correctly, so the probability should be one.

At the other extreme, if the problem is really hard, then the probability should be 0.5, because the best anyone can do is a coin flip. These two extreme cases are rare in practice; what we're really interested in are all the intermediate cases.

So we use a family of functions to model a person's accuracy, and we introduce gamma, the error parameter of that person. A good voter typically has a smaller gamma, whereas a bad voter typically has a larger gamma.
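The talk does not spell out the function family here. A minimal sketch, assuming the form a(d, γ) = ½(1 + (1 − d)^γ), which matches both extreme cases described above (accuracy 1 at d = 0, a coin flip at d = 1) and makes a smaller γ mean a more accurate voter. The exact family used in the system may differ.

```python
def accuracy(d: float, gamma: float) -> float:
    """Probability that a worker with error parameter gamma (> 0) answers a
    boolean question of difficulty d in [0, 1] correctly.

    Assumed form (an illustration, not taken from the talk):
        a(d, gamma) = 0.5 * (1 + (1 - d) ** gamma)
    d = 0 gives 1.0 (everyone is right); d = 1 gives 0.5 (a coin flip);
    a larger gamma lowers accuracy at every intermediate difficulty.
    """
    if not (0.0 <= d <= 1.0) or gamma <= 0:
        raise ValueError("need 0 <= d <= 1 and gamma > 0")
    return 0.5 * (1.0 + (1.0 - d) ** gamma)


# A good, an average and a poor voter (hypothetical gamma values).
for gamma in (0.5, 1.0, 4.0):
    print(gamma, [round(accuracy(d, gamma), 3) for d in (0.0, 0.25, 0.5, 0.75, 1.0)])
```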

>>: [inaudible].

>> Peng Dai: Yeah?

>>: Are you right now treating the error as just a [inaudible] variable, or are you going to look at, for example, categorical tasks, where you would have a distribution of errors across different categories?

>> Peng Dai: So for this example we're just talking about boolean questions.

>>: Okay.

>> Peng Dai: So now we have this error parameter for every single person. And we want to figure out what are the exact values of these error parameters for people.

So here is our learning task. This is the standard plate notation for graphical models. It says that we have M boolean questions and N voters. Capital gamma refers to the unknown distribution of error parameters over the entire population. Small gamma is the error parameter of a particular voter, and it depends on capital gamma. And B here refers to the answer of a person to a particular question.

So the answer of a person depends on three variables: first, the error parameter of that person; second, the difficulty, D, of that question; and lastly V, the truth value of that ballot question. Is this clear?
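The dependencies in the plate diagram can be read as a generative process. A minimal sampling sketch, under our own assumptions: capital gamma is taken to be a Gamma distribution over voters' error parameters, difficulties are uniform on [0, 1), truth values are fair coin flips, and accuracy follows the hypothetical form a(d, γ) = ½(1 + (1 − d)^γ). The sizes in the usage line mirror the 20 questions and 45 voters mentioned later in the talk, but the sampled data is synthetic.

```python
import random
from dataclasses import dataclass


def accuracy(d, gamma):
    # Assumed accuracy form: 1.0 at d = 0, 0.5 at d = 1, lower for larger gamma.
    return 0.5 * (1.0 + (1.0 - d) ** gamma)


@dataclass
class Question:
    v: bool   # truth value V
    d: float  # difficulty D in [0, 1)


def sample_dataset(m, n, shape=2.0, scale=0.5, seed=0):
    """Sample M questions, N voters and an N x M matrix of ballots B.

    Capital gamma (the population distribution) is assumed to be
    Gamma(shape, scale) over each voter's error parameter (small gamma)."""
    rng = random.Random(seed)
    gammas = [rng.gammavariate(shape, scale) for _ in range(n)]
    questions = [Question(v=rng.random() < 0.5, d=rng.random()) for _ in range(m)]
    ballots = []
    for g in gammas:
        row = []
        for q in questions:
            # B depends only on this voter's gamma, the question's D and its V.
            correct = rng.random() < accuracy(q.d, g)
            row.append(q.v if correct else not q.v)
        ballots.append(row)
    return gammas, questions, ballots


gammas, questions, ballots = sample_dataset(m=20, n=45)
```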

>>: What is the capital gamma again?

>> Peng Dai: Capital gamma is the underlying distribution of error parameters over the entire population.

>>: [inaudible].

>> Peng Dai: It's like a prior, yes. Yes.

>>: Your D and V are shaded, but aren't they unobserved?

>> Peng Dai: Yes. So for this learning task we presume they are provided by human experts. So we're using supervised learning here. Okay? Yes?

>>: In many of these tasks you use the boolean [inaudible] the person, the task only [inaudible] not the correct answer. And you are doing majority voting to kind of discover what the correct answer is. So you really don't have the [inaudible] to be able to update the error.

>> Peng Dai: Right.

>>: So how do you solve this problem of not being able to know the ground truth to be able to [inaudible] the error parameter.

>> Peng Dai: Okay. That's a good question. For questions where we don't have the ground truth, we can instead use unsupervised learning, and I'm going to tell you about related work on unsupervised learning. Is that --

>>: I think my question is really this: the problem can be very difficult, so the answer is wrong, and your unsupervised algorithm will tell you that this problem is in fact easy, because you converge on an answer quickly even though the answer is wrong and the problem is really hard. So even your unsupervised learning algorithm wouldn't be able to [inaudible], so do you have any assumptions, like if you can get to the correct answer by [inaudible].

>> Peng Dai: So, no. We are assuming that the answers are provided in a way that aligns well with the model. An unsupervised learning algorithm will tell you, based on its inference, what the correct answers are. I know these kinds of questions are sometimes subjective, and sometimes there is really no correct answer. But let's assume for now that we do have a correct answer, which is unknown to us, and we're going to figure it out.

>>: I think her question is more towards the previous slide where you actually had this model. I think you're saying that there might be questions that are, in fact, hard but appear to have an easy answer. So even though it's in the very hard side people do agree on an answer and it's wrong. So --

>>: You don't have the ground truth and just depending on the collected answer I think that will be a problem in [inaudible].

>> Peng Dai: Okay. So we picked this image description task. We give a person an image and ask that person to write an English description that describes the picture and distinguishes it from similar pictures.

So this is a boolean question: we give workers two English descriptions and ask them which one is the better description. Okay? So we assume that we're getting boolean answers from the workers, and that the actual truth values and difficulties are provided by a human expert. Our question is: how good is each voter? We want to figure out the error parameters of all the individual workers, and our solution is maximum a posteriori estimation. Yeah?
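With expert-provided truth values and difficulties, MAP estimation of one voter's γ reduces to maximizing the log prior plus the log likelihood of that voter's ballots. A minimal sketch, assuming a Gamma(2, 0.5) prior on γ, the hypothetical accuracy form a(d, γ) = ½(1 + (1 − d)^γ), and a plain grid search instead of a numerical optimizer; none of these choices come from the talk.

```python
import math


def accuracy(d, gamma):
    # Assumed accuracy form (hypothetical): 1.0 at d = 0, 0.5 at d = 1.
    return 0.5 * (1.0 + (1.0 - d) ** gamma)


def log_gamma_prior(g, shape=2.0, scale=0.5):
    """Log density of an assumed Gamma(shape, scale) prior over gamma."""
    return (shape - 1) * math.log(g) - g / scale - math.lgamma(shape) - shape * math.log(scale)


def map_gamma(answers, grid=None, eps=1e-9):
    """MAP estimate of one voter's gamma from supervised data.

    answers: list of (b, v, d) = (voter's ballot, expert truth value, expert difficulty).
    A simple grid search over gamma in (0, 10] stands in for a proper optimizer."""
    grid = grid or [0.05 * k for k in range(1, 201)]

    def log_posterior(g):
        lp = log_gamma_prior(g)
        for b, v, d in answers:
            a = min(max(accuracy(d, g), eps), 1 - eps)  # clamp away from 0 and 1
            lp += math.log(a if b == v else 1 - a)
        return lp

    return max(grid, key=log_posterior)
```

A voter who always agrees with the expert labels gets a small γ; one who is right only half the time on moderately hard questions gets a larger γ.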

>>: What's the highlighting from?

>> Peng Dai: Oh, the highlighting shows the difference between the wordings of the two paragraphs.

>>: Then the percent is just the highlighting [inaudible].

>> Peng Dai: Yes. Yes?

>>: [inaudible] make the assumption that there is a right answer and that if someone provides another answer, that is wrong, whereas with something like a description it might be that 70 percent of the population prefer this description and 30 percent prefer the other, so there is not exactly a right and wrong here; it's a matter of preference. Can you explain a little bit how you see that?

>> Peng Dai: Yeah. That's right. As I mentioned, these questions are sometimes very subjective, and there are no right or wrong answers. But let's assume for now that we do have a boolean answer for those questions.

>>: So you assume that there is a correct answer and an incorrect answer, and whoever gives the incorrect answer is wrong. So there are no preferences or multiple meanings or stuff like that; there is just right and wrong?

>> Peng Dai: Right. [inaudible]. Yes. Okay.

And here are the learning results for the ballot model. We gave 20 questions to 45 voters in total, and we compared two mechanisms: majority voting and the ballot model. With all the votes, they both achieve the same accuracy, 80 percent.

However, in reality we don't have the luxury of asking 45 people the same boolean question. So we randomly sampled subsets of the voters and compared the two mechanisms. We can see that our model consistently and significantly outperforms majority vote. Using only 11 votes we achieve an accuracy very close to using all 45 votes. And with only five votes we reach the same accuracy that majority vote reaches with eleven votes.

That means we can save over 50 percent of the money.
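Why can a learned model beat majority vote with fewer ballots? Once each voter's γ and the question's difficulty are known, the ballots can be combined by Bayes' rule instead of counted equally, so one reliable voter can outweigh several careless ones. A minimal sketch, assuming the hypothetical accuracy form a(d, γ) = ½(1 + (1 − d)^γ), a uniform prior on the truth value, and made-up γ values.

```python
import math


def accuracy(d, gamma):
    # Assumed accuracy form (hypothetical): 1.0 at d = 0, 0.5 at d = 1.
    return 0.5 * (1.0 + (1.0 - d) ** gamma)


def majority(ballots):
    """Plain majority vote over (ballot, gamma) pairs; ignores gamma. Ties go to False."""
    yes = sum(1 for b, _ in ballots if b)
    return yes > len(ballots) - yes


def posterior_true(ballots, d, prior=0.5):
    """P(V = True | ballots) when each voter's gamma and the difficulty d (< 1) are known."""
    log_t, log_f = math.log(prior), math.log(1 - prior)
    for b, g in ballots:
        a = accuracy(d, g)
        log_t += math.log(a if b else 1 - a)  # likelihood of ballot b if V is True
        log_f += math.log(1 - a if b else a)  # likelihood of ballot b if V is False
    return 1.0 / (1.0 + math.exp(log_f - log_t))


# One careful voter (small gamma) against two careless ones (large gamma).
ballots = [(True, 0.2), (False, 5.0), (False, 5.0)]
print(majority(ballots), round(posterior_true(ballots, d=0.5), 3))
```

Majority vote returns False here, while the posterior puts roughly 93 percent on True, because the two dissenting voters are barely better than chance at this difficulty.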

Okay. And of course if you don't know the ground truth for the difficulties and the truth values of those questions, you can use unsupervised learning. Whitehill et al. used an interesting unsupervised learning algorithm that assumes no gold-standard data is provided, and they showed that their EM algorithm is pretty robust.
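For the unsupervised case, the usual pattern is expectation-maximization: alternate between estimating each item's true label from the current worker reliabilities and re-estimating each worker's reliability from those soft labels. The sketch below is not Whitehill et al.'s model, which also models per-item difficulty; it is a simpler "one-coin" stand-in (one accuracy per worker, uniform label prior) meant only to show the EM loop.

```python
def em_one_coin(labels, n_iter=50, eps=0.01):
    """Unsupervised truth inference with EM under a simplified one-coin model:
    each worker w is right with an unknown probability p[w], independently per item.

    labels: dict mapping (worker, item) -> bool.
    Returns (q, p): q[item] = P(item is True), p[worker] = estimated accuracy."""
    workers = sorted({w for w, _ in labels})
    items = sorted({j for _, j in labels})
    # Initialise item posteriors with the fraction of True votes (a soft majority vote).
    q = {}
    for j in items:
        votes = [b for (_, jj), b in labels.items() if jj == j]
        q[j] = sum(votes) / len(votes)
    p = {}
    for _ in range(n_iter):
        # M-step: a worker's accuracy is their expected agreement with the current posteriors.
        for w in workers:
            mine = [(j, b) for (ww, j), b in labels.items() if ww == w]
            agree = sum(q[j] if b else 1 - q[j] for j, b in mine)
            p[w] = min(max(agree / len(mine), eps), 1 - eps)
        # E-step: recompute each item's posterior from the workers' accuracies.
        for j in items:
            like_t = like_f = 1.0
            for (w, jj), b in labels.items():
                if jj != j:
                    continue
                like_t *= p[w] if b else 1 - p[w]
                like_f *= 1 - p[w] if b else p[w]
            q[j] = like_t / (like_t + like_f)
    return q, p
```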

>>: They had the same model you said?

>> Peng Dai: Not necessarily the same model, but a similar-looking one with the same independence structure. Yes?

>>: So [inaudible] the key thing they provide is modeling the biases of individual voters, and a lot of the power comes from that: they model both the biases with respect to different answers and two modes, where the voter is paying attention or not, with different bias models. The key results there were due to those two factors. So would you be able to introduce those into your model? Have you considered that or --

>> Peng Dai: Yeah, that's [inaudible] work. We could incorporate all those factors into our model, yeah, that's true.

>>: Have you -- I mean do you play with it --

>> Peng Dai: No, we haven't done that yet. Yeah.

>>: But this brings up an interesting question. There is a per-voter parameter like gamma for estimating the reliability of that voter. But as you mentioned earlier, and as many other studies have found, somebody who is good for a while can decline in quality, not [inaudible] certain questions. It seems like there's almost a per-question parameter [inaudible]. I wonder if [inaudible] all these people and you figure out some value of gamma, is that really good enough to predict that person's future performance if they're going to [inaudible].

>> Peng Dai: Yes, that's a really good question. Learning a temporal model of workers, as you mentioned, is a really interesting topic that I'm going to pursue in the future. Yes, exactly. Yes?

>>: A factor that actually matters is the [inaudible] which you are giving to the question. If you are thinking more, paying more attention [inaudible] in this model or is it [inaudible].

>> Peng Dai: That's an interesting question. According to some work, by Watts and someone whose name I forget, the quality of the answers does not necessarily go up with the price, whereas the response speed goes up with the reward. That's an interesting observation.

>>: [inaudible] assuming the same [inaudible] you can kind of classify your population for [inaudible] set of workers [inaudible].

>> Peng Dai: Sure. Sure. That's another interesting thing to think about in the future. Yeah.

>>: So maybe you'll get to this, but right now you seem to be assuming that your workers have a single scale of knowledge, where a good worker is dominant across all examples, whereas people tend to be good in specific areas, right? A worker may be highly accurate in one area and not in others. So are you going to address that or --

>> Peng Dai: Yeah, exactly. That's actually another piece of future work that I'm going to talk about: how do we use the model for one particular task on another type of task?

Okay? Yeah, that's actually the end of this section. Are there any more questions?

>>: [inaudible].

>>: [inaudible].

>> Peng Dai: So, yeah, so this is actually --

>>: [inaudible] unsupervised.

>> Peng Dai: Oh, the unsupervised one. I don't have their results here, but it turns out that their simple algorithm actually performs better than majority vote. I just don't have the statistics on this slide. Yeah.

>>: So you didn't actually do this experiment?

>> Peng Dai: Sorry?

>>: You did not do this experiment?

>> Peng Dai: I didn't do this experiment, yeah.

>>: But what works great [inaudible] the model for the next [inaudible].

>> Peng Dai: Yeah.

>>: So can you go back a slide? Either I'm confused or I don't know.

So you're doing the supervised version. You're assuming you know the answers to the questions, right? Did you look at, you know, [inaudible] answers to some of the questions, then estimate the difficulty and see how well you would do on the rest, or anything like that? Or is this result saying that if they gave me 11 answers and I know all the correct answers, this is how accurate I can be? I'm a little confused.

>> Peng Dai: No, these are cross-validation results. Yeah. So I --

>>: What does that mean?

>> Peng Dai: I train the model on part of the labeled data and predict the correct answers for the rest of the questions. And I --

>>: Okay. So this means you used 11 known answers [inaudible].

>> Peng Dai: Oh, no. I only picked a subset of 11 votes out of the 45 votes and trained the model on those, and then I predict the answers for the rest of the results.

>>: Okay.

>> Peng Dai: Tasks. Yes.

>>: I see.

>>: Which raises the question: are you just taking these 11 with the top gamma [inaudible].

>> Peng Dai: No, I -- random. Random.

>>: Oh.

>> Peng Dai: Yeah.

>>: Okay. I mean, it seems like there might be a question of optimal subset also.

>> Peng Dai: Yeah, that would be the best scenario. But we tried about 10,000 random subsets and calculated the average.
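The averaging protocol described here can be sketched as follows: draw many random subsets of k voters, score each subset, and average. This sketch scores a subset by plain majority vote on every question and omits the train/test split of the cross-validation; the function names and the tie-breaking rule are our own choices, and a model-based predictor could be substituted for `majority`.

```python
import random


def majority(votes):
    """Majority of a list of booleans; ties are broken toward True (an arbitrary choice)."""
    return 2 * sum(votes) >= len(votes)


def subset_accuracy(ballots, truth, k, trials=10_000, seed=0):
    """Average accuracy of majority vote when only k randomly chosen voters are used.

    ballots: list of per-voter answer lists (voters x questions); truth: list of bools.
    Each trial draws a fresh random subset of k voters and scores it on every question."""
    rng = random.Random(seed)
    n_voters, n_questions = len(ballots), len(truth)
    total = 0.0
    for _ in range(trials):
        chosen = rng.sample(range(n_voters), k)
        correct = sum(
            majority([ballots[i][j] for i in chosen]) == truth[j]
            for j in range(n_questions)
        )
        total += correct / n_questions
    return total / trials
```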

>>: So you're not assuming any pre-information about [inaudible].

>> Peng Dai: No, I don't.

>>: [inaudible] pick five at random.

>> Peng Dai: Any more questions? Okay. So now I'm going to move on to quality control for complex tasks: how do we optimize workflows? Here is a handwriting recognition task. I'm going to give you maybe half a minute to figure out what this handwriting says.

>>: It looks like my handwriting actually. [laughter].

>>: Well, there you go.

>>: I actually [inaudible] by the way [inaudible].

>> Peng Dai: Yeah. So everybody can see that it is a really hard task.

>>: I wonder if [inaudible] a neurologist. [laughter].

>>: [inaudible] for time. [laughter].

>> Peng Dai: So Greg Little approached this problem using an iterative improvement workflow. The idea is as follows. At every iteration, it gives the crowd an artifact, which is the current deciphering of this handwriting, and asks: here is the current deciphering of this handwriting; can you improve it for me?

After that, both the new artifact and the old artifact are given to two or three voters, depending on whether the first two agree with each other, and then, based on majority vote, only one artifact is kept as the starting point for the next iteration.
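The static workflow just described can be simulated in a few lines. This is a sketch under made-up assumptions: an artifact is represented only by a hidden quality score in [0, 1], `improve` and `vote` are hypothetical stand-ins for posting improvement and ballot HITs, and voters pick the truly better artifact 80 percent of the time. The loop itself follows the description: improve, ask two voters, add a third if they disagree, keep the majority's choice, and repeat a fixed number of times.

```python
import random


def run_static_workflow(quality, iterations, improve, vote):
    """Fixed iterative-improvement loop: improve, then vote with 2 voters (3 if they disagree).

    quality: hidden quality of the starting artifact (a stand-in for the artifact itself).
    improve(q) -> quality of the improved artifact; vote(old, new) -> True if voter prefers new.
    Returns the final quality and the number of ballots used in each iteration."""
    ballots_used = []
    for _ in range(iterations):
        candidate = improve(quality)
        votes = [vote(quality, candidate), vote(quality, candidate)]
        if votes[0] != votes[1]:  # the first two voters disagree, so ask a tie-breaker
            votes.append(vote(quality, candidate))
        ballots_used.append(len(votes))
        if 2 * sum(votes) > len(votes):  # keep whichever artifact the majority prefers
            quality = candidate
    return quality, ballots_used


# Hypothetical simulated crowd (all numbers made up).
rng = random.Random(1)


def improve(q):
    """A worker's edit: usually a small gain, sometimes a regression."""
    return min(1.0, max(0.0, q + rng.gauss(0.05, 0.1)))


def vote(old, new):
    """A voter who picks the truly better artifact 80 percent of the time."""
    truly_better = new > old
    return truly_better if rng.random() < 0.8 else not truly_better


final_quality, ballots_per_iteration = run_static_workflow(0.2, 6, improve, vote)
```

Nothing in this loop looks at how confident the votes are or how good the improvers have been; the iteration count and ballot rule are fixed in advance.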

So instead of having an individual person work on this handwriting task, it just asks people to improve previous people's work iteratively. That's the idea. After six iterations we got this very, very good deciphering, which has only four words wrong. And the part I like the most is that this thing really looks like a signature, but it turns out to be a score, B minus. [laughter].

>>: So how do you get the ground truth [inaudible] the person that actually wrote this?

>> Peng Dai: They did the handwriting. Yeah.

And they also used this iterative improvement workflow for an image description task. Same idea: everybody improves on the previous person's description iteratively.

And here is the first version of this description. And after eight iterations we got a very decent description of it. And that is the power of iterative improvement.

>>: It looks like there's a strong potential for [inaudible] of each round you're going to get heavily [inaudible].

>> Peng Dai: Yeah, yeah. Exactly.

>>: You may want to start a bunch of these you know at ground zero [inaudible].

>> Peng Dai: Yeah, that's interesting: starting from different starting points and seeing how they evolve. That's something to pursue in the future. Yeah, sure.

So Greg Little is an HCI person. When he came up with this iterative improvement idea, he didn't realize there are many interesting decision points in this workflow. For example: should I take another ballot? Should I perform another improvement? Or is the description good enough that I should submit my current version?

What he uses is a static workflow, which does not capture a lot of interesting dynamic cases. For example, if we have good ballot workers, we might need fewer votes. If we have a good improver, we might need fewer improvement iterations. And if we have consecutive runs of failed improvements, that means the quality of the artifact is already pretty high. So --

>>: What's the difference between a good ballot worker and a good improvement worker?

>> Peng Dai: A ballot question is when you give workers two artifacts and ask them to choose which one is better. An improvement question is when you give them one artifact and a picture and ask them to improve that artifact. Okay? A ballot question is just boolean.

So we use dynamic workflow control. Here is our problem definition. Our workflow consists of a sequence of tasks, each of which is either an improvement or a ballot, okay? The observations are as follows: we have the history of tasks that we have posted, and we also have all the answers and the worker information for those tasks.

Our decision is to dynamically generate the next task so as to maximize our long-term expected reward minus the total cost.

>>: So each worker could participate in one of those [inaudible] tasks. There are two separate [inaudible].

>> Peng Dai: Yes. Each worker can choose to take any single task in this workflow.

So this problem is really hard because it is a sequential decision-making problem. The ballot answers are noisy, which hopefully we have already addressed in the previous section. The quality is unknown, and workers' improvement results are uncertain.

To solve this problem, we turn to decision making under uncertainty, a core topic in artificial intelligence. For example, robots and automated agents are controlled by planners and solvers for decision making under uncertainty. It has been used in dialog management systems, and reinforcement learning techniques have been used, for example, to automatically control helicopters. And of course we use it to dynamically control crowdsourced workflows.

Here is our solution. We have a decision engine called TurKontrol, which has a model of the workers. Based on the model and the history of answers, our planner automatically generates the next task. On top of that, we have a learning algorithm that learns the model of the workers.

We get a problem from a person, the boss, and we return the solution to that person, and based on the quality of the solution we get a reward from that person. To interact with workers, we post HITs (human intelligence tasks) on Mechanical Turk, collect the answers, and pay those people.

So here is a dynamic workflow generated by our agent. Suppose we are given an initial artifact alpha. Our first decision is whether we need an improvement. If the answer is no, we simply submit alpha. Otherwise we generate an improvement HIT, which gives alpha to the crowd and asks the crowd to improve it. Then we get an improvement, alpha prime, and based on our model we estimate the quality of alpha prime.

Our next question is whether we need voting here. If the answer is yes, we generate a ballot HIT, which presents the crowd with both alpha and alpha prime and asks which one is better. Then we get a ballot result, b, from the worker, and based on the model we update our quality estimates.
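The quality-estimate update after a ballot is an application of Bayes' rule. As a simplification of our own (the agent described in the talk maintains quality estimates for the artifacts, not just this binary belief), the sketch tracks only the probability that alpha prime is better than alpha and treats a voter as reporting the better artifact with probability `a`; in the ballot model discussed earlier, `a` would come from the voter's gamma and the question's difficulty.

```python
def update_belief(p_new_better, ballot_prefers_new, worker_accuracy):
    """Bayes update of P(alpha' is better than alpha) after one ballot.

    Simplification (ours): the hidden state is only which artifact is better, and a
    voter with accuracy a reports the better artifact with probability a."""
    a = worker_accuracy
    like_if_new_better = a if ballot_prefers_new else 1 - a
    like_if_old_better = 1 - a if ballot_prefers_new else a
    num = p_new_better * like_if_new_better
    return num / (num + (1 - p_new_better) * like_if_old_better)


p = 0.5  # undecided before any ballots
for ballot, acc in [(True, 0.8), (True, 0.8), (False, 0.6)]:
    p = update_belief(p, ballot, acc)
```

Two agreeing ballots from voters with accuracy 0.8 move the belief from 0.5 to 0.8 and then to about 0.94; a dissenting vote from a weaker voter pulls it back only slightly.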

Then we go back to the question of whether we need more voting. If the answer is no, we pick the artifact with the higher expected quality and go back to the very first decision. Is this clear?
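The "do we need more voting?" box is where cost and quality trade off. A minimal sketch of one way to make that decision, using a greedy one-step lookahead rather than the full decision-theoretic planner the talk describes: compare the expected utility of stopping now with that of buying exactly one more ballot and then stopping. Here `p` is the current probability that alpha prime is better, `a` is the next voter's accuracy, and the utility of picking the better artifact (`value`) and the ballot `cost` are hypothetical parameters.

```python
def expected_utility_stop(p, value=1.0):
    """Expected utility of stopping now and keeping the artifact more likely to be better."""
    return value * max(p, 1 - p)


def expected_utility_one_more_ballot(p, a, cost, value=1.0):
    """Expected utility of buying exactly one more ballot (accuracy a) and then stopping."""
    # For each ballot outcome, keep the larger of the two joint probabilities
    # P(outcome, alpha' better) and P(outcome, alpha better); their sum is the
    # probability of ending up with the better artifact after that ballot.
    says_new = max(p * a, (1 - p) * (1 - a))
    says_old = max(p * (1 - a), (1 - p) * a)
    return value * (says_new + says_old) - cost


def need_more_voting(p, a, cost, value=1.0):
    """One-step-lookahead answer to the 'do we need more voting?' question."""
    return expected_utility_one_more_ballot(p, a, cost, value) > expected_utility_stop(p, value)
```

With an undecided belief of 0.5, a ballot from a voter with accuracy 0.8 raises the chance of keeping the better artifact from 0.5 to 0.8, which is worth a cost of 0.05; at a belief of 0.99 the same ballot can no longer change the decision, so the sketch stops.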

Compared to --

>>: What's the split-up of [inaudible] I mean [inaudible] assumes that [inaudible] you just submit -- you know, if you have an alpha prime, submit it to the population, they vote on it and you're done, with that same alpha and alpha prime why would you go back to the voting on that same pair?

>> Peng Dai: Oh, so this is just one voting task.

>>: Oh, I see. So you have --

>> Peng Dai: So you might want multiple voting.

>>: I see.

>> Peng Dai: Yes.

>>: So you increase the number of people voting in --

>> Peng Dai: Exactly. Exactly.

>>: I see. Okay.

>> Peng Dai: So compared to a static workflow, our agent dynamically makes two decisions: one is whether we need an improvement, and the other is whether we need voting. Yes?

>>: How do you control [inaudible]? Say there are two opinions that dramatically differ; then every iteration you could cycle continuously, where workers say the previous one is wrong and this one is right, and then in the next round the other judges disagree, and so on.

>> Peng Dai: Yeah, that's going to be captured by our difficulty measure. Because if the questions really get divergent answers that probably means that the question is really hard. Right?

>>: But which of the boxes here will exit with an uncertain "don't know" [inaudible]?

>> Peng Dai: Yeah, I meant to talk about that. It's really hard to put all those things in the figure, but it is captured by our decision-theoretic planning agent and model.

>>: And so when we show the box -- we show the decision [inaudible] on the question?

>> Peng Dai: Do we need voting -- do we need more voting? If the problem is really hard, then we probably don't want to go much further, because we're not getting any value there.

>>: Wouldn't your "improvement needed" box need to submit a partial answer then, because you go back to "improvement needed"?

>> Peng Dai: What do you mean?

>>: To get out of the loop, it looks like you need to go through submitting the incomplete or indecisive answer.

>>: He's just saying that -- I mean, if that box, if the [inaudible] box eventually says okay, we're stuck, something's wrong, then in the diagram you have, it seems like [inaudible] you have to go through that [inaudible] box somehow. Maybe you have some other way of escaping if the more-voting [inaudible] is messed up.

>> Peng Dai: So, yeah, there's a mechanism for deciding whether we need any more voting, and if we don't need any more voting, then we pick the best one. Then we go back to the previous decision, do we need improvement, right? And the new improvement iteration starts from there.

>>: [inaudible] and I stop and I cannot get an answer out, where do I get out of the system without costing me any more resources?

>> Peng Dai: Yeah. It's going to be decided by our decision engine when it decides whether we need more voting.

>>: So what you're saying is that to be able to get out of the system, the only place you can get out is the first decision [inaudible] the second one? So that's what I'm thinking [inaudible] right?

>> Peng Dai: Yeah. That is actually determined by the decision-theoretic engine, which considers every single branch and makes the optimal choice based on the current results. That is captured by the system itself.

>>: So there seems to be an assumption here that you can actually make a decision at every point. But let's say I was trying to get people to classify things into a hierarchy, right? You can imagine that at the top of the hierarchy there's a lot of indecision about which branch something goes into, and I keep getting back this loop of "more voting needed," "improvement needed," because I can't decide among the top things. But if I said, look, these are the feasible candidates, pursue these down, come up with the whole classification, and now vote, then when people see the full thing they might be able to make a decision where they couldn't at the initial point. So how do you handle those kinds of problems, where once you actually follow one option to completion it just doesn't seem to make sense consistently? You can imagine translation, where you're getting stuck on some ambiguous word, and with this reading I think the whole thing means this; but once I've done the whole thing, suddenly it doesn't make sense.

>> Peng Dai: Sure. I think this might point to the problem that the system can get stuck in a local maximum or something like that, as you pointed out. Another question is whether our system is able to do backtracking in some sense. Is that what you're suggesting?

>>: I wouldn't say -- you could think of it as a local minimum, but you might actually pursue each of them. At some point it's worthwhile to say, let's push these further, see where we end up, and then see if we can evaluate --

>> Peng Dai: Yes, exactly. In that case we have more decisions to make. Exactly.

>>: I think one question I have here is whether the policy of this decision making always does [inaudible] improvements [inaudible] so that you always have two things to compare, or can I have improvements, improvements, improvements, so that I have many possibilities, and then I do one more thing and [inaudible] alternatives?

>> Peng Dai: That's exactly what you described, yeah. That's the system's --

>>: [inaudible].

>> Peng Dai: Yeah. The system actually works as you described.

>>: Okay.

>> Peng Dai: Yes.

>>: Because from this picture it [inaudible] at the first case where you do any improvement and [inaudible].

>> Peng Dai: So, yeah. After an improvement you can decide that we don't need any more voting, and so we go back and do another improvement. Is that what you're --

>>: Then you have multiple alternatives, right?

>> Peng Dai: Sure. Okay. Our system is able to estimate the quality of the artifact based on the model. So here is the model. There are some measures we want to capture. First of all, we want to define the quality of an artifact. We know that an artifact is of high quality if it is hard to improve. So we define the quality q such that an average person will improve that artifact with probability one minus q. Of course this is an unknown quantity, so it is best estimated as a random variable, capital Q. Next we define the difficulty of a ballot hit. A ballot hit has two artifacts, one of quality q and the other of quality q prime. We define the difficulty to be one minus the absolute difference between the two qualities, raised to the power of some trained constant M. This means that the closer the two qualities are, the harder the task is.
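The two definitions above can be written down directly. A minimal sketch: the function names are illustrative, and the value M = 2 in the example is made up, since in the talk M is learned from training data.

```python
def improvement_probability(q: float) -> float:
    """Chance that an average worker improves an artifact of quality q."""
    return 1.0 - q


def ballot_difficulty(q: float, q_prime: float, M: float) -> float:
    """Difficulty of a ballot comparing qualities q and q_prime: 1 - |q - q'|^M."""
    return 1.0 - abs(q - q_prime) ** M


# M = 2 is a hypothetical value; the real M comes from training.
print(ballot_difficulty(0.60, 0.62, M=2))  # close qualities: near 1, a hard ballot
print(ballot_difficulty(0.20, 0.90, M=2))  # far apart: about 0.51, an easier ballot
```

The exponent M controls how quickly ballots get easier as the quality gap between the two artifacts widens.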

And of course we have a reward function from the human, or the boss, that expresses his relative satisfaction with the quality of the artifact we give him. We also have costs, which are the amounts of money we need to pay the workers. Our goal is to maximize the long-term expected reward minus the total cost.

>>: I'm sorry, where does the M come from?

>> Peng Dai: M comes from training. So we model this problem as a partially observable MDP, but I'm going to spare you the technical and mathematical details of the POMDP and instead talk about the key components of our model.

So our system makes three dynamic decisions; that is, at every point it can take one of three actions: do another ballot, do another improvement, or submit.

The transitions come from improvement, because after an improvement we get a new artifact, alpha prime, with a different quality, Q prime. Of course Q prime is unknown. We use a conditional distribution function to model an improver's accuracy; that is, the quality distribution of the new artifact given the quality of the old artifact as well as the improver.

The observations in this model come from the ballot hits. Based on the ballot hits we update our belief distribution over the qualities, because each ballot gives us more information about the qualities of the two artifacts.

And here is an illustration. Suppose our initial artifact has this distribution, we do an improvement, and we have an estimate of the distribution of the new artifact, which looks like this. Suppose we then do a vote: we arrive at a new distribution for the new quality and replace the old one. Suppose we do another ballot and update the quality of the new artifact again, right?

And we replace it. Now let's suppose that we do not need any more voting, so we compare these two artifacts by comparing their distributions, and we pick the better artifact, which here is the one with slightly better quality.

>>: Do you do that comparison in expectation?

>> Peng Dai: Yes. And we keep that artifact as the starting point for the next decision, the next improvement iteration. Is that clear? Okay.
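The ballot update and the final comparison in this illustration can be sketched as Bayes' rule over a discretized joint belief about (Q, Q prime). This is a sketch under stated assumptions, not the system's actual model: the 11-point quality grid, the uniform prior, and the voter-accuracy curve 0.5 * (1 + (1 - d)^gamma) with gamma = 1 are all assumptions introduced for illustration.

```python
GRID = [i / 10 for i in range(11)]  # hypothetical discretization of quality in [0, 1]


def vote_likelihood(q, q_prime, vote_for_prime, M=1.0, gamma=1.0):
    """P(ballot outcome | q, q') under an assumed voter-accuracy model."""
    if q == q_prime:
        return 0.5  # equal qualities: either answer is equally likely
    d = 1.0 - abs(q - q_prime) ** M  # ballot difficulty
    accuracy = 0.5 * (1.0 + (1.0 - d) ** gamma)  # assumed accuracy curve
    return accuracy if vote_for_prime == (q_prime > q) else 1.0 - accuracy


def update_belief(belief, vote_for_prime):
    """Bayes' rule over {(i, j): P(Q = GRID[i], Q' = GRID[j])}."""
    posterior = {
        (i, j): p * vote_likelihood(GRID[i], GRID[j], vote_for_prime)
        for (i, j), p in belief.items()
    }
    total = sum(posterior.values())
    return {k: v / total for k, v in posterior.items()}


def expected_qualities(belief):
    """Return (E[Q], E[Q']) under the joint belief."""
    e_q = sum(p * GRID[i] for (i, _), p in belief.items())
    e_q_prime = sum(p * GRID[j] for (_, j), p in belief.items())
    return e_q, e_q_prime


def pick_better(belief):
    """Keep whichever artifact has the higher expected quality."""
    e_q, e_q_prime = expected_qualities(belief)
    return "alpha_prime" if e_q_prime > e_q else "alpha"


prior = {(i, j): 1 / 121 for i in range(11) for j in range(11)}
after_one_vote = update_belief(prior, vote_for_prime=True)
print(expected_qualities(after_one_vote), pick_better(after_one_vote))
```

Starting from the uniform prior, one vote for alpha prime moves the expected qualities from 0.5 and 0.5 to roughly 0.4 for alpha and 0.6 for alpha prime, so `pick_better` keeps alpha prime. The belief is kept jointly rather than as two independent marginals because a ballot is evidence about the difference between the two qualities.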

So, the planner. The goal of planning is to make dynamic decisions that maximize our long-term expected utility. Of course this problem is too large to solve exactly, because we have two continuous variables, Q and Q prime, so the state space is infinite. Instead we use an approximation algorithm. We tried a number of approximation algorithms and found that limited-step lookahead actually performs best in practice.
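The limited-step lookahead idea can be illustrated on the voting decision alone. This is a sketch under assumptions, not the system's planner: it keeps only two actions (stop voting and keep the better artifact, or buy one more ballot), values stopping as 25 dollars times the higher expected quality with one cent per ballot (the prices quoted later in the talk), and uses a hypothetical quality grid and an assumed voter-accuracy curve; the improvement branch is omitted.

```python
GRID = [i / 10 for i in range(11)]  # hypothetical discretization of quality


def vote_likelihood(q, q_prime, vote_for_prime, M=1.0, gamma=1.0):
    """Assumed voter model: accuracy 0.5 * (1 + (1 - d)^gamma), d = 1 - |q - q'|^M."""
    if q == q_prime:
        return 0.5
    accuracy = 0.5 * (1.0 + abs(q - q_prime) ** (M * gamma))
    return accuracy if vote_for_prime == (q_prime > q) else 1.0 - accuracy


def stop_value(belief, reward):
    """Reward for keeping whichever artifact is better in expectation."""
    e_q = sum(p * GRID[i] for (i, _), p in belief.items())
    e_q_prime = sum(p * GRID[j] for (_, j), p in belief.items())
    return reward * max(e_q, e_q_prime)


def lookahead(belief, depth, reward=25.0, ballot_cost=0.01):
    """Depth-limited expectimax over {stop, ballot}; returns (value, action)."""
    best = (stop_value(belief, reward), "stop")
    if depth == 0:
        return best
    ballot_value = -ballot_cost
    for vote in (True, False):  # True: the voter prefers alpha_prime
        joint = {
            (i, j): p * vote_likelihood(GRID[i], GRID[j], vote)
            for (i, j), p in belief.items()
        }
        p_vote = sum(joint.values())  # predictive probability of this outcome
        if p_vote > 0:
            posterior = {k: v / p_vote for k, v in joint.items()}
            ballot_value += p_vote * lookahead(posterior, depth - 1, reward, ballot_cost)[0]
    if ballot_value > best[0]:
        best = (ballot_value, "ballot")
    return best


prior = {(i, j): 1 / 121 for i in range(11) for j in range(11)}
print(lookahead(prior, depth=1))
```

With a uniform prior, one step of lookahead prefers a one-cent ballot (expected value about 14.99 versus 12.50 for stopping), while a prohibitively expensive ballot flips the choice to stopping. The search tree grows as 2^depth per decision, so a small depth keeps each decision cheap, which is the appeal of limited lookahead when the exact continuous problem is out of reach.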

So, the learner. We want to learn how good a person is at improving other people's work. So we picked 10 image descriptions and gave all 10 descriptions to 10 workers, letting each of them improve every description. Now we have a hundred new descriptions as well as the 10 old descriptions, and we get their quality scores from Mechanical Turk graders. Our learning objective is to learn two conditional distributions. One is the worker-dependent model, which is the quality distribution of the new artifact given the quality of the old artifact as well as the improver.

The other is the worker-independent model, for which we do not have the worker's information, so we assume the worker performs at the average level.

So we need to learn a two-dimensional distribution, which is fairly complicated, so we break the learning process into two pieces. First, we learn the mean quality of the new artifact, and we assume that it is a linear function of the quality of the old artifact. Of course this is an approximation, but it turns out to be very effective.

In the next step, based on the linear regression result, we fit three distributions: beta, triangular, and truncated normal. So we have these three conditional distributions, and we want to find out which is the best distribution for our model.

To do this, we use leave-one-out cross-validation. We iteratively reserve a data point, perform a linear regression on the remaining data points, and [inaudible] distribution. After that we test the model on the reserved data point. We do this iteratively for every single data point, and the distribution that achieves the highest sum of log likelihoods is going to be the [inaudible] distribution.
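The two-step learning procedure (a linear model for the mean, then a choice among beta, triangular, and truncated normal by leave-one-out log likelihood) can be sketched in plain Python. Assumptions for illustration only: each family's spread is set from the training residuals by the method of moments, the triangular density puts its mode at the predicted mean, and the data in the test is made up; none of these choices is claimed to match the original experiments.

```python
import math


def fit_line(xs, ys):
    """Least-squares fit: new-artifact quality as a linear function of old quality."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def _clip(v, eps=1e-3):
    return min(max(v, eps), 1.0 - eps)


def beta_logpdf(y, mu, var):
    mu, y = _clip(mu), _clip(y)
    var = min(var, 0.99 * mu * (1.0 - mu))  # method of moments needs var < mu(1 - mu)
    k = mu * (1.0 - mu) / var - 1.0
    a, b = mu * k, (1.0 - mu) * k
    return (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
            + (a - 1.0) * math.log(y) + (b - 1.0) * math.log(1.0 - y))


def truncnorm_logpdf(y, mu, var):
    sd = math.sqrt(var)
    phi = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF
    mass = phi((1.0 - mu) / sd) - phi(-mu / sd)  # renormalize so it integrates to 1 on [0, 1]
    return (-0.5 * ((y - mu) / sd) ** 2
            - math.log(sd * math.sqrt(2.0 * math.pi)) - math.log(mass))


def triangular_logpdf(y, mu, var):
    c, y = _clip(mu), _clip(y)  # simplification: mode at the predicted mean; var unused
    return math.log(2.0 * y / c if y <= c else 2.0 * (1.0 - y) / (1.0 - c))


def loocv_log_likelihood(xs, ys, logpdf):
    """Hold out each point, refit the line on the rest, score the held-out point."""
    total = 0.0
    for k in range(len(xs)):
        tx, ty = xs[:k] + xs[k + 1:], ys[:k] + ys[k + 1:]
        slope, intercept = fit_line(tx, ty)
        resid = [y - (slope * x + intercept) for x, y in zip(tx, ty)]
        var = max(sum(r * r for r in resid) / len(resid), 1e-4)
        total += logpdf(ys[k], slope * xs[k] + intercept, var)
    return total


FAMILIES = {"beta": beta_logpdf, "triangular": triangular_logpdf,
            "truncated normal": truncnorm_logpdf}


def best_family(xs, ys):
    """Return the family with the highest summed held-out log likelihood, plus all scores."""
    scores = {name: loocv_log_likelihood(xs, ys, f) for name, f in FAMILIES.items()}
    return max(scores, key=scores.get), scores
```

`best_family(xs, ys)` expects Python lists of old and new qualities in [0, 1]. The truncated normal is explicitly renormalized over [0, 1] so that its log likelihoods are comparable with the other two families.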

Our results show that for the worker-dependent model, the beta distribution works best. Compared with the triangular distribution, it is bell-shaped with a higher peak in the middle, which means that an individual worker's results are fairly stable.

For the worker-independent model, the truncated normal works best, because compared with a beta distribution with the same mean and standard deviation it has a slightly higher peak.

>>: Is it a truncated normal [inaudible] renormalized to still integrate to one [inaudible]?

>> Peng Dai: Yes, truncated at zero and one.

>>: But I mean once you truncate it, it's no longer going to integrate to one, so when you evaluate the likelihood you're going to get the wrong numbers. Do you renormalize that [inaudible]?

>> Peng Dai: Yeah. Yeah, that's [inaudible].

So this means that the results of a group of workers are more stable than those of a single worker. Okay. So now we have the model --

>>: I have a question first of all.

>> Peng Dai: Yes?

>>: So you said earlier you compared it to UCT [inaudible]. In the UCT experiment, did you model individual workers or [inaudible] compared to -- I'm just trying to understand whether your comparison is comparing models that use detailed models of workers versus ones that only have an average --

>> Peng Dai: No, we compared them against the same background. The planning algorithms have the same information; they are built on the same set of models, which include both the worker-dependent and the worker-independent models. Okay.

So here are the parameters of our execution. We pay five cents for each improvement and one cent for each ballot, and our reward is 25 dollars times Q, the quality.
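The objective with these prices can be written as a short calculation. A minimal sketch: the function names are hypothetical, and only the three prices come from the talk.

```python
REWARD_PER_QUALITY = 25.00  # dollars per unit of quality (reward = 25 * Q)
IMPROVEMENT_COST = 0.05     # dollars per improvement hit
BALLOT_COST = 0.01          # dollars per ballot hit


def net_utility(quality, n_improvements, n_ballots):
    """Reward for the submitted artifact minus everything paid to workers."""
    return (REWARD_PER_QUALITY * quality
            - IMPROVEMENT_COST * n_improvements
            - BALLOT_COST * n_ballots)


def break_even_gain(cost):
    """Expected quality gain a hit must buy to be worth its cost."""
    return cost / REWARD_PER_QUALITY


print(round(net_utility(0.7, n_improvements=4, n_ballots=6), 2))
print(break_even_gain(BALLOT_COST), break_even_gain(IMPROVEMENT_COST))
```

For example, an artifact of quality 0.7 reached with 4 improvements and 6 ballots nets about 17.24 dollars. The break-even gain is the quantity a planner weighs: a one-cent ballot only pays off if it is expected to raise the submitted quality by more than 0.01 / 25 = 0.0004, and a five-cent improvement by more than 0.002.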

If we encounter a worker for whom we have a model, we use the worker-dependent model; otherwise we assume that person is average and use the worker-independent model. Note also that our system is able to benefit from offline updates as new data come in.

So here are some key statistics for our costs. For model learning we spent a total of 21 dollars, and for execution on this image description task our agent spent an average of 46 cents per image description.

We ran 20 image description tasks on Mechanical Turk and compared two workflows: one is the workflow generated by our decision engine, and the other is a static workflow. Here we compare the final results when both spend the same average cost. Notice that there are more data points to the right of the line y = x, which means our dynamic workflow produces higher quality than the static workflow.

>>: Statistically significant?

>> Peng Dai: Yes, it is statistically significant. That's the next thing I'm going to say. Okay?

Then we compared the average quality of the two workflows and found that the static workflow produces an average quality of around .6, whereas TurKontrol gets around .67, which is about 11 percent higher quality. That doesn't sound like much, so we performed an additional experiment: we kept running the static workflow until it reached the average quality of our dynamic workflow. We found that to catch up by those 11 percent in quality, the static workflow had to spend over 28 percent more money. This is because quality improvement is not linear in the cost.
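The percentages quoted here can be checked with a few lines of arithmetic. This uses only the rounded figures from the talk: average quality of about .6 versus .67, and over 28 percent more spending for the static workflow to catch up.

```python
def relative_increase(old: float, new: float) -> float:
    """Fractional increase from old to new."""
    return new / old - 1.0


quality_gain = relative_increase(0.60, 0.67)  # dynamic vs. static average quality
extra_spend = 0.28  # extra money the static workflow needed to catch up (lower bound)

print(f"quality +{quality_gain:.1%}, spending +{extra_spend:.0%}, "
      f"spending/quality ratio {extra_spend / quality_gain:.1f}")
```

The quality gain works out to about 11.7 percent, so each percentage point of quality cost the static workflow at least roughly 2.4 percentage points of extra spending at this margin, which is one way to read "quality improvement is not linear in the cost."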

We wanted to find out why our dynamic workflow works better, so we checked the execution logs. We found that for seven images the dynamic workflow actually performed fewer improvement iterations, and six of those actually ended up with higher quality. This shows that our system is able to track the quality very well.

In one case the system chose to trust just the first voter, because that worker was known to be a very good voter. And most interestingly, our system makes intelligent use of ballots, as he pointed out.

The static workflow gets either two or three votes at every iteration, so the average number of ballots per iteration is pretty flat. Our system, however, chooses not to do any ballots for the first three iterations, because improvement at that stage is really easy. As the iteration number increases, it chooses to do more and more ballots, because improvement becomes really hard and we really want to guarantee the quality there. The only exception is that at the eighth iteration the number of ballots drops. Can you guess why?

Because at that point improvement is really too hard, so when the first person says no, this is not an improvement, we tend to believe that.

>>: But the average quality is .67, right?

>> Peng Dai: Yeah.

>>: So that means there's a one in three chance that a random person can improve it.

>> Peng Dai: Right.

>>: So does that mean the process is done -- there must be some set of -- oh, is it -- the cost is the stopping criterion, and that's -- I see.

>>: What is the measurement for quality [inaudible]?

>> Peng Dai: What is the --

>>: Quality. How do you measure the quality?

>> Peng Dai: So the quality here is determined by our model. So it's -- we have this conditional distribution function, right?

>>: So in your bar graph, when you are comparing the static and the dynamic [inaudible], are you comparing their qualities [inaudible] system, or is it the quality that you measure [inaudible]?

>> Peng Dai: Oh, yeah. That's a good question. We give the artifacts to Mechanical Turk graders and ask them what the quality of each artifact is. Yes?

>>: Have you taken any adversarial view of this and said if I only care about manipulating a small number of items, right, I can basically do good work until you give me something that I want to manipulate and then manipulate it so -- have you thought any about, you know, analyzing how susceptible that is to that type of adversarial behavior?

>> Peng Dai: Sorry. I didn't get your question.

>>: So in a lot of these crowdsourcing tasks you want to prevent an adversary from manipulating what you think is the truth, right? One way to exploit this type of system is for me to do good work at first, right? You start estimating that I'm a good worker, and therefore you ask for less backup on my labels, right? Now I see something I want to manipulate, and I've built up the credit to do it. So now I just do it, you don't ask for enough backup, and you go with it. So I'm able to get away with a certain amount of manipulation without you ever questioning it.

>> Peng Dai: Sure. I think that's going to be caught by our updates to the system, because as we get more data from people, we perform offline updates based on each person's previous performance. So if a person gives us a lot of good, correct answers at the beginning, then we believe that person is pretty good. But if afterwards you keep giving us bad answers, for example if a lot of other people disagree with you, then we slowly decrease our credit in you.

>>: I didn't quite follow that. I think you said that basically you weren't going to ask for backup labels on good workers. So how do you actually end up catching that? If I'm a good worker, how do you know to go off and do that and still keep the costs down?

>> Peng Dai: So for example, suppose we're collecting an average of seven votes here, and previously we thought you were a good voter and trusted you more. If you disagree with all the rest of the people, and at the end we conclude that you actually made a mistake, then we incrementally discredit you a little bit, right?
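The incremental discrediting described here can be illustrated with a simple agreement counter. This is a swapped-in technique for illustration, not the system's actual update: it keeps Beta(1, 1) pseudo-counts of how often a voter agreed with the final consensus and uses the posterior mean as that voter's reliability. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VoterRecord:
    """Beta pseudo-counts of agreement with the final consensus (hypothetical)."""
    agreed: float = 1.0
    disagreed: float = 1.0

    @property
    def reliability(self) -> float:
        """Posterior mean probability that this voter agrees with the consensus."""
        return self.agreed / (self.agreed + self.disagreed)

    def record(self, agreed_with_consensus: bool) -> None:
        """Update the counts once the consensus for a ballot is known."""
        if agreed_with_consensus:
            self.agreed += 1.0
        else:
            self.disagreed += 1.0
```

After nine agreements a voter's reliability is 10/11, about 0.91; one disagreement drops it to 10/12, about 0.83. Each disagreement moves the estimate by a bounded amount, so a long good record slows down how quickly a worker who turns bad is caught, which is the concern raised in this exchange.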

>>: I guess a related question is: is it observable? Is your estimate of a worker's quality in any way observable to them? Could they see their reward go up? Because the more observable it is to them, the greater the risk of that kind of gaming becomes, right? Then I can say, oh, my reward's getting higher and higher, they must really like me, and now I'll slack off or something.

As opposed to if I [inaudible], you know, and you never know anything, like how many people are voting on this particular round or something like that. The more you keep that information away from them, the harder it is for the person to get [inaudible] update.

>> Peng Dai: I see. I see. Okay.

>>: Are you really optimizing the reward?

>> Peng Dai: No, we're not --

>>: Or is it just the selection [inaudible].

>> Peng Dai: We're not doing any like dynamic rewarding.

>>: So the reward's fixed.

>> Peng Dai: It's fixed.

>>: I see.

>>: [inaudible] a little bit [inaudible].

>>: [inaudible] the confidence -- so if [inaudible] long enough [inaudible] place where you don't question my labels anymore, right, then you can no longer catch the fact that I became [inaudible]. So it's like what's happening [inaudible]: you want to limit the weight you put on every single example [inaudible].

>>: The real challenge [inaudible] there are just so many users not being picked by me doesn't really matter that much for the worker if you are getting [inaudible] them I'll just give you the easiest answer that I can give and collect my reward and get out of [inaudible].

>>: You just find another task.

>>: I'll find another task.

>>: There's some cost because if you get [inaudible] there's a lot of tasks where you can mark that you want people with this much accuracy [inaudible] from previous tests. So I mean workers they can always start another account, but there is some -- there is some pressure you can put on them, and there is a little bit of [inaudible].

>> Peng Dai: Sure. That's a very interesting question: whether people are going to give you truthful answers based on the money you give them. I think that's very interesting too.

>>: [inaudible] one point just in reality I mean if you ever run set of that makes sense for your own gain, I mean the way that you generally do the filtering besides the post hoc manipulation of labels is you do qualifications and you do bonuses and honey pots. And the combination of these three is generally fairly effective for sort of weeding out the bad apples and the task after that is sort of getting around to the sort of real disagreements between them.

>> Peng Dai: Right.

>>: Just why sort of going back to why [inaudible]. And then so is there a way for your models to take into account the fact that you could [inaudible] you could design, you could incorporate schemes for qualifications or bonuses or --

>> Peng Dai: Yeah, because [inaudible] do qualification tasks. Yeah. We haven't done that yet.

>>: Okay.

>>: So the voting was a [inaudible] choice between A and B. Would it be valuable to give people a third option, which is "I don't know," so you potentially get information -- so in that situation you could get "I don't know, I don't know," and then you could still have the same --

>> Peng Dai: Yeah, that's another option we considered when we did this task. First of all, it could potentially be very useful. But it might complicate the task itself, because we would need additional models for that. And I was afraid that a lot of people might just keep answering "I don't know," which would give us no information. So it's a tradeoff. Okay.

So, related work. I'm going to briefly mention the first one. Eric wrote the general computation paper, and his idea is that all aspects of the workflow can be crowdsourced, ranging from decomposition to control design and things like that.

There is a lot of other interesting related work. I want to mention that all of this related work is very new, which means that the area is really new and hot.

So I want to spend a minute mentioning my other work in decision making. I have two state-of-the-art optimal MDP planners: one solves problems much faster, and the other solves much larger problems than have previously been attempted. I'd be very excited to talk about those, but I don't have time.

Also, for the ranking policy project, I provided the decision maker with an additional list of sub-optimal policies, which gives the decision maker the flexibility to choose the most appropriate policy based on other criteria.

Okay. Some future work. First of all, there are many interesting next steps for this work. First, a lot of requesters really want to attract the best, most accurate workers to work for them. One way is to build an incentive system. Amazon Mechanical Turk gives us the option of paying bonuses to workers, and the question is when to give such a bonus and how large it should be. We might also want to investigate other recognition systems, such as leaderboards.

I also want the system to handle time-constrained tasks, so we want to study the relationship between the amount of reward and the response time. That way we can better trade off completion time against cost, and possibly also use dynamic pricing to meet certain deadlines.

And to get the best information, as Kevin also pointed out, we might want to relax ballot questions to multi-valued questions, and maybe ask people for their confidence levels on those questions.

So far my work has been done just to help requesters. But on the other side we also want to consider the workers. Here is the current homepage for Amazon Mechanical Turk workers. It contains some useful information, for example the number of hits you've had approved, bonuses, total earnings, et cetera.

However, it doesn't capture a lot of other really interesting questions that workers want answered. For example, what are the reputations of the requesters? Are they fast at paying people? Do they reject a lot of work from workers? It could also offer recommended hits, so that we could possibly build a recommendation system for the workers. And workers might want to know what other people are working on, that is, what the popular hits available on Mechanical Turk are.

Hopefully this work has convinced you that artificial intelligence is useful for the specific workflow of iterative improvement. Not all workflows are iterative improvement, but I want to argue that every workflow is going to need AI: we need AI for optimal pricing, to learn the parameters of the model, for decision control, and possibly for choosing the best workflow among a number of workflows for a particular task.

So my long-term goal is to build a self-adaptive quality control system for Mechanical Turk, on Mechanical Turk. It should benefit from the following components. For example, there will be new tasks, and therefore we need new decisions. Our system would be more useful if it were able to detect and predict spammers automatically. It's also interesting to learn a temporal model of the workers, because workers' performance tends to vary with the time of day and the day of the week.

I have talked about a special type of task, which is writing English descriptions of images. What about other tasks? How do we efficiently transfer the model from one task to another, such as audio transcription or natural language translation? How do we do that?

Also, our system should incorporate an attractive incentive system so that we can attract the best workers to work for us, and there will be interesting work in matching workers with tasks.

And you know my expertise is in decision making under uncertainty, so I'm also generally interested in other applications. For example, Bing has described itself as a decision engine, and I believe that Microsoft has a lot of data in user query logs. How do we use this huge amount of data to understand people's intentions, return the best and most relevant information to people, use that information to better serve people, and potentially target people with advertisements?

Social networks are another interesting and exciting area; a lot of people use them every day. So it would be interesting to study how people make decisions on social networks, and also how to combine social networks with crowdsourcing. I think [inaudible] have done some interesting work showing that people who interact with each other more often on social networks tend to have similar interests. And Eric has done some friendsourcing work, which is a social network version of crowdsourcing.

And I really want to find out how we can use this information to build a better worker model.

So in this work we proposed the first decision-theoretic quality control system for crowdsourced workflows. We proposed efficient mechanisms for learning the model of the system and for plugging the learned model into an end-to-end system that achieves statistically significantly better results. And the system is able to incrementally update the model itself, given new data.

So, finally, I want to acknowledge a lot of help for this work. And I also want to thank you all for your attention.

[applause].

>> Eric Horvitz: Any comments or questions? It was a very interactive session. Thanks a lot.