>> Jim Larus: So it's my pleasure to welcome... Mowry at CMU. He's on the job market obviously. He's...

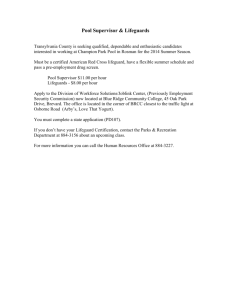
