
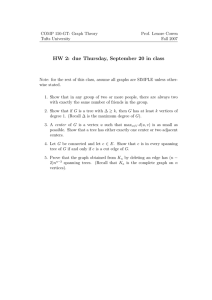


20849 >> Eyal Lubetzky: Okay. So hi everyone. We are happy to have today Michael Krivelevich from Tel Aviv University. Michael will talk about embedding trees in random graphs. >> Michael Krivelevich: Okay. Thanks. It's always nice to be here. And it's almost always productive. In some sense this talk will be an instantiation of this idea. So [indiscernible] the story starts, from my point of view, in 2004, I think, when together with Benny Sudakov here at Microsoft [indiscernible] and talking about something [indiscernible] and Jan, this gentleman in the back, and [indiscernible]. Now I understand Jeff -- and [indiscernible] asked the following question: whether we know how to embed, with high probability, a bounded degree spanning tree in a random graph, for edge probability as low as possible. And then I think he specifically asked us about embedding the so-called comb, which is a structure obtained by taking a path of length square root N and attaching a path of length square root N to each of its points. So we thought about it on the fly for some time, and then I remember trying to ask Jeff whether he would settle for embedding nearly spanning trees. And Jeff, as you can imagine, [indiscernible] was not really interested in partial answers, so he left the room. So we talked about it and somehow felt that the question is rather hard. And we put it aside and instead started thinking about embedding nearly spanning trees, which means trees [indiscernible]. And eventually, together with [indiscernible], we wrote a paper which more or less solved the [indiscernible]. But the case of spanning trees still remained [indiscernible]. And in some sense this work is the first significant progress since that time. Okay. So let me start with basic definitions. So I probably will assume some familiarity with random graphs and random graph notions. But if you feel uncomfortable or would like to ask something, feel free to interrupt me and ask. Okay. 
So we'll be looking at embedding spanning trees in random graphs. Okay. So briefly, here's the definition. So we have a tree T which is a subgraph of a graph G; it is called spanning if it covers all the vertices of the graph. So we'll be interested in embedding these trees. And actually the fact that all vertices need to be involved in the embedding makes it much harder than some other cases. And when we're talking about random graphs, specifically the model we're talking about is the so-called binomial random graph G(n,p), which is usually defined as follows. So the vertex set is a set of N labeled vertices, 1 to N, which I will denote by [N]. And for every pair -- every unordered pair i, j -- the probability that ij is an edge of the random graph is p, where the edge probability p will in principle be a function of N. And these N choose two choices are made independently of each other. So you flip coins. Coins are not necessarily fair. Based on the result of this coin flip, you decide whether the edge is in. [indiscernible] participation and you're thinking about certain things. So we assume N tends to infinity. And we also say the following: suppose we have an event A; we will say that A holds with high probability, which I will abbreviate by WHP, in the probability space G(n,p) if the following holds. You look at the probability that the random graph G(n,p) has property A. So for a given A, it's a function of N. And for a given p, it's a function of N. You can study its limiting behavior. If the limit of this probability is 1 as N tends to infinity, then you say this property holds with high probability. It's a little bit of [indiscernible]. It's a sequence of events, a sequence of properties. And then [indiscernible] several times is the so-called double exposure, which says the following thing. So suppose I have three numbers p, p1 and p2 strictly between 0 and 1. And let's say they satisfy: 1 - p is equal to (1 - p1) times (1 - p2). 
So if you look at the probability space G(n,p), and if you look at the union of two independent random graphs G(n,p1) and G(n,p2) -- so you have the same vertex set, and an edge is in the union if it's in at least one of the graphs -- these things are equally distributed. It's exactly the same distribution. So this [indiscernible] exactly the same distribution. And that's immediate. So here's a [indiscernible]. So for every pair i, j between 1 and N, the probability that ij is not an edge of G(n,p) is exactly 1 - p. And the probability that ij is not an edge of the union of G(n,p1) and G(n,p2) is (1 - p1) times (1 - p2), because these two are independent and you need to fail twice. So the probability of not having this edge is the product of the probabilities, and by our assumption these two expressions are the same: 1 - p. And obviously both things happen independently of any other pair, and therefore we get the same probability space. Now, why is it convenient? It's convenient in particular because of the following fact. It's a corollary. We can say that if you look at G(n,p), and if you compare it with the union of G(n, p/2) and another independent copy of G(n, p/2), then you can say that stochastically G(n,p) is a supergraph of this probability space. Which means, if it's convenient for you, you can generate a random graph in two phases: you generate G(n, p/2) and then generate another G(n, p/2). So it's double exposure and [indiscernible], but in principle you can do it as many times as you wish, chop it into pieces which do not necessarily have the same probability, and generate in different steps. It's very convenient. Okay. So now the question we are going to address is the following one. So suppose we have a tree T. The tree T has N vertices, exactly N vertices, in which case we're talking about spanning trees. 
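The double-exposure identity just described can be illustrated with a minimal Python sketch. The function names (`sample_gnp`, `second_round_probability`, `sample_in_two_rounds`) are illustrative assumptions, not anything from the talk; the only fact used is the relation 1 - p = (1 - p1)(1 - p2) stated by the speaker.

```python
import random
from itertools import combinations


def sample_gnp(n, p, rng):
    """Sample G(n, p): each unordered pair is an edge independently with probability p."""
    return {pair for pair in combinations(range(n), 2) if rng.random() < p}


def second_round_probability(p, p1):
    """Return p2 such that 1 - p = (1 - p1) * (1 - p2)."""
    if not (0 < p1 < p < 1):
        raise ValueError("need 0 < p1 < p < 1")
    return 1 - (1 - p) / (1 - p1)


def sample_in_two_rounds(n, p, p1, rng):
    """Union of independent G(n, p1) and G(n, p2); distributed exactly as G(n, p)."""
    p2 = second_round_probability(p, p1)
    first = sample_gnp(n, p1, rng)   # first exposure
    second = sample_gnp(n, p2, rng)  # second, independent exposure
    return first | second


if __name__ == "__main__":
    rng = random.Random(0)
    print(len(sample_in_two_rounds(50, 0.3, 0.1, rng)), "edges")
```

For the corollary with two copies of G(n, p/2), the union has edge probability 1 - (1 - p/2)^2 = p - p^2/4, which is at most p; this is why G(n,p) can be coupled to contain that union.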
And the other parameter you should take into consideration is its maximum degree, because the higher the maximum degree gets, the harder it is to get a copy of [indiscernible], the probability. So we denote the maximum degree. Just denote the maximum degree. Let's assume it's at most some parameter Delta, where Delta may in principle be a function of N. And what we'd like to know is the minimum value of p, where p should be a function just of the parameters N and Delta, so that if you generate a random graph G(n,p), then with high probability G(n,p) has a copy of T. >>: [indiscernible] subgraph, single? >> Michael Krivelevich: Subgraph single. This is a graph single. >>: No, the corollary of the double exposure. >> Michael Krivelevich: The corollary is just a computation, if you split, if 1 is equal -- I may -- >>: What's the symbol between the two Gs? >> Michael Krivelevich: G(n,p) is stochastically a supergraph of the union of G(n, p/2) and G(n, p/2). By the computation, if you want p1 to be equal to p2, it comes out just above. >>: I don't understand exactly what the precisiveness -- >>: You couple them. >> Michael Krivelevich: You can couple them. If you're talking about monotone properties, instead of generating G(n,p), you can generate an independent G(n, p/2) and generate another independent copy of G(n, p/2), and you take the union, and what you get you can couple with G(n,p). [indiscernible] but that's just fine. So that's the question we'll pose. So a given tree, that's important. It's a given tree on N vertices. And we'd like to find a copy of it in our random graph G(n,p). Of course if p is equal to 1 you can find anything [indiscernible], so we are looking for the smallest value of p for which there is a very good chance that G(n,p) still has a copy of T. >>: In general or is it a min -- >> Michael Krivelevich: It's not really defined, because -- you take it as an informal statement. Because whatever I put here is a function of this WHP. 
>>: If you just say the probability of this is F, then it's a precise statement. >> Michael Krivelevich: Yes. You can take -- if you don't want to think much about it, you can put here [indiscernible]. Put probability [indiscernible]. And that will be for -- So you won't be much concerned about the issues of the sharpness of the threshold: if you get this with probability one-half, you increase p a bit and get much higher than that. We constantly have some other things. And, more specifically, so going back to the question of Jeff, the thing we'll be interested in, the crux, probably the most natural thing, is the following one. So I'm looking at -- that's attributed to Jeff, but that's a natural question. So let's assume that the maximum degree of T is bounded by Delta. And this maximum degree is constant. We're talking about embedding spanning trees of constant maximum degree. And you still ask for the minimum p, the minimum edge probability p(N), for which the random graph G(n,p) has a copy of this fixed T. And if you want to be even more precise and concrete, even more concrete -- so let's say you take it to be the concrete tree, which is [indiscernible], so you take a path of length square root N, square root N vertices, and you attach a path of length square root N to each of its vertices; attach the paths and you get the comb. And you can ask about embedding this particular tree. What's the minimum edge probability from which the random graph G(n,p) starts having, with high probability, a copy of T? >>: Is there some reason that's the hardest tree? >> Michael Krivelevich: Well, we can ask Jeff. But actually I will try to explain. In some sense, there are two cases -- these are the two end points of a spectrum, and this case of the comb in some sense interpolates between the two cases. This instance would be hard, which is the case. Okay. So let me try to cover what has been known before. And actually, as I mentioned, the [indiscernible] of that question is the case of embedding nearly spanning trees. 
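The comb described above can be built explicitly. The following is a minimal sketch under one assumed convention for the sizes: a spine of m vertices, each carrying a pendant path ("tooth") of m - 1 further vertices, so that the tree has exactly n = m * m vertices. The talk only says "length square root N", so the exact off-by-one choice and the name `build_comb` are assumptions made here for illustration.

```python
from collections import defaultdict


def build_comb(m):
    """Comb on m*m vertices, labelled (i, j): i = spine position, j = depth along tooth i.

    Vertices (i, 0) form the spine; (i, 1), ..., (i, m-1) form the tooth hanging from (i, 0).
    Returns an adjacency dict mapping each vertex to the set of its neighbours.
    """
    if m < 2:
        raise ValueError("need m >= 2")
    adj = defaultdict(set)

    def add_edge(u, v):
        adj[u].add(v)
        adj[v].add(u)

    for i in range(m - 1):            # spine edges
        add_edge((i, 0), (i + 1, 0))
    for i in range(m):                # one tooth per spine vertex
        for j in range(m - 1):
            add_edge((i, j), (i, j + 1))
    return dict(adj)


if __name__ == "__main__":
    comb = build_comb(4)
    leaves = [v for v, nb in comb.items() if len(nb) == 1]
    print(len(comb), "vertices,", len(leaves), "leaves,",
          "max degree", max(len(nb) for nb in comb.values()))
```

With this convention the comb has only m (that is, square root of n) leaves and maximum degree 3, which is the sense in which it sits between a Hamilton path (two leaves) and a tree with linearly many leaves.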
So let's see what's known there. Embedding nearly spanning trees. So what will the statement we're looking for be? Instead of having to embed a tree on N vertices, we have some leeway, we have some spare epsilon. So we have a tree T. And let's say its degree is bounded. And let's assume, to talk about something concrete, that it is some constant [indiscernible], like in the case of the [indiscernible]. But in addition you assume that it has (1 - epsilon) times N vertices -- epsilon is some positive constant. You can [indiscernible], but this is a constant fraction. This makes life much, much easier. And supposedly this changes the threshold, at least by a logarithmic factor. So let's see what's known about this case. This is the result of [indiscernible] [indiscernible] and myself from 2007, which says the following. So under these conditions, given Delta and given epsilon, we can say that every constant degree expander on N vertices -- I don't want to be very formal, because it's not really crucial here, so I won't describe precisely what an expander is, but the most important thing here is that the degree, all the degrees, are constant, a function only of Delta and epsilon -- every such constant degree expander G on N vertices has a copy of T. And the important thing is that Delta(G), the maximum degree of the expander -- it's not necessarily regular, but it may be -- is bounded by some function C, which is a function of the given Delta and epsilon. Which is nice for several reasons. So one reason is that it's a deterministic statement. There's no randomness involved. If it contains one tree on (1 - epsilon) N vertices of maximum degree Delta, it contains as well all such trees. So this constant degree expander is a so-called universal graph for the class of bounded degree trees which are nearly spanning. G is universal for this class. And, therefore, if you know how to argue that your random graph G(n,p) contains inside such a nice expander, then you get what you want. 
So the corollary would be, corollary: if you take G(n,p), and if p is C over N, and if C is a function of Delta and epsilon, large enough, then we can argue that -- it's easy to argue if you -- [indiscernible], et cetera, but if you clean it a bit, you get with high probability inside it an expander on a bit more than that many vertices. And therefore it contains inside the tree T, and therefore the random graph G(n,p) contains with high probability every such T. So G(n,p) with high probability contains an expander on (1 - epsilon prime) times N vertices, for some positive epsilon prime which is less than epsilon. That's very standard. And therefore we get that G(n,p) with high probability contains a copy of T. So the fact -- the fact that you are allowed to use not all vertices but only 99 percent of them changes life completely. It makes it much more accessible than the case of spanning trees. >>: Which method do you use to get the expander? Do you take the two-core -- >> Michael Krivelevich: Essentially our purpose -- our statement is a bit strange, because it's not only an expander, but we want our graph to be locally sparse, which means it doesn't have dense [indiscernible], et cetera, et cetera. But given this, you basically start with G(n,p) and you delete low degrees, take the K-core, and then we need to [indiscernible] high degrees, which is also not a big deal. So basically once we get a graph whose degrees are, let's say, within [indiscernible] factor of 10, you are fine. >>: I have a question. Is there any reason to think that this theorem generalizes to classes beyond trees? Like, I mean, because if you're talking about constant fraction size [indiscernible]. >> Michael Krivelevich: Well, not really. Because, for example, if G(n,p) for this [indiscernible] has cycles. For example, if you want to contain a triangle, so G(n,p) for p equal to C over N contains a triangle with probability bounded away from 0 and 1. >>: Large spanning. >> Michael Krivelevich: Trees are a completely different business. 
And so another thing, [indiscernible] on the level of proofs: it's much easier to embed trees. For example, if you embed trees vertex by vertex, then once you embed the next vertex you don't need to go back to previous vertices, and therefore it makes it much easier. So [indiscernible] statements for trees are easier to obtain than statements for graphs with cycles. Okay. So if we start with the [indiscernible] -- so let me mention briefly at least what's been known before and what has become known after. So previous work, just to put the right names on the board. Previous work. There was a paper by [indiscernible] de la Vega in '88 who proved that G(n,p) contains bounded degree trees of linear size, but not necessarily of size which is quite close to N. Then there was the result of Friedman and [indiscernible] from '88. It was actually much more [indiscernible], because in particular they provide a criterion for an expander, a deterministic criterion, to contain trees of large size. And therefore it's much easier [indiscernible]. Our proof uses Friedman and [indiscernible] as a subroutine. Because Friedman and [indiscernible] allows you to embed let's say 10 percent of the vertices and you [indiscernible], et cetera, et cetera. >>: Isn't it easier, like, the techniques are spectral, do you use spectral expansion? >> Michael Krivelevich: Well, what you care about is the edge distribution. And in particular you can formulate all statements in the following way. If you take a so-called (n, d, lambda)-graph, which is a graph on N vertices, d-regular, [indiscernible] at most lambda. So if the ratio between d and lambda is at least a large enough constant C, then the tree's already there. So initially you can formulate it in spectral terms. You need the eigenvalue to say that the edge distribution is the correct one. >>: Only use it with expansion? >> Michael Krivelevich: Well, [indiscernible] uses expansion and [indiscernible], which you can derive from eigenvalues. 
So [indiscernible] has also another paper about tree embedding from 2001. So that's what happened before, and what happened after is further work. There are two papers at least, one by Balogh, Csaba, [indiscernible] from 2010 and [indiscernible] by [indiscernible] and [indiscernible], which is not published yet. So they refine the techniques, refine all statements; the statements are much cleaner, the constants are much better. They don't need the graph to be locally sparse. The statement says more or less that if you have a nice expander then it contains the tree, which is nearly spanning. That's what's known about the nearly spanning case. It's settled up to [indiscernible] factor. Now let's go back to the spanning trees. So the spanning case is much, much harder. So let's talk about embedding spanning trees. So let's still concentrate on the case where the maximum degree is constant. Of course you can ask the same question for every class of graphs. So there are more or less two available results. So the first one is, of course, the classical statement about [indiscernible]: so [indiscernible] and [indiscernible] in '83, and independently Bollobás in '84, proved the following. To state it correctly: you look at G(n,p), and you take your p basically as low as possible to avoid vertices of degree at most one, because in this case you don't have a Hamilton cycle. So p is log N plus log log N plus some omega(N), all divided by N, where omega(N) is any function tending to infinity arbitrarily [indiscernible]. So for such p they proved that with high probability G generated from G(n,p) has a Hamilton cycle. Which means, for our purposes -- of course, we're talking about trees -- let's talk about a Hamilton path. So this is one extreme case. If p is roughly log N over N you do get a Hamilton path, and that's the lowest possible value you can expect, because below log N over N you have with high probability isolated vertices, and there's no chance to embed anything spanning. So that's one result. 
So the second result is actually more an observation than a result, and it appears [indiscernible] in this paper [indiscernible], so we observe the following thing. So the second case is as follows. So suppose we are still talking about a tree T on N vertices of bounded maximum degree. But in addition to it, we assume that T has at least delta N leaves for some positive constant delta, which means that a [indiscernible] proportion of the vertices of the tree are leaves. Well, this is supposed to make it much easier to do, and indeed we're able to argue in the following way. If you take p to be C times log N divided by N, where C is only a function of the two parameters, delta and capital Delta -- you're talking about spanning trees, all N vertices -- and you take C to be large enough, then with high probability G(n,p) contains a copy of T. Which means that for the spanning case, which is in general hard, under the additional assumption that there's a non-negligible proportion of [indiscernible] leaves, we know the answer. You'll see more of the proof later, but let me sketch briefly this idea for you. So here's a brief idea. So let's take these leaves out. So we take F to be the tree T minus any set of delta N leaves. Let's say you take exactly delta N leaves, take them out. You get a tree which is nearly spanning, a good case for us because we know how to handle it. So we look at G(n,p) and we represent it as a union of G(n,p1) and G(n,p2) as before, in the case of double exposure. Let's say we don't really care much about constants. So let's say we take p1 to be equal to p2, which is more or less p over 2. Okay. Then we do it in two steps. In the first step you expose the edges of G(n,p1), that's the first copy. Say this is G1 and this is G2. So expose the edges of G1. And there you find a copy of F. Find with high probability, of course. A copy of F. Now, we know how to do it because the degree is bounded. You have the nearly spanning case, and therefore we can apply the machinery from the nearly spanning case to get this. 
That part's easy. I'm sure you can do it in an even easier way, you don't need such fancy machinery -- that was for the case when p is constant over N. Now, what's left? So now the situation is as follows. You have your tree, and most of it has already been embedded. So here is what's left. Now the leaves: you need to attach these leaves to the rest of the graph, so you need to do something like this. There's a collection of stars. So these points are given. You have exactly delta N vertices outside. And what's left to be embedded is the edges, a collection of stars, and [indiscernible]. So use G2 to embed the remaining stars. So here [indiscernible] to attach every vertex here, and it takes edge probability of order log N over N. But it's an easy statement to do. It's not [indiscernible]. And since the degrees are bounded, it's a pretty much straightforward reduction to Hall's theorem. Now that's more or less what's been known before. Now let's go back to the comb. As you can see here, this comb is in some sense in the middle between the two extremes, the two known cases. You go back to the comb. Comb. You can think about it as some kind of interpolation between the [indiscernible] path and a tree with delta N leaves. Why is it so? It is so because it has few leaves, only square root N leaves. Square root N leaves, and on the other hand if you look at every path, if you take so-called bare paths, which we will define -- each such path is on about square root N vertices. [indiscernible] square root of -- so it's far from the case of many leaves. It's far from the [indiscernible], and you expect to have some troubles, and presumably we can ask Jeff what was his idea behind it. But presumably this is supposed to be related to the hard case; if you cannot handle it, good chances are we won't be able to handle some other cases. Okay. So that's the situation before. Now let me state my result. Okay. Let me first write it down and then we'll try to figure out what exactly is going on. So theorem one. 
So we have a tree T on N vertices given to us. We're not talking about [indiscernible]; we want to embed one given tree. And we assume that its maximum degree is Delta. Delta is not necessarily a constant. It applies to the constant case as well. And we have some other constant epsilon between 0 and 1. And we look at the random graph G(n,p). We ask the same question as before: what is the minimal p for which there is a good chance to get a copy of T. Here's a statement about the edge probability, and what it requires is the following inequality: np, which is the expected degree in the graph, should be as large as -- there is some complicated expression here, but the constant is not really important, so the main term is Delta times log N, and then we also need to add N to the epsilon. So that is what we need from the edge probability. Then with high probability G generated according to G(n,p) has a copy of T. So this is kind of a bit peculiar way to think. So you need your np to be as large as Delta log N times some constant, and the thing starts kicking in only from the case when np is polynomial in N, unfortunately. But that's true for any value of epsilon. So let's see what follows from it for the constant degree case. So as a corollary -- so here's the corollary. So if we have the case of constant degree, then if you take our p to be N to the minus 1 plus epsilon, for any fixed epsilon, which means we are slightly [indiscernible], this part can be as small as you want it to be, then with high probability G(n,p) contains a copy of T. >>: In your statement epsilon [indiscernible]. >> Michael Krivelevich: Correct. Any epsilon as small as you want, but once it's fixed -- so basically maybe a formal way to say the result is that this probability -- >>: N -- >> Michael Krivelevich: What? >>: Epsilon -- >> Michael Krivelevich: Epsilon is not a constant. You can make it as small -- maybe a more normal way to write this, for a given T, is an edge probability of the form N to the minus 1 plus little o of 1. Which kind of nearly solves the constant degree case. 
Of course, you need p to be as large as N to [indiscernible] over N, because [indiscernible] should be at least as large as log N over N. At least on a logarithmic scale you're almost there. >>: Can you improve the little o of 1 there? >> Michael Krivelevich: Can I improve it? In principle you can take the same technology and squeeze more out of it. With the same effort it's certainly not unthinkable. So let's try to figure out the tightness of this statement. So here's the second part. Okay. Tightness. Where is tightness? Okay. Tightness. So here's the statement about tightness. So for every epsilon there is a delta. So that's a good mathematical statement. Okay. So what we're going to say -- we look at the case of G(n,p), and we will take our p to be delta times capital Delta times log N divided by N, and Delta is somewhere in the feasible interval; the feasible interval happens to start at N to the epsilon. So if you're talking about tightness we'll be talking about trees whose maximum degree is a power of N, and at most, to make it nontrivial, N divided by log N. Then there exists a concrete tree T, which is a function of N and Delta, such that the maximum degree of T is at most Delta, and with high probability G(n,p), with p chosen according to this expression, does not contain a copy of T. Which means there is at least one case, one particular tree, which is hard to embed. So at least for [indiscernible], when Delta is at least N to the epsilon, the statement is tight up to this absolute constant here. >>: Not for constant. >> Michael Krivelevich: Correct. No, this is not for constant. For constant Delta, the statement is probably not tight, and this should not be that -- but at least for the case of Delta which is a power of N, the statement is tight -- and it's actually surprisingly easy to prove. So here's a proof sketch. So here's this concrete tree T of N and Delta. You take a path on N divided by (Delta minus 1) vertices. 
And to each vertex of the path -- let me denote the path by P -- you attach a star, stars with Delta minus 2 leaves. Okay. So let's take this particular tree. So what's special about it? What's so special about it is, first of all, that it's a tree of maximum degree Delta. And the second thing is, if you look at P -- so V(P) has cardinality N divided by (Delta minus 1) -- in this particular tree, and therefore in every graph that contains this particular tree, the vertex set of P dominates the rest. So V(P) dominates the rest. Which means that for every vertex outside of P there's a neighbor of it in P. Therefore if you want to prove that your random graph doesn't contain a copy of T, it's enough to prove that there's no dominating set of this size. So the conclusion will be that it's enough to prove that with high probability G(n,p), for this chosen value of p like here, does not contain a dominating set of size N divided by (Delta minus 1). And here we have some experience with the coupon collector problem; with the coupon collector problem, you know you need to [indiscernible] pay the price, and it's a logarithmic factor [indiscernible]. So that's quite easy. Okay. So this is about the result. Now let me try to cover something from the proof. >>: [indiscernible] for bounded degree, the system -- >> Michael Krivelevich: For bounded degrees, the most natural, the most significant obstacle is the existence of the [indiscernible]. So presumably for bounded degrees, I'm pretty sure the answer should be constant times log N over N, where the constant may depend on Delta for bounded degree. It's maybe the case that the constant can be taken [indiscernible]. So we are quite far from there. >>: But currently -- if you allow epsilon to be dependent on N, then you get something like log squared N times -- >> Michael Krivelevich: In principle if you take this to -- there is some statement, some technical statement, which is there for the case of a constant epsilon, and which is not available, formally at least, for the case of [indiscernible] epsilon. 
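The tree used in the tightness argument above, and the domination property it forces, can be checked on a small instance. This is a minimal sketch; the names `build_tightness_tree` and `is_dominating` are assumptions introduced here, and the code assumes Delta - 1 divides n so that the path has exactly n / (Delta - 1) vertices.

```python
def build_tightness_tree(n, max_degree):
    """Path on n/(max_degree-1) vertices, each path vertex carrying max_degree-2 pendant leaves.

    Returns (adjacency dict, set of path vertices). Assumes (max_degree - 1) divides n.
    """
    if max_degree < 3 or n % (max_degree - 1) != 0:
        raise ValueError("need max_degree >= 3 and (max_degree - 1) dividing n")
    path_len = n // (max_degree - 1)
    adj = {v: set() for v in range(n)}

    def add_edge(u, v):
        adj[u].add(v)
        adj[v].add(u)

    for i in range(path_len - 1):           # the path P on vertices 0..path_len-1
        add_edge(i, i + 1)
    next_leaf = path_len
    for i in range(path_len):               # a star with max_degree-2 leaves at each path vertex
        for _ in range(max_degree - 2):
            add_edge(i, next_leaf)
            next_leaf += 1
    return adj, set(range(path_len))


def is_dominating(adj, candidate):
    """True if every vertex is in `candidate` or has a neighbour in `candidate`."""
    return all(v in candidate or adj[v] & candidate for v in adj)


if __name__ == "__main__":
    tree, path_vertices = build_tightness_tree(20, 5)
    print(len(path_vertices), "path vertices dominate:", is_dominating(tree, path_vertices))
```

Any graph containing a copy of this tree on the same n vertices therefore has a dominating set of size n / (Delta - 1), which is the reduction the speaker uses: ruling out such a dominating set in G(n,p) rules out the tree.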
If you're able to prove that statement, I think you can take -- which is not so easy to do, but in principle you can take [indiscernible] work and take p as low as something like log squared N divided by N. Your tree has bounded degree, so there's a hope to do something like this, but [indiscernible] to prove that p which is polylogarithmic [indiscernible] is enough to get this with high probability. Okay. So now let me try to cover something from the proof. See how we're doing. Proof ideas. So as we saw, if you recall these two known cases, two previously known cases of embedding, at least of constant degree trees, [indiscernible] was the case when you have many leaves, a linear number of leaves. And the kind of opposite case, when there are only two leaves but a very long path. So let's try to say that [indiscernible] we are at least close to one of the cases, and therefore we can capitalize on having many leaves, [indiscernible] capitalize maybe not on having a long path but on having many relatively long paths. So here's a very simple statement. So let me give first the definition. The definition. So suppose I have a tree T and a path P in the tree T. So this path is called bare if the degree of every vertex in the path is exactly 2. So essentially something like this. You have your tree, and somewhere in the middle, say, you have a path which is relatively long, and all vertices of this path are of degree 2, so this will be a bare path. Okay. Now here's a statement which is not that hard to prove but it's quite helpful, in this [indiscernible] and some other applications about embedding trees. So here it is. So lemma. Let's say lemma 1: suppose you have a tree T on N vertices and it has at most L leaves. Okay. So we would like to say that if the number of leaves is relatively small, we can't hope to have a very long path, but we can have a relatively long path, and actually we'll have quite a few of those. Let's say we have some other parameter K, which is an integer parameter. 
Then -- the statement is deterministic, there's nothing random [indiscernible], and I'll prove it all to you. It's quite easy. Then T contains a family -- so think about L as being linear in N but not very close to N, and think about K as being a [indiscernible] constant. You'll find a family of a linear number of bare paths, each of them of length K. Formally you get this: a family of (N minus (2L minus 2) times (K plus 1)) divided by (K plus 1) vertex disjoint bare paths of length K -- usually you measure the length of a path by the number of edges in this path. It has length K, which means K plus 1 vertices. So that's kind of a -- either it has a lot of leaves, or if not, then you can extract from the tree a lot, a linear number. Think about K being a constant and L being some small constant times N. You'll be able to extract a linear number of [indiscernible] paths, all of them of [indiscernible], so let's see why. So here's a proof. Okay. So let's look at three subsets of vertices. So the first one, V1, is those v from V(T) whose degree is exactly one. Just the set of leaves. And we know therefore that [indiscernible] is at most L. Then a second set, V2: those v from V(T) whose degree is exactly two. If there are a lot of bare paths, you need a lot of vertices in here, and there will indeed be a lot in here. And the third one complements the first two. Those are the v from V(T) whose degree is at least 3. So you can think about this as the set of joints of your graph. So you have either leaves or joints, and actually your tree is nothing but a collection of paths between joints and other joints or between joints and leaves. So let's see what we have here. So let's look at the sum of the degrees of all vertices in the tree. So, of course, what I get here, like in every other graph, is twice the number of edges. And this tree has exactly N minus 1 edges, and therefore what we get here is 2N minus 2. On the other hand, what we get here -- we go over all these three sets. 
Every vertex from here [indiscernible], from here 2, from here at least 3, and therefore what we get is at least |V1| plus 2|V2| plus 3|V3|. So let's write it. So what we get here is twice (|V1| plus |V2| plus |V3|), plus another |V3| minus |V1|. Okay. It's just the previous expression, and we rewrote it. >>: [indiscernible]. >> Michael Krivelevich: Say it again? You want to see it? >>: No, that's okay. >> Michael Krivelevich: It's about calculations. So hopefully you'll be able to recover it. So let's continue. >>: In clean heights -- >>: This should be a -- >> Michael Krivelevich: [indiscernible]. Okay. Think about the [indiscernible]. Okay. So you get 2N plus |V3| minus |V1|. >>: [indiscernible]. >> Michael Krivelevich: Maybe you can take it down. Okay. Never mind. It's not the most important point of this talk. So if you look at this formula, what you get immediately is that the number of joints, which means the number of vertices of degree at least 3, is at most the number of leaves -- >>: Can you put that away? >> Michael Krivelevich: What? >>: Are you pulling backwards? >> Michael Krivelevich: No. >>: I'm just confused. >> Michael Krivelevich: No, because every vertex from this set contributes at least [indiscernible], at least three -- there we go. >>: It's larger. >> Michael Krivelevich: So this way it's another conclusion. After enough cancellations, you conclude that the number of joints is at most the number of leaves minus 2. Your tree, as I say, is nothing but leaves, joints and paths which are bare paths, connecting joints with other joints and connecting joints with leaves. So let's look at the bare paths in T which are between the vertices of this union, V1 and V3. So what do we know about them? So the number of these guys altogether is at most 2L minus 2. So you count the edges, and therefore we get altogether at most 2L minus 3 paths. They contain all vertices but those from V3 and V1. So they contain at least N minus (2L minus 2) vertices. And all of them are bare. 
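The degree count sketched on the board can be written out as follows. This is a reconstruction under the notation of the talk (V_1 the leaves, V_2 the degree-2 vertices, V_3 the vertices of degree at least 3, with |V_1| at most l and l at least 2); the last display anticipates the chopping step into pieces of k + 1 vertices and is an assumption about how the stated bound is reached, not a quotation.

```latex
\begin{align*}
2n - 2 \;=\; \sum_{v \in V(T)} d(v)
  &\;\ge\; |V_1| + 2|V_2| + 3|V_3| \\
  &\;=\; 2\bigl(|V_1| + |V_2| + |V_3|\bigr) + |V_3| - |V_1|
   \;=\; 2n + |V_3| - |V_1| ,
\end{align*}
so $|V_3| \le |V_1| - 2 \le l - 2$ and $|V_1| + |V_3| \le 2l - 2$.
Contracting each maximal bare path to a single edge gives a tree on $V_1 \cup V_3$,
so there are at most $|V_1| + |V_3| - 1 \le 2l - 3$ maximal bare paths, and together
they contain all of $V_2$, that is, at least $n - (2l - 2)$ vertices.
Cutting a path with $m$ vertices into pieces of $k + 1$ vertices wastes at most $k$
vertices, hence the number of vertex-disjoint bare paths of length $k$ is at least
\begin{align*}
\frac{n - (2l - 2) - (2l - 3)k}{k + 1}
  \;\ge\; \frac{n - (2l - 2)(k + 1)}{k + 1} .
\end{align*}
```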
So therefore you take the paths and chop them into pieces of length K. That's what you need: you need to find a lot of paths of length K, and you'll see most of the stuff will be there. So chop them into pieces of length K. So that's the whole proof. Which means that, going back to the lemma, either you have a lot of leaves, or in case we don't have a lot, which means we have at most some small constant times N leaves, then for some relatively large but constant value of K you'll have a linear number of bare paths which are disjoint from each other and you'll be able to use them to complete the [indiscernible] process. So that's the first lemma. Now let me keep formulating lemmas, some of them. So lemma 2 -- where is my lemma 2 -- it's also pretty easy. So in lemma 2 you need to embed not a spanning tree but a nearly spanning tree, so the picture will be like this. You will have some constant A between 0 and 1. And you have a tree F, a tree on 1 minus A times N vertices. And its maximum degree is bounded by delta. So just think of delta as a constant. Now I need to embed it. In terms of what the statement costs you: you look at G(n,p), and you assume that A times N times P is at least as large as 3 times delta plus 5 ln N -- so that's what is required from the edge probability; of course you can get from here an estimate for P. Then with high probability the random graph G(n,p) has a copy of F. So, sorry, I don't have much time. So let me prove it by hand. What you do is you'll have a tree, nearly spanning, embedded vertex by vertex. And at any stage we are in there will be at least AN vertices not covered by the current copy of your tree. So you take your tree, you order it in some way by some search order. Let's say you take BFS. And then you embed it vertex by vertex. Each time you arrive to embed the current vertex you'll actually have it embedded already, and what do you need to embed? You need to embed its neighbors. 
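The first lemma (few leaves imply many disjoint paths through degree-two vertices) can be checked numerically. The following is only a sketch under stated assumptions: the helper names `subdivided_random_tree` and `chop_bare_paths` are hypothetical, the test tree is an arbitrary small random tree with every edge subdivided so that it has few leaves, and the code chops only the degree-two interiors of the maximal paths, as in the counting argument.

```python
import random
from collections import defaultdict


def subdivided_random_tree(base_n, sub, seed=0):
    """Random tree on base_n vertices with every edge subdivided by `sub`
    new degree-2 vertices, so the result has few leaves and long paths."""
    rng = random.Random(seed)
    adj = defaultdict(set)
    nxt = base_n
    for v in range(1, base_n):
        u = rng.randrange(v)  # attach v to a random earlier vertex
        chain = [u] + list(range(nxt, nxt + sub)) + [v]
        nxt += sub
        for a, b in zip(chain, chain[1:]):
            adj[a].add(b)
            adj[b].add(a)
    return adj


def chop_bare_paths(adj, k):
    """Return vertex-disjoint paths with k edges (k + 1 vertices) each,
    all of whose vertices have degree exactly 2 in the tree."""
    deg = {v: len(nb) for v, nb in adj.items()}
    ends = [v for v in adj if deg[v] != 2]  # leaves and joints
    pieces = []
    for u in ends:
        for w in adj[u]:
            # Walk from u through degree-2 vertices to the next leaf/joint.
            prev, cur, interior = u, w, []
            while deg[cur] == 2:
                interior.append(cur)
                step = next(x for x in adj[cur] if x != prev)
                prev, cur = cur, step
            if u < cur:  # each maximal path is seen from both ends; keep one
                for i in range(0, len(interior) - k, k + 1):
                    pieces.append(interior[i:i + k + 1])
    return pieces


if __name__ == "__main__":
    tree = subdivided_random_tree(10, 50, seed=1)
    n = len(tree)
    leaves = sum(1 for v in tree if len(tree[v]) == 1)
    k = 4
    found = len(chop_bare_paths(tree, k))
    bound = (n - (2 * leaves - 2) * (k + 1)) / (k + 1)
    print(n, leaves, found, bound)
```

The printed count of extracted paths should be at least the bound from the lemma; for trees with many leaves the bound is negative and says nothing, which matches the "either many leaves or many paths" reading of the statement.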
And these neighbors should be embedded outside the set of already used vertices, but at any stage you'll have at least A times N spare vertices. And therefore the probability of failing at a single step can be estimated as follows. So the probability of not being able to complete the current vertex VI: at this stage you've embedded some part of your tree, and you still have AN spare vertices, and now you're looking at vertex VI. You're looking at actually a copy of it by [indiscernible], and what you need to find, you need to find its sons in the set of vertices which are not used for embedding. So this probability -- now you expose the edges from VI to the rest. The probability to fail is at most the probability that the binomial distribution with parameters AN and P is less than delta. That's what you need: to find enough neighbors in the set of new vertices. And you expose the neighbors of this particular vertex; you haven't exposed before its neighbors in the set of vertices [indiscernible]. The probability to fail can be estimated by this expression. This expression, if you plug in the parameters, you see it is much less than 1 over N. Therefore a single embedding step fails with probability [indiscernible] 1 over N. You take the union bound, you see the [indiscernible] spanning tree, especially if you're willing to give up the log N factor. You can do it very easily. That's lemma No. 2. Now lemma No. 3. So let me skip the formal formulation, but we'll be in the case which in a sense I have already covered, which means the case when I have a lot of leaves. Suppose we're in the case where we have a lot of leaves; what's been embedded is most of the tree. What's left to embed is a set of L leaves. Okay. So this has been done in G1. So this part has been done in G1. And this part -- these edges will come from G2. Okay. So now what we need to do, we need to find some kind of generalized matching [phonetic] between the leaves, all of them, and the [indiscernible] between these two sets. 
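The vertex-by-vertex embedding from lemma 2 can be simulated directly. This is a minimal sketch, not the speaker's proof: the names `binary_tree` and `greedy_embed` are hypothetical, the tree is an arbitrary binary tree (maximum degree 3), and the parameters n = 400, A = 0.5 are chosen only for illustration. Edges of the random graph are exposed lazily, only from the image of the current tree vertex to the still-unused vertices, so no pair is examined twice.

```python
import math
import random
from collections import deque


def binary_tree(m):
    """Children lists of a binary tree on vertices 0..m-1 (max degree 3)."""
    return {i: [c for c in (2 * i + 1, 2 * i + 2) if c < m] for i in range(m)}


def greedy_embed(children, root, n, p, seed=0):
    """Embed a rooted tree into G(n, p) in BFS order.

    Returns a dict tree vertex -> graph vertex, or None if some step
    does not find enough neighbours among the unused vertices.
    """
    rng = random.Random(seed)
    unused = set(range(n))
    image = {root: unused.pop()}
    queue = deque([root])
    while queue:
        t = queue.popleft()
        kids = children.get(t, [])
        if not kids:
            continue
        # Expose the edges from image[t] to every vertex not used so far.
        nbrs = [u for u in sorted(unused) if rng.random() < p]
        if len(nbrs) < len(kids):
            return None  # this single step failed
        for child, u in zip(kids, nbrs):
            image[child] = u
            unused.remove(u)
            queue.append(child)
    return image


if __name__ == "__main__":
    n, a, delta = 400, 0.5, 3
    # Edge probability taken from the condition a * n * p >= 3 * delta + 5 ln n.
    p = (3 * delta + 5 * math.log(n)) / (a * n)
    tree = binary_tree(int((1 - a) * n))
    image = greedy_embed(tree, 0, n, p, seed=1)
    print(p, None if image is None else len(image))
```

At every step at least A times N vertices remain unused, so the number of fresh neighbours is at least binomial with parameters AN and P, which is the quantity bounded in the argument.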
And then you can argue that if your edge probability of G2 is chosen to be at least, let's say, delta ln L [phonetic] divided by L, where L is the number of leaves and delta is the maximum degree of a star here, then with high probability G2 contains the required set of stars. Which is not so easy to prove, which is some kind of -- for example, in the case when delta is 1, you need to prove there is a matching [phonetic]; if delta is larger, you can do something similar, use Hall's theorem [phonetic] and known results. So a random graph here [phonetic], so you prove this. So that's the third ingredient. Let me state the fourth one, the last one, which is actually the ingredient which makes the whole thing go through. So lemma 4. Okay. So think about the case when you don't have many leaves, which means by lemma 1 you have a set of a linear number of paths, which have constant length. So you've embedded almost everything, but what's left to embed is a path between this vertex and this vertex, and that vertex and that vertex, et cetera, et cetera. And all these paths are of length exactly K. And because it's a spanning tree you don't have any spare vertices whatsoever. So this set is gone. This set is gone. You have exactly as many vertices as you need to have. And you need to embed this collection of paths. It's formally described in the following way. Let's suppose we look at a random graph whose vertex set is of size K plus 1 times N0, for some parameter K which is a constant. K is at least three, and that's a constant. And edge probability P. And in it you have two sets of terminals. You have set S, which is S1 up to SN0. And we have another set of terminals T, which is T1 up to TN0. And what you need to embed in this remaining piece is paths of length K between S1 and T1, S2 and T2, and so on up to SN0 and TN0, and these should be disjoint and should cover all vertices. So here's a statement, which says that's the case if your P is as large as the following expression. 
So I look at G(n,p). You take your P to be a constant, which depends on K, times -- that would be log N0 divided by N0 to the K minus 1, all to the power of 1 over K. Then G -- sorry, that's actually on K plus 1 times N0 vertices. So G contains a family Pi, I goes from 1 to N0, of vertex-disjoint paths of length K such that Pi connects between SI and TI. So here's a picture. Here's what we want. Here's how you get it. So S1 up to SN0, T1 up to TN0, and what do we need to find, we need to find P1 of length K, and so on up to PN0 between SN0 and TN0; they're all of length K and no spare vertices left. So the question is where does it come from? So basically part of the job is to figure out which graph problem, which random graph problem we're studying, and also to figure out the right reduction to something you know how to solve. [indiscernible]. We have two minutes? >>: Yes. >> Michael Krivelevich: Okay. That's something. Okay. So let me just indicate the proof of this lemma, and then say in a sentence, without writing much, how to combine these things together. Okay, how do we do it? So here's the graph. The first thing you do, you partition the vertex set into layers, V1 up to V K minus 1. All these layers, V1 and so on up to V K minus 1, have cardinality N0. Now we need to find a collection of paths, each path starting from SI, passing through V1 up to V K minus 1 and ending at the corresponding TI. Now for this we define an auxiliary graph. It will be defined in the following way. We have V1 up to V K minus 1 as before, and the edges will come from this particular graph. But instead of having set S and set T we replace them by a set X whose cardinality is also N0. And now if we have an edge between, let's say, SI and V in V1, we replace this edge by an edge from XI to V. And the same: if we have an edge between TJ and V in V K minus 1, we replace it by an edge from XJ to the same V. That's what you get. 
Instead of having K plus 1 parts you have a graph with K parts, and instead of looking for a collection of paths between particular points, what we're looking for here is actually a collection of cycles. So suppose you have the following cycle. So the cycle starts at some particular X, goes through all layers in the prescribed order, V1 up to V K minus 1, and closes back at the same X. What corresponds to this cycle here in the original structure? This edge is an edge between S and V. And this edge is an edge between T and V. So what we get there, instead of having a cycle in this auxiliary graph H, is a path between SI and TI. Now, the question is now if you look at this auxiliary graph and you're able to find the collection -- what we need is a collection of N0 cycles of length K. And they should be disjoint from each other. So we're looking for what's usually called a factor of K-cycles. And the question is when does this factor appear, and this gentleman in the back, along with [indiscernible] and Van Vu [phonetic], proved that if you look at the random graph G(n,p) then the threshold for the K-cycle factor to appear is given exactly by this expression, with N0 replaced by N. This statement -- we need a bit different version of the statement: instead of just having a factor of K-cycles, we need the cycles to pass in some prescribed order through the parts of the graph. But it should be doable. It's not an easy variation of it, but it's certainly a doable variation of the idea of Jeff, Anders and -- now we have all the ingredients. Let me step away and explain what we need to do. So we have the tree that we're embedding. I'm trying your patience but hopefully you'll excuse me. You have a tree to embed. Think about a tree of constant maximum degree. In the case when you have a lot -- a lot means a linear number of leaves -- this is easy: just chop off the leaves and embed the rest. It's pretty easy. 
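The reduction from paths to cycles described here can be made concrete. The sketch below is an illustration only: the vertex encoding (pairs such as `('S', i)`, `('T', i)`, `(j, i)` for the i-th vertex of layer j, and `('X', i)` for the merged terminal) and the helper names are assumptions, not notation from the talk. It builds the auxiliary graph by merging each pair of terminals into one vertex, and maps a cycle that passes through the layers in order back to a path between the two terminals.

```python
def build_auxiliary(edges, k):
    """Merge terminals ('S', i) and ('T', i) into ('X', i).

    Only edges between consecutive layers are kept: S to layer 1,
    layer j to layer j + 1, and layer k - 1 to T.
    """
    aux = set()
    for a, b in edges:
        for u, v in ((a, b), (b, a)):
            if u[0] == 'S' and v[0] == 1:
                aux.add(frozenset((('X', u[1]), v)))
            elif u[0] == 'T' and v[0] == k - 1:
                aux.add(frozenset((('X', u[1]), v)))
            elif (isinstance(u[0], int) and isinstance(v[0], int)
                  and v[0] == u[0] + 1):
                aux.add(frozenset((u, v)))
    return aux


def is_layered_cycle(aux, cycle, k):
    """Check that cycle = [x, v_1, ..., v_{k-1}] visits layers 1..k-1 in order
    and that all k edges, including the closing one, are in the auxiliary graph."""
    if len(cycle) != k or cycle[0][0] != 'X':
        return False
    if any(cycle[j][0] != j for j in range(1, k)):
        return False
    closed = cycle + [cycle[0]]
    return all(frozenset(e) in aux for e in zip(closed, closed[1:]))


def cycle_to_path(cycle):
    """Turn a layered cycle through ('X', i) into the path from s_i to t_i."""
    i = cycle[0][1]
    return [('S', i)] + list(cycle[1:]) + [('T', i)]


if __name__ == "__main__":
    k = 3
    edges = [(('S', 0), (1, 0)), ((1, 0), (2, 1)), ((2, 1), ('T', 0)),
             (('S', 1), (1, 1)), ((1, 1), (2, 0)), ((2, 0), ('T', 1))]
    aux = build_auxiliary(edges, k)
    cycle = [('X', 0), (1, 0), (2, 1)]
    print(is_layered_cycle(aux, cycle, k), cycle_to_path(cycle))
```

Because K is at least 3, layer 1 and layer K minus 1 are different, so an edge from the merged vertex into layer 1 can only have come from the S side and an edge into layer K minus 1 only from the T side; that is why a layered cycle of length K always unfolds into a genuine path of length K in the original graph.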
What's left to embed is a collection of stars, which is easy to do by one of the lemmas. It's the relatively easy case. It doesn't even take N to the power minus 1 plus epsilon, because you will be fine with P which is logarithmic in N divided by N, times a constant. That's the first case. And in the other case, following the lemma, you have a small linear number of leaves, or maybe even less than that; now instead of leaves you have a collection of long bare paths. You take the bare paths, a linear number of them. Take them aside. You get a tree, or a forest in general, which is nearly spanning; embed it using the same lemma [phonetic]. What's left unembedded is exactly this collection of paths. And you know how to do it through this lemma. So if you put these things together, this comes out, the result, and that's the end of it. So let me stop here. [applause] >> Eyal Lubetzky: Any quick question? >>: So for the -- we repeat log over N and this N to be -- >> Michael Krivelevich: Correct. I mean, in a sense -- it's even not the truth [phonetic], because instead of having paths of length K, which is a constant, you have in front of you paths which are much longer. So therefore, the other way to improve it: if instead of just having this statement of Jeff, Anders and [indiscernible] about having a K-factor for constant K, if you're able to extend it and to have smaller edge probability -- you will be able to have it for larger values of K -- then you just [indiscernible], it's more mechanical, and it gives an improvement to all this result. So in principle, if you're able to extend JKV to the case of nonconstant K, this immediately translates to an improvement for the constant degree case. >> Eyal Lubetzky: Thank you. [applause]