18894 >> : All right. So we're very happy... Markov to Gaussian connection, Jay Rosen, who will tell us...

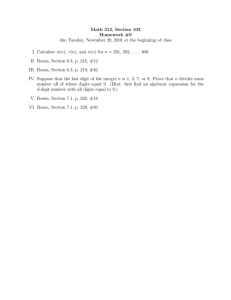

advertisement

>> : All right. So we're very happy to have with us today one of the masters of the Markov-to-Gaussian connection, Jay Rosen, who will tell us about a sufficient condition for continuity of permanental processes. >> Jay Rosen: Okay. Thank you, and thanks for the opportunity to be here. What I'm going to discuss is a type of process which is not very well known, called permanental processes. I'm going to explain first of all what they are and, more importantly, why I think they're important: how we can use them to get very interesting results about Markov local times. Then I'll explain how to actually get results about permanental processes. So that's the basic outline. For the background, we take a Gaussian vector. That means N Gaussian random variables, always with mean zero, and the covariance is given by some N by N matrix $A = (A_{ij})$. In particular, I just remind you, if I'm looking at the expected value of some function $F(G_1, \dots, G_N)$, then it's given by an integral of the function against the Gaussian density, which involves the inverse of the covariance matrix: you integrate over all the $x$'s and divide by $(2\pi)^{N/2}$ times the square root of the determinant of $A$. I want to write this in slightly more elegant notation as $E F(G) = (2\pi)^{-N/2} (\det A)^{-1/2} \int F(x)\, e^{-(x, A^{-1}x)/2}\, dx$. Okay. So that's basically the definition of what it means to be a Gaussian vector. Then I'm given some numbers $\lambda_1$ through $\lambda_N$, and $\Lambda$ is the diagonal matrix with these entries. I want to do a calculation now. We look at the squared Gaussians and multiply by the $\lambda_j$; this is basically the Laplace transform, or moment generating function, of the squared Gaussians, $E \exp(-\sum_j \lambda_j G_j^2/2)$. This can be written in that form.
This is my diagonal matrix $\Lambda$. Based on what I told you over here, we can write the expectation as an integral against the Gaussian density, and now you can put the two exponentials together. In the exponent you get $-(x, (\Lambda + A^{-1})x)/2$. We still have the normalizing factor $(\det A)^{-1/2}$, and when you do the integration, for the same reason, you get the determinant of whatever is now in the exponent, $\det(\Lambda + A^{-1})^{-1/2}$. Using the fact that the determinant of A times the determinant of B is the determinant of the product, we get the following form: the Laplace transform of the squared Gaussians is $E \exp(-\sum_j \lambda_j G_j^2/2) = \det(I + \Lambda A)^{-1/2}$, one over the square root of the determinant of the identity plus $\Lambda A$. So again, a basic fact about squares of Gaussian vectors. So here comes the new definition. This goes back to David Vere-Jones in 1997. He didn't use exactly this terminology, but he started discussing such vectors and processes. A permanental process with kernel $\Gamma$ is defined to be a positive, real-valued stochastic process $\{\theta_x, x \in T\}$: real-valued and in fact positive, just like Gaussian squares. The Gaussian square is going to be our typical example of a permanental process. It is indexed by some arbitrary set $T$, and we require that the finite-dimensional joint distributions satisfy the same sort of identity that the Gaussian squares satisfied. So the Laplace transform of the $\theta_{x_i}$ takes this form, $E \exp(-\sum_i \lambda_i \theta_{x_i}/2) = \det(I + \Lambda \Gamma)^{-1/2}$, where $\Gamma$ here is the matrix whose $ij$ entry is the kernel at the points $x_i, x_j$. That's our definition. So we're given some kernel on $T \times T$, some function on the product. And -- >> : [Indiscernible]. >> Jay Rosen: Yes. >> : There's no permanent in the [indiscernible], right? >> Jay Rosen: I will explain. That's a good point. Maybe I should take the opportunity now to explain where the name comes from. We'll need this later on.
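As a quick numerical sanity check of the identity $E\exp(-\sum_j \lambda_j G_j^2/2) = \det(I + \Lambda A)^{-1/2}$, here is a minimal Python/NumPy sketch. The covariance matrix `A` and the values in `lam` are arbitrary illustrative choices, not from the talk. Since Gaussian squares are the basic example of a permanental process, this is also the definition above checked with $\Gamma = A$.

```python
import numpy as np

def laplace_det(lam, A):
    """Closed form det(I + Lambda A)^(-1/2) for E exp(-sum_j lam_j G_j^2 / 2)."""
    n = len(lam)
    return np.linalg.det(np.eye(n) + np.diag(lam) @ A) ** -0.5

def laplace_mc(lam, A, n_samples=200_000, seed=0):
    """Monte Carlo estimate of E exp(-sum_j lam_j G_j^2 / 2) with G ~ N(0, A)."""
    rng = np.random.default_rng(seed)
    G = rng.multivariate_normal(np.zeros(len(lam)), A, size=n_samples)
    return np.mean(np.exp(-0.5 * (G**2) @ lam))

A = np.array([[2.0, 0.5], [0.5, 1.0]])  # hypothetical covariance matrix
lam = np.array([0.3, 0.7])              # hypothetical lambdas
print(laplace_det(lam, A), laplace_mc(lam, A))
```

The two printed numbers should agree up to Monte Carlo error (the integrand lies in $(0,1]$, so 200,000 samples give roughly three correct digits).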
So Vere-Jones shows, first of all -- I can erase this now. He in fact defined what I'll call a $\beta$-permanental process, where the Laplace transform is of the form $\det(I + \Lambda\Gamma)^{-\beta}$. Actually, instead of the minus he had a plus inside, so let me make this a minus: $\det(I - \Lambda\Gamma)^{-\beta}$. What he shows is that this has an expansion. Remember, $\Lambda$ is a diagonal matrix with entries $\lambda_1$ up through $\lambda_N$, and this can be written as a power series in the $\lambda$'s whose coefficients are what I'll call $\beta$-determinants of $\Gamma$: $\det(I - \Lambda\Gamma)^{-\beta} = \sum_{m} \prod_i \frac{\lambda_i^{m_i}}{m_i!} \det_\beta(\Gamma[m])$, where $\Gamma[m]$ repeats the index $i$ exactly $m_i$ times. So I must define, for any N by N matrix $B$, what is called the $\beta$-determinant. It has the following form, which looks like the expression for a determinant: $\det_\beta B = \sum_{\pi} \beta^{c(\pi)}\, b_{1,\pi(1)} b_{2,\pi(2)} \cdots b_{N,\pi(N)}$, where the sum is over all permutations $\pi$ of $1, \dots, N$ and the power $c(\pi)$ is the number of cycles in the permutation. As you know, you can decompose a permutation into cycles: start off with 1, which goes to $\pi(1)$, then look where $\pi(1)$ goes, and so on until you get back to 1; that's one cycle, and you count the cycles. Now, when $\beta$ is minus one, this is, up to the sign $(-1)^N$, exactly the determinant. When $\beta$ is plus one, this is what has been called in the literature a permanent, a plain permanent. Our case corresponds to $\beta$ equal to one-half. So this is where the name comes from. In particular -- can I just sneak something over there for later reference? What you see from this is how to get the moments of the product $\prod_{i=1}^N \theta_{x_i}/2$: you take the derivatives with respect to the $\lambda$'s and set them equal to 0. From that expansion, you see that this is given by the $\tfrac12$-permanent of $\Gamma$: $E \prod_{i=1}^N \theta_{x_i}/2 = \det_{1/2}\big(\Gamma(x_i, x_j)\big)_{i,j=1}^N$.
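The $\beta$-determinant can be computed by brute force straight from its definition. The sketch below (Python/NumPy; `num_cycles` and `beta_det` are hypothetical helper names) checks the facts just mentioned: $\beta = -1$ gives $(-1)^N \det B$, $\beta = 1$ gives the permanent, and $\beta = 1/2$ reproduces Gaussian moments, e.g. $E[G_1^2 G_2^2]/4 = (a_{11}a_{22} + 2a_{12}^2)/4$ for a mean-zero pair with covariance $(a_{ij})$. For the all-ones matrix the sum reduces to the rising factorial $\beta(\beta+1)\cdots(\beta+N-1)$, the coefficient in the one-dimensional expansion of $(1-\lambda)^{-\beta}$. The cost is $N!$, so this is only for small matrices.

```python
import itertools
import math
import numpy as np

def num_cycles(perm):
    """Number of cycles of a permutation given as a tuple with perm[i] = pi(i)."""
    seen = [False] * len(perm)
    cycles = 0
    for start in range(len(perm)):
        if not seen[start]:
            cycles += 1
            i = start
            while not seen[i]:  # follow start -> pi(start) -> ... back to start
                seen[i] = True
                i = perm[i]
    return cycles

def beta_det(B, beta):
    """det_beta(B) = sum over permutations pi of beta^c(pi) * prod_i B[i, pi(i)]."""
    n = B.shape[0]
    total = 0.0
    for perm in itertools.permutations(range(n)):
        prod = math.prod(B[i, perm[i]] for i in range(n))
        total += beta ** num_cycles(perm) * prod
    return total

B = np.array([[2.0, 0.5, 0.1], [0.5, 1.0, 0.3], [0.1, 0.3, 1.5]])
print(beta_det(B, -1.0), (-1) ** 3 * np.linalg.det(B))  # beta = -1: signed determinant
A = np.array([[2.0, 0.5], [0.5, 1.0]])                  # hypothetical Gaussian covariance
print(beta_det(A, 0.5), (A[0, 0] * A[1, 1] + 2 * A[0, 1] ** 2) / 4)  # E[G1^2 G2^2] / 4
```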
I'll come back, I hope, if I have time, to use that to explain things. But this is where the terminology "permanent" comes from. >> : [Indiscernible]. >> Jay Rosen: Class. That will work. It's very real, but . . . >> : [Indiscernible], closer to one than to minus one. >> Jay Rosen: Oh, okay. Just repeating what we said: if you have a Gaussian process, then its square is a permanental process whose kernel is given by the covariance. (This should have been "process", singular, instead of "processes".) Okay, I'm repeating myself. The question is what about more general $\Gamma$? If we have a kernel which is not necessarily positive definite and not necessarily symmetric, are there permanental processes, and, more importantly, why should we be interested in the continuity of such processes? In his paper, Vere-Jones gives various criteria on an N by N matrix that allow you to get a permanental vector. His criteria are very hard to work with. However, we'll see that in the context I'm going to develop there is a wealth of permanental processes, and I will explain why I'm interested in continuity. So now to give you some background. Let $X_t$ be a symmetric Markov process in some state space $S$ [indiscernible]. You could think of it as the real line, but more generally some nice space $S$, and I'm assuming that it has a symmetric transition density $p_t(x,y)$, symmetric for the moment; we'll get to the case where it's not necessarily symmetric. Transition density means precisely that if you want to calculate the expectation, starting at $x$, of some function of the process at time $t$, you get it by integrating the density against $f$: $E^x f(X_t) = \int p_t(x,y) f(y)\, m(dy)$. >> : Is $dy$ some general measure or [indiscernible]? >> Jay Rosen: Yes, yes. It's some general measure on $S$. We call it the reference measure. So I should have written $m(dy)$. I think in the paper I have it right. Okay.
Now here's the basic fact. This used to be the most important part of the talk; we've now gotten beyond the symmetric case, but I want to show that for symmetric Markov processes $p_t(x,y)$ is positive definite. So we study a double sum: we have a sequence of points $x_1$ through $x_N$ and real numbers $a_1$ through $a_N$, and we look at $\sum_{i,j} a_i a_j\, p_t(x_i, x_j)$. Now we use the fact that this is the transition density of a Markov process, so it satisfies the Chapman-Kolmogorov equation. I just break $t$ into two pieces, $p_t(x_i,x_j) = \int p_{t/2}(x_i,z)\, p_{t/2}(z,x_j)\, m(dz)$, and now use the fact that it's symmetric to exchange $x_j$ and $z$. Then you see I get a perfect square that I'm integrating: $\int \big(\sum_i a_i\, p_{t/2}(x_i,z)\big)^2\, m(dz) \ge 0$. So it is positive definite. The next step is to define the potential, $u(x,y) = \int_0^\infty p_t(x,y)\, dt$; this is also standard notation in Markov theory. You integrate in $t$, so, inheriting from the fact that the transition density is symmetric and positive definite, $u$ itself is symmetric and positive definite. Therefore there exists a Gaussian process whose covariance is $u(x,y)$. That's the basic fact about Gaussian processes: any positive definite symmetric function is the covariance of some mean-zero Gaussian process. Such a Gaussian process always exists, and we call it the associated Gaussian process, associated with the Markov process. Okay. Now we come to the connection. So far I've only described the associated process in terms of its covariance. This is local time for our general Markov process $X$: $L^x_t = \lim_{\epsilon \to 0} \int_0^t f_{\epsilon,x}(X_s)\, ds$. You should think of $f_{\epsilon,x}$ as an approximate delta function at $x$: a function which is very highly peaked around $x$, and as $\epsilon$ goes to 0 it gives more and more of its weight to $x$, while, as usual, its total integral with respect to the reference measure is one. We define the local time to be this limit, which we can show exists under very general conditions.
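A finite state space makes the positive-definiteness argument concrete. In this sketch the assumptions are: four states, counting measure as the reference measure, hypothetical symmetric jump rates `W`, and a killing rate `kappa` so that the chain is transient and its potential is finite. Then $p_t = e^{tQ}$, the Chapman-Kolmogorov factorization $p_t = p_{t/2}\,p_{t/2}^{T}$ exhibits $p_t$ as a Gram matrix, the potential is $u = \int_0^\infty p_t\,dt = (-Q)^{-1}$, and the associated Gaussian vector is sampled with covariance $u$.

```python
import numpy as np

def sym_expm(Q, t):
    """exp(tQ) for a symmetric matrix Q via its eigendecomposition."""
    w, V = np.linalg.eigh(Q)
    return (V * np.exp(t * w)) @ V.T

# Hypothetical symmetric jump rates on 4 states; killing at rate kappa makes the chain transient.
W = np.array([[0, 1, 0, 2],
              [1, 0, 3, 0],
              [0, 3, 0, 1],
              [2, 0, 1, 0]], dtype=float)
kappa = 0.5
Q = W - np.diag(W.sum(axis=1)) - kappa * np.eye(4)  # symmetric sub-Markov generator

t = 0.7
P_t = sym_expm(Q, t)
P_half = sym_expm(Q, t / 2)
# Chapman-Kolmogorov plus symmetry: p_t = p_{t/2} p_{t/2}^T is a Gram matrix, hence PSD.
assert np.allclose(P_t, P_half @ P_half.T)
print("min eigenvalue of p_t:", np.linalg.eigvalsh(P_t).min())

# Potential u = int_0^inf p_t dt = (-Q)^{-1}: symmetric and positive definite.
U = np.linalg.inv(-Q)
print("min eigenvalue of u:", np.linalg.eigvalsh(U).min())

# Associated Gaussian process: mean zero with covariance u.
rng = np.random.default_rng(1)
G = rng.multivariate_normal(np.zeros(4), U, size=100_000)
print(np.round(np.cov(G, rowvar=False) - U, 2))  # close to zero
```

Here $Q\mathbf 1 = -\kappa \mathbf 1$, so each row of $p_t$ sums to $e^{-\kappa t} < 1$: the missing mass is the probability of having died by time $t$.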
If the potentials are continuous, for example, this limit will exist. Intuitively, and this is why it's called local time, it measures the amount of time that the process spends at $x$ up to time $t$. Now remember, this is a continuous-time process in a continuous space, so generally speaking it's not really spending any time at $x$; it's just passing through, back and forth. If you were to look at the Lebesgue measure, for example, of the set of times $r$ where $X_r$ actually equals $x$, it's going to be 0. Nevertheless, this is a good definition. For those of you studying -- yeah? >> : [Indiscernible] area of this [indiscernible]. >> Jay Rosen: An approximate delta function means its total integral is one, so that [indiscernible]. If you're into these things, there's a slightly different definition for semimartingale local time, which for Brownian motion agrees with this one, although I think in some conventions it's off by a factor of two or something. But this gives you, for example, the usual Brownian local time. If you're familiar with Brownian motion, you'll certainly know a lot about Brownian local time. One of the things that at the time was considered an amazing result -- I can't remember exactly how far back this goes, maybe the late '50s -- was that Trotter showed that for Brownian motion, $L^x_t$ is jointly continuous in $x$ and $t$. That's interesting because it's a rather singularly defined function of $x$, so the fact that it's continuous in $x$ in particular is not at all obvious. After that, many people got involved in trying to generalize this to more general Markov processes. There are results due to Blumenthal and Getoor in their book on Markov processes, and a paper that went further by Getoor and Kesten. There's later work on Lévy processes, a special type of Markov process, by Barlow and Hawkes.
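To see the approximate-delta-function definition at work, here is a Monte Carlo sketch for standard Brownian motion started at 0 with Lebesgue reference measure. It assumes $f_\epsilon = 1_{(-\epsilon,\epsilon)}/(2\epsilon)$ as the approximate delta function (an illustrative choice) and a simple random-walk discretization; the step count, path count, and $\epsilon$ are arbitrary. The sample mean should be close to $E^0 L^0_1 = \int_0^1 p_s(0,0)\,ds = \sqrt{2/\pi}$, up to a bias of order $\epsilon$ and Monte Carlo error.

```python
import numpy as np

def approx_local_time_at_zero(t=1.0, n_steps=2000, n_paths=4000, eps=0.03, seed=0):
    """Approximate L^0_t for Brownian motion from 0 by int_0^t f_eps(B_s) ds,
    with f_eps = 1_{(-eps, eps)} / (2 eps), which has total Lebesgue mass one."""
    rng = np.random.default_rng(seed)
    dt = t / n_steps
    B = np.zeros(n_paths)
    occupation = np.zeros(n_paths)
    for _ in range(n_steps):
        occupation += (np.abs(B) < eps) * dt  # left-endpoint Riemann sum of 1{|B_s| < eps}
        B += rng.normal(0.0, np.sqrt(dt), size=n_paths)
    return occupation / (2 * eps)

L = approx_local_time_at_zero()
print("Monte Carlo E L^0_1:", L.mean())
print("int_0^1 p_s(0,0) ds = sqrt(2/pi):", np.sqrt(2 / np.pi))
```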
Barlow and Hawkes really completely solved the problem for Lévy processes. And this is the theorem that is the background for what I want to talk about here. This is a result of Michael Marcus and me. We showed the following: for quite general Markov processes, but symmetric, the local time is jointly continuous if and only if the associated Gaussian process is continuous. Now, at first glance, if you're not into these things, your first reaction is: well, okay, you had one extremely difficult problem, how do you know if something is continuous, and you replaced it with another abstract, difficult problem, how do you tell if a Gaussian process is continuous? However, the point is that Gaussian processes are very special. (I erased that, but the Gaussian vector is very special.) There's a lot one can do with them. It turns out, and it was not easy, that there is a complete understanding, which I'll give you in a minute, of when a Gaussian process is continuous. So let me go there. >> : [Indiscernible] is defined with respect to a reference measure? >> Jay Rosen: No. For a Gaussian process, all you need is the covariance. The associated covariance is $u(x,y)$, and the potential, yes, is defined with respect to a reference measure. So maybe that's what you meant. >> : [Indiscernible]. >> Jay Rosen: The Gaussian is not -- all you need is -- >> : [Indiscernible]. >> Jay Rosen: Oh, sure. >> : Thank you. >> Jay Rosen: There it is. So $u$ is the potential, and the potential depends upon the density, so it depends on what measure you take. You can change that. And the associated Gaussian process is simply the one and only mean-zero Gaussian process that has $u$ as its covariance. >> : I guess my question is, if that's its covariance and the covariance depends on the reference measure, then where does the reference measure enter into the local time? >> Jay Rosen: Yes, it does.
It enters because of the normalization, here. >> : You're saying that local time is a version of the density of the occupation [indiscernible]? >> Jay Rosen: That's a good way to put it. Let me look at my slide where I've defined it. Over here, yeah. The approximate delta function is a function whose total integral with respect to the reference measure is one. So if you change the reference measure, say you double it, this has to be halved. Okay. But that's another way to put it, which I didn't want to get into: if you have your Markov process and you look at -- yes. So take any [indiscernible]. >> : [Indiscernible]. >> Jay Rosen: Oh. Okay. The occupation time formula says that for any function $f$, $\int_0^t f(X_s)\, ds = \int f(x)\, L^x_t\, m(dx)$, which depends on the reference measure. So in that sense it's a -- >> : [Indiscernible]. >> Jay Rosen: Please. By all means. >> : So given any Markov process, you can define its occupation measure: for any set, how much time the process spends in that set. In some cases that measure is absolutely continuous with respect to your reference measure; then you can look at the density, and the local time is a version of that density. The problem is that the density is only defined almost [indiscernible]. If you define it as a limit, by [indiscernible] differentiation, it will be defined everywhere, and then you have a chance to prove [indiscernible]. >> Jay Rosen: Thank you. Okay. So as I said, this theorem goes back -- when did we do this? Somewhere in the '90s. Not so new. But this is the background for what I'm really interested in. Let me just flash ahead for a moment; I'll come back to this. Let me explain what's known about the continuity of Gaussian processes.
This result goes back to many people, but the final nail in the coffin was Talagrand, who showed that a Gaussian process is continuous almost surely if and only if you can find a probability measure on the [indiscernible] space such that a certain condition holds. It's a little complicated; it's called a majorizing measure condition. But the point is that this criterion depends only on the covariance. There's a metric $d$, the $L^2$ distance between $G_x$ and $G_y$, $d(x,y) = (E(G_x - G_y)^2)^{1/2}$, which can be expressed in terms of the covariance. So we have a criterion for continuity which depends only on the covariance. There's no randomness anymore: all the probability, so to speak, of the Gaussian part has been taken out of it. Now, in practical terms, if you give me a Gaussian process it's not always obvious how to decide whether or not there exists a measure for which this holds. So you can say that in a certain sense the problem is not solved, but at least it's been reduced to a different problem. Uvahl [phonetic] has been working on a special case where he has gotten his hands on an equivalent condition. Calgron [phonetic] has a more recent version of this majorizing measure condition, and one can sometimes get a handle on it. But I consider this to be a solution. Okay. So let me turn back a minute and make the connection. I skipped ahead; in my sequence of slides it was . . . Okay. So remember, this was my approximation for the local time: my approximate delta function at $z$, integrated in $r$. Take the expectation and interchange the expectation and the integral. We know that $E^x f_{\epsilon,z}(X_r)$ is the integral of $f_{\epsilon,z}$ against the transition density. Now when you take the limit as $\epsilon$ goes to 0, by the definition of an approximate delta function, the $dy$ integral just changes $y$ to $z$.
So we have a formula for the expected value of the local time: $E^x L^z_t = \int_0^t p_r(x,z)\, dr$. Consequently, and I'm repeating again, the expectation of the total local time $L^z_\infty$ is exactly the potential: $E^x L^z_\infty = u(x,z)$. So on the most basic level (I hope I'll have time, though I'm not sure I will, to go beyond the basic level and explain the connection better) you see the connection between local time and the potential, which means the Gaussian process. This connection is made more precise by the following theorem. It goes back to Dynkin and is called Dynkin's isomorphism theorem; it's just the top two lines. It looks a little complicated, so let me back away a little. First of all, this equality is not what one usually means by an isomorphism theorem. You'd like to say that something depending only on the local time is equal to something else depending only on the Gaussian process. Unfortunately we don't have that here. The right side depends only on the Gaussian process. The left-hand side, however, involves a sum: local time plus Gaussian squared. This causes a lot of complications when you want to apply it. So we have a local time, which is defined with respect to a Markov process, and this is a type of expectation for the Markov process, which I'll explain more carefully. The Gaussian is taken completely independent of the Markov process, and I refer to its expectation as $E_G$. By this notation I mean any function of the $x_i$'s; one could have, for example, a countable number of the $x_i$'s, or even a continuum if I had a continuous process. But here's how I want to use this. By the way, this expectation is known as an h-transform of the original expectation.
Intuitively, this measure is the expectation for the process started at $z$ and conditioned to die at $x$. That's the intuitive idea. Suppose you're only interested in some function of the process up to time $t$, on the event that it hasn't died yet -- ah, this is not an A, this should be an F. What you do is multiply by $u(X_t, x)$ and normalize: $E^{z,x}(F\, 1_{\{t<\zeta\}}) = E^z(F\, u(X_t,x)\, 1_{\{t<\zeta\}})/u(z,x)$. If you think about it, $u(y,x)$ is about starting at $y$ and, at the end of your time, when you die, being at $x$. So that's the intuition. As far as we're concerned, the only thing that's important here -- >> : [Indiscernible]. >> Jay Rosen: I don't know if I should. Yes. Okay. Using the Markov property, suppose you have some function of a few of the $X$'s at times less than $t$. You first start at $z$, you go wherever the first function is evaluated, then the second, until you get to your last one, then you go to time $t$, and then you're looking at $u(X_t, x)$. When you write out the expectations for the Markov process, what this is intuitively saying is the following. We're looking at a process that is transient; the potential is finite, so it dies at some point. You do whatever $F$ is telling you up to time $t$, and after time $t$ you do whatever you want, but you make sure you end up at $x$ when you die. So that's the intuition. I don't want to get too involved. That's why we say conditioned to die at $x$. >> : What is [indiscernible]? >> Jay Rosen: Yes, $\zeta$ is the lifetime of the process, the first time it dies. Is that notion familiar to people? We have a process which is doing something, and then at some point it just disappears. We call that the death time. For example, think of a Brownian motion inside some finite region. You run it,
and as soon as it leaves the region, we say it died; we kill it when it leaves the region. That's an example of a Markov process with a finite lifetime, and it's a similar thing here. Okay. So let me explain how to use this in a simple way, the way we need for our purposes. Let's assume that the Gaussian process is continuous; I want to show how you can use the theorem to show that the total local time is also a continuous function. Of course we take the $x_i$'s to be a countable dense set, and for $F$ I take the indicator function of the set of sequences, indexed by the $x_i$'s, that are locally uniformly continuous. Write that out with epsilons and deltas if you want; the point is that it's the indicator function of a nice measurable set. If in fact we start off with a $G$ which is continuous, then $F$ applied to the Gaussian squares always takes the value one. Then the expectation on the right is $E_G G_x^2 = u(x,x)$, which cancels the $u(x,x)$ in the denominator, so it's going to be one. So with probability one, the sum of these two things is locally uniformly continuous on my dense set; that is, the sum is continuous. But our assumption is that $G$ is already continuous, so this shows that the local time is continuous. So you can see that's the easy part of using the isomorphism theorem: if we know $G$ is continuous, we get that $L$ is continuous. Now, this only tells me that the total local time is continuous. As I said, we're after something more: joint continuity in $x$ and $t$. That's a different story. It's much easier; it uses various Markov techniques. >> : [Indiscernible]. Easier than what? >> Jay Rosen: Easier than what we just said, which was -- >> : [Indiscernible]. >> Jay Rosen: Yeah. All right. Okay. It's easy; that's the best way to put it, using Markov techniques. >> : [Indiscernible] is easier. >> Jay Rosen: Yes. It's easy. I take you back to ER.
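For reference, here is Dynkin's isomorphism theorem written out, together with the continuity argument just given. The normalization with $\tfrac12 G^2$ follows the form in Marcus and Rosen's book and is an assumption about what was on the slide; $G$ is independent of $X$ with covariance $u$, and $E^{z,x}$ is the h-transformed expectation described above.

```latex
% Dynkin's isomorphism theorem (normalization assumed as in Marcus and Rosen's book).
% X: transient symmetric Markov process with potential u; G: independent mean-zero
% Gaussian process with covariance u; E^{z,x}: start at z, conditioned to die at x.
E^{z,x} E_G\Big[ F\big( L^{x_i}_\infty + \tfrac12 G_{x_i}^2 \big) \Big]
  = E_G\Big[ \frac{G_z\, G_x}{u(z,x)}\, F\big( \tfrac12 G_{x_i}^2 \big) \Big].
% Take z = x, {x_i} countable dense, F = indicator that (y_{x_i}) is locally uniformly continuous.
% If G is continuous, F(G^2/2) = 1 a.s., so the right side is E_G[G_x^2] / u(x,x) = 1.
% Hence L_\infty + G^2/2 is locally uniformly continuous a.s.; since G^2 is, so is L_\infty.
```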
Once you know the total local time is continuous, there are standard techniques which allow you to get the joint continuity. Okay. Let me -- >> : [Indiscernible]. >> Jay Rosen: Yes. That was a long time ago. Now -- >> : [Indiscernible]. >> Jay Rosen: Okay. This is $F$ of many variables; you can denote them by the $x_i$'s. It could be $x_1, x_2$, through $x_N$, for example. In the case I told you about, $F$ is an indicator function: you take a countable dense set, those are my $x_i$'s, and $F$ is the indicator that the function restricted to those points is locally uniformly continuous. Epsilon, delta. Okay. So this is still part of the background. Now we come to the new material: what if the potential is not symmetric? You'll recall that the positive definiteness also depended upon symmetry. So when $u$ is not symmetric, in general you don't have positive definiteness (we do have examples where it is positive definite). But certainly you don't have a Gaussian process whose covariance is $u$, since a covariance is by definition symmetric. So what can be done? This is where permanental processes come in. This is a recent result of [indiscernible] Eisenbaum and Kaspi. They have an analog of [indiscernible] isomorphism theorem for non-symmetric Markov processes, where the role of the Gaussian square is now played by a permanental process. So this is my motivation; this is why permanental processes are important. I want to mention that they show that for a Markov process there always is a permanental process with $u(x,y)$ as its kernel. As I said, in general it's hard to come up with examples. In Vere-Jones's paper, I think he only has a few two- or three-dimensional examples of permanental processes. But here you have a whole rich family: for any Markov process there will always be a permanental process with its potential as kernel. >> : [Indiscernible]. >> Jay Rosen: Yes.
Well, we call it permanental. >> : The term has been used in -- >> Jay Rosen: Other contexts, right. But now, again, exactly the same reasoning as before says that if I know the permanental process is continuous in $x$, I automatically get that the total local time is continuous in $x$, and then, easy or easier either way, that gives me the joint continuity of the local time. So this is my motivation for studying permanental processes and trying to find conditions, depending only on the kernel, which guarantee that they are continuous. I'm just repeating: the local time is continuous if the associated permanental process is continuous. But we don't know at this stage whether it's an "only if". I didn't explain how one goes backwards from Dynkin's theorem to show that if the Gaussian process is not continuous, then the local time is not continuous. They're all nontrivial, but that's a much more delicate problem, just as the criterion for non-continuity of Gaussian processes was a much more difficult problem in the theory of Gaussian processes. So all these things are open, and we're dealing now just with finding criteria for a permanental process to be continuous. Here's our main result. It should look -- >> : [Indiscernible]. >> Jay Rosen: No. No. That's a different talk. If you come to me a little later I can probably reconstruct it, but it's also in my book. Okay. The point here is the criterion we have. We have a kernel; there's some sort of bound in this condition, which isn't really important. But the main condition is the same sort of majorizing measure condition that gives the continuity of a Gaussian process. So the first result is: if you have such a majorizing measure condition, then you get a result on the permanental process. Good.
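The majorizing measure integral can be evaluated exactly on a finite index set, because the $\mu$-mass of a ball is a step function of the radius. The sketch below assumes the standard Fernique-Talagrand form $J(\delta) = \sup_x \int_0^\delta (\log 1/\mu(B_d(x,u)))^{1/2}\,du$; the exact integrand on the slide is not in the transcript, and `majorizing_integral` and the example data are hypothetical. Since $d$ may not be a metric, the code only assumes it is symmetric, nonnegative, and zero on the diagonal.

```python
import numpy as np

def majorizing_integral(D, mu, delta):
    """J(delta) = max over x of int_0^delta sqrt(log(1 / mu(B(x, u)))) du on a finite set.

    D[x, y] >= 0 is a symmetric 'distance' with D[x, x] = 0 (no triangle inequality needed),
    mu is a probability vector, and B(x, u) = {y : D[x, y] <= u}.
    The ball mass is a step function of u, so the integral is computed exactly."""
    best = 0.0
    for x in range(len(mu)):
        order = np.argsort(D[x])
        radii = D[x][order]
        masses = np.cumsum(mu[order])  # mu(B(x, u)) on each nonempty [radii[k], radii[k+1])
        total = 0.0
        for k in range(len(radii)):
            lo = min(radii[k], delta)
            hi = min(radii[k + 1] if k + 1 < len(radii) else np.inf, delta)
            if hi > lo:
                # max(..., 0) guards against masses slightly above 1 from rounding
                total += (hi - lo) * np.sqrt(max(np.log(1.0 / masses[k]), 0.0))
        best = max(best, total)
    return best

# Two points at distance 1 with mass 1/2 each: J(2) = sqrt(log 2).
D2 = np.array([[0.0, 1.0], [1.0, 0.0]])
mu2 = np.array([0.5, 0.5])
print(majorizing_integral(D2, mu2, 2.0), np.sqrt(np.log(2)))

# Hypothetical example: grid on [0, 1], uniform mu, Brownian-type d(s, t) = |s - t|^(1/2).
s = np.linspace(0, 1, 201)
D = np.sqrt(np.abs(s[:, None] - s[None, :]))
mu = np.full(len(s), 1 / len(s))
for delta in [1.0, 0.3, 0.1, 0.03]:
    print(delta, majorizing_integral(D, mu, delta))
```

The modulus-of-continuity statement concerns how fast this quantity goes to 0 as $\delta \to 0$, which the second example lets you watch on a grid.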
I'm glad someone asked that question. I'll come to that in a minute. No, it's not the same; it can't possibly be the same, actually, but that's a very important question, and I'll come back to it very shortly. Let me just mention that beyond this result (I'm not using the word continuity yet) there's also a modulus of continuity result. I didn't want to write it all out: this integral, with this sup in front, is what we call $J(\delta)$. So in the next result, if $J(\delta)$ doesn't go to 0 too quickly, then we get the following. I'll come back to this in a moment, but I want to now address the very important question that you asked. Okay. For the Gaussian case, with covariance $\Gamma$, the metric was the $L^2$ metric, and you can write it like this: $d^2(x,y) = \Gamma(x,x) + \Gamma(y,y) - 2\Gamma(x,y)$. The $d$ that we use is (forget the constant here, that's unimportant) $d(x,y) = \big(\Gamma(x,x) + \Gamma(y,y) - 2(\Gamma(x,y)\Gamma(y,x))^{1/2}\big)^{1/2}$. I need both $\Gamma(x,y)$ and $\Gamma(y,x)$ in there because now the kernel is not symmetric. First, just from the definition of a permanental process, one can show, for example, that the product $\Gamma(x,y)\Gamma(y,x)$ is nonnegative, so I can take a square root. The individual $\Gamma(x,y)$ need not be positive, but the product is. Similarly, one can show that the quantity inside the outer square root is always nonnegative, so the thing is well defined. That's not important. What's interesting, I think, is that $d$ doesn't seem to be a metric. >> : [Indiscernible]. >> Jay Rosen: No. No. A lot of effort has yielded neither a proof nor a counterexample. So at this stage we're considering that it's not necessarily a metric. Nevertheless, you can still define what we're going to call balls -- >> : [Indiscernible] necessarily. >> Jay Rosen: Huh? >> : You have to look up the official definition of "not necessarily". >> Jay Rosen: Okay.
We can still define what we mean by a ball, $B_d(x,r) = \{y : d(x,y) < r\}$, and call it a ball, even though it may not have the usual shape. Okay. And $d$ is obviously symmetric. So this can be defined, and -- >> : [Indiscernible]. >> Jay Rosen: Yes. So the last thing was that these balls, really the open ones, with strict inequality, give you the same topology as the $L^2$ topology of the permanental process. So in that sense there's a connection with the Gaussian distance. And therefore, to go back, we could say from this that we get continuity in the $L^2$ metric, and similarly the modulus of continuity can all be expressed with respect to the $L^2$ metric. But for reasons which I'll explain now, $d$ is the natural object to look at. So let me explain where it comes from. Here's the important fact; I guess you saw it already. For Gaussian processes we know so much, and just from the definition they satisfy all sorts of nice inequalities, et cetera. How are we going to get something like this for permanental processes, where we know almost nothing? The one thing we consider the key fact is that the bivariate distributions are, not normal, but those of squares of normals. For any pair, $(\theta_x, \theta_y)$ has the same law as $(G_x^2, G_y^2)$ for a particular mean-zero Gaussian vector $(G_x, G_y)$ whose covariance has diagonal entries $\Gamma(x,x)$, $\Gamma(y,y)$ and off-diagonal entry $(\Gamma(x,y)\Gamma(y,x))^{1/2}$. >> : [Indiscernible]. >> Jay Rosen: In order? Why? What -- >> : [Indiscernible]. >> Jay Rosen: Yes. They're ordered. They're ordered. Now, we're not claiming, and it's definitely not true, that the permanental process is the process of squares of some Gaussian process on the whole space. It's only the bivariate distributions. Now, if you ask me about the trivariate ones, I don't know.
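The pair argument can be checked numerically for a genuinely non-symmetric kernel. The sketch below assumes a hypothetical three-state chain with non-symmetric jump rates `W`, killing rate `kappa`, and counting reference measure, and takes $\Gamma = u = (-Q)^{-1}$, which is not symmetric. It replaces the two off-diagonal entries of each $2\times2$ block by $(\Gamma(x,y)\Gamma(y,x))^{1/2}$ and confirms that $\det(I+\Lambda\Gamma)$ is unchanged, that the symmetrized block is a genuine covariance matrix, and that $d(x,y)$ is the $L^2$ distance of the corresponding Gaussian pair. The helper names `pair_kernels` and `d` are illustrative.

```python
import numpy as np

# Hypothetical non-symmetric jump rates on 3 states, plus killing at rate kappa.
W = np.array([[0.0, 2.0, 0.5],
              [0.3, 0.0, 1.0],
              [1.5, 0.2, 0.0]])
kappa = 0.4
Q = W - np.diag(W.sum(axis=1)) - kappa * np.eye(3)
U = np.linalg.inv(-Q)  # potential kernel Gamma(x, y) = u(x, y); not a symmetric matrix

def pair_kernels(Gam, x, y):
    """The 2x2 kernel of (theta_x, theta_y) and its symmetrized version."""
    K = Gam[np.ix_([x, y], [x, y])]
    off = np.sqrt(K[0, 1] * K[1, 0])  # needs Gamma(x,y) Gamma(y,x) >= 0
    K_sym = np.array([[K[0, 0], off], [off, K[1, 1]]])
    return K, K_sym

def d(Gam, x, y):
    """d(x,y) = (Gamma(x,x) + Gamma(y,y) - 2 sqrt(Gamma(x,y) Gamma(y,x)))^(1/2)."""
    sq = Gam[x, x] + Gam[y, y] - 2 * np.sqrt(Gam[x, y] * Gam[y, x])
    return np.sqrt(max(sq, 0.0))  # clip tiny negative rounding errors

K, K_sym = pair_kernels(U, 0, 1)
lam = np.diag([0.8, 1.7])  # arbitrary lambdas
print(np.linalg.det(np.eye(2) + lam @ K), np.linalg.det(np.eye(2) + lam @ K_sym))  # equal
print(np.linalg.eigvalsh(K_sym))  # nonnegative: K_sym is a covariance matrix
print(d(U, 0, 1), np.sqrt(K_sym[0, 0] + K_sym[1, 1] - 2 * K_sym[0, 1]))  # same number
```

The determinants agree because for a $2\times2$ block only the product of the off-diagonal entries enters $\det(I + \Lambda\Gamma)$.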
We can't get anything else. >> : [Indiscernible]. >> Jay Rosen: Yes, for every pair $x, y$ it's going to be a different Gaussian vector. They won't be connected: you can have $x, y$ and $x, z$, and these will be different vectors, with no nice joint representation. >> : So this key [indiscernible]. >> Jay Rosen: No, no. I'll explain in a minute where it comes from. Just as a preliminary to what's coming, the reason this is useful is that when you see how results are obtained for Gaussian processes, for the continuity condition you look at the difference of two Gaussians: only pairs come in. So once I have something like this, even though these are the squares and not $G$ itself, it's likely that Gaussian methods will work to give continuity. If I have time I will explain more. But now I want to come back to your question: how do you see something like this, and why is it only one-half? >> : [Indiscernible]. >> Jay Rosen: Okay. So let's look at the Laplace transform for a pair. I want to write it down over here; you're not going to see it otherwise. The matrix $I + \Lambda\Gamma$ for a pair has first row $1 + \lambda_1\Gamma(x,x)$, $\lambda_1\Gamma(x,y)$ and second row $\lambda_2\Gamma(y,x)$, $1 + \lambda_2\Gamma(y,y)$. You take the determinant and you get exactly this form: $(1 + \lambda_1\Gamma(x,x))(1 + \lambda_2\Gamma(y,y)) - \lambda_1\lambda_2\,\Gamma(x,y)\Gamma(y,x)$. So I want to produce another kernel which gives exactly the same thing. As you can see, if instead of $\Gamma(x,y)$ and $\Gamma(y,x)$ you put the square root of the product, $(\Gamma(x,y)\Gamma(y,x))^{1/2}$, in both off-diagonal places, you get exactly the same determinant. So, by the uniqueness of Laplace transforms, the pair has the law I claimed. Okay. Now, to explain how the chaining argument is applied here, I just want to introduce the notion of Orlicz [indiscernible] norms.
And the one we're interested in is the one with X squared in the exponent, e to the X squared. This is the definition: we want to find a constant to divide by. I'm taking the expectation of, basically, e to the random variable squared, and if I divide by a large enough [indiscernible] to get it less than one, that gives me the norm. This is tailor-made for -- what? >> : [Indiscernible] squared. >> Jay Rosen: So this is tailor-made for Gaussian processes. For a Gaussian process, the expected value of e to the G X squared itself might not be finite, but if you divide by a big enough constant -- >> : [Indiscernible]. >> Jay Rosen: Yes. [Indiscernible] value, exp of G squared. >> : [Indiscernible]. >> Jay Rosen: E? >> : [Indiscernible]. >> Jay Rosen: Yeah. >> : [Indiscernible]. >> Jay Rosen: So, say, for a standard normal this is infinite. But if you divide by enough, four or something like that, it will be finite. So this is tailor-made for Gaussian processes, and the methods of proving continuity, the modulus of continuity, et cetera, for Gaussian processes depend upon this norm. It's a crucial ingredient. And the point is that Gaussian squares, and so pairs of permanentals, which behave like pairs of G squared, don't have a finite [indiscernible]. So this is the hurdle we have to get around: even though we're very close to Gaussian processes, we have to worry about this. That's why I mentioned that for a normal random variable you get a -- so here's the long slide, but this is the key thing. We form a cut-off version of G squared: you cut it off at lambda and divide by lambda to the one-half. Now, the difference is a difference of two squares, and of course A squared minus B squared is the product of the sum and the difference. Because I've cut off at lambda, the sum is at most of order lambda to the one-half, so after dividing by lambda to the one-half it disappears. Then a simple inequality shows that you can bound what's left by the difference of two normals. That's perfect; that's what I want.
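A small numerical sketch of the two facts used here, under stated assumptions. It takes the common convention psi two of x equals e to the x squared minus one, with the norm of X being the smallest c > 0 such that E psi two of |X|/c is at most 1. It uses the standard identity E e^(t G^2) = (1 - 2t)^(-1/2) for a standard normal G and t < 1/2. It also assumes the cut-off is min(G^2, lambda) / lambda^(1/2); the talk only says "cut it off at lambda and divide." All function names are hypothetical.

For a standard normal, c = 1 gives an infinite expectation, and any c > sqrt(2) makes it finite. The norm itself works out to sqrt(8/3), about 1.633. By scaling, any mean-zero normal has norm sqrt(8/3) times its L two norm, which is why the norm of a Gaussian difference is a constant times its L two norm. The cut-off check tests |min(s^2, lambda) - min(t^2, lambda)| / lambda^(1/2) <= 2|s - t|, which follows from factoring the difference of squares.

```python
import math
import random


def mgf_of_square(t):
    """E[exp(t * G**2)] for G ~ N(0, 1): (1 - 2t)^(-1/2) if t < 1/2, else infinite."""
    if t >= 0.5:
        return math.inf
    return 1.0 / math.sqrt(1.0 - 2.0 * t)


def psi2_norm_standard_normal(tol=1e-12):
    """Smallest c with E[psi2(|G| / c)] <= 1, psi2(x) = exp(x^2) - 1, by bisection."""
    lo, hi = 1.0, 10.0  # c = 1 fails (the expectation is infinite); c = 10 succeeds
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mgf_of_square(1.0 / mid ** 2) - 1.0 <= 1.0:
            hi = mid  # condition holds, so the norm is at most mid
        else:
            lo = mid
    return hi


def cutoff_diff(s, t, lam):
    """Assumed cut-off difference (min(s^2, lam) - min(t^2, lam)) / sqrt(lam)."""
    return (min(s * s, lam) - min(t * t, lam)) / math.sqrt(lam)


def worst_cutoff_ratio(trials=50_000, seed=0):
    """Largest observed |cutoff_diff| / (2 |s - t|) over random draws; should be <= 1."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        s, t = rng.gauss(0.0, 3.0), rng.gauss(0.0, 3.0)
        lam = rng.uniform(0.01, 20.0)
        if s != t:
            worst = max(worst, abs(cutoff_diff(s, t, lam)) / (2.0 * abs(s - t)))
    return worst


print(mgf_of_square(1.0))                             # inf: dividing by 1 is not enough
print(psi2_norm_standard_normal(), math.sqrt(8 / 3))  # both about 1.63299
print(worst_cutoff_ratio() <= 1.0)                    # True
```

The speaker's "four or something like that" is a safe overestimate. The bisection shows the threshold for the norm is about 1.633.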
So this object I'm going to bound by a difference of two normals, and everything is going to work. I call the Orlicz norm of -- yeah. The Orlicz norm of this object, my cut-off difference, I call rho of lambda. It's bounded by the [indiscernible] of this, and the previous slide tells me that's a constant times the L two norm. The L two norm of the difference of my pair of Gs is what I called d of X, Y. The constant was -- okay. Now I want to show that the sup has finite expectation. The chaining argument tells me that for any lambda I get the following. For my cut-off object, which is bounded by the difference of the Gaussians, the Gaussian techniques tell me that this is bounded by -- do you remember what J was? J was the majorizing measure integral. And since rho of lambda is bounded by d, I get a bound which is universal. Z here is some random variable which has nice moments; that's all we need. Again, I can't give you the proof, but this is a standard chaining result. Now what we want to do is transform this. This is for each fixed lambda, and I want to transform it to show that, for the permanental process itself, the sup has finite expectation. The first step is to multiply through by the denominator and move this to one side; this is some fixed X zero. And now we use a trick that goes back to Martin Barlow, which he used in his work on Lévy processes, where he had a similar situation. We define the set where the sup is greater than lambda, and notice the following. If we're in -- too many omegas there; it should have been -- okay. If omega is such that the sup is greater than lambda then, from what we just had, remember, the sup was less than that sum. So for those omegas for which the sup is greater than lambda, this sum is greater than -- >> : [Indiscernible] lambda is actually the omega [indiscernible]. >> Jay Rosen: Yeah.
This omega should have been deleted. [Indiscernible] lambda is a set of omegas [indiscernible]. Now, a little algebra. Maybe not so simple, but after a few minutes you can figure it out: if you have an inequality like this, you can bound lambda by that, which means there's no more lambda on the right-hand side. Okay. Now, if you ask me what the probability is that the sup is greater than lambda, that is, the probability of this set: on this set we have this inequality, so either this term is bigger than half of lambda or that one is. The first term involves a single permanental variable, its marginal at X zero, and as I said, a single permanental is a Gaussian squared, so it has finite expectation. You integrate these probabilities d lambda, and, as I also said, the other term involves a random variable with nice moments. And so we establish that the expectation of the sup is finite. That's the main way we get around the fact that theta X is related not to Gaussians but to Gaussian squares: you can't use the Gaussian results directly, you have to use some sort of cut-off version. And finally, the same chaining argument handles the case where the distance in the metric is less than delta, and we get a result like that. But there's a lambda out there, lambda to the one-half, and we pick lambda close to the sup. Therefore I get this result. Notice that for Gaussian processes you don't have this factor: you show that for the difference between two Gaussians, the sup over some small interval is bounded in terms of the metric entropy integral. But the factor is not an artifact here, because the analog for us would be G squared, and the difference between two squares is the product of the difference, which is bounded by Z times the metric entropy integral, and the sum of the Gs.
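A worked version of Barlow's trick, hedged. The exact chaining inequality was on a slide, so this assumes it has the form sup over x of (theta_x ∧ lambda) <= theta_{x_0} ∧ lambda + lambda^{1/2} Z for every lambda > 0, with Z >= 0 a random variable that does not depend on lambda and has E Z^2 < ∞. Steps 2 and 3 are the "no more lambda" algebra and the "either bigger than half of lambda" split. The last line records the Gaussian analogue behind the lambda^{1/2} factor in the modulus of continuity.

```latex
% Step 1: on \Omega_\lambda = \{\sup_x \theta_x > \lambda\} the cut-off sup equals \lambda, so
\lambda \le \theta_{x_0} + \lambda^{1/2} Z .
% Step 2: remove \lambda from the right-hand side (a, b \ge 0, quadratic in \lambda^{1/2}):
\lambda \le a + \lambda^{1/2} b
  \;\Longrightarrow\; \lambda^{1/2} \le \tfrac12\bigl(b + \sqrt{b^2 + 4a}\bigr)
  \;\Longrightarrow\; \lambda \le 2a + b^2 .
% Step 3: hence either 2\theta_{x_0} \ge \lambda/2 or Z^2 \ge \lambda/2, so
P\Bigl(\sup_x \theta_x > \lambda\Bigr) \le P\bigl(\theta_{x_0} \ge \lambda/4\bigr) + P\bigl(Z^2 \ge \lambda/2\bigr).
% Step 4: integrate d\lambda over (0,\infty), using E\,Y = \int_0^\infty P(Y \ge \lambda)\,d\lambda for Y \ge 0:
E \sup_x \theta_x \le 4\,E\,\theta_{x_0} + 2\,E\,Z^2 < \infty .
% Gaussian analogue for the modulus: the difference of squares brings in a sup of |G|,
|G_x^2 - G_y^2| = |G_x - G_y|\,|G_x + G_y| \le 2\,|G_x - G_y| \Bigl(\sup_z G_z^2\Bigr)^{1/2}.
```

E theta_{x_0} is finite because a single permanental variable is a Gaussian square. The constants 4 and 2 come from this particular split; other splits change only the constants.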
So for the sum of the Gs, I have to bound it by this, the square root of the square [indiscernible] sup. So this is the right sort of result. >> : [Indiscernible]. >> Jay Rosen: The thetas are positive. >> : Couldn't you try and look at [indiscernible] theta [indiscernible]? >> Jay Rosen: Well, think about it. If we had G squared and took its square root, it's no longer Gaussian; it's the absolute value of a Gaussian. And we don't have a whole process. So it would be nice to see that, but I don't know how to do it. Okay, so since I'm running out of time, I'll stop here. There are several other things I could do, but I'll leave it up to you. >> : You have four more minutes. >> Jay Rosen: I'll pass. But I'll be glad to talk to anyone afterwards. I mean, there are two interesting things I don't have time for, and maybe in four minutes I can give a little exposition of one of them. The one I won't talk about, although the general idea is not so hard and we're on the verge of it, is the basis of the isomorphism theorem. We're close, but it needs 20 minutes or so, which I won't do. The other thing I can do in two minutes: there's another application of this, or at least a connection, with what's called the loop soup, and loop soup local times. I'll just mention it without getting too involved. These things have recently attracted a lot of interest. Le Jan, in recent [indiscernible] lectures, spoke about this, and they're available on the web, but he dealt with symmetric Markov processes. Here's what you do. I'm going to erase this. Okay. We start off with some Markov process and define the following measure; I'll have to explain everything in it. So [indiscernible] of A, where A is some set of paths in the path space of the process, is the following double integral. First you integrate [indiscernible] minus T over T. I guess I didn't -- well.
I'll explain what P X X sub T of A is: it's evaluated on the set where the lifetime of the paths, when they die, equals T. And you integrate this with respect to the reference measure and d T. It's not at all clear why this is of interest. In general it's not a finite measure; it's a sigma-finite measure. P X X T is what we call a bridge measure. It's built up from the original P X, and essentially it gives you a measure on paths which are conditioned to die at time T at the point X. So they start at X, they do whatever they want, and at the end they're just about to hit X. More precisely, if this is the process, X at what we call T minus, the left limit, is equal to little X. These bridge measures are measures one can construct from the original process. And since the starting point is also X, we can think of these as measures on loops: these are the loops of length, or time, T, and you integrate [indiscernible], so you have a whole lot of loops out there. Now, you take this measure on loops and you make a Poisson process whose intensity measure is this mu. What that means, roughly speaking, is that you get a random variable whose value is a countable collection of loops. And you can then form what's called the loop soup local time. I'll call it L hat of X. You take the local time at X, the usual process local time, the total local time of a path, and you take the sum over the countable -- let me call it -- >> : No one can see anything. >> Jay Rosen: Oh. Okay. I'll do it up here. The loop soup local time is essentially the sum of the local times of each path in my soup, so to speak. And the connection is that this is a permanental process. So that's what I wanted to mention: it's another application, to loop soups. >> : More questions? >> Jay Rosen: Any answers? >> : You'll be here until [indiscernible]?
>> Jay Rosen: Here about the isomorphism theorem. >> : [Indiscernible]. [Applause]
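A toy Python sketch of the loop soup local time described near the end of the talk, under loud assumptions. The real loop measure mu is only sigma-finite. Here it is replaced by finitely many hypothetical loops, each with a finite weight and a table of total local times at a few sites. A Poisson process with such an atomic intensity is just an independent Poisson count for each loop. L hat of x is then the sum of the local times at x over all loops in the sample. Campbell's formula, E L hat(x) = sum over loops of mu(loop) times the loop's local time at x, gives a simple check. The names and the toy data are illustrative only, and nothing here reproduces the permanental property itself.

```python
import math
import random


def sample_poisson(mean, rng):
    """Knuth's multiplication method; fine for the small means used here."""
    limit = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def loop_soup_local_time(loops, rng):
    """Sum of local times over one Poisson sample of loops.

    loops: list of (weight, {site: local_time}) pairs, a hypothetical finite
    stand-in for the sigma-finite loop measure mu.
    """
    total = {}
    for weight, local_times in loops:
        copies = sample_poisson(weight, rng)  # how many times this loop appears
        for site, ell in local_times.items():
            total[site] = total.get(site, 0.0) + copies * ell
    return total


def mean_local_time(loops, site, samples=20_000, seed=0):
    """Monte Carlo estimate of E[L_hat(site)]."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(samples):
        acc += loop_soup_local_time(loops, rng).get(site, 0.0)
    return acc / samples


toy_loops = [(0.5, {"a": 1.0, "b": 0.5}), (1.0, {"a": 2.0})]
# Campbell's formula predicts E[L_hat(a)] = 0.5 * 1.0 + 1.0 * 2.0 = 2.5
print(mean_local_time(toy_loops, "a"))
```

Truncating to finitely many loops is what makes the Poisson sampling this simple. The actual construction has to handle infinitely many small loops.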