18843 >> Yuval Peres: Welcome. Actually, so a few...

advertisement

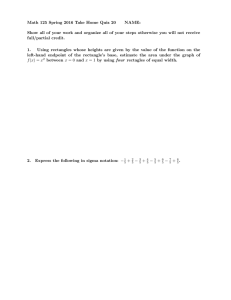

18843 >> Yuval Peres: Welcome. A few months ago I was talking with Svante and Jen about a delicate problem on branching processes, and we found a way to work around it. So I was really delighted when Svante offered to talk about this very same issue. I'm very happy to have Svante Janson from Uppsala telling us about conditioned Galton-Watson trees. >> Svante Janson: Okay. Many of you know this, but let me say what a conditioned Galton-Watson tree is. First, a Galton-Watson tree. Since this is a software company, I'll draw it the computer-science way, upside down. Take an unconditioned Galton-Watson tree. We start with a root. It has a random number of children, given by some offspring distribution. Each of them has children according to the same distribution, and some of them get no children at all. Like this. So each node has an i.i.d. number of children, and if I need it (I don't know that I will today) I denote the offspring distribution by the Greek letter ξ (xi). This is a random variable, a typical one of these numbers. So this is the standard discrete branching process, the Galton-Watson process. We think of it as a tree and are interested in properties like its height and width. Remember also the basic facts. If the expectation of the offspring distribution is less than 1, we call the process subcritical; it dies out exponentially fast, so the tree is finite almost surely. If the expectation is 1, we call it critical. There is one trivial exceptional case: if each individual has exactly one child, we of course get one infinite line. We exclude that case. Otherwise the tree is finite in this case too; it almost surely dies out, although its expected size is infinite. That is, if T is the tree and |T| its size, then E|T| is infinite.
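A minimal sketch of the subcritical/critical/supercritical trichotomy, assuming Poisson offspring (our choice for illustration, not the speaker's). If f is the probability generating function of ξ, then q_m = P(the tree has died out by generation m) satisfies q_0 = 0 and q_{m+1} = f(q_m), and q_m increases to the extinction probability. The function names are hypothetical.

```python
import math


def extinction_probability(pgf, generations=10_000):
    """Return q_m = P(Z_m = 0) after `generations` steps of q <- f(q).

    Starting from q_0 = 0, q_m is the probability that the Galton-Watson
    process has died out by generation m; q_m increases to the extinction
    probability, the smallest fixed point of f in [0, 1].
    """
    q = 0.0
    for _ in range(generations):
        q = pgf(q)
    return q


def poisson_pgf(mean):
    """Generating function f(s) = E[s^xi] = exp(mean * (s - 1)) of Poisson(mean)."""
    return lambda s: math.exp(mean * (s - 1.0))


if __name__ == "__main__":
    for mean in (0.5, 1.0, 2.0):  # subcritical, critical, supercritical
        q = extinction_probability(poisson_pgf(mean))
        print(f"E[xi] = {mean}: P(extinct by generation 10000) = {q:.6f}")
```

In the critical case the iteration creeps up to 1 only like 1 - 2/(σ²m) (Kolmogorov's estimate for the survival probability), a first sign of the heavy tail of the critical tree, while for mean 2 it settles at the root of q = e^{2(q-1)}, about 0.203.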
And this is something we will use later, so it's good to know directly: in the critical case, the probability that the size of T is N is roughly N^{-3/2}, whereas in the subcritical case it decreases exponentially. There may be some more or less trivial parity problems; for example, for the complete binary tree (every node has 0 or 2 children) the size is always odd, so we consider only odd N. >>: [indiscernible]. >> Svante Janson: You're quite right. I need a second moment for that. And actually I'm going to assume that throughout what I say today: we assume that the second moment is finite. Then we have the supercritical case, when the expectation is greater than 1; the tree may still die out, but there is a positive probability of it being infinite. >>: Can you not use that part of the board anymore? >> Svante Janson: Huh? I'm not going to use the lower part. >>: The height of the board, above it... >>: [indiscernible]. >> Svante Janson: Where? Let's see. I'll move over here. Okay. Now I'm going to talk, as I said, about conditioned trees, which means we condition on the size. So let T_N be this Galton-Watson tree T conditioned on its size being exactly N. This is what we call a conditioned Galton-Watson tree, taking a little liberty in notation. Of course, you can condition Galton-Watson trees in other ways too, but this is what I'm going to talk about. One important basic fact is that one can change the distribution of ξ and still get the same conditioned tree. Let me write that too: if P(ξ' = k) = c a^k P(ξ = k) for some normalizing constant c and some other constant a > 0, then ξ and ξ' yield the same conditioned tree T_N, I mean with the same distribution. That is a simple calculation.
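The "simple calculation" can be checked numerically. A plane tree t with N vertices and out-degrees k_1, ..., k_N has P(T = t) = p_{k_1} ... p_{k_N}, and since k_1 + ... + k_N = N - 1, tilting multiplies this by c^N a^{N-1}, the same factor for every tree of size N, so the conditioned laws agree. The sketch below assumes a finite-support offspring law (hypothetical numbers) and also finds, by bisection on log a, the tilt that makes the law critical; all function names are ours.

```python
import math


def tilt(p, a):
    """Exponentially tilted law p'_k = c * a**k * p_k, with c making it sum to 1."""
    weights = [a**k * pk for k, pk in enumerate(p)]
    c = 1.0 / sum(weights)
    return [c * w for w in weights]


def mean(p):
    return sum(k * pk for k, pk in enumerate(p))


def critical_tilt(p, iterations=200):
    """Bisection on log(a) for the a > 0 whose tilted law has mean 1.

    The tilted mean is increasing in a. With finite support containing 0 and
    some k >= 2 it runs from 0 to max k, so a root exists (assumed to lie in
    [1e-6, 1e6] here). For a heavy-tailed subcritical law (p_k ~ k^(-alpha))
    no a > 1 is admissible, which is the exceptional case in the talk.
    """
    lo, hi = math.log(1e-6), math.log(1e6)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mean(tilt(p, math.exp(mid))) < 1.0:
            lo = mid
        else:
            hi = mid
    return math.exp(0.5 * (lo + hi))


def tree_probability(degrees, p):
    """P(T = t) for the plane tree t given by its list of out-degrees."""
    return math.prod(p[k] for k in degrees)


if __name__ == "__main__":
    p = [0.5, 0.2, 0.2, 0.1]  # hypothetical subcritical law, mean 0.9
    a = critical_tilt(p)
    p_crit = tilt(p, a)
    # Three plane trees with N = 5 vertices, out-degrees in depth-first order.
    trees = [[2, 1, 0, 1, 0], [3, 0, 0, 1, 0], [1, 1, 1, 1, 0]]
    ratios = [tree_probability(t, p_crit) / tree_probability(t, p) for t in trees]
    print(a, mean(p_crit), ratios)  # the three ratios coincide
```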
Doing that calculation, it means that you can more or less assume that we are in the critical case, which is nice; there are several advantages of being in the critical case. So, using this, we can assume E ξ = 1, so it's critical. Well, almost. To be precise: if we are in the supercritical case and take a less than 1 with the right normalizing constant c, this is a well-defined distribution, its mean is a continuous function of a, and so on, so we can just choose the right a to get a critical version. In the subcritical case that is not always true. We need to take a greater than 1, and what you want to do is look at the probability generating function. If it has an infinite radius of convergence, or a finite radius of convergence and tends to infinity at the boundary, there is no problem. But there are cases where, for example, the probabilities p_k decay like a power of k. Then you certainly cannot take any a greater than 1. If you're in the subcritical case with such a power-law tail [indiscernible], then you can't tilt the tree to a critical version. That's a case that I don't think anyone has really studied seriously. My co-authors on what I'm going to talk about today pointed it out when we met a few weeks ago as a case where one can wonder what happens, and I think we at least convinced ourselves that things are not so nice there; one cannot state such good general theorems. But I'm not going to worry about that today. So today I'll assume that we have reduced to the critical case, that we can do that, and that we have finite variance. One reason these trees are studied so much is that, by choosing different distributions of ξ, they include many of the standard random trees that appear in various applications. For example, if we take a uniformly random labeled tree on N vertices.
Or if we take binary trees on N vertices, uniform on labeled binary trees, or several other standard versions; you can read about that in, for example, David Aldous's papers on the continuum random tree, where he studies such trees a lot. I'll come back a little later to those papers. >>: A question? >> Svante Janson: Yes. >>: When the tree is conditioned on size N, does each node actually have the same probability distribution [indiscernible]? >> Svante Janson: Yes. >>: Like one by one? >> Svante Janson: Well, I don't think it matters whether you do it simultaneously or one by one. You can take them in any order, since they have the same distribution and are independent of each other. Now, there are several things you can study, but two things I will study are the height and the width. Let's call the height H(T_N). Let d(v) denote the distance from a vertex v to the root, its depth; so here is a vertex v, and this is its depth d(v). The height is the maximum of d(v) over all vertices. For the width at a given level, let W_L(T) be the number of vertices v with depth d(v) equal to L. So here, for example, at depth 2, W_2 is equal to 3. The width of the tree, W(T), is the maximum over L. Now, one thing that has been known for a long time is that both of these are of order √N. I want to be a bit more precise here and tell a little more about the background, although that means going back to what David Aldous did about 20 years ago, so it's a bit silly that I should tell him about it. But at least I can tell the others here. One can code a tree by walks in at least two different ways, which is also natural from the computer-science side: if you want to go through all the vertices, there are at least two standard ways of doing it.
You have the depth-first search, or depth-first walk, which means we start at the root, find its children, and take them one by one. Start here and take its children; so in that case we go here, and down, and when we reach a leaf we backtrack, and we go through all vertices like this. That's what we can call the depth-first walk. If we do this on a computer, it means that when we reach a vertex, we find all its children, put them on a stack and process them one by one, and when we have done them we backtrack, going down the stack. If we instead put them in a queue and take them in the order we found them, it means we start with the root and first go through all its children, then through their children in order, so we take the grandchildren, and so on. That's the breadth-first walk, or breadth-first search, as it is fair to call it in this case. You can code both of these as random walks. The depth-first walk does not have independent increments, but we can plot it like this: we start at zero and go on for about 2N steps, since we traverse each edge twice (precisely 2(N-1) steps). If we just plot our distance from the root, we get something like this; not exactly the tree we had, but something like it. What David proved was that the depth-first walk, if we scale it properly, converges to a Brownian excursion; let's call it e(t). For the breadth-first walk, if we count how many vertices are in the queue at each time, that is, how many vertices we have found but not yet processed, we will see that it is actually a random walk conditioned to return to zero at the right place. So for the breadth-first walk, let Q(t) be the number of vertices found and not yet processed at time t.
If we take a picture of that, it looks similar, but it's a bit different. In the depth-first walk all the increments are ±1. Here we can jump up by several vertices at the same time, but we always go down by exactly one, if you think about the way we do this: we find a bunch of children, but we always process one at a time, removing it from the queue. So this can be described as a sequence S̃_k, k = 0, ..., N, where S̃_k = 1 + Σ_{i=1}^{k} (ξ_i - 1), starting at 1, and the ξ_i are the i.i.d. numbers of children of the different nodes. So this is a random walk, but we have to condition on two things. It should end after N steps, but not before: we condition on S̃_k > 0 for all k < N and S̃_N = 0. In other words, the first return to 0 should be exactly at N. (It starts at 1, not 0, but we don't see that if N is large enough.) A random walk conditioned like this is a more standard object in probability theory, and it has been known for even longer that if we scale it, it also converges to a Brownian excursion. So we get two Brownian excursions for the same tree, but they are certainly different Brownian excursions; in some sense one of them corresponds to the local time of the other. If we want to find the height or the width, we can read them off, more or less, from both walks. The height, for example, is exactly the maximum of the depth-first walk. For the width, we have to look at the number of nodes at different heights, which corresponds to the time the depth-first walk spends at different heights, and that converges to the local time of this Brownian excursion. Let me continue for a while with colors. So, the depth-first walk: that one.
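A small sketch of the two encodings, assuming a tree stored as a list of children lists with vertex 0 as the root (the example tree and all names are hypothetical; it has W_2 = 3 like the picture). The depth-first walk has 2(N-1) steps of ±1 and its maximum is the height; the breadth-first queue length is the walk S̃_k, positive before step N and 0 at step N. Since the queue holds exactly level l at the moment level l-1 is finished, and never more than two consecutive levels, the width satisfies W ≤ max Q ≤ 2W, which is the "roughly the maximum" relation.

```python
from collections import deque


def contour_walk(children, root=0):
    """Depth-first walk: the current depth after each of the 2(N-1) steps
    of a traversal that goes down and back up every edge once."""
    walk = [0]
    stack = [(root, 0, iter(children[root]))]
    while stack:
        _, depth, pending = stack[-1]
        child = next(pending, None)
        if child is None:          # all children done: backtrack one level
            stack.pop()
            if stack:
                walk.append(depth - 1)
        else:                      # step down to the next unvisited child
            walk.append(depth + 1)
            stack.append((child, depth + 1, iter(children[child])))
    return walk


def queue_walk(children, root=0):
    """Breadth-first walk: queue length after each processed vertex.

    Q_0 = 1 and Q_k = Q_{k-1} + xi_k - 1: jumps up by any amount but goes
    down by exactly 1; Q_k > 0 for k < N and Q_N = 0.
    """
    queue = deque([root])
    walk = [1]
    while queue:
        node = queue.popleft()
        queue.extend(children[node])
        walk.append(len(queue))
    return walk


def level_sizes(children, root=0):
    """[W_0, W_1, ..., W_H]: number of vertices at each depth."""
    sizes, level = [], [root]
    while level:
        sizes.append(len(level))
        level = [c for v in level for c in children[v]]
    return sizes


if __name__ == "__main__":
    tree = [[1, 2, 3], [4, 5], [], [6], [], [], []]  # hypothetical, N = 7
    print(contour_walk(tree))
    print(queue_walk(tree))
    print(level_sizes(tree))
```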
This leads to the following: take the height H(T_N) and the width W(T_N) jointly, and scale both by dividing by √N. (I have the exact scaling written down somewhere.) One important thing: we always get the same limit, and only one parameter enters the scaling, the variance of ξ, and it enters in opposite directions. The height will be proportional to 1/σ, where σ is the standard deviation, and the width will be proportional to σ instead. For the width that is not surprising: the breadth-first walk is a random walk with variance σ², so σ obviously comes in as the right normalizing factor, and the maximum of that walk is more or less the width. That the height goes in the opposite direction is not surprising either: if σ is large, the width is large, and if the width is large you expect the height to be smaller, and conversely, since the total area is fixed to be N. So the scaled height converges to a constant times the maximum of this Brownian excursion, and the scaled width to a constant times the maximum of its local time, except that I've forgotten the correct scale factors; I think it's something like σ for one and maybe 2/σ for the other. I just left the paper where I have this written down in my office. These are old results in any case, and you can check the constants in any of the papers. For the breadth-first walk you get a similar thing; you can also read off both the height and the width. The width is not exact, but you can see that it's roughly the maximum of this walk, so in this scaling it converges to σ times the maximum of the Brownian excursion, again perhaps with some constant [indiscernible] that I've forgotten.
And the height: the height is really the number of generations we pass through in the breadth-first walk. In the limit, the excursion e(t) tells us how many nodes we have in each generation, so 1/e(t) tells us how fast we proceed, that is, how many generations we move per vertex. Taking the total, we get the integral of dt/e(t), with a factor 1/σ. So the interesting thing here, which I found amusing, or at least interesting, is that we can express the joint limiting distribution of height and width in terms of a Brownian excursion in two completely different ways. Of course, we know the two limits are the same, so this gives some interesting identities for distributions of Brownian excursions, which I think were found before someone realized this connection. That is the background. One more thing while I'm on this: note that a maximum appears in two different places here. So if we do the right scaling, we have the same limit in distribution for the height as for the width, and that distribution is known explicitly; one can write down nice generating functions and so on. But even if we scale things so that they have the same marginal distributions (the distribution of the maximum of a Brownian excursion, if you take σ = √2, in case my constants happen to be right), the joint distribution is not symmetric. I was able to show that in 2007: it is a two-dimensional distribution with the same marginals, but I could compute some moments, at least numerically, and the mixed third moments are not the same. So this is not symmetric; there are limits to the symmetry involved here.
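The height-from-breadth-first-walk heuristic has an exact discrete counterpart: processing a vertex v advances the walk by 1/W_{d(v)} generations, and summing over all vertices gives exactly H + 1, the number of levels. Replacing W_{d(v)} by σ√N e(t) at breadth-first time t = k/N turns the sum into (√N/σ) ∫_0^1 dt/e(t), the integral mentioned above (that last step is only a heuristic). The sketch below checks the exact identity with rational arithmetic; the random recursive tree is just a convenient, hypothetical test input, not a Galton-Watson tree, since the identity holds for every tree.

```python
import random
from collections import Counter
from fractions import Fraction


def depths(children, root=0):
    """Depth d(v) of every vertex, found by breadth-first search."""
    d = {root: 0}
    order = [root]
    for v in order:  # `order` grows while we iterate, acting as a BFS queue
        for c in children[v]:
            d[c] = d[v] + 1
            order.append(c)
    return d


def generations_from_bfs(children, root=0):
    """Sum over vertices of 1 / W_{d(v)}: each processed vertex advances the
    breadth-first walk by 1 / (size of its own generation)."""
    d = depths(children, root)
    w = Counter(d.values())
    return sum(Fraction(1, w[d[v]]) for v in d)


def random_recursive_tree(n, rng):
    """Hypothetical test input: vertex i attaches to a uniform earlier vertex."""
    children = [[] for _ in range(n)]
    for i in range(1, n):
        children[rng.randrange(i)].append(i)
    return children


if __name__ == "__main__":
    tree = random_recursive_tree(100, random.Random(0))
    print(generations_from_bfs(tree), max(depths(tree).values()) + 1)
```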
But now I want to proceed to some more recent things. I'm interested not just in the limiting distribution; I also want good bounds for moments and for tails. In particular, I want to know the probability that these quantities are substantially larger than √N, that is, that the ratio is big, and to find good estimates for that. This is joint work with Luc Devroye and Louigi Addario-Berry, both in Montreal. It is not yet written up at all, and not yet completely proven; I'll comment at the end on what is still open, which we hope we can prove. But I can at least begin with what is proven. Let me begin with the width at a fixed level, W_L(T_N); this again is not really new, it is certainly quite old. For fixed L, it is easy to see that E W_L(T_N) converges to exactly 1 + Lσ² as N tends to infinity, where σ² is the variance of ξ (I should have said that earlier, or maybe I did). So at the beginning these grow linearly with L. Of course that can't go on forever: typically it goes on until L is of order √N, where the width is of order √N, and then it dies off again. Note that if we take the Galton-Watson tree without conditioning, since it's critical, the expected number of individuals in each generation is exactly one, so the conditioning changes this a lot. I have shown in an earlier paper that we actually have a uniform bound here, not just the limit. Call it a theorem: E W_L(T_N) ≤ C L for L > 0. The constant C, like all other constants I mention, depends on the distribution of ξ but not on N or L or whatever other integers I'm talking about. This is good for small fixed L. But now let's move on to growing L, especially L of order √N.
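The constant 1 + Lσ² can be read off the size-biased (Kesten) tree that the speaker describes near the end of the talk, assuming the standard fact that T_N converges locally to it: the spine contributes 1 vertex at level L, and each of the L spine vertices above level L has on average E[ξ̂ - 1] = E ξ² - 1 = σ² mortal children, each with one expected descendant per generation. The Monte Carlo sketch below assumes Poisson(1) offspring (so σ² = 1 and the prediction is 1 + L), for which the size-biased law is 1 + Poisson(1); the names are hypothetical.

```python
import math
import random


def poisson1(rng):
    """Poisson(1) sample by Knuth's product-of-uniforms method."""
    threshold = math.exp(-1.0)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def kesten_level_size(level, rng):
    """Number of vertices at depth `level` in the size-biased (Kesten) tree
    with Poisson(1) offspring.

    A spine vertex has a size-biased number of children; for Poisson(1) that
    is 1 + Poisson(1): one immortal spine child plus Poisson(1) mortal ones,
    each starting an ordinary critical Galton-Watson tree.
    """
    count = 1                                   # the spine vertex at depth `level`
    for depth in range(level):                  # spine vertex at this depth
        generation = poisson1(rng)              # its mortal children, at depth + 1
        for _ in range(level - depth - 1):      # grow them down to depth `level`
            generation = sum(poisson1(rng) for _ in range(generation))
        count += generation
    return count


def mean_level_size(level, samples=10_000, seed=0):
    """Monte Carlo estimate of E W_level in the Kesten tree."""
    rng = random.Random(seed)
    return sum(kesten_level_size(level, rng) for _ in range(samples)) / samples


if __name__ == "__main__":
    for L in range(6):
        print(L, mean_level_size(L), 1 + L)  # estimate vs. 1 + L * sigma^2
```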
One theorem we can state here (let me write down several versions) is the following. First, if we take not just W_L but the maximum of all of them, the width, then E W(T_N) = O(√N). This seems to be quite a fundamental result. We have looked at convergence in distribution, and then it's of course very natural to expect that the mean of W(T_N)/√N converges to the mean of the limit. As far as I know, this has been proved if you assume a bit more, at least exponential moments, which is what people typically assume when they work with generating functions. As you know, one school of people doing probabilistic combinatorics works with generating functions, and then it's very tempting and natural to assume that they are analytic in a region outside the unit disk, which is equivalent to saying that ξ has some finite exponential moment. I'm not so sure about the history and the literature, but I think it has been proved in that case, though not in full generality. Do you know anything more about that? >>: I think in full generality. >> Svante Janson: But let me go on and say that we also have a better result: we can take higher moments too. The Kth moment is exactly what you expect, E W(T_N)^K = O(N^{K/2}), for any fixed K. And here is something I find interesting. We can do the same thing for a fixed level (maybe I should have done that earlier, but as I said, this is work in progress). If we take a fixed L and the Kth moment, then E W_L(T_N)^K = O(L^K), provided ξ has a finite (K+1)th moment, and in this case the limit is some polynomial in L of degree K. But this assumption really is essential; it is an if-and-only-if. This is quite easy to see, because here we only look at the finite level L, so we only look at a finite part of the tree, and you may just as well take the Kth moment when L is equal to 1, that is, look at the number of children of the root, and then it's not difficult to show this.
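To see the √N scaling of both the height and the width, here is a Monte Carlo sketch for Poisson(1) offspring (σ = 1), where T_N is a uniformly random rooted labeled tree. It assumes two standard facts: N i.i.d. Poisson(1) values conditioned to sum to N - 1 are multinomial(N - 1; 1/N, ..., 1/N), and by the cycle lemma exactly one cyclic shift of such a sequence is a valid breadth-first degree sequence. For comparison, and only as we recall the constants from the literature (with e the standard Brownian excursion): H/√N → (2/σ) max e and W/√N → σ max e, with E max e = √(π/2), so the two means should approach about √(2π) ≈ 2.51 and √(π/2) ≈ 1.25, up to finite-N bias. This is consistent with the speaker's remark that the marginals agree when σ = √2. All names are hypothetical.

```python
import math
import random


def conditioned_poisson_tree(n, rng):
    """Out-degrees, in breadth-first order, of a Poisson(1) Galton-Watson tree
    conditioned to have exactly n vertices.

    1. n i.i.d. Poisson(1) values conditioned on summing to n - 1 are
       multinomial(n - 1; 1/n, ..., 1/n): throw n - 1 balls into n boxes.
    2. Cycle lemma: exactly one cyclic shift keeps 1 + sum_{i<=k}(xi_i - 1)
       positive for k < n; it starts just after the first minimum of the
       partial sums.
    """
    degrees = [0] * n
    for _ in range(n - 1):
        degrees[rng.randrange(n)] += 1
    s, lowest, argmin = 0, None, 0
    for i, k in enumerate(degrees):
        s += k - 1
        if lowest is None or s < lowest:
            lowest, argmin = s, i
    start = (argmin + 1) % n
    return degrees[start:] + degrees[:start]


def level_sizes(bfs_degrees):
    """[W_0, ..., W_H] from out-degrees listed in breadth-first order."""
    sizes, pos, gen = [], 0, 1
    while gen > 0:
        sizes.append(gen)
        nxt = sum(bfs_degrees[pos:pos + gen])
        pos += gen
        gen = nxt
    return sizes


def scaled_height_and_width(n=1000, samples=200, seed=0):
    """Monte Carlo means of H(T_n)/sqrt(n) and W(T_n)/sqrt(n)."""
    rng = random.Random(seed)
    h_total = w_total = 0.0
    for _ in range(samples):
        sizes = level_sizes(conditioned_poisson_tree(n, rng))
        h_total += (len(sizes) - 1) / math.sqrt(n)
        w_total += max(sizes) / math.sqrt(n)
    return h_total / samples, w_total / samples


if __name__ == "__main__":
    h, w = scaled_height_and_width()
    print(h, math.sqrt(2 * math.pi))
    print(w, math.sqrt(math.pi / 2))
```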
And it's not so surprising, I think, that if you want bounded moments here, you need bounded moments of the offspring, of the number of children of the root, say. What is interesting is that for the global width you do not need such an assumption. Of course, one thing is that the scaling is different: for a fixed level I don't scale at all, while for the width we divide by √N. But even so I find it a bit surprising. When I started working on these tail estimates, I was looking at the height (I'll come to that later), with the same problem for the height. To begin with, the scaling tells us that if σ is large, meaning a large variation in the number of children, that will not increase the height; it will keep the height down. That's not surprising: if you occasionally have very many children, that will tend to make the generations wider on average. So the width will be large, but that just decreases the height: you run out of individuals and have to stop. For the same reason, I thought that you don't need any higher moment conditions to get good estimates for the height, but that one would need them for the width. That turns out to be wrong. Let me write down the corresponding exponential bound: P(W(T_N) ≥ K) ≤ C e^{-cK²/N}, uniformly in K and N; this holds for all K and N. >>: This is just a consequence of the [indiscernible]. >> Svante Janson: No, it's the other way around: this is stronger, and the moment bound is a consequence of it. It says that if you divide the width by √N, it has tails that decay exponentially fast, like e^{-x²}. >>: If you can get the big O uniform in K. >> Svante Janson: Okay. But the big O there is not uniform in K; maybe I was a bit sloppy.
I stated that for each fixed K, but this tail bound is uniform in all K and N. And the reason... >>: Excuse me, so σ comes in the constant? >> Svante Janson: As I said, the constants depend not just on σ but on the whole distribution. >>: In the exponential bound, how does σ come in? >> Svante Janson: I just claim that this is true for some constants depending on the distribution, including σ. I don't claim that it is uniform over all distributions of ξ. Certainly σ should enter as a scaling factor, but other features of the distribution, like the tails of ξ, may also affect the constant. I have not investigated that at all, and I don't think I intend to, but maybe someone else will. We know that the width typically grows like σ: you have a factor σ in the mean, for example. So the first thing you would expect is a constant divided by σ² in the exponent. But as I said, I don't think even that is uniform. After proving this, I realized why we can get such a bound without any higher (third) moment conditions on the offspring distribution. Even if, say, in the first or second generation we get an enormous number of children, which happens with a not too small probability, we still have to finish the tree so that its total size is N. If we have very many individuals there and look at the breadth-first walk, that means we reach some quite high level and have to descend to 0 in the remaining, predetermined time. So the remaining sum of independent random variables has to be much smaller than its mean. And note that when we go down, we always go down by at most 1. So in that direction we are not hurt by the fact that the distribution may have heavy tails.
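One way to make "going down by at most 1" quantitative, offered as our reading of the straightforward estimates and not necessarily the argument in the paper: since ξ ≥ 0 and e^{-x} ≤ 1 - x + x²/2 for x ≥ 0, we get E e^{-λξ} ≤ exp(-λ + λ² E ξ²/2) when E ξ = 1, and a Chernoff bound then gives P(ξ_1 + ... + ξ_m ≤ m - k) ≤ exp(-k²/(2m E ξ²)). Only the second moment enters; the upper tail of ξ never does. The sketch checks this against the exact Poisson(m) lower tail (Poisson(1) offspring, so E ξ² = 2). It covers a fixed number m of steps only; the stopping-time and conditioning parts of the argument are not shown, and the names are hypothetical.

```python
import math


def poisson_cdf(x, m):
    """P(Poisson(m) <= x), summed in log space to avoid overflow."""
    if x < 0:
        return 0.0
    logs = [-m + k * math.log(m) - math.lgamma(k + 1) for k in range(x + 1)]
    top = max(logs)
    return math.exp(top) * sum(math.exp(t - top) for t in logs)


def descent_bound(k, m, second_moment):
    """Chernoff bound exp(-k^2 / (2 m E[xi^2])) on P(xi_1 + ... + xi_m <= m - k)
    for i.i.d. xi_i >= 0 with mean 1, i.e. on the walk dropping by k in m steps."""
    return math.exp(-k * k / (2.0 * m * second_moment))


if __name__ == "__main__":
    m = 1000  # number of remaining breadth-first steps
    for k in (0, 50, 100, 200):
        # sum of m i.i.d. Poisson(1) values is Poisson(m); E[xi^2] = 2
        print(k, poisson_cdf(m - k, m), descent_bound(k, m, 2.0))
```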
So one easily gets the same bounds as if we had just sums of bounded random variables, and it's easy to see that you get this Gaussian behavior, not just as a limit but as a bound for finite N and K. That, as I said, translates into this result for the width. I don't want to go into detail, but that's exactly how we proved it. To be more precise: if K is large, to estimate this probability we look at the stopping time when the random walk first hits height K. From that stopping time we know we have to descend to 0, because of the conditioning, and the result follows by straightforward estimates for sums of independent random variables. But I won't take the time to write down the details. >>: What about the other tail? >> Svante Janson: For small K? I've not thought about that. >>: A small constant times √N. >> Svante Janson: No, I have not. That's an interesting question too. I know that these limit variables have all... >>: [indiscernible]. >> Svante Janson: Yes, all moments are finite, and also the negative moments: if you take one over this limit variable, it has all moments finite, which says something about the probability of a very small value, but I don't know any bound. >>: Polynomial in K? >> Svante Janson: I mean the probability of being less than x√N. In the limit it decreases faster than any polynomial in x. >>: Say again? >> Svante Janson: It decreases faster than any polynomial in x as x goes to 0. >>: In the limit. >> Svante Janson: Yeah, in the limit. But I have not thought about the finite case, so that's a good open problem. >>: But if you get a bound for the height, wouldn't that imply... if you get a similar bound for the height... >> Svante Janson: That's right. Yes, you're right. If we take the global width, the maximum, you're quite right.
Because if the width is less than ε√N, the height has to be at least about √N/ε, since N = Σ_L W_L ≤ (H + 1) W, and that gives a strong bound, which I'll show you soon. But one can ask the same thing for a fixed level: say L is a constant times √N, and ask whether again there is a very small probability of W_L being less than a small constant times √N. I don't know. Instead, let me proceed and say that one can combine these results and also show things like the following, which follows easily by combining them but looks nice, I think: for the width at height L, with the factor L from before and the exponential decay from the tail bound, E W_L(T_N) ≤ C L e^{-cL²/N}. Well, okay, for that I have to combine with a result for the height. But this at least is what we can get. And now, as for the height, let me... that is where I... >>: You wrote some version of the size [indiscernible]. >> Svante Janson: I won't go into that; I know I only have a few minutes left. But the tool here is the size-biased Galton-Watson tree, from a paper by Yuval Peres, Robin Pemantle and Russ Lyons. What they did was look at an infinite version of the tree. One way to describe it is that we start with an infinite spine like this and attach things to it. The way I think of it, we have a line of immortal individuals. Each immortal individual has exactly one immortal child, and its total number of children is given not by the original distribution but by the size-biased distribution; all its other children are mortal and have children in the usual way. So we take this spine and attach independent Galton-Watson trees all the way down. It turns out that if one looks at that tree restricted to some height, just this portion here, what we get is the same as taking the Galton-Watson tree and size-biasing it by the number of individuals in this generation.
That is what we do to look at the distribution of the number of individuals in a given generation, generation L (better L, not N). We do this size-biasing up to generation L, but after that no individuals are immortal. So we don't have an infinite spine, only a spine of length L, and below it we also attach ordinary Galton-Watson trees. To get the expected width at this level, the thing I wrote at the bottom of the blackboard, one looks at this tree, and since we condition on the size being N, what is essentially needed is a calculation of the probability that this finite version of the size-biased Galton-Watson tree has size exactly N, compared with the probability that the unconditioned tree has size N, which, as I said, is of order N^{-3/2}. I'll skip the details of the calculation, but doing it with this tree we get this result for the width. Now, to go to the height, we use this result as follows. The probability that the height is at least K is the same as the probability that the width at level K is at least 1. So by Markov's inequality, P(H(T_N) ≥ K) ≤ E W_K(T_N) ≤ C K e^{-cK²/N}. If K is like √N, this is not good, because the factor K gives you √N. If K is a bit larger, at least √N log N, then we can kill that factor by changing the constant in the exponent. So we think (let's call it a conjecture, since we've tried to prove it but there is still a gap in the proof) that P(H(T_N) ≥ K) ≤ C e^{-cK²/N} for all K. This is proved for K greater than, say, √N log N; that is an immediate consequence of what I've written here. I think I've now run out of time, so I'll end with this.
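The denominator in that calculation, P(|T| = N), can be checked exactly in one case. Assuming Poisson(1) offspring (our choice; σ = 1), the total size of the unconditioned tree has the Borel distribution P(|T| = N) = e^{-N} N^{N-1}/N!, and Stirling's formula gives the critical local limit N^{-3/2}/(σ√(2π)) that the talk uses. The function names are hypothetical.

```python
import math


def borel_pmf(n):
    """P(|T| = n) for a Galton-Watson tree with Poisson(1) offspring:
    e^{-n} n^{n-1} / n! (the Borel distribution), computed in log space."""
    return math.exp(-n + (n - 1) * math.log(n) - math.lgamma(n + 1))


def local_limit(n, sigma=1.0):
    """Leading-order approximation n^{-3/2} / (sigma * sqrt(2 pi))."""
    return n ** -1.5 / (sigma * math.sqrt(2.0 * math.pi))


if __name__ == "__main__":
    for n in (10, 100, 10_000):
        # the ratio tends to 1, roughly like 1 - 1/(12 n) by Stirling's formula
        print(n, borel_pmf(n), borel_pmf(n) / local_limit(n))
```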
As I said, the idea, both intuitively and in the way we want to prove it, is that if there is one vertex at height larger than K, then probably there are lots of them, say at least a small constant times √N of them, not at that depth but at a lower level, say level K/2. Then one could use this bound. We have good reason to believe that: given the conditioning, the other vertices ought to be rather uniformly distributed, but we haven't been able to get the conditioning right yet. So, as I said, this uses basic ideas from at least two people in the audience here, but I haven't been able to go into detail about exactly what they did or what we have done. I hope I've conveyed some of the main ideas. If you want to know further details of the proof, we can discuss them later. Okay. [applause]. >> Yuval Peres: Any more questions or comments? >>: I guess I think of the Brownian results as being somewhat [indiscernible]: there are lots of reasonable models of uniform distributions on some class of trees of size N for which this works. I think it was recently done for unlabeled trees, which of course are technically much harder to work with. Here you're tied very closely to the Galton-Watson model. Do you think there's any chance of doing this sort of thing more robustly? >> Svante Janson: Well, I'm not sure. Of course, it seems natural to ask the question, and presumably things are true in greater generality, but then comes the question of how to do it, and I don't know how. Galton-Watson trees are easy to handle technically; that's another advantage of them. And, again, one thing we do here, as I've said, is to work under minimal moment conditions.
The result here, I should say, was proved by Flajolet and Odlyzko [phonetic] a long time ago, assuming exponential moments, even if they didn't state it that way. >>: Which thing? >> Svante Janson: This thing here, yes. If I remember correctly, some bound of that type is in one of their papers. But they used analytic methods and assumed exponential moments. As for going to other trees, as I said, I really don't know, so I think I'd better leave that as an open problem. >>: So there you have a K² over N. >> Svante Janson: Yes. >>: And you said you can use it when K is bigger than √N log N. >> Svante Janson: Yes, because then the exponent is bigger than log N, so if I move this factor K over there, it doesn't really matter; this is really the same as that. >>: I would think √(log N) would be enough. Why not? >> Svante Janson: Okay, I was just keeping it simple. But you're quite right: a large constant times √(N log N) is good enough. >>: I can breathe again. >> Svante Janson: Yes. So if any of you has a good idea for proving this, I'm here for another week. >>: [indiscernible] [laughter]. >> Yuval Peres: Thanks again. [applause]