18812 >> Yuval Peres: Today we're delighted to have Robert...

advertisement

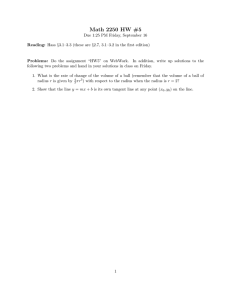

>> Yuval Peres: Today we're delighted to have Robert Masson from UBC, who will tell us about random walks on the two-dimensional uniform spanning tree. >> Robert Masson: Thank you, Yuval, and thank you very much for inviting me to Microsoft this week. It's been a pleasure so far, and I'm sure it will be a pleasure tomorrow as well. Okay. So as Yuval mentioned, I'll be speaking about random walks on the 2-D uniform spanning tree, and this is joint work with Martin Barlow from UBC. I think a lot of you, perhaps all of you, know what the uniform spanning tree is, but I don't want to lose anybody, so I'll review it very quickly. A spanning tree of a graph G is a subgraph of G that is a tree and contains every vertex of G. So here's a graph, and here's a spanning tree. If your graph is finite, it's easy to define the uniform spanning tree: it's just the probability measure that gives equal weight to every spanning tree of the graph. Since a finite graph has finitely many spanning trees, this is well defined. What about infinite graphs? Pemantle showed (and this is more general, not only for Z^d) that if you look at the uniform spanning tree on finite subsets that increase up to Z^d, you can take a weak limit, and the limit is well defined. In general this weak limit is called the uniform spanning forest, because it's not necessarily connected. In dimensions 5 and higher it's not connected; in dimensions less than or equal to 4 it is connected, so it makes sense to call it the uniform spanning tree. For the rest of the talk we're going to focus on the two-dimensional case. We'll let U be the uniform spanning tree on Z^2. >>: [indiscernible] high dimensions.
>> Robert Masson: One way to see it is essentially that if you take two random walks in dimensions 5 and higher, they won't intersect, essentially. Sorry. -- >>: Take the uniform spanning tree in a box and look at two of the vertices. In dimension 5 and higher it turns out that the path between them gets longer and longer, typically. So in the limit it could -- >>: For finite size. >>: For finite size it's connected. But you have to take the limit. >> Robert Masson: Okay. So here's a simulation of the uniform spanning tree. It's actually a window, which is why it doesn't look connected: this is the uniform spanning tree on the entire plane, and we're just looking at, I think, a 50-by-50 window of it. There's a strong connection between uniform spanning trees and loop-erased random walks. I haven't defined loop-erased random walk yet; I'll give a more precise definition in a second, but it's essentially what you think it is. Given any two vertices in the uniform spanning tree, there's a unique path between them, and we'll let gamma_{VW} be this unique path. Pemantle showed that the distribution of this path is the same as that of running a random walk from V to W and then loop-erasing it. This is true on any finite graph, and it's also true in Z^2, because the walk is recurrent. More generally, there are algorithms, such as the Aldous-Broder algorithm, that generate spanning trees from random walks. The algorithm we'll be most interested in is Wilson's algorithm, which constructs uniform spanning trees by running loop-erased random walks. Instead of writing everything down, I'll describe it with a simple example. We want to construct the uniform spanning tree on this graph. First of all, we just take a point, whichever one we want.
This is our first tree: it consists of a single vertex. For the next step we choose any other vertex and run a loop-erased random walk from it to the first vertex: first we run a random walk from this vertex until it hits that one, and then we loop-erase the path. I'm not going to show you the random walk, but this is, for example, what you might get after loop erasing. For the next step, take any of the four remaining vertices. You can actually choose it depending on what has already happened, which is interesting. So let's say we chose that one. Again, we run a loop-erased random walk until we hit the tree we've already constructed. Finally, we're left with the last one: we pick it, run a loop-erased random walk, and get something like that. This of course gives us a spanning tree, and you can show that all spanning trees are equally likely, so we get the uniform spanning tree. Let me give a more precise definition of loop-erased random walk, because I need to introduce some notation. Again, we're only interested in Z^2, though of course you can define loop-erased random walk on essentially any graph. Let S be a simple random walk started at z, and let D be a subdomain of Z^2; we'll usually take z to be the origin, but not necessarily. Let sigma_D be the first exit time of D. We run the random walk up to the first time it exits D, then we erase its loops chronologically, and we denote what we get by L(S[0, sigma_D]). So L is just the loop erasure of the random walk from time zero up to sigma_D. Okay. Here's another simulation, from zero to the circle of radius 100. >>: Seems like it's always the same picture. [laughter] It's almost like this walk is deterministic.
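The small example above is Wilson's algorithm. Below is a minimal Python sketch of it, together with chronological loop erasure; it is an illustration, not code from the talk. The names `loop_erase`, `wilson`, and `grid_graph` are hypothetical, and the sketch assumes a finite connected graph given as adjacency lists (so it runs on a box in Z^2, not on all of Z^2).

```python
import random


def loop_erase(path):
    """Chronological loop erasure: walk along `path` and, whenever a vertex is
    revisited, erase the loop formed since its earlier visit."""
    erased = []
    position = {}  # vertex -> index in `erased`
    for v in path:
        if v in position:
            cut = position[v]
            for w in erased[cut + 1:]:
                del position[w]
            del erased[cut + 1:]
        else:
            position[v] = len(erased)
            erased.append(v)
    return erased


def wilson(adj, root, rng):
    """Uniform spanning tree of a finite connected graph via Wilson's algorithm.
    `adj` maps each vertex to a list of neighbours; the result is a parent map
    pointing every non-root vertex one step towards `root`."""
    in_tree = {root}
    parent = {}
    for start in adj:  # the order of starting vertices may be arbitrary
        if start in in_tree:
            continue
        walk = [start]
        while walk[-1] not in in_tree:  # random walk until it hits the tree
            walk.append(rng.choice(adj[walk[-1]]))
        branch = loop_erase(walk)
        for u, v in zip(branch, branch[1:]):  # graft the loop-erased branch on
            parent[u] = v
            in_tree.add(u)
    return parent


def grid_graph(n):
    """Adjacency lists of the n-by-n box in Z^2."""
    adj = {}
    for x in range(n):
        for y in range(n):
            adj[(x, y)] = [(x + dx, y + dy)
                           for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
                           if 0 <= x + dx < n and 0 <= y + dy < n]
    return adj
```

For example, `wilson(grid_graph(50), (0, 0), random.Random(1))` samples a uniform spanning tree of a 50-by-50 box, like the window shown in the talk (though a window of the whole-plane tree is not the same object as the tree of a box).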
>> Robert Masson: What's interesting, I noticed from simulations, is that once it seems to pick a direction, it just goes in that direction. It doesn't really mess around too much; once it's decided where it wants to go, it goes there pretty quickly. It would be interesting to quantify this precisely and prove something like that. But when you draw these pictures, you notice it really always kind of stays on one little part of the circle, more or less. >>: Prove the opposite. >> Robert Masson: Well -- >>: Once it gets a direction, if you wait long enough it will change its mind and go in the other direction. >> Robert Masson: How long do you have to wait, I guess? Okay. So this is the notation I really needed to introduce. We'll let M_N be the number of steps of the loop erasure of the random walk from zero to sigma_N, where sigma_N is the first time the walk leaves the ball of radius N. The major theorem, first proved by Kenyon in 2000 (and in my thesis I proved the same thing using a different technique that is valid for any discrete lattice in the plane), is that the expected number of steps of loop-erased random walk from zero to the circle of radius N grows like N^{5/4}. The precise statement is that E[M_N] and N^{5/4} are logarithmically asymptotic, that is, log E[M_N] / log N tends to 5/4. An interesting open problem that people have certainly thought about, and on which I don't think much progress has been made, is to show that the expected number of steps is actually comparable to N^{5/4}. In the logarithmically asymptotic statement, E[M_N] could in principle be N^{5/4} times some log factors, but everyone believes there shouldn't be any log factors and that the two should be comparable. >>: What would the value be if you didn't loop-erase the random walk? I mean -- >> Robert Masson: Just a random walk? That would be N^2. >>: Okay.
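To make M_N concrete, here is a small Monte Carlo sketch (hypothetical helper names, not from the talk) that runs simple random walk on Z^2 until it leaves the Euclidean ball of radius N, loop-erases it, and records both the walk length (order N^2) and M_N. A log-log slope over a few radii gives a rough exponent estimate; at the small radii a laptop can handle, the estimate for M_N is only loosely consistent with 5/4, since the theorem is asymptotic and only up to sub-power factors.

```python
import math
import random


def loop_erase(path):
    """Chronological loop erasure of a finite path."""
    erased, position = [], {}
    for v in path:
        if v in position:
            cut = position[v]
            for w in erased[cut + 1:]:
                del position[w]
            del erased[cut + 1:]
        else:
            position[v] = len(erased)
            erased.append(v)
    return erased


def lerw_exit_length(n, rng):
    """Run simple random walk on Z^2 from the origin up to sigma_n, the first
    time |S| >= n. Return (number of walk steps, M_n)."""
    x = y = 0
    path = [(0, 0)]
    while x * x + y * y < n * n:
        dx, dy = rng.choice(((1, 0), (-1, 0), (0, 1), (0, -1)))
        x, y = x + dx, y + dy
        path.append((x, y))
    return len(path) - 1, len(loop_erase(path)) - 1


def mean_lengths(radii, trials, seed=0):
    """Monte Carlo estimates of (mean walk length, E[M_n]) for each radius n."""
    rng = random.Random(seed)
    out = {}
    for n in radii:
        samples = [lerw_exit_length(n, rng) for _ in range(trials)]
        out[n] = (sum(s[0] for s in samples) / trials,
                  sum(s[1] for s in samples) / trials)
    return out


def loglog_slope(xs, ys):
    """Least-squares slope of log(ys) against log(xs)."""
    lx = [math.log(v) for v in xs]
    ly = [math.log(v) for v in ys]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    return (sum((a - mx) * (b - my) for a, b in zip(lx, ly))
            / sum((a - mx) ** 2 for a in lx))
```

A typical use is `est = mean_lengths([8, 16, 32, 64], 200)` followed by `loglog_slope(radii, [est[n][1] for n in radii])`. Note that M_N is always at least N, because a nearest-neighbour path needs at least N steps to reach distance N.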
>> Robert Masson: So for random walk from zero to the circle, that would be like N^2, and that's known very precisely. I should mention this, though I didn't prepare any slides for it: this is a two-dimensional result, that it grows like N^{5/4}. In three dimensions the existence of this exponent is not known, but we believe it exists. I think David Wilson has done many simulations of this, and he could tell you the value that he believes, or that the simulations tell him. There's no reason to expect the three-dimensional exponent to be a rational number, or any sort of nice number; I think he mentioned 1.62 something. In dimensions 4 and higher it will be 2, the same as for random walk. Essentially, in high enough dimensions you're not creating very many loops, and you get the same growth as for a random walk. Okay. This next notation is a reminder to write this down. I'm going to use capital G and little g throughout: capital G(N) is E[M_N], and little g is its inverse function. I'm going to state a bunch of results saying that some quantities are comparable to something involving capital G and little g. If we knew that these were actually comparable to N^{5/4} and N^{4/5}, I wouldn't have to bother with this notation, but unfortunately there might be some hidden log terms, so I'm going to use it. Whenever I use it, just think of capital G(N) as N^{5/4} and little g(N) as N^{4/5}. Now let's get to the main part of the talk, random walks on the uniform spanning tree, which needs one last bit of notation. The first piece I think I've already introduced.
gamma_{XY} is the unique path between X and Y in the uniform spanning tree or, equivalently, you can think of it as a loop-erased random walk from X to Y. We'll let d(X, Y) be the intrinsic metric: the distance between X and Y is the length of the path between them. B_d(X, R) is the ball of radius R in that metric, and |B_d(X, R)| is its cardinality, the number of points in that ball; I'll call it the "volume" of B_d(X, R). Without the subscript, B(X, R) is the usual Euclidean ball. All right, here are a few simulations. This is B_d(0, 40). I didn't mark the origin, but it's exactly in the center of the picture, and the red vertices are the ones at distance less than or equal to 40 from it in this instance of the uniform spanning tree. This is the nice picture, in some sense what one would expect or hope for: a Euclidean ball that's deformed a bit, but not too far from a Euclidean ball. Some of the other simulations are not so nice. This one is a somewhat stranger set. And this one is also strange, in the sense that the origin is somewhere here and all the points at distance at most 40 seem to lie on one side. A major part of this talk is about what these sets look like, or should look like. Unfortunately, at least with my weak laptop, it's computationally pretty expensive to generate very large instances of these things. >>: Look like a connector. >> Robert Masson: Yeah, it's pretty slow. It's not even very good for browsing the Internet. So generating huge uniform spanning trees is not -- it's not very happy about that.
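On one fixed sample of the tree, the intrinsic ball B_d(x, R) is just a breadth-first search, and the first exit time of a simple random walk from that ball (the time the talk calls tau_R) can be sampled directly. The sketch below handles only the second level of randomness, the walk given the graph; the names `graph_ball` and `exit_time` are hypothetical, and the graph is assumed to be given as adjacency lists (for example, built from a sampled spanning tree).

```python
import random
from collections import deque


def graph_ball(adj, x, r):
    """Breadth-first search: map every vertex v with d(x, v) <= r to d(x, v).
    len() of the result is the volume |B_d(x, r)|."""
    dist = {x: 0}
    queue = deque([x])
    while queue:
        u = queue.popleft()
        if dist[u] == r:
            continue  # do not expand past radius r
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def exit_time(adj, x, r, rng):
    """Steps taken by simple random walk on the fixed graph `adj`, started at x,
    until it first leaves B_d(x, r). Assumes some vertex lies outside the ball,
    otherwise the loop never ends."""
    ball = graph_ball(adj, x, r)
    u, t = x, 0
    while u in ball:
        u = rng.choice(adj[u])  # move to a uniformly chosen neighbour
        t += 1
    return t
```

On a path graph the ball of radius r is an interval of 2r + 1 vertices; on a tree sample the same code produces sets like the red regions in the simulations.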
But if you generated these -- [indiscernible], I could ask him to do it, since I'm sure he would be the best person to do it. Hopefully, if one generated enormous uniform spanning trees and looked at these sets, say B_d(0, 10,000), then, at least if you believe the theorems I'll tell you about later, they should look roughly like Euclidean balls. I'll give a precise statement of what I mean by that shortly. Okay. So now let's look at random walks on the uniform spanning tree. I promised I was finished with the notation, but it looks like I have a bit more. You can probably guess what these things are even if I didn't tell you. p_N(X, Y) are the transition densities: the probability that a random walk on the uniform spanning tree starting at X is at Y after N steps. What do I mean by random walk on the uniform spanning tree? So that everyone is on the same page: we have two levels of randomness here. There's the randomness of the uniform spanning tree: we generate a uniform spanning tree. Then, given the uniform spanning tree, we just run simple random walk on that graph: at each step you move to each of your neighbors with equal probability. So p_N are the transition densities. tau_R is the first time you leave the graph-metric ball of radius R, and tilde tau_R is the first time you leave the Euclidean ball of radius R. The major theorem of this talk, and of our paper, gives estimates on all of these quantities. We actually have various versions, quenched and annealed, of various precision, but I don't want to go through all these details. Essentially, the return probability p_{2N}(0, 0) decays like N^{-8/13}. The first time you leave a graph-metric ball grows like R^{13/5}.
The first time you leave a Euclidean ball grows like R^{13/4}. Finally, the furthest you've been up to time N grows like N^{5/13}. I don't expect you to remember all these quantities; there won't be any test or anything. >>: Thank you. [laughter] >> Robert Masson: You're welcome. If you want to remember one result, this is probably the easiest and best one to remember. You can talk about the spectral dimension of an infinite graph. Given an infinite graph G with transition probabilities p_N, the spectral dimension of G is d_s(G) = -2 lim_{N -> infinity} log p_{2N}(0, 0) / log N. I use p_{2N} because of bipartiteness. So what is this? First of all, notice that the spectral dimension of Z^d is d, which is always a good thing. In some sense it measures how recurrent or how transient your graph is: the smaller this value, the more recurrent your graph. >>: Is N the number of nodes? >> Robert Masson: No, N is time: p_{2N}(0, 0) is the probability of being at the origin after 2N steps. There's been some recent interest in spectral dimension, and I want to highlight one result since there's a Microsoft tie here. Gady Kozma and Asaf Nachmias proved that the spectral dimension of the incipient infinite cluster for critical percolation in high dimensions is 4/3. High dimensions here means, I think, greater than or equal to 18; is that right? >>: 19. >> Robert Masson: 19, sorry. This is the Alexander-Orbach conjecture for critical percolation. If you remember, on the previous slide I had that p_{2N} decays like N^{-8/13}. Plugging that into this definition, you immediately get that the spectral dimension of the uniform spanning tree on Z^2 is 16/13, almost surely. Where do all these quantities come from? Essentially from two sources: volume estimates and effective resistance estimates.
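As a sanity check on the definition, the sketch below (hypothetical helper names, not from the talk) computes exact return probabilities on Z and Z^2 and recovers spectral dimensions 1 and 2. On Z, p_{2n}(0,0) = C(2n, n) / 4^n; on Z^2, rotating by 45 degrees splits simple random walk into two independent walks on Z, so p_{2n}(0,0) is the square of that. A two-point slope is used because the one-point ratio -2 log p_{2n} / log n converges slowly (its error is of order 1 / log n).

```python
import math
from fractions import Fraction


def log_return_prob(d, n):
    """log p_{2n}(0, 0) for simple random walk on Z^d, exact for d = 1, 2."""
    if d not in (1, 2):
        raise ValueError("this sketch only uses the exact formulas for d = 1, 2")
    log_p1 = math.lgamma(2 * n + 1) - 2 * math.lgamma(n + 1) - n * math.log(4)
    return d * log_p1  # Z^2 walk = two independent walks on Z after rotation


def spectral_slope(d, n1, n2):
    """Two-point version of -2 log p_{2n}(0,0) / log n between times n1 and n2,
    which cancels the constant prefactor in p_{2n}(0, 0)."""
    return -2 * (log_return_prob(d, n2) - log_return_prob(d, n1)) / math.log(n2 / n1)


# Uniform spanning tree on Z^2: p_{2n}(0,0) ~ n^(-8/13), so d_s = 2 * 8/13.
UST_SPECTRAL_DIMENSION = 2 * Fraction(8, 13)
```

For instance, `spectral_slope(2, 10**4, 10**6)` is within about 1e-3 of 2, and `UST_SPECTRAL_DIMENSION` is the 16/13 from the talk.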
So let me talk a little about what that means. Consider random walk on a fixed graph; right now there's no randomness. Fix an infinite connected graph G, and suppose G is sufficiently regular: I want something like strong recurrence, local finiteness, and a volume doubling property. I think that should be enough, but I'm speaking in very vague terms, so don't ask me for very specific details. Suppose G is sufficiently regular, and suppose you knew that the volume of the graph-distance ball of radius R grows like R^alpha, and the effective resistance between the origin and the complement of that ball grows like R^beta. What is effective resistance? I don't want to say too much about it: put a unit resistor on each edge and look at the effective resistance between the origin and the complement of this ball. I don't think about it that way so much -- it's kind of dying -- the way I like to think about it is that the effective resistance between the origin and the complement of the ball is, up to the degree of the origin, the Green's function at the origin of the random walk killed on leaving the ball of radius R. Is this thing really dead? Oh well. If you know these two facts, they tell you pretty much all the basic things you want to know about random walk on the graph: the return probabilities decay like N^{-alpha/(alpha+beta)}, and the expected time to leave the ball of radius R grows like R^{alpha+beta}. I quoted this paper, but things like this must have been known before; this paper laid down precise statements of this fact. I just want to give you a vague intuition of where this comes from.
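The exponent bookkeeping in this theorem is simple enough to check mechanically. The sketch below (a hypothetical helper, not from the talk) turns volume exponent alpha and resistance exponent beta into the walk exponents, using the values the talk gives for the uniform spanning tree, alpha = 8/5 and beta = 1. Two extra assumptions are labelled in the code: Euclidean radius r corresponds to intrinsic radius about G(r) ~ r^{5/4}, and the "furthest distance" exponent is read in the graph metric, the reading consistent with the 13/5 exit-time exponent.

```python
from fractions import Fraction


def walk_exponents(alpha, beta, graph_per_euclid=Fraction(5, 4)):
    """Exponents implied by |B_d(0,R)| ~ R^alpha and R_eff ~ R^beta.
    graph_per_euclid: ASSUMED relation G(r) ~ r^(5/4) between a Euclidean
    radius r and the intrinsic radius of the same region (UST-specific)."""
    s = alpha + beta
    return {
        "return_prob": -alpha / s,                 # p_{2N}(0,0) ~ N^(-alpha/(alpha+beta))
        "graph_exit_time": s,                      # E tau_R ~ R^(alpha+beta)
        "euclid_exit_time": s * graph_per_euclid,  # E tilde tau_r ~ G(r)^(alpha+beta)
        "graph_displacement": 1 / s,               # ASSUMED graph metric: invert N = R^(alpha+beta)
        "spectral_dimension": 2 * alpha / s,       # -2 times the return-probability exponent
    }


ust = walk_exponents(Fraction(8, 5), Fraction(1))
```

The result reproduces the numbers from the main theorem: 8/13, 13/5, 13/4, 5/13, and spectral dimension 16/13. Using exact fractions avoids any floating-point doubt about these rational exponents.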
So how does knowing volume growth and effective resistance growth tell you things about your random walk? Again, I'm being very informal here. Take the expected time it takes to leave the ball of radius R. Let G_R(0, y) be the Green's function in the ball of radius R; the expected exit time is the sum over all points y in the ball of G_R(0, y). You can rewrite G_R(0, y) as G_R(0, 0) times the probability, starting at y, that the random walk hits 0 before leaving the ball (up to a ratio of vertex degrees). Remember -- it's basically dead -- G_R(0, 0) is just the effective resistance term. Pulling it out, we're left with the sum over all points of B_d(0, R) of these probabilities, and I claim that this is comparable to the volume term. These probabilities are at most 1, so the sum is at most the number of points in B_d(0, R). But it's also at least the sum over all points in B_d(0, R/2) -- oh, it's alive again -- of these probabilities. Here you're assuming some regularity: if your graph is nice enough and you're sufficiently close to the origin, say within B_d(0, R/2), then this probability should be greater than or equal to some constant. Finally, you use the volume doubling condition to get that the sum is comparable to the volume term. >>: I missed the last point. You said the probability of hitting 0 before leaving is bounded below? >> Robert Masson: Yeah, shouldn't that be true? >>: In which -- >> Robert Masson: Wait. >>: That's not true. >> Robert Masson: Yeah, that's not true in Z^2. If it's strongly recurrent, that should be true, right? >>: Right. >> Robert Masson: I think I did mention we were assuming strong recurrence. It is true if it's strongly recurrent, yeah. So we're assuming G is strongly recurrent. >>: [indiscernible].
>> Robert Masson: I think they assume that, at least in one of the statements; they had many different statements. >>: It wasn't stated here. >> Robert Masson: No, it was in the "sufficiently regular" part. >>: Z^2 is pretty regular. Strongly recurrent is different from regular. >> Robert Masson: Okay. >>: Resistance goes up. >>: Right. But -- >> Robert Masson: That's true. >>: But the term "regularity" -- >> Robert Masson: If it's sufficiently regular and this is true, then I guess that wouldn't hold for Z^2. Anyway, I was being very informal there. >>: Right. >> Robert Masson: This next statement is going to be even more informal, because there are no equations here. So that gives you some idea why the first quantity grows like R^{alpha+beta}. What does that tell us about the return probabilities? This is very rough and informal. If we let 2N be R^{alpha+beta}, then with high probability the random walk after 2N steps will still be in the ball B_d(0, CR) for some constant C, since it takes this long to leave the ball of radius R. That ball has about R^alpha points by our assumption on the volume growth, so the average value of p_{2N}(0, .) over the ball will be about R^{-alpha} = N^{-alpha/(alpha+beta)}. Again, given enough regularity on G, this average value should be close to p_{2N}(0, 0). So hopefully this gives you a rough idea of where these quantities come from; I kind of hate it when people just lay down these theorems with precise statements and give you absolutely no idea where anything comes from. The take-home message is that if you have precise estimates on effective resistance and on volume growth, they tell you a lot about random walk on the graph. Okay. So that was a fixed graph. If our graphs are now random, you get the same results, provided you can prove these estimates with high probability.
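The identities behind this heuristic can be checked exactly on the simplest example, simple random walk on Z killed on reaching +-R, where volume and resistance both grow linearly (alpha = beta = 1, so the exit time should be of order R^2). The sketch below (hypothetical helper `killed_green_function`) computes the killed Green's function by exact linear algebra and confirms three facts: E_0[tau] = sum_y G(0, y) = R^2; G(0, 0) = deg(0) times the effective resistance, which is R/2 for two length-R paths in parallel; and G(y, 0) = G(0, 0) P_y(hit 0 before exit). On a graph with unequal degrees, reversibility gives G(0, y)/deg(y) = G(y, 0)/deg(0), which is where the ratio of degrees comes in.

```python
from fractions import Fraction


def killed_green_function(adj, domain):
    """Exact Green's function G(x, y): expected number of visits to y by simple
    random walk started at x, before the walk first leaves `domain`.
    Solves (I - P_D) G = I by Gauss-Jordan elimination over the rationals."""
    verts = list(domain)
    idx = {v: i for i, v in enumerate(verts)}
    m = len(verts)
    a = [[Fraction(0)] * (2 * m) for _ in range(m)]  # augmented [I - P_D | I]
    for v in verts:
        i = idx[v]
        a[i][i] += 1
        a[i][m + i] = Fraction(1)
        for w in adj[v]:
            if w in idx:  # steps that leave the domain are killed
                a[i][idx[w]] -= Fraction(1, len(adj[v]))
    for col in range(m):
        piv = next(r for r in range(col, m) if a[r][col] != 0)
        a[col], a[piv] = a[piv], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(m):
            if r != col and a[r][col] != 0:
                f = a[r][col]
                a[r] = [x - f * y for x, y in zip(a[r], a[col])]
    return {(u, v): a[idx[u]][m + idx[v]] for u in verts for v in verts}


R = 5
interior = range(-R + 1, R)                   # the walk is killed on reaching +-R
adj = {i: [i - 1, i + 1] for i in interior}  # simple random walk on Z
G = killed_green_function(adj, interior)
expected_exit_time = sum(G[(0, y)] for y in interior)
```

Here `G[(0, 0)]` equals R, `expected_exit_time` equals R^2, and `G[(y, 0)]` equals R - |y|, matching P_y(hit 0 before +-R) = (R - |y|)/R.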
Again, I don't want to go through the exact statements of what I mean by "with high probability"; I'll give you the precise statement of what we need for the uniform spanning tree. But if you have these estimates with high probability, then, again with high probability, you get good estimates on the random walk on your random graphs. For the uniform spanning tree we have, roughly, with high probability, volume growth like R^{8/5} and effective resistance growth like R. If we assume that for a second and plug it into the previous results, which tell you how to get information about the random walk from these estimates -- I won't do it, but if you remember what the theorem stated -- that's where all the exponents for the uniform spanning tree come from: the 13/5, the 8/13, et cetera. They come from these two facts, volume growth like R^{8/5} and effective resistance growth like R. So what's the precise statement of the volume and effective resistance growth? Here it is. There are constants such that for all lambda and R, the probability that the volume of B_d(0, R) is between lambda^{-1} g(R)^2 and lambda g(R)^2 is at least 1 minus a term that decays exponentially in lambda. Remember that g(R) is logarithmically asymptotic to R^{4/5}, so g(R)^2 is essentially R^{8/5}; in other words, the probability that the volume is not between these two quantities decays exponentially in lambda. We get a similar statement for the effective resistance. Note that there's no lambda on the upper bound there, because on any graph this effective resistance is trivially at most R, so we don't need a lambda for it. Don't pay much attention to these exponents; they're certainly not optimal. The ones I'm quoting are possibly not even the best we could get if we really cared about the absolute best exponent.
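The trivial upper bound on resistance comes from Rayleigh monotonicity: deleting every edge except one geodesic can only increase effective resistance, so on any graph the resistance between the origin and a vertex at graph distance R is at most R. A path attains this; on a tree, the question is whether branching pulls it down. The sketch below (hypothetical helper `tree_resistance`) computes, with unit resistors, the resistance from a node to all its descendants exactly `depth` levels below by the series and parallel rules. It shows the contrast raised in the discussion that follows: linear growth on a path, but a bound of 1 on the binary tree.

```python
from fractions import Fraction


def tree_resistance(children, node, depth):
    """Effective resistance (unit resistor on every edge) between `node` and the
    set of its descendants exactly `depth` levels below it, in a finite rooted
    tree given as children[v] -> list of children. Returns None if no such
    descendant exists (infinite resistance); dead-end branches carry no current."""
    if depth == 0:
        return Fraction(0)  # `node` itself belongs to the target set
    conductance = Fraction(0)
    for c in children.get(node, []):
        r = tree_resistance(children, c, depth - 1)
        if r is not None:
            conductance += 1 / (1 + r)  # edge to c in series, children in parallel
    return None if conductance == 0 else 1 / conductance


R = 10
path = {i: [i + 1] for i in range(R)}                           # resistance R
binary = {v: [2 * v + 1, 2 * v + 2] for v in range(2 ** R - 1)}  # resistance 1 - 2^(-R)
```

The binary tree gives sum over k = 1..R of 2^{-k} = 1 - 2^{-R}, since level k has 2^k parallel edges; that is the "obviously not going to hold for a binary tree" case.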
In general, when I have exponential decay I'm not going to care too much what the exact exponent is. Okay. I don't want to say too much about the effective resistance bounds; in some sense they're the easier and less interesting part. Generally, if your tree is nice enough, you would expect linear growth of the effective resistance. Yuval, maybe you can correct me if I'm wrong: obviously this won't hold for a binary tree or something like that, but if at depth R you have at most R to some power leaves, would you expect linear growth of the effective resistance? Or is it more -- >>: Critical. Critical trees. I think if you look at the paths from the origin to level R, there are many of them, but most of them agree up to the first R/2 or R/N steps. >> Robert Masson: Okay. >>: If you had polynomial growth, branching early [phonetic] -- >> Robert Masson: Basically you just don't want too many possible branches out to distance R. But certainly for our uniform spanning tree we expect that, and in some sense a proof of it comes out of the next theorem I'm going to state. For the remainder of the talk I'll focus on the first estimate, the volume growth. In fact, we'll show something somewhat stronger: a description of what this graph-distance ball looks like. It's roughly like a Euclidean ball of radius g(R), and g(R) is about R^{4/5}. In other words, the probability that B_d(0, R) is not a subset of B(0, lambda g(R)) decays exponentially in lambda, while the probability that the Euclidean ball of smaller radius lambda^{-1} g(R) is not contained in B_d(0, R) decays polynomially. Of course, the volume estimate does not follow immediately from this theorem. The upper bound does, because we have exponential decay here.
But here, since we only have polynomial decay, we can't immediately get the exponential lower bound on the volume from this polynomial one. To really prove it you need a more complicated argument: use this lower bound and iterate it to get exponential decay. I'm including this lower bound to show that you actually cannot do better than polynomial decay for this lower estimate: even if we hoped for exponential decay, we can't get it. Again, there's no reason to believe the exponents in the exponential terms are optimal. For the polynomial terms, though, I'm giving you the best exponents we have: we made some effort to get the best exponents our arguments give, although there's no reason to believe they're optimal either. Okay. Let me give you some heuristics for why you would expect B_d(0, R) to look roughly like the Euclidean ball of radius g(R). This is very rough. Don't interrupt me, because I know this argument is flawed, but hopefully some of you will see why. Here's the rough idea. Fix some z in Z^2 and look at the distance from 0 to z, which is just the number of steps of the loop erasure of a random walk from 0 to z. Roughly, we would expect d(0, z) to be close to its mean with high probability; one would hope that a random variable is close to its mean. And what is the mean? We're at distance |z| from the origin, running in some sense a loop-erased random walk from 0 to z, so we should hope that it's roughly G(|z|). Remember, capital G(N) is just E[M_N], the expected number of steps of a loop-erased random walk from 0 to the circle of radius N. Hopefully you can see that. Maybe not. I won't write anything more.
Or I don't plan on writing anything more. But G(|z|) is just the expected number of steps of a loop-erased random walk from 0 to the circle of radius |z|, so you can hope that d(0, z) should be roughly that quantity. So we'd expect that, with high probability, z is in B_d(0, R) if and only if G(|z|) is less than or equal to R, that is, if and only if z is in the Euclidean ball of radius g(R). Okay. But there are a lot of things wrong with this argument. Perhaps the most striking one is that this expectation is actually infinite, so it's going to be difficult for d(0, z) to be close to its mean. I think this is a very striking and interesting fact. It was proved by Benjamini, Lyons, Peres, and Schramm; what I state here is a slight generalization, with essentially the same proof: for all z other than the origin, the probability that the diameter of the unique path from the origin to z is greater than N|z| is at least 1/(8N). If you sum over all N, you get something infinite. I find this very striking: even if z is a nearest neighbor of the origin, the expected length of the path from 0 to z is infinite. So it's going to be hard to use the first part of the argument, that d(0, z) should be close to its expected value. There's another difficulty, which is not that something in the statement is wrong: we're claiming that these balls are close to each other, so we can't just fix some z and show this is true; we have to show it for all z simultaneously. In other words, we need to swap some quantifiers: instead of showing that for every z this holds with high probability, we have to show that with high probability it holds for all z. We'll deal with these difficulties one at a time.
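The step from the tail bound to an infinite mean is the standard tail-sum argument. A short derivation, taking the 1/(8N) bound as stated in the talk, with X = diam(gamma_{0z}) and a = |z|:

```latex
% For a nonnegative random variable X and a > 0, P(X > t) >= P(X > na) when t <= na, so
\mathbb{E}[X] = \int_0^\infty \mathbb{P}(X > t)\,dt
  = \sum_{n \ge 1} \int_{(n-1)a}^{na} \mathbb{P}(X > t)\,dt
  \ \ge\ a \sum_{n \ge 1} \mathbb{P}(X > na).
% A nearest-neighbour path with k steps has Euclidean diameter at most k,
% so d(0,z) >= diam(gamma_{0z}), and therefore
\mathbb{E}[d(0,z)] \ \ge\ |z| \sum_{n \ge 1}
  \mathbb{P}\big(\operatorname{diam}\gamma_{0z} > n|z|\big)
  \ \ge\ |z| \sum_{n \ge 1} \frac{1}{8n} = \infty .
```

The divergence comes entirely from the harmonic series, which is why the conclusion holds even when z is a nearest neighbour of the origin.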
What we're going to do is use Wilson's algorithm, combined with very precise bounds on the probability that the number of steps of loop-erased random walk deviates from its mean. The goal (I don't know if I succeeded) was to convince you that at this point in the program, when we're trying to prove the volume growth estimate, it's going to be very useful to have very precise estimates on the number of steps of a loop-erased random walk. We need to know a lot more than its mean: we need very precise estimates on how close the number of steps of a loop-erased random walk is to its mean, N^{5/4} or E[M_N]. When we construct the uniform spanning tree, we're not constructing just one loop-erased random walk; we're constructing a whole bunch of little loop-erased random walks. So in some sense we need all of them, or most of them, to have the number of steps we expect in order to say anything about the uniform spanning tree. It's not enough to know the expected number of steps of each of these little walks, because if they have an unexpected number of steps, this can really mess up the way our uniform spanning tree is constructed. Luckily, we have these estimates; we proved them. Let me tell you a little about how this project evolved. I started this project with Martin Barlow. We started trying to examine random walk on the uniform spanning tree and essentially came to the point we're at in this talk, where we realized we really need very precise estimates on how far M_N deviates from its mean. So we took a break from the uniform spanning tree, went ahead, and proved these estimates on the loop-erased random walk.
And then we went back to the uniform spanning tree and applied them. >>: These estimates were well known [indiscernible] separately? >> Robert Masson: Yes. If you really want to know the history: I was actually starting to think about this even before I went to UBC. Martin Barlow and I talked one day, he was talking about the uniform spanning tree, and we realized we needed these estimates, and it was some sort of miracle that I had already started thinking about them and had some results in that direction. Together we got very good, precise estimates: I had been looking at the second moment, and we ended up getting exponential moments. Here's the main result about the number of steps of a loop-erased random walk. It concerns the tails of M_N / E[M_N]: the probability that M_N / E[M_N] lies between lambda^{-1} and lambda is greater than 1 minus a term that decays exponentially in lambda. Again, I'm not giving you the best exponent; you can take lambda^{4/5 - epsilon} in the exponent if you want. When we apply this to the uniform spanning tree, we're going to use Wilson's algorithm: we construct part of the tree, and the next step of Wilson's algorithm requires running loop-erased random walks until they hit that tree. So we need a bit more than the previous result, which is about loop-erased random walk in the disk of radius N; we need estimates on the number of steps of a loop-erased random walk in a more general domain. We have the following two results in that direction. For the first one -- well, I'll let you read it.
We have this condition on a domain D: for all z in D there's a path in the complement of D connecting B(z, N+1) to the complement of B(z, 2N). >>: Want to draw it? >> Robert Masson: Maybe I should do that. Really what this is saying is just that -- so here's our domain. And, okay, I haven't given you a domain yet. All right, the easiest example of a domain to draw is just an infinite strip like this, and this is height N. This would be an example of a domain satisfying that property, because for every z in that domain we're at distance less than or equal to N from the complement, or from the boundary, and the boundary is sufficiently large. The main idea here is that if you start a random walk at z, then with high probability your random walk is going to leave the domain before going distance 2N away. This is really the only fact we need: you're not going to go too far away, or else the number of steps might become too large. >>: The quantifier in the assumption: what is N? >> Robert Masson: So suppose there exists an N such that for all z the following holds. So essentially you'll take the largest N for which this condition is satisfied. So if this was our domain, I would obviously take that N to be equal to this, and that would give me the following estimate, because the estimate here depends on N. Okay. The second one is much easier to understand: if D contains the ball of radius N, then the probability that the number of steps is small is itself small. Okay. Now let's see how we apply those results. So remember I said there were two difficulties. I gave this very rough heuristic argument, which was false, and there are two main difficulties. The first difficulty was: we fix some z and we want to show that with high probability the distance between the origin and z is close to capital G of the absolute value of z. Remember, we saw the expectation of this was infinite.
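As a hypothetical instance of the first condition, the sketch below takes the strip {(x, y) : 0 < y < height} as the domain D and runs loop-erased random walk from an interior point until it leaves D. The strip's parametrization and the function names are our illustrative choices; the code only shows what "the number of steps of loop-erased random walk in D" means, not the estimate itself.

```python
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def loop_erase(path):
    """Chronological loop erasure of a finite path."""
    erased, index = [], {}
    for v in path:
        if v in index:
            cut = index[v] + 1  # keep v, drop the loop it just closed
            for u in erased[cut:]:
                del index[u]
            del erased[cut:]
        else:
            index[v] = len(erased)
            erased.append(v)
    return erased

def lerw_in_strip(height, start, rng):
    """Loop-erased random walk from `start`, stopped on leaving the hypothetical
    strip D = {(x, y) : 0 < y < height}; the last vertex returned lies outside D."""
    x, y = start
    if not 0 < y < height:
        raise ValueError("start must lie inside the strip")
    path = [(x, y)]
    while 0 < y < height:
        dx, dy = rng.choice(STEPS)
        x, y = x + dx, y + dy
        path.append((x, y))
    return loop_erase(path)

if __name__ == "__main__":
    rng = random.Random(0)
    height = 20
    steps = [len(lerw_in_strip(height, (0, height // 2), rng)) - 1 for _ in range(200)]
    print(f"height {height}: mean LERW steps {sum(steps) / len(steps):.1f}, max {max(steps)}")
```

Every starting point in this strip is within distance height/2 of the boundary lines, which are infinite, so the walk is forced out quickly; that is the geometric property the condition on D encodes.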
However, it's still going to be true that with high probability, and I'll say what I mean by high probability in a second, we can show that these two things are close. So we want to look at the probability that the distance between 0 and z is less than lambda inverse times G of absolute value of z, and the probability that this quantity is greater than lambda G of absolute value of z. One direction is easy: it's going to be very unlikely that this distance is small. This first one can be easily explained with a picture. So we're at the origin, we have some point z, and we're running a loop-erased random walk from the origin to z. The really bad thing that could happen is that the loop-erased random walk goes very far away before coming here. But that's not going to affect this first term. If anything, the bad thing is that the number of steps is too large; it's really not very likely that the number of steps is too small. Essentially, you just cut the path off at absolute value of z over 4 and look at the initial number of steps. The number of steps of the red part is certainly less than the number of steps of the blue part. So the distribution of this -- I told a little lie; it's not going to be exactly M of absolute value z over 4, but you can deal with this issue -- is essentially that of the number of steps of a loop-erased random walk from the origin to the circle of radius absolute value z over 4. And this is exactly the situation that the theorem about the number of steps of loop-erased random walk was meant to handle. This immediately gives exponential decay in lambda. So the probability that the number of steps is too small is really not a problem. The difficulty is showing that the number of steps can't be too large.
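Written out, the lower-tail reduction looks roughly as follows. This is a sketch in our own notation, not the paper's: gamma is the tree path from 0 to z, sigma is the number of steps gamma takes before first leaving the ball of radius |z|/4 (so sigma is at most d(0,z), because z lies outside that ball), and we assume G(r) = E[M_r] together with G(|z|) <= C G(|z|/4) for some constant C, which holds if G(r) is comparable to r^{5/4}. The second line is where the "little lie" enters.

```latex
\begin{align*}
\mathbb{P}\big(d(0,z) < \lambda^{-1} G(|z|)\big)
  &\le \mathbb{P}\big(\sigma < \lambda^{-1} G(|z|)\big)
     && \text{since } \sigma \le d(0,z) \\
  &\approx \mathbb{P}\big(M_{|z|/4} < \lambda^{-1} G(|z|)\big)
     && \text{the ``little lie''} \\
  &\le \mathbb{P}\big(M_{|z|/4} < (\lambda/C)^{-1}\,\mathbb{E}[M_{|z|/4}]\big)
     && \text{using } G(|z|) \le C\,G(|z|/4).
\end{align*}
```

The last probability is a lower-tail event for M_N / E[M_N] with N = |z|/4 and lambda replaced by lambda/C, so the tail bound on loop-erased random walk makes it exponentially small (in a power of lambda).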
And again it's these bad pictures where the loop-erased random walk goes very far away before coming back and hitting z, which is why the expected number of steps is infinite. We're not going to be able to estimate this directly. But if we restrict the path to stay in some larger ball, the ball of radius lambda to the one-half times absolute value of z -- if we ensure our loop-erased random walk doesn't go too far away -- we can actually show exponential decay. This doesn't follow directly from what we did before, but you can believe me, or not believe me and read the paper. Therefore, the probability that the number of steps is too large is less than or equal to the probability that it's too large and the path stays in the ball, plus the probability that the path doesn't stay in the ball. And another thing you can show is that this probability that it doesn't stay in the ball can be bounded by lambda to the minus 1/6. So the second term dominates, and you get polynomial decay for the probability that the number of steps is too large. Okay. Finally, just a few more slides. So the second difficulty was -- sorry. In the previous result we fixed some z and we showed that the distance from 0 to z is about what we expect, which is absolute value of z to the 5/4ths. Now how do we deal with all z simultaneously? There are two directions to show, but I'm only going to show one. That one is that with high probability, for all z in the Euclidean ball, the distance from 0 to z is less than lambda times, well, R to the 5/4ths; this would imply that with high probability the Euclidean ball of radius lambda inverse little g of R is contained in the intrinsic ball B_d(0, R). So I have this condition at the top: we want to show that with high probability, for all z in this Euclidean ball, the distance from 0 to z is less than lambda G of R. So step one is -- >>: The direction is -- >> Robert Masson: No. >>: It's not? >> Robert Masson: No.
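The upper-tail split can be written as follows, in our own notation: B is the ball of radius lambda^{1/2}|z| around the origin, gamma is the tree path from 0 to z, epsilon(lambda) is a placeholder for the exponentially decaying bound on the restricted event (its exact form is not given in the talk), and C is an unspecified constant.

```latex
\begin{align*}
\mathbb{P}\big(d(0,z) > \lambda G(|z|)\big)
  &\le \mathbb{P}\big(d(0,z) > \lambda G(|z|),\ \gamma \subset B\big)
     + \mathbb{P}\big(\gamma \not\subset B\big) \\
  &\le \varepsilon(\lambda) + C\,\lambda^{-1/6}.
\end{align*}
```

Since epsilon(lambda) decays exponentially, the polynomial term C lambda^{-1/6} is the one that dominates for large lambda.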
>>: Exponential just by this argument in the back of the z over 4 and you get a lot of exponential. >> Robert Masson: But you have to sum over some number of points that depends on R. >>: We know that. You're getting exponential. >> Robert Masson: But the exponential error is in lambda. >>: [indiscernible]. >> Robert Masson: I don't think so. >>: You don't want to take lambda easily. >> Robert Masson: No, I don't think you can do it easily. The other direction is actually very similar -- so what we're going to do is use Wilson's algorithm in this particular way. For the other direction you also use Wilson's algorithm, in a similar but slightly different way. So don't pay too much attention to these exponents. I'll just highlight the main idea, and there will be pictures. So we're going to let D_1 be a set of lambda to the 1/10 points, spaced distance R apart. There will be a picture. So we're interested in the points in this ball. We're going to look at a larger ball, and the first step is to take some points in it that are spaced distance R apart. Then we perform Wilson's algorithm with those points, and we end up with some initial tree, which we'll call U_1. You can do it in whatever order; you just choose these points to build your first tree with Wilson's algorithm. Then if we let N_1 be the largest distance from the origin in our tree U_1, the probability that N_1 is greater than lambda G of R is certainly less than the number of points of D_1 times the largest probability, over points of D_1, that the distance from the origin to that point is greater than lambda G of R. This quantity is just what we determined was less than lambda to the, what did I say, minus 1 over 6 or something? And we had lambda to the 1 over 10 points here. The point is that you choose these exponents in such a way that you then have polynomial decay in lambda.
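The exponent bookkeeping behind this union bound is plain arithmetic. The sketch below uses the exponents quoted in the talk (lambda^{1/10} points, and a per-point failure probability of order lambda^{-1/6}, which the speaker gives only approximately); the helper name is ours.

```python
from fractions import Fraction

def union_bound_exponent(points_exp, per_point_exp):
    """Exponent e in P(N_1 > lambda * G(R)) <= C * lambda**e.

    Assumes |D_1| <= lambda**points_exp and that each point of D_1 is at
    tree distance > lambda * G(R) from 0 with probability <= C * lambda**per_point_exp.
    """
    return points_exp + per_point_exp

e = union_bound_exponent(Fraction(1, 10), Fraction(-1, 6))
print(e)      # -1/15
print(e < 0)  # True: polynomial decay in lambda

# How large the point count may grow before the bound stops decaying.
for a in (Fraction(1, 10), Fraction(1, 7), Fraction(1, 6)):
    print(a, union_bound_exponent(a, Fraction(-1, 6)))
```

The loop shows why, in this sketch, the number of points must stay below lambda^{1/6}: at exponent 1/6 the resulting bound no longer decays.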
Okay. The next step -- and ignore these things -- is to take a smaller ball here, and we're going to have K iterations of this. As K goes to infinity, these balls are going to converge to the Euclidean ball of radius R that we're interested in, and we're going to take these points to be closer and closer together. So when we do enough iterations, all the points in this ball are going to be on the trees we've created. So then we perform Wilson's algorithm. And the point is that, now that we've already constructed a tree, the probability that the distance from any of our points in the Kth tree to the (K minus 1)st tree is large is less than some quantity; again, I'm just going to skip through this. But you can use these precise estimates on the number of steps of loop-erased random walk to look at the probability that the distance from z to the previous tree is large, and it's some term like this. Finally, we perform this algorithm as many times as we need, so that all the points in the Euclidean ball we're interested in are eventually in one of the trees. Then we just use all the previous estimates. So the probability that there exists some z such that the distance is greater than lambda G of R is going to be less than this sum. It doesn't really matter exactly what this sum is, but the terms after the first decay exponentially in lambda. So the first term dominates, and that's the quantity we get; it's really only the first term that matters. The proof of the other direction uses a similar argument: constructing the tree with Wilson's algorithm in a precise way, and again using these exponential estimates on the number of steps of the loop-erased random walk. Okay. But that's all I wanted to say for today. Thanks. [applause]. >>: Question. So the spherical nature of the ball in the intrinsic metric.
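Because Wilson's algorithm produces a uniform spanning tree whatever order the starting vertices are taken in, the coarse-to-fine construction can be simulated directly. The sketch below is ours, not the authors' code: it uses the finite box [-n, n]^2 rooted at the origin as a stand-in for Z^2, takes dyadic sublattices as a hypothetical choice of the point sets D_k, and records after each stage the largest tree distance from 0 among that stage's points.

```python
import random

def staged_wilson(n, spacings, rng):
    """Wilson's algorithm on the box [-n, n]^2 rooted at the origin, with start
    vertices taken in stages: stage k uses the sublattice of spacing spacings[k]
    (a hypothetical stand-in for the point sets D_k in the talk).

    Returns (parent, depth, stage_max): depth[v] is the tree distance from v
    to 0, and stage_max[k] is the largest depth over the points of stage k.
    """
    root = (0, 0)
    parent, depth = {root: None}, {root: 0}

    def neighbours(v):
        x, y = v
        cand = ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1))
        return [(a, b) for a, b in cand if -n <= a <= n and -n <= b <= n]

    stage_max = []
    for s in spacings:
        stage = [(x, y) for x in range(-n, n + 1) for y in range(-n, n + 1)
                 if x % s == 0 and y % s == 0]
        for start in stage:
            if start in parent:
                continue
            last_exit, v = {}, start
            while v not in parent:      # walk until hitting the current tree
                last_exit[v] = rng.choice(neighbours(v))
                v = last_exit[v]
            branch, v = [], start
            while v not in parent:      # last exits trace the loop erasure
                parent[v] = last_exit[v]
                branch.append(v)
                v = last_exit[v]
            for u in reversed(branch):  # the graft point's depth is already known
                depth[u] = depth[parent[u]] + 1
        stage_max.append(max(depth[v] for v in stage))
    return parent, depth, stage_max

if __name__ == "__main__":
    rng = random.Random(0)
    _, _, stage_max = staged_wilson(16, [16, 8, 4, 2, 1], rng)
    print("largest tree distance from 0 after each stage:", stage_max)
```

Because the sublattices are nested and a vertex's tree distance never changes once it joins the tree, the recorded maxima are nondecreasing; the final stage (spacing 1) covers every vertex of the box.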
So at the end do you get it with or without [indiscernible] correction? >> Robert Masson: Want me to go back to the theorem? >>: Yes. Go back to that theorem. Yes. Because it has the double wiggle. >> Robert Masson: That one didn't have any double wiggles. Stop. Ah. I'm not doing anything here. This is my crappy -- all right. Whoa. Is this what we wanted? The next one. This is what we wanted, right? >>: It's there -- >>: It's length of G. We don't know G. >> Robert Masson: That's true. >>: But it's -- but you know that it's a sphere. >>: Yeah. >>: So there is some intrinsic interest. >>: Yeah. If all you wanted was to get the exponent, then you could simplify -- >> Robert Masson: You mean, if all you wanted was this estimate, just for the volume? >>: What I said before: if all you wanted was the exponent, the end of 4 or 5, then you could take lambda to be [indiscernible], and that would simplify your proof because you could take union bounds over the z's. >> Robert Masson: Yeah. That's true. >>: Any other questions or comments? Let's thank Robert for a wonderful talk. [applause]