18072

>> David Wilson: So today we have Remco van der Hofstad from the Technical University of Eindhoven. He used to be a frequent visitor at Microsoft. And we're pleased to have him back and telling us about first passage percolation.

>> Remco van der Hofstad: Thank you very much. And thank you for this opportunity to speak here in this seminar. I wasn't entirely sure what the name of the seminar is. So I called it tea time seminar.

So what I'll be speaking about is first passage percolation in various settings, particularly random graphs, but also the complete graph, and trying to describe some recent progress that we've made in understanding the behavior of first passage percolation on random graphs and the complete graph. And it's all joint work with Shankar Bhamidi, who is currently not at UBC Vancouver, but he's in North Carolina nowadays. And a large part of it is also joint with Gerard Hooghiemstra. And I promised to help you pronounce the name. The name is Hooghiemstra.

>>: Teach me privately.

>> Remco van der Hofstad: I'll teach you privately. I realize that's a hard name. Maybe even harder than mine.

Right. So the whole idea here is to study shortest weight problems. And first passage percolation is a topic which has attracted considerable attention on Z^d but also on finite graphs.

And the idea is that in many applications there are edge weights that somehow represent the cost of using the edge.

On the other hand, often you're also interested in the lengths of paths, because these lengths might actually indicate what the delay is that you as a customer observe when you're trying to send data from a source to a destination. So what we'll try to do is actually compute the length of the shortest path and the weight of the shortest path between two uniform vertices in a graph. So all my graphs will be finite and therefore you can easily take two uniform vertices.

And the whole idea is to investigate what the relation is between the weight structure and the topology of the graph. In particular, if you add weights along the edges, does that actually change distances within the random graph and how so.

So what we'll assume is that the edge weights are i.i.d. random variables, and very often we'll in fact assume that these are exponential random variables. Now if you would do this on the complete graph, this is sometimes called Aldous's stochastic mean-field model of distance.

We'll say something about that too.

In particular, the problem with exponential edge weights is really nice, and that's what a large part of my talk will be about. Okay. So on the complete graph, first passage percolation with exponential weights has been studied a lot and various results are known.

But it wasn't clear what happens to such kind of problems if the underlying graph structure is incomplete. So what we do is we'll look at first passage percolation on a random graph, and this random graph is typically sparse. So the number of edges in the random graph is far smaller than the number of edges in the complete graph, which is gigantic.

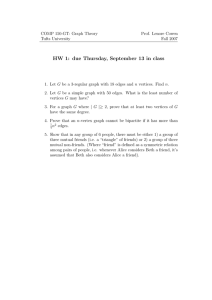

Okay. So that's the setting. And all of this work was inspired by this picture, which, of course, I need to explain a little bit. These are distances in the Internet graph. So one way of obtaining that is just sending e-mails from one place to another place and counting the number of routers it passes through.

Now, there's a lot of difficulty in actually obtaining such pictures, and whether they're really trustworthy I'm not going to say very much about. But the nice thing is that this picture actually looks rather stable. It almost looks like a Poisson distribution. In fact at some point we did a test, and a Poisson distribution is not rejected by the statistical test.

>>: X and Y axis.

>> Remco van der Hofstad: It's a histogram. So it's basically a probability distribution. And you take a random source. You take a random destination. You look at the number of hops in between. And if that number of hops happens to be 19, you put it in this bin. And then after you've done this for a large number of pairs, you renormalize the thing and you hope this actually gives a picture of the proper hop count in the Internet.

So at a certain moment we started investigating distances in random graphs in order to understand this picture. But as I'll explain later on, in fact the distances that we initially studied do not really help to understand this picture. So that's why we looked a bit further.

Okay. So the random graphs that I'll be talking about are the so-called configuration model. And I know that many of you actually know what this model is, but I'll still define it, or my particular version of it, explicitly. So what we'll start with is a fixed number of vertices N, and N later on will become extremely large. It will be sent to infinity. And we'll consider an i.i.d. sequence of degrees denoted D_1 to D_N, and these i.i.d. degrees have a certain distribution.

And in fact in some of our cases we will put special attention to power law distributions, where the degrees obey a power law in this form. So the probability that the degree is greater than or equal to K falls off like a power of K. And the exponent I denote by minus tau plus 1. So tau measures how many moments this distribution has.

Okay. Now, when tau is greater than 3, you in fact have finite variance, and we actually only need an upper bound here. So okay. Right. So now I've told you how many vertices our graph has and how many edges every vertex has. So the degree of every vertex. But that doesn't quite give us a graph yet. So how do we make a graph out of this?

You do the simplest thing possible. You think of your vertices as being lined up next to each other. One -- this is no good. Vertex one here. Vertex two here. Vertex 3 here and 4, maybe 5.

Now suppose that vertex 1 has degree 2. Vertex 2 has degree 1. Vertex 3 has degree 3. This has 2 and this has 2.

And now what we want to do is somehow connect up these little. Okay. So I think of these little lines here as indicating half edges, and what I'm trying to do is connect the half edges together to obtain complete edges.

And I just do that uniformly at random. So I start from the left. In fact, where you start doesn't matter, because of exchangeability. But I start at the left and I decide this one is connected to that one and this one is connected to that one.

Okay. That's it. This doesn't quite look like a graph yet. So it helps, actually, to draw it in a slightly different way. Okay. And then we see that 1 is connected to 2. 1 is connected to 3. 3 is also connected to 4. In fact, it's connected to it twice. And 5 is connected to itself.

Okay. So this is a graph. Maybe not the most traditional kind of a graph, because there are self-loops and there are multiple edges. In fact, as it turns out, in many cases these edges, the multiple edges and the self-loops, only form a very small proportion of the edges. So we'll just ignore them.

Okay. Another thing, I've cheated a little bit, because my degrees are in fact i.i.d. That's how I started. But then in that case it's not always the case that the degrees sum up to an even number.

If it's not an even number it's very hard to pair up the edges in such a way that the half edges actually become full edges.

So one way of easily solving that is that in the case where this happens to be odd, we just add an extra half edge at the last one. And in fact because our N will be gigantic later on, this extra half edge doesn't do very much.
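The pairing construction just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the speaker's code: the function name `configuration_model` is hypothetical, and the uniform pairing is obtained by shuffling the list of half-edges and matching consecutive entries.

```python
import random


def configuration_model(degrees, rng=None):
    """Pair half-edges uniformly at random; return a list of edges (u, v).

    Self-loops and multiple edges are kept, as in the talk.
    The function name and interface are illustrative assumptions.
    """
    rng = rng or random.Random()
    degrees = list(degrees)
    # If the total degree is odd, add one extra half-edge to the last vertex.
    if sum(degrees) % 2 == 1:
        degrees[-1] += 1
    # One list entry per half-edge, labelled by the vertex it belongs to.
    half_edges = [v for v, d in enumerate(degrees) for _ in range(d)]
    # Shuffling and pairing consecutive entries gives a uniform matching.
    rng.shuffle(half_edges)
    return [(half_edges[i], half_edges[i + 1])
            for i in range(0, len(half_edges), 2)]


# The five-vertex example from the board: degrees 2, 1, 3, 2, 2.
edges = configuration_model([2, 1, 3, 2, 2], random.Random(1))
```

Each call produces one realization of the multigraph; which vertices end up with self-loops or double edges depends on the random pairing.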

So that's how you obtain the random graph from the set of vertices with their degrees. That's what is described here. I always think that the picture helps more. So what we now want to do is investigate first passage percolation. So what we do is on our graph, every edge has an independent exponential weight. And what we try to do is to analyze what the behavior is of the shortest path from a source to a destination. So suppose you were to take two vertices, for example, this one and that one, what you try to do is find the minimal weight path that connects these two vertices. If you have a gigantic graph, there are many paths that connect the two vertices, and you try to find the one that has the least sum of weights.
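To make the two quantities concrete, here is a small sketch that puts independent exponential weights on the edges of a multigraph and runs Dijkstra's algorithm to find the minimal weight path, returning both its weight and its hop count. The function name `shortest_weight_path`, the edge-list input, and the choice of rate 1 for the exponentials are assumptions made for illustration.

```python
import heapq
import random


def shortest_weight_path(n, edges, source, target, rng=None):
    """Return (weight, hop count) of the minimal weight path, or None.

    Edge weights are drawn here as i.i.d. exponentials with rate 1
    (the rate is an assumption; the talk only says 'exponential').
    """
    rng = rng or random.Random()
    adj = [[] for _ in range(n)]
    for u, v in edges:
        w = rng.expovariate(1.0)  # one independent weight per edge
        adj[u].append((v, w))
        adj[v].append((u, w))
    best = {source: (0.0, 0)}  # vertex -> (weight, hops) found so far
    heap = [(0.0, 0, source)]
    done = set()
    while heap:
        weight, hops, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == target:
            return weight, hops
        for v, w in adj[u]:
            cand = (weight + w, hops + 1)
            if v not in done and (v not in best or cand < best[v]):
                best[v] = cand
                heapq.heappush(heap, (cand[0], cand[1], v))
    return None  # source and target are not connected


# A path graph 0 - 1 - 2: the only route from 0 to 2 uses two edges.
result = shortest_weight_path(3, [(0, 1), (1, 2)], 0, 2, random.Random(0))
```

Repeating this for many uniformly chosen pairs of vertices and recording the hop counts would give an empirical histogram of the kind shown in the Internet picture.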

And the result is as follows: I assume that my degrees are all at least 2. Now, there's a reason for this. Because what I will do is I'll take two uniform vertices. And in some cases these uniform vertices happen not to be connected. That's a bit nasty. I don't want to deal with that. In fact, if you assume that the degrees are at least 2, then with high probability, if you take two uniform vertices they will actually be connected. We don't have to mess around with conditioning, et cetera.

Then there is this parameter nu, which is the expectation of the degree times the degree minus 1, divided by the expectation of the degree, and I assume that that is larger than 1, and in fact that will be the case in this setting.

Note, by the way, that this could actually be infinity. Because it is possible in this scenario that you have a power law which has infinite second moment. Infinite variance degrees.

Then depending on the power law exponent of the degrees in the configuration model, what you obtain for the number of edges in the shortest weight path between two uniform vertices is a central limit theorem with asymptotic mean alpha log N and asymptotic variance also alpha log N. Asymptotic mean and variance are the same. And there's a central limit theorem.

As it turns out, this alpha is a bit different in this regime compared to this regime. So in this regime, where this nu is actually finite, this alpha is just nu divided by nu minus 1. It's a value bigger than 1.

On the other hand, if tau is in between 2 and 3, well, nu is infinity, so you might guess that alpha should actually be 1. But it turns out not to be. It turns out to be slightly smaller. It's 2 times tau minus 2, over tau minus 1, and you can check that indeed this is somewhere in between 0 and 1.

So there's a rather universal picture emerging in the sense that the hop count, the number of edges along the shortest weight paths between two uniform vertices always satisfies the central limit theorem with asymptotic mean a constant times log N and asymptotic variance the same constant times log N.
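The two formulas for the constant alpha quoted above are easy to evaluate. The helper names below are hypothetical and the snippet only restates the spoken formulas; it is a sketch, not part of the original slides.

```python
def alpha_finite_nu(nu):
    """alpha = nu / (nu - 1), for finite nu > 1 (the tau > 3 regime)."""
    if nu <= 1:
        raise ValueError("nu must be larger than 1")
    return nu / (nu - 1.0)


def alpha_infinite_nu(tau):
    """alpha = 2 (tau - 2) / (tau - 1), for tau strictly between 2 and 3."""
    if not 2 < tau < 3:
        raise ValueError("tau must lie strictly between 2 and 3")
    return 2.0 * (tau - 2.0) / (tau - 1.0)


# Illustrative values only: nu = 2 and tau = 2.5 are arbitrary choices.
a_finite = alpha_finite_nu(2.0)
a_infinite = alpha_infinite_nu(2.5)
```

For any tau strictly between 2 and 3 the second function returns a value strictly between 0 and 1, while the first always returns a value larger than 1, matching the remark that the two regimes have different constants.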

So if you recall the picture that I was showing here, well, a Poisson distribution has the same mean and the same variance. So it might offer an explanation for the picture we see emerging here.

>>: Can you go further and couple this Poisson gets smaller?

>> Remco van der Hofstad: I guess so. But we haven't tried. But I might say a little bit more about that later on. I think that ought to be possible.

Okay. So this says something about the number of hops that you need to take in order to go from one vertex to another vertex. What now about the asymptotic weight of the shortest path?

So the sum of all the weights along the edges in the shortest weight path between the two vertices. Okay. Same assumptions. And then there turns out to be a limiting random variable W. We can explicitly compute what it is in terms of branching process martingale limits and a Gumbel distribution, such that if you look at the weight along the shortest path, that is of order a constant times log N plus a random variable.

Now, as it turns out, this constant can be equal to 0. That's precisely what happens if you have infinite variance degrees.

And if not, it's actually equal to 1 over nu minus 1 and you should think of nu as being somehow the expected offspring in a branching process approximation. I might say something more about that later on.
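In symbols, the weight result as described here can be summarized as follows. This is only a sketch of the statement: the names $W_n$ for the weight of the shortest weight path on $n$ vertices and $\gamma$ for the constant are assumed notation, not taken from the slides.

$$
W_n - \gamma \log n \xrightarrow{\;d\;} W,
\qquad
\gamma =
\begin{cases}
\dfrac{1}{\nu - 1}, & \nu < \infty, \\[2mm]
0, & \nu = \infty.
\end{cases}
$$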

Okay. So while in this setting the theorem is not that different between tau greater than 3 and tau in between 2 and 3, we see a picture emerging here which is rather different, in the sense that the weight along the shortest path will actually converge to a bounded random variable if you have infinite variance degrees, whereas it will grow like a constant times log N plus a bounded random variable in the case where the variance of the degrees is finite.

So this is connected to an explosion of the branching process that lies in the background of all of these proofs. Okay. Now I would like to compare this to what happens if you take for weights just a constant 1. So in that case it's not quite first passage percolation, but rather it is distances in random graphs.

And there the picture is rather different, in the sense that if tau is greater than 3, and this nu is greater than 1 we know the hop count grows like log N. This is a slightly different constant. This looks like the result we had before. Apart from the fact that the fluctuations here are bounded.

So where in first passage percolation you see asymptotic central limit theorems where the mean and variance both go to infinity, for graph distances you rather get a limiting law. Well, not quite, because there's no weak limit: if you take the difference between these two, it actually does not converge in distribution. But this difference is tight.

So if you look back at the picture for the Internet, where you see that the mean and the variance are alike, this is not the right picture for that. But it gets worse, because if the variance of the degrees is infinite, so tau in between 2 and 3, then in fact graph distances are much smaller. They don't grow like log N but they grow like log-log N, somehow indicating the fact that you can reach very many points in just a few hops.

And this is sometimes -- both of these results are sometimes referred to as the small world phenomenon, and in social networks it's said you can connect any two people to each other by a chain of six intermediary people and everybody along the chain knows one or two neighbors.

If you think about this and substitute in a value of N which is reasonable for the total population on the world, which is a few billion, in fact this number is something like five or -- so in that sense it might give an explanation for it.

Okay. Very weak one.

>>: Really looked like --

>> Remco van der Hofstad: Ha, that's a very good question. In fact, I don't think this is known.

>>: Not such a good question.

>> Remco van der Hofstad: Excuse me?

>>: It's not such a good question because we know the graph doesn't look at all like --

>> Remco van der Hofstad: No, there's more geometry involved. Yeah. That's true. In fact there's a lot of research going on in studying graphs which are different from the configuration model. Of course, the configuration model is an oversimplification of many real networks. So, for example, building in geometry or building in all sorts of other realistic properties that, for example, social networks have, such as high clustering, in some cases you actually do obtain similar results. But it's a bit harder. So what are the conclusions and some extensions? I'll say a little bit more about other extensions in the slides following this. This is not the final slide, despite the fact that it appears to be.

Random weights have a marked effect on shortest weight problems. It's quite nice, because there's a cool application of first passage percolation problems to the slightly supercritical [indiscernible] random graph, and there the idea is that if you look at the 2-core of the random graph, which you get by iteratively taking out vertices which have degree one, so that what remains is the largest subgraph of the [indiscernible] graph where every vertex has degree at least 2, that can be described in terms of a first passage percolation problem. The reason being that for the paths connecting the vertices of degree at least three with each other, the length is very close to a geometric random variable with a small parameter. It's almost an exponential random variable when the parameter tends to infinity.

So that's quite nice. The proof all boils down to looking at the random graph and comparing neighborhoods of the random graph to branching processes. Now somehow taking into account the behavior of the exponential random variables. There's a lot of Markovian nature in this growth process because of the memoryless property of the exponential random variables. I'll say a little bit more about that later on.

So it relies very heavily on first passage percolation results on trees, and these are continuous-time branching processes.

The funny thing is that the results are quite universal, whereas in many applications you see rather different behavior for finite variance degrees compared to infinite variance degrees. Here you see results that are quite alike.

And this also leads to a question of which I'll say a little bit more later on. Do these results, can these be extended to other processes on random graphs or other random graphs or, I mean what's the extent of universality in general for random graphs.

So one thing you might want to think about is how strongly these results rely on the fact that we're dealing with exponential random variables. Exponential random variables are very nice because of the memoryless property, which makes the growth of neighborhoods in first passage percolation very Markovian: you basically attach any of the available edges with equal probability, because they're all equally likely to be the next shortest one to be added to the first passage percolation problem.
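The memoryless property used here can be written out in two lines. This is a standard calculation, with $E, E_1, \dots, E_k$ denoting i.i.d. rate-one exponential weights (notation assumed for illustration).

$$
\mathbb{P}(E > s + t \mid E > s) = \frac{e^{-(s+t)}}{e^{-s}} = e^{-t} = \mathbb{P}(E > t),
\qquad
\mathbb{P}\Bigl(E_j = \min_{1 \le i \le k} E_i\Bigr) = \frac{1}{k}.
$$

So at any moment the remaining weights of the $k$ available edges are again i.i.d. exponentials, and each of them is equally likely to be the next one found.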

Now, how does that change if you're not working with exponential random variables but other random variables. Well the Markovian nature is gone. In fact, it turns out that even when you study such problems on the complete graph, which, of course, is a lot simpler, it's still quite difficult.

So here's an example. We look at the complete graph K_N. And the edges are all there. So every pair of vertices is directly connected by an edge. And then we do the same thing as before: we put an edge weight on it. But the edge weight has a little bit of a strange shape. So we take an exponential random variable and we raise that to the power S. If you take S equal to 1, we're back to first passage percolation with exponential weights on the complete graph. But this power S makes it substantially more difficult. In fact, you can think of this S as moderating the behavior of the weights close to 0. If you're doing first passage percolation on the complete graph you would like to take the edges with very small weights in order to go to neighbors.

For example, you start here with one vertex, let's say vertex one, and then this has N minus 1 edges coming out of it. But most of these edges actually have a weight which is relatively large.

So what you would rather -- the edges that you're most likely to use are the edges with the smallest weight.

So here's the smallest, here's the second smallest, et cetera, and the behavior of this smallest one is rather different when you vary this S variable. So the smallest one will be like 1 over N to the power S, but the density at 0 of this random variable will either blow up to infinity or it will converge to 0. Both situations are rather different from the exponential setting, where the density is basically 1 at 0.
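The behavior of the density near 0 can be checked directly. A short derivation, with $E$ a rate-one exponential, $s > 0$, and $f_s$ denoting the density of $E^s$ (notation assumed for illustration):

$$
\mathbb{P}(E^s \le x) = 1 - e^{-x^{1/s}},
\qquad
f_s(x) = \frac{1}{s}\, x^{1/s - 1}\, e^{-x^{1/s}}, \qquad x > 0,
$$

so that, as $x \downarrow 0$,

$$
f_s(x) \to
\begin{cases}
\infty, & s > 1, \\
1, & s = 1, \\
0, & s < 1.
\end{cases}
$$

The smallest of the $N - 1$ weights at a vertex equals $\bigl(\min_i E_i\bigr)^s$, and $\min_i E_i$ is exponential with rate $N - 1$, hence of order $1/N$. This gives the order $N^{-s}$ mentioned above.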

So what is the effect of that? As it turns out, you can again look at the weight and the length or the number of edges of the shortest path between two uniformly chosen vertices. If you look at the weight it again grows like log N, multiplied by some constant, which you can actually explicitly compute in terms of the gamma function. And then there's again a limiting distribution.

And also if you look at the number of hops along the shortest weight path, this is again of the order log N and the variance is also of the order log N, but rather than here being a 1, which you have for the complete graph, you get an S here and an S squared there.

Peculiar. But you always see central limit theorems. Central limit theorems in the sense that the mean and the variance of the number of hops both grow proportionally to log N with a certain prefactor.

So in a certain sense you could say that all of these weights are in the same universality class.

They all satisfy central limit theorems with mean and variance both of order log N.

It's just that the constant is a little different.

>>: Weight W is not Gaussian.

>> Remco van der Hofstad: No, but that was also not true in the previous case. So the shape of this object is actually quite a bit similar to the limiting distribution that you see in the configuration model in the sense that it's a sum of three terms, one of them being Gumbel.

>>: Question for the variance of W?

>> Remco van der Hofstad: I guess that would be the variance of this. This is a nasty beast. It's the sum of three random variables which are independent. One is a Gumbel distribution, so you'll get the variance of a Gumbel distribution, and the other two are both logs of limits of a martingale process.

A bit like the martingale limit of a supercritical branching process. And not very much is known about that. So I'm not sure whether you can get anything out of that in terms of a variance.

We know its distribution but computing anything out of it is rather hard. You may believe when you see all of these results that actually you will always get the same thing. You will always get central limit theorems. Here's an example where that is not the case. So it's again complete graph. But now we have edge weights which have entirely different behavior for the density close to 0. The density close to 0 is extremely small.

Not just small as a power of the variable, but E to the power minus an inverse power. This is just minute.

In that case you can actually show that the hop count is tight. So that means that if I take two uniform vertices, you can see that the shortest weight path connecting this vertex to the other vertex will have a bounded number of edges with high probability. And in fact the bound is some explicit constant which only depends on this A here.

Okay. So it's clear that this is a different universality class for first passage percolation on a complete graph. And it immediately raises the question what are all the universality classes?

Have we seen all of them this way or not? To reformulate it slightly:

Does the hop count always satisfy a central limit theorem with the same order of magnitude for the mean and the variance when the mean actually does tend to infinity? I don't know.

Okay. So there's a couple of promises I made that I would say a little bit more about certain things. But one of the things that interests me is whether something like this would also be true on a random graph. For example, identify the universality classes for weights on random graphs.

So you have to think a little bit about how to transfer such results. So we see that there are two behaviors. Namely, here there's a behavior where the hop count scales like log N times a constant and the variance also has size log N times a constant. So the question is: is a statement such as this, possibly with different constants here, also true if you were to do first passage percolation on the random graph, the configuration model, with these exponentials to the power S?

I would believe it would be. Okay. Then the second question, here, here we see that if we take our weights along the edges, in such a way that their density close to 0 is very small, meaning that almost all of them will be relatively large, then the hop count is tight.

So it means that basically the hop count is finite. And on the complete graph, being finite is the same as the usual graph distance. Usual graph distance is just 1 between any two vertices. So if you would do this on a random graph, would you get the same behavior for the hop count as for the graph distances if you would do first passage percolation with such weights?

In particular, looking back at the results that we see here, I would believe in this setting that the distances would grow logarithmically with bounded fluctuations. And here I would believe that the hop count would grow doubly logarithmically, which is rather different from the behavior that we see for exponentials, which I also believe to be true if you were to take a fixed power of an exponential. So the question is whether such universality holds, basically whether different behavior on the complete graph means different behavior on the configuration model, and whether such results are true. We're only at the start of understanding such problems.

Okay. So what I would like to do now is to sketch a little bit the proof of the behavior on the, on the configuration model where we have exponential weights. So indicate how a proof such as this, how that goes about.

Shall I switch off the --

>>: Want to raise the screen?

>> Remco van der Hofstad: Yeah. Is that possible? It magically appears. Thank you very much. Will this also magically disappear? Okay. Not yet. Projector will go to standby. Cool.

Okay. But it doesn't say when. But it will happen.

Okay. So what I'm trying to sketch is why it is the case that on a random graph, the hop counts of the shortest weight paths between two uniform vertices obey central limit theorems with matching means and variances that are both logarithmic. With a certain constant.

Okay. In order to understand that, one thing you need to understand is what the neighborhood in such a random graph looks like. Okay. So you take a vertex. Let's say a uniform vertex. Then I know what its degree is. So that's D_1, and the probability that D_1 is equal to K is the distribution we started out with in order to generate our configuration model. So this is the degree distribution.

And now we look at its neighbors. So these vertices. Now, what is their degree? I would guess maybe that it would be the same. But it's not. The reason being that if you attach this edge to a vertex, you attach the half edges to each other uniformly at random. So if a vertex happens to have a lot of half edges, you're more likely to connect to it. So that means that the distribution of these degrees changes. We'll denote that by B_1, and the distribution of B_1 is actually a size-biased distribution, minus 1. So if this one happens to have degree 5, then the probability of this half edge connecting to that half edge or any of the half edges of this vertex is 5, properly renormalized, for all of the vertices which have degree 5. But then for the number of outgoing edges here, I've used up one of these five edges. So there are four left.

So the thing has shifted by one. And that's why you get the K plus 1 here and the K plus 1 there.

And this is sometimes called the forward degree. It's how many edges are there apart from the edge that is being used to connect you to whatever is already, whatever you've already seen.
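The size-biasing and the shift by one can be made explicit with a small computation. The sketch below takes a degree distribution as a dictionary and returns the forward degree distribution, using the "K plus 1" form P(B = k) = (k + 1) P(D = k + 1) / E[D], together with the parameter nu defined earlier in the talk. The function names and the dictionary representation are assumptions made for illustration.

```python
def forward_degree_distribution(p):
    """Map {k: P(D = k)} to {k: P(B = k)}, the size-biased law minus one.

    P(B = k) = (k + 1) * P(D = k + 1) / E[D].
    """
    mean = sum(k * pk for k, pk in p.items())
    return {k - 1: k * pk / mean for k, pk in p.items() if k >= 1 and pk > 0}


def nu_parameter(p):
    """nu = E[D (D - 1)] / E[D], the mean of the forward degree."""
    mean = sum(k * pk for k, pk in p.items())
    return sum(k * (k - 1) * pk for k, pk in p.items()) / mean


# Illustrative degree law (an arbitrary choice): degree 2 or 3 with equal probability.
p = {2: 0.5, 3: 0.5}
forward = forward_degree_distribution(p)
nu = nu_parameter(p)
```

In this example the forward degree puts more mass on the larger value than the original degree law does, which is the size-biasing effect described above, and the mean of the forward degree coincides with nu.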

Now, the nice thing about this process is that if I look at the degree of this one, it's going to be hardly affected by the knowledge of this degree and that degree.

And the same is true for this one and that one. So they all have close to the same distribution independently of each other. So it's very much like a branching process. But it's a two-stage branching process. So the degree of the root of the branching process is different from the degree of any of the subsequent vertices. So the degree of the root is this. And the degree of any of the subsequent vertices is that.

Okay. So this is for the usual neighborhood, the usual configuration model. Now we put exponential weights on these edges. And we start exploring the neighborhood process of first passage percolation on such a random graph. If this is close to a tree, then the first passage percolation on it should be close to the first passage percolation on a Galton-Watson tree. Can we understand the first passage percolation on a Galton-Watson tree? In particular, what is the distribution of distances in such a first passage percolation problem? So let's first think about that for a little while. First passage percolation on a Galton-Watson tree.

>>: You want the percolation on a regular tree? Because the neighborhood looks like --

>> Remco van der Hofstad: Doesn't really matter. You could but --

>>: But the trees, the weight distribution, why wait?

>> Remco van der Hofstad: So I mean a regular tree would correspond to my distribution. This distribution having a single atom. That's a special case. Right.

Okay. So what do we do? We first study first passage percolation on a Galton-Watson tree. And what we're interested in is distances. So the number of edges between vertices in such a tree and the root.

Okay. So let G sub M be the generation of a uniform vertex for first passage percolation on this tree when it consists of M vertices.

So how does this go? We start with our root. Then one by one we grow the first passage percolation tree that is attached to it. So this will be a minimal edge. And now M is 2. There are two vertices. And then there will be the second edge that is attached to it, which might be this one or it might be that one. Suppose it's this one. Now M is 3. There are three vertices here.

And we iterate, and we again draw an extra edge.

Here there are in total, let's say, three edges, so of course we can never get more than three neighbors in the first passage percolation tree. So we do this one by one, and we end up with a tree, something like that. And when the size is the size we're interested in, let's say M, we stop.

Now we draw a uniform vertex out of this tree and we look at how many layers deep it is. That's the game.

Okay. Now suppose that the degree, or the maximal number of children, of the i-th vertex equals d_i. This is just a parameter. So what this means is that here I'm drawing the edges that I successively find using my first passage percolation. But the first passage percolation lives on a tree.

So there are certain edges here that have not been found yet by first passage percolation up to this size. Let me draw them with dots, like that. I don't know how many there are. There's probably going to be more down here than there are in the beginning where more of the edges have been found.

Therefore, the degree of this vertex, the D, the little d of the vertex, in this case d of the root is equal to 3. The maximal number of children it can have in the tree.

Okay. Then there's the following result, that this G_M variable has the same distribution as the sum from i is 1 up to M of random variables I_i, where these are independent indicators with this probability that I_i is 1.

This is not obvious. This is, of course, very nice because it describes the level or the generation of a uniform vertex in the first passage percolation process when you've found M vertices as being a sum of independent indicators, of which the probabilities actually go to 0. So suppose that these d_i's are all a bit alike, then what you see here is basically some constant, and here you see a constant times i. So this will be of the order 1 over i.
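The slide with the indicator probabilities is not part of the transcript. One reconstruction that is consistent with the spoken description (a constant on top, a constant times $i$ minus $i - 1$ below) is the following; the symbol $s_i$ for the number of edges not yet found after $i$ vertices is assumed notation, and the identity is read here for the endpoint of a uniformly chosen not-yet-found edge.

$$
G_m \overset{d}{=} \sum_{i=1}^{m} I_i,
\qquad
\mathbb{P}(I_i = 1) = \frac{d_i}{s_i},
\qquad
s_i = d_1 + \dots + d_i - (i - 1).
$$

If all $d_i$ are equal to a common value $d$, this gives

$$
\mathbb{P}(I_i = 1) = \frac{d}{i\,d - (i - 1)} \approx \frac{d}{d - 1} \cdot \frac{1}{i},
$$

which is of order $1/i$, as stated.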

So, for example, if you were to look at the expectation of G_M, this is the same as the sum from i is 1 to M of d_i over --

>>: When you say the i-th vertex, is that the i-th vertex reached by the first passage percolation?

>> Remco van der Hofstad: Precisely, yeah. And this is roughly going to be the sum from i is 1 up to M of 1 over i, times a constant. Let's call this nu over nu minus 1.

All right. If they would all be equal, then it would be precisely something here, and the same something here times i, minus i minus 1. We would get precisely this. Now, if these were to be random variables that don't fluctuate that much, then something like that is still going to be true.

So this is nu over nu minus 1 times the sum from i is 1 up to M of 1 over i, and that sum is very close to log M.

So that's one realization. The second realization is that these probabilities go to 0. So we get a sum of indicators of which the probabilities tend to 0. That's closely related to a Poisson random variable, where the mean and variance are equal. This indicates why this G_M satisfies a central limit theorem: G_M minus a certain constant, in this case nu over nu minus 1, times log M, divided by the square root of the same quantity, converges to a standard Gaussian. So at least we see some of the results that we were seeing for the configuration model, we see them recurring here in the sense that there's a logarithmic growth of the mean and of the variance, with the same prefactor. Okay.

So this lemma gives a lot of hints as to where the result that we've proved comes from. As it turns out, the proof of this lemma is not extremely hard. It just works by induction.

The reason being that the probability of attaching -- I mean if you grow the tree from one to one level higher, you're equally likely to pick any of the dotted edges.
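The induction step can be illustrated with a small simulation. The sketch below grows a tree by repeatedly picking one of the alive (dotted) edges uniformly at random, and compares the average generation of a uniformly chosen alive vertex with the sum of d_i / s_i, where s_i = d_1 + ... + d_i - (i - 1). That formula is an assumption about the lemma's exact form, and all names are hypothetical.

```python
import random

def mean_generation_of_uniform_alive_vertex(degrees, runs, seed=0):
    """Monte Carlo average generation of a uniform alive vertex.

    degrees[0] is the number of children of the root; degrees[i] is the number
    of children given to the (i+1)-th explored vertex. Degrees should be >= 1
    so that the set of alive vertices never becomes empty.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(runs):
        alive = [1] * degrees[0]            # generations of the root's children
        for d in degrees[1:]:
            # Uniform choice among the alive edges, removed by swap-and-pop.
            k = rng.randrange(len(alive))
            alive[k], alive[-1] = alive[-1], alive[k]
            g = alive.pop()
            alive.extend([g + 1] * d)       # its children sit one level higher
        total += rng.choice(alive)
    return total / runs

def indicator_sum_mean(degrees):
    """Sum of d_i / s_i with s_i = d_1 + ... + d_i - (i - 1) (assumed form)."""
    s = 0
    total = 0.0
    for i, d in enumerate(degrees):
        s += d if i == 0 else d - 1
        total += d / s
    return total

if __name__ == "__main__":
    degs = [2, 4, 3, 2, 5] * 6
    print(mean_generation_of_uniform_alive_vertex(degs, 20000), indicator_sum_mean(degs))
```

The agreement reflects the induction: a uniform alive vertex is one of the d_m newest children with probability d_m / s_m, in which case its generation is one more than that of a uniform alive vertex from the previous step.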

Okay. Right. So how can this be translated in terms of random graphs? Yuval was already asking something about that during the break.

So suppose I have two vertices. Well, I was trying to convince you here of the fact that if you look at the neighborhood of a vertex, that looks very much like a tree. Of course, that can't be true all the way, because at a certain moment the neighborhood process is going to realize that it lives on a finite graph. So in order to make a connection between the two vertices, what works best and easiest is to let something grow from here and let something grow from here until they meet.

So you start doing first passage percolation from this point. Oh, what it would look like. And you start doing it from here too. In fact, we have a complicated way of how we do this, which is for technical reasons. And then at a certain moment what will happen is that one of the edges chooses an edge there. And now you have found the shortest weight path.

So that also means that the number of hops in this path is just going to be this number of hops plus this number of hops, plus 1. So the hop count is going to be the number of hops within this tree plus the number of hops in the second tree, plus 1. Now, if this were just a uniform vertex here, then by the lemma we know that the number of hops here would satisfy a central limit theorem. And the number of hops here would also satisfy a central limit theorem. Also, because these are making use of disjoint vertices and disjoint edges, the exponential random variables here are also independent.

So therefore the two normal limits are going to be independent. And what you'll see is that the total number of hops, this one plus that one, will also satisfy a central limit theorem, with variance equal to the sum of the two variances of the underlying objects. Yes?
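In symbols, the decomposition can be sketched as follows. The tree sizes m_1 and m_2 at the moment of connection and the superscripts (1), (2) are notation introduced here for illustration, and the sizes are treated as fixed, which is a simplification.

```latex
\[
H_n \;=\; G^{(1)}_{m_1} + G^{(2)}_{m_2} + 1,
\qquad G^{(1)}_{m_1} \text{ and } G^{(2)}_{m_2} \text{ independent},
\]
\[
\mathbb{E}[H_n] \approx \frac{\nu}{\nu-1}\bigl(\log m_1 + \log m_2\bigr), \qquad
\operatorname{Var}(H_n) = \operatorname{Var}\bigl(G^{(1)}_{m_1}\bigr) + \operatorname{Var}\bigl(G^{(2)}_{m_2}\bigr)
\approx \frac{\nu}{\nu-1}\bigl(\log m_1 + \log m_2\bigr).
\]
```

Since each summand is asymptotically normal and the two are independent, the sum is asymptotically normal with the summed mean and variance.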

>>: H_n and H_n tilde were two different symbols in the first half.

>> Remco van der Hofstad: Yes. The H_n tilde was for graph distances. So you look at which are the vertices that you can reach within a finite number of steps. This is for first passage percolation. So the edges have an exponential weight on them. This lemma is only true for first passage percolation. This is not going to be true for graph distances. In fact, there you'll see something appearing in the graph distances on the configuration model.

If you would do the same thing here with weight one and if you would pick a uniform vertex, then the height of that uniform vertex will be something logarithmic, and the fluctuations will be bounded.

The reason being that this Galton-Watson tree grows exponentially. If you pick a vertex, it's going to be extremely close to the boundary of the tree. And that's precisely what we were seeing in the theorem for the H_n tilde, which were the graph distances, namely that the growth is logarithmic and that the fluctuations are bounded. So thanks.
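The "close to the boundary" effect can be seen in a toy computation. The sketch below replaces the Galton-Watson tree by a deterministic nu-ary tree cut at a given height, which is a simplifying assumption, and computes the mean and variance of the distance between a uniform vertex and the deepest level. All names are illustrative.

```python
def depth_distribution(nu, height):
    """Depth distribution of a uniform vertex in a nu-ary tree cut at `height`."""
    sizes = [nu ** k for k in range(height + 1)]   # number of vertices per level
    total = sum(sizes)
    return [s / total for s in sizes]

def distance_from_boundary_stats(nu, height):
    """Mean and variance of (height - depth) for a uniformly chosen vertex."""
    probs = depth_distribution(nu, height)
    mean = sum((height - k) * p for k, p in enumerate(probs))
    second = sum((height - k) ** 2 * p for k, p in enumerate(probs))
    return mean, second - mean ** 2

if __name__ == "__main__":
    for height in (10, 20, 40):
        # The depth itself grows with the height, but the gap to the boundary
        # keeps a bounded mean and variance.
        print(height, distance_from_boundary_stats(2, height))
```

In this toy model the gap to the boundary is close to geometric, so its fluctuations stay bounded while the height grows, in contrast with the logarithmically growing variance in the first passage percolation case.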

Okay. So this one obeys the central limit theorem. This one obeys the central limit theorem, both growing like log n. And therefore the sum also obeys the central limit theorem. That's how the proof goes.

Now, it's a bit tricky. The reason being that I'm sort of cheating a bit here. I'm saying, well, you know, this m will be fixed. It's not. m is actually random. The time at which you make this connecting edge in between the two trees, and the size of this tree or that tree at that moment, will actually be random. And that's how the -- I mean, the distribution changes because of that, not so much for the hop count but definitely for the weight of the path. And this connection time is related to a Gumbel random variable or an exponential random variable. That's why such a Gumbel random variable appears.

>>: What kind of random variables? Gumbel?

>> Remco van der Hofstad: It was German, I think.

>>: Can you write down the formula for it?

>> Remco van der Hofstad: I'll have to think about it. It's the limiting distribution of the maximum of exponential random variables. It's a double exponential random variable. So let me see whether I can do this.

So let's call this G because of Gumbel. Plus X. Huh? Excuse me? Is that correct? Yeah. So this is the same thing as the log of an exponential random variable. Okay.
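For reference, the standard Gumbel distribution can be written as follows. The sign convention on the board may have differed, so this is the textbook form rather than a transcription; E and E_1, ..., E_n denote i.i.d. exponential random variables with mean 1.

```latex
\[
\mathbb{P}(G \le x) = \exp\bigl(-e^{-x}\bigr), \qquad x \in \mathbb{R},
\]
\[
\max_{1 \le i \le n} E_i - \log n \;\xrightarrow{d}\; G,
\qquad\text{and}\qquad
-\log E \overset{d}{=} G,
\]
\[
\text{since } \mathbb{P}(-\log E \le x) = \mathbb{P}\bigl(E \ge e^{-x}\bigr) = \exp\bigl(-e^{-x}\bigr).
\]
```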

>>: You have that little mark on the back, I guess just before the X. [indiscernible].

>> Remco van der Hofstad: This one, you mean?

>>: No, the X on the right-hand side. [laughter].

>> Remco van der Hofstad: This one. So that's what I would like to say.

[Applause].

>>: In this analysis you didn't use that it's exponential, right?

>> Remco van der Hofstad: This is only true for exponential random variables.

>>: Only true for exponentials?

>> Remco van der Hofstad: Yes. Something similar might be true. But I don't think anything as simple as this. Not the sum --

>>: Your power-law distributions, you need some other -- there's another technique.

>> Remco van der Hofstad: So, no, for the power-law distributions, when you have exponential weights, this will be true, but then the d_i's will have the size-biased degree distribution. But if you were to take another weight along the edges, for example, a power of an exponential, then this result is not going to be true. So life will be much more difficult. That doesn't say that it's impossible. But it's definitely more difficult.

>>: The reason the exponentials are good is that otherwise this exploration doesn't really, you could -- it's not that you always take the minimal edge. It stops being invasion, right?

>> Remco van der Hofstad: It is invasion.

>>: I'm sorry?

>> Remco van der Hofstad: It is invasion.

>>: Of the exponential.

>> Remco van der Hofstad: Right.

>>: Otherwise it's not. That would --

>> Remco van der Hofstad: It's still invasion. But you have to remember how much weight you've already used up of a certain edge. And if you have exponential random variables, by the memoryless property, the overshoot, the amount still remaining, is again exponential. That's true for every one of the edges, and therefore you're equally likely to take any of the edges. That's what makes the exponentials nice. And that's the reason behind this key lemma.
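The memoryless argument can be checked numerically. In the sketch below, each edge has already been "used up" for a different amount of weight; its total weight is drawn as a mean-1 exponential conditioned to exceed that amount, and we record which edge has the smallest remaining weight. The function name and the elapsed amounts are hypothetical choices for illustration.

```python
import random

def next_edge_frequencies(elapsed, trials, seed=0):
    """Frequencies with which each edge has the smallest remaining weight.

    elapsed[i] is the weight already used up on edge i. The edge weight is
    Exp(1) conditioned to be larger than elapsed[i].
    """
    rng = random.Random(seed)
    counts = [0] * len(elapsed)
    for _ in range(trials):
        remaining = []
        for t in elapsed:
            w = rng.expovariate(1.0)
            while w <= t:                   # rejection sampling: condition on w > t
                w = rng.expovariate(1.0)
            remaining.append(w - t)         # overshoot beyond what was used up
        counts[remaining.index(min(remaining))] += 1
    return [c / trials for c in counts]

if __name__ == "__main__":
    # Despite very different elapsed amounts, each edge should be chosen
    # roughly one third of the time.
    print(next_edge_frequencies([0.0, 0.5, 2.0], 30000))
```

With non-exponential weights the overshoot distribution would depend on the elapsed amount, and the frequencies would no longer be equal.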

>>: What do you do when you have powers of exponentials?

>> Remco van der Hofstad: We can only do that on the complete graph. And then we relate it to a continuous-time branching process. So on a random graph that would be harder.

>>: Do you somehow record how much weight.

>> Remco van der Hofstad: Right. Right. So you have to write down --

>>: That's the tail of [indiscernible] greater [indiscernible].

>> Remco van der Hofstad: This one? [laughter].

>>: That means the opposite.

>> Remco van der Hofstad: If X turns to minus -- oh, yes, right.

>>: Unless it's a negative.

>>: So explain what you're doing in the case of variables have -- so you're not on the complete graph but the variables have --

>> Remco van der Hofstad: A power law.

>>: -- a distribution.

>> Remco van der Hofstad: So in that case, the neighborhood of a vertex looks very much like a tree. Now here I actually didn't make any claims about what the distribution of the d_i's is. So this actually works conditionally on the degrees, if you have a tree.

>>: If you have exponentials.

>> Remco van der Hofstad: If you have exponentials yes.

>>: I see. And the powers of exponentials?

>> Remco van der Hofstad: For the powers of exponentials we only have results on the complete graph. That's already hard enough. I would love to do that on a configuration model. But I believe it to be a really difficult problem.

>>: Distributions with power-law tails.

>> Remco van der Hofstad: For the degrees.

>>: So switching -- that's where I'm confused.

>>: Do you have any conjecture of what criterion on the density at 0 would yield a CLT, or HM goes to 0 on the complete, HM goes to infinity on the --

>> Remco van der Hofstad: I think the example I gave you is really the critical one.

>>: E to the minus 1 over --

>> Remco van der Hofstad: Yeah. So if you have weights of this nature, I can explain to you why. If you have weights of this nature -- so the weights are always positive and X is a very small number, that's how you should think of this.

All right. Okay. So the whole idea is that if you have exponential weights on the complete graph, the smallest weights along the edges are going to be of order 1 over n. So you're quite happy taking a log number of such random variables, because the gain in using them is 1 over n. That vastly beats the factor log n that you need to take by taking many hops.

That's the idea. Now, if you look at the minimum of n of such random variables, it's not a power of n, but it's a power of log n. So if you take the minimum over i from 1 up to n of X_i, where the X_i are i.i.d. and have this distribution, it's, I don't know, a random variable times 1 over log n to the power of 1 over a, if I'm not mistaken.
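The scaling of the minimum can be sketched numerically, under an assumption about the weight distribution that is not stated in the transcript: here X = E^(-1/a) with E a mean-1 exponential, so that P(X <= x) = exp(-x^(-a)). Under that assumption the minimum of n weights equals the maximum of n exponentials raised to the power -1/a, and the maximum can be sampled directly by inverting its distribution function. All names are illustrative.

```python
import math
import random

def sample_min_weight(n, a, rng):
    """One sample of min(X_1..X_n) for the assumed weights X = E ** (-1/a)."""
    u = rng.random()
    while u == 0.0:                          # avoid log(0)
        u = rng.random()
    # Max of n Exp(1) variables by inversion: (1 - exp(-x)) ** n = u.
    max_exp = -math.log(-math.expm1(math.log(u) / n))
    return max_exp ** (-1.0 / a)

def rescaled_min(n, a, samples, seed=0):
    """Average of min(X_i) multiplied by (log n) ** (1/a)."""
    rng = random.Random(seed)
    scale = math.log(n) ** (1.0 / a)
    mean = sum(sample_min_weight(n, a, rng) for _ in range(samples)) / samples
    return mean * scale

if __name__ == "__main__":
    for n in (10**3, 10**6, 10**12):
        # Stays of order one, so the minimum is of order (log n) ** (-1/a).
        print(n, rescaled_min(n, 1.0, 5000))
```

For comparison, with plain exponential weights the minimum of n of them is exactly exponential with mean 1 over n, which is the polynomial scaling mentioned for the exponential case.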

Here it becomes more tricky. Is it best to take minimal edges and have a long path, or is it better to take a short path and then live with the fact that along these edges, the weights are not going to be so small?

Here, because of the fact that this is just a power of log n, multiplying by a log n is actually not so good.

In fact, what you can show is that there's a two-step path that would actually reach this with a certain constant. And also the minimal edge along the whole graph, in the whole complete graph, will be of this nature. So you're never going to take a log n number of steps, because then you're log n larger than you would be by just taking a two-step path. That's why the hop count is going to be tight.
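A small simulation can illustrate the two-step claim, again under the assumption (not stated in the transcript) that the weights are X = E^(-1/a) with E a mean-1 exponential. The sketch draws fresh weights for the edges from vertex 0 to k and from k to vertex 1, takes the lightest two-step path, and rescales by (log n)^(1/a). The names and parameters are hypothetical.

```python
import math
import random

def assumed_weight(a, rng):
    """Edge weight X = E ** (-1/a), E ~ Exp(1); this law is an assumption."""
    e = rng.expovariate(1.0)
    return math.inf if e == 0.0 else e ** (-1.0 / a)

def best_two_step_weight(n, a, rng):
    """Lightest path 0 -> k -> 1 over the n - 2 intermediate vertices k."""
    best = math.inf
    for _ in range(n - 2):
        best = min(best, assumed_weight(a, rng) + assumed_weight(a, rng))
    return best

def mean_rescaled_two_step(n, a, runs, seed=0):
    """Average best two-step weight multiplied by (log n) ** (1/a)."""
    rng = random.Random(seed)
    scale = math.log(n) ** (1.0 / a)
    mean = sum(best_two_step_weight(n, a, rng) for _ in range(runs)) / runs
    return mean * scale

if __name__ == "__main__":
    for n in (500, 5000):
        # Of order one after rescaling: the two-step path already achieves
        # the (log n) ** (-1/a) scale, up to a constant.
        print(n, mean_rescaled_two_step(n, 1.0, 20))
```

A path with about log n hops would cost at least log n times the smallest edge weight in the graph, which under this assumption is itself of order (log n)^(-1/a), so it cannot compete with the two-step path.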

Now, if this were to go to 0 faster than a power of log n, then it might actually be worthwhile taking a longer path, and probably if you take a longer path there will be a CLT. That is something we don't know. That would be my guess. Does that explain the intuition a little bit? Okay.

>> David Wilson: Do we have any more questions? Okay. Let's thank Remco again.