>> Nikolaj Bjorner: Okay. It's my pleasure to introduce Assaf Schuster. He will talk about resource allocation in the cloud, using ingredients of culinary delight. Take it.

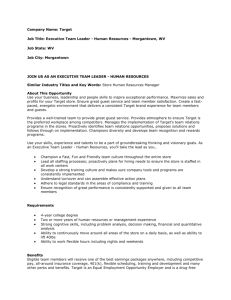

>> Assaf Schuster: Hello, everybody. So I'm going to talk about the work that we did together with a few of my students and colleagues, Orna Agmon Ben-Yehuda, Muli Ben-Yehuda, Eyal Posener and Ahuva Mu'alem, which has to do with resource allocation in the cloud. Basically, we are looking at several trends in infrastructure as a service, and when looking at these trends, we see that they culminate in one solution that we call resource as a service. This resource as a service is the topic of my talk today, which will be split into two halves.

The first half, I am going to motivate the resource as a service solution, and in the second part, I am going to show you some -- let's say an excerpt of the resource as a service, our implementation of the part that is doing memory management. All right, so let's look at these trends. The first trend has to do with shrinking duration of rent. We can see that the leading cloud providers today already rent resources at a time granularity of minutes. And this trend of shrinking durations has been going on for several years, and the analogy we see is to car rental, which recently, with initiatives such as Car2Go, shrank from a granularity of days to a granularity of hours. And also the telecom companies, which used to give us lines and charge us by the minute, and due to the clients' pressure and also sometimes due to court orders, they shrank the charging durations to seconds. So the general tendency that we see here is that when technology improves, the clients get more and more reluctant to pay for the time that they don't use resources, and so the providers, to make the clients happier, try to shrink the durations of rent and match them with the clients' expectations. So we extrapolate that, eventually, cloud resources, because technology can allow it, will be rented by the second. So you are going to rent certain resources in the machine -- you are an application, you run in a certain machine, you need extra bandwidth, you need extra memory, you need an extra core, you are going to rent it for a few seconds. You're going to be charged by the second, and this is something that technology can do.

A second trend is flexible resource granularity, and we can see that more and more cloud providers make it possible for clients to change the configuration of the bundle of resources that they rent, and to adapt them to the client needs.
We think that this is not enough, because obviously, a constant and fixed combination of resources will never be able to match the needs of elastic and scalable applications. So we see a trend towards our conclusion, and our conclusion is that, eventually, clients will be able to rent only a basic bundle, a core bundle that will allow the application to load, and only that. While the application is running, it's going to rent resources on the fly according to different considerations that we are going to see in the rest of the talk. So until now we saw two trends that have to do with the clients, with the applications and the people who rent resources in order to run those applications, but the providers of the cloud have their own consideration. The most important consideration of the cloud provider is to overcommit.

Overcommitment means that they want to put as many applications as possible on the same machine. Overcommitment means that you can take a cloud of 2 million machines and, if you can overcommit by a factor of two, make it a cloud of only 1 million machines, and this has a large implication for the expenditure of the provider on the infrastructure, and also for the pollution that this cloud is going to inflict on the world. So in order to overcommit, what the provider is doing is taking rich customers, rich applications, that value their operation very highly, and matching them together with those low quality of service, poor clients that cannot pay a lot for the resources, so that when the rich clients need the resources, the provider is going to take those resources from the poor clients and give them to the rich. So, in a way, it's acting as Robin Hood in reverse. It's taking from the poor and giving to the rich. But the rich clients don't always need the resources and are not always willing to pay for them, and so from time to time, some headroom clears for the poor guys to jump in and utilize the idle resources. Some researchers argue for selling performance and not selling resources. We think that this is impossible. Even if the clients let you look at their applications as if they were white boxes, even if they let you hook into the application and measure whatever you like and whatever you find interesting, still there are going to be too many issues with the exact measuring of performance, such as who to blame when performance is not good enough, and so on, which makes it impossible to implement. On the other hand, in the more likely event that clients are going to remain black boxes and you need to measure the performance that they have, it is very easy to manipulate the host and fake resource stress, and so we don't think that this is a feasible solution, either.
So the conclusion here is that infrastructure as a service providers cannot sell performance. They have to keep selling resources.

>>: So a disk IO and disk space is a clear resource, but CPU utilization is also a resource? Does that correlate with performance?

>> Assaf Schuster: Yes, CPU by the cycle is a resource. You can measure how high the CPU utilization is, and say that the application is utilizing a resource that you call a core, and its utilization is very high. But sometimes, applications have complementing resources, so for instance, you get a very large memory, and you need another core to support it. And sometimes, there are alternating resources, so you can either get a lot of memory and use it as a cache, or you can use a core to recompute intermediate results again and again. So this whole thing is becoming quite complicated, as we'll see in a moment.

>>: You're only making that argument, though, for infrastructure as a service, not platform as a service, right?

>> Assaf Schuster: Yes. I'm only making it for infrastructure as a service.

>>: So why is PaaS different from IaaS in this case?

>> Assaf Schuster: From?

>>: Why is platform as a service different from IaaS? Why does this method not apply to PaaS?

>> Assaf Schuster: Because in platform as a service, you sometimes have already a software stack that you supply. So it may be a white box which was already measured and the provider may already know precisely what is going on everywhere. So I'm talking about the cloud. In infrastructure as a service, some of the claims may apply to platform as a service, but I don't want to claim anything that I did not check. Okay, all right. So another trend, if we are going to rent resources, then service-level agreements. We see more advanced cloud providers allowing customers to make service-level agreements that are tiered in the quality of services they provide. So, for instance, we speculate that very shortly, clients will be able to rent resources according to their needs, according to their temporary needs for [indiscernible]. So, for instance, when the client wants a certain resource with availability for 90% of the time or for 80% of the time, we already have the technology. The cloud providers already have the technology to ensure such service-level agreements, and we don't think that it will happen in the far future. All right, so if you think that all this does not already affect cloud providers, here's a chart from 2012 showing that cloud providers reduce their prices in a correlated way, and some would say even in a synchronized way. So all of them reduce the prices at the same time. In this chart, you can see that on March and between November and December 2012, everybody reduced their prices together. So what about the providers and how are the providers and the clients going to implement everything that we just discussed? So we think that in order to compute everything that is needed for implementing such dynamic and such a fine granularity environment, there's a lot of very complicated decisionmaking that has to be made. 
And so both clients that will be running on top of the cloud as guests or as virtual machines are going to employ a decisionmaking core, and the host is going to have a decisionmaking agent, and they are going to interact between themselves. Basically, the architecture that we see is as follows, so there is an application. It has some strategic IaaS agent, and the first element of the strategic agent is to -- the first action of the strategic agent is to measure the performance or the type, so it has a performance measurement part that is also estimating -- that is also in charge of analyzing historical data and estimating the future performance that it's going to gain by renting or discharging certain resources. And it also has a valuation of that performance. The performance can come in terms of transaction per second for database application or hits per second for memory applications, for memory caching application and so on. And there's also some valuation in terms of actual money, actual dollars, for the performance, meaning that if you have

1,000 more hits per second, how much it is going to be worth. This valuation is required so that the strategic agent will know how much it can offer for renting a certain resource. And then the strategic adviser that is collecting all this data is going to decide whether it wants to rent certain resources, the quantities of the resources that it wants to rent and for what price. And, of course, the result of the strategy is then communicated to the host. The host agent has a decisionmaker part which collects all of these. We still call them decisions, but they are going to be bids in a moment. It collects all of these requests from the different guests. It processes everything, taking into account also the global picture, which of the guests is a richer client, who can pay more and who can pay less, and there is a budget, whether the clients on this machine should be split into two machines or more, and the pressure on the other machines and so on.

The decisionmaker takes everything into account, and eventually its decisions are communicated to the resource controller, which controls the allocation of resources. And we think that this is going to happen on the fly every few seconds, this decision procedure. So the guest agent changes the desired amount of resources on a second-by-second basis. It negotiates all the time, so I told you I was talking previously on the complementing resources and the alternating resources that the application can have. It takes all this into account -- suppose it got a lot of memory by a steal of a price, and now it looks to rent another core, and it is willing to pay a little more for that core in order that it can make use of that memory that sits there and waits for it to make use of it. And also, we trade in the future market and it sublets, and it's doing everything that a selfish, rational agent of a guest -- and I'm talking here, when I say guest, I mean the application, I mean the virtual machine. These all are synonyms for me. And what does a host agent do? It is market driven, it has a view of everything that is going on. It attempts to increase. Its most important task is to increase the overcommitment while keeping the clients happy, and I'm going to add here some terminology, so it wants to keep the social welfare as high as possible. So in summary of the first part of the talk, so we are going to look into dividing resources among selfish black box guests, and the method, approach, of course is to give more to guests who will benefit more. And the problem, the hardest problem, will be to understand, for the host to understand, what are the guest benefits from the additional resources? Because the guest would like to give the resources to those guests -- the host would like to give those resources to the guests who benefit the most. And of course, we turn our look into auction theory, and we think, okay, theory is going to save us. 
Unfortunately, when we really try to implement, what we find, we get into a lot, a lot, a lot of issues, which of course remind us that in theory there's no difference between theory and practice, but in practice there is. All of these problems pop up. Let me just give you one example. So, for instance, suppose you want to devise an auction for splitting the bandwidth between the clients, so you run the auction, some of the clients get some bandwidth, some get less, and when you start running and when you start executing, all of the sudden it turned out that those that got a lot of bandwidth do not need so much, but those that did not want to pay that much for the bandwidth, all of the sudden, they need more bandwidth than they received. So why not take the leftovers from those that got enough and give them to those that got less than they need? So one way to do it will be, of course, to run yet another auction, a second-level auction, that would do a sublet, that would act as a sublet between the richer guys and the poorer guys. Unfortunately, with incoming traffic and all the packets that are coming in, there's no time for that. There's no time in between the

auctions that we already do every few seconds to do yet another level of auctions. Okay, so you're saying, okay, so the host, the provider, is going to do that. It's going to give the extra leftovers to those who need them. But immediately, when you do that, the whole theory and all the assumptions of the theory of auctions collapse, and all the good properties that we will see in a moment that we attribute to auction theory do not hold anymore. So if you want truthfulness, it's not there anymore and so on. All right, so this is going to begin the second part of my talk, about Ginseng. Here, I'm going to delve into the details and try to show you an implementation that we built at the Technion of the part of resource as a service that is dealing with memory. All right, so everything is the same in the architecture, except that now we have the memory controlling mechanisms instead of the general resource controlling mechanisms that we had previously. Okay, so we are going to focus on memory, and this is a work that is going to be presented next week in Salt Lake City. All right, so designing a good allocation mechanism. This is why Vickrey, Clarke and Groves devised what we call the VCG auction. So let us review VCG auctions. Guests bid with their valuation of the good, how much it's worth for them, subjectively, so every guest says how much it's worth for him to get the goods.

The auctioneer finds the allocation that maximizes social welfare, which we call SW here. The social welfare is how happy everybody is with the results. The allocation should be such that everybody is very happy, because the valuation of what they received relative to the price that they paid is higher. The auctioneer charges guests according to the exclusion compensation principle. What is the exclusion compensation principle? Consider a certain guest, guest I. When you run the auction without guest I, the other guests get more of the goods than they would get when I is participating in the auction. So you compare the utility, the total utility of the other guests when

I is not in the auction to the total utility that the other guests get when I is in the auction and the difference is called the exclusion compensation principle. So in other words, guest I is going to pay the damage that it inflicts -- that its presence in the auction caused the other guests. That's the payment that guest I is going to pay. All right, so turned out that these VCG auctions have a very good property. Guests bid the real types or the real valuation, the true valuation of the good, regardless of what any other guests do. And this is actually something that you want to happen in our cloud, because if guests will be able to manipulate the cloud provider by telling it different valuations than they actually have -- remember, guests are selfish and they are black boxes. As a provider, we rely only on what they say, so we want to incentivize them to say the truth about the valuation of the resources that they are going to buy. All right, so this was a review of VCG, and in 1999, Lazar and Semret devised a generalization of VCG to divisible goods. They were actually devising this for -- they were working in Bell Labs, and they were designing this for bandwidth allocation, so they decided it's a good idea to auction for bandwidth, to auction repeatedly for bandwidth, and they devised a generalization of VCG auctions that is called progressive second-price auction, PSP in short. How does PSP work? Guests bid with a required quantity and unit price. They do not have to reveal the full type, so they do not have to reveal for every quantity that they will get how much they are willing to pay. Only for the

quantity that they send. Okay. And the guests are sorted, and what does the auctioneer do? It sorts the guest by the bid price to be allocated with the desired quantities, and after sorting the guests by the bid price, it certainly allocates to the guests that bid the highest. And, of course, guests are charged by the exclusion compensation principle that we saw in the VCG, and this turned out to be a very successful generalization of VCG, because they were able to show that this auction is incentive compatible in the sense that all the guests, the best course of action, the best strategy for the guests, is to bid the truth, is to say the truth about their valuation of the quantity of the bandwidth that they want to purchase. So, of course, one may ask why not improve that, and the work with multibid auctions, and indeed, Maille and Tuffin in 2004 extended progressive second price auctions to multibid auctions. They proved that everything that holds for progressive second price also holds for the multibid. It converges to the same social welfare, it provides the same social welfare, and it's incentive compatible. It converges to the same epsilon Nash equilibrium and so on, so it seems like it is more efficient. However, if you think about it a little bit, it is not really needed in dynamic systems, where the configuration changes from time to time and you have to do repeated auctions every few seconds again and again anyway. So if you do repeated auctions every few seconds and you don't get a single bid that you submitted, then you can try a different bid, and you don't need multibid auctions. On the other hand, if you do want multibid auctions, you'll have to deal with a much higher computational overhead on the auctioneer, which is indeed quite problematic when you are talking about a few seconds long auctions. Okay, so we are going to not talk about multibid auctions, and we are going to see if progressive second price, if PSP, answers what we need. 
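
As an illustration only (not code from the talk), the PSP rule described above can be sketched in a few lines of Python: each guest submits one quantity and one unit price, the auctioneer serves bids from the highest price down, and each guest pays the loss that its presence causes the others. The `Bid` class, the function names, the integer units, and the choice to value the other guests' loss at their own bid prices are all assumptions made for this sketch. Ties are not handled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    guest: str        # hypothetical guest identifier
    quantity: int     # units of the good requested
    unit_price: int   # price offered per unit


def allocate(bids, capacity):
    """Serve bids from the highest unit price down until capacity runs out."""
    allocation = {}
    remaining = capacity
    for bid in sorted(bids, key=lambda b: b.unit_price, reverse=True):
        granted = min(bid.quantity, remaining)
        allocation[bid.guest] = granted
        remaining -= granted
    return allocation


def exclusion_compensation(bids, capacity):
    """Return (allocation, payments); each guest pays what its presence costs the others."""
    allocation = allocate(bids, capacity)
    payments = {}
    for bid in bids:
        others = [b for b in bids if b.guest != bid.guest]
        without = allocate(others, capacity)  # rerun the auction without this guest
        # Assumption: the others' loss is valued at their own bid prices.
        payments[bid.guest] = sum(
            b.unit_price * (without[b.guest] - allocation[b.guest]) for b in others
        )
    return allocation, payments


bids = [Bid("A", 6, 5), Bid("B", 6, 3), Bid("C", 4, 1)]
allocation, payments = exclusion_compensation(bids, capacity=10)
print(allocation)  # {'A': 6, 'B': 4, 'C': 0}
print(payments)    # {'A': 10, 'B': 4, 'C': 0}
```

In this made-up example, guest A pays 10 because without A, guest B would have received 2 more units (worth 3 each to B) and guest C would have received 4 units (worth 1 each to C).
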
So, first, when we consider progressive second price, we see that it requires guests to hear and analyze how other guests bid. This is a basic assumption in progressive second price auctions, that everybody hears what everybody else says, and this is required to prove the incentive compatibility and the convergence to an epsilon Nash equilibrium. And now I'm going to spoil the next slide and tell you that we are going to develop a different auction called memory progressive second price, and this memory progressive second price protocol, which is based on sealed bids, is more suited to the cloud in this sense, and it makes it harder for the neighbors on the same machine to spy on the other guests that take part in the auction. Okay, so what is this memory progressive second price auction that I am talking about and that we developed specifically for memory auctions? It goes as follows. So, first, the host sets up each of the guests with the base memory. We assume that this is the first thing that happens. The base memory allows the guest to load and its economic agent to load and to start bidding. And now, the auction round repeats itself every 12 seconds, so what happens in those

12 seconds? At time zero, the host announces the auctionable memory to the guests. The host knows that it has a certain headroom, which it wants to share between the guests, and it announces how large it is. In a 10-gigabyte machine, it can be 1 gigabyte, it can be 9 gigabytes.

The host announces this number. And then the guests have three seconds to consider this number, to understand what they need and to come up with the bid for this auctionable memory, so the host collects the bids at time three. After three seconds, the host collects the bids, and now the host has one second to decide on an allocation, taking into account all the bids that it gets.

Okay, so one second is supposed to be sufficient, and then at time four, after four seconds, the host announces the auction results, so we are here, and now we take eight more seconds. So what are these eight seconds? So to move memory from one application to another is not very simple. It involves overhead. It's not like moving bandwidth, for instance, from one application to another. And this overhead requires lots of different actions by different layers of the system. For instance, I need to empty my cache. For instance, if I'm an application, and somebody is taking some memory from me, I need to change the data structure that I am working with so that it will fit into smaller memory, and so on. I told you previously that we think it will be enough to change libraries to do that and not the applications themselves, but I'll have a few words to say about this in the following slides. However, still, we give the guest eight seconds to do all that, and then after eight seconds, the memory changes hands, and then we start the next auction round.
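
As an illustration only (not code from the talk), the 12-second round described above can be written down as a small timetable. The offsets 0, 3, 4 and 12 seconds are the ones given in the talk; the names `PHASES`, `round_schedule` and `phase_durations` are hypothetical.

```python
ROUND_SECONDS = 12

# (offset in seconds from the start of the round, event), as described in the talk
PHASES = [
    (0, "host announces auctionable memory"),
    (3, "host collects bids"),
    (4, "host announces auction results"),
    (12, "memory changes hands; next round starts"),
]


def round_schedule(round_index):
    """Absolute times (seconds since round 0 began) of each event in a given round."""
    start = round_index * ROUND_SECONDS
    return [(start + offset, event) for offset, event in PHASES]


def phase_durations():
    """Seconds available for bidding, for the host's decision, and for moving memory."""
    offsets = [offset for offset, _ in PHASES]
    return [later - earlier for earlier, later in zip(offsets, offsets[1:])]


print(phase_durations())  # [3, 1, 8]
for when, event in round_schedule(1):
    print(when, event)
```

The three durations make the split explicit: three seconds for the guests to bid, one second for the host to decide, and eight seconds for the memory to move.
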

>>: So my takeaway here is that the act of doing the auction is a relatively small overhead. The overhead is to reconfigure the systems.

>> Assaf Schuster: Exactly. But don't --

>>: The numbers are meant for -- so the numbers you've put up on the slide are taken from some -- do you have some particular scale in mind? Or should I think of them as --

>> Assaf Schuster: Oh, these numbers? They are just -- we ramp above them at night, but really, it turned out that -- okay, so there are quite a few things to take into account here. First, we want that the length of the auction will be such that we will be able to repeat it sufficiently frequently so that resources that become free, we'll be able to assign them again, and somebody will be able to use them. Second, we don't want the auction to be too short, because there is a lot of computation that's going on here. The client agent is collecting a lot of performance information and valuation information here in order to compute the bid we are going to see in a second, what's happening here. The host agent is going to solve a very complicated exponential optimization problem in this second, taking into account also many, many information bids. So moving the memory, maybe eight seconds is an overshoot, maybe it's an undershoot. This is something we just decided on. Perhaps, in subsequent works, we'll be able to lower it to 10 seconds or extend it to 14. I don't know, but this is the ballpark that we talk about. It's not going to be every two seconds.

>>: But there are two dimensions. How many participating in the auction is going to make the coordination time higher, whereas the reprovisioning of memory is local to a client. It will depend on how many instances that has to coordinate. It doesn't necessarily mean a global coordination.

>> Assaf Schuster: For moving the memory between the guests?

>>: Between itself.

>> Assaf Schuster: So, for instance, currently we have like 23 guests. I'm going to roll forward. For 23 guests, we were able to compute the optimization problem here in one second, but if we have 100 guests here, I don't know when it's going to end, so we are going to have to resort to some approximation algorithms, different algorithms than we use today.

>>: So basically you've got to put some scenario behind this time.

>> Assaf Schuster: Yes. I am going to put some scenario, but I also should go for reasonable numbers, so 23 guests on one machine is a reasonable number. A hundred virtual machines on a single physical machine is going to be quite different from what we see today, and correct me if I'm wrong.

>>: But then these auctions are meant to be only per machine and not between machines? So if you have a process --

>> Assaf Schuster: I'm focusing more and more about a single machine, because the overcommitment that I want to achieve is on a single machine. The considerations of splitting machines and putting machines together are simpler, and they do not require auction theory.

They are better known today, I think, and they involve, of course, what I said previously, putting together rich guys with poor guys. Okay, more questions? Anyway, so that's our memory. Yes?

>>: Sometimes, am I as a machine -- in Azure terminology, say if it were service here, it could really be allocated to any other host. So in some sense, what you're competing with is the set of all resources in the entire datacenter.

>> Assaf Schuster: Yes, and if I understand correctly, this was Nikolaj's question, and I am going to focus in the rest of the talk on what happens inside the same physical machine. I think that the other considerations deserve a different talk, and they employ different technology. All right, so question, what happens here? Again, what happens when the guests prepare for the announced question, changes? In a way, for a guest to give memory that it used to have is something very strange, because we are not used to think about the applications in this way, and for guests to get more memory and start to utilize this, to leverage it, is also a problem. So if we look at benchmarks, and this is a benchmark from the DaCapo Java benchmark, we can see that, indeed, many applications can utilize more memory to get more performance, so this is a performance curve. And the x-axis is the amount of memory that this benchmark has, and we

can see very clearly that the line is very nice, and the more memory it has, the better the performance, and actually, the line shows a concave, monotonically increasing performance as a function of the amount of memory that is available, with diminishing marginal gain. Okay, so we know that there are many applications that can be elastic in terms of the memory that they use, that can utilize more memory, but some applications cannot, and we think that the right way to make them elastic in terms of the memory that they can use is not to go and change every application, but actually to deal with the libraries that handle the data structures. Anyway, so now assume that you have an application that can use more memory or can shrink the memory that it uses and still provide the correct answers. How do we move memory between hands in virtualized systems? This involves the balloon mechanism that was invented by Carl Waldspurger -- I hope that I pronounced it correctly -- and published at OSDI in 2002. And this is a state-of-the-art mechanism for moving memory from one virtual machine to another virtual machine. Every virtual machine grows a balloon in it. It's a cloud, but think of it as a balloon.

Here, it's written a balloon. And when you want to take memory from one virtual machine and you want to move it to another virtual machine, all you need to do is to grow the balloon. What does the balloon do? It increases the memory pressure on the virtual machine. The virtual machine gives it pages. The balloon pins the pages that it gets from the virtual machine to actual physical pages, and communicates with the host, saying, okay, this physical page is free. You can use it. And those physical pages that the balloon gets from that virtual machine move to the use of the other virtual machine, where the balloon is shrunk and the application can get more and more pages, those physical pages that were released from the other virtual machine. This is the way that you move memory between machines. Of course, it involves some overhead. It's not a very high overhead, but this overhead is significant enough to need the eight seconds in order to move this memory between the virtual machines and allow applications to start using the memory. Okay, so we talked about moving the memory between the applications, but memory is only beneficial if you use it long enough, because of this cache warm-up and because it takes time to move the memory from one virtual machine to another. And it so happens that in PSP, the original version of the progressive second price auction, in case of a tie between guests, none of the tied guests wins. It's like King Solomon's verdict. You have a tie, both of you gave the same price, none of you wins. But this is unreasonable. It is considered to be very fair in auction theory, but in reality, for memory auctions, it's inconceivable, because you already have the memory. Why is the host going to take it from you, just because you proposed the same bid?
So in the memory progressive second price auctions, ties are broken in favor of the guest that is currently holding the memory, plus other mechanisms that I don't want to get into. And this creates a much more efficient mechanism.
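
As an illustration only (not code from the talk), the tie rule just described can be expressed as a sort order: higher unit price first and, among equal prices, the guest that currently holds the memory first. The function name `rank_bids` and the tuple layout are assumptions made for this sketch, and the "other mechanisms" mentioned in the talk are not modeled.

```python
def rank_bids(bids, current_holders):
    """Order (guest, unit_price) bids: highest price first, incumbents first on ties.

    `current_holders` is the set of guests that already hold auctioned memory.
    """
    return sorted(
        bids,
        # False sorts before True, so guests in current_holders win ties.
        key=lambda bid: (-bid[1], bid[0] not in current_holders),
    )


ranked = rank_bids(
    [("newcomer", 2.0), ("incumbent", 2.0), ("rich", 3.0)],
    current_holders={"incumbent"},
)
print(ranked)  # [('rich', 3.0), ('incumbent', 2.0), ('newcomer', 2.0)]
```
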

All right, so what else about progressive second price? Progressive second price assumes concave, monotonically rising valuation functions, like the one that we saw previously from the DaCapo benchmarks. Okay. So this is a valuation. The more memory you get, the performance of the application rises with diminishing gains. Okay. So actually, this makes sense for bandwidth auctions, which PSP was originally planned for, but it does not make sense for memory, because memory sometimes behaves very differently. If you take off-the-shelf applications and you look at their performance as a function of the memory that they are allocated, usually, they have a fixed cache size or a fixed size of a data structure, and when you get to that fixed size, all of a sudden, the applications start working. The different colors here are the loads on the applications, and I don't want to get into them at this point. But you can see that there is a threshold. This is memcached. It's a well-known caching benchmark, and you can see that until you get to 600 megabytes, it does not really start to work. Okay, why? This is the way it was designed, and this is the way that most applications are designed, and so it doesn't make sense to assume, like a progressive second price auction may assume, that everything is concave and monotonically rising. So this is monotonically rising, but it's not concave. If we look at real-life configurations, we see that the behavior is not even monotonically rising. So this is the elastic memory memcached.

This is memcached after we employ some changes so it will be elastic in its use of memory, and then we put it on some configuration of our network, and we saw this behavior. Why? Because there was this bottleneck here that had nothing to do with memcached and could have been resolved if we knew about it in advance, but usually, in those cloud environments, you do not measure all this in advance and you do not know about it. But on the fly, online, while you're working, while the application is working, you realize that something happened here, so it's not even monotonically rising. So PSP is actually not suited at all for memory allocation, and what we did with memory progressive second price auctions, we decided to solve this by making those places where the application sometimes has a performance hit, making them forbidden ranges.

So it's forbidden for -- so when you send the bid, you send also restrictions saying between 1.5 and 1.9, I am not willing to get any memory. I am willing to get only the memory here and here.
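
To make the idea of a bid with restrictions concrete, here is a minimal Python sketch. The `MemoryBid` class and its fields are hypothetical, the quantities are assumed to be in gigabytes, and treating the range endpoints as acceptable is also an assumption; the talk does not specify the actual bid format.

```python
from dataclasses import dataclass, field

@dataclass
class MemoryBid:
    unit_price: float      # offered price per GB (assumed unit)
    max_quantity: float    # largest amount requested, in GB
    forbidden: list = field(default_factory=list)  # (low, high) ranges the guest refuses

    def accepts(self, quantity):
        """True if the guest is willing to receive exactly `quantity` GB."""
        if quantity < 0 or quantity > self.max_quantity:
            return False
        # Endpoints are treated as acceptable here; this is an assumption.
        return not any(low < quantity < high for low, high in self.forbidden)

# The example from the talk: no allocation between 1.5 and 1.9.
bid = MemoryBid(unit_price=0.5, max_quantity=2.2, forbidden=[(1.5, 1.9)])
```

With this bid, the host may offer 1.2 or 2.0, but not 1.7.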

Okay, so memory progressive second price solves this, and we'll see in a second how. But just to show you that this is not only memcached, here is another example, Tomcat from the DaCapo benchmark again, from the Java benchmark again, and we just tried it. You can see that working with 350 megabytes is much better than working with 450 megabytes and paying for that. So it's much better for you to bid here than to bid here, and if you know that this is the behavior, you'll decide that this is a forbidden range. In MPSP, you'll be able to say that this is a range that I'm not willing to get. I'm not willing to get 450 megabytes, only 350 megabytes, or if you want, give me more. Give me 500 or more. Okay, so you can see that there are other applications that behave like that, and so how are we going to decide -- how is MPSP dealing with all that? Again, the bid with MPSP, when there is an application that behaves nicely, it's concave here and it's monotonically rising. The slope between the current amount of memory that the application has and the amount the application wants to have, the slope is the average unit price, actually, because this is a price that is -- this is a price for the 1200 megabytes, and this is the price for what it currently has, for what the application currently has, and the slope actually determines the average unit price per megabyte. So the slope actually determines the bid that the application is going to submit, together with the quantity of 1200 megabytes, if it wants to use 1200 megabytes. However, if the application wants to use more, if the application wants to use more than 2000 megabytes, it is going to bid with this slope. This is a slope that connects the point here, the 2200 megabytes that the application currently bids for, and the current amount of memory that it has, and it represents the average unit price. But, of course, since the function is not monotonically rising, we have to declare this part of the x-axis as a forbidden range. Otherwise, we might get -- we might get memory in a place where we are going to perform badly and which is not worth the money that we are going to pay for it, because this is the average price, assuming that the function proceeds as concave and monotonically rising.
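
The slope rule can be sketched in a few lines of Python. Everything concrete here is an assumption made for illustration: the valuation is a hypothetical table of sampled points (megabytes mapped to value), and a sampled quantity is treated as forbidden when its value falls below the straight line drawn from the current holding at the offered unit price.

```python
def average_unit_price(valuation, current_q, target_q):
    """Slope of the line from the current holding to the requested quantity."""
    return (valuation[target_q] - valuation[current_q]) / (target_q - current_q)

def forbidden_quantities(valuation, current_q, max_q, unit_price):
    """Sampled quantities whose value lies below the line paid at `unit_price`."""
    base = valuation[current_q]
    return [q for q in sorted(valuation)
            if current_q < q < max_q
            and valuation[q] < base + unit_price * (q - current_q)]

# Hypothetical samples with a dip around 1800-2000 MB.
valuation = {1000: 10.0, 1200: 14.0, 1400: 17.0, 1600: 19.0,
             1800: 15.0, 2000: 16.0, 2200: 22.0}

price = average_unit_price(valuation, 1000, 2200)            # bid for 2200 MB
high_bid_forbidden = forbidden_quantities(valuation, 1000, 2200, price)
low_bid_forbidden = forbidden_quantities(valuation, 1000, 2200, 0.005)
```

In this toy data the bid at the full slope has to exclude the dip, while a sufficiently lower unit price leaves no excluded quantities, which gives the host more freedom when it assigns memory.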

And so suppose we bid here, with this range as the forbidden range for the MPSP auction, and we do not get it. We do not get 2200 megabytes. In this case, we want to explore, so the host ran its calculations, its optimization problem, and did not give us any of the quantities that we asked for. So what can we do now? What we can do is to lower the unit price that we propose and let the host take into account also part of this range, and in this case, we are able to pay for it, because we propose a much lower unit price. Okay. So it goes as follows. The guest actually explores its options by starting with bidding here. If it doesn't get it, it starts to explore options for letting the host be more flexible in the amount of memory that it gives it, so that it wins something, by lowering its bid. Okay, so if it lowers the bid all the way here, then all this stops being a forbidden range.

>>: So if the client gets, say, 1600 or 1700, as your x-axis suggests, then obviously it should just use 1500 instead of 1600, because it will get better value.

>> Assaf Schuster: Okay, but up to 1600, you are above the slope, so according to your valuation, it's still good for you to get the allocation here, because you're still winning. Okay.

The moment that you get below the slope, you may be losing money, because you propose an average unit price which is higher than the valuations that you have for this performance. So this is why you determine that as a forbidden range when you bid here, for this quantity, but if you don't get it, you lower your price, and then this stops being a forbidden range and becomes a range that you are willing to get an allocation from, which makes it easier on the host in the optimization problems that it executes to give you something. Once you got it, perhaps you got it here. The price that you offer to pay is now too low. Suppose you -- again, from the beginning. Suppose you bid here, you did not get it. You lower the price, and now you get -- suppose you get this point. It pays for you now to increase your price as you keep working with this memory until it is the exact price for this point. And why is this the case? Because the price that you offer to pay is not the price that you actually pay. The price that you pay is according to the exclusion compensation principle, and the exclusion compensation principle takes into account your price while calculating the other clients' payments, which means that if you are in a steady state and you increase your price, the other guests are going to pay more, but you are going to pay the same, because you pay according to the exclusion compensation and not according to your bid. And why that? That is because we want later to show that MPSP is incentive compatible. Anyway, so just to show you quickly the MPSP allocation choices, so MPSP sorts the bids according to the price. This is the price, and these are the quantities that you want, and the price is very high, so you get what you want. And the price here is two, so it's higher than that, but this is the quantity of memory that you have. All of a sudden, the host runs into the situation that it has to give an allocation inside an indivisible, forbidden range. So what does the host do? It cannot give this allocation, because it's forbidden. One thing that it can do, it can apply free disposal, which is a common term in auctions, and just forget about this request, but this is, of course, uneconomical.

So what the host in MPSP does is actually to take the lower bid, which is divisible, and give part of the needed quantity to the one who submitted this bid. So MPSP can do that, and it still gets the highest welfare, and we can still prove that it gets to the highest welfare by doing that, even though it has this indivisible fraction or forbidden range that it has to take care of. So forget about the graph here. Just to mention that PSP, again, is not suited for memory allocation, because it assumes no overhead in the transfer of resources, and the question is, how will MPSP take into account the overhead in transferring memory between applications?
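
Before the overhead question is taken up, the sorting of bids by price and the exclusion compensation payment mentioned earlier can be sketched in Python for the simplest case. This is a deliberately reduced model, not the MPSP algorithm: bids are fully divisible, there are no forbidden ranges and no transfer overhead, and the function names, guest names and numbers are all hypothetical.

```python
def allocate(bids, capacity):
    """bids: dict guest -> (unit_price, quantity). Serve bids by descending unit price."""
    alloc = {g: 0.0 for g in bids}
    remaining = capacity
    for guest, (price, qty) in sorted(bids.items(), key=lambda kv: -kv[1][0]):
        alloc[guest] = min(qty, remaining)
        remaining -= alloc[guest]
    return alloc

def exclusion_payment(bids, capacity, guest):
    """What the other guests lose, valued at their own declared prices,
    because `guest` takes part in the auction."""
    with_guest = allocate(bids, capacity)
    others = {g: b for g, b in bids.items() if g != guest}
    without_guest = allocate(others, capacity)
    return sum(price * (without_guest[g] - with_guest[g])
               for g, (price, _) in others.items())

capacity = 10.0
bids = {"a": (5.0, 6.0), "b": (2.0, 6.0), "c": (1.0, 6.0)}
payment_a = exclusion_payment(bids, capacity, "a")

# Guest a raises its offered price; the allocation does not change.
raised = dict(bids, a=(9.0, 6.0))
payment_a_raised = exclusion_payment(raised, capacity, "a")
```

In this toy run, guest `a` pays the same amount before and after raising its offered price, because its payment is computed from the other guests' bids and not from its own.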

And to do that, I have to give you some background, again, on auction theory. So auction theory actually consists of optimizing a social choice function, F, and F is choosing an allocation, A.

And usually, what the host does is to compute the maximal allocation, to compute the allocation that maximizes the social welfare. This is actually a weighted social welfare, so v_i is the valuation of the i-th guest of the allocation A, and w_i is the weight on the valuation of the i-th guest. So usually, if you look at auctions, what the host is actually computing is -- it's computing the allocation that maximizes the valuation, the social welfare. This is actually the expression for the social welfare. However, if you look, there is a generalization that says that you can also maximize while adding here some constant that depends only on the allocation. It depends on nothing else. And this is the goal of affine maximizers. So now, what we are going to do is to put inside this constant the overhead of transferring memory between the current allocation and the prospective allocation A, and maximize again. Okay, so in this way, we put the overhead inside the expression that the chosen allocation maximizes. Okay, and the theory says that if F is an affine maximizer and the payments are computed using the exclusion compensation principle, then the resulting mechanism is incentive compatible. It means that it's truthful. It incentivizes the clients to say the truth about the valuation of the quantities that they request. And, actually, Roberts' theorem says that these are actually all the possible incentive compatible mechanisms that exist under certain reasonable assumptions. Okay, so this is the way that we are going to handle the overhead, which was not done in PSP. MPSP takes into account the overhead of moving from the current allocation to a new prospective allocation. This overhead is included here, and the maximization process takes this into account. So how do guests find the bids?
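
Before the guests' side, the host-side choice just described can be written out as a formula. The notation is assumed for illustration: $a_{\text{cur}}$ denotes the current allocation, $c_a$ the allocation-dependent constant, and writing the overhead with a minus sign (as a cost) is a reading of the talk rather than something stated on the slide.

$$
F \;=\; \arg\max_{a}\;\Big[\, \sum_{i} w_i\, v_i(a) \;+\; c_a \Big],
\qquad
c_a \;=\; -\,\mathrm{overhead}(a_{\text{cur}} \to a)
$$

With $c_a = 0$ for every $a$, this reduces to maximizing the weighted social welfare alone.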
The guests should find an estimated payment for every Q, and this requires some online learning algorithms.
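
A minimal Python sketch of that search, under stated assumptions: the guest already has an estimate of the payment for each quantity (the online learning that produces it is not shown), utility is taken to be valuation minus estimated payment, and the step-like valuation and linear payment estimate are invented numbers.

```python
def choose_bid(candidates, valuation, estimated_payment):
    """Return the candidate quantity with the highest estimated utility, and that utility."""
    best_q = max(candidates, key=lambda q: valuation(q) - estimated_payment(q))
    return best_q, valuation(best_q) - estimated_payment(best_q)

def valuation(q_mb):
    # Hypothetical valuation: worthless below a 600 MB threshold, slow gains above it.
    return 0.0 if q_mb < 600 else 12.0 + 0.002 * (q_mb - 600)

def estimated_payment(q_mb):
    # Hypothetical payment estimate: a flat price per megabyte.
    return 0.01 * q_mb

q, utility = choose_bid(range(0, 2001, 100), valuation, estimated_payment)
```

With these numbers the guest asks for exactly the threshold quantity, since memory beyond it costs more than the extra performance is worth.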

I'm not going to go through this, but there is an online learning algorithm that the economic agent of the guest runs. It collects information all the time on its performance, taking into account some reserve price and other stuff, and finally it knows what is the price. Finally, it has an estimate of the payment that it is going to pay for every allocation, Q, that it might be given. And it also knows the valuation. It also knows how it values the quantity Q that it is going to be given, following the considerations that we previously discussed. So it goes over the Q axis, computing for every potential Q the potential utility from that Q, chooses the Q with the highest utility, and this is going to be the bid that it's going to submit. So I want to summarize the positive results that we have. MPSP maximizes the social welfare for every guest, even for non-concave, non-monotonically rising valuations. It does so by solving an optimization problem with affine maximizers, and by inspecting recursively what I showed previously: if you have an allocation that maximizes social welfare but uses a forbidden range, there is some algorithm that we apply in order to solve this, and we either allocate all the forbidden range or none of it, and we actually are able to show that this maximizes the social welfare. And number two, we incentivize the clients to bid the true valuations for the memory quantities they ask for. And we show that this is the best course of action, regardless of what the other clients do. Okay. It asks for a specific quantity. There are some final considerations here. I want to avoid them at this point. Let me go very quickly to the experimental evaluation. For the experimental evaluation, we took non-concave valuation functions that are still monotonically rising. You can see that the units here are dollars per second, and so this is already a combination of the performance that the host is going to -- that the guest is going to gain, translated into dollars.
Okay, so you can see that here we took the square of performance, which is usually what happens in social environments, all-to-all online gaming and so on. And here we took some Pareto distributions, which usually model wealth distribution, and you can see that it is actually a step function. Until you get to the 1 gigabyte, you gain nothing, and when you get to the 1 gigabyte, you gain a lot. And if you look at the result, this is for the memory consumer benchmark, you see that -- so we have six lines here. The dashed black line is a strict upper bound, which says that we do not do anything with Ginseng. We just compute the highest possible social welfare for every configuration that we get, and there cannot be any higher social welfare. Those rich guys get the maximum memory that they want. The poor guys don't get anything, and we compute the social welfare. You can see the line here, and the number of virtual machines, and then you have a simulated Ginseng, which means that we do not take the memory transfer overhead into account here on this dashed red line, and the Ginseng achievement, actually, the Ginseng test bed, where we run Ginseng on a real machine, shows that we are not very far away from the Ginseng simulation and from that upper bound, so we don't lose a lot by moving the memory. We know how to handle this. And on the other hand, hinted host swapping and hinted MOM, which are the state-of-the-art systems for handling memory overcommitment, do very poorly. So the more virtual machines we have, the more basic core memory we need to assign to each of them. And at the end, you can see that Ginseng itself also is doing very poorly, because there are too many virtual machines and you have already consumed all the memory on the physical machine, which is 10 gigabytes. So each of these virtual machines has a core memory of 0.7 gigabytes, and at the end, we do not have enough headroom to distribute between the machines.

But until we get to 10 or 11, we are here in overcommitment of factor two, because each of these applications can use 2 gigabytes of memory, and we have only 10 gigabytes of memory. So until we get overcommitment of more than two, we are fine.

>>: Is this run in a simulation environment, or is it using applications running memcached?

>> Assaf Schuster: The Ginseng, so the dashed red line is running simulation, so it does not take into account --

>>: But the workload that comes from memcached?

>> Assaf Schuster: And the red line or the orange line is Ginseng itself, running on an actual machine that gets load coming from outside and get and put requests and is answering them.

>>: So how do the requests change over time? Are there any mainly invariant properties of these?

>> Assaf Schuster: Oh, okay. So from time to time, we change the load, and when we change the load, so there are rich guys and there are poor guys. And when we change the load, all of a sudden, the rich guy has a very low load, and he does not need a lot of memory anymore, so it still gets memory, but less than previously, and the poor guy all of a sudden gets a lot of load, a high load. So we change it from time to time, and we accumulate the social welfare while running. So every such experiment consists of 300 seconds, where every -- I think every 60 seconds, we change the load. We just choose randomly. So we have 10 virtual machines running. We choose randomly who is going to get more load and who is going to get less load, but the total amount of load stays fixed. And if you look at the other, at memcached, this was the memory consumer. If you look at memcached, you see the same behavior, except that at the beginning here, there was already a very rich guy that probably remained rich all the way to the end, with the very high load. And you can see that there is a very sharp drop here from 12 to 13. If you compute, you'll see that this precisely happens when that guy does not have a gigabyte anymore as headroom to expand, and remember that here I told you that for memcached, you need at least a gigabyte to get a very high valuation. So everything remains okay with our system, as long as you have enough headroom for at least the rich guys. And all of a sudden, it drops. And the conclusion is that we showed the resource as a service cloud as a future cloud model. We showed an implementation, an efficient prototype, for the memory part of the RaaS cloud, which improves the social welfare by up to an order of magnitude, or an order and a half of magnitude, depending on the valuation function, and there's a lot of future work. We are doing it. Full multi-resource RaaS machine.

Multi-resource is a real challenge to do with auctions. Every different resource has its own complications, and another very important challenge that nobody now knows how to solve is the fact that if you run auctions again and again and again, you can put in learning agents that will spy on the other applications that exist in the machine, and when you spy on them and you know the load changes and you know the valuation, you sometimes can hack them and grab the resources that they need before they need them and then sell them to them at a higher price. So there are a lot of security and privacy and spying issues that exist here. And again, nobody knows how to tackle them yet, and that's it. Thank you.

>>: Okay. So do you have an overview paper?

>> Assaf Schuster: Yes. It's on RaaS. It's our extrapolations of how RaaS is going to -- the first part of the talk, this is the paper that is forthcoming in July.

>>: So I just got reminded of this paper on lottery scheduling [indiscernible]. It's a very old paper. So the basic idea is there are these different schemes of process scheduling, like you have [indiscernible] first, and then there's stuff like that. So the idea of lottery scheduling is there is one implementation that can basically approximate any algorithm you choose. So the idea is the scheduler has a box of lotteries, of random numbers, and these random numbers are given to processes based on some scheme. And then when it wants to schedule the next process, it'll randomly pick one random number, and based on which process has that random number, it will schedule the process. Now, if you distribute these lotteries equally to all processes, then you get fair-share scheduling. On the other hand, if you distribute based on who has the shortest job, then you get shortest-job-first scheduling. I wonder if there is a similar general system that you can define for resource as a service, too, where you have one implementation, where maybe the same lottery scheduling might just work fine, right? You just figure out how to provision this, approximately.
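
For readers unfamiliar with the scheme the questioner describes, here is a minimal Python sketch of a lottery draw. The ticket counts and process names are hypothetical; how tickets are handed out is exactly the policy choice the question is about and is not modeled here.

```python
import random

def lottery_pick(tickets, rng):
    """tickets: dict process -> ticket count (at least one ticket in total).
    Returns one process, chosen with probability proportional to its tickets."""
    total = sum(tickets.values())
    draw = rng.randrange(total)      # winning ticket number in [0, total)
    for process, count in tickets.items():
        if draw < count:
            return process
        draw -= count

rng = random.Random(0)               # fixed seed so the run is repeatable
tickets = {"p1": 1, "p2": 1, "p3": 2}
wins = {p: 0 for p in tickets}
for _ in range(10_000):
    wins[lottery_pick(tickets, rng)] += 1
```

Over many draws, a process holding twice as many tickets is scheduled roughly twice as often, and equal ticket counts give roughly equal shares.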

>> Assaf Schuster: So remember our problem. Our problem is here. How can the host compare guest benefits? And any algorithm that assumes that the guests are rational and that the guests are acting according to -- sorry, the guests are obedient and that they act according to the algorithm will fail here, because the guests do not all have the same valuation. Some of them want to run much more than others. Some of them want higher performance much more than others. It's a database application that values its transactions, in terms of actual dollars, an order of magnitude more than some offline applications that compute, I don't know, some testing application, some testing offline job. None of the algorithms that you mentioned take this into account. The subjective valuation of how much money I'm willing to put on this computation, on this resource, because I value my performance much higher, this is the issue here.

>>: I see that, but all I'm saying is your auctioning scheme could be a means to get the lotteries, right? The lottery scheduling doesn't solve that problem.

>> Assaf Schuster: Lottery scheduling does not solve the problem, because it doesn't take into account the valuation. I mean, it's fair. It's ex ante fair, in the jargon of the auction people. But it will not solve the problem. It will not solve the benefits problem. Okay. Thank you.