
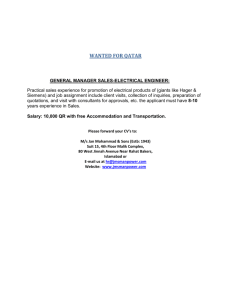


>> Zhengyou Zhang: Okay. So let’s get started. It’s really my great pleasure to introduce Greg Hager. Greg is a Professor and also Chair of the Computer Science Department at Johns Hopkins University. Johns Hopkins, as you know, is very well known for medical things. Greg used to work in computer vision for general robotics, but later it evolved into vision for surgical robotics, I guess. And he has been interested now in a lot of other things like interactive [indiscernible] display, and today he will talk about collaborative computing in the physical world.

>> Greg Hager: All right. Thanks, Zhengyou. It’s a pleasure, and thanks to all of you for coming today and listening. So when I was putting this talk together, you know, I kind of chose the title intentionally to change a little bit the thought pattern of the usual talk I give, which tends to revolve around collaborative systems, specifically in robotics. And I wanted to kind of broaden it a little bit and think more about collaborative computing beyond robotics, including robotics, but really computing in the physical world. And part of what I started to think about as I was putting it together is: what’s the difference between where we are now in computing research in this area and where we were roughly a decade or a decade and a half ago? So this is actually, this is a slide for Rick. So this is work that came from my lab really around, I guess, the mid 1990s/2000 time frame. And I guess the interesting thing to me really is that a lot of the things that I see today were going on back then; we were talking about them. You know, so we have got, this looks suspiciously like what I guess used to be called the Microsoft Surface that we were developing in the lab, gesture-based interaction, you know, real-time feedback from robotics. If any of you remember [indiscernible], he actually built that video game that you just saw go by there; so interactive video games.
So certainly both human and machine interaction using perceptive devices and, you know, robotic interaction with the physical world using perceptive devices was out there. So why is today different than what we were doing back then? And I think there is a really simple answer, which is computing has won the war. You know, if you look at what we had in terms of computing platforms and in terms of sensing and robotics 10-15 years ago, it’s wildly different. So as you all know we have now got computers, you know, winning Jeopardy. We are all carrying supercomputers in our pockets. I actually checked, so that’s about equal to a [indiscernible] two, for anybody who actually cares. And you know, computing is just showing up anywhere. This is actually a shot from Johns Hopkins sitting outside the OR. That’s what people are doing, using their computers. And of course obviously people think about computer diagnosis. This I think might actually also be from [indiscernible], it’s third world education.

>>: [indiscernible]

>> Greg Hager: Yeah, MSR India. And of course now in entertainment practically everything, you know, lives on computing. It’s just, it’s pervasive. In fact just to check it out I looked to see how many computing devices there are in the world. And I will just define that as microprocessors. And so as close as I can guess there are somewhere between 50 and 100 billion active microprocessors in the world right now. So like what does that work out to be? Like 10-20 microprocessors per person on the face of the earth. So you know, if this were a virus we would be looking for an antidote, or if it were a form of life we would be worried about it taking over. And it’s just continuing to grow at remarkable speed. The other thing that’s different is, you know, platforms for input.
So of course I am at Microsoft so I have to give Microsoft credit for really changing my life over the last 2-3 years by introducing the Kinect, because suddenly you have got a low-cost, easy-to-use platform that gives you direct 3D perception of the physical world. And of course it originally was introduced for gaming, which is a lot of fun. My kids certainly enjoyed it, but in my life just about everything I knew suddenly grew a Kinect. So you know, robots sprouted Kinects. There are actually Kinects at the ICU at Hopkins right now observing patient care. And this is actually a video that almost went viral from our lab where we are running a da Vinci surgical robot from a Kinect. And again this continues to grow. There are probably 20 Kinects in my lab at this point doing just about anything that you can imagine. So we now have a rich source of input to connect to all this computation. And in fact it’s not just the Kinect, you know, there is every form of input known to man. So you know, accelerometers, gloves, every laptop now has a camera; you know, you wear a FuelBand and decide how much you moved today. So the world is just now connected to computing in a way that it was never connected before. And it’s the same on the output side. So this is a set of platforms that I can now get in my lab. And again 10 years ago none of these existed, but now you can get the Willow Garage PR2. That’s the DLR Justin robot; it’s just a crazy mechanism in terms of what it can do. NASA’s Robonaut is actually up in the space station right now. And many of you probably saw in the New York Times the discussion of Rethink Robotics, which is a new low-cost manufacturing platform that’s actually just being introduced by, well, Rethink Robotics, a company founded by Rod Brooks. So we have not only just the input and the computation, but the output is there as well. And so it really makes for an interesting mix. And furthermore it’s actually having practical impact in the real world.
So for those of you who don’t know this, this is the Intuitive Surgical da Vinci system. It’s a commercially available robot that does surgery. Right now there are, roughly speaking, 2,500 of them installed around the world. They do in excess of 300,000 procedures a year. And for example if you go to get a radical prostatectomy the odds are better than 80 percent that it’s going to be done through robotic surgery as opposed to traditional open or laparoscopic surgery. And those numbers continue to go up. So it’s very rapidly becoming a standard of care in certain areas. Now so that’s all great and I think this really exemplifies the opportunities that are out there in connecting people with really computational systems. What’s missing here, I will get rid of the bloody slide. What’s really missing though is kind of the level of interaction that’s going on there. So everyone is familiar with traditional robotics, which grew out of automation research. So it’s really a programmable, controlled machine. It’s writing a program just like you write Microsoft Excel, it just happens to take place in the physical world. The other extreme is the da Vinci robot we just saw, which is strongly human interactive, but it’s direct control. There is really no intelligence in the machine whatsoever. It’s there just to replicate hand motion into the patient and to transmit light back to your eyes so you can see what’s going on. And it really promises to do nothing else in between.

>>: [indiscernible]

>> Greg Hager: There is effectively no force feedback. So it’s really --. And that’s actually not unusual in laparoscopic surgery, that you don’t get a lot of force feedback in the system.

>>: So what is the main advantage of using this robotic system; a sort of rescaling of the surgeon?

>> Greg Hager: No, it’s actually more subtle. So the longer story is this.
So we started out with open surgery where you could put your hands inside the patient and you could do anything you wanted. Then in the 80s we went to laparoscopic surgery. And what did we do? We separated the surgeon from the patient using tools, but those tools are pretty much straight chopstick tools. And so when you introduce the robot you introduce two things: the first is you get stereo visualization, which you don’t get in laparoscopic surgery. And the second is you get a wrist inside the patient. And so suddenly you can do all types of reconstruction surgery, or reconstructive I should say, that you can’t really do comfortably with traditional laparoscopic tools. So you can sit down ergonomically, you feel like your hands are in the patient again, you can see in 3D, it’s just a completely different platform again really for people to think about surgery. And part of the game has been for people really to re-learn and re-think how you can do surgery when you have got that kind of platform available.

>>: So is it better than just using your two hands? [indiscernible]

>> Greg Hager: It doesn’t save labor. So to begin with it’s minimally invasive. So the big thing is with minimally invasive surgery you are reducing blood loss, you are decreasing morbidity of the patient and reducing scarring. So it has all the advantages of traditional laparoscopic minimally invasive surgery. And then it actually has two really key advantages I think. The first is, you know, as we said, you have additional dexterity in the patient so there are things that are just much easier to do with it. And the second is actually it’s a lot more ergonomic for the doctor. So if you look at certain areas of the medical profession they have some of the highest rates of back and neck injury of any industry. And it’s because, I know surgeons who have 18-hour procedures. They just stand there for 18 hours. And usually it’s something that’s not particularly ergonomic.
So it provides a lot of interesting possibilities.

>>: So essentially they get little tiny hands.

>> Greg Hager: Yeah, exactly.

>>: They become little tiny surgeons.

>> Greg Hager: And you sit down. Actually it’s called Intuitive and it is intuitive. You could sit down and within 10 minutes you would very quickly understand what’s going on because it feels like your natural hand-eye coordination. I feel like I am an ad for Intuitive Surgical here. But, so the key thing though is that we are transmitting the surgeon into the patient, but we are not really augmenting the surgeon in any real way, except perhaps for some motion scaling. And so a lot of what we have been thinking about is, well, how do you operate in kind of this mid-space where, you know, what you can imagine is in structured environments we can do a lot of autonomy. In unstructured environments, like surgery, we have the human strongly in the loop. Are there places where we can kind of find a middle space? So for example if you are trying to do remote telemanipulation you can actually scan the scene. You have got, the robot has got a model of the scene and now you can phrase the interaction at the level of moving objects around in the scene, not just raw motion in terms of the robot. Or if you are doing this particular task, it’s a task which has extremely poor ergonomics. You can basically do it for a week or two and then you have to go out for a few days because you have basically got repetitive motion injuries. So could we bring a robot into this task, which is hard to automate? But if we could have a robot doing at least some of the more injurious parts of it, a person can still be in the loop, but they can do it without injuring themselves. I guess my other video collapsed, but retinal surgery is another area where now it’s about scales. How do we do really [indiscernible] and interaction at scales that people can perceive? And it’s not just these.
There are any number of places where we can talk about really augmenting human capabilities. So not replacing human capability; not doing one to one, but augmenting human capability.

>>: So [inaudible] makes the middle task difficult, but it’s just difficult for robots to essentially tie knots that have the dexterity [inaudible] like rope?

>> Greg Hager: Yeah, so there are two things. The first is that it’s a complex task from a robotics point of view. It’s hard to model what’s going on. It’s hard to solve the computer vision problem, for example, just to find the components of the task. Also it’s a small [indiscernible] problem. So that actually is, they are doing cable ties for refurbishment of a Boeing aircraft. And so you do maybe a few thousand of these cable ties and then you are done with that task. And maybe a month later you pull it out and you do it again. And so it’s just not effective to think about completely programming a robot or building a robot that’s going to do just that task. And this is actually very typical right now. This is actually where in fact it turns out the US is still relatively competitive in a funny way, which is these kinds of small-lot manufacturing cases, because it’s not big enough to offshore it and there is not really an automation solution either.

>>: [indiscernible]

>> Greg Hager: Yeah, exactly. Right, so you can project, basically you can project people in whatever format makes sense for that particular task, exactly right. So I mentioned it’s not just these tasks. So one of the things I happened to look up the other day was the prediction for the most rapidly growing profession in the United States. And it’s in-home assistance. That’s expected to grow I think over 10 years by 70 percent or something like that. And again, if you think of in-home assistance it’s not about automation.
It’s not like you are going to give somebody a one-to-one telemanipulation environment, but interactions with people to help them in their daily lives. So how do you develop these sorts of interactive systems that can replace or augment certain functions as needed? And I happened to find this too. So I guess maybe there are even some third world applications of human augmentation with robotics. I think that’s made out of wood, so it’s also recyclable. Apparently you can throw it in the fireplace, burn it and then make a new one.

>>: Is that real? Is it really real?

>> Greg Hager: I don’t think it is. I can’t tell actually. I just found it and I couldn’t resist. Anyway, so I think there are a number of opportunities, just because of where we are now in the technology curve in terms of sensing, robotics and computing, where we can start to think about these interesting mixes of people, devices in the physical world, and sensing of the physical world, to do interesting things. So a lot of our work has been in medicine. So you guys asked a lot of questions about the da Vinci robot. So you kind of get how it works. So we started to look at it and we realized that it’s really a unique opportunity. And the reason it’s a unique opportunity is because for the first time you are really interposing between the doctor and the patient a computational system. And what it means when you have got a computational system is not just that you can program that system, but in fact that it becomes a data collection device as well. We can build a complete record of everything that happened in a procedure down to the minutest motions, most minute motions that the doctor made inside the patient. And so we actually have developed a data recording system that now allows us to make those records. And if you think about it we can start to study how humans perform interesting manipulation tasks in the real world now at scale. You know, hundreds of thousands of procedures in principle.
And you can immediately see interesting things. So for example this is an expert surgeon doing a simple four-throw suturing task. You can actually see one, two, three, four throws. This is someone who knows surgery. They have actually used the robot before. They are just still in their training cycle and they are doing exactly the same task. And so you can start to realize that what you would really like to do is take somebody who looks like this and make them look like that as quickly as possible through whatever types of augmentation, teaching or training that you would like to have. And so you can start to think about a space of task execution and skill in ways that you couldn’t think about before.

>>: [indiscernible]

>> Greg Hager: Well, this is a training task. So it’s not inside the body, but in principle yes, exactly. And I will show you in fact in a little bit what that task is. The other thing that’s interesting about surgery though, or medicine in general, is that it is a highly structured activity. And it’s actually fairly repetitive. So this happens to be the flowchart for a laparoscopic cholecystectomy, so taking your gallbladder out. And you can see it’s highly stylized. There are major areas of the operation and then you can break that down into subareas, all the way down to dissection of specific anatomic structures. And so it’s not just that we can get a lot of data, but we can get it in a case where there is actually a fairly known and taught structure to a procedure. So it really lets us study it both from the data side and from the side of understanding semantics or structure. And so we call this the language of surgery. You know, how can I take data from a robot and parse it into this sort of a breakdown of a task? So just to give you a concrete sense of this, here in fact is that four-throw suturing task, which is a training task. So you can see, you can sit down and you can identify roughly eight basic gestures that take place.
And this is the way that, if a doctor were teaching it, they tend to break it down and teach it. And then you can say, “Well, how do we model a task like that?” Well, you know, the language of surgery kind of suggests the natural thing to do would be to start with some of the ideas from speech and language. And say, “Look, we know that we have got a set of hidden states, which are gestures. We have got a set of observables, which are the video and the kinematic data from the robot. Can we train something like an HMM to actually recognize what’s going on?” And presumably if we can recognize what’s going on we can now start to analyze what’s going on in interesting ways.

>>: I guess the language [inaudible] would be different than the language of the human, right, because of the physical constraint on the robot?

>> Greg Hager: Well, so that’s an interesting question.

>>: I just wondered, obviously it cannot do everything a human can.

>> Greg Hager: No you can’t, no you can’t. You can recognize, I would argue you can recognize almost everything the human is doing. Whether you can replicate it is a slightly different case. So the interesting thing I think in what you are saying is, suppose I was to give all of you video from surgery. I bet whether it’s open surgery, laparoscopic surgery or robotic surgery you could very quickly recognize when they are suturing even though you have not really seen it before, because you have kind of a high-level semantic model in your head of what suturing means. And you could probably even break it down into sub-pieces of what they are doing. So in some sense this part is invariant I would argue; the language is invariant. What changes is how that language gets expressed in the device or in the domain that you are actually operating in. And we have actually seen this. So we have applied this not just in robotic surgery, but in laparoscopic surgery and now in retinal surgery as well.
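As a rough illustration of the HMM idea described here (hidden states are gestures, observables come from the robot), the following Python sketch decodes the most likely gesture sequence with the Viterbi algorithm. The gesture names, the three quantized observation symbols, and all probabilities are made-up assumptions for illustration; the talk does not give the actual model, which worked on continuous kinematic and video data.

```python
import math

def viterbi(obs, states, start, trans, emit):
    """Most likely hidden gesture sequence for a discrete-observation HMM.

    All probabilities are assumed to be nonzero so that logs are defined.
    """
    # best[s] is the log-probability of the best path ending in state s.
    best = {s: math.log(start[s]) + math.log(emit[s][obs[0]]) for s in states}
    back = []  # back[t][s] = best predecessor of state s at step t + 1
    for o in obs[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda p: best[p] + math.log(trans[p][s]))
            scores[s] = best[prev] + math.log(trans[prev][s]) + math.log(emit[s][o])
            pointers[s] = prev
        best = scores
        back.append(pointers)
    # Trace back from the most likely final state.
    state = max(states, key=lambda s: best[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]

# Hypothetical toy model: three gestures, three quantized motion symbols.
states = ["reach", "insert", "pull"]
start = {"reach": 0.8, "insert": 0.1, "pull": 0.1}
trans = {
    "reach": {"reach": 0.70, "insert": 0.25, "pull": 0.05},
    "insert": {"reach": 0.05, "insert": 0.70, "pull": 0.25},
    "pull": {"reach": 0.25, "insert": 0.05, "pull": 0.70},
}
emit = {
    "reach": {"fast": 0.8, "rotate": 0.1, "slow": 0.1},
    "insert": {"fast": 0.1, "rotate": 0.8, "slow": 0.1},
    "pull": {"fast": 0.1, "rotate": 0.1, "slow": 0.8},
}
observed = ["fast", "fast", "rotate", "rotate", "slow", "slow"]
decoded = viterbi(observed, states, start, trans, emit)
print(decoded)
```

Training such a model (estimating `start`, `trans` and `emit` from labeled trials) is a separate step that this sketch leaves out.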
So we also by the way had some belief that this would work, which is about 10 years ago we did a very simple test in retinal surgery in fact. And we found that we could actually use HMMs to parse in some sense what was going on. So we actually, just to kind of again give you a sense of how this goes, so what you can do is you can have people sit down. You can have them do a training task like this. You can record it so you know everything again that happened. And in fact you get a lot of data out of the robot. It’s not just the tool tip position. It’s the entire kinematic chain, both master and slave. So it’s really a lot of data. And you can do this with multiple individuals. You can repeat it several times. So you can get a kind of repetitive data set of people doing this. Just like if you were training speech you would get a lot of speakers saying the same sentence, for example. So you can start to learn the language model. So we do the same thing. And in fact if you do just experts and you confine them to a fairly constrained task you can do extremely well at recognizing what’s going on. So this is the difference between a manual labeling and an HMM segmentation of that four-throw suturing task into the gestures. And the main thing you can see is obviously we have nailed it in terms of the sequence of actions. The only real difference is these slight discrepancies at the boundaries, trying to decide when they transition from one gesture to the next. So when you see me give accuracies like this 92 percent, what I am really measuring is how often the blue line and the red line are perfectly lined up. So here 8 percent of the time they don’t line up. And that’s what we would consider the error. So that’s good. So what if you tried to do this a little bit more in the wild, which is you get a wider range of individuals and you get a wider range of tasks. And you do the same thing. And these are again pretty standard training tasks in robotic surgery.
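The accuracy measure described here (how often the manual labeling and the automatic segmentation agree, frame by frame) can be sketched in a few lines of Python. The gesture labels and frame counts below are invented for illustration, and `collapse` is a hypothetical helper that recovers the gesture order so that boundary errors can be told apart from sequence errors.

```python
def frame_accuracy(manual, predicted):
    """Fraction of frames where the two labelings agree."""
    if not manual or len(manual) != len(predicted):
        raise ValueError("label sequences must be non-empty and equally long")
    matches = sum(m == p for m, p in zip(manual, predicted))
    return matches / len(manual)

def collapse(labels):
    """Collapse runs of identical frame labels into the gesture sequence."""
    sequence = []
    for label in labels:
        if not sequence or sequence[-1] != label:
            sequence.append(label)
    return sequence

# Made-up example: the gesture order is right, only the boundaries are off.
manual = ["G1"] * 4 + ["G2"] * 4 + ["G3"] * 4
predicted = ["G1"] * 5 + ["G2"] * 4 + ["G3"] * 3

accuracy = frame_accuracy(manual, predicted)
same_order = collapse(manual) == collapse(predicted)
print(f"frame accuracy: {accuracy:.2f}, same gesture order: {same_order}")
```

In this toy case two of twelve frames disagree, both at gesture boundaries, while the collapsed gesture sequences are identical.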
So this is a data set we collected a few years ago. And the first thing you find out is if you just take standard HMMs and you try to apply them here they don’t go. They just don’t generalize well as the data gets more diverse, which is probably not a big surprise in the end. But at least it was the starting point for looking at this. So then we started to figure out what’s gone wrong here. So part of what’s going wrong is we have got really very high dimensional data relative to what we are trying to recognize. And in fact at different times different pieces of that data matter. Sometimes knowing where the tool is moving matters. Sometimes knowing how the wrist is being used matters, for example. So we embroidered the HMMs a little bit. So these are factor-analyzed HMMs where there is basically a dimensional reduction step that’s taking place off of the hidden discrete state. And in fact once you do that you start to see your numbers pop up. So we have gone from 54 to 71 percent, but there is still a little bit of a “bugaboo” and that’s this: you might wonder what is setup one and what is setup two? Setup one is more or less standard “leave one out” training and testing. So I just take one trial and I throw it away, train everything and then I test. But there is a problem with that. Can anybody guess what the problem is? See, now I will go into lecture mode here. Oh, it already says, there you go. You need to --. This happens to me in class all the time too by the way. And sometimes they still get it wrong, that’s the amazing thing. So yeah, we didn’t leave one user out. So what it’s getting to do is it’s getting to see the entire user population and then basically recognizing. As soon as you take one user out you see things drop pretty quickly. And so it’s overtraining in some sense. It’s learning to identify people for all practical purposes, which in itself is interesting. Just as a useful thing to keep in your head.
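The difference between the two setups can be made concrete with a small Python sketch. The trial records and user names below are hypothetical; the point is only that leaving out one trial still lets the held-out user appear in the training set, while leaving out one user does not.

```python
def leave_one_trial_out(trials):
    """Yield (train, test) splits that hold out a single trial each time."""
    for i, held_out in enumerate(trials):
        yield trials[:i] + trials[i + 1:], [held_out]

def leave_one_user_out(trials):
    """Yield (train, test) splits that hold out every trial of one user."""
    for user in sorted({t["user"] for t in trials}):
        train = [t for t in trials if t["user"] != user]
        test = [t for t in trials if t["user"] == user]
        yield train, test

def users(split):
    return {t["user"] for t in split}

# Hypothetical data set: three users, three trials each.
trials = [{"user": u, "trial": k} for u in ("A", "B", "C") for k in range(3)]

# Setup one: the test user is always also present in the training data.
leaky = all(users(test) <= users(train)
            for train, test in leave_one_trial_out(trials))
# Setup two: the test user is never present in the training data.
clean = all(users(test).isdisjoint(users(train))
            for train, test in leave_one_user_out(trials))
print(leaky, clean)
```

Libraries such as scikit-learn offer grouped cross-validation splitters for the same purpose; the plain-Python version is used here only to keep the sketch self-contained.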
So this is what the parses look like again. This is a good one. So you can see again things overlay pretty well. This is a bad one, but notice that if I look at the sequence, the sequence is actually just about perfect. Really all again that’s happening is it is kind of arguing where the gesture changes take place. So that’s something useful to kind of keep in the back of your mind for later. So we weren’t happy with this so we started to look at other ways that we can improve this. And so there are two things that we have done, both of which right now seem to give pretty good and about the same results. One is to go to a switching linear dynamical system. And so the only difference is we are going to take this, you know, this hidden state and we are going to add some dynamics to it. Another way to think of it is, instead of saying we are going to view each discrete state as essentially having a stationary distribution associated with it, now it can have a little dynamical system that changes a little bit over time associated with it. And that has its own dynamics somewhat independent of the discrete state. So if we do that things get a little bit better in the leave one out case, but they get a lot better in the leave one user out case. And so it seems to be capturing more of the essential aspects of the data and not overtraining into a single user. And then another bit of work, this is really Rene Vidal’s group that did this, is looking more at a dictionary-based approach. So instead of just trying to train straight from the data, first develop a dictionary of motions and then build the entire training apparatus on top of that dictionary. And in fact if you do that you get roughly the same performance. So these two kind of are about equal from the kinematic data. What’s interesting about this method is if you look at the video data.
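To make the switching linear dynamical system idea a little more tangible, here is a minimal generative sketch in Python: a discrete gesture label follows a Markov chain, and each label drives its own small linear system. It is heavily simplified and assumes a scalar continuous state that is observed directly; the gesture names, transition probabilities and dynamics parameters are invented, and the inference and training used in the actual work are not shown.

```python
import random

def sample_slds(n_steps, trans, dynamics, noise_std, seed=0):
    """Sample gesture labels z_t and a scalar state x_t from a toy SLDS.

    trans[z][z2]  : probability of switching from gesture z to z2
    dynamics[z]   : (a, b) so that x_t = a * x_{t-1} + b + noise under z
    """
    rng = random.Random(seed)
    labels = list(trans)
    z, x = labels[0], 0.0
    zs, xs = [], []
    for _ in range(n_steps):
        # The discrete gesture label evolves as a Markov chain.
        z = rng.choices(labels, weights=[trans[z][nxt] for nxt in labels])[0]
        # The continuous state follows the linear dynamics of that gesture.
        a, b = dynamics[z]
        x = a * x + b + rng.gauss(0.0, noise_std)
        zs.append(z)
        xs.append(x)
    return zs, xs

# Hypothetical two-gesture model with different dynamics per gesture.
trans = {"drive": {"drive": 0.9, "pull": 0.1},
         "pull": {"drive": 0.1, "pull": 0.9}}
dynamics = {"drive": (0.9, 0.5), "pull": (0.9, -0.5)}
zs, xs = sample_slds(50, trans, dynamics, noise_std=0.05)
print(zs[:5], [round(v, 2) for v in xs[:5]])
```

With a plain HMM each gesture would emit from a fixed distribution; here the output under a gesture drifts over time according to that gesture's own dynamics, which is the difference the talk points to.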
So this is some work that appeared in [indiscernible] last spring where we took the video data, not the kinematic data. We did spatio-temporal features on the video data and then we trained up these models using the video spatio-temporal features. And so if you take something like this dictionary-based HMM on the kinematic data these were the sorts of numbers we had. But if you now go to the visual data and you do a little bit more embroidery on the dictionary-based methods you can actually start to get numbers which are getting back to that first set of trials that we had. We are now looking at kind of 90 percent, 80-90 percent recognition rates. Interestingly if you add the kinematic data in it goes down a little bit again, which probably kind of makes sense because the kinematic data had a lower recognition rate to start with. And so it’s confusing things just a little bit to put the two together.

>>: [inaudible]

>> Greg Hager: Yeah, well it’s --. So it’s all the tool tip positions, velocities, the rotational velocities, orientation of the tools; it’s about 190 different variables. It includes things like the gripper opening and closing as well. So when I say kinematics I just mean anything to do with any joint on the robot moving one way or another. But we are getting pretty happy with this now. So we have actually managed to scale it up at least to a more diverse group. Yeah?

>>: [inaudible]

>> Greg Hager: So the visual data, I am sorry I don’t have, I was going to get the video from Rene and I didn’t get a chance, the visual data --. So we are taking the video and we are doing spatio-temporal features on the video. So in every frame you are computing something like a SIFT feature, but SIFT in space and time at the same time. So you get that group of features together, you run a dictionary on those features and then you build a dynamical system around that dictionary.

>>: [inaudible]

>> Greg Hager: I wish I knew what I gain in the video.
What I probably gain in the video, you are right, is maybe the best way to think of it is this way. When I learn from the kinematic data it’s like learning pantomime, right. I am just looking at hands moving and trying to guess what they are doing based on when the hands are moving. When I get the video data some of the features are from the surface as well. And so I get contextual information. I now know that the tool is moving to the suture site, as opposed to the tool is moving in a straight line and I can only infer that it’s moving to the suture site. So that’s my hypothesis, but you know, it is machine learning, right. So there is a black box and I haven’t figured out how to open the top of it yet. But, you know, this really is starting to put us, as I said, in the ballpark of really being able to use this data to recognize what’s going on in diverse individuals. So what can we do with that? So I mentioned skill before. So what is skill? Well, right now skill means that you stand next to a doctor, you know, an expert, an attending physician, who is doing surgery. You learn through apprenticeship, they watch what you are doing, they give you feedback and eventually after roughly a year of fellowship, for example, they will say, “Okay, you are now a surgeon. Go do surgery.” Today there is getting to be a lot more interest in saying, well, can we define that a little more formally? How do we assess skill? How do we know someone is qualified to be a surgeon, or how do we do reassessments? So, you know, how do we re-certify someone who is a surgeon? So for example this is something called OSATS, where what you do is you go through a set of stations and you have a set of basically skills you have to practice and there is a checklist. And somebody can more or less grade you on your skills and you get an overall score. But this is still, this is problematic. You have got to set all these things up.
You have got to have somebody who is basically licensed to do this sort of evaluation. We would like to say, “Well, could we get it from the data itself?” And you can actually see stuff in the data that correlates with skills. So for example if you just look at the sequence of gestures that an expert uses they look very clean, right. They are linear, they start at the beginning, they go to the end, and they don’t go back and forward kind of using intermediate gestures. And this is what a novice looks like, if you just plot how they use the gestures. And in fact if you take those basic gestures, take that space and project it down into 2D, you can actually see pretty quickly that experts, intermediates and novices start to separate in the space. So clearly there is something here. So we can actually start to see where you are in the learning curve in surgery from these models. Now that’s using what we call self-declared expertise. It’s just based on how long you have been using the robot, how much training you have had. You can also do something like OSATS on the data. You can have someone actually grade the task that they did. Interestingly it’s much harder for us to replicate OSATS using these techniques. So we drop from about 96 percent to 72 percent, where chance is 33 percent. And you can see if you do the same sort of collapsing that things kind of cluster together more if you look at the by-trial performance as opposed to by self-declared expertise performance. So we have been thinking about how to think about capturing skill a little bit more precisely in these models. And we think we have something now that’s starting to work, although this is still in evolution. But what we have done is we have adopted a different coding scheme for the data. So one of the problems that I have been complaining about to my group for a couple of years now is that we are working from the raw data itself.
So it’s a little bit like saying I am going to take the raw recorded speech signal and try to parse it into [indiscernible] names. Well, you don’t do that in speech, right. You take the raw signal and you do vector quantization of some sort on it typically and then you start to do phonetic recognition. So what’s the version of vector quantization for this? Well, think of this: suppose that I take just the curve that you saw and I build a little Frenet frame at a point. So I can compute the tangent. I can compute basically the plane of curvature; that gives me two directions. The third is now defined relative to those two. And now I can say, “Well, what’s the direction of motion over a small time unit relative to that local frame?” Now this has a lot of advantages. One of which is now I am coordinate independent. So if I am suturing up here or down there it doesn’t matter. The code is the same and it also abstracts the motion a little bit more. And so we have actually found that using even very simple sorts of dictionaries based on these Frenet frame discretizations we can push our recognition up much further. And the way we do it is we discretize and we actually look for the substring occurrences in that data. So you get a long string of symbols and you say, “What are the repeated substrings?” Experts tend to have long repeated substrings. Novices have much shorter and different repeated substrings. And so we can build very simple string kernels, string comparisons that actually get us now again kind of in this 90 percent plus range, which is where we start to feel like we are actually understanding what’s going on. And in fact we can do both gesture and expertise, which is interesting because now we can actually break it down and say, “Well, what gestures are you doing poorly and which gestures are you doing well?” And I can illustrate why you might care about that.
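A minimal Python sketch of this coding scheme follows, under several assumptions: the local frame is a discrete, Frenet-like approximation built from the last two motion segments, the motion direction is quantized to the dominant axis of that frame (six symbols, plus fallbacks for straight or paused motion), and repetition is measured with a simple longest-repeated-substring search. None of these choices are taken from the talk, which does not specify the actual discretization or string kernel.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(a):
    """Normalize a vector, or return None if it is (nearly) zero."""
    norm = math.sqrt(dot(a, a))
    return tuple(x / norm for x in a) if norm > 1e-9 else None

AXIS_SYMBOLS = ("FR", "IO", "UD")  # +/- tangent, +/- normal, +/- binormal

def frenet_code(points):
    """Encode a 3D trajectory as symbols relative to a local moving frame."""
    symbols = []
    for i in range(2, len(points) - 1):
        t_prev = unit(sub(points[i - 1], points[i - 2]))
        t = unit(sub(points[i], points[i - 1]))           # tangent
        step = unit(sub(points[i + 1], points[i]))        # next motion
        if t_prev is None or t is None or step is None:
            symbols.append("0")  # no motion, nothing to encode
            continue
        turn = sub(t, t_prev)
        # Normal: the part of the tangent change orthogonal to the tangent.
        n = unit(sub(turn, tuple(dot(turn, t) * c for c in t)))
        if n is None:
            # Straight segment: only the tangent direction is defined.
            along = dot(step, t)
            symbols.append("F" if along > 0.7 else "R" if along < -0.7 else "S")
            continue
        b = cross(t, n)                                   # binormal
        coords = (dot(step, t), dot(step, n), dot(step, b))
        axis = max(range(3), key=lambda k: abs(coords[k]))
        symbols.append(AXIS_SYMBOLS[axis][coords[axis] < 0])
    return "".join(symbols)

def longest_repeated_substring(s):
    """Longest substring occurring at least twice (overlaps allowed)."""
    for length in range(len(s) - 1, 0, -1):
        seen = set()
        for i in range(len(s) - length + 1):
            piece = s[i:i + length]
            if piece in seen:
                return piece
            seen.add(piece)
    return ""

# A made-up path, and the same path rotated 90 degrees about z and shifted.
path = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 1, 0),
        (2, 2, 0), (2, 2, 1), (3, 2, 1), (3, 3, 1)]
moved = [(-y + 5, x - 3, z + 2) for x, y, z in path]
code = frenet_code(path)
print(code, frenet_code(moved))
```

Because every symbol is defined relative to the local frame, the original and the moved path produce the same string, which is the coordinate independence mentioned above. A skill measure in this spirit could then compare, for example, `len(longest_repeated_substring(code))` across trials.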
I will show you an illustration; this kind of goes now towards teaching, which is what I have done here. I have got two videos as you can see. Both of these are experts and what’s going on is we are taking the video, we are [indiscernible] it. And then what we are doing is we are playing the videos together when the same symbol is occurring. Okay. So you can kind of think of it like if they are speaking in unison the videos play together and if they are saying something different one stops and the other advances. So it’s almost a visualization of edit distance if you will. And what you can see is experts really do converge to a pretty consistent model. They are running at about the same pace. They are doing about the same gestures. There is not a lot of pausing except this guy likes to readjust the needle a little bit and so you will get a little bit of pause there. So that’s cool, it shows that we can align a couple of experts. What about if I take you and tell you to do this task, sit you down with a robot. What can I do now? So there goes the expert, there is the novice. Actually, you probably could have told me that already, right. So you can see it’s not so easy right? Well, so there are a couple of things interesting, obviously --. Yeah, it’s a little scary isn’t it? Luckily that’s a grad student; it’s not a surgeon. >>: And they call these teaching hospitals? [laughter] >> Greg Hager: Well, so here’s the interesting thing. Well there are two things here. So first just to make a quick point here, this person is doing things completely different, right. They are holding the needle differently. The motions are different, but we can still align them. And that goes back to that diagram I showed you before that we are getting the gestures right in the right order. And we can even do it when there is this wild style variation. 
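The synchronized playback can be pictured as an alignment of two symbol strings in which matching symbols advance both videos and a mismatch advances only one. The sketch below is a guess at that logic, using a longest-common-subsequence alignment (edit distance with insertions and deletions only); the function names and the schedule format are hypothetical, not taken from the system shown in the talk.

```python
def align_playback(a, b):
    """Return playback steps: ("both", i, j), ("a_only", i, None) or ("b_only", None, j)."""
    n, m = len(a), len(b)
    # lcs[i][j] = length of the longest common subsequence of a[i:] and b[j:]
    lcs = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if a[i] == b[j]:
                lcs[i][j] = 1 + lcs[i + 1][j + 1]
            else:
                lcs[i][j] = max(lcs[i + 1][j], lcs[i][j + 1])

    schedule = []
    i = j = 0
    while i < n and j < m:
        if a[i] == b[j]:
            schedule.append(("both", i, j))      # same symbol: both videos play
            i, j = i + 1, j + 1
        elif lcs[i + 1][j] >= lcs[i][j + 1]:
            schedule.append(("a_only", i, None))  # video b pauses
            i += 1
        else:
            schedule.append(("b_only", None, j))  # video a pauses
            j += 1
    schedule.extend(("a_only", k, None) for k in range(i, n))
    schedule.extend(("b_only", None, k) for k in range(j, m))
    return schedule

def divergence(schedule):
    """Number of steps where only one video advances (insert/delete edit distance)."""
    return sum(1 for kind, _, _ in schedule if kind != "both")
```

Two experts would be expected to produce mostly `"both"` steps, while a novice paired with an expert would show long runs of one-sided steps; the `divergence` count is one crude way to summarize that.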
The other thing is, and this goes to the teaching hospital point, that one of the problems with robotic surgery, and I think this is going to be generally the case as we go to these more collaborative technologies, is it’s really hard to teach people because you can’t stand next to the person and say, “Here’s what you should do”. You are sitting in a cockpit in this particular case doing something and at best the expert can be outside saying, “Oh you are holding the needle wrong there you should fix it”. But they can’t reach down and say, “Okay, here’s how you should do it”, at least when you are inside the patient. >>: But they can have the same video feed. >> Greg Hager: They can, so they can show you what’s going on. But here what I can do is I can basically just put the expert in the bottle right. I can record that video, you can start to do the task and I can immediately detect when you start diverge from what an expert would do. And you know dare I say a little paperclip that could pop up and say, “Are you trying to drive a needle right now; can I help you”? That may not be the best example, but you see what I mean. You can build now basically a dictionary of what everybody ever did, you know inside a patient, in a training task or whatever. And you can start to detect a variation and you can offer assistance if nothing else by saying, “Here’s what an expert did at this particular point in the procedure”. And so it becomes basically a way to do bootstrap training. And also you can do it at this level now of gesture even. So I can detect that it’s the needle driving that’s the problem because you are holding the needle wrong and you don’t have good control. The rest of the tasks are actually doing just fine. You know, they are passing the needle as well as the expert did. And so you can actually do now really, what I think of as interactive training for these tasks. 
I will give you one other thing that you can do with it, which is I can also learn to do pieces of the tasks autonomously if I want to. I have got a discrete breakdown of the task again. Certain parts of the task are pretty easy to do like passing the needle. So why don’t I just automate that. So here’s a little system we built. This is Nicholas Padoy a former [indiscernible], so you can see this switch probability. So there is a little HMM running behind here which detects when you are in an automatable gesture. And then it switches control over and effectively plays it back. And the trajectory is actually a learned trajectory too. So you sit down, you do the task for a couple of dozen times, the system learns the model both in terms of discrete states, but also it does a regression to compute trajectories for these automated trajectories. So you learn the model, you break it down into things you want to automate, it learns the trajectory and now again it pretty reliably recognizes in this case when you are done driving the needle. And then it says, “Okay, I could do that automatically if you wanted me to”. Again, I am not advocating that this is the way you want to do it, but at least illustrates the concept that the system is capable of now changing how the robot acts or reacts based on the context of what’s happening within the procedure. So, you know, putting it all together we actually can start to build these systems which work together with people and they actually have measurable impact. One of the nice things about this is it’s not just that I can build it, but I can say, “Well did it change anything”? So in this particular case it reduces the amount of master motion that you need to do or the amount of clutching that you have to do, which actually turns out to be important in terms of efficiency. We can go one step further with this. We talked about the fact that we don’t have perception in the loop. 
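The "switch probability" idea can be illustrated with a small HMM forward filter that tracks the probability of being in an automatable gesture and offers to take over once that probability crosses a threshold. Everything concrete here is assumed for illustration: the two states (`drive`, `pass`), the two observation symbols (`slow`, `fast`), the probabilities, and the 0.9 threshold are made up and are not the parameters of the system described in the talk.

```python
def forward_filter(observations, init, trans, emit):
    """Return P(state | observations so far) after each observation."""
    beliefs = []
    belief = dict(init)
    for t, obs in enumerate(observations):
        if t > 0:
            # Predict: push the previous belief through the transition model.
            belief = {s2: sum(belief[s1] * trans[s1][s2] for s1 in belief)
                      for s2 in init}
        # Update: weight by how well each state explains the observation.
        belief = {s: belief[s] * emit[s][obs] for s in belief}
        total = sum(belief.values())
        belief = {s: p / total for s, p in belief.items()}
        beliefs.append(belief)
    return beliefs

def first_switch_index(beliefs, state, threshold=0.9):
    """First time step at which the filter is confident enough to offer automation."""
    for t, belief in enumerate(beliefs):
        if belief[state] > threshold:
            return t
    return None

# Hypothetical model: "drive" = manual needle driving, "pass" = automatable needle pass.
INIT = {"drive": 0.9, "pass": 0.1}
TRANS = {"drive": {"drive": 0.9, "pass": 0.1},
         "pass": {"drive": 0.1, "pass": 0.9}}
EMIT = {"drive": {"slow": 0.8, "fast": 0.2},
        "pass": {"slow": 0.2, "fast": 0.8}}
```

With this toy model, a run of `slow` observations keeps the `pass` probability low, and a few consecutive `fast` observations push it past the threshold. The real system would also need the learned trajectory to play back after the switch, which is not sketched here.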
So here are a couple of examples of building perception components that we have picked up. And we can start to look at how we could use perception to provide even more context and make these things even smarter. So I am actually going to use the 3D object recognition side of this. And so we did actually some experiments this summer. This is what we call Perceptive Teleoperation. So again da Vinci console in this case it’s wrapped in [indiscernible], it’s connected to a robot which is over in Oberpfaffen, Germany in the robotics and mechatronics lab there. And so we were interested in saying, “Well, suppose you are trying to do these tasks and for whatever reason you have got a poor communication channel. You can’t really get the information across either with the bandwidth or the delay that you need to do the task efficiently”. Can we use perception to augment the performance? And the idea is to say, “Well again, if I can recognize what you are trying to do and I have got a perceptual grounding for the task I can automate or augment some part of the task around the perception cues that are there”. So this is a little, this is actually work that is going to appear at the robotics conference in May. So this is the robot in Germany. It’s being teleoperated from Johns Hopkins on a da Vinci console in fact. And this is without any sort of augmentation. And there is about probably a quarter to a half a second time delay just depending on what the internet happens to be doing at any point and time in this. And so you can see you end up in this move and wait sort of mode. Where you move as far as you think you feel safe and then you wait for a little bit. And this is what they do with the Mars Rovers on Mars, right. They move them a couple of meters and they wait 20 minutes until they see what happened and then they move them again. And, you know, in this case the operator grabbed it, but in fact they didn’t have very good orientation. 
So this thing kind of swiveled and moved. And in fact in some cases it will actually fall apart. Now what we do is we turn on assistance. It actually parses this structure, this little orange line says, “Oh, I see a grasp point”. It recognizes you moving to grasp and it simply automates the grasp. And so now you have got it in the right sort of configuration. It happens relatively quickly and now you can go back to whatever task you want to do. And it simply automated that little bit of it. And again you can measure the difference that it makes. So in this case it just about doubles the time, or halves the time it takes to perform the task. And in fact the same idea now is what we would like to do in that little knot-tying task that we talked about. You know breaking this down into component pieces. So one of my grad students I said, “Just as a benchmark use the da Vinci and see if you can do this task”. So you can see he is using all three tools on the da Vinci in this case. So you have got to switch between tools to do it. So this took, you know we could time this, it probably takes 20 seconds or something like that for a human. And this right now takes about 3 to 4 minutes to do with a robot. And so I said, “Okay, your thesis is now defined. So how do you go from 3 or 4 minutes to 20 seconds with a robot”? And I don’t know if he is going to achieve that, but at least we can start to see the sort of benchmarks we have to achieve to be in this sort of space with these techniques. So just the last thing, so I have been talking a lot about robotics. And you saw in the beginning of my talk I had also human and computer interaction using vision. And so I wanted to talk a little bit about that. And in fact that’s part of the reason I wanted to stop by today. It’s to talk about something else we are doing, which is now still I think of it as collaborative computing in physical space. 
But the physics is much more about people interacting with virtual environments than robots, interacting with the physical environment. This is a display wall project. So in just about a year ago, a little over a year ago, the library came to us. And they were building a new wing for the library. And to make a long story short they wanted to have some technology focus to the library. Just by way of background Johns Hopkins library at one point in time actually had more digitally stored data than the library of congress. We host the Sloan Digital Sky Survey. We host [indiscernible] data from biology. This happens to be scans of some medieval French manuscripts that are in the archives, so terabytes and terabytes of data. And they said, “Now is there a way that we could start to think about ways that people can interact naturally with data in the library space”? And so we built a display wall for them. This is my grad student [indiscernible] with his back to you who built basically a display wall from scratch. Starting in about December, he came up with the physical design, the electrical design, wrote all the software and we opened it in August and we dedicated it in October; so basically 10 months from conception to completely running. The reason again is that our library really thinks of this as a way to start to think about how people will interact with information in the future and how research will be done around these large data archives. And so this is what it looks like. So it’s about 12 feet wide. You can see it’s running three Kinex for gesture interaction. This is the, kind of the opening screen that you walk up to. It’s just a montage of images from Hopkins. So you walk up, it sees you, it builds a little avatar out of the images and you know you can kind of play around with that if you want to. 
And then we use this curios put your hands on your head gesture and you get a fairly rudimentary, but still workable interface into a set of applications that you can now drive using gestural input. And part of what we are after here is not that these are the best applications or that the user interface is done as well as it could be. But it’s really interesting that’s it’s out in the middle of the university and about 10,000 people get to see it at various times during their academic careers. And so our goal here really is to think of this as a lab. It’s a lab to learn about how people might want to use technologies like this. And then to try to run after them to figure out what would we need to make this technology useful for them. We of course had to have a video game. So we went live and we have basically got an image viewer, a video game and a couple of other kind of image modalities. I was hoping that I would have this to show you. We actually figured out how to load CAD files of buildings in that. So you can start to do walkthroughs of buildings basically at scale using spatial interaction. So it really is a work in progress, but it’s now kind of flipping around and letting us use a lot of the same ideas and technologies by thinking of it more from people interacting again in virtual space. So for example if you are doing something in biology there is a natural task. You know if I am doing molecular design there are things that I want to be able to do. Manipulations I want to take place, that’s my task. I want to parse that and then I want to do anticipatory computing to say, “Well if they are trying to move this particular binding site over here how can I actually run a simulation that will check and see if that’s a reasonable thing to be doing at this point”? So part of it is just exploring that level of interaction. We have had people already come to us and say they want to do art and dance. 
So they want to make the system react to people doing like a dance performance in front of it. And make that part of the display. Crowd-source science: so we are trying to put connectomics data on it and let undergraduates be labelers for biology data. We need, you know, it’s basically like Mechanical Turk except now with undergrads. And the payoff for them is we say, “Look if we publish a paper on this you are a co-author”. So I would say my goal is if I could publish a science paper with a large fraction of the undergraduate population of Johns Hopkins then that’s my win. >>: [inaudible] >> Greg Hager: So you know there are physics papers with over a thousand authors on them. >>: Wow. >> Greg Hager: Yeah so I was actually talking about these huge experiments, right. I mean it’s --. >>: [inaudible] >> Greg Hager: You know Hopkins is a research university. We advertise the idea that undergrads should get involved in research. So what the heck, here they go. And actually we have got, well I see I can’t spell, these French medieval manuscripts are actually motivated by an artist who wants to do research on those manuscripts. And he needs something that allows him to interact with the data with the sort of visual scale that the wall can give them. So I really think of it as it’s really just this lab sitting in the middle of the library. And it’s just trying to create the idea that people can do this as a project to create new ways to interact with data in their field. Just to give you some anecdotes about it. So we are getting about right now 10 to 50 sessions a day. It started out at about 500 a day in fact. We have got about 10 students a semester right now that actually come up and say, “I would like to do something with the display wall”. We get about, on the average, one query a week from somebody in the Johns Hopkins community saying, “I would like to build something that could run on the wall for my research or just for whatever personal amusement I have”. 
We are actually starting to get sites, other libraries in fact, that are calling us up and saying, “Hey, could we put this in our library as well? And would you be willing to share the code”? I don’t think that most libraries quite understand that this is not quite as much of a turn-key operation as they might like to think. But, it’s interesting that a lot of people are latching onto this now. So let me just kind of close by saying that I started out by saying that I think the world is different today than it was 10 or 15 years ago. And it’s really different because of the platforms that we have, and the computational tools that we have, the output devices, you know that display wall could not have been built easily even 5 years ago probably the way I can now. And so we really have to think of what does this mean as --? You know the world has become porous to computing. You know just about every aspect of life can cross the barrier from the physical world into the computational world and back again. So how does that change how we want to think about computing? So one of the things I am thinking a lot about these days is what does it mean to program these sorts of collaborative systems? You have got hundreds, thousands of different things that you would like these robots or these display walls to do. And you can’t have a computer science student sitting there next to somebody every time saying, “Okay, here’s the code that’s going to make this application work”. So how do you think about combining what I think of as programming in a schematic sense? You know saying, “Look, here’s the thing I want to do” with probably some learning and adaptation that then says, “Okay, now here’s how it gets customized to what I am trying to do”. So in the robot case for example if I am doing small OT manufacturing I know the manufacturing steps. 
I know roughly the handling that has to take place: build the schema, and then run through it a few times manually in a training cockpit like you saw with the da Vinci, have the robot kind of fill in or the system I should say, fill in some of the details and now I have got a workable program. But what’s the paradigm around that? And can we make that something that’s accessible to a broader group? Shared context is important. If we want these systems to work with people there has to be a shared notion of task in context. I think I already said what’s key also is that we have to have these platforms in the wild because we have to see what people do. It’s, you know, I always said I used to have things like the display wall inside the lab, and we would bring people in and they would be really excited, play with it and then go away. And having that display wall out in the world, you know, we spent two weeks, we had a soft opening, so we had the wall up for two weeks before classes started and it was incredible how many revs we went through in that two weeks. We just watched people use it, kind of fixed things, changed things, until it got to a stable point. So we really have to test these things in the wild. And then I think they key thing is once we have this creating interfaces that now really do focus on interaction as opposed to input/output. So thinking about how do I anticipate what’s going on and adapt to it essentially? Thinking about interfaces in terms of a dialog as opposed to, you know, I type something in and something comes back to me. There is state, there’s history, there’s intent around it. And I think the great thing is there is a real reason to do it, right. There are real tasks, there are real industries, and there is economics that can actually drive this. So I will just close by saying you know, I think we are living in such a cool time. And I am so glad that I was here actually to see it. 
I think computing keeps reinventing itself, but at least relative to what I do it’s now suddenly in a state where we can do all sorts of things that I just couldn’t have imagined even 10 years ago. And so with that I will thank probably a subset of my collaborators at this point in all these different projects. I will also thank the people that support me to do it. And maybe I will just leave you with that final thought about where we may be going some day. All right thank you very much for your attention. [clapping] >>: I just have a question. So I am just wondering [inaudible]. >> Greg Hager: Yeah, right. >>: [inaudible] >> Greg Hager: That’s right, exactly. >>: But for Kinect I noticed that usually the Kinect [inaudible]. So that’s why sometimes [inaudible]. >> Greg Hager: Uh huh, right. >>: But I wonder if that’s [inaudible]. >> Greg Hager: No it is, absolutely. Well so I should say the kinematic data has super high fidelity. You know --. >>: It’s better than Kinect? >> Greg Hager: Oh yeah, I know where the tools are to you know fractions of a millimeter; at least in relative motion. But what they are missing is the context. So the analogy would be if I had a skeleton, the skeleton doesn’t tell me if you’re holding something. >>: Right. >> Greg Hager: And I might be able, within the context of a task, to infer that. Like if I see you reach down and see a pickup motion I say, “Okay, they probably just picked up the briefcase that’s part of whatever they are doing”. But with the video data I can confirm that and I know that. And I know whether you succeeded or not for example. So in fact one of the projects that one of my students is playing with just to kind of get warmed up is can you detect whether or not, in a fairly automated way, what the gripper is holding; so a needle, suture, if it’s in proximity to tissue, but do it without hand tuning something? Just get a few million snapshots and then correlate kinematic data to what you see in the image. 
And then learn something about the set of states that the gripper can be in. And just that little bit of information can make a huge difference. And we already know that from some manual tests. >>: Something that you have been hinting at is moving from this pure one to one manual task to sort of manual plus [inaudible]. >> Greg Hager: Uh huh. >>: Just curious where your [inaudible] gallbladder removal procedure fairly standard thing I guess [inaudible] done by a robot a week or so ago. 20 years from now is that going to be a one button or [inaudible]? >> Greg Hager: No. >>: I mean [inaudible]. >> Greg Hager: You know, no for a couple reasons. One is no technically I think that would be incredibly challenging to do. And no socially because I just don’t think it’s an acceptable solution. So there is always going to be a human in the loop system. And I think what I imagine more happening --. So we actually, so to give you an example of the sorts of things that we think about doing, so one of the procedures that is still on the cutting edge of robotic surgery is laparoscopic partial nephrectomy. So it’s removing a tumor from the kidney laparoscopically with a robot. And it’s challenging for a number of reasons. One is it’s, the way you do it is you burrow into the kidney, you expose everything and then you clamp the feeder valve to the kidney and then you go and excise the tumor. And when they do that they start a clock. And the reason they start a clock is because it’s well known that after about a half and hour kidney function just drops off like crazy. And the reason you have to clamp it is because if you try to cut the kidney when it’s fully, when you haven’t done that, it bleeds like stink. I mean you just can’t do anything with it at all. So you have now got this really constrained thing. You have got to go in, you have got to cut out the tumor, you have got to [indiscernible] it, and you have got to suture it. 
And so we look at places like that and say, “Okay, what pieces of that could we provide some sort of augmentation for?” And it’s not just physical. So we provide a dissection line so we can actually, I have got a video where I can show you. We have registered and we show you where you should dissect to get a reasonable tumor margin. We can actually start to, in the suturing case, we can start to automate. So you have to really use three hands to suture so you have got to switch between hands and that takes time. So if you can automate the movement, retraction, or any piece of it you can basically shorten that time and that improves the outcome. And it reduces the difficulty of the procedure. >>: [inaudible] so with airplanes right and like automatic flight they don’t let you, for instance, put them into a stall. Like are you thinking there are other possibilities for [inaudible]? >> Greg Hager: So my dream is if you had these 300,000 procedures and you had them all basically in a dictionary as it were and you are going along you can always be comparing what’s going on now to what happened in other cases. And you know the outcomes of all those cases now. So you can start to predict, okay given where you are and what you are doing this isn’t looking good anymore and by the way here’s the closest example to what you are doing. And here is what happened. And so you really could start to have, you know again paperclip is not the right model to think of, but something that at least could have a little icon that says, “You know, you have deviated from standard procedure here; you may want to check what you are doing and think about it”. >>: The other thing with like thinking of airplanes and like semiautomatic. And I understand [inaudible], but it’s the transition points right? >> Greg Hager: Right. >>: Where you go from back you know to the, that’s challenging [inaudible]? >> Greg Hager: So situational awareness. 
The thing I have been thinking a lot about recently is automated driving because everybody is saying automated driving is going. And you know you can imagine having an auto driving car which does great until it gets into a situation that it doesn’t understand that says, “Well, I don’t know what to do; here it’s your problem now”. And so suddenly you know in the middle of barreling over a cliff it’s your job to suddenly take over driving and that’s unacceptable. And I think that goes though to this contextual awareness in a task in anticipation and saying, “It’s not just can the system do it, but should it do it right now; because doing it right now might put the human in a position that later on they don’t know how to recover from”. >>: I guess you are in computer science? Do you know what’s going on in say the mechanical engineering/control theory guys in terms of teleoperation, especially with delay? That really speaks to, I think, having this delay you have to do something locally, low delay, meaning some kind of intelligence. Now are they doing anything that will address the sorts of problems you are looking at? >> Greg Hager: So that’s another one of my hobbies right now, in fact. A lot of the science around this in that space right now is looking at things like: how do you actually prove properties of systems with time delays for example? So I can prove passivity of a system. And if I have a passive system even with time delay the system will not go unstable, which if you are doing surgery you obviously would like to be able to prove that your robot won’t go unstable inside the patient. What is missing I think and this is something that, this is kind of my glass of wine conversations, is if you think about doing those tasks there is a certain amount of information you need to perform a particular task. What are the objects in the environment? What are their orientations? Where am I trying to put them? How much do they weight? Who knows what it is. 
And if you are doing full teleoperation you are assuming that all of that information lives in my head essentially. And I have enough capability to get movement information to the robot to say, “Here’s how you perform the task using what’s in my head”. If you want to start to automate pieces of it what you are doing is you are moving information into the remote side. So now the remote side plus you have to have enough information to perform the task. So I think there is really this notion of information invariance around tasks, which says, “I could fully automate it if the robot had every piece of information relative to the task”. It probably doesn’t, so a piece lives with me and a piece lives with it. Now how do we prove properties or think about properties of systems where information lives in both places and the sum of the two pieces is enough to perform the task? That’s not something, as far as I know, the control theory people are thinking about right now. The practical solutions right now, I will tell you, are mostly anticipatory. So you try to predict what’s going on and you feed forward the robot, but you try and do it in such a way that you can maintain safety. Yeah? >>: So [inaudible]. >> Greg Hager: I can’t make the robot data public because that’s actually governed by Intuitive Surgical. I have an agreement not to share their data with people. Um, other surgery data, sure, [inaudible] IRB concerns, so privacy concerns or we could share that data. >>: Do you usually publish your data on website? >> Greg Hager: Not until now and that’s largely because again, I have to do these sorts of studies they involve humans so I have to get institutional review board to approve it. And if I put it out on the website I would go to jail. So I kind of try to avoid that, but I can actually ask them for permission to do it. And we have done it in the past and so we probably will. >>: [inaudible] >> Greg Hager: That’s right. [inaudible]. 
So that will create some interesting [laughter] >> Greg Hager: Well and the other thing, and I am sure some of you know this, data is gold, right. And so if you have data and you have not yet kind of mined that data –-. So it’s really a question: what’s a reasonable period of time that you have to exploit a data archive before it has to be made public? >>: [inaudible] >>: Did you watch the Academy Awards yesterday? >> Greg Hager: I stopped by Rick’s house just to see the [inaudible]. >>: We walked in the house and Michelle Obama was on the screen, so that’s the part we saw. >>: So there was a teddy bear in the show, which I have no idea if it was computer graphics or a robot, it was too small to [inaudible]. >> Greg Hager: Probably teleoperated would be my guess. >>: It walked out and [inaudible]. >> Greg Hager: Oh, okay. >>: [inaudible] >> Greg Hager: I will go check. It has got to be on YouTube by now I am sure. >>: Was it related to that movie? >> Greg Hager: Yeah, the movie. >>: I wanted to see it again to try to start picking apart whether it was computer graphic, pre-rendered or what. >> Greg Hager: Hmm, interesting. >>: So I have a question regarding the large display on the library stock, which is very cool. And I have two questions about that. The first one I am wondering about the user’s usage. So do most students use it to play a game or use it to get information about the, you know, [inaudible]? And my second question is: have you observed any frustration from the student to using the system? And would that frustration, if there is, would that frustration come from how you navigate the library? >> Greg Hager: Well, so these are the usage statistics. By the way this is the dedication day, so that just about got the highest usage. So our usage right now I think was saying kind of 20 to 30 sessions a day. Most people play the video game. 
About a third of them use the image visualization app and about a small number of them just kind of go through some of the other how to stuff on the wall. And that’s kind of --. Actually I am kind of surprised this isn’t bigger. So I am pleased that they are at least looking at images. And we have got some really nice images. So it actually is fun to do. Um and I am sorry what is your second question? >>: The second question was: have you observed any frustration? >> Greg Hager: Oh, frustration. Yeah, my frustration is the gesture recognition is not as good as I would like. But I will tell you when we started the wall project we looked at both Windows and then at Open Source. For a variety of reasons we ended up going Open Source. So we are using NITE as the gesture recognition software. We are not using Microsoft. So my firm belief is that if we were to run Microsoft it would all work perfectly. [laughter] >>: Are there any, because it’s a large space, do you guys [inaudible] multiparticipant interaction with that? >> Greg Hager: Yeah, most of my interest quite honestly is in multiparticipant collaborative interaction. So how do you have three people solving a problem with computation behind it? And in fact nothing for the wall is one to one; it’s all multi to one. >>: So I have a question of the evolution of the training session. >> Greg Hager: Uh huh. >>: I mean there is no absolute [inaudible]? >> Greg Hager: Yeah, that’s right. >>: Usually people are over confident about their skills --. >> Greg Hager: So we don’t do self assessment. So people do tell us what experience they have and we do compare with that as well, but we actually have some clinical collaborators that go through and they do an objective assessment of the data. So that OSATS score is done by someone who is not part of the trial. All right. [clapping] So have we exhausted everyone? All right, thank you.