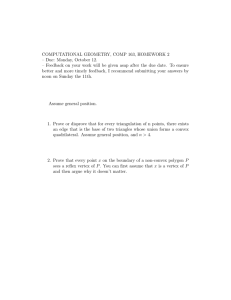

24949 >> Yuval Peres: Okay. So we're delighted to have Shayan tell us about approximating the expansion profile. >> Shayan Gharan: Hello, everybody. So today I'm going to talk about approximating the expansion profile of a graph and local graph clustering algorithms. This is joint work with Luca Trevisan. I'm going to start by motivating the problem and then I'll talk about the results. So today I will talk about clustering problems. Suppose we are given an undirected graph G that may, for example, represent friendships in a social network, and we want to partition it into good clusters. Okay. Throughout the talk I'm going to assume that the graph is d-regular, but the results generalize to nonregular graphs. I'm going to define it rigorously, but by a good cluster I mean a set that is highly connected inside and loosely connected to the outside. So let me now define how we're going to measure the quality of a cluster. We're going to use expansion. For a set S, the expansion of S, phi of S, is defined as the ratio of the number of edges leaving S over the sum of the degrees of the vertices in S. Since we assume that the graph is d-regular, the denominator is just d times the size of S. So, for example, here in this picture the expansion of this set S is one-fifth. The expansion is always between 0 and 1, and if a set has a smaller expansion, it is a better cluster. You can also think of the expansion as the probability that a random walk started at a uniformly random vertex of S leaves it [inaudible]. All right. So is this clear? These are the definitions I'll use throughout the talk. The expansion of the graph, phi of G, is defined as the worst expansion, meaning the smallest expansion, of any set containing at most half of the vertices. This is also called the sparsest cut of the graph. The sparsest cut problem has been very well studied and there are different lines of algorithms.
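The definition of expansion given here can be sketched in a few lines of Python. This is only an illustration: the adjacency-list representation and the function name `expansion` are assumptions rather than anything from the talk, and the 10-cycle is a made-up example, not the graph on the slide.

```python
def expansion(adj, S):
    """Expansion of S: edges leaving S divided by the sum of degrees in S.

    adj is a hypothetical adjacency-list dict {vertex: [neighbours]} of an
    undirected graph; S is any non-empty collection of its vertices.
    """
    S = set(S)
    if not S:
        raise ValueError("S must be non-empty")
    leaving = sum(1 for u in S for v in adj[u] if v not in S)
    volume = sum(len(adj[u]) for u in S)
    return leaving / volume


# Made-up example: a cycle on 10 vertices (2-regular).
cycle = {v: [(v - 1) % 10, (v + 1) % 10] for v in range(10)}

# Five consecutive vertices: 2 edges leave, the degrees sum to 2 * 5.
print(expansion(cycle, range(5)))  # 0.2
```

A single vertex always has expansion 1, since every one of its edges leaves the set.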
But let me talk about the Cheeger type inequalities, which will be of interest to us today. Cheeger type inequalities characterize the sparsest cut of a graph in terms of the second eigenvalue of the normalized adjacency matrix. [inaudible] Alon proved that for any graph, phi of G is at least one-half of 1 minus lambda_2, and it's at most the square root of 2 times 1 minus lambda_2. And in fact this proof is constructive, so you can get a set of this expansion. This means that you can obtain an order of 1 over root phi approximation of the sparsest cut of the graph. Why is this important? Because this guarantee is independent of the size of the graph. Nowadays, since we have to deal with massive graphs, it would be very good to have approximation algorithms whose approximation factor is independent of the size of the graph. And, of course, this algorithm, the rounding algorithm, is very simple and can be implemented in near linear time. All you need to do is compute the second eigenvector of the normalized adjacency matrix, embed the graph on the line based on the values of the vertices in this eigenvector, sweep this line from left to right and find the best cut. So in practice, people use this algorithm to partition the graph, and they use a kind of recursion. First they find an approximation of the sparsest cut and divide the graph in two. Then they do this recursively on each of the two sides until the graph is partitioned into good clusters. But this may not do well in practice because of two things. The first thing is that although this algorithm is near linear time, you may end up spending a quadratic amount of time running it, because there is no guarantee on the size of the output set. It could be that after running for linear time you just end up separating three or four vertices from the rest of the graph. Okay.
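The rounding step described here (second eigenvector, embed on a line, sweep) might look like the sketch below. It is a toy under stated assumptions: plain power iteration on the lazy normalized adjacency matrix stands in for a proper eigensolver, vertices are assumed to be numbered 0 to n-1 with no isolated vertices, and `dumbbell` and `sweep_cut` are hypothetical names.

```python
import math
import random


def dumbbell(k):
    """Two k-cliques joined by one edge (made-up test graph)."""
    adj = {v: [] for v in range(2 * k)}
    for base in (0, k):
        for a in range(base, base + k):
            adj[a].extend(b for b in range(base, base + k) if b != a)
    adj[k - 1].append(k)
    adj[k].append(k - 1)
    return adj


def sweep_cut(adj, iters=300, seed=0):
    n = len(adj)
    deg = [len(adj[v]) for v in range(n)]
    total = sum(deg)
    # Unit top eigenvector of the normalized adjacency matrix.
    top = [math.sqrt(d / total) for d in deg]
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        # Project out the top eigenvector, then apply (I + D^-1/2 A D^-1/2) / 2.
        dot = sum(a * b for a, b in zip(x, top))
        x = [a - dot * b for a, b in zip(x, top)]
        y = [0.5 * x[v]
             + 0.5 * sum(x[u] / math.sqrt(deg[u] * deg[v]) for u in adj[v])
             for v in range(n)]
        norm = math.sqrt(sum(a * a for a in y))
        x = [a / norm for a in y]
    # Embed on the line and sweep from left to right over all prefixes.
    order = sorted(range(n), key=lambda v: x[v] / math.sqrt(deg[v]))
    inside, cut, vol = set(), 0, 0
    best_phi, best_k = float("inf"), 0
    for k, v in enumerate(order[:-1], start=1):
        inside.add(v)
        vol += deg[v]
        # Edges from v into the prefix stop being cut; the rest become cut.
        cut += deg[v] - 2 * sum(1 for u in adj[v] if u in inside)
        phi = cut / min(vol, total - vol)
        if phi < best_phi:
            best_phi, best_k = phi, k
    return set(order[:best_k]), best_phi


S, phi = sweep_cut(dumbbell(4))
print(sorted(S), phi)  # one of the two cliques, expansion 1/13
```

On this dumbbell the sweep separates the two cliques; on an arbitrary graph the output set can be tiny, which is exactly the first deficiency raised in the talk.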
So you may have to run this algorithm order of n times to partition the graph. And, on the other hand, it could be that many of the small communities are misclassified by this algorithm because, for example, once you separate this giant component, you may end up misclassifying a small community, because which side of the cut you put it on doesn't change the value of the cut that you're selecting that much. So in order to overcome these deficiencies, Spielman and Teng suggested the following interesting problem, called the local graph clustering problem. Suppose we are given a vertex u of interest in a massive graph and we want to find a nonexpanding cluster around u in time proportional to the cluster size. So, for example, think about the Facebook graph, the whole massive graph. Let's say you want to find, for example, the history as a cluster in this graph. And we are given, for example, the Facebook page of Yuval. So all we can do is go to the Facebook page of Yuval and look at his friends. And we can go to the Facebook pages of his friends and do this again and again. But we are not allowed to jump in this network. So basically the question is: how should we explore the neighborhood of u to find this cluster? And before talking about the results, note that if we can solve this problem, we kind of get rid of these deficiencies. In fact, we can do the clustering of the graph by running this algorithm over and over, and we know that every time the amount of time that is spent is proportional to the size of the cluster that we're going to get. So the running time would remain linear. And, on the other hand, we know that if we start from a small community, then hopefully we're going to find something very close to it. Okay. So Spielman and Teng answered this question, kind of, and proved the following nice guarantees.
I should also mention that they use this algorithm in their near linear time Laplacian solver. So they prove the following: for any target set A, if we are given a random vertex u inside A, then the algorithm will find a set S mostly in A with the following guarantees. The expansion of S is at most the square root of phi log cubed of n. And the running time is order of the size of the output times 1 over phi squared poly log n. We call this 1 over phi squared poly log n the work to volume ratio. This is basically the ratio of the running time of the algorithm to the size of the output. So ideally, if this ratio is constant, then by applying this algorithm we can have near linear time partitioning of the graph. >>: Phi is still phi of A. >> Shayan Gharan: Yes, phi is phi of A. >>: Mostly in A is epsilon. >> Shayan Gharan: Yes, mostly in A is 1 minus epsilon, some constant. All right. And also this algorithm is randomized, so they can do this with some constant probability. In fact, you cannot prove that this is true for every vertex in A. They can prove it for half of the vertices in A. So this result has been improved. Andersen, Chung and Lang improved the approximation guarantee to root of phi log n, and the work to volume ratio to 1 over phi poly log n. And more recently Andersen and Peres improved the work to volume ratio to 1 over root phi poly log n. The underlying idea of all these results is to run a random walk from u. So basically they [inaudible] random walk, but they use very different techniques, as you can see here: Spielman and Teng used truncation of random walks, Andersen, Chung and Lang used approximate PageRank vectors, and Andersen and Peres used the evolving set process. Today I will talk about the evolving set process, and we'll use that. Okay. So what do I want to show you from this table?
The thing is, if you look at the approximation guarantee of all these algorithms, unlike the Cheeger inequality, here there's a dependency on the size of the graph. So it has been an open problem whether one can find a local graph clustering algorithm without any dependency on the size of the graph. That essentially would give you a local variant of the Cheeger inequality, because it would give you the same guarantee as the Cheeger inequality. This is basically the subject of this talk, and we kind of solved this problem. We proved the following: for any target set A, if we're given a random vertex u in A, then we can find a set S such that the expansion of S is at most the square root of the expansion of A over epsilon. And the work to volume ratio is at most the size of A to the epsilon, times 1 over root phi, poly log n. So this indeed kind of provides a local variant of the Cheeger inequality, because both the approximation guarantee and the running time are independent of the size of the graph. They just depend on the size of the cluster that you're trying to find. >>: Can you explain -- >> Shayan Gharan: Yes, up to this poly log n. Yes, I will. One more thing is that this algorithm generalizes the previous result, in the sense that here you can choose epsilon to be whatever you want. If you choose epsilon to be 1 over log of the size of A, we recover the previous result. In fact, there's a trade-off between the running time and the approximation guarantee. By choosing larger values of epsilon you will obtain a better approximation guarantee with a worse running time. By choosing smaller values of epsilon you'll get a better running time and a worse approximation guarantee. Any questions? Good. So before talking about the proofs or the algorithm, I want to talk about an implication of this result, which is interesting in the theory community. There's a close connection between this problem and the small set expansion problem. So let me first define the expansion profile of the graph.
For any parameter mu between 0 and n over 2, phi of mu is defined as the worst expansion of any set of size at most mu. So, for example, phi of n over 2 is just the sparsest cut of the graph. The small set expansion problem asks whether you can find an approximation algorithm for phi of mu whose guarantee is independent of the size of the graph. By the Cheeger inequality, we know that we can do this for mu being n over 2. So basically the question here is whether you can do that for any value of mu. >>: For mu which is some constant times the size, for any constant, because mu depends on this. So I'm not sure -- >> Shayan Gharan: For every mu. It cannot depend on mu. >>: On mu at once? >> Shayan Gharan: Hmm? >>: Mu at once. >> Shayan Gharan: I give you a mu as input, you give me an approximation algorithm. >>: On n. >> Shayan Gharan: Yeah, it could depend on n. >>: If it's n, it could depend on G. n is the size of G, right? >> Shayan Gharan: Mu may depend on the size of G. But I want the approximation guarantee not to depend on the size of G. So the approximation guarantee cannot depend on mu, for example. It may depend on phi of mu, but it may not depend on mu. Phi of mu is always between 0 and 1, so it may depend on phi of mu. It could be, for example, some crazy function of phi of mu. So why is this problem of interest to us? First of all, Raghavendra and Steurer conjectured that this is an NP-hard problem. They conjectured that for every value of rho there exists some delta, much, much smaller than rho, such that it is NP-hard to distinguish whether phi of delta n is close to 1 or phi of delta n is close to 0. And, more importantly, they proved that this conjecture implies the unique games conjecture. So, in other words, this means that if you want to design an algorithm for unique games you should start from the small set expansion problem, because this is an easier problem than unique games.
Every algorithm -- if you design a polynomial time algorithm for unique games, you'll obtain a polynomial time algorithm for the small set expansion problem. >>: So, in other words, you're using these conjectures? >> Shayan Gharan: Yeah, in other words, if you want to refute this, you should start by refuting this. >>: Because if you prove it -- >> Shayan Gharan: No. No. Not for the proof. No. It could be that you could design an algorithm for this one but not for unique games. Okay. So our result as a corollary implies an approximation algorithm for this problem. We can prove that for any value of mu and epsilon, we can find a set of size at most mu to the 1 plus epsilon and expansion at most root of phi of mu over epsilon. So this does not refute the conjecture, because the size of the set that we find is larger than mu. If you could prove, for example, that the size of S is within a constant factor of mu, then you would have refuted the conjecture. >>: What's the running time, though? >> Shayan Gharan: The running time is again, if I have a vertex of the target set, then it's going to be proportional to the size of the set. It's basically a corollary of the algorithm. So, as I said previously, our local graph clustering algorithm can ensure that the output set has a large intersection with the target set. In fact, it gives you that a mu to the minus epsilon fraction of the output set lies in the target set. >>: The definition of phi of mu was global -- >>: What? >>: In your algorithm you're supposed to start with the target set? >> Shayan Gharan: No. We can run our algorithm from every vertex in the graph and just find the best. We don't need to start from the target set. I mean, then the running time would be worse. If you know -- yeah, it depends. Here we don't want to optimize the running time. We just want to find an approximation for the problem.
>>: See, they have different natures. The issue is not locality. The algorithm to do this will start with the vertex; what happened with the [inaudible]. >> Shayan Gharan: So the idea is that if you have a local algorithm, there's a good hope that you can solve this problem, because a local algorithm starts from the neighborhood of a vertex and then it expands to all of the vertices. So you may hope that along the way you find a small nonexpanding set. That is why these two problems are kind of related to each other. For example, it could be, though we cannot prove it, that if you get rid of the size of A to the epsilon you would have solved the small set expansion problem. Okay. This result was also proved independently by Kwok and Lau using different techniques. So if there's no question, let me go on to talk about the algorithm and the analysis. >>: So can you go back? You said it's still possible that even over there you could remove the size of A to the epsilon? >> Shayan Gharan: Yeah. Yeah, it is possible. And in fact even the algorithm that I'm going to present may do that. But we cannot prove it. I should mention that currently we don't have any tight example for it. It could be that it can refute this kind of conjecture, we don't know. Okay. So in this talk, I'll put the main focus on this theorem. So I'll try to forget about the running time. I'm going to show you how we can approximate the small set expansion problem. I'll also give you some ideas about how we can deal with the running time. Okay. So let me now talk about the algorithm for a couple of minutes. Ten minutes, I think. We use the same machinery, the evolving set process, that Andersen and Peres used in their work. I will define the evolving set process in a minute, but as I will show you later, the algorithm is, again, very simple. We just run this process and we return the best set that we find. So what is the process?
The process is a Markov chain on subsets of the vertices of a graph. So let me tell you how we define the Markov chain. For a set S, we first choose a random threshold U uniformly between 0 and 1, and we include all of the vertices v where the probability of going from v to S with a lazy random walk with [inaudible] is more than U. Right. So, for example, if U is less than one-half, then the new set will always be a superset of the old one. Why? Because this is a lazy random walk, so the probability of going from every vertex inside S to S is at least one-half. So it will be a superset of the old one. And if U is more than one-half, the new one will be a subset of the old one. So let me show you an example. It will be more clear. Let's say, for example, we run this process on this cycle. We start from this single vertex. Say the first threshold is .2. So we include the vertices that have probability more than .2 of going to that single vertex. This vertex has probability one quarter, so we include these two vertices. Then the next threshold is .7, which is not going to change the set. >>: Perhaps [inaudible]. >> Shayan Gharan: Well, it will have probability one. >>: One? >> Shayan Gharan: For staying inside. >>: No, before. [inaudible] the threshold was .2. >>: [inaudible]. >> Shayan Gharan: So it's above the threshold. >>: Sorry. >> Shayan Gharan: So then the next one is .1. You grow again. And with .8 now you shrink, because these guys have probability only three quarters of going to S. So you decrease the set. And then you can decrease, and you decrease to the empty set. It's not hard to see that this process has two absorbing states, either the empty set or the full set. Since you want to run this process for a sufficiently long time to find a good set, you would like the process to be absorbed in the full set, the whole set.
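One step of the process as defined here can be sketched as follows. The function names and the 8-cycle are illustrative assumptions; the thresholds .2, .7, .1 and .8 are the ones mentioned in the example, replayed on a made-up cycle rather than the one on the slide.

```python
import random


def prob_into(adj, v, S):
    """Probability that one lazy random-walk step from v lands in S."""
    stay = 0.5 if v in S else 0.0
    move = 0.5 * sum(1 for u in adj[v] if u in S) / len(adj[v])
    return stay + move


def evolve_step(adj, S, threshold):
    """New set: every vertex whose probability of stepping into S exceeds the threshold."""
    return {v for v in adj if prob_into(adj, v, S) > threshold}


def evolve(adj, start, steps, seed=0):
    """Sample a path of the (unbiased) evolving set process from a single vertex."""
    rng = random.Random(seed)
    S = {start}
    path = [S]
    for _ in range(steps):
        S = evolve_step(adj, S, rng.random())
        path.append(S)
    return path


# Made-up example: a cycle on 8 vertices.
cycle = {v: [(v - 1) % 8, (v + 1) % 8] for v in range(8)}

S = {0}
for u in (0.2, 0.7, 0.1, 0.8):
    S = evolve_step(cycle, S, u)
    print(u, sorted(S))
```

With these thresholds the set grows to three vertices, stays put, grows to five, and then shrinks back to three, because the two end vertices of the five-vertex arc only have probability three quarters of stepping into the set.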
So in order to avoid being absorbed in the empty set, we condition the process to get absorbed in the [inaudible], and we call this new process the volume-biased evolving set process. >>: [inaudible] the shoots. >> Shayan Gharan: Uniformly random between 0 and 1. So here is the Markov kernel for the volume-biased evolving set process. We just need to multiply the original Markov chain kernel by the ratio of the size of the new set over the size of the old set. This follows simply from the fact that the size of the set is a martingale in the evolving set process, using the Doob h-transform. So let me not prove it. This is going to be the Markov kernel for the new process. For the rest of the talk, whenever I use this hat notation, that means the volume-biased process, and whenever I don't, it's the original one. >>: This probably would never be [inaudible], this one could be -- >> Shayan Gharan: It could be large. >>: Larger than one. >> Shayan Gharan: This one could be larger than S. >>: Than S. >> Shayan Gharan: Yeah, the probability would never be larger than one. This follows from the Doob h-transform. But, for example, you can see that this will never be absorbed in the empty set, because if S_1 is empty, this is going to be 0. So for sure it's never absorbed in the empty set, just in the full set. And it's also not hard to see that this is indeed a Markov chain, so those are valid probabilities. Okay. So let me tell you the close connection. As I said, there's a close connection between this process and the random walk process. In fact, Diaconis and Fill first studied this process in order to compute the mixing time of random walks. In their paper they prove a very nice coupling between this process and the lazy random walk. They define a coupling (X_t, S_t) that has the following properties. First of all, X_t is always inside S_t, so the random walk is always inside the set. And moreover, conditioned on S_t being some particular set S, the random walk is uniformly distributed in that set. Okay.
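In symbols, the volume-biased kernel and the two coupling properties described here read as follows. The letters $K$ and $\hat{K}$ for the two kernels are assumed notation, and sizes stand in for volumes because the graph is taken to be d-regular.

```latex
% Volume-biased kernel: reweight the original kernel by new size over old size.
\hat{K}(S, S') \;=\; \frac{|S'|}{|S|}\, K(S, S')

% The rows sum to one because the size is a martingale under K:
\sum_{S'} \hat{K}(S, S') \;=\; \frac{1}{|S|}\,\mathbb{E}\big[\,|S_1| \;\big|\; S_0 = S\,\big] \;=\; 1,
\qquad
\hat{K}(S, \emptyset) = 0 .

% Coupling (X_t, S_t) of the lazy walk with the volume-biased process:
X_t \in S_t \ \text{for all } t,
\qquad
\Pr\big[\,X_t = v \;\big|\; S_t = S\,\big] \;=\; \frac{1}{|S|} \quad \text{for } v \in S .
```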
So as an example, note that once the process is absorbed in the whole set, the random walk is mixed. What this means is that if you want to compute the mixing time of the random walk, you can just run this process and compute the absorbing time of this process. >>: Upper bound. >> Shayan Gharan: Yeah, upper bound, by the absorbing time of this process. >>: [inaudible] all bucks. >> Shayan Gharan: No. So also Andersen and Peres in their paper used this coupling to prove that one can simulate the evolving set process. They prove that for any sample path S_0, S_1 up to S_T, we can simulate it in time essentially proportional to the size of S_T: the size of S_T times root T poly log n. Kind of what they do is they look at the vertex, they do [inaudible] of the random walk, and then they condition on the new set containing this new vertex and see how it should change. So the upshot is that you can run this process for a sufficiently long time without being worried about the running time, right? We just need to prove that the process is good, that it's going to give you a small nonexpanding set. There's no problem with the running time. So in our algorithm, we just do that. For small set expansion we just run this process from every vertex of the graph, and we run each copy until the length of the walk is sufficiently large, at least log of mu over phi of mu, and if any of the copies finds a small nonexpanding set, we just return it and we're done. So very simple. We run the process from each vertex and find it. Okay. So let me now talk about the analysis. I'm going to show you that there exists a vertex v such that if you run this process from it, with some non-zero probability, you're going to find a nonexpanding set. Before going into the mathematical notation, let me give you a high level overview of the proof. The proof uses the following two observations.
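The "run it from every vertex and return the best set" algorithm can be sketched as below. Several things here are assumptions and not taken from the talk: the sampling rule (take a lazy walk step to a new vertex x, then draw the threshold uniformly between 0 and the probability of stepping from x into the current set) is one reading of the coupling-based simulation mentioned above; `steps` and `max_size` are hypothetical parameters standing in for the walk length and the mu to the 1 plus epsilon size bound; and the code rescans every vertex at each step, so it is not the local, fast simulation.

```python
import random


def prob_into(adj, v, S):
    """Probability that one lazy random-walk step from v lands in S."""
    stay = 0.5 if v in S else 0.0
    return stay + 0.5 * sum(1 for u in adj[v] if u in S) / len(adj[v])


def expansion(adj, S):
    leaving = sum(1 for u in S for v in adj[u] if v not in S)
    return leaving / sum(len(adj[u]) for u in S)


def volume_biased_path(adj, start, steps, rng):
    """Sample S_0, ..., S_steps together with a coupled lazy walk (assumed rule)."""
    x, S = start, {start}
    path = [S]
    for _ in range(steps):
        if rng.random() >= 0.5:          # lazy walk: move with probability 1/2
            x = rng.choice(adj[x])
        z = rng.uniform(0.0, prob_into(adj, x, S))
        S = {v for v in adj if prob_into(adj, v, S) >= z}
        assert x in S                    # the walk always stays inside the set
        path.append(S)
    return path


def best_small_set(adj, steps, max_size, seed=0):
    """Run one copy from every vertex; keep the best set of size <= max_size."""
    rng = random.Random(seed)
    best, best_phi = None, float("inf")
    for start in adj:
        for S in volume_biased_path(adj, start, steps, rng):
            if len(S) <= max_size:
                phi = expansion(adj, S)
                if phi < best_phi:
                    best, best_phi = S, phi
    return best, best_phi


# Made-up example: two triangles joined by the edge 2-3.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2, 4, 5], 4: [3, 5], 5: [3, 4]}
S, phi = best_small_set(graph, steps=20, max_size=3, seed=1)
print(sorted(S), phi)
```

The size cap matters: without it the whole vertex set, which has expansion 0, would always win.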
The first one is that the evolving set process grows rapidly on expanding sets. And the second one is that the process kind of gets trapped in nonexpanding sets; it cannot leave them very easily. So putting these together, what you would like to say is: if this is the target set, the process grows very fast until it hits my target set, and then it has to spend some time there. So I should be able to find this target set. So, for example, think about this dumbbell graph. You have two expanders connected by an edge and we start the process from this vertex. You may get some intuition by looking at the random walk. The process works the same as the random walk. We know that the random walk, in the first log n steps, very rapidly mixes in this expander. The process kind of does the same thing. It very rapidly expands and covers the whole set. And then for quite a large amount of time it would just add or remove some vertices. It will be very close to A. And we should be able to find it. >>: You were going over the edge -- >> Shayan Gharan: Yeah. >>: So once you get someone over there. There's a difference between the random walk and -- I agree, the random walk may take a lot of time to hit this vertex. But the process may increase, but it would then decrease. >>: Why won't it then expand into the other set? >> Shayan Gharan: The reason is that from this point of view, at this time, you can think about running the evolving set process from a single vertex. But if you run the evolving set process from a single vertex, with high probability it will go to the empty set. The new process won't be volume biased anymore, because it always has this mass at the left. So, for example, if I had this vertex and I include its neighbors, then the size wouldn't change much. So it's the same as if you were running the non-volume-biased process from this vertex.
So with high probability you're going to -- >>: The fraction of the neighbors [inaudible] in the set is very small. So only [inaudible] next time, if the uniform threshold happens to be below this very small ratio. >>: You're at a point, chance going to step into the set. >>: Above the threshold, right? >> Shayan Gharan: Yes. >>: It includes a fraction of its neighbors in this current set [inaudible]. >>: Right. So this vertex is very unlikely to be in the set, in the [inaudible]. >>: It's tough, because it's -- >>: That and the objects, to say where, you mean. >>: I was thinking of neighbors. >>: [inaudible] it's an exit. >>: Right. That's probably enough. >> Shayan Gharan: If you run it a couple of times then you wouldn't do this. >>: But it won't grow. For those guys' sake -- >>: I don't see why the neighbors won't go into this. >>: For each neighbor, for it to go in, is very unlikely. >> Shayan Gharan: I think that the right way to think about it is: suppose I just ran the evolving set process, not the volume-biased one, from a single vertex. Then the size of the set is a martingale. So with probability 1 over n the process would be absorbed in -- sorry, with probability 1 minus 1 over n the process would be absorbed in the empty set, and only with probability 1 over n would it get to the whole set. My claim is that once you get here, this new process, from the point of view of the expander, is just as if you ran a non-volume-biased process, because you have a giant component at the left. So this ratio is always going to be one. Okay. So let me now tell you how we can make this quantitative. We're going to prove the following two statements, and I'm going to show you that this is sufficient for the proof. The first one is the following. We can show that for any time T, if you start the process from any vertex v, the minimum of the expansion squared of all the sets in the path is at most order of log of the size of S_T over T, with high probability.
So, in other words, if, for example, these expansions are large, then you should have a large set by this time. So, again, the theme is that if you're going through expanding sets, your sets should grow really fast. But in the second statement, I'm going to show that for quite a large time, the set cannot grow very fast. The set should remain small. Here we're going to show that if you choose T very large, something like epsilon log of mu over phi of mu, then all of the sets are small. All of the sets in the sample path have size at most mu to the 1 plus epsilon. Okay. So these two basically pull against each other. One says either you find a good nonexpanding set and you're done, or, if you don't, your process should grow very fast. But you know it can't grow very fast, because there's a target nonexpanding set. So this means with some probability you should grow slowly, so you should be able to find a set of small expansion. So let me tell you why this proves the theorem. It's very easy. Just plug in alpha equals mu in the top one. Then by a union bound you can prove that both of the statements hold with some probability, mu to the minus epsilon over 2 or something, and then what do you get? From the bottom one you get that all the sets are small: they have size at most mu to the 1 plus epsilon. From the top one you get that there exists a set of expansion at most root of phi over epsilon: put T equal to epsilon log of mu over phi of mu, and the whole thing will be root of phi over epsilon. Okay. So now let me tell you how we can prove each of the statements. I'm going to start with the top one. First I'm going to show you how the process grows rapidly in one step. Okay. So this is an observation due to Morris and Peres. They show that for any set S, if you look at the expectation of the square root of the change in size in one step, the old size over the new size, it is at most 1 minus phi squared. So, for example, think of phi as being a constant.
This means that in one step your new set is a constant factor larger than the old one. >>: [inaudible]. >> Shayan Gharan: Hmm. We should have that. >>: More with respect to the hat ones -- >> Shayan Gharan: Yeah. So this is with respect to the volume-biased process. You can write it in terms of the non-volume-biased one by multiplying it by the size of S_1 over the size of S. You get this. But now this is not hard to prove. In fact the proof simply follows from the fact that the size of the set in the non-volume-biased process is a martingale. The reason is that if your threshold is below one-half, your new set will be 1 plus phi times the old one in expectation, and if it is more than one-half it will be 1 minus phi times the old one in expectation. This follows for the same reason that the probability that the random walk remains inside the set S is 1 minus phi. It's exactly the same reason. So having this in hand, you can just prove this using Jensen's inequality. All right. So now how can we use this to prove that overall the set grows rapidly? Let me call this ratio psi of S. This is a ratio that just depends on S. Now, the idea is to define a martingale. We'll define N_T as 1 over the product of root of the size of S_T and the psi functions of S_0 up to S_{T-1}. It's very interesting to see that this is a martingale. Because the expectation of N_T given S_0 up to S_{T-1}, you can write it as N_{T-1} times 1 over psi of S_{T-1} times the expectation of root of S_{T-1} over root of S_T, conditioned on the above. This expectation is the psi function of S_{T-1}, so it's just N_{T-1}. This is with respect to the hat process. Okay. It's easy to show. Don't worry about it. So what this means is that in expectation, this N_T is going to be 1. So it's an application of Markov's inequality.
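Written out, the martingale argument in this part of the talk looks roughly as follows. This is a reconstruction from the spoken description, so the notation ($\hat{\mathbb{E}}$ for expectation under the volume-biased process, $N_t$, $\psi$, $\alpha$) is assumed, constants are suppressed as in the talk, every $\phi(S_i)$ is taken to be strictly less than 1, and the process is taken to start from a single vertex so that $|S_0| = 1$.

```latex
% One-step bound and the martingale.
\psi(S) \;=\; \hat{\mathbb{E}}\!\left[\sqrt{\tfrac{|S_0|}{|S_1|}} \;\middle|\; S_0 = S\right] \;\le\; 1 - \phi(S)^2,
\qquad
N_t \;=\; \frac{1}{\sqrt{|S_t|}\,\prod_{i=0}^{t-1}\psi(S_i)} .

% Martingale property.
\hat{\mathbb{E}}\big[N_t \mid S_0,\dots,S_{t-1}\big]
 \;=\; N_{t-1}\cdot\frac{1}{\psi(S_{t-1})}\cdot
   \hat{\mathbb{E}}\!\left[\sqrt{\tfrac{|S_{t-1}|}{|S_t|}} \;\middle|\; S_{t-1}\right]
 \;=\; N_{t-1},
\qquad
\hat{\mathbb{E}}[N_T] \;=\; N_0 \;=\; 1 .

% Markov's inequality: with probability at least 1 - 1/\alpha we have N_T < \alpha, hence
\prod_{i=0}^{T-1}\frac{1}{1-\phi(S_i)^2}
 \;\le\; \prod_{i=0}^{T-1}\frac{1}{\psi(S_i)}
 \;=\; N_T\,\sqrt{|S_T|}
 \;<\; \alpha\,\sqrt{|S_T|} .

% Taking logarithms and using x \le -\ln(1-x) for 0 \le x < 1:
T\cdot\min_{i<T}\phi(S_i)^2
 \;\le\; \sum_{i=0}^{T-1}\phi(S_i)^2
 \;\le\; \ln\alpha + \tfrac{1}{2}\ln|S_T| .
```

Dividing by $T$ gives the first statement: the smallest squared expansion along the path is at most of order $\log|S_T|$ over $T$.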
You can show it's going to be less than any alpha with large probability, 1 minus 1 over alpha. Plug in the values and we get the following: with probability 1 minus 1 over alpha, the product of 1 over 1 minus phi squared is less than alpha times the square root of the size of S_T. And if you just take the logarithm of both sides, you get this inequality very easily. So let me now talk about the second part of the proof. Here we want to show that the set gets trapped in nonexpanding sets. Instead of proving that all the sets are small, let's just prove that the last one is small. Because the process is growing, if the last one is small, essentially all of them should be small. So how are we going to prove that the last one is small? I'm going to prove that for the sample path, S_T is at most mu to the 1 plus epsilon with this probability, mu to the minus epsilon, for some very large T. Here the idea is to use the coupling between the process and the random walk. My claim is that this is equivalent to the following. I just need to prove that the random walk started from this vertex v is going to be inside A with some -- sorry. I need to prove that there exists some set A such that the random walk started from v is going to be in A after T steps with some non-zero probability, mu to the minus epsilon. Why is it equivalent? Just use the coupling. Recall what the coupling said. It said that conditioned on the evolving set process being equal to some particular set, the random walk is uniformly distributed on that set. Now, if I look at the probability that the random walk is in some set A, this is equal to the expected fraction of S_T taken up by the intersection of S_T with A. Because we can easily go from the evolving set process to the random walk: if I look at the distribution of the sets, I can just apply the uniform distribution and then take the average.
This would give me the random walk distribution. If I want to look at the probability of being in some set A, I can just look at the set distribution projected onto the set A, take the uniform distribution, and then take the average, and that gives me the probability. Now, this tells me that S_T is small: I know the fraction on the right-hand side is at most the ratio of the size of A over the size of S_T. So because I take A to be small, S_T can be at most mu to the epsilon times larger than A. So S_T can be at most mu to the 1 plus epsilon. So I just need to prove this inequality, where you can just forget about the evolving set process; it's just about the random walk. I just need to prove there exists some set A. You might guess that the set A would be my target set. I want to prove that for my target set there exists a vertex such that the walk is going to be in that set after T steps with some probability, mu to the minus epsilon. So I'm going to prove something stronger. Instead of proving that the walk is in A at time T, I'm going to prove that the walk never leaves A up to time T. All right. And I'm going to prove it with this probability. But these two are essentially the same. If you take T to be epsilon log of mu over phi of mu, you see that these two are exactly the same. So we just want to prove some property of the random walk. We just want to show that for every set A there exists a vertex v such that the random walk never leaves A with probability 1 minus phi of A, to the T. So, again, I'm now going to make it even stronger. I'm going to prove that if you choose v uniformly at random from A, the walk is never going to leave A with this probability. Now this should kind of remind you of the definition of phi of A. So what was the definition of phi of A? It said phi is the probability that the random walk leaves A, and 1 minus phi is the probability that the random walk remains inside A.
So intuitively this should hold, because if the events that the walk leaves the set A at each time were independent of each other, you would get exactly 1 minus phi to the T. So you want to say it's even better. Here is an extreme example where you get equality there. Remember that each vertex in A has on average D times phi of A edges going outside. Now let's say that's the case for all vertices. All vertices have exactly this many edges going out. And then if you do one step of the random walk, the distribution remains uniform inside A, because everybody had the same number of edges going out. >>: Distribution being conditioned to stay in A? >>: Staying in A. >> Shayan Gharan: Yeah, you do one step of the random walk. And then you look at -- the distribution remains inside A. >>: So here you're -- say it again, what are you saying? >> Shayan Gharan: I'm saying start from the uniform distribution in A. Now do one step of the random walk. From each vertex exactly the same amount would go outside. If you project the probability back to the set A, you get the uniform distribution. But again, only if you condition on being in the set. It's the conditional probability. >>: Some is made more? >> Shayan Gharan: No? Yeah, exactly. >>: When you say -- >>: In this case. >> Shayan Gharan: In this -- >>: General, going forward and published. >> Shayan Gharan: In general, some vertices might have more. So what we want to say is that, intuitively, if some vertices have more edges going outside, then the probability of being on them should only decrease. Because if you are on those vertices, you would certainly leave the set faster. So after some number of steps, your probability should be more concentrated on the vertices with fewer edges going out. So you should have a higher probability of remaining inside. So how can we prove this? It's again very simple. So let P be the transition probability matrix of the lazy random walk. 
Then this thing is just equivalent to the following. So let me parse this for you. U of A is the uniform distribution on A. Start from the uniform distribution on A. We do one step of the random walk. Project it back: I of A is the identity matrix on the set A, so you project the walk back onto the set. You do one more step, one more step, up to time T, where we just add up the probabilities. And this is exactly that. And the right-hand side is just this thing to the T: removing the T, we know that 1 minus phi is the probability of staying for one step. So we just have to prove this equation. And I can reduce it even more. Let X be the square root of the uniform distribution, and also define this P I of A to be a matrix Q. Then the left-hand side is just X transpose Q to the T X. All right. I can write this as square root of X, square root of X. >>: All right. >> Shayan Gharan: And the right-hand side is X transpose Q X, to the T. By symmetry. So we just want to prove this thing. All right. And this is also simple to prove. You just need to diagonalize Q and use the fact that it is a positive semidefinite matrix. So you can write X transpose Q X. >>: The matrix. >> Shayan Gharan: Q is not symmetric. But I can make it symmetric by putting an I of A here. So write X transpose Q X as a summation over X transpose V I, where the V I are the eigenvectors. Let's say V 1 up to V N are the eigenvectors of Q and lambda 1 up to lambda N are the eigenvalues. Then this thing would be exactly this. All set. This is squared. And the right-hand side is just the whole -- so you just want to prove this thing is more than -- and this is again simple to prove, because this is just Jensen's inequality. These eigenvalues are nonnegative. These guys add up to one because X is a unit vector. This is Jensen's inequality. All right. So I'm almost done. In fact this equation of X -- thank you. So let me finish the talk. 
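[Editor's sketch] The escape-probability bound described above can be checked on a small example. The following is a minimal sketch, not the speaker's code: the 8-vertex cycle, the set A = {0, 1, 2}, and all function names are assumptions chosen for illustration. It uses the lazy walk P = (I + adjacency/d)/2, for which the one-step staying probability works out to 1 minus phi of A over 2, and it checks that the probability of staying in A for t steps is at least that one-step value raised to the power t, which is the inequality the Jensen argument gives.

```python
from fractions import Fraction


def cycle_adjacency(n):
    """Adjacency lists of the n-cycle (a 2-regular graph); illustrative input only."""
    return [[(u - 1) % n, (u + 1) % n] for u in range(n)]


def lazy_walk_matrix(adj):
    """Lazy walk on a d-regular graph: stay put w.p. 1/2, else move to a random neighbor."""
    n, d = len(adj), len(adj[0])
    P = [[Fraction(0)] * n for _ in range(n)]
    for u in range(n):
        P[u][u] += Fraction(1, 2)
        for v in adj[u]:
            P[u][v] += Fraction(1, 2 * d)
    return P


def expansion(adj, A):
    """phi(A) = (number of edges leaving A) / (d * |A|) for a d-regular graph."""
    d, inside = len(adj[0]), set(A)
    cut = sum(1 for u in A for v in adj[u] if v not in inside)
    return Fraction(cut, d * len(A))


def stay_probability(P, A, t):
    """Probability that a walk started uniformly in A is inside A at every step 1..t.

    Each round applies one step of P and then keeps only the mass still in A
    (the 'project back onto A' operation), so escaped mass is never counted again.
    """
    mass = {u: Fraction(1, len(A)) for u in A}
    for _ in range(t):
        mass = {v: sum(m * P[u][v] for u, m in mass.items()) for v in A}
    return sum(mass.values())


if __name__ == "__main__":
    adj = cycle_adjacency(8)
    P = lazy_walk_matrix(adj)
    A = [0, 1, 2]
    one_step = stay_probability(P, A, 1)
    for t in range(1, 9):
        # Compare the exact t-step staying probability with the product bound.
        print(t, stay_probability(P, A, t), one_step ** t)
```

Exact rational arithmetic via `Fraction` avoids floating-point noise in the comparison. In this example the end vertices of A lose mass faster than the middle vertex, so the surviving mass concentrates where fewer edges leave, which is the intuition given in the talk for why the bound is not tight in general.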
So again, we proved that for any mu and epsilon the algorithm can find a set of size at most mu to the 1 plus epsilon and expansion root phi over epsilon. This is the first approximation for this expansion problem without loss in expansion. Previously there have been many works, even by many people in this room, that give an approximation algorithm preserving the size of the target set but losing in the expansion. >>: The log [inaudible]. >> Shayan Gharan: This one? >>: Yeah. >> Shayan Gharan: No, it's log over mu log over N. >>: Log N squared. >> Shayan Gharan: No. This log N, it's not the denominator. >>: Squared N. >> Shayan Gharan: Yeah. Yeah. >>: Isn't that case [inaudible]. >> Shayan Gharan: A bracket here. And also we proved a Cheeger cut can be computed in almost linear time in the size of the target set. And that's containing a local variant of the Cheeger inequality. So let me give you some open problems and finish the talk. Perhaps the main existing open problem is whether one can find an approximation algorithm for phi of mu that's independent of the size of the graph. In particular, this is a nice question: can you prove that, with some inverse polynomial probability in mu, all of the sets in the sample path of the evolving set process are at most order of mu? Here we proved that they are at most mu to the 1 plus epsilon, and that is how we get the size mu to the 1 plus epsilon for the output set. If you can prove order of mu, you would refute the expansion conjecture. Now another open problem -- so these works, they all use the semidefinite programming relaxation, not the random walk. It would also be interesting if one can replicate our results using the semidefinite programming relaxation. It would -- let me highlight some new ideas for the problem. 
In terms of the local graph clustering problem, one interesting question is if one can generalize these two related graphs, currently we don't know any of the graphs we use through related graphs. And the other one is that if you use traditional spectral clustering algorithms, as I said, you may misclassify the small communities. But here, since with local graph clustering you can run the algorithm from every vertex in the graph, you can hope to find overlapping communities in a network. Although there's quite a lot of practical interest in this problem, there hasn't been much theoretical work. And it could be a very interesting direction to work on. Okay. Thanks. [applause] Questions? >>: What do you make of this using relaxation -- [inaudible] relaxation? >> Shayan Gharan: Yeah. So this result, they use the SDP relaxation. And if you don't want the set to be large, you can just plug in mu to be one half and you get the Cheeger inequality. But then there is no guarantee on the size of the set. It could be very large. >>: So didn't you say at the beginning you didn't need regular graphs? >> Shayan Gharan: We don't need regular, but we need unweighted graphs. >>: Multi-graphs? It has to be a simple graph? >> Shayan Gharan: Needs to be a simple graph. >>: Needs to be a simple graph. >> Shayan Gharan: Yeah. >>: [inaudible]. >> Shayan Gharan: I mean, it's not clear you should. So all the algorithms kind of depend on the number of edges in the graph. But we have a better answer? >>: Interpret, again, the weights as conductances. You just interpret them as multiplicities of edges. Run the very same -- >> Shayan Gharan: You can run the algorithm, but can you bound the running time? >>: The bounds will involve these volumes. Involve the weights. >> Shayan Gharan: Yeah. I mean, the point is that it might be that the analysis would work. It's just that nobody has done this. 
And I don't know if there is a difficulty in the problem or not. >>: If the graphs are directed, that's a different course. >> Shayan Gharan: If the graphs are directed, it's much more difficult. >>: So then you mean for weighted graphs, if the weights are small, it's the same. >>: Then it's exactly the same. You can just interpret them as multiple edges and run the algorithm exactly. There's no difference. If the weights can be very large, the algorithm still works. It's just a matter of the analysis -- >> Shayan Gharan: Yeah, you may have very large weights versus very small weights. I don't know. It could be that the analysis works. >>: To be affected, the running time. >> Shayan Gharan: Yeah. Yeah. The running time, but the approximation guarantees would work, of course. >> Yuval Peres: Any other questions? Let's thank the speaker. [applause]