
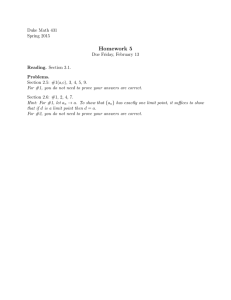

Materials supplied by Microsoft Corporation may be used for internal review, analysis or research only. Any editing, reproduction, publication, re-broadcast, public showing, internet or public display is forbidden and may violate copyright law. [music] >> Yuval Peres: All right. So, very happy to have Alexander Holroyd tell us about Self-Organizing Cellular Automata. >> Alexander Holroyd: Thank you for coming, everyone. I will try to tell you some of what I have been getting up to with Janko Gravner, who is at UC Davis and who visited here last month. I don’t usually do this, but I am going to start with quite a lot of background motivation, showing you pictures. Some might call it waffle, but rest assured there will be some theorems coming. The reason I do this is that the motivation for what we are doing is maybe not completely obvious to begin with, so that’s what I will try to show you. So let’s talk about cellular automata. This is a subject with quite a long history. Certainly von Neumann and Ulam considered cellular automata, and perhaps the most famous example is Conway’s Game of Life, which probably most people have heard of already. In case you haven’t: you work on the square lattice Z^2, elements of Z^2 are called cells, and each cell is either alive or dead. Those are the two states, and sometimes we call them 1 and 0. So you have some configuration of alive and dead cells, and then there is an update rule, which in this case is that a dead cell becomes alive if it has exactly three living neighbors. Neighbors here means the eight cells at L-infinity distance one, the so-called Moore neighborhood. A cell that is already alive stays alive if and only if it has two or three living neighbors; otherwise it dies. You update every cell simultaneously in discrete time according to these rules, you get a new configuration, and so on.
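To make the Life update rule concrete, here is a minimal Python sketch of one synchronous step on Z^2. It assumes a configuration is stored as the set of live cells (x, y), with y increasing down the page; the name `life_step` and that convention are illustrative choices, not something from the talk. As a check it uses the standard five-cell glider, which after four steps reappears shifted one cell diagonally.

```python
from collections import Counter

# A configuration is the set of live cells (x, y); every other cell is dead.
# Convention (illustrative): x increases to the right, y increases down the page.
Cell = tuple[int, int]


def life_step(alive: set[Cell]) -> set[Cell]:
    """One simultaneous update of Conway's Life on Z^2."""
    # Count live neighbors over the Moore neighborhood (L-infinity distance 1).
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in alive
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth: a dead cell with exactly 3 live neighbors.
    # Survival: a live cell with 2 or 3 live neighbors; all others are dead.
    return {c for c, n in counts.items() if n == 3 or (n == 2 and c in alive)}


glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
print(state == {(x + 1, y + 1) for (x, y) in glider})  # True
```

A set of live cells keeps the board unbounded, which matches working on all of Z^2 rather than a fixed finite grid.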
So there we go, okay. Here is a very famous example in Conway’s Life. You start with these cells alive and apply the update rule: each cell sees how many living neighbors it has, and at the second step you have this, at the third step you have this, and so on. It turns out this is called a glider: this shape just moves diagonally. There is a huge amount of work that people have done in setting up very special configurations in Conway’s Life and other cellular automata to do specific things. For example, this is a configuration that computes twin primes. It is very, very complicated and does all sorts of complicated stuff. After a while there will be an output stream coming out here, and the locations of these gliders represent the locations of all twin primes. [inaudible] Thanks to an extraordinary algorithm called HashLife, it’s possible to run these things at extraordinary speed, so this is already getting to millions of iterations. >>: [inaudible]. >> Alexander Holroyd: Yeah, so that was the initial configuration. Here I changed the state of one cell in the initial configuration. What happens if you run that? Well, it starts off looking good, and after a while you realize something is wrong. I have no idea what it’s doing, but it’s certainly not computing twin primes, and probably the best description of this is chaos. So it’s very difficult. >>: [inaudible]. >> Alexander Holroyd: I have no idea. I think it’s unlikely in this particular case, but I have no idea. So the point is that a lot is known about what you can do if you start from a very, very specific situation, and much less is known about typical initial configurations. For example, what happens if you take a uniformly random initial configuration on Z^2 and run Life? I don’t think anyone has any idea what the answer to that question is. I might come back to that later. Okay, there we go.
I don’t want to have to go through that again. Yeah, that was Conway’s Life. As it turns out, a lot of the surprising and interesting things that cellular automata can do can already be seen in one-dimensional models. So that’s what we will focus on: one-dimensional cellular automaton rules with a quiescent state 0. That just means there’s some state such that if all cells are in state 0, then at the next time they are all in state 0 as well. I am particularly interested in what happens if you start from a seed, which is an initial configuration with only finitely many nonzeros, and especially what I am really aiming for is what happens with ‘typical’, i.e. random, seeds. So let’s look at some examples. Some cellular automaton rules, it turns out, are completely predictable in a strong sense, which I will explain. One example is the so-called 1 or 3 rule. Remember it’s in one dimension, so λ_t(x) will denote the state of cell x at time t. If you want to know λ_{t+1}(x), it depends on three things: the cell itself and its left and right neighbors at the previous time step. The states are simply 0 and 1, and λ_{t+1}(x) is 1 if the sum of these three is 1 or 3; otherwise it’s 0. Another way of saying that is that it’s just the sum of these three things modulo 2: λ_{t+1}(x) = λ_t(x−1) + λ_t(x) + λ_t(x+1) mod 2. We normally draw these things with time going down the page. So here is a summary of the rule: if 1 or 3 of these three cells are black, then the one below is black. Here is a picture of what that does starting from a single 1 at the top. Does everyone understand the pictures? Time is going downwards and the universe is one-dimensional. We start at the top with a single black dot, and then it’s time 2, time 3, etc. Now, this picture turns out to be completely understandable in a certain sense.
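Here is a minimal Python sketch of the 1 or 3 rule started from a seed. It assumes a seed is stored as a finite list of 0/1 states containing every nonzero cell, with all cells outside the list in the quiescent state 0; the helper names `step_1or3` and `run` are illustrative. Printing the first rows from a single 1 draws the top of the triangular picture, with time going down the page.

```python
def step_1or3(row: list[int]) -> list[int]:
    """One step of the 1 or 3 rule: new state = (left + self + right) mod 2.

    `row` covers every nonzero cell; cells outside it are 0. The light cone
    grows by one cell on each side per step, so the result is two cells longer.
    """
    padded = [0, 0] + row + [0, 0]
    return [
        (padded[i - 1] + padded[i] + padded[i + 1]) % 2
        for i in range(1, len(padded) - 1)
    ]


def run(seed: list[int], steps: int) -> list[list[int]]:
    """Rows 0..steps of the space-time picture, time going down the page."""
    rows = [seed]
    for _ in range(steps):
        rows.append(step_1or3(rows[-1]))
    return rows


rows = run([1], 7)
width = len(rows[-1])
for r in rows:
    print("".join("#" if s else "." for s in r).center(width))
```

At time 4, a power of 2, only the two corners and the center are 1 (`[1, 0, 0, 0, 1, 0, 0, 0, 1]`), which is where the self-similar recursion starts.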
It has a fractal, self-similar structure, and it’s possible to write down a recursive description of it, where this section here is composed of smaller sections that you have seen earlier on, and so on. No mystery about this really. What happens if you start from a different seed? Here is a random seed of length 12 or something like that. Well, essentially the picture is very similar: you still have this fractal shape, but it’s thickened a little bit. And the configurations in the thickened part are also predictable, as it turns out: all these little configurations here are the same, and similarly everywhere you see it. So again this is completely predictable. It’s something we call replication, and I will come back to define things like this more carefully later. Essentially every seed gives a similar picture for this particular rule, and there is a very simple reason for it in this case: this rule is additive. Remember, the state of a cell is the sum of the three above it modulo 2, and a consequence of this, which is very easy to prove, is the following. If you want to know what happens for any initial seed, for example the seed consisting of these three sites being 1, all you have to do is draw the fractal triangles that you get starting from a single 1 at each of those three places, superimpose them, and add them modulo 2. That gives you the answer, so everything here is provable. On the other hand, here is a rule that behaves completely differently. This is rule 30, in Wolfram’s numbering system for elementary cellular automaton rules. The details don’t really matter: if you see exactly one 1 then you become a 1, and also if you see the pattern 011 (you and your right neighbor are 1, your left neighbor is 0) then you become a 1. Here’s what happens from a single seed, a single 1. You might say the best description for what’s going on here is chaos.
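The superposition argument for the additive rule can be checked numerically. The sketch below assumes the same finite-list representation with zero padding (all names are illustrative): it evolves a seed directly and compares the result with the sum mod 2 (XOR) of single-1 pictures placed at each 1 of the seed.

```python
import random


def step_1or3(row: list[int]) -> list[int]:
    """One step of the 1 or 3 rule on a finite window padded with 0s."""
    padded = [0, 0] + row + [0, 0]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) % 2
            for i in range(1, len(padded) - 1)]


def evolve(seed: list[int], steps: int) -> list[int]:
    """Row at time `steps`; index k holds position k - steps."""
    row = seed
    for _ in range(steps):
        row = step_1or3(row)
    return row


def superpose(seed: list[int], steps: int) -> list[int]:
    """Sum mod 2 of single-1 pictures started at each 1 of the seed."""
    single = evolve([1], steps)           # positions -steps .. steps
    out = [0] * (len(seed) + 2 * steps)   # positions -steps .. len(seed)-1+steps
    for y, s in enumerate(seed):
        if s:
            # single[j] sits at position y - steps + j, i.e. index y + j of `out`.
            for j, c in enumerate(single):
                out[y + j] ^= c
    return out


rng = random.Random(0)
seed = [rng.randint(0, 1) for _ in range(12)]
print(all(evolve(seed, t) == superpose(seed, t) for t in range(40)))  # True
```

The check holds for every seed because the rule is linear over GF(2); it would fail for a non-additive rule such as rule 30.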
It’s not quite as simple as that, though, because you can see things are periodic along the left-hand side, but certainly there is no simple formula that anyone knows to predict what happens a long way down. And, not surprisingly, if you start from some other seed like this one, you still get apparent chaos. Even in one dimension, some of these rules are sufficiently complex that people have proved they are Turing complete, capable of universal computation. In particular rule 110, which is another one of these elementary rules: Matthew Cook proved that it is Turing complete, as previously conjectured by Wolfram. But once again, the theorem says you can produce a Turing machine if you set things up exactly right to begin with; that’s very important. So it has nothing to do with what happens for typical initial conditions. Now, in some ways the most interesting types of rules are ones which can exhibit different types of behavior depending on how you start them, and this is such an example: the so-called “Exactly 1” rule. You look at yourself and your two neighbors, left and right, and if you see exactly one 1, then you become a 1. Here is what it does from a single 1. It looks similar to the previous pictures, a nice fractal. Here is what it does from another small seed: also what we call replication, so a nice fractal, although it’s a little more complicated because the configuration here is not the same as the one here. But still, this is completely analyzable. On the other hand, if you start from certain other small seeds, like this one, you get apparent chaos, in the sense that there doesn’t seem to be any way to predict what happens in the middle. And, again for the same rule, from some specifically chosen seeds you can get something else: a pattern that is periodic in space and time. You can even get mixtures of several of these things.
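For reference, Wolfram’s numbering of elementary (two-state, nearest-neighbor) rules encodes the new state for the neighborhood (a, b, c) as bit number 4a + 2b + c of the rule number. Under that convention the 1 or 3 rule is rule 150 and the Exactly 1 rule is rule 22. The sketch below (function names are illustrative) builds the lookup table, checks those identifications and the rule 30 description, and draws a few rows of rule 30 from a single 1.

```python
from itertools import product


def rule_table(number: int) -> dict[tuple[int, int, int], int]:
    """Wolfram's convention: the new state for (a, b, c) is bit 4a + 2b + c."""
    return {(a, b, c): (number >> (4 * a + 2 * b + c)) & 1
            for a, b, c in product((0, 1), repeat=3)}


def step(row: list[int], table: dict[tuple[int, int, int], int]) -> list[int]:
    """One step from a finite seed; assumes table[(0, 0, 0)] == 0 (quiescent)."""
    p = [0, 0] + row + [0, 0]
    return [table[(p[i - 1], p[i], p[i + 1])] for i in range(1, len(p) - 1)]


hoods = list(product((0, 1), repeat=3))
print(all(rule_table(150)[n] == sum(n) % 2 for n in hoods))       # True: 1 or 3 rule
print(all(rule_table(22)[n] == int(sum(n) == 1) for n in hoods))  # True: Exactly 1 rule
print(sorted(n for n in hoods if rule_table(30)[n]))
# [(0, 0, 1), (0, 1, 0), (0, 1, 1), (1, 0, 0)]: exactly one 1, plus 011

row = [1]
for _ in range(8):
    print("".join("#" if s else "." for s in row).center(17))
    row = step(row, rule_table(30))
```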
Here it’s periodic in a non-trivial wedge at the side, but apparently chaotic in the middle. Rather amazingly, some of these sorts of things can be proved. Gravner and Griffeath recently proved that many of these behaviors for the Exactly 1 rule I just showed you (replication, periodicity, some of these mixtures) occur for infinitely many initial seeds, even for exponentially growing families of seeds. [inaudible] But the conjecture here, and this is the point I want to get to, would be that chaos is the typical thing. In the sense that if you start from a uniformly random seed of length L, so all 2^L binary strings are equally likely, then with high probability, with probability tending to 1, you see chaos, at least within some cone or wedge. And what does chaos mean? Well, there is no universally accepted definition in the subject of what chaos ought to mean. Here is one thing you could say, for example: if you look at local patterns, say strings of length 10, and look at their densities, then they converge to something. And if you look at all possible lengths, they converge to a non-trivial probability measure which should have some sort of mixing properties, so what happens over here should be [inaudible] independent of what happens over here. You can write down more precise versions of this. The conjecture would be that something like this typically happens. And by the way, nothing like this has been proved for any cellular automaton that I know of, even though this sort of behavior seems very, very common. You might argue this isn’t quite strong enough, because chaos somehow implies unpredictability as well: there is no simple formula. But in any case, not even this much has been proved. Okay. So you might at least imagine that something like this is a universal law, something akin to the second law of thermodynamics.
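One way to make the local-pattern idea concrete is to count how often each length-k word appears among the sliding windows of a single row. The sketch below (the name `pattern_densities` is illustrative) does only that; it is an empirical proxy and by itself says nothing about convergence of densities or mixing.

```python
from collections import Counter


def pattern_densities(row: list[int], k: int) -> dict[tuple[int, ...], float]:
    """Empirical frequency of each length-k word among the sliding windows of `row`."""
    if not 1 <= k <= len(row):
        raise ValueError("need 1 <= k <= len(row)")
    windows = [tuple(row[i:i + k]) for i in range(len(row) - k + 1)]
    return {w: n / len(windows) for w, n in Counter(windows).items()}


# A spatially periodic row with period 3 has at most 3 distinct k-words.
print(pattern_densities([0, 1, 1] * 10, 2))
```

For a row from a chaotic-looking space-time picture one would instead expect many distinct words with densities that settle down as the row grows; this sketch does not test that.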
If some cellular automaton rule is capable of producing chaos, whatever that means, then chaos ought to be the norm, in the sense that from long random seeds you should get chaos with high probability. It’s easy to postulate a mechanism for this: chaos can start from some local configuration, and once it starts it should win; chaos should take over everything. So you might guess this is what always happens, but it turns out that’s wrong. Some cellular automata are self-organizing, in the sense that the opposite holds. The first real evidence that we know about for this is another paper by Gravner and Griffeath, in which they showed that for certain one-dimensional rules some seeds give you chaos, but apparently all long seeds give you predictable behavior, predictable in a non-trivial way. Our goal here is to give a rigorous version of this phenomenon. Now, I want to be careful, because a lot of the words on this slide you hear all the time in elaborate claims, sometimes with not much substance behind them, and I don’t want to fall into that same trap. What we are proving is something very specific about very specific models, and this is just motivation. But I want to give you the idea that we are motivated by some deep questions, even if they are not necessarily mathematical questions. So this is where we are coming from. I should also mention, in a slightly similar vein, the famous or infamous positive rates problem. There is a result by Gács which is very, very difficult to prove; it’s so difficult that very few people other than the author claim to understand it. But --. >>: Who claims to understand it? >> Alexander Holroyd: Well, Larry Gray has written this thing and --. >>: [inaudible]. [laughter] >>: [inaudible]. >> Alexander Holroyd: I don’t understand.
So the claim is that there exists a one-dimensional cellular automaton such that if you run it with random noise, meaning you take an epsilon and at each step, for every transition, with probability epsilon you make an error and change to a random state, then nonetheless the rule has multiple stationary distributions. Think of them as a plus phase and a minus phase. So in --. >>: It’s supposed to be running on the infinite line. >> Alexander Holroyd: Yeah, that’s right. For two-dimensional models it’s well known that something like that can happen, for instance for the [indiscernible] model, but in one dimension it’s apparently true, though very, very difficult. >>: [inaudible]. >> Alexander Holroyd: Yeah, so that’s an aside, but it’s the same sort of thing that we are trying to get at: can cellular automata or other similar systems do complex things even in the presence of randomness? Okay, so what we prove, and I will have to come back and define terms here, is that for one-dimensional cellular automata in a certain class, started from a uniformly random seed, with probability going to one you have replication, which I will have to define, but you should think of it as fractal-like pictures; while some seeds do not give you replication. They provably do something more complex, and apparently chaos in some cases, although we are not able to prove chaos. So obviously, for this to be a theorem I have to tell you what each of these three things means: the certain class, replication, and more complex behavior. First of all, I will give you one simple example of a rule which our theorem applies to. It will have three states: 0, 1 and 2. 0 will be white, 1 will be black or grey, and 2 will be red. Your state at time t+1 is given by a function of the three cells above you: yourself and your two neighbors at the previous time.
And the function f(a, b, c) is: if you see 1 or 3 ones in your neighborhood, then you are 1. Otherwise you look at a and c, that is, just your left and right neighbors at the previous time: if exactly one of them is a 2, then you become a 2; otherwise you become a 0. The way you can think of it is that the 1s are performing the 1 or 3 rule, which was the nice predictable, additive one, and the 2s try to perform XOR, just on the points not occupied by 1s. XOR meaning they look at their left and right neighbors and take the exclusive or of them. Okay, so that’s the rule. Here’s an example of what it does starting from a very simple seed. This is an apparently chaotic picture, although we can’t prove that. The grey cells are the 1s, and they are just doing the additive 1 or 3 rule, so you can think of them as being decided on to begin with; then the 2s, the red things, are somehow seeping through. But here’s what happens for a typical long seed: this is a random seed of length 30 or something like that, and it’s totally different. This is replication: basically you have the fractal picture that we saw at the beginning, except thickened, with a few reds around the edges. You can already see how we might go about proving something like this. And here are some pictures where you don’t have replication; you provably have something else. Here is an example of something we call quasireplication, and I will come back to what that means: there are some non-trivial fractal configurations of 2s. Here is another picture of quasireplication. It turns out that this picture as well can be completely analyzed for this specific seed; one can completely see what’s going on. I will come back to that. Okay, so what’s replication? First of all, Λ is the support of the 1 or 3 rule started from a single 1, so it’s the nice fractal triangle we saw before.
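Here is a minimal Python sketch of the three-state rule f(a, b, c) just described, run from a finite seed with quiescent state 0 (function names are illustrative). The helper `ones_follow_1or3` checks the point made above: if you are blind to the difference between 0 and 2, the 1s evolve exactly by the 1 or 3 rule. The picture uses `.` for 0, `#` for 1 and `r` for 2.

```python
import random


def f(a: int, b: int, c: int) -> int:
    """The three-state example rule (states 0, 1, 2)."""
    ones = (a == 1) + (b == 1) + (c == 1)
    if ones in (1, 3):  # the 1s follow the 1 or 3 rule
        return 1
    # Otherwise: a 2 iff exactly one of the left/right neighbors is a 2 (XOR).
    return 2 if (a == 2) != (c == 2) else 0


def step(row: list[int]) -> list[int]:
    """One step from a finite seed; f(0, 0, 0) = 0, so 0 is quiescent."""
    p = [0, 0] + row + [0, 0]
    return [f(p[i - 1], p[i], p[i + 1]) for i in range(1, len(p) - 1)]


def step_1or3(row: list[int]) -> list[int]:
    p = [0, 0] + row + [0, 0]
    return [(p[i - 1] + p[i] + p[i + 1]) % 2 for i in range(1, len(p) - 1)]


def ones_follow_1or3(seed: list[int], steps: int) -> bool:
    """Check that, blind to 0 versus 2, the 1s evolve by the 1 or 3 rule."""
    row, ones = seed, [int(s == 1) for s in seed]
    for _ in range(steps):
        row, ones = step(row), step_1or3(ones)
        if [int(s == 1) for s in row] != ones:
            return False
    return True


rng = random.Random(1)
seed = [rng.choice((0, 1, 2)) for _ in range(30)]
print(ones_follow_1or3(seed, 100))  # True
row = seed
for _ in range(16):
    print("".join(".#r"[s] for s in row).center(len(seed) + 30))
    row = step(row)
```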
And Λ_R is the set of all space-time points within distance R of that set, so just fatten the fractal by R. We say the configuration is a replicator of thickness R and ether η, where η is a doubly periodic configuration of 0s and 2s on Z^2, so periodic in space and time, if every bounded component of the complement of Λ_R is filled with a translate of the ether η. Here is the picture you should have in mind. You have the black, which is the nice fractal triangle, and you shrink away from it by distance R, and you get these triangular spaces. Each of those is filled with a doubly periodic pattern η, which we call the ether. In particular, this implies that if you have such a configuration, the density of the whole thing is just the density of η, because these white triangles occupy proportion one of the area. They have density 1 in the forward light cone of the origin, because the black is just a fractal, with non-trivial fractal dimension. Also, if you have any replicator, it turns out that it’s possible to describe the configuration everywhere, even close to Λ and even within Λ_R. That’s because the regions in between the white triangles have bounded width, a width of about R, so there are only finitely many configurations that you can see in those places, and on the basis of that you can describe the whole thing. This is by Gravner, Griffeath and Pelfrey. Anyway, the take-away message is that if you have a replicator, everything is predictable. So here are some pictures. This one we already saw: it is a replicator with 0 ether. You have the grey fractal and you have the white triangles, and in the white triangles you just have 0s. And this is a picture of a replicator with a different ether: you have some thickened version of the fractal, and each of these voids is filled with this doubly periodic picture.
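A quick numerical illustration of why the voids have density 1 in the forward light cone: the sketch below (illustrative names, same finite-list representation as the 1 or 3 rule sketches) builds Λ, the support of the 1 or 3 rule from a single 1, for times 0 to T − 1 and reports the fraction of light-cone cells that lie in Λ. The cone over those T rows has 1 + 3 + ... + (2T − 1) = T^2 cells.

```python
def step_1or3(row: list[int]) -> list[int]:
    p = [0, 0] + row + [0, 0]
    return [(p[i - 1] + p[i] + p[i + 1]) % 2 for i in range(1, len(p) - 1)]


def lambda_density(T: int) -> float:
    """Fraction of the T^2 cone cells (0 <= t < T, |x| <= t) that lie in Λ."""
    row, in_lambda = [1], 0
    for _ in range(T):
        in_lambda += sum(row)  # cells of Λ at this time
        row = step_1or3(row)
    return in_lambda / T**2


for k in range(1, 10):
    print(2**k, round(lambda_density(2**k), 4))
```

The printed fraction keeps shrinking as T doubles, consistent with Λ being a fractal of dimension below 2, so that the complement (the voids) has density tending to 1.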
This is for another rule that our theorem applies to. And this is now for the same rule as the previous picture, but with a different ether, which you can also get: a different doubly periodic configuration. And this one would be a replicator with 0 ether as well, because you are allowed to have complicated things happening on the fractal as long as these triangles contain 0s, in this case. And according to our definition, this is something which is not a replicator, because we claim --. Again, this is another rule that our theorem applies to, but this is an exceptional seed. Our definition said you are only allowed to have a single ether: for a particular seed, you have to see the same ether everywhere. Okay, back to my theorem. Here’s what the claim now says: “For certain cellular automata, if you take a uniformly random seed of length L, then there exist a random number R_L, which could be infinite, and a random ether η_L, so that you see a replicator with thickness R_L + L and ether η_L.” Now, if R_L were infinite, this statement would be vacuous, because it says the distance that you pull away from the fractal is infinite. But the probability that R_L is infinite goes to 0, and in fact R_L is tight. So the distance that you move away from the fractal is basically just L plus something not very large. And the same holds, with the same ether and the same R, even if we change some of the 0s to 2s in the initial configuration. Okay, so that’s the conclusion of the theorem. Now I have to tell you which rules it applies to. Think about a configuration of the 1 or 3 rule; again, this is the simple additive rule. We define an empty path as just a path of 0s in the configuration that takes steps down, down-left or down-right. And an empty diagonal path is a path of 0s where you only take diagonal steps, diagonally down to the left or diagonally down to the right.
And don’t worry about this too much, but we also define a wide path as a path which is empty, but which can’t move diagonally between two 1s like this. Okay, so the definition is: we have three states, which we call 0, 1 and 2. It looks like there is a bracket missing here. We have a range 2 rule, which means you can look at yourself, your two nearest neighbors, or your two next-nearest neighbors at the previous time. The assumptions are these. If you just look at 1s, they obey the 1 or 3 rule: if you are blind to the difference between 0s and 2s, then the cellular automaton obeys this simple 1 or 3 rule. And the key thing is that information about the distinction between 0s and 2s can only pass along diagonal paths. So the 1s, you can think of them as being there already, because they just come from this very simple rule that we know about. Then anything that’s white here could be either a 0 or a 2, and how do you decide? If you want to know whether this is a 0 or a 2, it’s going to depend on stuff above it, but it’s not allowed to depend on whether the cell directly above is a 0 or a 2, because it’s only allowed to look diagonally. Whereas here, whether this is a 0 or a 2 can depend on whether that’s a 0 or a 2, because that’s a diagonal step, and it can also depend on the fact that there is a 1 above it; there is no reason to prevent that. But information can only flow along diagonal paths. Okay, so --. >>: [inaudible]. >> Alexander Holroyd: Yes, [inaudible] in 0s in the 1 or 3 [inaudible]. Okay, so I won’t write down the formal definition, but you can look at the paper if you want to see it. So our condition is that the rule is compliant either with diagonal paths or with wide paths; we can handle both. This is the conclusion, and that includes all the pictures I showed you at the beginning. So what about this provably more complex behavior? I will be fairly brief about this.
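As a rough illustration only: for a range-1 rule like the three-state example earlier, one simplified reading of the two assumptions can be checked by brute force over all 27 neighborhoods. The sketch below uses hypothetical function names, and the paper’s formal definition (for range 2 rules) is not reproduced here. It tests (i) that the 1s follow the 1 or 3 rule when 0 and 2 are treated as the same, and (ii) that the output never depends on whether the cell directly above is a 0 or a 2, so 0/2 information arrives only diagonally.

```python
from itertools import product


def example_rule(a: int, b: int, c: int) -> int:
    """The three-state example rule: 1 or 3 rule on 1s, XOR of 2s otherwise."""
    ones = (a == 1) + (b == 1) + (c == 1)
    if ones in (1, 3):
        return 1
    return 2 if (a == 2) != (c == 2) else 0


def blind_to_0_vs_2(rule) -> bool:
    """(i) The output is 1 exactly when the 1 or 3 rule on the 1s says so."""
    return all(
        (rule(a, b, c) == 1) == (((a == 1) + (b == 1) + (c == 1)) % 2 == 1)
        for a, b, c in product((0, 1, 2), repeat=3)
    )


def zero_two_only_diagonal(rule) -> bool:
    """(ii) Swapping the center cell between 0 and 2 never changes the output."""
    return all(rule(a, 0, c) == rule(a, 2, c) for a, c in product((0, 1, 2), repeat=2))


print(blind_to_0_vs_2(example_rule), zero_two_only_diagonal(example_rule))  # True True
```

A rule that copied a 2 straight down from the center would pass (i) but fail (ii), since it lets 0/2 information travel vertically.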
So we call a configuration a quasireplicator if there is some exceptional set of space-time points such that each bounded component of its complement is filled with an ether η, and furthermore this exceptional set, if you rescale it by A^n for some fixed number A, which is normally 2 but not always, converges to something of non-trivial [inaudible] dimension. So it’s really a fractal. It turns out you can prove, or rather Gravner, Griffeath and Pelfrey can prove, that certain specific seeds are quasireplicators, by inductive schemes. Here is a very simple example of a quasireplicator. The exceptional set is the grey and the red together, and you can completely understand this picture, because you can see what happens here and what happens here, and there is some inductive, recursive scheme. You can prove it, and on the other hand it’s not a replicator. Rather more surprisingly, examples like this you can sometimes prove are quasireplicators as well. It’s much less obvious from the picture what’s happening here, but it is completely predictable. And that’s a bigger picture of the previous one. Here is another example of a replicator where the ether --. I am sorry, a quasireplicator, where the ether is this non-trivial periodic pattern, but it has these disturbances, which nevertheless only live on a set of non-trivial fractal dimension, so these disturbances occupy zero density in the bulk. For specific cases it’s often very, very hard to decide whether you have a quasireplicator or chaos. For instance, here we think it’s probably chaotic, but we have no way to tell for sure. Right, so that’s the last part of the theorem: for many of the rules that the first part applies to, some seeds are quasireplicators and also some are apparently chaotic, but of course we can’t prove that part. Okay. We can also say some additional things.
So you saw in this pink picture, of the rule that I called Piggyback, which I didn’t define for you and am not going to, that you can have different ethers, different periodic patterns in the triangles. Basically, if you see some ether at all, that is, if for some ether η it’s possible for some seed that you get that ether and this R [inaudible] finite, then you see it with probability whose liminf is positive as the length of the seed goes to infinity. In fact, we can compute rigorous lower bounds on the probabilities of particular ethers. For instance, for this rule we know that at least 100 ethers have non-trivial liminfs for long seeds, and we believe there are infinitely many. We can also prove a slightly different kind of result: if you take a seed and change some 0s to 2s in the initial seed, then, as I already said, it doesn’t affect the ether or R, but in fact it has no effect at all within some region about log L from the forward light cone. Okay, so what’s --, oh wait, I should briefly mention this. Although the particular cellular automaton rules that I am looking at may seem a bit contrived, it turns out they are relevant to other things people are interested in: they have implications for certain two-dimensional rules that people are definitely interested in, solidification rules, via something called “extremal boundary dynamics”. I probably won’t have time to discuss that, but that was part of our motivation. The key tool is one of my other favorite topics, percolation. We do percolation on the space-time configuration of a cellular automaton, namely the 1 or 3 cellular automaton, this particularly nice one. So here is a theorem. Take again the 1 or 3 rule, this additive cellular automaton, and start it from a uniformly random configuration on the whole of Z; in other words, take fair coin flips, 0s and 1s, on Z, and run it. Then diagonal paths do not percolate.
That is, the probability that there is a diagonal path from time 0, so from anywhere along the top line, to a particular point at time T, for instance the point T steps directly below the origin, goes to 0 and decays exponentially in T. The same applies to wide paths as well, which I didn’t talk about much. So here is the picture: here is the set of points reachable by diagonal paths from this interval, and likewise for wide paths. On the other hand, if you look at empty paths, which were the ones that can go down and diagonally in the two directions, then they do percolate. I should have said, yes, there is an infinity missing here: the probability that there exists an infinite empty path from the origin is positive. And that’s the picture. Okay, so how do we prove this? On the face of it, it’s potentially very tricky, because this is a highly dependent percolation problem. We are looking at the space-time configuration of the evolution starting from a random configuration, and that’s not [indiscernible] in any way. It’s highly dependent, because everything is a deterministic function of what happens on the first line. Highly dependent percolation problems like this are in some cases very, very tough indeed: coordinate percolation and Winkler percolation are some of the keywords, and there are a number of impressive results here. I particularly mention this recent result by Basu and Sly, which is on a model of this kind. But it turns out in this case we are lucky. It’s not one of the cases where it’s fiendishly difficult to analyze, and there are some other similarly well-behaved cases that have been found as well, including these. The key idea is this: the space-time configuration is highly dependent, but on the other hand we can find certain sets within it, sets of space-time points, where we see independent randomness. Don’t worry about all the notation so much; let me just explain.
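The percolation statement can be explored by simulation. The sketch below is an illustration under stated assumptions, not the proof; all names and parameters are hypothetical. For a target point (0, t) it draws fair coin flips only on the initial cells that can influence it (x in [−2t, 2t]), runs the 1 or 3 rule exactly on the shrinking region where states are determined, and then uses dynamic programming to ask whether an empty diagonal path (down-left or down-right steps through 0s), or an empty path (also allowing straight-down steps), connects time 0 to (0, t).

```python
import random


def reachable(t: int, rng: random.Random) -> tuple[bool, bool]:
    """One random start: is (0, t) reached from time 0 by an empty diagonal
    path, and by an empty path (diagonal or straight-down steps)?"""
    # Only initial cells x in [-2t, 2t] can influence states near (0, t).
    row = [rng.randint(0, 1) for _ in range(4 * t + 1)]
    rows = [row]  # rows[s][i] is the state of x = i - (2t - s) at time s
    for _ in range(t):
        row = [(row[i - 1] + row[i] + row[i + 1]) % 2 for i in range(1, len(row) - 1)]
        rows.append(row)

    def is_zero(s: int, x: int) -> bool:
        return rows[s][x + 2 * t - s] == 0

    # diag[x] / empty[x]: is (x, s) reached from time 0 by that kind of path?
    diag = {x: is_zero(0, x) for x in range(-2 * t, 2 * t + 1)}
    empty = dict(diag)
    for s in range(1, t + 1):
        span = range(-(2 * t - s), 2 * t - s + 1)
        diag = {x: is_zero(s, x) and (diag[x - 1] or diag[x + 1]) for x in span}
        empty = {x: is_zero(s, x) and (empty[x - 1] or empty[x] or empty[x + 1])
                 for x in span}
    return diag[0], empty[0]


def estimate(t: int, trials: int, seed: int = 0) -> tuple[float, float]:
    """Monte Carlo frequencies of the two events returned by `reachable`."""
    rng = random.Random(seed)
    results = [reachable(t, rng) for _ in range(trials)]
    return (sum(d for d, _ in results) / trials,
            sum(e for _, e in results) / trials)


for t in (1, 2, 4, 8, 16):
    print(t, estimate(t, trials=300))
```

In such runs the diagonal frequency should fall off quickly with t, while the empty-path frequency is always at least as large, since every empty diagonal path is also an empty path. For t = 1 the diagonal probability is exactly 3/8.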
So suppose you start from an initial configuration in which some set A, not necessarily the whole of Z, is uniformly random, and suppose you are interested in some set S of space-time points down here. Because the rule itself is simply this additive cellular automaton rule, the configuration that you see down here on S is a linear function of the initial configuration, linear modulo 2. What we want to do is basically find cases where the matrix that tells you what linear function it is, is upper triangular with 1s on the diagonal. If that’s the case, then the set of sites you are looking at down here will be uniformly random: if you just look at that set of sites, you see i.i.d. fair coin flips. How do you find out what the matrix is? It basically comes down to just looking at the configuration started from a single 1. That’s where all the information lies, and this picture one can understand. Okay, so that’s the idea. We now try to find the right sort of percolation arguments that adapt to this setting. So here is the proof of no percolation for diagonal paths. Here is a diagonal path of length T. The number of such paths starting from the origin is 2^T, and I claim that for any given path, the probability that it’s empty, in other words that all the sites in it are 0s, is exactly 2^{-T}, as if everything were i.i.d. coin flips. The reason is a dual assignment. For any given path (I guess I didn’t really define what these pink arrows are, but this is basically how you find the upper triangular matrix), the idea is that at each step you take, there is a new random site in the initial configuration that you get to look at, one you haven’t seen before. If you are going to the right, you have to look that way, and when you are going to the left, you have to look that way. Okay, so this is true.
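The dual-assignment claim can be checked with linear algebra over GF(2). By additivity, λ_t(x) = Σ_y c_t(x − y) λ_0(y) mod 2, where c_t is the picture started from a single 1. For every diagonal path of length t from the origin, the sketch below (all names illustrative) builds the 0/1 coefficient vectors of the path’s sites as bitmasks over initial cells. It then checks that these vectors are linearly independent, which is what makes the path’s states i.i.d. fair coin flips under a uniformly random initial configuration. Here a path of length t is taken to have t + 1 sites including the origin; that counting convention is an assumption of the sketch. Independence holds because each step brings in a new initial cell at the edge of the dependence range, where the single-1 picture equals 1.

```python
from itertools import product


def single_seed_row(t: int) -> list[int]:
    """c_t(z) for z = -t..t: the 1 or 3 rule at time t from a single 1."""
    row = [1]
    for _ in range(t):
        p = [0, 0] + row + [0, 0]
        row = [(p[i - 1] + p[i] + p[i + 1]) % 2 for i in range(1, len(p) - 1)]
    return row


def coeff_mask(x: int, t: int, offset: int) -> int:
    """Bitmask over initial cells y (bit y + offset) with c_t(x - y) = 1."""
    mask = 0
    for j, c in enumerate(single_seed_row(t)):  # c = c_t(j - t)
        if c:
            y = x - (j - t)
            mask |= 1 << (y + offset)
    return mask


def gf2_rank(vectors) -> int:
    """Rank over GF(2) of integer bitmasks, by Gaussian elimination."""
    pivots = {}  # highest set bit -> basis vector with that leading bit
    for v in vectors:
        while v:
            top = v.bit_length() - 1
            if top not in pivots:
                pivots[top] = v
                break
            v ^= pivots[top]
    return len(pivots)


def all_paths_independent(t: int) -> bool:
    """True if, for every diagonal path of length t, the t + 1 sites are independent."""
    for steps in product((-1, 1), repeat=t):
        x, sites = 0, [(0, 0)]
        for s, d in enumerate(steps, start=1):
            x += d
            sites.append((x, s))
        masks = [coeff_mask(px, s, offset=2 * t) for px, s in sites]
        if gf2_rank(masks) != t + 1:
            return False
    return True


print(all(all_paths_independent(t) for t in range(1, 9)))  # True
```

Each single path is therefore empty with probability 2^{-(t+1)} under this counting, and summing over the 2^t paths gives only the constant 1/2, which is why the union bound alone does not give decay.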
And of course that doesn’t help, because 2^T times 2^{-T} certainly isn’t going to give me exponential decay, so I have to squeeze just a little bit more out of it, because this is critical. There are lots of ways you can try to do this, but eventually we found one that actually works. I claim that if you take a given diagonal path, the probability that it’s the leftmost empty diagonal path from the origin can be bounded by something a bit better. The idea is that if it’s leftmost, then whenever it takes a right-left step like this, that site has to be a 1, because otherwise it wouldn’t be the leftmost path. So there is some more information if I know this path is leftmost. And it turns out that if I restrict my attention to these right-left steps at odd times, then there is a dual assignment for them. In other words, if I look at the whole set, the diagonal path together with these black squares that occur at odd times, then that set is uniformly random: you see uniformly random fair coin flips on it. The key fact, and for this you have to go back to the configuration starting from a single 1, is that down the second diagonal you see alternating 0s and 1s. So it just works. Basically this allows you to squeeze something extra out of the argument, and you get exponential decay. I will just show you the pictures, but I won’t say much. For wide paths, wide paths don’t percolate; it’s a superficially similar argument, but the details are very different. We just had to find another construction that these dual assignments work for. And proving that empty paths do percolate again uses a dual assignment, but in a different way. Okay, so then what do we want? What I just said was about starting from randomness on all of Z. Now I want to deduce percolation properties for what happens from a finite seed.
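The key fact about the single-1 picture is easy to check directly. In the sketch below (illustrative names), the outermost diagonal c_t(−t) is always 1, and the second diagonal c_t(−t + 1) equals t mod 2, so it alternates 1, 0, 1, 0, ... for t ≥ 1. Algebraically, c_t(−t + j) is the coefficient of x^j in (1 + x + x^2)^t reduced mod 2, and the coefficient of x is t.

```python
def single_seed_rows(T: int) -> list[list[int]]:
    """Rows 0..T of the 1 or 3 rule from a single 1; rows[t][j] = c_t(j - t)."""
    rows = [[1]]
    for _ in range(T):
        p = [0, 0] + rows[-1] + [0, 0]
        rows.append([(p[i - 1] + p[i] + p[i + 1]) % 2 for i in range(1, len(p) - 1)])
    return rows


rows = single_seed_rows(64)
first_diagonal = [r[0] for r in rows]        # c_t(-t), t = 0..64
second_diagonal = [r[1] for r in rows[1:]]   # c_t(-t + 1), t = 1..64
print(all(v == 1 for v in first_diagonal))                               # True
print(all(v == t % 2 for t, v in enumerate(second_diagonal, start=1)))  # True
```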
So you take a random seed on a finite interval, and on the face of it this seems more difficult still, because the space-time picture I get is infinite and yet I only have a finite number of bits of randomness to work with. But it turns out, and this was somehow the surprising thing here, that we were always able to prove exactly what we needed and almost nothing more. So here is a simple result first of all. If you start from a random seed of length L then, with high probability, there is no empty path from anywhere in the initial configuration at time 0 that gets into this forward light cone by more than a distance of order log L. And how do we prove this? Now we really exploit the long-range dependence. So here is the picture starting from a single 1. If you look at a strip of width K along the diagonal, then the picture you see there is periodic in time. That has to be true for any cellular automaton, actually, because there are only 2 to the K possible configurations you can see as you go down. But in this case it’s periodic with period of order K, and that’s because it’s this special additive cellular automaton. Properties of this picture like these are all easy exercises to prove. So what that means is that if you start from a random seed, then by additivity this picture is simply a bunch of those single-1 pictures superimposed on each other [inaudible]. Then again this diagonal strip has the same period as before, of order K, its width. And furthermore, if K is less than L, basically each row of it is uniformly random. So at each time you see fair coin flips, but of course there are a lot of correlations between different rows.
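The periodicity and uniformity claims can be checked numerically. This is a minimal sketch assuming (our illustrative choice, since the talk does not name the rule at this point) that the additive automaton is Pascal's triangle mod 2, new[x] = old[x-1] XOR old[x], which produces the Sierpinski pattern. All helper names are hypothetical. From a single 1, the width-K strip along the right edge of the light cone repeats with period equal to the smallest power of 2 that is at least K (a consequence of Lucas' theorem), so the period is less than 2K. From a seed of length L at least K, the same period still works, and each row of the strip is a bijective function of the last K seed bits, hence uniformly random when the seed is.

```python
import random
from itertools import product

def step(row):
    """One step of the assumed additive rule (Pascal's triangle mod 2):
    new[x] = old[x-1] XOR old[x], with 0s outside, so the row grows by one cell."""
    padded = [0] + row + [0]
    return [padded[x] ^ padded[x + 1] for x in range(len(row) + 1)]

def right_edge_strip(seed, K, T):
    """Rows 0..T-1 of the width-K strip along the right edge of the light cone.
    strip[t][j] is the cell j sites left of the rightmost cell at time t
    (0 if that site lies outside the row)."""
    row, strip = list(seed), []
    for _ in range(T):
        strip.append(tuple(row[len(row) - 1 - j] if len(row) - 1 - j >= 0 else 0
                           for j in range(K)))
        row = step(row)
    return strip

def minimal_period(strip):
    """Smallest p with strip[t + p] == strip[t] for all t in the window,
    or None if no period fits in the window."""
    T = len(strip)
    for p in range(1, T):
        if all(strip[t + p] == strip[t] for t in range(T - p)):
            return p
    return None

def next_power_of_two(K):
    p = 1
    while p < K:
        p *= 2
    return p

def row_is_uniform(L, K, t):
    """True if row t of the strip takes all 2**K values as the last K bits of a
    length-L seed range over all 2**K choices (other bits set to 0 here).
    A bijection means fair coin flips in the seed give a uniform row."""
    seen = set()
    for bits in product((0, 1), repeat=K):
        seed = [0] * (L - K) + list(bits)
        seen.add(right_edge_strip(seed, K, t + 1)[t])
    return len(seen) == 2 ** K

# From a single 1 the width-5 strip repeats every 8 steps.
print(minimal_period(right_edge_strip([1], K=5, T=40)))  # 8

# From a random seed of length 12 the same period 8 still works.
random.seed(1)
seed = [random.randint(0, 1) for _ in range(12)]
strip = right_edge_strip(seed, K=5, T=64)
print(all(strip[t + 8] == strip[t] for t in range(56)))  # True
```

Because the strip repeats with a period below 2K, a union bound over crossing attempts only has to consider order K distinct phases, matching the argument in the talk.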
So that is basically enough, because if there were a path from up here to somewhere in here, then it would have to go through this strip; but each row of the strip is uniformly random, so it’s unlikely that you can make your way through it and down a bit. And the picture is periodic with period about K, so if you apply a union bound you don’t need to look at infinitely many cases, you only need to look at order K cases. So you have a factor of K because of the period, and you have the exponential decay, so this is small. And basically by the same sort of methods we can prove this more elaborate application of the same ideas. Take the 1 or 3 rule and start it from a uniformly random seed; then with high probability you have these triangular voids of 0s, and above each one there is a strip which blocks diagonal paths. And by the way, everything I say for diagonal paths is true for wide paths as well. So, right, there is a strip through which diagonal paths cannot get into the void below. And furthermore all these strips are spatially periodic, so periodic in the horizontal direction, and with the same repeating pattern for all of them. And this is basically where the main theorem comes from, because if that’s true and you have one of these rules in our class, then within each of these voids you are not influenced by what happens outside, up here. All you are influenced by is this strip, and this strip is just the same periodic strip in every case. So all you can get is an ether, the same ether in each void. And the way we prove that, again, it’s just sort of a sequence of miracles, or semi-miracles, where you just win somehow, because --. In the configuration starting from a single one, above each void you have a periodic --. If you go up a distance that is a power of 2, say 2 to the M, then you have something that’s periodic with period 3 times 2 to the M. And it’s this very specific periodic thing: the pattern 1, 1, 0, with its entries spaced 2 to the M apart and 0s in between.
You know, again, anything like this is an easy exercise to prove. It’s not a mystery why it’s true, but it turns out to be the thing we need. So that means that if you start from a random seed then you have something similar: above each void, if you go up distance 2 to the M, you have something that’s periodic, with the same period no matter where you look, and every interval of length 2 to the M within this periodic thing is uniformly random. That’s the way to say it. So then it’s essentially as before, and this is, as I say, what’s behind the main theorem. So, as I said, somehow just enough things work to enable us to prove this, but for many seemingly closely related questions we don’t know the answer. For instance, we do not understand very much about supercritical percolation started from random seeds. Remember that empty paths were in some sense supercritical, in the sense that if you start with randomness on all of Z then infinite empty paths exist. And the way we prove that is we actually prove a bit more: if you take all the points you can reach starting from, let’s say, the half-line to the left of the origin, then that set has a frontier, and that frontier has positive drift, a quarter. But, for instance, what happens if you start from a random seed of length L, and you look at where you can get to by empty paths starting from, say, the left-hand end? So here’s an example of the picture that you get. Again there is a frontier, and the question is: at time T, how far over has it got? What we believe is that for typical seeds this gap behaves like a nontrivial power law of T. Now that makes sense because, remember, the black part is essentially a fractal, and you presumably have speed a quarter when you are inside the fractal, because it’s kind of random, and you have speed one when you are in a void. That part at least we can agree on. But since it’s a fractal, most of the time you are in a void. So that’s kind of why this power law makes sense.
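The heuristic that most of the time you are in a void can be made quantitative for the Sierpinski pattern. A minimal sketch, assuming (our illustrative choice) that the fractal in question is Pascal’s triangle mod 2: the first 2 to the n rows contain exactly 3 to the n ones out of 2^n(2^n + 1)/2 cells, so the fraction of 1s is about 2(3/4)^n, which tends to 0 as the scale grows. The function name is hypothetical.

```python
def odd_entries(rows):
    """Count the 1s in rows 0..rows-1 of Pascal's triangle mod 2.
    By Lucas' theorem, C(t, x) is odd exactly when (x & t) == x."""
    return sum(1 for t in range(rows) for x in range(t + 1) if (x & t) == x)

for n in range(8):
    rows = 2 ** n
    cells = rows * (rows + 1) // 2   # cells inside the light cone
    ones = odd_entries(rows)
    print(n, ones, cells, round(ones / cells, 4))
```

The printed density falls steadily with n, which is the sense in which the black part occupies a vanishing fraction of the space-time picture.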
But we certainly can’t prove that. And again it’s not true for every seed. So here’s a specific seed, and in this specific case we can prove everything. If you look at this frontier, then it’s a macroscopic distance from the edge. We looked at these pictures for a while and thought this must be essentially the same picture as this one, because when you look at the first thousand [indiscernible] it looks very similar, but it’s not. In this case this distance over T is strictly bounded between 0 and 1. And in fact the frontier converges to a variant of the devil’s staircase in the scaling limit. That’s provable for this particular case. And we can prove that this power law holds for a very simplified version of the model that we came up with. And probably something like this power law is behind some cellular automaton pictures that we can’t analyze. So here’s an example, for one of the rules in our class, where probably what’s happening is that you have this frontier that obeys a power law, and then it causes some other things to happen just at the frontier. So if one could prove the power law, then it would probably prove that this was a quasireplicator. And probably something somewhat similar is happening in the chaotic picture I showed you at the beginning. Again, this is one of the rules that our theorem applies to. So long random seeds are nice, predictable and well-behaved. This one apparently is not, but presumably there is a power-law frontier here and then all sorts of complicated things happening behind it. That’s just a bigger picture of the same thing. And you can see there is a tantalizing mix of order and chaos, because you sort of have this power law repeated in lots of places as well, and what’s going on here? Well, it doesn’t look exactly random. So there is nothing to say rigorously here, and it is also completely open what happens in cases where the percolation is apparently critical.
And this is a particular case like that. So there are lots of problems. We don’t know how to prove that any of these rules have infinitely many possible ethers, although we believe it to be the case. Another very interesting question is to try to do space-time percolation for other one-dimensional cellular automaton rules, not additive ones, and there are plenty of others. For example rule 30, for which the uniform measure, fair coin flips, is an invariant measure. So that would be a natural place to start with some of these questions. We don’t even know what happens to empty paths if you start from a random configuration on one half-line and 0s on the other half. And of course proving the existence of chaotic behavior in any of these cases is apparently very, very difficult. And, yeah, coming back to where we started, and also presumably impossibly difficult: what does Conway’s Life do from a random configuration on Z2? The reason I think that’s difficult is that, on the one hand, you can come up with these very elaborate computers that do interesting things, but they are not at all robust. If a little bit of chaos runs into one then it’s the end. But on the other hand, if you are on Z2, perhaps there are local configurations which do interesting computations and are also very good at defending themselves against attacks from chaos on the outside. You have the whole of Z2 to play with. And who knows whether those win over the chaos. So that was sort of our motivation, but I will stop. [clapping] >> Yuval Peres: Questions or comments? >>: Do you actually prove that anything is not [inaudible]? >> Alexander Holroyd: No, well, I guess strictly yes, but in a very trivial example: the one that has a different ether on the two sides. So it’s a mixed replicator. I mean, this is more just a symptom of how we define things, but, yeah. >>: Okay, so [inaudible]. >> Alexander Holroyd: Right, right, that’s right.
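Why fair coin flips are invariant for rule 30 can be checked by counting preimages. A minimal sketch (function names hypothetical): if every word of length n has exactly 4 preimages of length n + 2, then under fair coin flips each image word has probability 4 / 2^(n+2) = 2^(-n), so one step of rule 30 maps fair coin flips to fair coin flips on every finite window. For rule 30 this holds because the new state is left XOR (center OR right): once the two rightmost cells of a preimage are chosen (4 ways), every other cell is forced, working from right to left.

```python
from itertools import product

def apply_rule(rule, word):
    """Apply an elementary CA rule (Wolfram numbering) to a finite 0/1 word;
    the image is two cells shorter because the edge cells lack a neighbor."""
    return tuple((rule >> ((word[i] << 2) | (word[i + 1] << 1) | word[i + 2])) & 1
                 for i in range(len(word) - 2))

def preimage_counts(rule, n):
    """Map each length-n word to the number of length-(n+2) words it comes from."""
    counts = dict.fromkeys(product((0, 1), repeat=n), 0)
    for word in product((0, 1), repeat=n + 2):
        counts[apply_rule(rule, word)] += 1
    return counts

for n in range(1, 9):
    print(n, set(preimage_counts(30, n).values()))  # {4} for every n
```

The same check can be run on any other elementary rule number; rules whose counts are not all equal do not preserve fair coin flips.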
There is a bit more evidence than just eyeballing the pictures, although it’s roundabout evidence, to say the least. The program I used right at the beginning for simulating Conway’s Life is itself a remarkable program, called Golly, by the way; it’s highly recommended free software. It uses a remarkable algorithm called HashLife, which somehow stores configurations that it has seen before. And it’s very interesting to see that for some configurations it does very well. If you give it a replicator it goes very, very fast: it gets into trillions of generations in a few seconds. For an apparently chaotic case it’s not much faster than just computing everything as you go, although it’s a bit faster. And for quasireplicators it seems to be kind of intermediate: it gets into the millions. So that is some evidence, maybe, that the apparently chaotic ones really are more complicated than the quasireplicators, but yeah. I think that’s something that could be investigated further. >>: Do you expect any classes of rules for [inaudible] same behavior going outside? Now you have [inaudible]. >> Alexander Holroyd: Yeah, I mean [inaudible]. >>: I mean there are just too many rules. >> Alexander Holroyd: Yes, but --. >>: [inaudible]. >> Alexander Holroyd: I mean, yeah, I don’t think anything in particular would change if you started looking at bigger neighborhoods, but you would just have to be very careful how you define things. And, you know, all we are doing is --. >>: Is there any theorem which says that if your rule depends on a finite neighborhood that satisfies certain conditions then you encounter the following [inaudible]? >> Alexander Holroyd: [inaudible]. >>: [inaudible]. >> Alexander Holroyd: Yes, yes, that’s absolutely essential. >>: [inaudible]. >> Alexander Holroyd: Yes, yes. We --. >>: [inaudible]. >> Alexander Holroyd: Yeah, that’s right.
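To give a flavor of why storing previously seen configurations helps on repetitive patterns, here is a toy one-dimensional analogue of HashLife’s memoized recursion for an elementary (radius-1) rule. It is our own simplified sketch, not Golly’s implementation: real HashLife works on two-dimensional quadtrees with hash-consed nodes. The idea carried over is that a block of 2^k cells determines its central 2^(k-1) cells after 2^(k-2) steps, and that result is assembled from five half-size sub-results, each of which is cached. RULE = 110 and the function names are arbitrary, hypothetical choices.

```python
import random
from functools import lru_cache

RULE = 110  # any elementary (Wolfram-numbered) rule; arbitrary choice here

def local(l, c, r):
    """Elementary rule applied to a single neighborhood."""
    return (RULE >> ((l << 2) | (c << 1) | r)) & 1

def naive_center(block):
    """Step a block of m = 2**k cells m/4 times, dropping one cell at each end
    per step, leaving the central m/2 cells (fully determined by the block)."""
    cells = list(block)
    for _ in range(len(block) // 4):
        cells = [local(cells[i - 1], cells[i], cells[i + 1])
                 for i in range(1, len(cells) - 1)]
    return tuple(cells)

@lru_cache(maxsize=None)
def memo_center(block):
    """Same result as naive_center, computed recursively: every block seen
    is cached, so repeated sub-blocks are evaluated only once."""
    m = len(block)
    if m == 4:
        return (local(*block[0:3]), local(*block[1:4]))
    n = m // 4
    # First half of the time: three overlapping blocks of length 2n.
    r0 = memo_center(block[0:2 * n])
    r1 = memo_center(block[n:3 * n])
    r2 = memo_center(block[2 * n:4 * n])
    # Second half of the time: two blocks built from those intermediate results.
    return memo_center(r0 + r1) + memo_center(r1 + r2)

# Sanity check against direct simulation on a random block.
random.seed(0)
block = tuple(random.randint(0, 1) for _ in range(64))
assert memo_center(block) == naive_center(block)

# A spatially periodic block: most sub-blocks repeat, so the cache is hit often.
periodic = (0, 1, 1, 0) * 256     # 1024 cells
result = memo_center(periodic)    # central 512 cells after 256 steps
print(len(result), memo_center.cache_info())
```

On the periodic input the cache statistics show far more hits than misses, a small-scale version of why a replicator runs so fast under HashLife while a chaotic pattern, whose sub-blocks rarely repeat, gains much less.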
The rules we prove things about are very, very special ones, and so one could extend the class of rules for which the theorems work, but they would still be very, very special. >>: [inaudible]. >> Alexander Holroyd: Yes, sometimes it does, but typically it doesn’t. That’s what’s new, really. >> Yuval Peres: Let’s thank Alexander again. [clapping]