1

advertisement

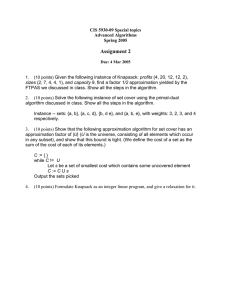

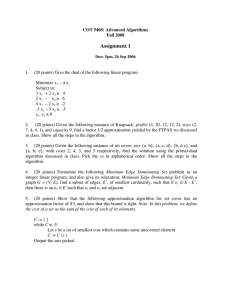

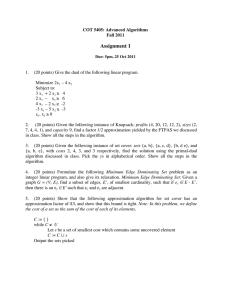

>> Kostya Makarychev: Okay. It's a great pleasure to have Shi Li speaking today. Shi is a graduate student at Princeton University, graduating this year, and his advisor is Moses Charikar. Shi has done some great work on approximation algorithms. He received a Best Paper Award at [indiscernible] and a Best Student Paper Award at [indiscernible], and today he will talk about some of his work in this area. >> Shi Li: Okay. Thank you, Kostya. I'll talk about approximation algorithms for facility location and network routing problems. Can you hear me? Okay. As you can see from the title, this talk is about approximation algorithms. So what are approximation algorithms? I guess there are many experts here, but to make this talk self-contained, I still need to say something. We know many natural problems are NP-hard. If you believe P is not equal to NP, there are no polynomial-time algorithms that solve them exactly. So what we look for are algorithms that find suboptimal solutions, and the approximation ratio measures how good the suboptimal solution is. There are two major themes in my research during my graduate program. One, in orange, is facility location problems. The other, in black, is network routing problems. Why orange and black? Because they are the colors of Princeton University. Okay. For the major part of the talk, I will focus on the first theme, and in the second part I'll sketch my results on network routing problems. So here are the facility location problems. Here is a map of Walmart stores in Washington state. You can see Walmart did a very good job: the stores are very well distributed. For example, Seattle is a big city, and there are many, many stores around it. This is a problem that Walmart faced at the beginning. Suppose we want to put 50 stores in Washington state. Where should we put the 50 stores so that the average travel time from people to the nearest Walmart store is minimized?
So this is exactly the k-median problem we are going to talk about. In this problem, we are given a set F of potential facility locations and a set C of clients; you can guess what F and C are in the previous Walmart example. We are also given a number K, the bound on the number of facilities we can open, and a metric d over F and C. In the Walmart instance, d is just the travel time. The goal is to open a set S of at most K facilities such that the sum, over all clients j, of d(j, S) is minimized. Here, d(j, S) is just the minimum distance from j to a facility i in S. For example, in this instance, this might be an optimal solution: we open three facilities. k-median can also be used as a clustering algorithm; it is a variant of k-means clustering. Suppose we are given a set of data points and we know they come from K clusters. How can we recover the K clusters? Well, we just solve the k-median problem where each point is both a facility and a client. Once we get those K points, grouping every point with its nearest one of them gives us the clusters. Here is the history of k-median from the approximation algorithm point of view. Now we know there are constant approximations for this problem, but for a long time we didn't know any constant approximation; we only knew pseudo-approximations and super-constant approximations. For pseudo-approximation, there is a 1-approximation with O(K log N) open facilities, and there is a 2(1 + ε)-approximation with (1 + 1/ε)K facilities. For super-constant approximation, we had an O(log N log log N)-approximation, and this was improved to O(log K log log K). So what do we know about constant approximations? The first constant approximation was a 6 2/3-approximation due to [indiscernible]. Later, Jain and Vazirani gave a 6-approximation, and this was improved to a 4-approximation by [indiscernible].
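To make the objective concrete, here is a small Python sketch (not from the talk) that evaluates the k-median cost of a facility set, finds the optimum by brute force on a toy instance, and reads off clusters by nearest-center assignment. The six-point instance and all function names are hypothetical illustrations; exhaustive search is exponential in K, which is exactly why approximation algorithms are needed.

```python
from itertools import combinations


def kmedian_cost(dist, clients, open_facilities):
    """Sum over clients j of d(j, S), the distance from j to its nearest open facility."""
    return sum(min(dist[j][i] for i in open_facilities) for j in clients)


def brute_force_kmedian(dist, facilities, clients, k):
    """Exact k-median by enumerating every k-subset of facilities (tiny inputs only).

    Opening an extra facility never increases the cost, so trying sets of exactly
    k facilities is enough when k <= len(facilities)."""
    best_cost, best_set = None, None
    for S in combinations(facilities, k):
        cost = kmedian_cost(dist, clients, S)
        if best_cost is None or cost < best_cost:
            best_cost, best_set = cost, set(S)
    return best_cost, best_set


def clusters_from_centers(dist, points, centers):
    """Recover clusters by assigning every point to its nearest chosen center."""
    groups = {c: [] for c in centers}
    for p in points:
        groups[min(centers, key=lambda c: dist[p][c])].append(p)
    return groups


# Toy clustering instance: six points on a line, each point is both a facility and a client.
xs = [0, 1, 2, 10, 11, 12]
dist = [[abs(a - b) for b in xs] for a in xs]
points = list(range(len(xs)))
cost, centers = brute_force_kmedian(dist, points, points, k=2)
print(cost, sorted(centers))                                 # 4 [1, 4]
print(clusters_from_centers(dist, points, sorted(centers)))  # {1: [0, 1, 2], 4: [3, 4, 5]}
```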
Now the best approximation is a (3 + ε)-approximation due to Arya et al., and this algorithm is based on local search. In this talk, I'm going to give a new algorithm that gives a (1 + √3 + ε)-approximation; this is joint work with Svensson. On the negative side, we have 1 + 2/e hardness of approximation for this problem. There is another problem related to k-median, called the facility location problem. In this problem, instead of a bound K on the number of facilities we can open, we have a facility cost f_i for each facility i. Now the output S can be any set; we don't require |S| to be at most K. But to prevent us from opening too many facilities, the objective function contains a term for the cost of opening the facilities in S, namely the sum over i in S of f_i. So this is the objective function: this term is the facility cost and this one is the connection cost. For example, if these are the facility costs, maybe those two facilities are too expensive to open, so the optimal solution will look like this: open these two. Facility location has a rich history: it started in the 1980s in operations research, and from the approximation point of view it started in '82. The first approximation algorithm was an O(log N)-approximation due to [indiscernible]. The first constant approximation was a 3.16-approximation due to [indiscernible]. After a long sequence of work, which you can see here, the current best approximation ratio is 1.488, due to myself. On the negative side, this problem is 1.463-hard to approximate, so you can see there is a very small gap. There are many algorithms here, and many techniques were used for this problem; it is really a test ground for the techniques of approximation algorithms. So I have introduced two problems, facility location and k-median.
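The facility location objective differs from k-median only in trading opening costs against connection costs. A hypothetical Python sketch that splits a solution's cost into its two terms and brute-forces the optimum on a toy instance where every facility costs 3 to open (instance and names are illustrative, not from the talk):

```python
from itertools import combinations


def ufl_cost(dist, opening_cost, clients, S):
    """Return (facility cost, connection cost) of opening the facility set S."""
    facility_cost = sum(opening_cost[i] for i in S)
    connection_cost = sum(min(dist[j][i] for i in S) for j in clients)
    return facility_cost, connection_cost


def brute_force_ufl(dist, opening_cost, facilities, clients):
    """Exact facility location by trying every nonempty facility set (tiny inputs only)."""
    best_total, best_set = None, None
    for r in range(1, len(facilities) + 1):
        for S in combinations(facilities, r):
            total = sum(ufl_cost(dist, opening_cost, clients, S))
            if best_total is None or total < best_total:
                best_total, best_set = total, set(S)
    return best_total, best_set


# Hypothetical toy instance: six points on a line, each facility costs 3 to open.
xs = [0, 1, 2, 10, 11, 12]
dist = [[abs(a - b) for b in xs] for a in xs]
points = list(range(len(xs)))
opening_cost = [3] * len(xs)
print(brute_force_ufl(dist, opening_cost, points, points))  # (10, {1, 4})
print(ufl_cost(dist, opening_cost, points, (1, 4)))         # (6, 4)
```

With no cap on |S|, the optimum here still opens two facilities, because a third one would cost 3 to save at most 1 in connection cost.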
The question is: are they related? Well, from the descriptions, you can see they are related in some way. But is there a deeper connection? The answer is yes, and I'm going to define it now. We say an algorithm gives a (γ_F, γ_C) bifactor approximation for facility location if it always outputs a solution whose facility cost divided by γ_F plus connection cost divided by γ_C is at most OPT. So this is the definition of a (γ_F, γ_C) bifactor approximation. From the definition, we can see that an (α, α) bifactor approximation is just an α-approximation: F plus C is at most α times OPT. We also say a (1, α) bifactor approximation is Lagrangian multiplier preserving, or an LMP α-approximation. Now let me talk about the connection. Here is the curve of the lower bound for bifactor approximation for facility location. That means that for a fixed γ_F, we cannot get a (γ_F, γ_C) bifactor approximation with γ_C better than this number. There are two important points here. One is at γ_F = 1, where γ_C = 1 + 2/e; this is the hardness we know for k-median. The other is where γ_F = γ_C = 1.463, and this is the hardness for facility location. On the positive side, we also have a curve. On the right side of the curve, the upper bound and lower bound match, so there is no gap, but on the left side there is a gap. There are also two important points here. When γ_F = γ_C, we have the 1.488-approximation for facility location. When γ_F = 1, we have an LMP 2-approximation, which is what matters for k-median. You see, I did not give a label for this point here. What would be a natural guess for this label, based on the previous three points? Here: hardness, approximation, hardness. Right? A reasonable guess would be: is this an approximation for k-median? Okay. The answer is, of course, no, because we only knew a (3 + ε)-approximation, right?
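The talk does not write out the lower-bound curve. Assuming it is the standard one from the literature, γ_C = 1 + 2e^(-γ_F), both marked points can be recovered numerically: γ_F = 1 gives 1 + 2/e, and the symmetric point γ_F = γ_C is the fixed point of γ = 1 + 2e^(-γ). A minimal Python sketch (function names are hypothetical):

```python
import math


def hardness_gamma_c(gamma_f):
    """Assumed lower-bound curve: no (gamma_f, gamma_c) bifactor approximation with
    gamma_c < 1 + 2 * exp(-gamma_f) (standard form from the literature, not stated in the talk)."""
    return 1 + 2 * math.exp(-gamma_f)


def symmetric_hardness(lo=1.0, hi=2.0, iters=100):
    """Bisection for the point where gamma_f == gamma_c on the curve.

    g(x) = x - hardness_gamma_c(x) is increasing, negative at 1 and positive at 2."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if mid < hardness_gamma_c(mid):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


print(round(hardness_gamma_c(1.0), 4))  # 1.7358, i.e. 1 + 2/e (the k-median point)
print(round(symmetric_hardness(), 3))   # 1.463 (the facility location point)
```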
Okay, in fact, we need to pay another factor of two, so we would get two times two equals four if we used this. Jain and Vazirani showed that an LMP α-approximation for UFL gives a 2α-approximation for k-median. So this is the connection between the two problems, but from this connection we can only get a 4-approximation. Okay. Any questions on the introduction before I move to the next part? >>: Is there any kind of [indiscernible] known gap between LMP approximation and approximation, so that you can't do better than [indiscernible]? >> Shi Li: Oh, this two is tight. Yeah, it's not a hardness result, but there is a [indiscernible] example: using this analysis, you can only get a two. Okay. So I'll move to the next part and introduce our algorithm for k-median. Our algorithm is based on two main components. Both of them are new, and I think both are interesting. The first component is a reduction. We show that it suffices to give a solution with K plus a constant number of facilities, and our reduction preserves the approximation ratio. We know that in k-median we have a hard constraint: we can open at most K facilities. But we say, okay, we can relax the constraint a little bit and allow K plus a constant number of facilities. The second component is a pseudo-approximation. We can find a solution of cost (1 + √3 + ε) times OPT with K + O(1/ε) facilities. This is also interesting, because previously we knew that if we wanted to improve on the (3 + ε)-approximation, we needed to open K + Ω(K) facilities. Instead, we open only K plus a constant number of facilities and [indiscernible] improve on the (3 + ε)-approximation. So those two components give us the algorithm. So that is the first component. Here is a more specific statement of it. Suppose we have an algorithm A that is an α-approximation for k-median with K + C open facilities. Then we can get another algorithm, A′.
Given A as a black box, A′ is an (α + ε)-approximation with K open facilities. As for the running time, A′ calls A N^{O(C/ε)} times, so as long as C and ε are constants, this is a polynomial-time algorithm. Now I'm going to describe how the reduction works, but first let me answer a question you might have. There are bad instances, right? For example, suppose we have K + 1 clusters that are far away from each other. If we open K + 1 facilities, we can open one in each cluster, and the cost will be almost zero. But if we open only K facilities, the cost will be huge, since we need to connect a whole cluster to a far-away facility. So if A gives us a solution with K + 1 facilities, how can we convert it into a solution with K facilities? This problem is handled by the definition of dense facilities: we will show that this never happens after we preprocess the [indiscernible]. So here is how we define a dense facility. Let B_i be a small ball around i. I will not give the exact definition of the small ball, but there is a precise one. For example, this is i, and this is B_i. We say i is A-dense if the connection cost of the clients in B_i is at least A. In this instance, i is A-dense for A roughly equal to OPT, because the whole OPT is contributed by the set of clients here. Right? So here we have an A-dense facility i. Our first lemma is that our algorithm works directly if there are no OPT/T-dense facilities, for T = O(C/ε); when C and ε are constants, this is a constant. Then we show that we can reduce any instance to such an instance in N^{O(T)} time. Combining those two lemmas, we have our first component. Okay. Questions so far on the first component?
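Since the talk deliberately leaves the small ball B_i undefined, the following Python sketch uses a fixed radius as a hypothetical stand-in, and it measures "connection cost" against a reference solution (a known optimum, as the proof below assumes). On a K + 1 far-cluster instance with K = 1, exactly the facilities in the cluster the optimum serves from afar come out OPT/T-dense. The radius, the choice T = 2, and all names are assumptions for illustration only.

```python
def ball(dist, i, clients, radius):
    """Clients within `radius` of facility i (a hypothetical stand-in for the talk's ball B_i)."""
    return [j for j in clients if dist[j][i] <= radius]


def is_dense(dist, i, clients, radius, reference, threshold):
    """True if the clients in B_i pay at least `threshold` in the reference solution."""
    in_ball = ball(dist, i, clients, radius)
    return sum(min(dist[j][f] for f in reference) for j in in_ball) >= threshold


# K + 1 = 2 far-apart clusters but K = 1: the optimum (cost 300) must serve one cluster from afar.
xs = [0, 1, 2, 100, 101, 102]
dist = [[abs(a - b) for b in xs] for a in xs]
points = list(range(len(xs)))
opt_solution, opt_cost, T = [2], 300, 2
dense = [
    i for i in points
    if is_dense(dist, i, points, radius=5, reference=opt_solution, threshold=opt_cost / T)
]
print(dense)  # [3, 4, 5]
```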
>>: If that example is the instance, are you saying that you're going to rule out this -- >> Shi Li: Yeah, so we say that that instance is not good, because there is an OPT-dense facility, right? Our reduction component works only if there is no dense facility. In that instance there is a dense facility, so we will not work directly on that instance. Our second lemma says that we can reduce any instance to such an instance, but I haven't said how to prove this lemma yet. >>: If this instance in the front is exactly -- >> Shi Li: Okay, no. Here is the proof of this lemma. [indiscernible] if we know the optimal solution. Suppose we know that this facility is A-dense, right? Then we just remove this facility, because we know it is not open in OPT. Supposing we know OPT, we remove this facility and all the facilities that are [indiscernible] to this facility than this facility. Suppose there is a facility here: because we know the closest open facility of this client is this one, that facility won't be open, so we remove those facilities. Then we check again whether there is an OPT/T-dense facility. If yes, we remove it. Every time we remove a set of facilities, we identify a ball B_i of clients, and those balls will be disjoint. Each ball contributes at least OPT/T to the optimal solution, so we can remove at most T balls. That means that even if we don't know the optimal solution, we can just guess the T balls. We remove the T balls, and then we get a good instance. In this particular example, what instance would we get? We remove this facility, and then we have only K facilities, so everything becomes trivial. Okay. Any questions? Okay. I'll move to the next part. We already saw that the LMP 2-approximation for facility location does not immediately give an integral solution of cost 2 times OPT. But we can relax this a little bit: instead of requiring an integral solution, we can have a bi-point solution.
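The counting step of this proof can be written out as follows, where S* denotes the optimal facility set, C the client set, and i_1, ..., i_r the facilities whose disjoint balls get removed (this notation is introduced here, not taken from the slides). The last bound is the N^{O(T)} enumeration over guesses:

```latex
r \cdot \frac{\mathrm{OPT}}{T}
  \;\le\; \sum_{s=1}^{r} \sum_{j \in B_{i_s}} d(j, S^*)
  \;\le\; \sum_{j \in C} d(j, S^*)
  \;=\; \mathrm{OPT}
  \quad\Longrightarrow\quad r \le T,
\qquad
\#\text{guesses} \;\le\; \sum_{r=0}^{T} \binom{N}{r} \;\le\; (N+1)^{T} \;=\; N^{O(T)}.
```

The middle inequality is where disjointness of the balls is used: no client is counted twice.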
If we change the integral solution to a bi-point solution, this will be [indiscernible]. So what is a bi-point solution? It is a convex combination of two solutions. In this instance, we have a solution S1 and a solution S2. Say S1 has K1 facilities and S2 has K2 facilities, with K between K1 and K2. Let a and b be the numbers such that a + b = 1 and a·K1 + b·K2 = K. Then the bi-point solution is a·S1 + b·S2. What this means is that we take an a fraction of solution S1 and a b fraction of solution S2, so in expectation we have K open facilities, right? So this is the solution, and the cost of this bi-point solution is a times the cost of S1 plus b times the cost of S2. So this is the definition. Jain and Vazirani showed that given a bi-point solution of cost C, we can convert it into an integral solution of cost 2C. This factor of two is tight, as we can see from this example. This is a gap-2 instance, but it's also an instance for the [indiscernible] gap. We have two solutions: S1 has only one facility, and S2 has K + 1 facilities. The distance from the S1 facility to each client is one, and there is a matching here: each client is at distance zero from its own S2 facility. Okay? The cost of the best integral solution is two. Why? Because we can open only K facilities. If we open this one plus K − 1 facilities here, then two clients cost one each, so the cost is two. Or we can open K facilities here; then there is one client that costs two, right? So the integral optimum is two. What about the bi-point solution? Well, K1 = 1 and K2 = K + 1. The cost of the first solution is K + 1, since we open only one facility, and the cost of the second solution is zero, since we open K + 1. The right combination takes a 1/K fraction of the first solution and a (K − 1)/K fraction of the second.
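The gap instance just described can be checked numerically. A minimal Python sketch, assuming the "star" metric implied by the description: the single S1 facility is a hub at distance 1 from every client, each client shares a point with one S2 facility, so distinct clients are at distance 2. The names `bipoint_weights`, `gap_distance` and `integral_opt` are hypothetical. For K = 4 it reproduces the weights 1/K and (K − 1)/K, the bi-point cost (K + 1)/K, and the integral cost 2, so the ratio 2K/(K + 1) tends to 2.

```python
from fractions import Fraction
from itertools import combinations


def bipoint_weights(k1, k2, k):
    """Solve a + b = 1 and a*k1 + b*k2 = k for the mixing weights (requires k1 != k2)."""
    a = Fraction(k2 - k, k2 - k1)
    b = Fraction(k - k1, k2 - k1)
    return a, b


def gap_distance(u, v):
    """Star metric: hub 'c' (the single facility of S1) is at distance 1 from every leaf;
    leaf t hosts client t and one facility of S2; distinct leaves are at distance 2."""
    if u == v:
        return 0
    if u == "c" or v == "c":
        return 1
    return 2


def integral_opt(k):
    """Best cost with exactly k open facilities among the hub and the k + 1 leaves."""
    nodes = ["c"] + list(range(k + 1))
    clients = list(range(k + 1))
    return min(
        sum(min(gap_distance(j, i) for i in S) for j in clients)
        for S in combinations(nodes, k)
    )


k = 4
a, b = bipoint_weights(k1=1, k2=k + 1, k=k)  # S1 = {hub}, S2 = all k + 1 leaves
bipoint_cost = a * (k + 1) + b * 0            # cost(S1) = k + 1, cost(S2) = 0
print(a, b, bipoint_cost, integral_opt(k), integral_opt(k) / bipoint_cost)
# 1/4 3/4 5/4 2 8/5
```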
So this opens K facilities in expectation, and its cost is 1/K times the cost of the first solution plus (K − 1)/K times the cost of the second solution, which is (K + 1)/K. So you can see there is a gap of two. Okay? So what this means: given a k-median instance, we lose a factor of two to get a bi-point solution, and another factor of two to get an integral solution. For the first two, we don't know how to improve it, and for the second two, we cannot improve it, because there is a gap instance. However, we can use our first component: to get our approximation, we don't need an integral solution with exactly K facilities; we only need a solution with K + C facilities. So if we use this, what factor do we lose here? We show that given a bi-point solution of cost C, we can find a solution of cost at most ((1 + √3 + ε)/2)·C using K + O(1/ε) facilities. So this is our lemma. Before proving our lemma directly, let's go over the JV algorithm and then show our improvement. So how does the JV algorithm lose a factor of two? Suppose this is a bi-point solution. Ideally, we would want to open each facility here with probability a, because we have a·S1, and each facility here with probability b. But we do it in a [indiscernible] way. We map each facility here to the nearest facility on the right side, and we select a set S2′. S2′ has the same size as S1, and it must contain every facility that some facility here is mapped to. For example, here we choose four facilities: we must choose these three, because they are mapped to, and the other one we can choose [indiscernible]. Then the algorithm is: with probability a, we open everything here; with probability b, we open everything here. So we open this or we open this.
For the remaining facilities, we open K − K1 of them at random. The analysis is client-by-client. Suppose this is client j. It is connected to i1 in the first solution and to i2 in the second solution, at distances d1 and d2, and i3 is the facility of S2 closest to i1. The distance from i1 to i2 is at most d1 + d2, and since i3 is the closest facility to i1, the distance from i1 to i3 is also at most d1 + d2. To analyze the expected connection cost of j, we do the following. If i2 is open, we connect j to i2. Otherwise, if i1 is open, we connect j to i1. Otherwise, we connect j to i3, because it will always be open in that case. This gives the factor of two here. Okay, questions? So this is the JV algorithm. The basic idea is that either i1 is open or i3 is open: we always have a backup. Here is our improvement. On average, we know d1 is larger than d2. Why? Because S1 is the smaller solution: we open fewer facilities, so its cost will be [indiscernible]. So d1 is greater than d2. And in this picture, the distance between j and i3 could be as much as 2d1 + d2. Right? That is big; we don't want it. What if we move i3 here? Originally it was here. If we move i3 here, the distance between j and i3 becomes d1 + 2d2 instead of 2d1 + d2, which is better because d1 ≥ d2. If we use the same analysis, supposing it goes through, what we get is (1 + √3)/2 (plus ε). So that's it: we move i3 from here to here, which means we change 2d1 + d2 to d1 + 2d2. And what is the requirement? Originally, we mapped each facility of S1 to the nearest facility of S2. Now we want to map each facility of S2 to the nearest facility of S1. We still need to guarantee that either this one is open or this one is open. That means here we have a star: if the center of the star is not open, we should open all of its leaves.
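The two backup distances compared here follow from the triangle inequality. Writing d_1 = d(j, i_1) and d_2 = d(j, i_2), and calling the moved backup facility i_3' (a label introduced here), the JV backup is the S2 facility nearest to i_1, while the new backup is the S1 facility nearest to i_2:

```latex
\begin{aligned}
d(j, i_3)  &\le d(j, i_1) + d(i_1, i_3)  \le d_1 + d(i_1, i_2) \le d_1 + (d_1 + d_2) = 2d_1 + d_2,\\
d(j, i_3') &\le d(j, i_2) + d(i_2, i_3') \le d_2 + d(i_2, i_1) \le d_2 + (d_1 + d_2) = d_1 + 2d_2.
\end{aligned}
```

The middle step in each line uses only the "nearest facility" choice: i_2 is a candidate for i_3, and i_1 is a candidate for i_3'. Since d_1 ≥ d_2 on average, the second bound is the smaller one.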
So what's the first try? The first try might be to open each star independently: the center with probability a, or otherwise its leaves with probability b. The analysis of the expected connection cost will go through. But the problem is that we don't have a tail bound on the number of open facilities, so we cannot guarantee that we always open K. Instead, the idea of our algorithm is this: if a star is very big, we always open the center and then open some of its leaves, with a probability that is not exactly b [indiscernible], but roughly b. We group small stars of the same size, and for each group, we want to use a [indiscernible] factor of three. The number of groups will be O(1/ε), so we open K + O(1/ε) facilities. This is the rough idea. Any questions on this part, the second component? >>: [indiscernible]? >> Shi Li: We don't know. Our analysis is tight, but we don't have a tight example here. Okay? So to sum up, we have a (1 + √3 + ε)-approximation for k-median, and the important component is that we show it suffices to give a solution with K plus a constant number of facilities. Our algorithm actually uses the Sherali-Adams hierarchy implicitly. In fact, at first our algorithm was based on this hierarchy; later we were able to remove the hierarchy and get an algorithm with the same guarantee and the same running time. >>: Where is the Sherali-Adams hierarchy used? >> Shi Li: Recall that we have an instance, right, and we say that we can get a good instance. But how do we get a good instance? We guess T events: if we know these T events happened, then we get a good instance. If we use the Sherali-Adams hierarchy, we don't need to guess. We just look at the fractional solution, see that this event happened fractionally, condition on this event and reduce the level by one, and then condition on another event. Finally, we get a [indiscernible] LP. So that's how we use the Sherali-Adams hierarchy.
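The naive "first try" rounding described above can be sketched in Python as follows. It illustrates only that naive scheme, not the authors' final algorithm (which treats big stars and groups of small stars differently), and the instance and function names are hypothetical. It keeps the backup property, since every star has its center or all of its leaves open, and it opens K facilities in expectation, but the actual count varies from run to run, which is exactly the missing tail bound.

```python
import random
from fractions import Fraction


def build_stars(dist, S1, S2):
    """Map each S2 facility to its nearest S1 facility; S1 facilities are the star centers."""
    stars = {c: [] for c in S1}
    for leaf in S2:
        stars[min(S1, key=lambda c: dist(leaf, c))].append(leaf)
    return stars


def first_try_rounding(stars, a, rng):
    """Round each star independently: open its center with probability a, else all its leaves."""
    opened = set()
    for center, leaves in stars.items():
        if rng.random() < a:
            opened.add(center)
        else:
            opened.update(leaves)
    return opened


def expected_open(stars, a):
    """E[#open] = sum over stars of (a + (1 - a) * #leaves) = a*k1 + b*k2."""
    return sum(a + (1 - a) * len(leaves) for leaves in stars.values())


def dist(u, v):
    return abs(u - v)


# Hypothetical bi-point solution on a line: k1 = 2, k2 = 5, target k = 3, so a = 2/3, b = 1/3.
stars = build_stars(dist, S1=[0, 10], S2=[1, 2, 9, 11, 12])
print(stars)                                 # {0: [1, 2], 10: [9, 11, 12]}
print(expected_open(stars, Fraction(2, 3)))  # 3
counts = {len(first_try_rounding(stars, 2 / 3, random.Random(s))) for s in range(200)}
print(sorted(counts))  # varies with the coin flips; k = 3 holds only on average
```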
>>: [indiscernible] approximation algorithm, this was the top point of that curve? >> Shi Li: Yeah, it's based on that. That is also based on an LP, you can see; [indiscernible] so it implicitly uses an LP. So now I'll talk about network routing problems. This is the general setting. We are given a graph G, which you can think of as a network, and we are given pairs of terminals (s1, t1), (s2, t2), ..., (sK, tK). Each pair (si, ti) wants to set up a connection in this network. Maybe each link has limited resources and we cannot connect all pairs; then the goal might be to route as many pairs as possible. Or, if we have to connect all pairs, one goal might be to minimize congestion, meaning how much we need to scale up the resources so that we can connect all of them. Depending on the goal, we have two specific problems. One is called edge-disjoint paths (EDP), and the goal is to route as many pairs as possible using edge-disjoint paths, so each edge can be used by only one path. In this example, we can connect s1 to t1 and s2 to t2, and the answer is two. The other goal is to route all pairs so that we minimize the congestion; this is called the congestion minimization problem. In this instance, if we connect all pairs, this edge will be used by three paths, so the congestion is three. Historically, the two problems arose in the context of VLSI. In VLSI design, we want to put many transistors on a single chip, and we use wires to connect pairs of transistors. It's desirable to connect many pairs in one layer, or to connect all pairs while minimizing the number of layers. So this is one setting in VLSI. Also, EDP is an important packing problem in graph theory, and it was studied in a paper by Frank. Moreover, EDP is a very important element in the graph minor theory of Robertson and Seymour, developed from '83 to '04 in a series of 20 papers. So what is known from the approximation algorithm point of view for the two problems?
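As a small illustration of the two objectives, this Python sketch computes the congestion of a routing, given as simple paths (vertex lists) in an undirected graph; EDP asks for as many pairs as possible with congestion 1, and congestion minimization asks for all pairs with the smallest maximum edge load. The instance and names are hypothetical.

```python
from collections import Counter


def edge_loads(paths):
    """Count how many paths use each undirected edge; paths are vertex sequences."""
    load = Counter()
    for path in paths:
        for u, v in zip(path, path[1:]):
            load[frozenset((u, v))] += 1
    return load


def congestion(paths):
    """Maximum number of paths sharing a single edge (0 for an empty routing)."""
    return max(edge_loads(paths).values(), default=0)


def is_edge_disjoint(paths):
    return congestion(paths) <= 1


# Hypothetical instance: all three pairs must cross the bottleneck edge (a, b).
routing = [["s1", "a", "b", "t1"], ["s2", "a", "b", "t2"], ["s3", "a", "b", "t3"]]
print(congestion(routing))            # 3
print(is_edge_disjoint(routing[:1]))  # True
```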
Well, despite its long history, what we know here is only an O(√N)-approximation, where N is the size of the graph. For congestion minimization, we only have an O(log N / log log N)-approximation. This is based on randomized rounding; if you know about randomized rounding, this is probably the first algorithm you learned. On the negative side, we have √(log N) hardness for EDP and log log N hardness for congestion minimization, so there are exponential gaps for both problems. Okay. Since we haven't made much progress on EDP, maybe we are asking for too much. The edge-disjointness constraint is really a hard constraint. What if we relax that constraint and say that each edge can be used by C paths? That means we can have congestion C in the graph. This defines our problem, called edge-disjoint paths with congestion, and we say a solution is an α-approximation for EDP with congestion C if it routes OPT/α pairs with congestion C. Here, OPT is the optimum number of pairs you can route with congestion one. So we are comparing ours with the optimal solution with congestion one, but we are allowed congestion C. So what do we know? If C = 1, this is just the normal EDP problem: we have an O(√N) upper bound and a √(log N) lower bound. The randomized rounding procedure also gives us a constant approximation with congestion O(log N / log log N). For general C, we have an upper bound of N^{1/C} and a lower bound of (log N)^{1/(C+1)}. If you look at this trade-off, to get a polylogarithmic approximation, you need polylogarithmic congestion. Then, in a breakthrough result, Andrews showed that to get a polylog N approximation, we only need poly(log log N) congestion, and this result was improved by [indiscernible]. Think of K as equal to N, so those bounds are the same.
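The randomized rounding mentioned here is, in its textbook form, the Raghavan-Thompson scheme: solve the multicommodity-flow LP, decompose each pair's flow into paths, and pick one path per pair at random in proportion to its flow. Each edge's expected load then equals its fractional load, and a Chernoff bound gives congestion O(log N / log log N) with high probability when the fractional congestion is constant. A minimal Python sketch of the rounding step only, assuming the path decomposition is already given (the LP solve and the decomposition are omitted, and all names are hypothetical):

```python
import random
from collections import Counter


def edge_loads(paths_with_weights):
    """Load of each undirected edge when path p carries weight w (weight 1 = integral)."""
    load = Counter()
    for path, w in paths_with_weights:
        for u, v in zip(path, path[1:]):
            load[frozenset((u, v))] += w
    return load


def randomized_rounding(flow_paths, rng):
    """flow_paths[p] lists (path, weight) pairs for pair p, with weights summing to 1.
    Pick one path per pair independently, with probability equal to its weight."""
    chosen = []
    for options in flow_paths:
        paths = [path for path, _ in options]
        weights = [w for _, w in options]
        chosen.append(rng.choices(paths, weights=weights, k=1)[0])
    return chosen


# Hypothetical fractional solution: each pair splits its unit of flow over two s-t paths.
flow_paths = [
    [(["s", "x", "t"], 0.5), (["s", "y", "t"], 0.5)],
    [(["s", "x", "t"], 0.5), (["s", "y", "t"], 0.5)],
]
fractional = edge_loads([pw for options in flow_paths for pw in options])
print(max(fractional.values()))  # 1.0: the fractional (LP) congestion
chosen = randomized_rounding(flow_paths, random.Random(0))
print(max(edge_loads((p, 1) for p in chosen).values()))  # 1 or 2, depending on the coin flips
```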
And for congestion two, we know here we have only √N, which is no better than the congestion-one case. >>: This is a lot -- What was K? >> Shi Li: K is the number of pairs we need to connect. So for congestion two, this result can only give us √N; it is no better than the congestion-one case. This was improved to an N^{3/7}-approximation. So what we have now is congestion two with an N^{3/7}-approximation, and congestion 14 for a polylog K approximation. What we get is here: with congestion two, we get polylog K. This is joint work with [indiscernible]. So we improve both results; you can see we improve all those results, and the only problem left is the congestion-one case. We show that for EDP with congestion two, there is a polylog K approximation algorithm. Let's recall what this means: we can route OPT/polylog K pairs with congestion two, where OPT is the optimum number of pairs with congestion one. Actually, we get a stronger result for free if we change this [indiscernible]. So let me sketch our result. >>: Can you go back to the last slide? >> Shi Li: Originally, there was a [indiscernible] approximation. So OPT is the optimum number of pairs we can route with congestion one, but we get this for free. >>: So suppose the [indiscernible], can you -- >> Shi Li: [indiscernible]. >>: [indiscernible] really important? >> Shi Li: No, [indiscernible] is 100, 1000, this is still true. >>: [indiscernible] can you -- >> Shi Li: Yeah, we thought about it. >>: [indiscernible] on those graphs? I don't know. No? >> Shi Li: Not for that graph, because all those results are based on the LP. For [indiscernible] graphs we still have a huge gap. Yeah, we don't know. So I only have time to sketch our result. We solve the natural LP, then we decompose the instance into many good instances. After this step, we throw away the LP solution, and our algorithm is purely combinatorial.
For each instance, we build a crossbar and solve the problem inside the crossbar; the congestion two comes from the crossbar. So what is a crossbar? Roughly speaking, we have many clusters and many trees. Each tree contains a terminal and one edge from each cluster. This is one tree, this is another tree; there are many trees. And if we look at the set of these edges for one cluster, they are very well connected. Okay. Here are some open problems and future directions. For UFL and k-median, one open problem is: what is the gap between the integral solution with K + 1 open facilities and the LP value with K open facilities? Why is this important? We conjecture that 1 + 2/e should be the right answer for k-median. But the natural LP relaxation is not good enough, because it has an [indiscernible] gap of two. Now we show that it might be enough if we allow K + 1 open facilities. Currently, we don't know of any gap larger than 1 + 2/e for this question. Also, it might be interesting to close the gap for facility location, although the gap is very small now; no gap is better than a gap. There is another variant of k-median called capacitated k-median, where facilities have capacities. For that problem, we don't know any constant approximation, and it would be good to give one. For the EDP part, one big problem is what we can get with congestion one, and this is interesting even for planar graphs: for planar graphs, we can only get √N, so the upper bound is also √N. For the congestion minimization problem, we could either improve the lower bound or improve the upper bound; the lower bounds are interesting, or so I think. Here are my long-term directions; previously, I just listed open problems related to my talk. First, I want to work on many challenging problems in approximation algorithms.
Those are just a few of them. Then, I hope to understand hierarchies better. There are many results related to LP or SDP hierarchies, but most of them are negative, and our k-median result can be viewed as a positive result for using LP hierarchies. Can our techniques be used for other problems? I don't have a candidate problem yet, but it would be great if they could be applied to other problems as well. Also, I hope to understand the limits of LPs for approximation algorithms. Recently, there have been results showing the limits of LPs for solving TSP exactly and for approximating CLIQUE. It would be great if these techniques could be used for other problems. Finally, I hope to get into some other areas, such as online algorithms or streaming algorithms. Thank you. Questions? >>: Most of these, a lot of the results, show that hierarchies are [indiscernible]. >> Shi Li: Yeah, that's for hierarchies, not for general LPs. Those two results are for general LPs. >>: Yes, but are there problems where, in some sense, [indiscernible] does not look at maybe a smarter [indiscernible]? >> Shi Li: No, I don't know. We don't know any such -- I don't know of any such result. Maybe because we don't have a good understanding of LP hierarchies. >>: So for the routing problem, directed graphs, so this is -- >> Shi Li: Oh, for directed graphs, yeah. >>: Is it solved? >> Shi Li: This is solved. For EDP, √N is tight. For congestion minimization, log N / log log N is tight. >>: Your result for -- so you have this congestion two; is it equivalent to say that if you have an instance in which every edge is [indiscernible] -- >> Shi Li: Yes. >>: Then you get a [indiscernible] approximation on that? >> Shi Li: Yes, if the graph has the property that every edge has a [indiscernible] edge, then you have a polylog approximation. >>: I guess that's what I was asking [indiscernible] because this class is certainly [indiscernible]. >>: Yes, but it's smaller.
>> Shi Li: Then there is a gap, at least for the LP. >>: What is the status of local search algorithms for facility location? >> Shi Li: For k-median, local search gives three plus epsilon; that result is based on local search. And for k-median -- no, for facility location -- >>: And the three there is tight? >> Shi Li: Yes, that is tight. There is a [indiscernible] gap of three. For facility location, I think it's 1.7 or something. I have many results here. I think this first one is based on local search, and I think this -- I don't know which one, but there's one result based on local search. But all the ones here are based on LP rounding. >>: You didn't talk about this 1.488. Did you say something about that? >> Shi Li: Yes, it's LP rounding. Here, [indiscernible] gets a 1.5, and it's based on selecting a single number, γ_F. Notice that we have a figure here. What [indiscernible] did was combine a result here with a result here, connecting the two results, to get 1.5. What I showed is that instead of selecting one number here, I can select a distribution of numbers, which improves the result. >>: [indiscernible]. >> Shi Li: Just LP. >>: And is there any hope to show some kind of -- do you just see hardness or some other [indiscernible] -- >> Shi Li: For this problem? >>: For the facility location problem. >> Shi Li: Facility location. We don't know. At least, we can get a tight bound for now, because the LP gap is 1.463, and this is also a [indiscernible] result. And now we are very close to the hardness result, which means this should be the right answer. >>: [indiscernible]. >> Shi Li: Yeah, but even if you erase the LPs, this is from set cover. It's from max coverage. >>: [indiscernible] together lower bound. >> Shi Li: Oh, lower bound. >>: Lower bound to get the graph before. >> Shi Li: [indiscernible]. So the hardness impacts the LP. >>: [indiscernible].
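The local search approach discussed in this exchange can be illustrated with its simplest variant, single-swap local search for k-median. This Python sketch is a hypothetical illustration and is not the algorithm behind the 3 + ε bound: in the analysis of Arya et al., single swaps give a 5-approximation, and the 3 + ε guarantee needs swapping up to p facilities at once (ratio 3 + 2/p). A polynomial running time also requires accepting only sufficiently large improvements, which this sketch does not enforce.

```python
def kmedian_cost(dist, clients, S):
    """Sum over clients j of the distance to the nearest facility in S."""
    return sum(min(dist[j][i] for i in S) for j in clients)


def local_search_kmedian(dist, facilities, clients, k):
    """Single-swap local search: repeatedly replace one open facility by a closed one
    while that strictly lowers the k-median cost; stop at a local optimum."""
    S = set(facilities[:k])
    current = kmedian_cost(dist, clients, S)
    improved = True
    while improved:
        improved = False
        for out in sorted(S):
            for inn in facilities:
                if inn in S:
                    continue
                T = (S - {out}) | {inn}
                cost = kmedian_cost(dist, clients, T)
                if cost < current:
                    S, current, improved = T, cost, True
                    break
            if improved:
                break
    return current, S


# Hypothetical toy instance: six points on a line, every point is a facility and a client.
xs = [0, 1, 2, 10, 11, 12]
dist = [[abs(a - b) for b in xs] for a in xs]
points = list(range(len(xs)))
print(local_search_kmedian(dist, points, points, k=2))  # (4, {1, 4})
```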
>>: This number comes from solving some -- >> Shi Li: Which number? >>: The 1.463. >> Shi Li: Yes, this number: γ_C equals -- and 1 + 2/e also comes from this curve. >>: And do you get the formula for the red curve as well? >> Shi Li: For the red curve, there's a turning point here. If you can't move this point to here, then we solve the -- >>: Is there a formula for this red curve as well? >> Shi Li: For this side, yes. For this side, no; this side is based on the computer-assisted -- >>: Uh-huh. >> Shi Li: We know this is two and this is 1.488; the analysis is complicated, but we know it's convex. That's all we know. Okay. >>: [indiscernible] analysis gives you rigorous bounds on it, right? >> Shi Li: No, I only solve -- I'm not satisfied with a computer-assisted proof, so I give a -- >>: A clarification of what you mean when you say computer-assisted -- >> Shi Li: No, I solve an LP. Solving the LP gives us the solution, but then I manually give the solution and say this is a bound. >> Kostya Makarychev: More questions? Let's thank him again.