
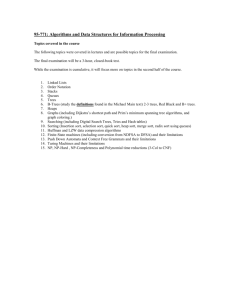


>>: Okay. So our next speaker is Dr. Rizzolo. He just moved from San Francisco to Seattle. He got his Ph.D. from U.C. Berkeley under Jim Pitman. He's now an NSF post-doc and research associate at UW. And he's going to talk about Schröder's problems and random trees. >> Douglas Rizzolo: Thanks. I'd like to thank the organizers for inviting me, and the NSF for funding this work on any number of grants. And I'd like to thank Izof [phonetic] for giving such a nice first talk. I would have appreciated it if the bar had been set lower [laughter]. So as the title suggests, I'm going to talk about Schröder's problems and random trees. So Schröder's problems are a class of four problems that were introduced a while ago by Schröder in the 1870s, in a paper that everybody cites, but I've never seen physical evidence of it existing, or even really of the journal it was published in. But everybody propagates the story that it was published in 1870, so I'll stick with that. So it's four problems about bracketings of words and sets. So the first is a very common problem that comes up in a lot of discrete math courses: how many binary bracketings are there of a word of length N? So these are the bracketings of length four. They're pretty easy to get ahold of. These are one of the classical examples of things that are counted by the Catalan numbers. So the second problem is: what if you remove the condition that the bracketings be binary, what if you just had arbitrary bracketings of words of length N? You require the bracketings to be nonredundant and nonempty, so there are finitely many of them. For these you can get a general solution. These have been sort of known for a while. This is just taken off the On-Line Encyclopedia of Integer Sequences. They're all there, you can search them by name if you want to learn more about them. And so these first two problems are problems of bracketing words. So the elements are ordered. But you can ask the same problem for bracketings of sets.
So how many bracketings of a set of size N are there? For binary ones, you can again get a nice formula. And the last problem is: what if you remove the binary condition for bracketings of sets? You can do this. Again, you can get a much less nice formula. Looking at this, from the formula it's pretty apparent that doing combinatorics for binary things is much easier than the general case. But you can always get formulas, whether or not they're terribly helpful. >>: I'm sorry, I missed the difference between problem one and problem three, could you just -- >> Douglas Rizzolo: Yes. >>: Go back and -- >> Douglas Rizzolo: Sorry. Stop me if I go too fast at any point. So in these, you're bracketing sets. So the elements aren't ordered. Right? So you can sort of see a difference. For example, for these -- >>: These, they're the same number. I get it. >> Douglas Rizzolo: No, here I've only shown five of the 15. There were too many of them to list all of them. >>: I see. >> Douglas Rizzolo: It's sort of indicated underhandedly in the language. These are some of the bracketings of size four. >>: You could have one and three in a set and two and four in a set. >> Douglas Rizzolo: Exactly. So the basic change between problems one and two and problems three and four is that you're trading in an order structure for a labeling structure. And part of what Jim Pitman, who is my co-author in a lot of this work, has done is figure out what the relation is between labeled structures and ordered structures of these types. Okay. So what we're interested in is what these look like if you pick one uniformly at random for some large N. And the way we're going to look at this is we're going to cast it in the setting of random trees, which is a fairly well studied field. So the bijection we're going to use with trees is in some sense the obvious one, although there are lots of bijections between these problems and various models of trees.
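The four counts can be checked for small N with a short recursion. This is a minimal sketch, not taken from the slides: the function name `schroeder_count` and its two flags are hypothetical, and the recursion is just one way to count by splitting off the first top-level group (for words) or the group containing the smallest element (for sets).

```python
from functools import lru_cache
from math import comb


def schroeder_count(n, labeled, binary):
    """Count bracketings of n items.

    labeled=False counts bracketings of a word, labeled=True of a set;
    binary=True allows only binary bracketings.
    """

    @lru_cache(maxsize=None)
    def trees(m):
        # Number of bracketings of m items (a single item counts as one).
        if m == 1:
            return 1
        total = 0
        for j in range(1, m):
            # First group has j items; for sets it must contain the
            # smallest element, so choose its other j - 1 members.
            weight = comb(m - 1, j - 1) if labeled else 1
            rest = trees(m - j) if binary else groups(m - j)
            total += weight * trees(j) * rest
        return total

    @lru_cache(maxsize=None)
    def groups(m):
        # Ways to split m items into one or more further top-level groups.
        total = trees(m)  # all remaining items form a single group
        for j in range(1, m):
            weight = comb(m - 1, j - 1) if labeled else 1
            total += weight * trees(j) * groups(m - j)
        return total

    return trees(n)


for labeled in (False, True):
    for binary in (True, False):
        print(labeled, binary, [schroeder_count(n, labeled, binary) for n in range(1, 6)])
```

For N = 4 the binary set case gives 15, matching the "five of the 15" mentioned in the exchange above.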
So, for example, the first problem is going to correspond to rooted ordered binary trees, sort of in the following way: there's a somewhat obvious nesting structure that these bracketings have, and those nestings become the vertices and leaves correspondingly. This is what our trees look like for binary word bracketings. And we can do binary set bracketings, and here it's sort of clear that now we have labels, and though you may not be able to tell from the picture, these trees aren't ordered. They're rooted. And similarly we can do this for the fourth problem, and you can see the only difference is that we're allowing sort of arbitrary degrees instead of just binary degrees. Okay. So to look at these, what we're going to do is frame these as problems of looking at conditioned Galton-Watson trees. And Galton-Watson trees have been fairly well studied. They've come up a bit, although in the context of conditioning on the number of vertices, never in the context of the number of leaves, which is what we have in this particular case. So let's recall that the definition of a Galton-Watson tree is: you just have some offspring distribution and you take the distribution on trees that makes the outdegrees of the vertices as independent as possible. So you have this product form over the degrees in the tree. And it's sort of been known for a while that a lot of combinatorial models fit very nicely into the Galton-Watson tree framework. For example, if the offspring distribution is geometric and you take a Galton-Watson tree with that offspring distribution conditioned to have N vertices, what you get is a uniform random tree on N vertices. So our hope was to be able to do something like this with the trees appearing in Schröder's problems. And, in fact, you can. Jim Pitman and I did this, as did Curien and Kortchemski, at around the same time.
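The product form and the geometric example can be made concrete with a small check. This is an illustrative sketch under stated assumptions: trees are encoded as nested tuples (a vertex is the tuple of its children, a leaf is `()`), and `geometric` is the mean-one geometric law with P(i) = 2^-(i+1), chosen here for illustration since the transcript does not give the parameter.

```python
from fractions import Fraction


def gw_probability(tree, xi):
    """Probability that a Galton-Watson tree equals `tree`.

    The probability is the product of xi(outdegree) over all vertices.
    """
    p = xi(len(tree))
    for child in tree:
        p *= gw_probability(child, xi)
    return p


def geometric(i):
    # Assumed offspring law: P(i children) = 2^-(i+1), which has mean one.
    return Fraction(1, 2 ** (i + 1))


cherry = ((), ())   # root with two leaf children (3 vertices)
path = (((),),)     # root, one child, one grandchild (3 vertices)

print(gw_probability(cherry, geometric), gw_probability(path, geometric))
```

Both three-vertex trees get the same probability, because the product only depends on the number of vertices; conditioning on that number therefore gives the uniform distribution.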
For the first two problems it's fairly straightforward, because the trees in Schröder's problems are ordered and Galton-Watson trees are rooted ordered trees. They're the same type. And you can just go through it. And what you get are these two offspring distributions. So in the binary case you get the only thing you can really have, which is the uniform distribution on outdegree 0 and outdegree 2. And in the general case you get this somewhat stranger offspring distribution where it's not necessarily clear where it's coming from, but the key point is that for i greater than or equal to 2 you have some number raised to the power i minus 1. And the reason that's going to be nice is just because if you sum over the internal vertices of a tree their outdegree minus 1, what you get is just a formula in terms of the number of leaves. So let me give a quick proof of this to show how these things go. If you look at the trees appearing in Schröder's second problem, if you take a Galton-Watson tree with that offspring distribution, you just get this product form, and you can compute the sum in the exponent of the second one and you get a formula in terms of the number of leaves. What this says is that if you condition on the number of leaves, everything has the same probability, so it's uniform. And that's basically how that goes for the first two problems. Now, for the second two problems, it's a little more difficult, because you need a way to get from rooted ordered trees, which all Galton-Watson trees are, to rooted labeled trees. And this is sort of a classical problem. It's been done in the case where you're labeling vertices, and in that case it turns out to be a bit easier, but you can also do it when you're labeling leaves.
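The quick proof for the second problem can be written out as follows. This is a sketch under an assumption: the slide's exact constants are not in the transcript, so the offspring law is written with placeholder symbols $p_0$ and $b$, keeping only the shape described in the talk (no vertices of outdegree one, and a number raised to the power $i-1$ for $i \ge 2$).

```latex
% Assumed shape of the offspring law (p_0 and b are placeholders):
%   \xi(0) = p_0, \qquad \xi(1) = 0, \qquad \xi(i) = b^{\,i-1} \ \text{for } i \ge 2 .
% Let t be a rooted ordered tree with n leaves, no vertex of outdegree 1, and vertex set V.
\begin{align*}
\sum_{v \in V} \deg(v) &= |V| - 1
  && \text{(every non-root vertex has exactly one parent)} \\
\sum_{v \text{ internal}} \bigl(\deg(v) - 1\bigr)
  &= (|V| - 1) - (|V| - n) = n - 1 \\
\mathbb{P}(T = t) = \prod_{v \in V} \xi(\deg v)
  &= p_0^{\,n} \prod_{v \text{ internal}} b^{\deg(v) - 1}
   = p_0^{\,n}\, b^{\,n-1}
\end{align*}
```

The last expression depends on $t$ only through $n$, so conditionally on having $n$ leaves every admissible tree is equally likely.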
And the basic idea is the following sort of transformation: you take some rooted ordered tree and an ordering of the numbers 1 through the number of leaves in the tree, and you just label the leaves from left to right. It's one of the first things you might try. It wasn't one of the first things we tried for some reason. I can't tell you why. And the idea here is if you now take -- >>: Can you go back? >> Douglas Rizzolo: Uh-huh. So -- >>: Okay. >> Douglas Rizzolo: It might not be clear from the picture. But the tree on the right-hand side is unordered but labeled. All right. So in this case, for binary trees, it's the one and only critical Galton-Watson offspring distribution on binary trees. And in the case of the fourth problem, you get this even stranger distribution. But it turns out that if you take a Galton-Watson tree with one of these distributions and an independent uniform ordering of the numbers 1 through N, and label the leaves from left to right by this ordering and then forget the order, you get the trees appearing in the third and fourth problems respectively. So what this does is basically cast looking at Schröder's problems as looking at random Galton-Watson trees conditioned on their number of leaves. So looking at Galton-Watson trees conditioned on the number of leaves is sort of a recently developed thing. There are at this point four or five distinct approaches to dealing with them when they're large. I'm going to tell you about what I think is mostly the right approach, or the easiest and most intuitive approach, to dealing with conditioning Galton-Watson trees on their number of leaves. Okay. So I should mention perhaps where these have come up before. The trees appearing in the second problem are rooted ordered trees with no vertices of outdegree one.
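The label-then-forget step can be sketched in a few lines. The encoding is an assumption made for illustration: ordered trees are nested tuples with `()` for a leaf, and the unordered labeled result is a nested `frozenset` whose leaves are integers. The function names are hypothetical.

```python
import random


def count_leaves(tree):
    """Number of leaves of an ordered tree given as nested tuples."""
    return 1 if not tree else sum(count_leaves(c) for c in tree)


def label_leaves(tree, labels):
    """Label the leaves left to right with `labels`, then forget child order."""
    it = iter(labels)

    def walk(t):
        if not t:
            return next(it)                      # a leaf takes the next label
        return frozenset(walk(c) for c in t)     # children become an unordered set

    return walk(tree)


def random_labeled_tree(tree, rng=random):
    """Apply an independent uniform ordering of 1..n to the leaves."""
    n = count_leaves(tree)
    return label_leaves(tree, rng.sample(range(1, n + 1), n))


left_heavy = (((), ()), ())
right_heavy = ((), ((), ()))
print(label_leaves(left_heavy, [2, 1, 3]) == label_leaves(right_heavy, [3, 1, 2]))
```

Two different ordered trees with different leaf orderings can give the same unordered labeled tree, which is why the uniform ordering is needed to get the right law after the order is forgotten.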
These recently came up in studying non-crossing arrangements in the plane and looking at Brownian triangulations and things like that in work of Curien and Kortchemski, which is sort of where they came at these from. Okay. So how do we deal with these? What is there to say about Galton-Watson trees conditioned on their number of leaves? So the way I'm going to approach the problem, or at least the way we're going to approach it today, is by relating trees with N leaves to trees with N vertices in something of a nonobvious way. So the way we're going to do it is we're going to start with a rooted ordered tree with N leaves and we're going to transform it into a tree with N vertices by the following procedure. So we're just going to label the leaves from left to right, in increasing order. Once we've done this, we're going to label all of the edges in the tree. And the labeling we're going to do is we're going to label each edge by the index of the smallest leaf in the tree above the edge. So if you were to remove the edge and look at the tree above it, the tree further away from the root, you take the smallest leaf and label the edge by that. And then the transformation is to collapse along these spines to get a tree. So what's happening here is, if you look at the vertex labeled one, that's coming from the spine from the leaf labeled one to the root, and the vertices attached to it are all of the spines that touch the one labeled one. So we have the spines labeled two, five and six. And you sort of repeat this procedure and it collapses the tree into a tree with N vertices. So it's worth noting, this is something of a classical transformation. This is one of the many known bijections between binary trees with N leaves and trees with N vertices. So this is something of a classical transformation, at least in the case of binary trees. And we're going to do it just sort of in general.
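The spine-collapsing procedure has a short recursive description, sketched here under assumptions: trees are nested tuples with `()` for a leaf, and the six-leaf input below is a hypothetical tree chosen to be consistent with the spines mentioned in the talk (two, five and six touching one), not the tree on the slide. The recursion uses the fact that the smallest leaf above an edge is the left-most leaf of the subtree above it, so the spine of a subtree's left-most leaf runs through its first child.

```python
def collapse(tree):
    """Collapse each spine of an ordered tree into a single vertex.

    The returned root stands for the spine of the left-most leaf; the
    spines that touch it become its children, lowest attachment first.
    """
    if not tree:
        return ()                         # a lone leaf becomes a lone vertex
    first, *rest = tree
    # The left-most spine continues into `first`; every other child
    # starts a new spine that attaches to it at this vertex.
    return collapse(first) + tuple(collapse(c) for c in rest)


# Hypothetical six-leaf tree: leaves are numbered 1..6 from left to right.
example = (((), ((), (), ())), (), ())
print(collapse(example))   # vertex 1 with children 2, 5, 6; vertex 2 with children 3, 4
```

The output has as many vertices as the input has leaves, and restricted to binary inputs the map sends the two binary trees with three leaves to the two distinct ordered trees with three vertices.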
And what's nice about this is that this transformation actually preserves the law of Galton-Watson trees. If you do this to a Galton-Watson tree, what you get out is again a Galton-Watson tree. So here's the theorem. It basically says that if you start with a Galton-Watson tree and do this transformation, the result is again a Galton-Watson tree but with a different offspring distribution. You can write down explicitly what that offspring distribution is. And there are other nice properties: if the tree you started with is critical, that is, the offspring distribution has mean one, then your new tree is also critical, its offspring distribution has mean one, and, again, if you start with something that's finite variance you end up with something that's finite variance. And that last statement can be extended: if you start with something in the domain of attraction of a stable law, you get something in the domain of attraction of the same stable law. Okay. So just to repeat, to say in a different way that this is a bijection from binary trees to general trees: if you take the trees appearing in Schröder's first problem and do this, what you get is a uniform tree on N vertices. And I'll mention that again, as something interesting comes out of this study because of it. >>: Can you explain the bijection again? >> Douglas Rizzolo: The bijection? Okay. Yeah. So this transformation, you start with -- right, you just label the leaves and you label the edges. And if you look at the spine now labeled one, you attach to it as vertices all of the spines that touch it. So two touches it, five touches it, six touches it, and those become the children of one. So you get two, five and six. And similarly, if you look at the spine two, that's touched by the spines three and four. So you get as children of two, three and four, in the order that they're appearing as they touch it. And you go through with five and six, they're leaves.
And that's the transformation in general. >>: One with longer by one edge, does that make a difference? >> Douglas Rizzolo: No, it's not a bijection on general trees. If you restrict to binary trees, you get a bijection from binary trees with N leaves to trees with N vertices. So this in general is definitely not a bijection. But if you do this only to binary trees, then it is a bijection. And that's because you can basically just reverse the process. If you require everything to be binary, you just sort of expand each vertex: you take its degree and you turn it into a path of that length and attach things as required. And that's the only way to make it binary. >>: You map Galton-Watson trees to Galton-Watson trees. For every Galton-Watson tree on the right, is there a Galton-Watson tree on the left? Do you get all such Galton-Watson trees out of this? >> Douglas Rizzolo: I'm fairly sure the answer is no. When you write down what the distribution is of the tree on the left, it's what I would call a compound geometric distribution. Right? You're looking at a sequence of independent things until you see the first 0. And then you're summing those up. And I quite frankly would be surprised if you got everything from a procedure like that, but I don't actually have a proof that you don't. Okay. So are there any other questions about this transformation? Okay. So one thing this does is, by this remark, it gives you a connection between uniform binary trees with N leaves and just regular uniform trees with N vertices, and as a consequence of what I'm going to say, it turns out that a uniform tree with N vertices is almost a binary tree with N leaves. And there's a very explicit coupling of the two such that the Gromov-Hausdorff distance between them is small. Okay.
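One way to read the "compound geometric" description is the following. This is a sketch reconstructed from the verbal description and the spine picture, not copied from the slide; the symbols $\hat\xi$, $\tau$, $p_0$ and $\mu$ are notation introduced here.

```latex
% \xi_1, \xi_2, \dots are i.i.d. with the original offspring law \xi,
% p_0 = \mathbb{P}(\xi = 0) > 0 and \mu = \mathbb{E}[\xi].
\begin{align*}
\tau &= \inf\{k \ge 1 : \xi_k = 0\},
&
\hat\xi &\overset{d}{=} \sum_{k=1}^{\tau - 1} (\xi_k - 1) .
\end{align*}
% Given \tau, the terms with k < \tau are i.i.d. with the law of \xi given \xi \ge 1, so
\begin{align*}
\mathbb{E}[\hat\xi]
  = \mathbb{E}[\tau - 1]\;\mathbb{E}[\xi - 1 \mid \xi \ge 1]
  = \frac{1 - p_0}{p_0} \cdot \frac{\mu - (1 - p_0)}{1 - p_0}
  = 1 + \frac{\mu - 1}{p_0} .
\end{align*}
```

Under this reading $\mathbb{E}[\hat\xi] = 1$ exactly when $\mu = 1$, in line with the statement that criticality is preserved. In the binary case $\xi(0) = \xi(2) = \tfrac12$ every term equals 1, so $\hat\xi = \tau - 1$ is geometric with $\mathbb{P}(\hat\xi = k) = 2^{-(k+1)}$, the offspring law that gives uniform trees on N vertices.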
And the way we're going to get to something like that is through the depth-first processes of these trees. One of the nice things about ordered trees is that you can make use of the order structure to get bijections with conditioned random walks, something you can't do as nicely with labeled trees, which is sort of why moving to the Galton-Watson framework is useful even for dealing with the third and fourth problems, which are not inherently ordered. Okay. So one of the basic depth first processes is the depth first walk. Here's a formal definition, but the basic idea is you start at the root and then you go to its left-most child; from there you go to that one's left-most child, unless it doesn't have any more children that haven't been visited, at which point you go back to the parent, and you proceed around the tree in this manner. And this gives a nice way to order the vertices of the tree, sort of the depth first order. And so this is the order we're always going to be using. If I have a rooted ordered tree and I list the vertices V1 through VN, these are going to be listed in order of appearance on this depth first walk. So once we have that, the easiest depth first process to deal with is the depth first queue. So you take a tree. The depth first queue at step N is just sort of the random walk that sums up the degree of the vertex minus one, as you are sort of going around. I should mention that degrees are always outdegrees here. So it's the number of vertices that are adjacent to it and further from the root. Okay. So you get this as the depth first queue. And the reason this is easy to deal with for Galton-Watson trees is that it's a first passage bridge from 0 to minus 1. So if you have a Galton-Watson tree with offspring distribution xi, then SN, as I said, is just a first passage bridge from 0 to minus 1 of a random walk with the shifted step distribution. Okay.
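The depth first order and the depth first queue can be computed directly. A minimal sketch, assuming trees are encoded as nested tuples with `()` for a leaf; the function names are illustrative.

```python
def preorder_degrees(tree):
    """Outdegrees of the vertices listed in depth first order."""
    degrees = [len(tree)]
    for child in tree:
        degrees.extend(preorder_degrees(child))
    return degrees


def depth_first_queue(tree):
    """Walk S_0 = 0, S_k = sum over the first k vertices of (outdegree - 1)."""
    s, walk = 0, [0]
    for d in preorder_degrees(tree):
        s += d - 1
        walk.append(s)
    return walk


tree = (((), ()), ())            # a binary tree with three leaves
walk = depth_first_queue(tree)
print(walk)                      # [0, 1, 2, 1, 0, -1]
```

The walk stays non-negative until its last step, where it hits -1 for the first time, which is the first passage bridge property mentioned above.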
So this is an example of the depth first queue of a random binary tree with 11 leaves. Okay. So the reason for introducing these depth first queues as the first step is that the transformation I was discussing before has a fairly nice action on the depth first queues. So if you look at it, what happens is the following: you take the depth first queue and suppose you only observe it when it steps down. You only observe it after negative steps. And you just take that walk. That's the walk that you have in red here. And then you just sort of compress that, right? This is just a time shift of the red walk on the previous slide. This again is a first passage bridge. And it is in fact the depth first queue of the transformed tree. Okay. So that's a fairly straightforward action on the depth first queues. And it also shows you that the two trees really aren't that far apart in terms of the distance between their depth first queues, because you're basically just waiting until you have steps down, and really, how much could go wrong in between the steps down of one of these walks? The answer is a lot. But it turns out that it doesn't in this case, because things are pleasant. Okay. So what we can get out of this is sort of this theorem. And I apologize for having so much text on a single slide, but there was no escaping it. So what this theorem says is that if you take a Galton-Watson tree with a finite variance, mean 1 offspring distribution and you look at the transformed tree and you look at their depth first queues, this gives you a coupling between the depth first queues of those trees, and if you scale appropriately, the joint distribution of the two processes converges to the same Brownian excursion. Okay. So the thing to note on this is that this is the same Brownian excursion here and here. They're converging to the same thing.
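The action on depth first queues can be checked on a small example. This sketch repeats compact versions of the spine collapse and the queue so that it runs on its own; the nested-tuple encoding and the six-leaf test tree are assumptions made for illustration.

```python
def collapse(tree):
    """Spine collapse: the left-most spine absorbs the spines attached to it."""
    if not tree:
        return ()
    first, *rest = tree
    return collapse(first) + tuple(collapse(c) for c in rest)


def depth_first_queue(tree):
    """Partial sums of (outdegree - 1) over the vertices in depth first order."""
    walk, stack = [0], [tree]
    while stack:
        vertex = stack.pop()
        walk.append(walk[-1] + len(vertex) - 1)
        stack.extend(reversed(vertex))   # visit the left-most child next
    return walk


def observed_after_down_steps(walk):
    """Keep the starting value and the values right after each negative step."""
    return [walk[0]] + [walk[k] for k in range(1, len(walk)) if walk[k] < walk[k - 1]]


tree = (((), ((), (), ())), (), ())
original = depth_first_queue(tree)
print(original)
print(observed_after_down_steps(original))
print(depth_first_queue(collapse(tree)))
```

The last two printed walks coincide: each leaf contributes one down step, and the up steps taken between consecutive leaves add up to the outdegree of the corresponding collapsed vertex.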
That's the joint distribution of the two. And the way to prove something like this -- let me remark, before I remark on the proof, that it holds under weaker conditions: you can prove sort of the analogous theorems for offspring distributions that are in the domain of attraction of a stable law. You can do this coupling and make it work, but it's considerably more difficult to do so. So the way we do it is this sort of proposition showing that the uniform distance between the two depth first queues is small. And that basically comes just by looking at the picture. The whole thing sort of comes down to looking at the time change: how far apart are the steps down? Not that far apart. You can basically scale those and have them go to the identity. And then if you look at the distances between the two walks, well, I mean, the walks just aren't going to get far apart. They don't have enough time to get far apart when you're doing this. And this sort of works out because in the limit we're going to get standard Brownian excursion, which is continuous. And basically the distance between these two walks is controlled by the modulus of continuity. And that's going to 0. So we can get this type of proposition connecting them. >>: How far do they really get apart? This loop in, loop back out? >> Douglas Rizzolo: Oh, yeah, this is not nearly the strongest statement you can have. In general, for finite variance, it might be. In our cases, we have some exponential moments on the offspring distribution. So you can do a moderate deviations type thing. You can get, I think -- I think you can put an N to the one-fourth plus epsilon and get decay of order E to the minus alpha N to the epsilon. >>: [inaudible]. >> Douglas Rizzolo: Should be longer. Yes. I suspect it could be. I'm going to say I haven't actually -- I haven't sort of looked into how tight you can get these tails assuming exponential moments.
But there is sort of obvious theory there that you could use to try to work that out. So existing deviation theorems could give you rates for something like this. Okay. So once we have that -- so that's sort of the depth first queue. What its convergence says about the resulting tree isn't that obvious. It tells you some things, but it doesn't tell you that the whole tree converges, say, in a Gromov-Hausdorff sense. It just sort of gets you close to a result like that. To get the convergence of the tree in a Gromov-Hausdorff sense, or as a whole, you need to look closer at the contour process. So the contour process is just the process that goes along recording the distance from each vertex to the root as you go along the depth first walk. So that's what this definition tells you. And the nice thing about this is, if you assume that you have some exponential moments of your offspring distribution, you get these sorts of moderate deviations for the distance between the depth first queue and the contour process of a conditioned tree. So this theorem, when TN is a Galton-Watson tree conditioned to have N vertices, is due to Marckert and Mokkadem in around 2003. And more recently it was done in the case of N leaves, and you can basically just reduce it to their proof. So getting it for trees conditioned on N leaves, once you have it for trees with N vertices, isn't so bad. One thing I should mention, though, since we have this for both: what this tells you is that the contour process of a uniform binary tree with N leaves and the contour process of a uniform tree with N vertices can be coupled so that they're very close together.
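The contour process of a finite tree can be sketched as follows, again assuming the nested-tuple encoding with `()` for a leaf. The walk records the height at every step of the depth first walk, so each edge is crossed once going up and once coming back.

```python
def contour(tree, height=0):
    """Heights visited by the depth first walk around an ordered tree."""
    heights = [height]
    for child in tree:
        heights.extend(contour(child, height + 1))  # go up into the child
        heights.append(height)                      # come back to this vertex
    return heights


cherry = ((), ())      # root with two leaf children
path = (((),),)        # root, child, grandchild
print(contour(cherry))  # [0, 1, 0, 1, 0]
print(contour(path))    # [0, 1, 2, 1, 0]
```

For a tree with n vertices the list has 2(n - 1) + 1 entries, starts and ends at 0, and moves by plus or minus one at each step.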
You have these sorts of moderate deviations for the distance between them; at least, as Yuval was mentioning, you can probably do better than this. But still this tells you that a uniform binary tree with N leaves is in a very strong sense almost a uniform tree with N vertices, which I think is something of an interesting coupling. So once we have convergence of the contour process, we still want to say something about the tree itself, especially for the third and fourth problems, which aren't naturally ordered. So convergence of the contour process is nice, but it's not really what you're interested in in the first place. So the way we're going to make rigorous something about convergence of the whole tree is Gromov-Hausdorff-Prokhorov convergence. So we're going to let MW be the set of equivalence classes of compact metric measure spaces that are pointed, and we're going to define the Gromov-Hausdorff-Prokhorov distance between them as usual. "Usual" is not quite right, this is not the usual definition, but this is the one I'm using: you minimize the distance between the roots, the Hausdorff distance between the sets and the Prokhorov distance between the measures over all metric spaces and isometric embeddings of the two into a common metric space. I should mention that, set-theoretically speaking, this slide is complete nonsense. None of that means anything in ZF, with or without choice: quantifying over all metric spaces, several times over, you just can't do that. But there is a way to formalize this in terms of classical Zermelo-Fraenkel set theory. So I mention this because usually it doesn't matter that you can't formalize this. But every now and again you'll find people getting in trouble because of that. So I'll just mention that you can in fact formalize this. Okay.
And the nice thing is that this metric, this Gromov-Hausdorff-Prokhorov metric, plays nicely with encoding trees by things like their contour functions, and the interaction is sort of by -- you can take the contour function to define a metric, to define a pseudo metric, rather, on the unit interval, and then when you sort of quotient out to make it a metric you get a tree. That's sort of what this is doing. You're defining the pseudo distance between two points by a function as just the sum of the values of the function, subtract the minimum between them. And there's nothing inherently natural about this definition the first time you see it. But if you sort of write down what this means for a tree, for a finite tree, you'll see that this sort of gives you the graph metric on the tree, and you've just sort of filled in the edges by unit intervals. Okay. So if we take this pseudo metric, we quotient out by it. We get a compact metric tree. And we can push forward any measure we want onto that. And the nice thing is that this map, which takes non-negative continuous functions that start and end at 0, together with measures, to trees, is actually a continuous function. This tells you that sort of everything you want to do in terms of composing them works nicely. Now I should mention that while this lemma has certainly been in the folklore since at least the early nineties, as far as I can tell it's only been written down in the past several months. So this is sort of the lemma that lets us actually do things with this procedure of constructing metric trees. And the sort of universal object for this setting is the Brownian continuum random tree, which is what happens if you apply our construction to standard Brownian excursion and Lebesgue measure. This gets you the Brownian CRT, which is slightly different from the one that Aldous originally defined, where he had twice Brownian excursion. But this has become the more standard normalization.
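For a finite tree the pseudo metric can be checked by hand. A minimal sketch, assuming the standard form d(s, t) = f(s) + f(t) - 2 min f over [s, t], with the factor of two on the minimum that the spoken description glosses over; the contour lists below are the ones for a two-leaf cherry and a three-vertex path.

```python
def tree_distance(contour, i, j):
    """Pseudo distance between times i and j encoded by a contour function."""
    lo, hi = sorted((i, j))
    # Both points descend to their last common ancestor, whose height is
    # the minimum of the contour between the two times.
    return contour[lo] + contour[hi] - 2 * min(contour[lo:hi + 1])


cherry = [0, 1, 0, 1, 0]   # contour of a root with two leaf children
path = [0, 1, 2, 1, 0]     # contour of root, child, grandchild

print(tree_distance(cherry, 1, 3))   # 2: leaf to leaf through the root
print(tree_distance(path, 1, 3))     # 0: both times sit at the same vertex
```

Times at pseudo distance zero are the ones identified by the quotient, and the remaining distances agree with the graph metric on the tree.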
And sort of coupling this with the theorem about convergence of contour processes, you get sort of the following theorems for convergence in distribution of the trees appearing in Schröder's problems with respect to this Gromov-Hausdorff-Prokhorov topology where the measure we're putting on them is the uniform probability distribution on the leaves and you get this explicit formula available. Okay. And I think that's it. Thanks. [applause]