
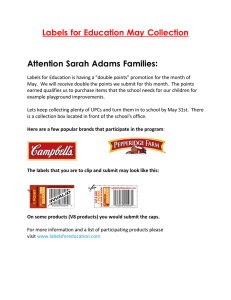


>> John (JD) Douceur: So I would like to introduce Meg Walraed-Sullivan, who is a PhD candidate at UCSD, currently advised by Amin Vahdat and Keith Marzullo. Despite the fact that she has not finished her PhD, she actually already has quite a history with Microsoft. Between her Masters and PhD she worked for a year in the Windows Fundamentals Group working on appcompat, and she's also had two post docs with Doug Terry at MSR SVC. >> Meg Walraed-Sullivan: Internships. >> John (JD) Douceur: Excuse me? >> Meg Walraed-Sullivan: Internships. >> John (JD) Douceur: Internships, excuse me. >>: Very, very short post docs. [laughter]. >> John (JD) Douceur: Very, very short post docs, really short. >> Meg Walraed-Sullivan: Summer postdocs [laughter]. >>: Pre-docs. >> John (JD) Douceur: Pre-docs, thank you. And in fact we have at least one other person in the audience who has been an intern of Doug Terry. For the next few days she will be interviewing with us for a postdoc, got it right that time, position with the distributed systems for operating systems research group. Take it away. >> Meg Walraed-Sullivan: Thanks JD. Today I'm going to talk to you about label assignment in data centers, and this is joint work with my colleagues Radhika Niranjan Mysore and Malveeka Tewari from UCSD, Ying Zhang from Ericsson Research, and of course my advisors. What I really want to tell you about today is the problem of labeling in a distributed network. Anytime we have a group of entities that want to communicate with each other, they are going to need a way to refer to one another. We can call this a name, an address, an ID, a label. I'm going to take the least overloaded of these terms and say a label. To give you an idea of what I mean by a label, historically we have seen labels all over the place. Your phone number is your label within the phone system. Your physical address is your label as far as snail mail goes, and for internet-type things my laptop has a MAC address and an IP address, so those are labels.
So the problem of labeling in the data center is actually a unique problem because of some special properties of data centers. When I talk about a data center network, what I mean is an interconnect of switches connecting hosts together so that they can cooperate on shared tasks. These things, as I'm sure you know, are absolutely massive. We are talking about tens of thousands of switches connecting hundreds of thousands of servers, potentially millions of virtual machines, and just to drive this point home I've got the canonical "yes, data centers are big" picture here, I'm sure you're aware [laughter]. Another property that's interesting about data centers is that we tend to design them with this nice, regular, symmetric structure. We often see multi-rooted trees, and an example of a multi-rooted tree is a fat tree, which I've drawn on this slide. But the problem is, even with the best-laid plans, reality doesn't always match the blueprint. As we grow the data center we're going to be adding and removing pieces. We are going to have links, switches, hosts, all sorts of things failing and recovering. We could have cables that are connected incorrectly from the beginning, or maybe somebody's driving a cart down between racks and knocks a bunch of stuff out and puts it back incorrectly. Basically we don't get to take advantage of this nice regular structure all of the time, or at least we can't expect it to always be perfect. So just to give you some context, what might we label in a data center network? Ultimately we have end hosts trying to cooperate with each other. A host puts a packet on the wire and it needs a way to express where this packet is going. It needs a way to say what it is trying to do. So some things that we might label, for instance, are switches and their ports, host NICs, virtual machines, this sort of thing, just to give you some concept of what we're trying to label here. So for now let's talk about the options that we have today.
On one end of the scale we have flat addresses, and the canonical example of this is MAC addresses assigned at layer 2. Now these things are beautiful in terms of automation. They are assigned right out of the box. They are guaranteed to be unique, for the most part, so we don't have to do any work in assigning them, and that's fantastic. On the other hand, we run into a bit of a scalability issue with forwarding state. Switches have a limited number of forwarding entries that they can store in their forwarding tables. This means that they have a limit on the number of labels that they can know for other nodes in the network. When we're talking about flat addresses, we run into this problem where each switch is going to need a forwarding entry for every node in the network, and at the scale of the data center this is just more than we can fit in our switches today. Now you could argue that we should just buy bigger switches, but remember we are buying tens of thousands of them, so we are probably going to try to stick to the cheapest switches we can. So from there we might consider a more structured type of address, and usually we see something hierarchical; the canonical example of this is IP addresses assigned with DHCP. Here we solve the issue of scalability in the forwarding state. This is because we have groups of hosts sharing IP address prefixes, and so a group of hosts can take their prefix and have it correspond to one forwarding entry in a switch farther away in the network. So we allow sharing, and this compacts our forwarding tables. On the other hand, if we're looking at something like IP, someone's got to sit around and figure out how to make these prefixes all work.
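The forwarding-state argument above can be illustrated with a minimal sketch that is not part of the talk. It assumes a hypothetical layout of 4 racks with 50 hosts each, where a distant switch reaches each rack through one port; the addresses, sizes, and function names are illustrative assumptions only.

```python
import ipaddress

def flat_table(hosts):
    """Flat (MAC-style) addressing: one forwarding entry per host."""
    return dict(hosts)

def prefix_table(hosts, prefix_len):
    """Hierarchical (IP-style) addressing: one entry per shared prefix."""
    table = {}
    for addr, port in hosts.items():
        # strict=False lets us derive the enclosing network from a host address.
        net = ipaddress.ip_network(f"{addr}/{prefix_len}", strict=False)
        table[net] = port
    return table

# Hypothetical topology: rack r holds hosts 10.0.r.1 .. 10.0.r.50,
# and every host in rack r is reached through output port r.
hosts = {f"10.0.{rack}.{h}": rack for rack in range(4) for h in range(1, 51)}

flat = flat_table(hosts)
compact = prefix_table(hosts, 24)
print(len(flat), "flat entries vs", len(compact), "prefix entries")
```

The compaction only works because someone arranged for each rack to own a contiguous prefix, which is exactly the manual configuration burden the talk goes on to describe.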
Someone's got to partition the address space and spread it across the network appropriately, configure subnet masks and DHCP servers, and make sure all of the DHCP servers are in sync with themselves and in sync with the switches, and this is really unrealistic to expect anyone to do. This is a significant pain point at scale. So more recently there have been several efforts to combine the benefits that we see at layer 2 and layer 3 and try to address these things. I'm only going to talk about two today. These are the two that are most related to the work that I'm going to tell you about. These two are PortLand's location discovery protocol, which was done by my colleagues at UCSD, and MSR's DAC, data center address configuration. Now one point I want to make about both of these is that they both somewhat rely on a notion of manual configuration via their use of blueprints. So there is some notion of intent, of what we want the topology to look like, but more importantly, both of these systems rely on centralized control. Now I can make the usual comments about centralized control. There's a bottleneck. There is a single point of failure, that sort of thing, but I think that these things have largely been addressed. What I really want to talk to you about is this idea of how we get the centralized controller connected to all 100,000 nodes. This is a problem. They can't all be directly connected to the centralized controller, otherwise we would have a 100,000-port switch, and that's pretty cool, but we don't have that. So in order to get all of these components to be able to locate and communicate with our centralized controller, we're going to need some kind of separate out-of-band control network, or worse, we're going to have to flood. So you could say an out-of-band control network is not so bad. It's going to be much smaller than our current network, but relatively smaller than absolutely massive is still pretty big.
So someone's going to need to deploy all of the gear for this, and it's going to need to be fault tolerant and redundant, and somebody is going to need to maintain it, so again we run into the problem that we were trying to avoid before. We don't want to have to administer and maintain all this stuff. So let's try to tease out the trade-off that we're really looking at here. What we have is some sort of trade-off between the size of the network that we can handle and the management overhead that we have associated with assigning labels. And this trade-off looks like this. As the network size grows, there's more management overhead, and this is just a concept graph. It's not meant to have any particular slope, maybe it's not even a line, but the trend is up and to the right. The interesting thing about this concept graph is that someplace along the axis of network size we have some sort of hardware limit. To the left of this limit we're talking about networks that are small enough that we can afford a forwarding entry in the forwarding tables per node in the network. And so to the left of the limit we are free to use flat addresses like MAC addresses. However, to the right of this limit, this is where we run out of space in the forwarding tables and we can't fit an entry per node in the network, so we're going to have to embrace some structure in our addresses, some sort of hierarchical label. So just to give you a frame of reference, we talked about Ethernet, and Ethernet sits at the bottom left of this graph: almost no management overhead, but small networks. IP, on the other hand, is going to sit towards the top right. We can have very large networks, but we have to deal with the management overhead. So like LDP and DAC, our goal here is to try to move down vertically from IP to get to this target location where we get to support really large networks with less management overhead.
The way I'm going to show you how to do that today is with some automation. Now of course we all know that there is no free lunch. There is a cost to everything, so what I want to point out is that if we are going to embrace the idea of automation, then the network is going to do things for us on its own time. We can set policies for how it's going to do things and what it's going to do, but ultimately it is going to react to changes for us. Additionally, if we're going to do something with structured labels, that means that our labels are going to encode the topology. That means that when the topology changes those labels are going to need to change, and since the network is taking care of things automatically, it's going to react and change those labels. So this is a concept that we're going to have to embrace, the concept of relabeling, where when the topology changes the network is going to change labels for us. So now that you have a rough idea of what we're trying to do, what I'm going to present to you today is ALIAS. It's a topology discovery and label assignment protocol for hierarchical networks, and our approach with ALIAS is to design hierarchical labels so we get the benefit of the scalable forwarding state that we see with structured addresses, to assign them in an automatic way so we don't have to deal with the management overhead of 100,000 nodes, and to use a decentralized approach so we don't need some sort of separate out-of-band control network. Now the way that I like to do systems research is a little bit different. I like to look at things from the implementation, deployment, and measurement side of things as well as from a more formal side, from a proof and formal verification side. So today what I'm going to be talking to you about is actually two complementary pieces of work.
One is an implementation and deployment type of thing that was in the Symposium on Cloud Computing last year, and one is a more theoretical piece of work from distributed computing last year, and these two things combine to form ALIAS, this topology discovery and label assignment protocol that I've just introduced to you. Just to formalize the space that we are looking at right now, what we often see in data center networks is multi-rooted trees, and what I mean by a multi-rooted tree is a multistage switch fabric connecting hosts together in an indirect hierarchy. Now an indirect hierarchy is a hierarchy where we see servers or hosts at the bottom, at the leaves of the tree, instead of connected to arbitrary switches. We also often see peer links, and by peer links I mean a link that I have drawn horizontally on this picture, so a link connecting switches at the same level. Now one thing I want to call your attention to about this graph, and this is just an example of a multi-rooted tree, is that we have high path multiplicity between servers. What this means is that if I take two servers and look at them, there are probably many paths by which they can reach each other, maybe some link-disjoint or node-disjoint paths. And our labels are ultimately going to be used for communication, so it would be nice if at the very least our labels didn't hide this nice path multiplicity, if they allowed us to use it when we communicate over the top of them. So I want to give you a very brief overview of what ALIAS labels look like, just to give you the concept. In ALIAS, switches and hosts have labels, and labels encode the shortest physical path from the root of the hierarchy down to a switch or a host. There might be multiple paths from the root of the hierarchy down to a switch, and so that switch may have multiple labels.
So to give you an example, that teal switch labeled G actually has four paths from the root of the hierarchy, so it's got four labels, and similarly its neighboring host H, in orange, has four labels as well. So as you can see, we've encoded not only location information in these labels, but also ways to reach the nodes. Now a few slides ago I made a comment about having too many labels, and now I've just introduced the concept of having multiple labels per node, so it would seem I just made the problem worse instead of better. But in a few slides I'm going to show you how to leverage the hierarchy in the topology to compact these things, to make some shared state so that we don't have so many labels. Now almost any kind of communication scheme would work over ALIAS labels. Obviously something that leverages the path encoding in these labels and the hierarchical structure would be the most clever. We implemented something that actually does leverage this, but I won't have much time to talk about that today. So I just want to give you some context in terms of what the forwarding might look like. What you can think of for the purpose of today's talk is some sort of hierarchical forwarding where we pass packets up to the root of the network and then have the downward path be spelled out by the destination label. So if someone wants to get a packet to host H, it just needs to get that packet up to A, B, or C and then let the downward path be spelled out by the destination label of H. So what do these labels really look like and how do we assign them? What does this protocol look like? Well, ALIAS works based on immediate neighbors exchanging state at some tunable frequency. What I mean by immediate neighbors is that we never gossip anything past anyone directly connected to us. And we have four steps in the protocol that operate continuously. Now when I say continuously, what I mean is they operate as necessary.
If something changes, then state begins getting exchanged again. If someone has nothing new to say, then the state exchange just reduces to a heartbeat. And these four steps are going to be the following. First we overlay hierarchy on the network fabric. Remember, when a switch comes up it has no idea what the topology looks like, no idea where it is in the topology, what level it's at, and so on, so it needs to figure this out. Next we're going to group sets of related switches into what we're going to call hypernodes. After that we're going to assign coordinates to switches. A coordinate is just a value from some predetermined domain. For the purpose of this talk we are going to use the English alphabet as the domain and letters as coordinates, but it's just any value from the domain. And lastly we're going to combine these coordinates to form labels. So I'm going to tell you about each of these steps in detail so that they make a little bit more sense. The first thing we have to do is overlay hierarchy. In ALIAS what we say is that switches are at levels one through n, where level one is at the bottom of the tree and level n is at the top. We bootstrap this process by defining hosts to be at level 0. So the way our protocol works is that when a switch notices that it is directly connected to a host, it says hey, I must be at level one. And then during this periodic state exchange with its neighbors, its neighbors say hey, my neighbor labeled itself as level one, I must be level two. And so on for level three, and this can work for any size hierarchy. This can continue up any size tree, and the beauty of this is that only one host needs to be up and running for this process to begin. So now that we have overlaid hierarchy on the fabric, our next order of business is to group sets of related switches into what we are going to call hypernodes.
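The level-discovery step just described can be sketched as a round-based simulation. This is an illustrative sketch, not the ALIAS implementation: the topology, the names, and the rule that a switch sets its level to one more than the lowest level announced by any neighbor are assumptions made for the example.

```python
def assign_levels(neighbors, hosts):
    """neighbors: node -> set of directly connected nodes.
    hosts: set of host names, defined to be at level 0."""
    level = {h: 0 for h in hosts}
    changed = True
    while changed:                      # one pass models one round of state exchange
        changed = False
        snapshot = dict(level)          # what each neighbor announced last round
        for node, adjacent in neighbors.items():
            if node in hosts:
                continue
            known = [snapshot[n] for n in adjacent if n in snapshot]
            if not known:
                continue                # no neighbor has announced a level yet
            candidate = min(known) + 1  # assumed rule: lowest neighbor level plus one
            if level.get(node) != candidate:
                level[node] = candidate
                changed = True
    return level

# Hypothetical three-level fabric: hosts h1, h2; switches s*, a*, c1.
neighbors = {
    "h1": {"s1"}, "h2": {"s2"},
    "s1": {"h1", "a1", "a2"}, "s2": {"h2", "a1", "a2"},
    "a1": {"s1", "s2", "c1"}, "a2": {"s1", "s2", "c1"},
    "c1": {"a1", "a2"},
}
levels = assign_levels(neighbors, {"h1", "h2"})
print(levels)
```

Only hosts are seeded with a level, so the process starts as soon as a single host is up, which matches the bootstrap described in the talk.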
>>: If a switch doesn't have any hosts [inaudible], then it's going to think it's high in the hierarchy rather than low [inaudible] change once [inaudible] comes up? >> Meg Walraed-Sullivan: Yes. That switch will be pretty much useless at the top of the hierarchy. It will potentially have some paths over which it can relay things, but it probably wasn't meant to be at the root of the hierarchy, so it probably won't have a ton of connections, and it will just sit at the top of the hierarchy until a host connects to it and pulls it downward. So what are these hypernode things and what are we trying to do with them? Remember, labels encode paths from the root of the hierarchy down to a host, and so multiple paths are going to lead to multiple labels, and we said we needed a way to aggregate these, to compact these. We're going to do this with hypernodes. What we're going to do is locate sets of switches that all ultimately reach the same hosts on a downward path, so sets of switches that are basically interchangeable with respect to reachability moving downwards. Just because I'm going to use this picture for several slides, I want to point out that we've got a four-level tree of switches here, and we've got the level 0 hosts at the bottom, which are invisible because of space constraints. In this particular picture, as you can see, we've got two level two switches that are highlighted in orange. These two level two switches both reach all three level one switches, and therefore they both reach all of the hosts connected to these level one switches, and so with respect to reachability on the downward path, these two switches are the same. They are interchangeable. So let's formalize this. A hypernode is the maximal set of switches at the same level that all connect to the same hypernodes below, and when I say connect to a hypernode below I mean via any switch in that hypernode, maybe multiple switches in that hypernode.
Of course, this is a recursive definition, so we need a base case, and our base case is that every level one switch is in its own hypernode. Now I think this is actually a tricky concept, so I'm going to go through the example in some detail. We have three level one switches, each in its own hypernode. Then at level two we have two hypernodes. As you can see, this switch to the left, in the light blue or teal, only connects to two of the level one hypernodes, whereas the two switches on the right, as we saw in the previous slide, connect to all three. That's why we have the level two switches grouped this way. If you look at level three, we've got the orange switch all the way over to the right, and that only connects to the dark blue level two hypernode, so it is by itself. On the other hand, we've got the two yellow switches. Those both connect to both level two hypernodes, and so they are grouped together. Now notice that those two yellow switches actually connect to the dark blue hypernode via different members, but they both connect to the same two hypernodes. So to reiterate why we are doing this, remember that hypernode members are indistinguishable on the downward path from the root. They are sets of switches that are ultimately able to get to the same sets of hosts. >>: So they are interchangeable for connectivity but not for load balancing. >> Meg Walraed-Sullivan: Yes, just for reachability. Yep. So now that we've grouped into hypernodes, our next task is to assign coordinates to switches. Remember, a coordinate is just a value picked from a domain; for this talk it's going to be letters picked out of the English alphabet. So let's think about what these coordinates might look like and how they are going to enable communication. Remember, we are ultimately going to use them to form labels, and those labels are ultimately going to be used to route downwards in the tree.
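The recursive hypernode definition can be turned into a short centralized computation for illustration. ALIAS itself computes hypernodes in a decentralized way through neighbor exchanges; the sketch below only shows the grouping rule, on a hypothetical topology shaped like the one walked through above (the switch names `s*`, `t*`, `u*` are made up).

```python
from collections import defaultdict

def hypernodes(levels, children):
    """levels: list of switch lists, levels[0] being level one.
    children: switch -> set of switches it connects to one level below.
    Returns, per level, the list of hypernodes (frozensets of switches)."""
    hn_of = {}                                   # switch -> its hypernode
    result = []
    for i, switches in enumerate(levels):
        groups = defaultdict(list)
        for s in switches:
            if i == 0:
                key = s                          # base case: each level one switch alone
            else:
                # Group by the set of hypernodes reached one level below.
                key = frozenset(hn_of[c] for c in children[s])
            groups[key].append(s)
        level_hns = [frozenset(members) for members in groups.values()]
        for hn in level_hns:
            for s in hn:
                hn_of[s] = hn
        result.append(level_hns)
    return result

levels = [["s1", "s2", "s3"], ["t1", "t2", "t3"], ["u1", "u2", "u3"]]
children = {
    "t1": {"s1", "s2"},                          # reaches only two level one hypernodes
    "t2": {"s1", "s2", "s3"}, "t3": {"s1", "s2", "s3"},
    "u1": {"t1", "t2"}, "u2": {"t1", "t3"},      # same hypernodes, different members
    "u3": {"t3"},
}
result = hypernodes(levels, children)
for depth, hns in enumerate(result, start=1):
    print(depth, [sorted(hn) for hn in hns])
```

Note that `u1` and `u2` land in the same hypernode even though they attach to `{t2, t3}` through different member switches, which is the point made about the two yellow switches.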
So for example, if we have a packet at the root of this tree and it needs to get to a host reachable by one of the switches in the yellow hypernode, it may as well go through either. They both reach the same set of things, so we can forward through either for the purpose of reachability and we'll still get to our destination. On the other hand, if we have a packet at one of the yellow switches and it's destined for the bottom right of the tree, then it needs to go through the dark blue hypernode. It can't go through the one on the left, that teal one. What this tells us is that we don't need to distinguish between hypernode members. Switches in a hypernode can share a coordinate and then ultimately share the labels made out of this coordinate. On the other hand, if we have two hypernodes at the same level that have a parent in common, they are going to need different coordinates, because their parent is going to need to be able to distinguish between them for sending packets downward, since they reach different sets of hosts. So this seems like a somewhat complicated problem, to assign these things, so the question is, can we make it any simpler? What we're going to do is focus on a subset of this graph and a subset of this problem. We're going to look at the level two hypernodes and think about how they might assign themselves coordinates. These level two hypernodes are choosing coordinates, so let's call them choosers. Now they're choosing these coordinates with the help of their parent switches, because their parents are going to say you can't have this coordinate because my other child has it, so let's call their parents deciders. So at this point what we've done is pull out a little bit of an abstraction that shows a set of chooser nodes connected to a set of decider nodes in some sort of bipartite graph. This may not be a full bipartite graph, but it is a bipartite graph.
What we're going to do is formally define this abstraction, this chooser and decider business, then we're going to write a solution for this abstraction, and then finally we're going to map the solution back to our multi-rooted tree. To formalize, what we have is what we officially call the label selection problem, or LSP, and the label selection problem is formally defined as sets of chooser processes, which are shown in green here, connected to sets of decider processes, which I've shown in red, in a bipartite graph. With an eye towards mapping back to our multi-rooted tree, these choosers are going to correspond to hypernodes; remember that hypernodes may have multiple switches, but for now we're just collapsing that into one chooser node. Then the deciders are going to correspond to these hypernodes' parent switches. Now the goal for a solution to the label selection problem is that all choosers eventually select coordinates, that is, we make some progress, and also that choosers that share a decider have distinct coordinates. With an eye towards mapping back to our tree, this is so that hypernodes will ultimately have distinct coordinates when they need to. One example of the coordinates that we might have in this particular graph is the following. Choosers C-1, C-2, and C-3 share deciders, so they all need different coordinates, and that is shown in the example here. On the other hand, for instance, C-1 and C-6 don't share any deciders, so they are free to have the same coordinate if they want to. Formally we define a single instance of LSP as the set of deciders that all share the same set of choosers. This is basically, if you want to look at it this way, the set of maximal or full bipartite graphs that are embedded in this one graph. Here we have three instances of LSP in this graph. Note that a chooser can end up in multiple instances of LSP; for instance, C-4 and C-5 are both in two instances.
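The instance definition above (deciders grouped by the exact set of choosers they share) can be sketched in a few lines. The bipartite graph below is hypothetical; it is not the graph on the slide, and was only chosen so that it also yields three instances with C4 and C5 each appearing in two of them.

```python
from collections import defaultdict

def lsp_instances(decider_to_choosers):
    """Group deciders that see exactly the same set of choosers.
    Returns chooser-set -> set of deciders; each entry is one LSP instance."""
    instances = defaultdict(set)
    for decider, choosers in decider_to_choosers.items():
        instances[frozenset(choosers)].add(decider)
    return dict(instances)

# Hypothetical bipartite graph (assumption, for illustration only).
graph = {
    "D1": {"C1", "C2", "C3"},
    "D2": {"C1", "C2", "C3"},
    "D3": {"C4", "C5"},
    "D4": {"C4", "C5", "C6"},
}
instances = lsp_instances(graph)

def instances_of(chooser):
    """Number of instances a given chooser participates in."""
    return sum(1 for choosers in instances if chooser in choosers)

for choosers, deciders in instances.items():
    print(sorted(deciders), "decide for", sorted(choosers))
```

With one coordinate per instance, a chooser such as C4 would hold as many coordinates as `instances_of("C4")` reports.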
And this raises the question of what we do with choosers when they are in multiple instances. On one hand, we could assign them one coordinate that works across all instances. This means they only have to keep track of one coordinate, which is nice, but on the other hand, if they have to have a coordinate that works across all instances, they may be competing with a few choosers from each instance, and this may give them more trouble in terms of finding coordinates that don't conflict with someone else. Go ahead? >>: Is there a disadvantage to having a big enough coordinate that it's, for example, hundred and 60 [audio begins] or even [inaudible] MAC address? >> Meg Walraed-Sullivan: So there could be. If we could use the MAC address as a coordinate, that would be nice. However, we're going to end up tacking coordinates together to form labels, and we would like to limit the size of the labels. >>: Why? >> Meg Walraed-Sullivan: Well, we want to use them for forwarding. We might use them for rewriting in a packet, different things, so we want to keep them to a reasonable size. So on the other hand, if we didn't assign a coordinate that worked across multiple instances, what we could do is assign a coordinate per instance. This means that C-4 and C-5 would each have two coordinates. Now this gives us the trouble of having to keep track of multiple coordinates, but on the other hand we're keeping the sets of switches that might conflict with each other smaller, and ultimately keeping the coordinate domain smaller, which will give us smaller labels. It turns out that either of these specifications is just fine. We've actually implemented solutions with both; we've looked through both and they are fine, but there is a nice optimization as we map back to ALIAS if we do the second. Did you have a question?
>>: If you use a single link into the system do you break the uniqueness… >> Meg Walraed-Sullivan: Next slide. >>: Could you move to the next slide? [laughter]. >> Meg Walraed-Sullivan: I will in one second [laughter]. We decided to go with one coordinate per instance just because it gives us a nice property when we map back to ALIAS. Just to show you how that works, what that means is C-4 and C-5 are each going to need two coordinates, one for each instance that they are in, and note that there are no constraints about whether we have the same coordinate for both instances or whether we happen to pick different ones, et cetera; it's just per instance, and if they happen to be the same, no worries. All right, so here is your slide [laughter]. At first blush this seems like a pretty simple problem to solve. In fact, our first question was, can we do this with a state machine, with Paxos? The difficulty here is, and remember, we are formally stating the problem; we're not actually solving it here, but one of the constraints of this problem is that connections can change. When a connection changes it is actually going to change the instances that we have going on, and this doesn't map nicely to Paxos. So for instance, no pun intended, if I add this link between chooser C-3 and decider D-3, what this does is pull chooser C-3 into the blue instance, and then C-3 needs to find a coordinate for that instance. So the difficulty here, and one of the constraints of this problem, is that the instances can change, and in fact we expect them to change. I'd like to reiterate that any solution that implements the label selection problem and its invariants is a perfectly fine solution. There are many ways that we can do this. We designed a protocol which we call the decider/chooser protocol, just based on what we were looking for in terms of mapping back to ALIAS. So the decider/chooser protocol is a distributed algorithm that implements LSP.
It's a Las Vegas style randomized algorithm in that it's only probabilistically fast, but when it finishes it is guaranteed to be correct. This is in contrast with a Monte Carlo algorithm, where we finish quickly but we're not guaranteed to be absolutely perfect when we're finished. Now we designed this decider/chooser protocol with an eye towards practicality, because we are going back into the data center and we want something very practical, something that converges quickly and doesn't use a lot of message overhead. And we also want something that reacts quickly and locally to topology dynamics, so this issue of connections changing. We want to make sure that we react quickly to them, and we want to make sure that a link added or changed over here in the network is not going to affect labels over there. We definitely don't want that. So to give you an idea of how the decider/chooser protocol works, our algorithm is as follows. We have choosers select coordinates opportunistically from their domain and send them to all neighboring deciders. Let's suppose choosers C-1 and C-2 select X and Y respectively. They're going to send these to all of their neighboring deciders. Now when a decider receives a request for a coordinate, if it hasn't already promised that coordinate to someone else, it says sure, you can have that. In this case neither of these deciders has promised anything to anyone, so they both send yeses back to these choosers, and then of course they store what they promised. Now if the decider has already promised a coordinate to another chooser, then it says no, you can't have that coordinate for now, I promised it to somebody else, and here's a list of hints of things that you might want to avoid on your next choice. If the chooser gets a no from any decider, it just selects again from its coordinate domain and tries again. Now it does this avoiding things that have been mentioned to it in the hints from its other deciders.
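The request and response exchange between choosers and deciders can be sketched as a single-threaded simulation. This is a simplified illustration under stated assumptions, not the protocol from the talk: messages are processed one at a time (so the in-flight races the real protocol must handle never occur), each chooser holds one coordinate across all of its deciders rather than one per instance, and a chooser that collects a yes from every neighboring decider simply keeps its coordinate. All class and function names are hypothetical.

```python
import random

class Decider:
    def __init__(self):
        self.promised = {}                 # coordinate -> chooser holding the promise

    def request(self, chooser, coord):
        # A new request from a chooser supersedes its earlier promise here.
        self.promised = {c: who for c, who in self.promised.items() if who != chooser}
        if coord in self.promised:
            return False, set(self.promised)   # "no", plus hints of taken coordinates
        self.promised[coord] = chooser
        return True, set()                     # "yes", and the promise is stored

def run_dcp(links, domain, rng):
    """links: chooser -> list of neighboring decider names."""
    deciders = {d: Decider() for ds in links.values() for d in ds}
    chosen = {}
    hints = {c: set() for c in links}
    pending = list(links)
    while pending:                             # Las Vegas: loop until everyone succeeds
        still_pending = []
        for c in pending:
            # Assumes the domain is larger than the number of competing choosers,
            # otherwise the option list could be empty.
            options = [x for x in domain if x not in hints[c]]
            coord = rng.choice(options)
            replies = [deciders[d].request(c, coord) for d in links[c]]
            if all(ok for ok, _ in replies):
                chosen[c] = coord              # all yeses: the chooser is finished
            else:
                hints[c] = set().union(*(h for _, h in replies))
                still_pending.append(c)
        pending = still_pending
    return chosen

links = {"C1": ["D1", "D2"], "C2": ["D1", "D2"], "C3": ["D2"]}
coords = run_dcp(links, "abcdefgh", random.Random(0))
print(coords)
```

The number of rounds depends on the random choices, but whenever the loop exits, any two choosers that share a decider hold different coordinates, which is the LSP invariant.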
Once a chooser gets all yeses, it's finished and it knows what its coordinate is, so in this case both choosers got yeses and they are finished. Now of course, I've just shown you the very simplest case here. There are all sorts of interesting interleavings and race conditions, where we have coordinates that are taken up while they are in flight, or while they are promised by a decider in a way that ultimately won't work out, and this sort of thing. There are all sorts of complicated issues here. I've just shown you the simple case so you can see what the protocol looks like. Let's talk about mapping this back into our multi-rooted tree. What we have is, at all levels in parallel, as many instances as needed of the decider/chooser protocol, each running on a small portion of the tree. We have several instances at each level, on smaller chunks of the tree, happening all in parallel, so each switch is really going to function as two different things. It's going to participate in a chooser for its hypernode, and it's also going to be helping nodes below it choose their coordinates, so it's going to be functioning as a decider. To look at this in more detail, at level one in our example tree we have three hypernodes, so we have three choosers, and their parent switches, the three deciders, help them choose their coordinates. Now things get a little bit trickier as we move up the tree. Remember that all of the switches in a hypernode are going to share their coordinate, so they all need to cooperate to figure out what that coordinate can be. The reason for this is that each switch in the hypernode might have a different set of parents, and the parents are going to impose restrictions on what the coordinate can be based on their other children and what coordinates are already taken. We need every single switch in the hypernode to participate in deciding what the coordinate can be, or rather what it cannot be, so we need input from every switch in the hypernode.
Now our difficulty here is that the switches in the hypernode are not necessarily directly connected to one another, and unless we expect some sort of full mesh of peer links at every level, we really can't expect them to be connected to each other, so what we do is we leverage the definition of a hypernode to fix this. Remember, a hypernode is a set of switches that all ultimately reach the same set of hosts, and so if they reach the same set of hosts that means that there is one level one switch that everyone in the hypernode reaches. So we select one such level one switch for each hypernode via some deterministic function. In this case I've gone with a deterministic function of I drew it farther to the left on the graph. So what we do is we use that shared level one switch as a relay between hypernode members. This allows them to communicate and share the restrictions on their coordinate from their parents. Because this is more theoretical work and we've changed the protocol a little bit, we need to give it a new name, so it is, surprise, the distributed chooser decider chooser protocol, because we've distributed the chooser across the level one switch in the hypernode. Coming back to our overview, we have overlaid hierarchy. We've grouped into hypernodes and we've assigned coordinates that are shared among hypernode members. Our next task is to combine these coordinates into labels. The way that we do this is actually quite simple. We simply concatenate coordinates from the root downward to make these labels. For instance, this maroon switch here has three paths through different hypernodes and so it has three labels. Now to make this more clear, if we didn't have hypernodes it would have six labels, because there would be six paths. So what does this give us? 
Really what we get is that hypernodes create clusters of hosts that share prefixes in terms of their labels, so that when we have a switch here in the network, it can actually refer to a whole group of hosts over here by just one prefix, so this is compacting our forwarding tables in the same way that you might expect with something like IP except that we didn't pay for the manual configuration here. We did this automatically. Now I would like to bring your attention briefly back to relabeling, remember that was our no free lunch thing. So when we have topology encoded in the labels, when we have paths encoded in the labels, if the paths change we are going to have labels changing. We call this relabeling. An example of this is if I fail this link here shown in the dotted red, it's actually going to split that hypernode into two hypernodes. This is going to affect the labels nearby because remember labels are built based on hypernodes. So in our evaluation we show that not only does this converge quickly, but also that the effects are local just to the nodes right around this failure here. Now I know I said I wouldn't talk about communication much, but I just want--oh, go ahead. >>: So you showed that it’s local. Did you show it empirically or did you prove that there's actually a bound on [inaudible]? >> Meg Walraed-Sullivan: We showed it with a model checker, so we verified that it happens, and we also did some analysis to convince ourselves. >>: So the punchline, if you will, is that you get hierarchical labels but without manual assignment… >> Meg Walraed-Sullivan: Yes. 
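The label construction described in the talk, concatenating coordinates from the root downward with one label per path through hypernodes, can be illustrated with a short sketch. The topology, the coordinate values, and the helper names `labels_for` and `matches_prefix` are all made up for illustration, and the sketch assumes every hypernode on a path (including the top one) carries a coordinate, which may differ from the real protocol.

```python
def labels_for(node, parents, coord):
    """Return every label for `node` as a tuple of coordinates, root first.

    parents: node or hypernode -> list of parent hypernodes (empty at the top)
    coord:   node or hypernode -> its coordinate
    """
    ups = parents.get(node, [])
    if not ups:
        return [(coord[node],)]
    return [prefix + (coord[node],)
            for up in ups
            for prefix in labels_for(up, parents, coord)]


def matches_prefix(label, prefix):
    """True if `label` falls under `prefix`, as in a prefix-based forwarding entry."""
    return label[:len(prefix)] == prefix


# Hypothetical example: a switch below hypernodes H1 and H2; H1 sits under T1 only,
# H2 sits under both T1 and T2, so there are three paths and three labels.
parents = {"sw": ["H1", "H2"], "H1": ["T1"], "H2": ["T1", "T2"], "T1": [], "T2": []}
coord = {"sw": 1, "H1": 5, "H2": 2, "T1": 7, "T2": 3}
labels = labels_for("sw", parents, coord)
```

Here `labels` is `[(7, 5, 1), (7, 2, 1), (3, 2, 1)]`. A distant switch could cover the first two with a single entry for the prefix `(7,)`, which is the kind of aggregation the speaker compares to IP.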
>>: So let me play the strawman alternative system and maybe you could compare what you described [inaudible], so I'm going to run DHCP and then take an open-source DHCP server [inaudible] bound and do some hacking on it and configure a essentially [audio begins] so that each DHCP server doesn't just give out addresses, but it also claims portions of the address [inaudible] DHCP server based on the hierarchy, so the top-level switches let's say they have entire class A [inaudible] and then [inaudible] smaller and smaller [inaudible] distribution [inaudible] recursively [inaudible] lowest layer you have DHCP servers that give out individual addresses to hosts rather than dividing up something similar so you end up with still hierarchical labels fully automated but also have the advantage of also being a real IP address which means that existing routing works and you would also have [inaudible] addresses [inaudible]. So I know [inaudible]… >> Meg Walraed-Sullivan: No problem. >>: What would be the difference between what I just described and what you described? >> Meg Walraed-Sullivan: This is something that I would actually like to think about further. I have given some thought to what we could do if we distributed some sort of controller or something among nodes and this kind of looks like that sort of thing. My first concern would be getting all of the DHCP servers in sync with each other and agreeing with each other and who tells them which portion of the address space they can have or if they decide amongst themselves how do they sync up with each other. This is definitely something that we've been looking at as current and future work. There are a lot of different ways that we can kind of distribute something centralized, but logically distributed across certain portions of the graph. >>: One more question? >> Meg Walraed-Sullivan: Sure. 
>>: So you showed an example of a local change [inaudible] failed, but isn't it the case that the introduction or the unfailures of links can change the level in the tree, of the switch? It seems like it could be very disruptive. Is that still [inaudible]? >> Meg Walraed-Sullivan: That's a good question. So first of all in terms of just, in terms of failing a link versus recovering a link, it turns out there actually is not much difference. When you fail or recover a link what really matters in terms of the locality of the reaction is what happens to the hypernode on top of that link, whether that hypernode ends up joining with the existing one, splitting apart, that sort of thing. Now of course if you change certain links you can change the level. If you break your last link to a host then you are going to move up in the tree from level one. >>: I mean a host off of the [inaudible] switch? >> Meg Walraed-Sullivan: Right, then that is going to pull that root switch down. >>: Doesn't that disrupt the tree globally? >> Meg Walraed-Sullivan: It does, not globally usually unless you do something… >>: [inaudible] log n [inaudible]? >> Meg Walraed-Sullivan: It depends where the failure actually is and it depends on how many nodes are below the hypernode, so with your example, if you hang a host at the root of the tree, this is actually going to pull this root down and this could have some bad effects in terms of generating peer links where there used to be up down paths, right? So one of the things that ALIAS gives you that I think is really nice is this notion of topology discovery. We have several types of flags and alerts that you can set so if you don't expect, if you didn't build the network with many peer links and you start to see many peer links, something is wrong. This isn't what you intended and we send an alert, so lots of these things that are going to cause disruptions like this are going to be able to be found immediately and detected. 
>>: So your point is that kind of change would in fact have dramatic effects and yet you consider that insertion failure. It's not that all changes cause local perturbations, it's that changes either cause local perturbations or they are an indication that you did something horribly wrong [inaudible] effects. >> Meg Walraed-Sullivan: That and also some of them that don't cause only local perturbations are not going to matter, so if you pull a root switch down, then you are definitely losing paths, but because of this nice path multiplicity, you're not losing connectivity, because there are still several root switches that will provide that connectivity. So just to touch on the communication that we would run over these labels, I know I promised I wouldn't talk about it but I want to give you some context as to how we might use them. >>: I just think about you were talking about the network that you had before the root switch on there, if I just go and plug my laptop into the root switch because I'm debugging the network… >>: [laughter] don't do that. [inaudible]. [multiple speakers]. >>: Obviously, it's like the worst case, but it's something that could clearly happen. I mean… >> Meg Walraed-Sullivan: Right, but of course we can set up our protocol, right, so that your laptop doesn't act like a server. >>: Well, what if there is somebody pushing a cart down and knocks out cables and plugs them back into the wrong place… >>: Well, but you said something interesting which is that it makes paths go away. That seems like a strange property, that plugging the laptop into an empty port on a root switch will eliminate--I mean I haven't removed any links. >> Meg Walraed-Sullivan: Right. 
>>: I have the same network except that I used one more port… >> Meg Walraed-Sullivan: It doesn't make physical paths go away, but based on the structure of the communication that we’re going to talk about and that we use, it will make some logical paths go away, but we posit that there are still plenty of paths. But they're not actually going to go away. >>: It's just a weird property to have that you are losing the ability to route across certain links because you added a host that is not doing anything, that is reading diagnostics. >> Meg Walraed-Sullivan: So ultimately we are encoding topology into the labels and we are restricting how we route across those labels, so we need some sort of well defined way of structuring these labels and this is a cost that comes with that. >>: So this is the fallout of the fact that [inaudible] that you use hosts to identify the trees… >> Meg Walraed-Sullivan: Yes. So if you are willing to admit some sort of scheme where you labeled nodes at particular levels, then you could make this go away, and that's not that unreasonable a thing to request. We could say switches within a rack or something and so on. >>: No. I just [inaudible] the overall effect [inaudible] the network [inaudible] what level to expected. >> Meg Walraed-Sullivan: So we wanted to opt for something automatic but you could make this go away if you were willing to label. Just to look at our communication scheme, what we do is actually something very similar, if you are familiar with the Portland work, to what they did for communication. So as a host sends a packet, at the ingress switch at the bottom of the network we actually intercept that packet; we perform a proxy ARP mechanism that we've implemented to resolve destination MAC address to ALIAS label, to one of the ALIAS labels for the destination. Then we actually rewrite the MAC address in the packet with the ALIAS label. 
Now I'm sure you're thinking right now, this means we have a limited size for the labels in this particular communications scheme. There are also other schemes. So what we do then is we forward the packet upwards and then across and then downwards in the network if we choose to use any cross peer links. This is based on the up*/down* forwarding that was introduced by Autonet. And then when the packet reaches the egress switch at the end of its path, the egress switch goes ahead and swaps the ALIAS label back out for the destination MAC address, so the end hosts don't know that anything happened. If you aren't willing to rewrite MAC addresses in packets, or if you didn't want to have fixed length labels, then you could use something like encapsulation, tunneling, or some other way to get these packets to the network. This is just to give you an idea of how you might implement this. So now I would like to tell you about how we evaluated this. So again, we kind of approached it from two sides, from the implementation deployment evaluation side as well as the prove it and verify it side, but ultimately our goal was to verify that this thing is correct, that it's feasible and that it is scalable. That's really what we care about. So I'm going to talk about correctness first, because if it isn't correct it doesn't really matter if it's scalable. So our questions that we wanted to answer as far as correctness were, first of all is ALIAS doing what we said it does and does it enable communication. So to figure this out we implemented ALIAS in Mace which is a language for distributed systems development. So you basically specify things like a state machine, so if I receive this message, I send the following messages out. If a timer fires, I do this. Now Mace is a fantastic language for doing this kind of development because it comes with a model checker. 
If anyone isn't familiar with Mace and wants to be, please come talk to me because I would love to tell you about it. What we did with the Mace model checker is we verified first of all that the protocol was doing what I said it would, that I didn't write tremendously buggy code. Then we verified that the overlying communication works on ALIAS labels, that two nodes that are physically connected are in fact logically connected and can communicate. This is actually a great use of the model checker because it turns out that there are some strange graphs that we found with the model checker where communication didn't work and it was based on an assumption that we had made incorrectly early on in the protocol when we were designing it. So again, model checker is great because you can find these sorts of things. The last thing that we verified was the locality of failure reactions, so making sure that this invariant of if I fail a link here, no one over here changes, just making sure that holds. Then because Mace is a simulated environment and we never trust these things, we ported it to our test bed at UCSD. Now we used the test bed that was set up for Portland so it already had this up*/down* forwarding set up, and so all we had to do is make sure that our labels worked with an existing communications scheme. And they did work, and this gave us a way to sanity check our Mace simulations. The other things we wanted to look at in terms of correctness were first of all does the decider chooser protocol really implement LSP? Remember I said anything that solves it is fine, so did we come up with something that actually solves it? So we verified this in two ways. 
First we wrote a proof and then second what we did is we implemented all of the different flavors of the decider chooser protocol in Mace, so having one coordinate per multiple sets of instances, having multiple coordinates, distributing the chooser, et cetera, and we made sure that the invariants that LSP has were held: progress, meaning everybody eventually gets a coordinate, and distinction, this notion of if you share a parent you can't have the same coordinate, so we made sure that these things held. The next thing we wanted to check was did we really pull out a reasonable abstraction with LSP or did we just look at something random? Was this a good place to start? So what we did is a formal protocol derivation from the very basic decider chooser protocol all the way to ALIAS. What I mean by this is we started with a very, very basic decider chooser protocol. It's not that many lines of code. And then we wrote some invariants about what has to be true about the system. Then we did a series of small mechanical transformations to the code where at each step we proved that the invariants still held. Ultimately we did the right series of mechanical transformations such that we ended up with the full distributed version of this operating in parallel at every level. So this convinced us that this is actually a reasonable abstraction to have pulled out. >>: How did you do the mechanical transformations? >> Meg Walraed-Sullivan: So this is an analysis process, so we took it, for instance, to go from the non-distributed chooser to the distributed chooser, we formally said where we would host each bit of code. We defined queues that would actually later be based on message passing et cetera, so it's actually like a grep and replace where we made strategic choices about what we would replace and made sure that we didn't break any of our invariants. 
The next thing we wanted to check was feasibility because if we implemented something that is never going to run on real switches, then it really again, doesn't make sense to use it. It doesn't matter if it is scalable. So first we looked at overhead in terms of storage and control. Now storage, I don't mean forwarding tables, I just mean the actual memory on the switch to run the protocol. We just wanted to make sure that we weren't going to overwhelm these switches. We looked at our memory requirements and they were quite reasonable for large networks. We looked at this both analytically and on our test bed. The next thing we looked at was control overhead and with control overhead we have this trade-off between overhead and agility, so this is based on both the size of the switches and the frequency with which we exchanged state with our neighbors. If we have nodes exchanging state very frequently, then of course when something changes we are going to converge more quickly. On the other hand, we’re going to pay the cost in having more things exchanged more frequently, more control overhead. What I gave here was some representative topologies, some different sizes, and what the control overhead might look like for these. The reason I say 3+ in terms of depth and these 65K plus and so on in terms of hosts, is because this number doesn't depend on the depth of the tree, so this would work for any size tree with this size switches. This column here that I've got, this is worst case burst exchange. This is the absolute worst case that we could ever expect to see. This is if we have one level one switch acting as the shared relay for every single hypernode in the topology and managing everything and if we have every single switch come up at once, then this is what it's going to have to send once for this topology, so this is a pretty worst-case behavior. 
Then I have a few different cycle times listed and just showing you what the control overhead would actually be corresponding to the cycles. And we actually think this is quite reasonable given its a worst-case and it's, in terms of how much of a 10 G link that it would take up. The next thing we looked at was convergence time. Is this protocol really practical? What I want you to do for a second is suspend disbelief about the decider chooser protocol. Let's just say that everybody magically picks coordinates that work and that there are no conflicts. And let's look at what the base case convergence for ALIAS would be in this case. So on one hand to measure convergence time we just measure it on our topology. We perturb something, we start it up and then we look at the clock. We found that our convergence time was what we expected from our analytical results, which I've shown here. Now in this case our analytical results only depend on the depth of the tree, not the size of the switches. And essentially, our convergence time is going to be based on two trips up and down the tree. This is one to get the level set up and one to get all of the hypernodes and coordinates set up. Now of course we can probably expect it to be less than this because there is going to be some interleaving of these cycles that happens, but just to give you an idea, here are some example-based cases for some topologies and I've shown you some cycle times that are corresponding to nice control overhead properties from the previous slide. So now you can un-suspend your disbelief about the collisions and the decider chooser protocol and let's talk about how bad that is. Remember I said that the decider chooser protocol was probabilistically fast? What does that mean? Is that really reasonable? Well, the way that we decided to look at this, we looked at it analytically. 
It turns out that there is a very complicated relationship about the coordinate domain, the thickness of the graph, of the bipartite graph, and so on to do this analytically, so we moved away from that since we have numbers for that but they don't really convince us of anything, and we moved to an implementation using the Mace simulator. Now the Mace simulator is a lot like the model checker, but it actually runs executions in different orders and it picks a little bit differently about how it represents non-determinism. The beauty of the simulator is it actually allows you to log things. You can actually say X cycles have passed and this property is turned to true. What we did was we built three types of graphs. The first was based on a fat tree, so we took two levels out of the fat tree to represent the decider chooser problem. The second was a random bipartite graph and the third was a complete bipartite graph. So as you can imagine a complete bipartite graph is the absolute worst case scenario because we have many more choosers competing with each other than we would expect, and they are competing across many deciders so each chooser is going to collide with every other chooser and is going to be told so by every other decider. The next thing that we varied was the coordinate domains, so we tried things where the coordinate domain was exactly the minimum number that it could possibly be for that graph. We tried things where it was a little bit bigger than it could be, by 1.5 times the number or two times the number of choosers. So these are still pretty reasonable coordinate domains, because remember in practice we expect instances of the decider chooser protocol to be these small chunks of the graph. And what we found was that for reasonable cases this thing converges quite quickly, two or three cycles on average. This is including the cycles that I mentioned in our base case for establishing connectivity between the two levels. 
Now the worst case was the complete bipartite graph with the smallest coordinate domain possible. And the reason for this was that the simulator decided to generate some interesting behavior for me, much to my dismay. So at one point we had one chooser for which every single response from every single decider was lost 89 times, so it took 89 cycles to converge because the chooser couldn't get any information about what its coordinate could be, but when it finally heard back from all of the deciders and heard that you can't have any number but X, it took X and it moved on. So of course, this is not a very realistic scenario, but this is where we see the stragglers when the Mace simulator decided to give us some sort of crazy behavior. >>: What do you think the failure rates for the components [inaudible] like what you think the implications are for [inaudible] protocol? >> Meg Walraed-Sullivan: I would love to know. Unfortunately I don't have a data center to look at, but I'm told that actually link failure is a pretty big problem; it's very common. Links flap, they come back, they fail and I'm told that it is a pretty significant problem. That's why we designed this protocol both with one of our big constraints in LSP being that we need to be able to deal with the instances changing and one of our big constraints overall saying that we need to be able to react quickly and locally. We definitely think that it's a real problem, not something that's going to be intermittent. Now the scalability. >>: It looks like you designed the protocol assuming that people would be laying out their networks in a treelike topology [inaudible] unfair to… >> Meg Walraed-Sullivan: Good question, good question. So ALIAS will work over any topology. Of course it's going to be ridiculous if you try to overlay a tree on a ring. So it really does work best over something that can be hierarchical. Now we do see multi-rooted trees pretty often in the data center. 
I know that that is not always the case, especially in this room, but we do tend to see them. We are told by network operators that that is often the structure, so we designed a protocol that would work for them. If you have a data center that is structured in a different way, probably ALIAS is not the right choice. So scalability. Does this thing really scale to these giant, giant data centers that I mentioned? Well, our first blush at making sure it was scalable was to just model check large topologies. The reason for this was that sometimes as I'm sure you all know, when we scale out a protocol weird things happen that we didn't expect. So we just used the model checker to make sure that nothing changed at scale and nothing went weird. Then because the model checker only scales to so many nodes, we wrote some simulation code to analyze network behavior for absolutely enormous networks, networks that are more realistic in scale. And so what the simulation code did was it laid out random topologies, and then it set up the hypernodes. It figured out what the coordinates could be, assigned forwarding state and then looked at the forwarding state in each router. >>: How large was the model checker? >> Meg Walraed-Sullivan: The model checker, only up to a couple of hundred nodes, maybe 200. Not enough to convince us that this thing was scalable. The simulations that we did went up to tens of thousands, sometimes hundreds of thousands, but I don't display the numbers for hundreds of thousands because we didn't run enough trials to be sure, because it took hours and hours and hours to run. So here are the results of what we simulated. So let me explain this chart. On the left we have the number of levels in the tree and the number of ports per switch. And so what we did is we first started with a perfect fat tree of this size. Then in this percent fully provisioned column what I did is I failed a portion of this fat tree. Now you may wonder why I failed the fat tree. 
The reason for this is that in a perfect fat tree, the hypernodes are going to be perfect. Everything is going to be aggregated really nicely. What we wanted to test was as we get away from this nice perfect topology and as we start admitting failures, are the hypernodes still really doing their job? Are they aggregating, are we still going to get this compact forwarding state that we wanted? Now the next column shows the number of servers that each topology supports and what this gives us is a very worst-case comparison to using layer 2 addresses, so this is not a fair comparison, but it just gives us a base case, the very worst-case scenario. So if we had layer 2 addresses, we would need this many entries in our forwarding table. Of course the last column gives us the number of forwarding entries on average in each switch in ALIAS. So as you can see, orders of magnitude lower than the worst-case at layer 2. Now of course we could expect to see similar numbers for something like IP if we cleverly portioned the space and everything, but of course we would have to pay the manual overhead in this case, the manual configuration cost and here we didn't have to. So what we see is we get forwarding state that is like IP without paying the cost associated with IP. So to conclude, oh, go ahead. >>: So did you actually build to order [inaudible] understands peer addresses? >> Meg Walraed-Sullivan: Yes, we did. >>: How difficult was that? >> Meg Walraed-Sullivan: We did it both in Mace and we did it using our test bed that we had already set up for Portland. Portland assigns addresses that look a lot like this but in a centralized way, so we actually already had that forwarder setup. Unfortunately, the performance is pretty hard to measure because we did it with OpenFlow and updating flow tables is pretty slow and so we were limited in our evaluation by the speed of updating flow tables which ended up being a limiting factor. 
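To make the layer 2 baseline in the chart concrete: a flat layer 2 table needs, in the worst case, one entry per host, so the baseline column is just the host count of the topology. The talk does not state how that count was computed. The sketch below uses the standard host count for a fully provisioned fat tree built from identical switches, which is an assumption here, and `fat_tree_hosts` is a hypothetical helper name.

```python
def fat_tree_hosts(ports, levels):
    """Hosts supported by a fully provisioned fat tree of identical `ports`-port switches.

    Uses the usual formula 2 * (ports / 2) ** levels, e.g. ports**3 / 4 for three levels.
    """
    if ports < 2 or ports % 2 or levels < 1:
        raise ValueError("need an even port count >= 2 and at least one level")
    return 2 * (ports // 2) ** levels


# Worst-case flat layer 2 forwarding entries for a few example sizes.
for ports, levels in [(16, 3), (32, 3), (64, 3)]:
    print(ports, levels, fat_tree_hosts(ports, levels))
```

With 64-port switches and three levels this gives 65,536 hosts, which appears consistent with the "65K plus" figure mentioned earlier in the talk. The ALIAS column in the chart reports measured averages from the simulation and cannot be reproduced from a formula like this.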
>>: Okay so, I guess I want to bring you back to what I asked you earlier on. >> Meg Walraed-Sullivan: Sure. >>: You introduced a lot of complexity around finding noncompliant addresses or labels, I guess I was, I would be more convinced that you couldn't just use big random labels if you actually showed that there was something that broke. But it sounds like if you actually built a forwarder what would happen [inaudible] the border actually fall over if you used the 25 labels, maybe just used random [inaudible] designs [inaudible]? >> Meg Walraed-Sullivan: Sure, another idea would just be to carry MAC addresses down the tree and maybe have someone in the hypernode be the leader whose MAC address got to represent that hypernode. So this is definitely something that we could consider, a lot less complexity. The problem is that we do run into these long addresses and what this does is it makes our entries in our TCAMs for forwarding longer, makes them take up more space, and I think… >>: [inaudible] hundreds of them and why does that matter? >> Meg Walraed-Sullivan: Well, if we are scaling out to the size of the data center, we may have a lot. We may be able to fit and we may not. So it's already a bit of a known issue that we are running out of TCAM space and TCAMs are expensive, especially in commodity switches. Now if we're talking about using bigger switches then this is a nonissue and I would definitely go with something like what you're saying, but we're trying to buy these cheap commodity switches and we can't expect them to have a ton of space for forwarding state, and so if we have this sort of prefix matching business going on and we have really long labels, we're actually going to be taking up multiple lines in the TCAM with each entry. So if we can afford the forwarding state, then I'm all for it, but I think that for the purpose of this work that we are looking at smaller forwarding state, then we can afford that. >>: I'm sorry one more. 
[inaudible] switch obviously because it has a [inaudible] routing algorithm that's [inaudible] so… >> Meg Walraed-Sullivan: Right, but then we do have things like OpenFlow that give us the ability to modify the routing software on the switch. >>: But then you have a component problem which is that you can't actually--every single packet now is on the slow path. >> Meg Walraed-Sullivan: How so? >>: Or at least every flow is on the slow path. >> Meg Walraed-Sullivan: Every flow is on the slow path, yes, and there has been work to address this and to make things like OpenFlow faster, but if we are going to, we can upgrade the firmware. We can use something like OpenFlow, so we feel that it's okay to modify the switch software, probably not the hardware. >>: Okay. So do you have a graph showing that Jeremy's scheme only achieves this number of servers and yours achieves--I don't have a sense of how many times more machines you can get. >> Meg Walraed-Sullivan: I don't. That's something that I really should do for future work and that's definitely something that we should talk about later if we can. So to conclude, hopefully I've convinced you that the scale and complexity of data centers is what makes them interesting and that the problem of labeling in them is interesting because of these properties. I've shown you the ALIAS protocol today which does topology discovery and label assignment and it does it by using a decentralized approach so we avoid this out of band control network. It leverages this nice hierarchy in the topology; even though we have switches and links failing, we still have this nice hierarchy so it leverages this to form topologically significant labels, and then of course it eliminates the manual configuration that we would have with something like IP while still giving us scalable forwarding state. So I would be happy to take more questions. [applause]. >>: Question, very interesting talk. 
How in this work can you incorporate work like load balance into the network? >> Meg Walraed-Sullivan: That is a very good question, because what we're doing is we're really restricting with the label to a set of paths and it's difficult to do something like global load balancing on top of this. This is one of the topics that we are actively looking at right now, how do we get this to play nicely with load-balancing. So along those lines, one thing that we are looking at, so I think it's important to understand that there are actually two levels of kind of multi-path within ALIAS. The first is picking a label. If you have multiple labels, then each label corresponds to a set of paths. And then within a label, we still have this ability for multi-path. So this relates to load-balancing because we have a set of paths that we can possibly load balance between, and so this is something that we are actively looking at. Can we select the best label? Can we take a best label, selected and then map other labels to ALIAS, no pun intended, to this label so that we can use all paths for this label, and this is definitely something that we are looking at. >>: And also related question is when you need to incorporate load balance into [inaudible] is it including, basic includes memory consumption on the switches, meaning basically you need to record multiple entries onto this [inaudible] because then you can decide which entry to choose when [inaudible]. >> Meg Walraed-Sullivan: So I'm not sure I see what the question is in that. >>: So the question is basically in this framework two destinations potentially you have maybe [inaudible] multiple [inaudible] in your TCAM basically entries do you need to record multiple [inaudible] or [inaudible] basically just one line? >> Meg Walraed-Sullivan: Without load-balancing, it's just one line. 
Because what we need to know is, for local things, we need to know the address that goes through this particular switch to get to them, and then for faraway things we just need to know their top-level hypernode and know how we can route up into the tree to get to something that reaches that top-level hypernode, so of course with load balancing, then this story could change. >>: So when you [inaudible] few things [inaudible] when you've got 100,000 machines, how many labels do you wind up with? >> Meg Walraed-Sullivan: Not too many. Not too many. For example, you can see here that we end up with each switch actually needing to know, are you talking about how many labels end up per switch or… >>: No, I meant for a host, how many labels for a host? >> Meg Walraed-Sullivan: This depends on how broken the tree is. As you start to break up hypernodes, as you start to fail more of the tree, then you are breaking into more labels. On the other hand, as you break the tree further you are actually breaking paths and then the number of labels goes down because there are fewer ways to reach things, so the base case is if you have a fat tree, you are going to have one label per host. We did lots of different topologies. Not all of them are realistic because the model checker decides to fail what the model checker decides to fail. But we didn't see too many labels per host, and in our topologies on the model checker, which were smaller, a couple of hundred nodes, we saw four or five labels per host in the worst case. These were really fragmented parts of the tree. I mean the model checker will generate something that looks like a number of just strings coming down that have no cross connects. >>: And then I'm wondering how you imagine integrating this into any operating system so [inaudible] place where [inaudible] IP addresses are exposed like this is the guy who sends you a packet and he needs something [inaudible] labels on, is that, am I imagining that correctly? 
>> Meg Walraed-Sullivan: So the end, you mean the operating system on the end host? So the end host doesn't know anything happened, so the end host, because we have in our communication strategy anyway, because we have this nice kind of proxy ARP thing set up, all we need is a unique ID for a node and we resolve that to an ALIAS label, forward through the network and then rewrite back into what that unique ID was, so you can imagine a scheme where we did this on IP addresses. Although then you have this overhead with assigning them. My example is with MAC addresses but in fact you could do this with anything. In fact, I think the interesting thing here is that you could do some sort of anycast type thing if you were willing to modify the end host, so you could send a packet to "printer" and then your ingress switch could go ahead and resolve "printer" to a number of ALIAS labels, maybe corresponding to different nodes, and then pick one of those and send towards it based on whatever constraints. >>: So where does the transition happen? >> Meg Walraed-Sullivan: Where does the transition happen? >>: The translation from IP or MAC address to… >> Meg Walraed-Sullivan: At the bottom-level switch where we are connected to an end host. >>: So that switch, how does it, it keeps all of the labels? >> Meg Walraed-Sullivan: No, we did a proxy ARP mechanism, so what we do is because we have to pass things up in the tree anyway in terms of setting up hypernodes and so on, we actually pass mappings between ALIAS labels and whatever our UIDs are going to be, in this case MAC addresses, up the tree, and so the roots of the tree all know the mappings and so what we do is we have the level one switch ask the roots of the tree, so we have it send something upwards like an ARP request. It actually intercepts real ARP requests from the host, sends these upwards and gets an answer back down. 
So there are a number of ways that we could write this and it's just some kind of replacement for ARP. >>: Could you encode a [inaudible] label as a [inaudible]? >> Meg Walraed-Sullivan: I'm sorry? >>: Could you turn the label for an end host, translate it in some way directly to a [inaudible] like a [inaudible] address [inaudible]? >> Meg Walraed-Sullivan: I would think so. This isn't something I considered, but I think that as long as it fit in the space that we were trying to use and as long as it didn't have any sort of conflicts. If this were visible externally, then this would be a problem. I don't see why not. That being said, I reserve the right to see a reason not to in the future. >> John (JD) Douceur: Anyone else? All right, let's thank the speaker again. [applause]. >> Meg Walraed-Sullivan: Thank you.
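
The proxy-ARP-style resolution described in the answers above (UID-to-label mappings passed up to the roots, the level one switch asking the roots, and the label being rewritten back to the UID at the far edge) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the ALIAS implementation: the class names `RootSwitch` and `EdgeSwitch`, their methods, the tuple-shaped labels, and the "pick the first label" policy are all hypothetical, and the upward request is modeled as a direct method call rather than a real message.

```python
from dataclasses import dataclass, field


@dataclass
class RootSwitch:
    """Hypothetical root of the tree: stores UID -> labels mappings passed up from below."""
    mappings: dict = field(default_factory=dict)

    def register(self, uid, label):
        # A host may hold several labels, so keep a list per UID.
        self.mappings.setdefault(uid, []).append(label)

    def resolve(self, uid):
        return list(self.mappings.get(uid, []))


@dataclass
class EdgeSwitch:
    """Hypothetical level one switch, directly connected to end hosts."""
    roots: list
    local_hosts: dict = field(default_factory=dict)  # label -> UID of an attached host

    def attach_host(self, uid, label):
        # Remember the local host and pass the mapping up to every root.
        self.local_hosts[label] = uid
        for root in self.roots:
            root.register(uid, label)

    def resolve(self, uid):
        # Stand-in for intercepting an ARP request and sending it upward.
        for root in self.roots:
            labels = root.resolve(uid)
            if labels:
                return labels
        return []

    def ingress(self, dst_uid, payload):
        # Rewrite the destination UID into a label (assumed policy: first one).
        labels = self.resolve(dst_uid)
        if not labels:
            return None
        return (labels[0], payload)

    def egress(self, packet):
        # Rewrite the label back into the UID the end host expects.
        label, payload = packet
        return (self.local_hosts[label], payload)


root = RootSwitch()
sender_edge = EdgeSwitch(roots=[root])
receiver_edge = EdgeSwitch(roots=[root])

# Made-up MAC address and label values, for illustration only.
receiver_edge.attach_host("00:00:00:00:00:0b", (7, 3, 1))

packet = sender_edge.ingress("00:00:00:00:00:0b", b"hello")
delivered = receiver_edge.egress(packet)
```

The end hosts only ever see UIDs; labels exist between the ingress and egress rewrites. Because `resolve` returns a list, the same shape would also accommodate a host with several labels, or the anycast-style lookup mentioned in the answer, by changing how `ingress` chooses among the results.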