23907 >> Andrew Baumann: Thank you all for coming. ... Simon is just finishing up his Ph.D. in the Systems...

advertisement

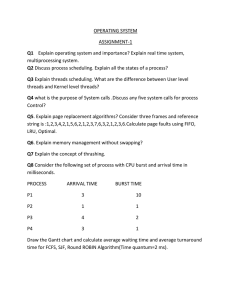

>> Andrew Baumann: Thank you all for coming. It's my pleasure to introduce Simon Peter. Simon is just finishing up his Ph.D. in the Systems Group at ETH Zurich, and he's going to talk about operating system scheduler design for multicore architectures.

>> Simon Peter: Hi, everybody. Before I start with the main topic of the talk, I'd like to say a little about the context of my work, which is the Barrelfish multicore research operating system. I assume most of you already know about it, so I'll keep this fairly brief. Barrelfish is a cooperation between MSR and ETH Zurich, and the overarching problem we're trying to address is that commodity computing is, and has been for the past couple of years, in a period of fundamental change. Multicore architectures mean speedup through more processor cores and processor dies instead of simply faster processors. Unfortunately, this development is not transparent to software, so we have to write or modify our software specifically to make use of the additional cores. At the same time, we see a fast pace of quite nontrivial developments in the architecture: Intel and AMD are turning out a new multicore architecture roughly every year and a half. That means we have to keep modifying our software to make it work on new multicore hardware. Operating systems, and commodity operating systems in particular, struggle very much to keep up with these developments. An extreme engineering effort is being put into commodity operating systems like Linux and Windows to make them work on multicore architectures today, and that effort is projected to increase even further as more fundamental changes go into these architectures. This is a structural problem.
These operating systems, Linux and Windows, are quite complex monolithic programs that were developed back in the early '90s with uniprocessors and quite simple multiprocessors in mind, and the disruptive architectural changes that affect software so much today were just not around at the time. Barrelfish is a new operating system built from scratch for multicore architectures. One of the main ideas we use in Barrelfish to address these problems is to apply distributed systems techniques. This makes the system both more scalable as we add more cores and more agile, as we call it, with respect to changing architectures: the operating system should be easy to change or adapt to different hardware. Barrelfish is similar to other multicore operating system approaches, such as Corey, Akaros, fos, and Tessellation, that have been proposed in academia over the past year or two, so my hope is that the ideas I present in this talk also apply to the rest of the multicore OS space. I have been involved with many parts of the Barrelfish operating system, and if you get to talk to me later, I encourage you to ask about the other things I've worked on as well. I'd also like to keep this talk fairly interactive, so if you have any questions, just raise your hand, and I'll keep track of time.

So, back to the topic of this talk, which is multicore scheduling. Why is this becoming a challenge now? What's different about how we need to schedule applications now and in the future, compared to how we scheduled them in the past? The answer is that applications themselves increasingly use parallelism to gain computational speedups from the additional cores in the architecture.
If I look at the past couple of years, I see a vast number of parallel runtimes emerging. There are things like Intel Threading Building Blocks; Microsoft has PPL, and there's also a lesser-known runtime called ConcRT. OpenMP has been around for a while, but we're seeing increased usage, and there are others like Apple's Grand Central Dispatch. All of these runtimes are made to encourage programmers to use more parallelism, and programs are starting to use them to do so. There's also a new class of workloads that many in the community expect to become more important in end-user commodity computing. These workloads go under the umbrella term recognition, mining, and synthesis workloads. An example would be recognizing faces in photos, which an end user might want to run over the photo collection on their desktop box. The common property of all the applications in this class is that they are quite computationally intensive while also being easily parallelizable, so they benefit quite nicely from the additional cores in the architecture.

Parallel applications like these have quite different scheduling requirements from traditional single-threaded or even concurrent applications. That's well known, for example, in the supercomputing community, which has been dealing with parallelism for decades and has developed quite adequate scheduling algorithms for these applications. However, the focus there is mainly on running a big batch of usually scientific applications that run for a very long time on a supercomputer. You submit the batch, and then you usually wait for hours, days, sometimes even weeks. You don't really change the system while these applications are running, and you collect your results later.
What's new now is that we want to run these parallel applications in a mix with traditional commodity applications: maybe alongside a word processor the user is running, or as part of a Web service. The two main differences in this scenario are, first, that the setting is much more interactive, so we now have to deal with ad hoc changes to the workload as the user provides input to the system, and second, that we get a new metric: responsiveness to the user now matters. We have to make scheduling decisions quickly enough that the user can actually feel the difference as the scheduler makes them.

>>: [inaudible] there's the word processor and there's a massive scientific calculation alongside?

>> Simon Peter: Compute-intensive applications. Face recognition, for example, is really compute-intensive; it takes a while to recognize faces in photos. But it's still something you might want to run in the background, or as part of a Web service, for example whenever people submit photos.

>>: Why is the response more challenging than this way? Seems like I've always wanted --

>> Simon Peter: For this type of workload, that's true. But I was comparing it to what people do in the supercomputing community. They have developed scheduling algorithms for parallel applications, but their use cases are typically throughput-oriented batch processing, where you have a big batch of applications and you just want to run them; there's no real latency requirement for any particular job.

Okay. So, in a nutshell, the research question I was trying to answer is: how do we schedule multiple parallel applications in a dynamic, interactive mix on commodity multicore PCs?
I'd like to motivate this further and make it more concrete with an example of what happens when we run multiple parallel applications on a commodity operating system today. I ran two parallel OpenMP applications concurrently on a 16-core AMD Opteron system, which is fairly state of the art, running Linux with the Intel OpenMP library, which is also state of the art. Both applications execute parallel for loops, which are one of the main ways to gain parallelism in OpenMP. One application, however, is completely CPU-bound: it's just chugging away doing computation, using eight threads. The other application is quite synchronization-intensive: it executes a lot of OpenMP barriers. If you don't know what a barrier is, let me introduce it quickly. It's a primitive for synchronizing across all threads: a thread that enters the barrier waits until all the other threads have entered it as well, and then they can all leave the barrier together. This primitive is used quite often in parallel computing, especially at the end of computations and subcomputations, because you want all threads to finish computing their chunk of a parallel dataset before you do an aggregation operation over the results. Yes?

>>: Are these real applications that have these properties, or for this purpose are you synthesizing applications that exaggerate these properties?

>> Simon Peter: These are applications that I synthesized, though they are actually doing some computation. It's not that they just go through barriers all the time.

>>: Like a relationship --

>> Simon Peter: Yes, but I synthesized them so I could show the effect.
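To make the barrier semantics concrete, here is a minimal sketch using Python's standard `threading.Barrier` rather than OpenMP; the function name `parallel_sum` and the strided chunking are illustrative assumptions. Each worker sums its chunk and waits at the barrier. Only after all workers have arrived does worker 0 aggregate the partial results, which is the end-of-computation pattern described above.

```python
import threading


def parallel_sum(data, num_threads):
    """Sum `data` with `num_threads` workers that meet at a barrier."""
    partial = [0] * num_threads          # one partial sum per worker
    result = []                          # filled by worker 0 after the barrier
    barrier = threading.Barrier(num_threads)

    def worker(tid):
        chunk = data[tid::num_threads]   # strided chunk owned by this worker
        partial[tid] = sum(chunk)
        barrier.wait()                   # block until every worker has arrived
        if tid == 0:                     # aggregation happens after the barrier
            result.append(sum(partial))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result[0]


print(parallel_sum(list(range(100)), 4))  # prints 4950
```

Python threads are used here only to show the synchronization pattern; because of the global interpreter lock, the sketch says nothing about the performance effects discussed in the talk.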
What I then do is vary the number of threads the barrier application uses while both applications run concurrently. To have some notion of the progress these applications are making, I count the number of parallel for-loop iterations they go through over a fixed time period, in my case one second. Then I look at the speedup, or rather the slowdown, as we'll see on the next slide, of the two applications as they run concurrently. Here's what I got. On this graph, the reddish line at the top is the CPU-bound application and the purple line is the barrier application. On the X axis is the number of threads allocated to the barrier application at any point in time. Remember that the CPU-bound application runs on eight threads all the time and that there are 16 cores in total in this machine. On the Y axis is the relative rate of progress of the two applications, normalized to the two-thread case on the left-hand side. So let's start by focusing on the left-hand side of this graph, up to eight barrier --

>>: Is there a lot of variability in the amount of computation between those threads? And in the second case, where they're synchronizing, is there a lot of variability in what each thread is doing?

>> Simon Peter: In this case there's not a lot of variability.

>>: Same amount of work, same barrier, same amount of work, same barrier?

>> Simon Peter: Yes, pretty much. I would argue that if you use a lot of synchronization, most algorithms actually try to optimize for that case, where most threads do similar amounts of work. If one thread ends up doing a lot more work, then going through the barrier delays the execution of all the other threads.

>>: Are you surprised by the degradation, given --

>> Simon Peter: I can explain that.
Yes, I'll explain it. Up to eight barrier threads, things look pretty benign. The CPU-bound application stays at a progress of 1, which is what we'd expect, because it has the same number of threads allocated to it and nothing else is running on its cores. So the operating system is doing something sane here: it schedules the CPU-bound application where the barrier application is not running. We can see that the barrier application's performance degrades, and that's due to the increasing cost of going through a barrier with more threads: you have to synchronize with more and more threads, and if you go through the barrier often enough, you see this degradation. That's why the application degrades.

>>: You've got more cores working on the problem, too?

>> Simon Peter: Sure. But the progress I'm showing here is normalized over all the threads. I could have graphed it differently, and then the line would have stayed up there. But the difference comes from the barrier.

>>: So this is relative; you're dividing by the number of threads you have?

>> Simon Peter: Yes. Sorry, I should have said that earlier. It's divided by the number of threads.

>>: Is that the same curve you would get if you didn't have the CPU-intensive workload running on the other eight cores?

>> Simon Peter: Yes, you would still see this degradation. It's due to the synchronization the application does over an increasing number of cores; there's no impact from the other application, for example through memory contention. I actually verified that the operating system is doing core partitioning here: it assigns eight cores to the CPU-bound application and schedules the barrier application on the other eight cores that are still available.
It's essentially running a load-balancing algorithm. Now, on the right-hand side, from nine threads onward, we have for the first time more threads in the system than cores, so the operating system starts to time-multiplex the threads over the cores. For the CPU-bound application we still see what we'd expect: it degrades linearly as it is overlapped with more and more threads of the barrier application. That's quite straightforward. However, the barrier application's progress drops sharply, almost immediately, to zero. That's because it can only make progress when all of its threads are running concurrently. If one thread is preempted by a thread of the CPU-bound application, all the other threads of the barrier application have to wait for that single thread to enter the barrier before they can all continue together. This is something we do not want, and it can be alleviated.

>>: One CPU-bound thread can still see the inter[inaudible].

>> Simon Peter: Sure, exactly. If you had 16 barrier threads and just one CPU-bound thread running, you would still see this effect. You could set up this experiment in different ways and still see it.

>>: For example, running Word and the symbols -- versus the --

>> Simon Peter: Yes. So there are scheduling techniques, parallel scheduling techniques, to alleviate this problem. One is called gang scheduling, which synchronizes the execution of all the threads of one application: you always dispatch all the threads of that application at the same time, so no thread ever executes out of step with the others.
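Why the barrier application collapses, and why gang scheduling helps, can be illustrated with a deliberately simplified toy model. This is an illustration under stated assumptions, not the experiment from the talk: each shared core alternates every time slice between one barrier thread and one CPU-bound thread, and the barrier completes only in slices where every barrier thread is running. With independent per-core schedulers whose alternation is out of phase, that never happens; with gang scheduling, all shared cores switch together.

```python
def barrier_progress(shared_cores, slices, gang):
    """Fraction of time slices in which every barrier thread is running.

    Toy model (an assumption, not the measured system): each of
    `shared_cores` cores alternates every slice between one barrier thread
    and one CPU-bound thread. Barrier threads on unshared cores always run,
    so only the shared cores matter. With gang=True every shared core uses
    the same phase; otherwise the phase depends on the core index, mimicking
    uncoordinated per-core schedulers.
    """
    progressed = 0
    for t in range(slices):
        all_running = True
        for core in range(shared_cores):
            phase = 0 if gang else core % 2
            if (t + phase) % 2 != 0:     # this core runs the CPU-bound thread now
                all_running = False
                break
        if all_running:
            progressed += 1              # the barrier can complete in this slice
    return progressed / slices


print(barrier_progress(8, 100, gang=False))  # 0.0
print(barrier_progress(8, 100, gang=True))   # 0.5
```

Real per-core schedulers are not this adversarially out of phase, but any lack of coordination shrinks the windows in which all barrier threads run together, which is the effect on the right-hand side of the graph.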
There's also core partitioning, which dedicates a number of cores to a particular application so that there is no overlap with any other application. These techniques work. However, we can't easily apply them in present-day commodity operating systems, because the OS has no knowledge of the applications' requirements. Applications today are scheduled as black boxes, using heuristics, by an operating system that is completely unaware of their requirements, while the applications, and especially the runtime systems inside them, could know which threads synchronize with each other. In the OpenMP case, for example, interacting threads are always within an OpenMP team, so the runtime, perhaps with help from the compiler, could know that these threads are synchronizing, but there's no way to tell the operating system about it. Also, we can't realize better scheduling solely from within the runtimes themselves, because this is largely a problem of coordination between different runtimes. We might not want to run only OpenMP programs; maybe we also want to run programs that use other parallel programming runtimes. How do we coordinate between them? If we did it just within the runtimes, several runtimes might contend for the same cores. Even if each runtime is very smart about where to run and how to schedule, two runtimes that make the same decisions might still end up fighting over the same resources, and all the benefits they have calculated for themselves might be void. So this is a case where policies and mechanisms are in the wrong place. My solution to this problem is to have the operating system exploit knowledge about the different applications to avoid misscheduling them.
For example, the operating system could exploit knowledge about which threads synchronize within an application. In the other direction, applications should be able to react better to the processor allocations the operating system makes. For example, the CPU-bound application could change the number of threads it uses as processor allocations change, for instance to give the barrier application more CPU time. So my idea is to integrate the operating system scheduler and the language runtimes more closely. I do this, first, by removing the thread abstraction at the operating system interface. That doesn't mean I'm taking threads away from application programmers; they can still be provided by the runtime. This has been done in operating systems before: the K42 and Psyche operating systems, and a scheduling technique called inheritance scheduling, do not require threads at the OS interface. In my case, I provide what I call processor dispatchers at the OS interface instead. They give you much more control over which processors you run on and tell you where you are running. The operating system won't just migrate a thread from one core to another; if you have a dispatcher, you know you're running on a particular core, so it's closer to running on hardware threads. Second, I extend the interface between the operating system scheduler and the programming language runtime.
Before I get to that, I should address the questions that extending the interface usually raises: are people actually going to use it, and do we now have to modify all of our applications to use it? I claim that now is quite a good time to make this move, because application programmers increasingly program against parallel runtimes instead of writing to the operating system interface directly. So we would only need to modify the parallel runtimes to integrate them more closely with the OS, instead of every single application.

Okay. I call my idea end-to-end scheduling, not to be confused with the end-to-end paradigm in networking; I call it that because I cut through the thread abstraction to integrate the OS scheduler and the language runtimes more closely. End-to-end scheduling is a technique for dynamically scheduling parallel applications at interactive time scales. It has three concepts. First, applications specify processor requirements to the operating system scheduler, so the scheduler can learn what is important to each application. Second, the operating system scheduler notifies applications about the allocation changes it makes as the system runs, so applications can react to changes in system state. Finally, to make this work at interactive time scales, I break up the scheduling problem and solve it at multiple time scales, so we can react quickly to ad hoc workload changes. I'll explain on a later slide how that works and why it is so important.

First, processor requirements. In my system, applications can say how many processors they want, which processors they want, when they want them, and at what rate. For example, an application can say something like "I need three cores, all at the same time, every 20 milliseconds."
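One way to picture such a requirement is as a small record. The sketch below is hypothetical: the field names (`cores`, `core_ids`, `gang`, `period_ms`, `budget_ms`) are invented for illustration and are not the actual Barrelfish specification format. The 5 ms budget in the example is also an assumed figure, since the talk gives only the core count and the 20 ms period.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorRequirement:
    """Hypothetical encoding of one processor requirement (names are illustrative)."""
    cores: int                  # how many processors
    core_ids: tuple = ()        # which processors; empty means "any"
    gang: bool = False          # must all cores be dispatched at the same time?
    period_ms: float = 0.0      # when / how often the cores are needed
    budget_ms: float = 0.0      # CPU time wanted per period on each core (the rate)

    def utilization(self):
        """Fraction of each requested core consumed per period."""
        if self.period_ms == 0:
            return 1.0          # no period given: treat as wanting the whole core
        return self.budget_ms / self.period_ms


# "I need three cores, all at the same time, every 20 milliseconds."
# The 5 ms budget is an assumption for the example.
req = ProcessorRequirement(cores=3, gang=True, period_ms=20, budget_ms=5)
print(req.utilization())  # 0.25
```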
That is somewhat similar to scheduler hints in Solaris, although much more explicit. Scheduler hints in Solaris let you say, for example, "I'm currently in a critical section; please don't preempt me now." The scheduler can still decide what it wants to do, but it takes the hints into account to make a more informed decision. My processor requirements are more explicit and more expressive than that. The idea is that applications submit these requirements quite infrequently, or at application start. In my system they are submitted in plain text; it's not really a language, more of a specification IDL, like a manifest. I do realize that applications go through different phases, especially in this more interactive scenario. Some phases might be parallel, others sequential and single-threaded, and these phases might have different processor requirements. So I allow these phases to be specified directly in the processor requirement specification. For example, a music library application that does parallel audio re-encoding could have two phases: one in which it mostly runs the graphical user interface, which might be single-threaded, and one in which it does the parallel audio re-encoding using multiple threads. In the specification you can then say "I need one core in phase A and three cores in phase B." I also added a new system call to switch between these phases, so you can say "I'm switching to phase A now" or "I'm switching to phase B now."

The second concept is that the operating system scheduler notifies applications of the current allocation it has allotted to them.
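Returning to the per-phase specification and the phase-switch call described just above, they can be sketched as follows. The one-line-per-phase text format, `parse_manifest`, and `PhasedApp.switch_phase` are all hypothetical stand-ins: the talk says only that requirements are submitted as plain text and that a new system call switches phases. The `gang` flag on phase B is added purely for illustration.

```python
def parse_manifest(text):
    """Parse a hypothetical per-phase requirement manifest.

    Each non-empty line looks like 'phase <name>: cores=<n> [gang]'.
    Returns {phase_name: {"cores": n, "gang": bool}}.
    """
    phases = {}
    for line in text.strip().splitlines():
        if not line.strip():
            continue                          # skip blank lines
        head, _, body = line.partition(":")
        name = head.split()[1]                # 'phase A' -> 'A'
        fields = body.split()
        cores = int(fields[0].split("=")[1])  # 'cores=3' -> 3
        phases[name] = {"cores": cores, "gang": "gang" in fields[1:]}
    return phases


class PhasedApp:
    """Stand-in for an application that switches phases via a system call."""

    def __init__(self, manifest):
        self.phases = parse_manifest(manifest)
        self.current = None

    def switch_phase(self, name):
        # In the talk this is a new system call; here it only records the
        # phase and returns the requirement the scheduler would now apply.
        if name not in self.phases:
            raise KeyError(f"unknown phase {name!r}")
        self.current = name
        return self.phases[name]


MANIFEST = """
phase A: cores=1
phase B: cores=3 gang
"""

app = PhasedApp(MANIFEST)
print(app.switch_phase("A"))  # {'cores': 1, 'gang': False}
print(app.switch_phase("B"))  # {'cores': 3, 'gang': True}
```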
This is very similar to earlier work: scheduler activations, and also work done in the Psyche and K42 operating systems, so I'm applying those ideas in my system. The operating system can say something like "you now have these two cores every ten milliseconds" as other applications go through different phases or new applications start up. Finally, since we're in an interactive setting, we want to be responsive -- yes?

>>: The OS says these two cores, ten seconds of delay. How much -- you'll probably have [inaudible].

>> Simon Peter: When the operating system tells you this, you have them. The operating system has the right to change this at any time. In my system, it does so whenever an application goes into a different phase, another application starts, or an application exits. So there are well-defined phase boundaries at which the operating system can change its mind and give you a new allocation.

>>: But can't [inaudible].

>> Simon Peter: Yes. If it tells you that you have the cores, then you do have the cores.

>>: Even if a priori operating system shows up, have the cores, you have two minutes you have two minutes.

>> Simon Peter: It will tell you things like "you have it every ten milliseconds," but it won't tell you that you're guaranteed to have it for the next two minutes or anything like that. So it can still change its mind.

Since we're in this interactive scenario, we need quite small scheduling overhead; we certainly can't take very long to change our minds. And this is a big problem, because computing these processor allocations while taking the topology of the whole architecture into account is quite complex. It's essentially scheduling on a heterogeneous platform: you have different latencies for communicating between different cores, different cache affinities, and different memory affinities.
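On the runtime side, reacting to such a notification might look like the hypothetical upcall handler below, loosely in the spirit of scheduler activations. `Runtime` and `on_allocation_change` are illustrative names, not Barrelfish or OpenMP APIs; the point is only that the runtime resizes its worker pool to the cores it was granted instead of oversubscribing them.

```python
class Runtime:
    """Hypothetical parallel runtime that reacts to OS allocation upcalls."""

    def __init__(self, max_workers):
        self.max_workers = max_workers  # most workers the program can use
        self.active_workers = 0
        self.allocated_cores = ()
        self.period_ms = None

    def on_allocation_change(self, cores, period_ms):
        """Upcall from the OS: 'you now have these cores every period_ms'.

        The runtime runs one worker per granted core, capped by how many
        workers the program can use, so it never oversubscribes its cores.
        """
        self.allocated_cores = tuple(cores)
        self.period_ms = period_ms
        self.active_workers = min(self.max_workers, len(self.allocated_cores))
        return self.active_workers


rt = Runtime(max_workers=8)
print(rt.on_allocation_change([2, 3], period_ms=10))  # 2
```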
This problem is very hard to solve, and with multiple applications it takes quite a while; in the evaluation section of the talk I'll show you how long it took to solve for one example problem. Also, if we do things like gang scheduling, we have to synchronize dispatch over several cores. If we do that every time slice, and interactive time slices are typically on the order of one to tens of milliseconds, it might not scale to a lot of cores and would slow down the system. To tackle that problem, I break up the scheduling problem into multiple time scales. First, there is the long-term computation of processor allocations, which I expect to be on the order of minutes: the allocations I provide are expected to stay relatively stable for at least tens of seconds or minutes. Then there's the medium term: the phase changes that applications go through with their system call, on the order of, say, deciseconds. I would expect that it doesn't make much sense to change resource requirements much faster than that, but you want to stay fairly interactive with users, so you might still switch between phases quite quickly. Finally, there's short-term per-core scheduling: the regular scheduler that runs in the kernel and time-slices the applications, on the order of milliseconds.

Okay. So how is all of this implemented in the Barrelfish operating system? I realize it with a combination of techniques at different time granularities. First of all, there is a centralized placement controller; that's the component on the right-hand side. I should also explain how this schematic is laid out: the cores are on the X axis, and there are as many of them as there are cores in the system.
Some components run in kernel space and some in user space. The placement controller is responsible for long-term scheduling. It receives the processor requirement specifications from the different applications and produces schedules for the different combinations of phases these applications can go through. It makes sense to centralize this, because it's a computation we can afford to spend some time on, and it needs global knowledge of what's going on in the system to place the different applications onto the cores. The placement controller then downloads the produced schedules to a network of planners, one running on each core. The planners first just store that information locally, so that we don't have to communicate with the placement controller when applications go through phase changes. Then there is the system call that lets an application change phase. When that happens, one thread of the application issues the system call, which communicates with the planner on the local core to change the phase. That planner has an ID for the phase, which it sends to the other planners involved in scheduling this application. So just one message is sent, essentially one cache line containing the ID of the new phase. The other planners only have to look up that ID in their local database of schedules and activate the corresponding schedule, which they do by downloading a number of scheduling parameters for that phase into the kernel scheduler. I have evaluated how long such a phase change takes in the worst case.
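The division of labor between the placement controller and the per-core planners described above can be sketched as follows. All names and the scheduling-parameter dictionaries are hypothetical. The sketch shows the property emphasized above: schedules for every phase are pushed to the planners ahead of time, so a phase change only has to deliver a phase ID to each involved planner, which then activates its locally stored schedule. For simplicity, `change_phase` delivers the ID directly rather than routing it through the requesting core's local planner.

```python
class Planner:
    """Per-core planner holding precomputed schedules, keyed by phase ID."""

    def __init__(self, core):
        self.core = core
        self.schedules = {}   # phase_id -> kernel scheduling parameters
        self.active = None

    def store(self, phase_id, params):
        self.schedules[phase_id] = params

    def activate(self, phase_id):
        # Local lookup only: no communication with the placement controller.
        self.active = self.schedules.get(phase_id)
        return self.active


def distribute(plans, planners):
    """Placement controller side: push each core's schedule for every phase."""
    for phase_id, per_core in plans.items():
        for core, params in per_core.items():
            planners[core].store(phase_id, params)


def change_phase(phase_id, involved_cores, planners):
    """Deliver only the phase ID to each involved planner; count the messages."""
    messages = 0
    for core in involved_cores:
        planners[core].activate(phase_id)
        messages += 1
    return messages


planners = {core: Planner(core) for core in range(4)}
plans = {
    "A": {0: {"period_ms": 10, "budget_ms": 10}},
    "B": {c: {"period_ms": 10, "budget_ms": 5} for c in range(4)},
}
distribute(plans, planners)
print(change_phase("B", range(4), planners))  # 4
print(planners[3].active)                     # {'period_ms': 10, 'budget_ms': 5}
```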
I did a phase change that altered every single scheduling parameter across all cores of a 48-core machine, the biggest machine I had available when I did this experiment, and it took 37 microseconds. Since we're talking about phases lasting on the order of deciseconds, this is quite a low overhead. Finally, just as another number, the context switch duration in the system is four microseconds, which is the other number I have on the slide.

Doing scheduling at multiple time scales allows me to do something pretty cool, which I call phase-locked scheduling. It's a technique that lets me do synchronized dispatch, like gang scheduling, over a large number of cores without excessive communication between them, which would not scale well at very fine time granularities. The way I do this is to use a deterministic scheduler running in kernel space on each core. In my case I use the RBED scheduler, presented by Scott Brandt in 2003, which is a hard real-time scheduler; I'm not using it for its real-time properties, but for the property of being deterministic. Then I synchronize the core-local timers that drive the scheduler on each core. I can then download a fixed schedule to all of these deterministic schedulers and know that they will dispatch certain threads at certain times without any further communication. So I don't have to communicate with all the cores involved in, say, gang scheduling an application every time I want to dispatch or remove its threads on particular cores. I have a slide to show you how this works. This is an actual trace I took of the CPU-bound and barrier applications running on the 16-core system.
In the first case, we're doing regular best-effort scheduling; this also shows the benefit of gang scheduling these applications. I should probably explain the graph: the red bars are the barrier application and the blue bars are the CPU-bound application; time is on the X axis and the cores are on the Y axis. In the best-effort case, the barrier application can only make progress in the small time windows when all of its threads happen to execute together. Then you can gang schedule. In the classical case, you would have to synchronize with every core involved in the schedule every time.

>>: What's the application that makes just one core?

>> Simon Peter: This is where gang scheduling gets harder, and it's why computing an optimal gang schedule takes a long time: it's essentially a bin-packing problem. You have this other application running in the system; it might also be gang scheduled, or, if it runs on one core, there's no point in gang scheduling it. You now have to figure out how to fill the two-dimensional matrix of time and cores optimally with the gang and this other application. Either you leave a lot of holes, in which case your schedule is not very good, or you actually try to bin-pack and fit everything in. So it's quite hard to compute an optimal gang schedule, which is why long-term scheduling in the placement controller takes quite a long time. And that's why it's important to break up the scheduling problem rather than solving it ad hoc whenever the workload changes.

Okay. In the phase-locked case, I synchronize the core-local clocks once. That needs some communication. You can either use a readily available protocol like NTP to synchronize the clocks, or use a reference clock; in my system I used a reference clock.
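The bin-packing flavor of gang scheduling mentioned in the answer above can be illustrated with a first-fit-decreasing heuristic that fills the time-by-cores matrix one time slot at a time. This is a generic heuristic chosen for illustration, not the placement controller's algorithm; it assigns cores contiguously, ignores topology, and is not guaranteed to find an optimal packing.

```python
def pack_gangs(gangs, num_cores):
    """First-fit-decreasing packing of gangs into time slots.

    `gangs` maps an application name to the number of cores it needs
    simultaneously. Each slot is one row of the time x cores matrix,
    stored as a list of (app, core) pairs.
    """
    slots = []   # per slot: list of (app, core) assignments
    free = []    # per slot: number of cores still unused
    for app, width in sorted(gangs.items(), key=lambda kv: -kv[1]):  # widest first
        if width > num_cores:
            raise ValueError(f"{app} needs {width} cores, only {num_cores} exist")
        for i, avail in enumerate(free):
            if avail >= width:                        # first slot with room
                start = num_cores - avail             # next unused core in slot
                slots[i].extend((app, start + k) for k in range(width))
                free[i] -= width
                break
        else:                                         # no slot fits: open a new one
            slots.append([(app, k) for k in range(width)])
            free.append(num_cores - width)
    return slots


slots = pack_gangs({"barrier": 16, "cpu": 8, "single": 1}, num_cores=16)
print(len(slots))      # 2
print(slots[1][-1])    # ('single', 8)
```

In the example, the single-core application fills one of the eight cores left idle by the CPU-bound gang, but seven cores in that slot still stay empty, which is the kind of hole the answer above refers to.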
There's the PIT in the system, essentially, which I can use to synchronize the core-local APIC clocks. Then the cores agree on a future start time for the gangs, which also needs a little bit of communication, and they agree on the gang period. Finally, the schedule just runs itself: each core has agreed on and holds its deterministic schedule, and we can go ahead and gang schedule. We only might need to communicate again at some point in the future if we need to resynchronize the clocks. I've actually evaluated whether that happens on x86 machines, and it turns out that it doesn't. It seems that the APIC clocks are all driven by the same clock. I ran a long-term experiment on several machines where I started the machine, synchronized the clocks, and checked for drift over a day or two, and there was no measurable drift on these machines. So it seems the clocks just have an offset, based on when each core actually starts up, but they're probably all driven by the same clock. Okay. So let's go back to the original example and see whether end-to-end scheduling actually benefits us here. These are the same two applications, just running on Barrelfish instead of Linux, under otherwise identical conditions. The barrier application slows down a little bit faster, and that's due to the barrier implementation in the OpenMP library that I used within Barrelfish. I couldn't use the Intel OpenMP library, because it's closed source and I wasn't able to port it to Barrelfish. We also see a little more noise, mainly because Barrelfish has more background activity: there are monitors and planners running in the background, whereas Linux is typically almost completely idle when you don't do anything.
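The drift experiment can be expressed as a least-squares fit of the measured clock offset against time. The following Python sketch assumes hypothetical (reference time, local minus reference) sample pairs, for example taken against the PIT. A fitted drift of essentially zero corresponds to the constant-offset behavior described above, in which case translating the agreed start time only needs the intercept.

```python
def estimate_offset_and_drift(samples):
    """Least-squares fit of offset(t) = intercept + drift * t.

    samples: list of (reference_time_s, local_minus_reference_s) pairs.
    Returns (intercept_s, drift), where drift is in seconds per second.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_o = sum(o for _, o in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("samples must be taken at distinct times")
    cov = sum((t - mean_t) * (o - mean_o) for t, o in samples)
    drift = cov / var_t
    return mean_o - drift * mean_t, drift


def local_start_time(global_start_s, intercept_s, drift):
    """Translate an agreed global start time into this core's local clock."""
    return global_start_s + intercept_s + drift * global_start_s


# Hypothetical measurements: a constant 2 ms offset over one day (no drift).
day = [(0.0, 0.002), (43_200.0, 0.002), (86_400.0, 0.002)]
intercept, drift = estimate_offset_and_drift(day)
```

If a platform did show non-zero drift, the fitted slope would also indicate how often the cores need to resynchronize.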
The pitfall is still there; that's the important bit. Now if I activate end-to-end scheduling and tell the operating system that the barrier-based application would benefit from gang scheduling, it will actually do so, and we can see that the pitfall is gone. The barrier application keeps making progress due to gang scheduling. We still see a little bit of degradation due to the barrier. More time is taken away from the CPU-bound application, so it slows down faster. That's what you would expect, because the time we're now allotting to the barrier application has to come from somewhere. We're now much fairer to the parallel barrier application in this workload mix. Okay. So far I've only talked about cores. But multicore is not just about cores. There are other things like memory hierarchies, different cache interconnects, cache sizes, and so on, and that's another main outcome of the Barrelfish work: system topology is very important. How far apart are different cores on the memory interconnect? Barriers are implemented by probing shared memory, and I did this measurement on the 16-core AMD Opteron; you see the schematic here. It takes about 75 cycles to do a memory probe into the cache of a core on the same die, and about twice as much to go to a core on a separate die within the system. So the application's performance in executing these barriers depends very much on where the operating system places its threads. If it happens to place the threads close to each other, the application will make more progress; if it places them on cores that are further apart, we will see less progress. Other multicore platforms look different again, so we have to be able to accommodate all of them in order to make good progress with the application. We can actually see this in the original example.
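The effect of placement on barrier cost can be sketched with a tiny topology model. The Python below assumes 16 cores split into four dies of four cores each, uses the roughly 75-cycle same-die probe cost from the talk, and takes 150 cycles as an illustrative stand-in for "about twice as much". It brute-forces the group of cores whose worst pairwise probe latency is lowest; real topologies, and whatever placement logic the system actually uses, are more involved.

```python
from itertools import combinations

SAME_DIE_CYCLES = 75    # same-die probe cost quoted in the talk
CROSS_DIE_CYCLES = 150  # "about twice as much"; illustrative value
CORES_PER_DIE = 4       # assumed layout of the 16-core machine


def die_of(core):
    return core // CORES_PER_DIE


def probe_cost(a, b):
    """Approximate cycles for a memory probe between two cores' caches."""
    return SAME_DIE_CYCLES if die_of(a) == die_of(b) else CROSS_DIE_CYCLES


def best_placement(free_cores, k):
    """Choose k free cores minimizing the worst pairwise probe cost
    (a barrier waits for its slowest participant), then the total cost.
    Exhaustive search: fine for 16 cores, too slow for large machines."""
    def cost(group):
        pairs = [probe_cost(a, b) for a, b in combinations(group, 2)]
        return (max(pairs, default=0), sum(pairs))
    return min(combinations(free_cores, k), key=cost)
```

For example, `best_placement(range(16), 2)` returns `(0, 1)`, two cores on the same die, whereas a pair such as `(0, 4)` would pay the cross-die cost on every barrier probe.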
This is back on the Linux system. You can see it in the big error bars for the barrier-bound application. It's most pronounced in the two-thread case: we only have two threads, and over the many runs of this benchmark the operating system made arbitrary placement decisions. In many cases it happened to place the threads on two cores that were close together, and we had very good progress. If it happened to place them on two cores that were further apart, we got essentially half the progress in the barrier application. Now, how do we incorporate that? There is a component inside Barrelfish called the system knowledge base, which is mainly the work of another Ph.D. student in the group, Adrian Schüpbach. The system knowledge base contains abstract knowledge about the machine. It knows, for example, what the cache hierarchy is and what the topology between different cores is. It gains this information either via runtime measurements or through other information sources, like ACPI or CPUID. So it's one abstract store of all this information. What I did is interface my scheduler with this component, so the system knowledge base is now informed by my scheduler about the resource allocations it makes and therefore knows about utilization in the machine. I should also say that the system knowledge base is a constraint solver. You can query it using constraints and cost functions, which lets us ask queries like "I need at least three cores that share a cache; more are better," instead of just saying "I want these cores." I worked with Adrian and with Jana Giceva, another Ph.D. student in the group, to port a parallel column store, essentially a key-value store, to Barrelfish. We ran a real workload on this application.
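The kind of query mentioned above, "at least three cores that share a cache; more are better", can be imitated in plain Python for illustration. The real system knowledge base answers such queries with a constraint solver; the function below is a hypothetical stand-in, not its query language. It assumes the cache topology is given as a map from cache name to the set of cores sharing it, plus the set of cores the scheduler has already allocated.

```python
def cores_sharing_cache(cache_domains, allocated, min_cores=3):
    """Return the largest set of free cores behind a single shared cache.

    cache_domains: dict mapping a cache name to the set of cores sharing it.
    allocated: cores the scheduler has already handed out (utilization info).
    Returns a sorted list, or None if no cache has at least min_cores free.
    """
    best = None
    for cache in sorted(cache_domains):
        free = cache_domains[cache] - allocated
        if len(free) >= min_cores and (best is None or len(free) > len(best)):
            best = free
    return sorted(best) if best is not None else None


# Hypothetical topology: one shared L3 cache per die of four cores.
domains = {"L3.die0": {0, 1, 2, 3}, "L3.die1": {4, 5, 6, 7}}
```

Passing the scheduler's current allocations in as `allocated` is what lets the answer change with machine utilization, mirroring how the scheduler keeps the knowledge base informed.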
It's all the European flight bookings, coming from a company called Amadeus, which handles them. Essentially they're interested in high query throughput to book flights. We ran this column store together with a background stressor application, again on the 16-core AMD system. We specified six constraints for the application, covering things like cache and memory size, sharing of different caches, and NUMA locality. We asked the system to maximize CPU time, and after we submitted this constraint query it took the resource allocator several seconds to produce a final schedule; this is just to give you an idea of how long it can take. It ended up partitioning the machine between the column store and the background stressor application. On this graph down here we can see the result in terms of query throughput. Let's start with the middle bar, which is the naive way to run the two applications: just start the background stressor and then start the column store. In that case the column store tries to acquire all the cores in the system, so it overlaps with the background stressor application, and the query throughput is down at a little more than 3,000 queries per second. There's also the option of manually placing the two applications to partition the machine. Then we get a throughput of 5,000 -- 500 queries per second. And finally, on Barrelfish, we are really close to the optimal way of placing these two applications, so Barrelfish made the right decision in partitioning the machine between them. Finally, I'd also like to say a little more about my evaluation of phase-locked scheduling. After evaluating it, it turned out that it may not yet be important on the x86 platform.
When I just used synchronized scheduling, synchronizing every time slice over all the cores, there was no measurable slowdown up to 48 cores, which was the biggest machine I had available for this measurement. The reason is that both the core-local APIC timers and the interprocessor interrupts, which I used to keep the schedule synchronized over all the cores, generate a trap on the receiving core. And taking a trap takes around an order of magnitude longer on x86 than sending an IPI to a different core. So in this case it doesn't make sense to distribute the receipt of interrupts, which is essentially what phase-locked scheduling does: every core uses its core-local timer to generate the trap. However, it does use fewer resources, because I don't actually send interprocessor interrupts to the other cores. On x86 that doesn't make much of a difference, because x86 has a dedicated interrupt bus, so there's essentially no contention on that bus from multiple applications trying to send interrupts. But it does reduce contention on systems that don't have a dedicated interrupt bus. For example, the Intel Single-chip Cloud Computer, which is another architecture I investigated Barrelfish on, uses the same mesh interconnect for the messages sent between cores, for cache lines, and for interrupts. So if there's a lot of traffic on that interconnect, interrupts could actually be delayed on it, and then the schedule is not as nicely synchronized. So the insight here is that one should always investigate the costs of inter-core sends and receives individually. That was something we hadn't thought through thoroughly within Barrelfish; we always assumed there was one cost associated with a message transmitted between two cores, and we didn't look so much at the receive cost versus the send cost.
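The send-versus-receive argument can be captured in a back-of-the-envelope cost model. This Python sketch is an assumption-laden illustration: it charges one trap per core per time slice in both designs, charges the coordinator one IPI send per other core in the synchronized design, and uses a trap cost ten times the send cost only because the talk says trapping is roughly an order of magnitude more expensive. The cycle counts are made up, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SliceCost:
    cpu_cycles: int         # cycles spent across all cores per time slice
    interconnect_msgs: int  # interrupt messages crossing the interconnect


def ipi_synchronized(n_cores, send_cycles, trap_cycles):
    """A coordinator takes its timer trap and sends an IPI to every other
    core; each of those cores then takes a trap to receive it."""
    return SliceCost(
        cpu_cycles=n_cores * trap_cycles + (n_cores - 1) * send_cycles,
        interconnect_msgs=n_cores - 1,
    )


def phase_locked(n_cores, trap_cycles):
    """Every core takes a trap from its own local timer; nothing is sent."""
    return SliceCost(cpu_cycles=n_cores * trap_cycles, interconnect_msgs=0)


SEND, TRAP = 100, 1_000  # illustrative cycle counts, not measurements
sync = ipi_synchronized(48, SEND, TRAP)
locked = phase_locked(48, TRAP)
saving = 1 - locked.cpu_cycles / sync.cpu_cycles
```

Under these assumed costs, 48 cores save just under 9% of the per-slice CPU cost, because the receive-side traps dominate in both designs. What phase locking removes completely is the 47 interrupt messages per slice, which matters on a shared mesh rather than on a dedicated interrupt bus.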
Obviously there's more future work here. One thing that I haven't evaluated so far is how expressive the operating system and runtime API should be. So far I've only looked at these exact processor requirements. If one were to deploy this in a real system, you would probably want to do this expressiveness analysis first, rather than give the user a very expressive interface that essentially does everything, including the constraint solving. That interface is nice because it allows you to experiment a lot with different things, but you probably want to figure out what an adequate interface for applications is. One thing I looked at a little and found interesting was speedup functions over the number of cores, which applications might provide to give the operating system an idea of how well they scale with the workload they're running; they could provide these based on estimates. There's actually related work here: a system called Parkour, presented two years ago at HotPar by the University of California, San Diego, which lets you do exactly these estimates. You give it an OpenMP workload, in this case, and it figures out how well that workload will scale. You could feed that information into the scheduler, and the scheduler could automatically figure out how many cores to allocate to a particular application relative to other applications that might scale better or worse. Another thing I haven't looked at so far is I/O scheduling. So far everything was done mainly within memory, and I just looked at cores and caches. There's still some work to be done on devices and interrupts, for example: what if we have a device driver that wants to be scheduled? Finally, I also think heterogeneous multicore architectures will be important in the future. Things like programmable accelerators are becoming quite pervasive.
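Speedup functions supplied by applications could drive core allocation directly. The sketch below assumes each application reports a hypothetical speedup function (Amdahl's law is used here purely as an example; a tool like Parkour would supply estimates instead) and hands out cores one at a time to whichever application gains the most from one more core. This greedy marginal-gain rule maximizes the total speedup when every speedup function is concave, as Amdahl's law is; it is not presented as what Barrelfish implements.

```python
def amdahl(parallel_fraction):
    """Example speedup function: Amdahl's law, with no cores giving no progress."""
    def speedup(n):
        if n == 0:
            return 0.0
        return 1.0 / ((1 - parallel_fraction) + parallel_fraction / n)
    return speedup


def allocate_cores(total_cores, speedups):
    """Greedily give each core to the application with the largest marginal gain.

    speedups maps an application name to its speedup function s(n).
    Returns a dict mapping each application name to its core count.
    """
    alloc = {name: 0 for name in speedups}
    for _ in range(total_cores):
        winner = max(
            alloc,
            key=lambda name: speedups[name](alloc[name] + 1) - speedups[name](alloc[name]),
        )
        alloc[winner] += 1
    return alloc


alloc = allocate_cores(8, {"scales_well": amdahl(0.95), "scales_poorly": amdahl(0.5)})
```

With eight cores, the application with a 95% parallel fraction receives seven and the one with 50% receives one, since a second core would add only a third of a unit of speedup to the poorly scaling application.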
Like GPUs, but also network processors, and the question is what the required abstractions are here: are the abstractions I've presented so far adequate, or do I need to integrate more? Okay. I'd also like to say a little about my Barrelfish-related achievements to date. Throughout my work I authored and co-authored several papers at top-ranking conferences and workshops, and in journals. With Barrelfish I participated in building a system from scratch. I'm the proud owner of the first check-in to the code base, which dates back to October 2007. Barrelfish is a very large system. It contains around 150,000 lines of new code; if you count all the ported libraries and applications, it amounts to around half a million lines of code. It had more than 20,000 downloads of the source code over the past year and generated quite significant media interest. As a Ph.D. student, I was also involved in supervising several bachelor's and master's students; the most important were one who implemented a virtual machine monitor for Barrelfish together with me, and another who implemented the OpenMP runtime system that I then used for my experiments on Barrelfish. I also had two bigger collaborations. One was with Intel, to evaluate Barrelfish on the Single-chip Cloud Computer; that is how I gained most of my knowledge there. They also provided some other test systems for the group to do experiments on. The other was with the Barcelona Supercomputing Center, who were interested in running a parallel runtime based on C++; we didn't have a C++ compiler and runtime system for Barrelfish at the time, so I collaborated with them to port one to Barrelfish. So to conclude, multicore poses fundamental problems for operating system design, such as parallel applications and the fast pace of architectural change.
Commodity operating systems need to be redesigned to cope with these problems. I've focused on processor scheduling, where I found that combining hardware knowledge with resource negotiation and parallel scheduling techniques can enable parallel applications in an interactive scenario. I'd also like to say that some problems only become evident when dealing with a real system. For example, the insight that one should investigate message send and receive costs separately, because they are quite different on a multicore machine. At the very end I'd like to say a little about myself and the projects I've worked on besides Barrelfish. My two main areas are operating systems and distributed and networked systems. Other projects I have worked on include a replication framework for version 4 of the Network File System, which was my master's thesis. It's essentially a framework that allows you to specify different replication strategies, and it also provides writable replication for the Network File System, which was not available at the time. I completed two internships during my Ph.D., both at Microsoft Research. The first was in Cambridge, working with Rebecca Isaacs and Paul Barham, where we applied constraint solving techniques to the problem of scheduling applications on heterogeneous clusters; in our case these were DryadLINQ applications. I did another internship with [inaudible], working on the Fay cluster tracing system, which was published at SOSP last year, in 2011. Fay is a system that allows you to trace through all the layers of the software stack on a number of machines, all the way down to the operating system kernel, and to do so safely and dynamically. Finally, just before starting my work on Barrelfish, while I was already in the Ph.D.
program, I did a study of timer usage within operating systems that was published at EuroSys in 2008, where I found that many programmers set their timeouts to quite arbitrary values without considering the underlying reason for setting the timer in the first place and what the timer is being used for. In the paper I provided several methodologies to address that problem. Finally, my preferred approach, especially to systems research, is to find a sufficiently challenging problem, understand the whole space, and build a real system. Thank you. [applause] Questions? >>: Can you talk more about the constraint system used by the scheduler? >> Simon Peter: Yes. It's based on the ECLiPSe Prolog interpreter, not to be confused with the Eclipse integrated development environment. It is essentially Prolog with added constraints. We've ported it to Barrelfish; it's currently a single-threaded, centralized component. >>: What kind of constraints does it solve in this case? >> Simon Peter: Well, there are different solvers inside the system that you can use to solve the constraints. You can specify constraints like this value should be smaller than another value in the system, or smaller than or bigger than a constant, constraints like that. I don't know if you want to know -- >>: I'll follow up later. >> Simon Peter: Okay. Yes? >>: In your experiments, were all the applications providing you with scheduling information? >> Simon Peter: In the experiments, yes. The system itself does not rely on getting information from every single application. Applications can support the scheduling by providing information, but if you don't provide any, the operating system scheduler will essentially resort to best-effort scheduling for you. >>: How well does that work out if I have a bunch of legacy applications [inaudible]? >> Simon Peter: I have not evaluated that thoroughly.
But definitely the background services running in the system, which do not provide any information, did not impact the system very much. You could see that there was more noise, so there is some impact, but for the purpose of running the experiments it was still okay. >> Andrew Baumann: Other questions? >>: Earlier you mentioned trying to decrease latency, or increase responsiveness, I guess, but the experiment you showed us was about throughput. >> Simon Peter: Yes, that is true. I'm sorry, I don't have an experiment on responsiveness. The idea I wanted to get across is that we need to be able to make scheduling decisions quite quickly, and if we do the constraint solving, for example, every time the workload changes, that is clearly not responsive enough. So for the purposes of this talk it's more about that. >>: Can the application give you a hint that makes your constraint solving run longer than you expect? >> Simon Peter: Yes, that's actually both a good and a bad point about constraint solving. You don't know up front how long the solving might take. However, the good thing about constraint solving is that it searches the problem space, so it will give you a quite good solution very quickly and can then go on solving further. That's actually something I'm planning to use in the system as well: the placement controller will go off and do constraint solving, and it will start downloading scheduling information as it finds schedules, so we can start using them while it goes on solving some more. The interesting thing is that this seems to trade off quite nicely with the different lengths of phases. If you have an application with quite short phases, we'll go through these phases quite quickly.
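The anytime behavior described in this answer, publishing a good schedule early and improving it while time allows, can be sketched as a Python generator. The candidate schedules and the cost function are hypothetical placeholders for what a constraint solver would explore, and the time budget stands in for the phase length; a caller would install each yielded schedule as it arrives.

```python
import time


def anytime_search(candidates, cost, budget_s, clock=time.monotonic):
    """Yield (cost, schedule) each time a better schedule is found.

    Stops when the candidates run out or the time budget expires, so a
    short phase gets a quick, possibly suboptimal answer and a long phase
    gets more search time.
    """
    deadline = clock() + budget_s
    best_cost = None
    for candidate in candidates:
        if clock() >= deadline:
            return
        c = cost(candidate)
        if best_cost is None or c < best_cost:
            best_cost = c
            yield c, candidate


def dies_used(cores, cores_per_die=4):
    """Hypothetical cost: fewer dies spanned means cheaper synchronization."""
    return len({core // cores_per_die for core in cores})


candidates = [(0, 4, 8, 12), (0, 1, 4, 5), (4, 5, 6, 7)]
for c, schedule in anytime_search(candidates, dies_used, budget_s=1.0):
    # A real placement controller would download this schedule to the cores.
    print(f"install schedule {schedule} (cost {c})")
```

The `clock` parameter is injectable so the budget logic can be exercised deterministically; with an ample budget all three improvements are yielded in turn, and with a tight one only the first, cheapest-to-find schedule is installed.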
In that case we might not have the optimal schedule available at that point. However, since the phases are quite short, it might not matter too much to have the optimal phase allocation. And if you have an application with quite long phases, it allows you more solving time to come up with a better schedule for these longer phases, where the decisions will also have more impact. Any more questions? >>: This is a general question about the scheduler. Are you suggesting that we should integrate gang scheduling in this type of framework into existing systems? Should I put a gang scheduler in one of my phases? Is that going to solve -- >> Simon Peter: You shouldn't just put a gang scheduler in and expect that to solve the problem. I'm just saying that the problem will definitely become much harder because of the parallel applications that you potentially want to run in your system. They do have scheduling requirements like gang scheduling, that is, synchronized dispatch. >>: Doesn't having [inaudible] isn't that how you get scheduler [inaudible] that's different scheduling [inaudible]. >> Simon Peter: That depends a little. It depends on how much you really care about the best-effort applications. I mean, if you don't care so much about the progress of these applications, since they're just best effort anyway, it probably doesn't matter so much for them. You can just try and -- >>: [inaudible]. >> Simon Peter: That depends, right now, I mean, that depends a little on what the user wants to do with it. If he's actually using Word at this point in time, it's important that you give him the service at that point in time. But it's still a best-effort application. I'm not sure what you're trying to get at right now. Okay. >> Andrew Baumann: Any other questions? Thanks. [applause]