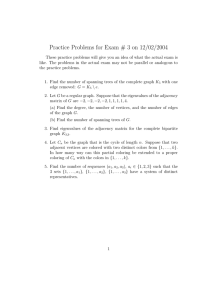

>> Yuval Peres: Hello, everyone. We're very happy to welcome Sukhada Fadnavis, who has visited here before, but now she'll tell us about the generalized birthday problem.

>> Sukhada Fadnavis: Thank you, Yuval, for the invitation. I'm going to talk about a nice generalization of the birthday problem today. So the classical birthday problem asks for the minimum number N of students that we need to randomly sample so that, with probability at least one half, at least two of them have a matching birthday. And the well-known answer is 23, which seems paradoxically small. But we can see that it's easy to compute. The probability of at least two of N students having a matching birthday would be 1 minus the probability that they all have distinct birthdays, that is, 1 minus 365 choose N, for choosing the birthdays for the N students, times N factorial, times 1 over 365 to the N, since 1 over 365 is the probability of each birthday. But of course we are assuming that all birthdays are equally likely. That is not the case. Statistics in the United States show that there are more children born in the summer months than in the winter. Or if you [inaudible] to a particular year, there are even fewer births during the weekends than during the weekdays. So certainly the distribution is not uniform. And of course you want to address this question in general, not just with 365 birthdays. So here is Q, the notation for the number of birth periods, which will be used everywhere in the talk. So if it's birth months, Q is 12. For seasons it's 4. And so on. So if you really want the birthday demonstration to succeed in class, it's crucial that we have probability at least one half of matching birthdays. And the initial calculation that we did, assuming the uniform distribution on the birthdays, is not sufficient at all unless we somehow prove that the uniform distribution is indeed the worst distribution. So let us set up some notation here. We have Q birthdays. And there's a distribution on the birthdays. 
Birthday I occurs with probability P sub-I. And let P sub-N of P1 up to PQ denote the probability that all N students have distinct birthdays. Okay. So we are concerned with the probability distribution that maximizes this probability. And assuming that worst-case distribution, we should find N so that we can be sure that, even if we don't know the distribution of birthdays, taking 23 students actually gives us probability at least .5 of matching birthdays. It turns out that we were safe: the uniform distribution is indeed the one that maximizes the probability of distinct birthdays. So we can write down an expression here. P sub-N of P1 up to PQ is just the sum over N-element subsets of 1 up to Q of the product of the probabilities of those birthdays occurring, times N factorial for distributing the birthdays among the N people. For example, if N is 2 and we have 3 birthdays occurring with probabilities P1, P2, P3, then P sub-2 of P1, P2, P3 is two times P1P2 plus P2P3 plus P3P1, for the six ways of distributing the birthdays. And in general this expression is symmetric. Also it's concave. And that would imply that it is actually maximized at the uniform distribution. So this proves that the initial calculation, assuming the uniform distribution, was a safe calculation.

Okay. So now we modify the problem a bit. Instead of just being interested in any two students having a matching birthday, we are now interested in two friends having a matching birthday. The classroom comes with an underlying friendship graph. So, for example, in this picture A and B are friends with each other, B and C are friends with each other, but A and C are not friends. So suppose the teacher says, okay, talk to your friends and compare your birthdays, see if there's a matching birthday. In the first situation the match would not show up, since A and C never talk to each other. But in the second situation it would show up, where I'm illustrating the nodes' birthdays. This model has been studied before. 
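
A minimal sketch of the distinct-birthday probability described above, written as N factorial times the sum over N-element subsets of the product of the birthday probabilities. The function names and the skewed 12-month distribution are illustrative assumptions, not taken from the talk.

```python
from math import factorial


def prob_all_distinct(p, n):
    """Probability that n independent draws from distribution p are all distinct.

    Computes n! * e_n(p), where e_n is the n-th elementary symmetric polynomial,
    using the standard one-variable-at-a-time recurrence for e_0..e_n.
    """
    e = [1.0] + [0.0] * n
    for x in p:
        # Go downwards so each variable is used at most once per subset.
        for k in range(n, 0, -1):
            e[k] += x * e[k - 1]
    return factorial(n) * e[n]


def min_class_size(p, target=0.5):
    """Smallest n with P(at least one matching pair) >= target."""
    n = 1
    while 1.0 - prob_all_distinct(p, n) < target:
        n += 1
    return n


uniform_days = [1.0 / 365] * 365
uniform_months = [1.0 / 12] * 12
# Hypothetical skewed distribution over 12 months (sums to 1).
skewed_months = [0.2, 0.2] + [0.06] * 10
```

With these definitions, `min_class_size(uniform_days)` recovers the classical threshold, and comparing `prob_all_distinct(uniform_months, 4)` with `prob_all_distinct(skewed_months, 4)` illustrates that the skewed distribution makes distinct birthdays less likely.
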
It can be seen as the birthday attack. The birthday attack can be seen as this model with the complete bipartite graph as the underlying graph. Or in statistical physics it is the antiferromagnetic Potts model at zero temperature, which is the model with an external field allowing this [inaudible] to have different properties.

So now, I'm going to translate all of this into graph language. So birthdays become colors now and the friendship structure becomes a friendship graph. So we have nodes for the students and an edge between two nodes if they are friends. And no two friends sharing the same birthday would mean that we have a proper coloring of the graph. So our interest is in getting proper colorings of the graph. And of course this question is interesting only if we allow the number of colors to be greater than or equal to the chromatic number of the graph, because otherwise we would definitely have a monochromatic edge. Okay. So this is the question we are going to be interested in today. We have a graph G. And we have Q colors. And a distribution P1 up to PQ on the colors. So color I occurs with probability P sub-I. And let P sub-G of P1 up to PQ denote the probability that, on randomly coloring each vertex independently with these colors, the result is a proper coloring of the graph. Okay. And the question that we are interested in answering is whether this probability is always maximized by the uniform distribution. Okay. If not, then when? The answer is no, it isn't. So here's an example due to Geir Helleloid. It's a simple example. The underlying graph is a 4-star: it's a node in the center connected to four outer nodes. And we have two colors. So Q is 2. So there are exactly two ways of getting a proper coloring. The center node gets one color and the outside nodes get the other color. And suppose that the two colors have probabilities P1 and 1 minus P1. 
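
The definition of P sub-G above can be checked by brute force on small graphs. The sketch below enumerates all colorings of the 4-star with two colors and scans a grid of values for P1; the vertex labeling, the grid resolution, and the function names are assumptions made for illustration.

```python
from itertools import product
from math import prod


def proper_coloring_prob(n_vertices, edges, p):
    """Brute-force P_G(p): total probability of colorings with no monochromatic edge."""
    q = len(p)
    total = 0.0
    for coloring in product(range(q), repeat=n_vertices):
        if all(coloring[u] != coloring[v] for u, v in edges):
            total += prod(p[c] for c in coloring)
    return total


# 4-star: vertex 0 is the center, vertices 1..4 are the outer nodes.
STAR4_EDGES = [(0, leaf) for leaf in range(1, 5)]


def star4_two_colors(p1):
    """P_G for the 4-star when the two colors have probabilities p1 and 1 - p1."""
    return proper_coloring_prob(5, STAR4_EDGES, [p1, 1.0 - p1])


def best_p1_on_grid(steps=1000):
    """Grid point in (0, 1) with the largest proper-coloring probability."""
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=star4_two_colors)
```

Because the function is symmetric under swapping the two colors, the grid search returns one of two mirror-image maximizers; both lie well away from P1 equal to one half.
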
The probability of getting a proper coloring would be P1 for the center times 1 minus P1 to the fourth for the outside, plus P2, that is 1 minus P1, for the center times P1 to the fourth for the outside. If you plot this against P1, this is the graph we see. So it's definitely not maximized at the uniform distribution. In fact, it's maximized in this picture at about .2 and .8. So we can ask two questions at this point. We know that when the underlying graph is the complete graph, that was the classical birthday problem, and in that case it is maximized by the uniform distribution. Is there a bigger class of graphs for which this property is true? And for all graphs, what can we do to save some intuition? So there is some intuition for general graphs that it should be maximized by the uniform distribution. For example, if you consider the expected number of monochromatic edges resulting from this, it is the number of edges times summation P sub-I squared, which is minimized if the distribution is uniform. So can we somehow rescue this to answer when the uniform distribution would be the best distribution?

So I'll answer the first question first, about the class of graphs. So there is a class of graphs for which this is true. And these are called claw-free graphs. I'm not saying that these are the only graphs, but this is a big class of graphs for which this is true. So that's our first result: if G is a claw-free graph, then the probability of getting a proper coloring is maximized when the distribution is uniform. And, in fact, this function P sub-G is a Schur-concave function on the set of distributions. So let me define what claw-free graphs are. A claw is a graph on four vertices; it's a 3-star. It's a graph on four nodes where the center is connected to the other three. And a claw-free graph is a graph which has no induced subgraph that is isomorphic to a claw. 
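
The definition of claw-free can be turned into a direct check: look for a vertex with three neighbors that are pairwise non-adjacent. This is a small sketch with a hypothetical helper name and an edge-list representation chosen for illustration; it is meant for small graphs, not efficiency.

```python
from itertools import combinations


def is_claw_free(n_vertices, edges):
    """Return True if the graph has no induced claw (induced 3-star)."""
    adj = {v: set() for v in range(n_vertices)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    for center in range(n_vertices):
        # An induced claw is a center with three pairwise non-adjacent neighbors.
        for a, b, c in combinations(sorted(adj[center]), 3):
            if b not in adj[a] and c not in adj[a] and c not in adj[b]:
                return False
    return True


CLAW = [(0, 1), (0, 2), (0, 3)]
FIVE_CYCLE = [(i, (i + 1) % 5) for i in range(5)]
K4 = list(combinations(range(4), 2))
FOUR_STAR = [(0, leaf) for leaf in range(1, 5)]
```

The complete graph on four vertices passes the check because any three neighbors of a vertex are adjacent to each other, so the claws it contains are not induced.
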
What that means is that there should be no subset of four vertices such that, if you keep only the edges among these four vertices, what remains is a claw. There should be no such subset. So, for example, the cycle over here is claw-free. It doesn't even contain a claw. Also, this complete graph on four vertices is a claw-free graph. It contains claws but they're not induced claws. It's claw-free. Claw-free graphs are very well studied. They have been characterized completely by Paul Seymour and Maria Chudnovsky. It's a series of seven or eight papers that runs to hundreds of pages. They've classified them into simple basic types. So two easy ones to describe would be line graphs or complements of triangle-free graphs. The other ones are a bit complicated to describe. And then they say that all claw-free graphs can be built by some basic operations on these basic types. Some algorithms have been well studied on claw-free graphs, for example finding maximum matchings or maximum independent sets and so on. And so this is the first class of graphs for which the strong perfect graph conjecture was proved.

Okay. So one question at this point is definitely: why claw-free graphs? From the property we are talking about, it's not obvious that it would be true for claw-free graphs. And it's also not the first class of graphs that one tries something on. So as I say, this was a conjecture of Persi Diaconis. And it arose from another conjecture from algebraic combinatorics, due to Stanley and Stembridge, called the poset chain conjecture. So let me say what the poset chain conjecture is. The poset chain conjecture states that if G is the incomparability graph of a 3 plus 1-free poset, then the Stanley chromatic polynomial of the graph is e-positive. Okay. Lots of words. Maybe not at all clear what they mean. So let me start defining some of them. So let's start with the Stanley chromatic polynomial. 
If G is a graph, and we let Q be greater than or equal to the chromatic number of the graph, then a Q-coloring of the graph would be a map from the set of vertices into 1 up to Q such that no two adjacent nodes are mapped to the same color. And then the Stanley chromatic polynomial of the graph G on Q variables X1 up to XQ would just be a sum over all these Q-colorings sigma of the product, over the vertices V, of X sub-sigma of V. So for example if H is just an edge and we want the Stanley chromatic polynomial on three variables, X1, X2, X3, that will be two times X1X2 plus X2X3 plus X3X1, for the six colorings. And the crucial observation of Persi Diaconis here was that if you evaluate this polynomial at P1 up to PQ, this is exactly the probability that we are concerned with, of getting a proper coloring of the graph.

>>: Which observation --

>> Sukhada Fadnavis: Sorry? Okay. He made the connection between this conjecture from outside the field. The incomparability graph of a poset P -- how did I reach that without talking about posets? First, a poset P is a set which has a binary relation, which I denote by less than or equal to here, such that it's reflexive, so A is always less than or equal to A. And it's anti-symmetric, so if A is less than or equal to B, B is less than or equal to C, then -- sorry. Anti-symmetric is: A less than or equal to B and B less than or equal to A would imply that A and B are the same. And it's transitive, which means A less than or equal to B and B less than or equal to C implies A less than or equal to C. So for example here is a poset on six elements. And the directed -- the directions [inaudible] say F is less than or equal to E and E is less than or equal to D, and by transitivity that would also imply F is less than or equal to D. I did not fill that in, but it's implied. And so in this chain here, F is less than or equal to everything; it is the minimal element of this poset. 
And B is greater than or equal to everything; it is the maximal element. Though there is no comparison between A and C, A and D, and A and E; we can [inaudible] there's no relation between them. So that brings us to the concept of the incomparability graph of the poset. So for every element of the poset we'll have a vertex in the graph. And then there's an edge between two elements if they're not comparable. So for example here only the pairs AC, AD, and AE were not comparable. All other pairs were comparable. So the resulting graph is this graph [inaudible]. A 3 plus 1 free poset is a poset where there is no subset of four elements -- sorry, is there a question?

>>: [inaudible].

>> Sukhada Fadnavis: Oh, a 3 plus 1 free poset is a poset where there is no subset of four elements such that three of them are mutually comparable but none of them is comparable to the fourth one. So the poset in our example is not 3 plus 1 free because there were these three elements, C, D, and -- yeah, C, D, and E, that were all mutually comparable but none of them were comparable to A. And that led to a claw in the incomparability graph, an induced claw. And, in fact, the converse is true. If your poset is 3 plus 1 free then its incomparability graph is a claw-free graph. So that's the second connection. And let me just say what the elementary symmetric polynomials are. So the Kth elementary symmetric polynomial on the variables X1 up to XQ would be a sum over all K-subsets of 1 up to Q of the product of X sub-I1 up to X sub-IK. So for example E1 of X1, X2, X3 is X1 plus X2 plus X3. E2 of X1, X2, X3 equals X1X2 plus X2X3 plus X3X1. And we say that a symmetric polynomial is e-positive if it can be written as a linear combination of products of elementary symmetric polynomials, all with positive coefficients. So for example, X1 squared plus 4X1X2 plus X2 squared, which is E1 squared plus 2E2, is e-positive. 
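
As a small numerical check of the definitions above, the sketch below evaluates the Stanley chromatic polynomial by brute force and compares it with elementary symmetric polynomials. The function names and the integer sample points are illustrative assumptions; evaluating at a few points is a sanity check, not a proof of a polynomial identity.

```python
from itertools import combinations, product
from math import prod


def elementary_symmetric(k, xs):
    """e_k(xs): sum over all k-element subsets of the product of the chosen variables."""
    return sum(prod(subset) for subset in combinations(xs, k))


def stanley_chromatic(n_vertices, edges, xs):
    """Sum over proper colorings sigma of the product of xs[sigma(v)] over vertices v."""
    q = len(xs)
    return sum(
        prod(xs[c] for c in coloring)
        for coloring in product(range(q), repeat=n_vertices)
        if all(coloring[u] != coloring[v] for u, v in edges)
    )


SINGLE_EDGE = [(0, 1)]
sample = (2, 3, 5)  # arbitrary integer evaluation point for exact arithmetic
```

For a single edge on three variables, `stanley_chromatic(2, SINGLE_EDGE, sample)` agrees with `2 * elementary_symmetric(2, sample)`, matching the "two times X1X2 plus X2X3 plus X3X1" expression from the talk.
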
But X1 squared plus X2 squared, which is E1 squared minus 2E2, is not e-positive because of the negative coefficient. And I should say that this representation is unique. These form a basis for symmetric polynomials. So the definition is a good definition. Okay. So coming back to the Stanley-Stembridge conjecture. Let G be the incomparability graph of a 3 plus 1 free poset, which tells us that it is claw-free. Then the Stanley chromatic polynomial chi sub-G is e-positive. And also chi sub-G evaluated at P1 up to PQ is the probability of getting a proper coloring of this graph from our process. So if this conjecture was true, we know that chi sub-G is e-positive. Now, each elementary symmetric polynomial is Schur-concave. And so are the products, and so are the sums if they're positive sums. And that tells us that this P sub-G is also a Schur-concave function on the set of distributions. It's symmetric and Schur-concave, hence it must be maximized at the uniform distribution. So trying to verify this conjecture is what led to the conjecture that we proved. So this would imply a result for a subset of claw-free graphs, those which are incomparability graphs of 3 plus 1 free posets. That's not the entire set of claw-free graphs. It would imply this result for the subset and then [inaudible] conjecture maybe it's true for all claw-free graphs. And indeed it is. And a nice byproduct of this is that the edge coloring problem is a good problem, in the sense that you consider the same problem except that we are coloring edges instead of coloring vertices. Now, we have Q colors. We color each edge independently with these Q colors. And we consider the probability of getting a good edge coloring, which means no two adjacent edges should get the same color. Then is this probability maximized by the uniform distribution? And it is. 
Because this problem is the same as considering the vertex coloring problem on the line graph of the graph, where for each edge you get a vertex and then you have an edge between two vertices if the corresponding edges were intersecting. And line graphs are always claw-free. So as an interesting byproduct we know that all our results are good for the edge coloring problem.

Okay. So let me sketch a proof of this result. It uses some interesting properties of claw-free graphs. All right. Our strategy is going to be that we start with the distribution P1 up to PQ and, without loss of generality, suppose P1 is not equal to P2. We'll show that it's better to make those two equal. And this would imply that the uniform distribution always gives us the best result, the highest probability of getting a proper coloring. Okay? So the way we go about it is we fix the densities P3 up to PQ and we also fix the vertices that get colors 3 up to Q. Now, it would suffice to show that the probability of getting a proper coloring of the remaining graph is maximized if P1 equals P2. So the first observation is that the remaining graph should at least be bipartite to be properly colored with two colors. If not, the probability is zero and we disregard the case. So it's bipartite. Also it's an induced subgraph of a claw-free graph. So it's claw-free and bipartite. What this implies is that the maximum degree of the graph is at most 2. Okay? So to see that -- this is visible. Okay. So we have our bipartite graph. And suppose a vertex had degree at least 3. But then it's also claw-free. So that would imply that there should be an edge between some pair of these three neighbors. But that contradicts the bipartiteness of the graph, since it would create a triangle. So that tells us that the maximum degree is at most 2, which means that the remaining set of vertices is a disjoint union of even cycles or paths. Even cycles because it's also bipartite. Okay. 
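
The structural step above (claw-free plus bipartite forces maximum degree at most 2) can be sanity-checked exhaustively on small graphs. The sketch below enumerates every labeled graph on a given number of vertices; the helper names are hypothetical and the enumeration is only practical for very small vertex counts.

```python
from itertools import combinations


def build_adjacency(n, edges):
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def is_bipartite(n, adj):
    """Try to 2-color each connected component with a depth-first search."""
    side = {}
    for start in range(n):
        if start in side:
            continue
        side[start] = 0
        stack = [start]
        while stack:
            u = stack.pop()
            for w in adj[u]:
                if w not in side:
                    side[w] = 1 - side[u]
                    stack.append(w)
                elif side[w] == side[u]:
                    return False
    return True


def has_induced_claw(n, adj):
    for center in range(n):
        for a, b, c in combinations(sorted(adj[center]), 3):
            if b not in adj[a] and c not in adj[a] and c not in adj[b]:
                return True
    return False


def claim_holds_for_all_graphs(n):
    """Check: every bipartite claw-free graph on n labeled vertices has max degree <= 2."""
    pairs = list(combinations(range(n), 2))
    for mask in range(1 << len(pairs)):
        edges = [pairs[i] for i in range(len(pairs)) if (mask >> i) & 1]
        adj = build_adjacency(n, edges)
        if is_bipartite(n, adj) and not has_induced_claw(n, adj):
            if max(len(adj[v]) for v in range(n)) > 2:
                return False
    return True
```

Calling `claim_holds_for_all_graphs(5)` runs over all 1024 labeled graphs on five vertices.
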
So now, since it's a disjoint union, we can look at the components separately. Now, suppose we have a cycle of even length 2K and we are coloring it with these two colors. Then the probability of getting a proper coloring is 2 times P1 to the K times P2 to the K, which, with P1 plus P2 fixed, is maximized if P1 equals P2. And a similar calculation for paths would also show us that P1 equals P2 maximizes this probability, and hence we have proved the result. And, in fact, we have proved that P sub-G is not just maximized by the uniform distribution but is a Schur-concave function, because we showed that if we take any two P sub-I, P sub-J not equal, it was better to replace them both by their average.

So I will stop here with claw-free graphs and go back to general graphs. The example that I showed, of the 4-star and two colors: we had the graph of the probability plotted against P1. What happens if we increase the number of colors? So suppose I increase the number of colors from 2 to 3. Here is the contour plot of the probability. So this is P of P1, P2, and P3, where P3 would be 1 minus P1 minus P2, so I'm plotting it against P1 and P2. And this edge over here is exactly this plot. So we know towards the edges it's wavy, but then towards the center the values are increasing and also the contours are becoming more and more concave rather than wavy. And this hints that maybe increasing the number of colors is the answer: if you increase the number of colors, fixing the graph, the uniform distribution would be the best distribution. So here is a prediction from Stein's method. It is not a rigorous calculation, just something hinting towards this, that increasing colors would be a good idea. So Stein's method would tell us the following. We have X sub-I, a finite collection of binary random variables, and let P sub-I be the probability that X sub-I is 1. And P sub-IJ be the probability that X sub-I and X sub-J are both 1. And let W be the sum of the X sub-I's and lambda be the expectation of W. 
Then the total variation distance between W and the Poisson lambda distribution can be bounded above by this expression. In our case, we have a binary random variable for each edge, and it's 1 if the edge is monochromatic and zero if it's not; then we are interested in the case when W is zero, that is, we have no monochromatic edges at all. Yeah. And P sub-I is the probability of one edge being monochromatic. That would be summation P sub-K squared. And P sub-IJ, when edges I and J intersect, is the probability of both the edges being monochromatic, which means all three vertices get the same color. That's summation P sub-K cubed. And lambda is the expectation, so it's E times that, E times P sub-I. So then that would tell us that the total variation distance between W and the Poisson lambda distribution is bounded above by this expression. So if you make this assumption on the P sub-K's, that the top quantity goes to zero, since we want the total variation distance to go to zero, and we bound the expectation by some constant, then we would conclude that in the limit the probability of getting a proper coloring, or W equal to zero, is E to the minus number of edges times summation P sub-K squared, which is maximized if all the P sub-K's were equal. Okay. So that's hinting towards this. But in these conditions what we have implicitly assumed is either that the graph size is growing big -- but even if the graph size is growing big, it assumes that Q is much greater than delta, because otherwise these conditions could not have been satisfied. Or even if we fix the graph, once again it implies that Q must be greater than the highest degree -- sorry, I should say what delta is. Delta is the highest degree of the graph. Okay. So somehow there's a hint here that maybe if we increase the number of colors in proportion to the highest degree, then the uniform distribution might become the best distribution. And that's actually what we prove. 
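
The Poisson heuristic above says the proper-coloring probability should be close to e to the minus (number of edges) times summation P sub-K squared. The sketch below compares that heuristic with a brute-force computation on a small example; the choice of a 4-cycle, ten colors, and the skewed distribution are assumptions for illustration only, and the comparison says nothing about how tight the approximation is in general.

```python
from itertools import product
from math import exp, prod


def proper_coloring_prob(n_vertices, edges, p):
    """Brute-force probability that an independent random coloring is proper."""
    q = len(p)
    total = 0.0
    for coloring in product(range(q), repeat=n_vertices):
        if all(coloring[u] != coloring[v] for u, v in edges):
            total += prod(p[c] for c in coloring)
    return total


def poisson_heuristic(edges, p):
    """exp(-lambda), where lambda = |E| * sum_k p_k^2 is the expected number of monochromatic edges."""
    return exp(-len(edges) * sum(x * x for x in p))


FOUR_CYCLE = [(0, 1), (1, 2), (2, 3), (3, 0)]
uniform = [0.1] * 10
skewed = [0.3] + [0.7 / 9] * 9  # hypothetical non-uniform distribution on 10 colors

exact_uniform = proper_coloring_prob(4, FOUR_CYCLE, uniform)
exact_skewed = proper_coloring_prob(4, FOUR_CYCLE, skewed)
approx_uniform = poisson_heuristic(FOUR_CYCLE, uniform)
approx_skewed = poisson_heuristic(FOUR_CYCLE, skewed)
```

In this example both the exact value and the heuristic are larger for the uniform distribution than for the skewed one.
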
So here's our main result. For a graph G with maximum degree D, if Q is greater than this humongous constant times D to the fourth, then the function P sub-G is indeed maximized by the uniform distribution. So it's not just in the limit: starting at some finite point it is always maximized by the uniform distribution. Okay. And a special case of that is --

>>: [inaudible].

>> Sukhada Fadnavis: Sorry?

>>: [inaudible].

>> Sukhada Fadnavis: Okay. I'm really happy if you think so.

>>: So if you ask the students [inaudible].

>> Sukhada Fadnavis: Okay. So a special case of this theorem -- it's not directly a special case, but the same analysis gives the special case -- is that if you compare the probability of getting a proper coloring using Q colors with the uniform distribution, that's greater than the probability of getting a proper coloring using just Q minus 1 colors with the uniform distribution, when Q is greater than 400 times the highest degree to the 3 by 2.

>>: And so this [inaudible] example [inaudible].

>> Sukhada Fadnavis: Yeah. It's going. Yeah. So, yeah. And there's a conjecture. Let me just conjecture this: that if Q is strictly greater than the highest degree, instead of this condition, so if we can have a weaker condition on Q, the statement will still hold true. Okay, and if this conjecture is proved, it would prove a famous conjecture from combinatorics called the shameful conjecture.

>>: [inaudible].

>> Sukhada Fadnavis: Sorry?

>>: [inaudible].

>> Sukhada Fadnavis: Okay. I at least wanted to be [inaudible] so that it proves the shameful conjecture. But maybe theorem [inaudible].

>>: [inaudible] tell us what [inaudible].

>> Sukhada Fadnavis: Yes. Yes.

>>: Because I dare to say if you're not going to tell us, at least tell us why it's -- why it's called that.

>> Sukhada Fadnavis: The shameful conjecture that, using the uniform distribution on Q colors, you would get a proper coloring. 
The probability of getting a proper coloring of the graph is less than -- is greater than the probability of getting a proper coloring of the graph using Q minus 1 colors with the uniform distribution. And, in fact, the conjecture initially was that this is true [inaudible]: more colors, better chances of getting a proper coloring. That this is true for all Q. But it's not. And there's a counterexample provided by Colin McDiarmid showing that this is not true. And then the conjecture was changed to say that if Q is greater than or equal to N, where N is the number of vertices, then this is true. And this is no more a conjecture. Now, this has been proved by Professor Dong, I think in 2002, but using combinatorial properties, because this can also be seen as the number of proper colorings using Q colors divided by Q to the N. So it's a combinatorial -- so if our conjecture is true for Q greater than the highest degree, since the highest degree is at most N minus 1, it would also imply the same; it would be a much stronger version of the shameful conjecture. So as it stands now, our result implies the shameful conjecture in the cases when the degree is not too high. But not always.

Okay. So let me prove this. We can prove the conjecture for n-stars. And the proof is instructive, and it shows how we go about proving the general case. Okay. So let S sub-N be the n-star. So we have a node in the center connected to N vertices outside. And let Q, the number of colors, be greater than or equal to N plus 1, since the highest degree is N and we want Q to be greater than the highest degree. Then we can just write down an expression for this probability of getting a proper coloring. So to get a proper coloring, whatever color the center gets, none of the nodes outside should get that color. So that would be summation of P sub-I for the center times 1 minus P sub-I raised to N for the outside. Okay. So let's look at this function X times 1 minus X to the N. 
It looks like this; this is for N equals 4 or 3, I don't remember. And its properties are that the maximum is at 1 over N plus 1. And it's also concave from 0 to 2 over N plus 1. Now, suppose all the P sub-I's were lying between 0 and 2 over N plus 1. Then because the function is concave, we can conclude that the value is definitely maximized if they were all equal. It's definitely better to make them all equal. Now, suppose some P sub-I was lying outside this region of 0 to 2 over N plus 1. Then since we've assumed that Q is greater than or equal to N plus 1, the average definitely lies between 0 and 1 over N plus 1. You have this: the maximum is at 1 over N plus 1, and up to 2 over N plus 1 it's concave. And suppose some P sub-I was lying outside here. Since the average is less than or equal to 1 over N plus 1, there must be some P sub-J in this region over here. And it would be better to replace P sub-J by 1 over N plus 1 and pull in P sub-I so that the sum does not change. In the process, the contributions of both P sub-I and P sub-J are increasing, and from the rest there's no change in contribution to the sum. And so in this way we can pull in all the P sub-I's from the outside region to inside. And then once they are inside, we can argue that because of the concavity it's still better to make them all equal, while iteratively keeping or increasing the probability of getting a proper coloring. So that proves the result for n-stars.

And in some sense the general result is proved in a similar manner, except there are more complications. So what we want to show is that near the center, near the uniform distribution, in some polydisc, this P sub-G is -- not concave, but we show it's a log-concave function. It's log-concave and it's symmetric. That will imply that in that region it is maximized by the uniform distribution. And then the outside of this polydisc is directly compared with the uniform distribution, and we show by direct comparison that the uniform distribution performs better. And, hence, it is actually the maximum of this function. 
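
The two properties of the function X times 1 minus X to the N used in the star argument above can be verified with a short derivative computation. The following is a sketch in LaTeX, with f and q introduced here as notation for that function and the number of colors.

```latex
% Proper-coloring probability of the n-star S_n under color distribution (p_1, ..., p_q):
\[
  P_{S_n}(p_1,\dots,p_q) \;=\; \sum_{i=1}^{q} p_i\,(1-p_i)^{n} \;=\; \sum_{i=1}^{q} f(p_i),
  \qquad f(x) = x(1-x)^{n}.
\]
% First derivative: the unique interior critical point is x = 1/(n+1).
\[
  f'(x) \;=\; (1-x)^{n} - n\,x\,(1-x)^{n-1} \;=\; (1-x)^{n-1}\bigl(1-(n+1)x\bigr).
\]
% Second derivative: f is concave exactly where 2 - (n+1)x >= 0, i.e. on [0, 2/(n+1)].
\[
  f''(x) \;=\; -\,n\,(1-x)^{n-2}\bigl(2-(n+1)x\bigr) \;\le\; 0
  \quad\text{for } 0 \le x \le \tfrac{2}{n+1}.
\]
% If every p_i lies in [0, 2/(n+1)], Jensen's inequality for the concave f gives
\[
  \sum_{i=1}^{q} f(p_i) \;\le\; q\, f\!\Bigl(\tfrac{1}{q}\Bigr),
\]
% which is the value at the uniform distribution.
```

So f increases up to 1 over N plus 1, decreases after it, and is concave up to 2 over N plus 1, which are the facts the pulling-in argument relies on.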
So to outline the steps more concretely, we show that if any P sub-I is -- okay. So the graph is G with maximum degree D. If any P sub-I is greater than two times square root D over Q, then definitely it will be better to have the uniform distribution on the colors than the distribution we started with. And then inside this polydisc where all P sub-I's are less than or equal to 2 times square root D over Q, if Q is greater than this constant times D to the fourth, then P sub-G is log-concave in that region. And then by symmetry you can conclude that it's maximized at the uniform distribution in that region. And then putting the two steps together we complete the proof.

Okay. So step 1, that's the easier part of the proof. We have two inequalities. One is that P sub-G evaluated at the uniform distribution on Q colors, which would also be the number of proper colorings using Q colors divided by Q to the N, where N is the number of vertices, is greater than or equal to the product over all the vertices of Q minus the degree of the vertex, divided by Q. This is just from counting the number of colorings. So you take a vertex; maybe all of its neighbors are colored with distinct colors already. You still have Q minus D sub-I options to color it. So the inequality follows from that. And the second inequality: suppose S is a set of disjoint edges in the graph. Then the probability of getting a proper coloring of the graph is definitely less than or equal to the probability of having each of these edges properly colored, which is one minus summation P sub-I squared, raised to the size of S, since these are all disjoint edges. Okay. And then comparing the two inequalities and using the assumption that at least one P sub-I is greater than 2 times square root D over Q gives us that that is greater than or equal to this. For step 2 we show that P sub-G is first nonzero in the polydisc where all P sub-I's are less than or equal to this 2 times square root [inaudible]. 
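
The two inequalities used in step 1 can be checked numerically on a small example. The sketch below uses a hypothetical 5-vertex graph (a 5-cycle with one chord), six colors, and an arbitrary skewed distribution; all of these, and the function names, are assumptions chosen so that brute force is fast.

```python
from itertools import product
from math import prod


def proper_coloring_prob(n_vertices, edges, p):
    """Brute-force probability that an independent random coloring is proper."""
    q = len(p)
    total = 0.0
    for coloring in product(range(q), repeat=n_vertices):
        if all(coloring[u] != coloring[v] for u, v in edges):
            total += prod(p[c] for c in coloring)
    return total


def degree_product_lower_bound(n_vertices, edges, q):
    """Product over vertices of (q - degree) / q, a lower bound at the uniform distribution."""
    degree = [0] * n_vertices
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return prod((q - d) / q for d in degree)


def matching_upper_bound(matching, p):
    """(1 - sum p_i^2)^{|S|} for a set S of pairwise disjoint edges."""
    return (1.0 - sum(x * x for x in p)) ** len(matching)


N = 5
EDGES = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]  # 5-cycle plus a chord
MATCHING = [(0, 1), (2, 3)]                                # two disjoint edges of the graph
Q = 6
uniform = [1.0 / Q] * Q
skewed = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
```

For this example, `proper_coloring_prob(N, EDGES, uniform)` is at least `degree_product_lower_bound(N, EDGES, Q)`, and `proper_coloring_prob(N, EDGES, skewed)` is at most `matching_upper_bound(MATCHING, skewed)`.
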
Once it is nonzero we can use the exponential formula to get a Taylor series expansion of the log. So in log of P sub-G of P1 up to PQ, the first term is minus E times summation P sub-I squared, and then higher degree terms. And then we show that under the condition that Q is greater than this constant times D to the fourth and the P sub-I's are all close to uniform, the remaining higher degree terms don't make such a huge impact on where this function is maximized. It is indeed the first term that [inaudible] is maximized. And that is maximized at the uniform distribution. So let me say how we showed this, that the function is nonzero in this polydisc. So this work is inspired by the work of Sokal and Borgs, in which they show that the roots of the chromatic polynomial are all bounded above by a constant times the highest degree of the graph. Okay. So if you have a graph G with highest degree D and chi sub-G of Q is the chromatic polynomial of the graph, all of its complex roots are bounded in absolute value by a constant times D, for this explicit constant K. In fact, they show something stronger. They show that for Q such that the absolute value of Q is [inaudible] D, the absolute value of log of chi sub-G evaluated at Q divided by Q to the N is less than or equal to 2 times the number of edges divided by 5. And hence, the log is finite, so the function is nonzero. Restating this in our notation, what it says is that the absolute value of the log of P sub-G evaluated at the uniform distribution is less than or equal to 2 times the number of edges divided by 5. And now to show that our function is nonzero in this polydisc, what we need to show is that the log of the function evaluated inside the polydisc is also less than or equal to this. But because we are allowing this to be not just the uniform distribution but around it, we lose in the condition. We need that Q should be greater than a constant times D to the fourth. So let me just say something about the methods used in showing this, that it's nonzero. 
So the crucial thing is to get some good control on this expression P sub-G. We know that it's the Stanley chromatic polynomial evaluated at P1 up to PQ, but using that is difficult because we need a good understanding of the set of colorings of the graph G. We could just straight away try to use the inclusion-exclusion principle. So we start with probability 1, but then of course some edges went wrong, so minus the number of edges times the probability that they went wrong. But then of course we have overcounted for two edges going wrong, and so on. This would give us a polynomial expression for our probability. This is good. And if we could control the coefficients then we might be able to argue that indeed it is maximized at the uniform distribution, because once again we have this minus E times summation P sub-I squared. But we weren't able to directly control the coefficients; we were able to in the sense that when we take the log we can control the coefficients. So the real problem is to write this expression down inductively in a good manner, and that is achieved using the abstract polymer system, which is what gave us the nice inductive combinatorial formula. And this is all inspired by the work of Alan Sokal and Christian Borgs. And then after you write it in this abstract polymer system manner, we can use Dobrushin's theorem to say that it's nonzero in this disc, and then use the exponential formula to get the Taylor series expansion of the log and complete the proof. So let me just quickly say what it means to write an abstract polymer system in our case. So that means we are going to associate a new graph with our graph G, such that the new graph will have weights depending on P1 up to PQ and on our original graph. All the vertices will have weights. And our probability P sub-G can be written as the independence polynomial of this bigger graph. 
Once it's the independence polynomial of the bigger graph it has a nice recurrence: it can be written as the independence polynomial of the graph with a node removed, plus the weight of that node times the independence polynomial of the graph with that node and its neighbors removed. And the inductive formula is good for using Dobrushin's theorem. So what's the big graph in our picture? So if G is our graph with maximum degree delta, let script G be our new graph, whose set of vertices is the set of subsets of vertices of the original graph. For every subset of the original graph we have a vertex in the new graph. And then two of them are adjacent if the two subsets of vertices have an intersection. And then the corresponding weights would be, for the subset S of the original graph, the weight of the corresponding node is summation PI raised to the size of S. I am summing this expression over all connected subsets. By itself it's not clear what's happening. But the way I think of it is that it's inclusion-exclusion written out in a slightly more organized manner with respect to the vertices. So let me explain what I mean. Suppose you wanted to use the inclusion-exclusion principle to find P sub-G. So say G is our triangle. And so with inclusion-exclusion we would say 1 minus the probability that the edges failed. So three times. But then we overcounted for two edges failing at the same time. So three times. If two edges fail, all three vertices get the same color. But then we overcounted for all three edges failing, and that again means that all three vertices got the same color. So in these four cases we were counting different subsets of edges going wrong. So for example here these two edges could be going wrong, or these two edges could be going wrong. But in all cases we are talking about the same set of vertices getting the same color. 
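The triangle computation just described can be checked numerically. The sketch below is an illustration under stated assumptions, not code from the talk: it computes the probability of a proper coloring once by enumerating all colorings and once by inclusion-exclusion over subsets of failed edges, where a set of failed edges forces each connected component of size s to be monochromatic, contributing summation PI to the s. The function names and the distribution (0.5, 0.3, 0.2) are hypothetical.

```python
from itertools import combinations, product
from math import prod

def proper_prob_brute(n, edges, p):
    """Probability that independent colors drawn from p properly color the graph."""
    total = 0.0
    for colors in product(range(len(p)), repeat=n):
        if all(colors[u] != colors[v] for u, v in edges):
            total += prod(p[c] for c in colors)
    return total

def component_sizes(n, edge_subset):
    """Sizes of the connected components of (vertices, edge_subset), via union-find."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for u, v in edge_subset:
        parent[find(u)] = find(v)
    sizes = {}
    for v in range(n):
        root = find(v)
        sizes[root] = sizes.get(root, 0) + 1
    return list(sizes.values())

def proper_prob_incl_excl(n, edges, p):
    """Inclusion-exclusion over the set of edges that 'went wrong'."""
    total = 0.0
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            # Each component of size s is monochromatic with probability sum(p_i ** s).
            term = prod(sum(pi ** s for pi in p)
                        for s in component_sizes(n, subset))
            total += (-1) ** k * term
    return total

triangle = [(0, 1), (1, 2), (0, 2)]
skewed = [0.5, 0.3, 0.2]          # hypothetical non-uniform distribution
uniform = [1 / 3, 1 / 3, 1 / 3]

print(proper_prob_brute(3, triangle, skewed))
print(proper_prob_incl_excl(3, triangle, skewed))
print(proper_prob_incl_excl(3, triangle, uniform))
```

For the triangle the alternating sum collapses to 1 minus 3 times summation PI squared plus 2 times summation PI cubed, since the three two-edge cases and the one three-edge case all describe the same event on the vertices.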
So what this is doing, it's combining all these cases because they're really talking about the same set of vertices getting the same color, rather than looking at different edges getting the same color. So that's how I think of it. And this is what the formula did. And -- okay. So that's the amount of detail I want to get into on this. And that's the summary. We use the abstract polymer system and then Dobrushin's theorem and the exponential formula. And to summarize the result, we could prove the Diaconis conjecture for claw-free graphs and our strong conjecture for star graphs. In the general case, we can prove that the uniform distribution maximizes the probability of a proper coloring if Q is greater than constant [inaudible] to the fourth. Also it's related to the Stanley-Stembridge conjecture and the shameful conjecture. The stronger conjecture about Q being greater than delta is still open. There are definitely other generalizations that could be considered. For example, allowing a constant number of monochromatic edges, we could ask the same question. And maybe even using the same proof that I have in step 1, where I have to use that sum PI is greater than two times square root D over Q, if I could reduce that bound and still get the same result I could improve the result with the same proof, allowing Q to be even smaller. Okay. So I will stop there with a list of references. Thank you. [applause]. >> Yuval Peres: Are there questions? >>: So you prove that the condition holds for claw-free graphs and the counterexample is actually a [inaudible]. >> Sukhada Fadnavis: Yes. >>: So is there -- is it true for four claw-free graphs? Is there a counterexample for a graph [inaudible]. >> Sukhada Fadnavis: So the 4 star itself is four claw-free, okay. And it doesn't -- oh, sorry, it is a four claw. >>: It's a four. >> Sukhada Fadnavis: Is there an example -- I don't actually know the answer to that. Oh, so I don't know of an example. 
>>: So for K13 it's still true. >> Sukhada Fadnavis: K1 -- >>: For a three claw -- >> Sukhada Fadnavis: Yes, yes, the property's still true for the claw, yes. >>: Otherwise the example would be [inaudible] [laughter]. >>: [inaudible]. >>: If the property is true for a graph, is it also true for its [inaudible] induced subgraphs? >> Sukhada Fadnavis: This property? >>: Yes. >> Sukhada Fadnavis: No. So, for example, if you take a big complete graph and add a small claw in the end, the property would be true. But if you just take that small induced -- that is, the four claw out of [inaudible], then if you look at that [inaudible]. >>: So the counterexample you showed Borgs broke [inaudible] but he didn't break it by much. >> Sukhada Fadnavis: Uh-huh. >>: So if I make an [inaudible] I say okay, I lose 20 percent, but I have a simpler argument. Are there worse counterexamples where a naive assumption really breaks? >> Sukhada Fadnavis: So even if the -- >>: Where -- so equal division is just stupid, really stupid, not just [inaudible]. >> Sukhada Fadnavis: So even in the case of stars with two colors, look at what happens as N increases. If we use the uniform distribution with just two colors, that's going to be two times one half to the N plus 1. But the best would be to have the center colored with the color that's -- so the best would be to have the distribution 1 over N plus 1 and N over N plus 1. So that would give us -- so suppose 1 over N plus 1 for the center, plus the other term, which is going to be small. But this seems much bigger. So already for the star graph it really is a big difference. >>: Is anything known about the computational problem: given a graph, find the probabilities which [inaudible]. >> Sukhada Fadnavis: Find the distribution which minimizes. I don't know. Yeah, I don't know. >>: [inaudible]. >> Sukhada Fadnavis: I don't know about this. 
But if you had to actually evaluate the expression first for doing this, it's more difficult than evaluating the chromatic polynomial itself, which is hard I assume. I would think it's hard, but I don't know that. >>: So most of the results involve the maximum degree. Is it possible to replace that by the average or -- >> Sukhada Fadnavis: That's a good question. By the average degree. I'm going to guess not, because if you had some big stars and there were other smaller things, it might not. >> Yuval Peres: So [inaudible] again. [applause]
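The two-color star example from the questions can be sketched numerically. This is an illustrative check, not part of the talk: with two colors of probabilities a and 1 minus a, a star with N leaves is properly colored only when the center gets one color and every leaf gets the other. The function name and the values of N are hypothetical choices, and 1 over N plus 1 is the split mentioned in the answer rather than a claimed exact optimum.

```python
def star_proper_prob(n_leaves, a):
    """Proper-coloring probability of a star with two colors (a, 1 - a).

    Either the center takes the first color and all leaves the second,
    or the other way around.
    """
    return a * (1 - a) ** n_leaves + (1 - a) * a ** n_leaves

for n in (4, 10, 20):
    uniform = star_proper_prob(n, 0.5)        # equals 2 * (1/2) ** (n + 1)
    skewed = star_proper_prob(n, 1 / (n + 1))  # rare color intended for the center
    print(n, uniform, skewed, skewed / uniform)
```

The ratio in the last column grows quickly with N, which matches the remark that for the star graph the uniform distribution is far from the best one when only two colors are available.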