

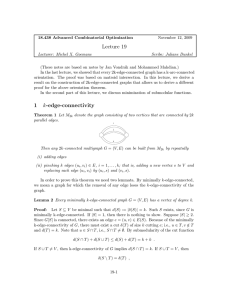

>> Yuval Peres: Hello, and welcome everyone. It's my pleasure to introduce Roy Schwartz from Technion. Roy is a PhD student of Seffi Naor, who is a frequent visitor here. And Roy is going to tell us about how to optimize -- how to maximize submodular functions using continuous methods. >> Roy Schwartz: Thank you all for coming. So we're going to talk about submodular maximization today, or more precisely how to use continuous methods. So this talk will have three parts. In the first part we're going to recall what a submodular function is and also see interesting submodular optimization problems. In the second part we're going to discuss what is perhaps one of the most basic submodular optimization problems, which is the unconstrained submodular maximization problem. And we'll actually see how to get tight results for this problem. And in the third part, if time permits -- we'll see how much time we have and whether we can discuss it in depth or not -- we're going to see how to compute good fractional solutions to submodular maximization problems. Of course if there are questions or remarks about anything, feel free to stop me at any time. So let's start with the introduction. So what is a submodular function? So we have a ground set N, and we have a set function defined over all subsets of elements. And the function is submodular if for every two subsets the value of the union plus the value of the intersection is upper bounded by the sum of the values of the subsets themselves. So this is the formal definition. The problem is that this definition is not always so intuitive or so easy to use. Luckily there is an equivalent definition which is much more intuitive, and it uses what is called decreasing marginals. So what is a marginal? So if you have a set A and an element U, the marginal value of the element is just the change in the value of the function once you add the element to the subset.
As you can see here, it is the change in the value of the function. So what is the decreasing marginals property? It means that if you take two subsets, A and B, and A is not only smaller but is actually contained inside of B, then the marginal value of any element that doesn't belong to either is at least as large with respect to the smaller subset. So this is exactly what is written here. In economics, this property is also known as diminishing returns. So this definition is usually much more intuitive and more convenient to use, and it is equivalent to the first definition I gave. And the last definition in this talk is that of monotonicity. So formally it means that if you have two subsets again and A is contained inside of B, the value of A is not larger than the value of B. Intuitively it means that if you have a subset and you start adding elements you cannot lose, you only gain more. So either you stay the same or you gain more. And this is essentially what monotonicity means. So notice that here, too, the relation is inclusion. So let's see a couple of examples of well-known submodular functions. So this is the first example. We have all these little points, and the elements of the ground set are actually subsets of points. So you see all these subsets. And the function is the number of points you've covered. So let's see that this function, for example, has the diminishing returns property; we'll see this by example of course. So let's say we choose this element of the ground set and we add to it this element. So what is the change in the value of the function? It's all the dark points, because we just count the number of points we've covered. So now let's choose a larger subset of elements in the ground set. Let's say we choose these two. And now we add again the same element in the ground set, this element. And we can see that the change in the function is smaller here.
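For reference, the three definitions given in words so far can be written out as follows. This is a restatement only; the symbols $f$, $N$, $A$, $B$ and $u$ follow the names used in the talk, and the notation $2^N$ for the family of all subsets of the ground set is an assumption of this note.

```latex
% Set function over the ground set N
f : 2^{N} \to \mathbb{R}

% Submodularity (union/intersection form), for all A, B \subseteq N
f(A \cup B) + f(A \cap B) \;\le\; f(A) + f(B)

% Equivalent form: decreasing marginals (diminishing returns),
% for all A \subseteq B \subseteq N and all u \in N \setminus B
f(A \cup \{u\}) - f(A) \;\ge\; f(B \cup \{u\}) - f(B)

% Monotonicity, for all A \subseteq B \subseteq N
f(A) \;\le\; f(B)
```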
And you can see that actually you gain only a subset have the points you gained before. So this is an example why this function which is called the covering function has the diminishing returns property. So probability it works for any subset of elements in the ground set. And this function is also monotone. The more elements you choose, the more points you cover. The second example is also quite known is the cut function in undirected graphs. So the ground set in this case are the vertices of the graph. And the function is just given subset of vertices which is a cut, count the number of edges that cross the cut. So let's say we have two cuts here or subsets of elements. A is the smaller one and it is contained inside B, which is the larger one. And now let's see what happens once we add a vertex V. So what happens when we add vertex V to the smaller cut A? So we lose all of the dashed edges, right, and we gain all the bold edge, all these edges. So this is the change in that value of the function. What happens when we take the same vertex and add it to the larger cut? So, again, if we look at the dashed edges we lose the same edges we lost before and possibly more. And we gain only a subset of the edges we have gained before. So the change is smaller and also possibly negative. So again this means that this function has diminishing returns property, so it is submodular. But this function as opposed to the first function is not necessarily monotone. So this is an example for a non-monotone submodular function. So these are just two examples. So where else can you find the submodular functions? So as we said, we have covering functions, cuts in undirected graphs. This also works for directed graphs. Hypergraphs. Also the rank functions of matroids are submodular functions. And these are quite classical examples in combinatorial optimization. Also you can find submodular functions in economics or game-theory. It usually is utility functions. 
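The two examples above, the covering function and the cut function, are small enough to check by brute force. The sketch below is only an illustration: the helper names (`coverage`, `cut`, `has_diminishing_returns`, `is_monotone`) and the tiny instances are assumptions of this note, not something shown in the talk, and the check enumerates all pairs of subsets, so it is only usable on very small ground sets.

```python
from itertools import combinations


def coverage(sets):
    """f(S) = number of points covered by the chosen sets (S holds indices into `sets`)."""
    def f(S):
        covered = set()
        for i in S:
            covered |= sets[i]
        return len(covered)
    return f


def cut(edges):
    """f(S) = number of undirected edges with exactly one endpoint in S."""
    def f(S):
        return sum((u in S) != (v in S) for u, v in edges)
    return f


def subsets(ground):
    """Yield every subset of `ground` as a frozenset."""
    items = sorted(ground)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def has_diminishing_returns(f, ground):
    """Check f(A+u) - f(A) >= f(B+u) - f(B) for all A within B and u outside B."""
    ground = set(ground)
    all_sets = list(subsets(ground))
    for B in all_sets:
        for A in all_sets:
            if not A <= B:
                continue
            for u in ground - B:
                if f(A | {u}) - f(A) < f(B | {u}) - f(B):
                    return False
    return True


def is_monotone(f, ground):
    """Check f(A) <= f(B) whenever A is contained in B."""
    all_sets = list(subsets(ground))
    return all(f(A) <= f(B) for B in all_sets for A in all_sets if A <= B)


if __name__ == "__main__":
    cov = coverage([{1, 2, 3}, {3, 4}, {4, 5, 6}])
    path_cut = cut([(0, 1), (1, 2), (2, 3)])
    print(has_diminishing_returns(cov, {0, 1, 2}), is_monotone(cov, {0, 1, 2}))
    print(has_diminishing_returns(path_cut, {0, 1, 2, 3}), is_monotone(path_cut, {0, 1, 2, 3}))
```

On these instances the covering function passes both checks, while the cut function passes the diminishing returns check but fails monotonicity, since taking all vertices cuts no edges.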
I might mention a few examples in this talk, depending on how the time goes by. So this is where you can find submodular functions. So let's look at the basic submodular maximization problem. So all you have is just a family of feasible subsets, and you want to maximize a submodular function over all feasible solutions. So this is a very basic problem. An obvious question before we start looking at specific cases of this problem is how is F given? So note that if F is given explicitly, so if you have the list of all possible subsets and their values, this problem is trivial, assuming that you can check feasibility efficiently, right? Just go over all subsets in the list you are given, check feasibility, and you are done. Unfortunately this is not very realistic in, let's say, all or almost all applications of this problem. So what is usually assumed is that the function is accessed through an oracle. So the most basic oracle that is widely accepted and widely used is what is called a value oracle. So it means the algorithm has black box access to some oracle: it gives the oracle some subset of elements S and just receives the value of the function on this subset. So this is the only way the algorithm can interact with the submodular function. So now let's go over a few submodular maximization problems. So as I mentioned, this is perhaps one of the most basic submodular optimization problems. And it is the unconstrained submodular maximization problem. So you get -- you get a submodular function F which is not necessarily monotone, and you just find the best subset. There's no -- there's no restriction, no constraint. Any -- actually any solution is feasible. Of course if this function is monotone, then the problem is trivial. So you have this huge ground set and you just find anything that has good value, or the highest value. So why is this problem interesting at all?
So from a combinatorial point of view it captures, for example, max-cut: just take the ground set as the vertices of the graph, and the function is the cut function, and of course any cut is feasible and you want the largest cut. So this works not only for max-cut but also for max-directed cut, and it also captures maximum facility location and even a few variants of max-SAT. So this is from a combinatorial point of view. Also, apparently this problem is quite useful in other settings, most of them in game theory or economics. So I won't go over the entire list. But for example, it has been used in what is called marketing over social networks, when you want to find a strategy to sell things in the social network, or even in the most basic scenario, when you want to maximize some utility over a discrete choice. So usually economic utilities have -- >>: [inaudible]. >> Roy Schwartz: Huh? >>: [inaudible]. >> Roy Schwartz: No. And even -- if it's monotone and it's unconstrained, then just choose everything. So you have -- so in these problems the objective is not always monotone. There are examples. And also in some of these works they actually say: if we have an alpha approximation for unconstrained submodular maximization, this implies some function-of-alpha approximation for our problem. So in some of these cases the approximation algorithm for the submodular problem is used as a black box, essentially. For example, in these two works it is mentioned. So this is just a partial list and it is supposed to convince you that this problem is interesting. So what is known about this problem? So apparently it was studied quite a lot in the operations research community. And this is just a partial list.
The only problem is that all these works are not rigorous, in the sense that either they examine a very specific submodular function, or they give some heuristics that converge quickly but there's no proof on the value of the output, or there are algorithms that actually compute the exact optimum but you can't of course bound their running time. So some of these works use integer programming or mixed integer linear programming and other techniques for NP-hard problems, essentially, because this problem is hard. It captures max-cut. So the first rigorous study of this problem was done by Feige, et al, and they actually give three algorithms. So I'll just go quickly over them. So the first algorithm they give is quite simple. It just says pick a random subset. The surprising thing is that you can get a one-quarter approximation this way. So if you pick a random subset, this is exactly the approximation you get in the maximum directed cut case, right? A random cut in maximum directed cut gives you a one-quarter approximation. And the amazing thing is that this works for a general submodular function, not necessarily just in the maximum directed cut setting. In the paper they also improve this and show that if you do local search you can get one-third, and if you also tweak the local search a little bit and change its objective, which is what is called non-oblivious local search, you can get up to 0.4. So this was the case until [inaudible] '11, when Gharan and Vondrak actually showed that you can squeeze even a little bit more by the simulated annealing technique, and the remarkable thing is that they actually were able to prove something. Usually simulated annealing is used as a heuristic. So in this case, they took -- so I didn't explain exactly how the objective here is defined. But it is defined using some probability and noise. And they show that once this local search gets stuck, you just increase the amount of noise and continue the same local -- the 0.4 algorithm.
And they were able to prove that you can get 0.41. And after that, we were able to squeeze a little bit more by using what is called structural tools. I won't -- because of time constraints I won't go into this thing in this talk. And the obvious question is what is the hardness? How high can you get or how low can you get? So there is an absolute one-half hardness. So what do I mean by absolute? So this is unconditional. So they actually prove that, for any fixed epsilon, any algorithm that gets a one-half plus epsilon approximation needs to have -- needs to access the oracle an exponential number of times. So even if, in the worst case, P equals NP, this hardness still holds. In the worst case -- >>: [inaudible]. >> Roy Schwartz: I don't know. Depends on which side you're on. So an obvious question is what is the right answer? So here it's nice, but then you start squeezing things, and these are ugly numbers. And the proofs in that -- huh? >>: [inaudible]. >> Roy Schwartz: Yeah, diminishing returns. And the algorithm gets more and more complicated. And also the analysis and so on. So the obvious question, because one-half is a nice number, is whether it is the right answer. And what we will see in this talk is that, yes, this is the right answer. And not only is it the right answer, you can actually get a tight algorithm which runs in linear time. It's extremely simple. It is actually simpler than all of these, except this algorithm, which is also quite simple. But compared to all these others it's much faster and gives you a tight result. So this is the first problem we're going to discuss. The second problem is what is called the submodular welfare problem. So we have M unsplittable elements and K players. And each player is equipped with a utility function. So -- and the goal is to assign elements to the players. So let's say this player, player I, got elements 1, 3, and 4, so just -- the revenue or the utility you get from this player is the utility of the subset of elements it got.
And the goal of course is to assign all elements to the players and maximize the social welfare or the sum of the utilities. Of course we assume that the utilities are monotone. So the players actually compete over the elements. So nothing is -- a player doesn't lose by gaining more. So the formal definition of the problem is this. You just have M unsplittable items. K monotone submodular utility functions, one for each player. And the goal, just maximize the social welfare by assigning the elements. And this problem also is very -- arises very naturally in the combinatorial auctions. This is probably one of the main reasons for considering this problem. So what is known about this problem from an algorithmic point of view? So one-half was known even in the late '70s, using greedy approach by Fisher, Nemhauser, and Wolsey, and actually it was reproved in 2001. They probably were not aware of this result. Reproved again by Lechman, et al. And what is known today prior to the work I'm going to describe about this problem. So there's the Vondrak algorithm that achieves 1 minus 1 over E. And so we will see this is quite close to the hardness result. And this seems quite nice, except for the annoying fact that before that was Dobzinski and Schapira algorithm which asymptotically if the number of players increases is roughly one-half. But only for the case of two players it gets two-thirds approximation which is better than this. So this is quite annoying why the case of only two players should be better, is it special? We don't know. So there were additional works on this problem for special objectives by Dobzinski and Schapira. And Feige also studied sub-additive utilities. And also, if you have a stronger oracle or the main oracle, this was also studied by [inaudible] and so on. So there's a long list of works on this problem. And of course the interesting question is the case that K equals 2, is it special? 
So unless -- so this algorithm is kind of annoying in the sense that it kind of [inaudible] the Vondrak algorithm for this special case. Why -- what is going on there? So what is known about hardness. So first of all, you might ask why do we look at submodular utilities, not general utilities? So first of all, submodular utilities are common in economics. So it makes sense. But if you look at general utilities, then you can show that this is equivalent to set-packing and you can hope to gain anything meaningful here. So what is known in terms of hardness for the submodular case? So there's -- there is this hardness, 1 minus 1 minus 1 over K to the power of K. So first it was proved assuming P does not equal NP by Khot, et al, and then this assumption was actually dropped. It's not needed. You again get an absolute hardness, which is unconditional. And you can see that as K increases, it converges from something larger to 1 minus 1 over E. But, for example, what happens with two players it gives you only three-quarters hardness, right? So not only the case of two players. Interesting there's a gap even for the Dobzinski and Schapira algorithm, which gives you two-thirds. So what we will see in this talk is actually the sort of in this question is we get that this is the right answer. You can get exactly as this. So you can -- for any number of players you can get this approximation for the problem. Actually this also settles the approximability of the submodular welfare problem. And the last problem I'm going to mention is what is called maximizing submodular function over a matroid. So again we have a matroid in a submodular function. We just than want to find a independent set in the matroid that maximizes the value of the submodular function. So why this problem? Interesting for first of all, it captures the submodular welfare problem. I won't go into the details but actually the submodular welfare problem is a special case. It's a partition matroid. 
And also maximum coverage, generalized assignment is extremely two basic problems. And also refinement of these problems and so on. All are special cases of maximizing a submodular function over a single matroid. So, again, what is known about this problem? So here we have to be careful because it depends whether the function is monotone or not. So if the function is monotone, so there's one-half algorithm I mentioned before, so actually it was proved for a general matroid, so it captured the submodular welfare problem. And then a few years ago, by Calinescu, et al, it was improved to a tight 1 minus 1 over E. And the reason it is tight, and this is also quite amazing, because even in the late '70s these guys proved an absolute hardness for this problem. They showed that for any fixed epsilon there is a matroid that if you get approximation better than this, the algorithm needs an exponential number of -- an exponential number of oracle queries. And this is kind of amazing because it was done even in the late '70s where people did not -- it wasn't very common to prove hardness results. Of course they didn't get the exact number. They got only one-half. Of course there were a lot -- tons of work on special cases and also you cannot forget to mention always hardness result which is the same but of course it assume P does not equal NP for the maximum coverage problem because it's a special case and there the function is actually given implicitly by -- in a compact way by the sets themselves. So what is known for the non-monotone case? As you can see the numbers here are not very nice. And the reason is probably due to the algorithmic technique he used in order to get these approximations. So the 03.9 was later -- the same sort of paper was improved to roughly 0325. And even the hardness result is not very nice. 
And again, all these are fractional local search or extensions of local search using simulated annealing and so on, as opposed to, for example, the greedy algorithm that gives a nice result here. So again, the question is why aren't there any nice numbers, for example, for the non-monotone case? And the answer is that there are. The only reason is that we need to know how to get them. So what we will show is that if you actually you can extend the techniques here in quite a different way and get improved and not only improved approximation but also nice numbers. So this also raises a few interesting questions I might mention at the end of the talk. So these are the three problems we saw. And I'll just mention that there are additional applications, for example, the submodular max-SAT where you just have a monotone submodular function defined over the clauses of a [inaudible] formula or you can change the matroid constraint by a constant number of knapsack constraints. So these two problems also fall under the general framework we're going to show. So let's -- a few minutes just mention what are the algorithmic techniques that are known in this case. So as approximation algorithms you can use combinatorial approach. We saw that greedy works, local search works, and in some cases this gives you all the convex results you know or even tight results. So you can do better. For example a knapsack constraint Sviridenko showed that this is tight and intersection of K matroids by Lee, et al, this is the best you know. And even later on we were able to improve this result and actually show that for example for submodular matchings in general, perhaps you can match the result known for bipartite graph. So this is open even from the late '70s. But the problem with all these techniques it's very tailored to the application you have. So you need to find the exact greedy rule or some local search. So it's not easy to work with all the time. 
So as an approximation algorithms there is the continuous approach where you just formulate a relaxation, find a good fractional solution, and round it. So why is this so -- we were not the one to invent this approach. Actually this is an approach that was used to obtain the tight approximation for monotone submodular function over a single matroid by Calinescu, et al, the work I mentioned. And surprisingly in most applications, essentially in all the applications I mentioned in this talk, the bottleneck is actually the second step. How to compute good fractional solutions in this -- well, see, it's a hard problem. So usually the rounding is not a big issue. But the issue's how to find the good fractional solution. And also this step, the second step of finding a good fractional solution is different -- differs from the monotone and non-monotone case. I mean, the non-monotone case you have very involved algorithms using simulated annealings and then give you all these not very nice numbers as guarantees. This also is -- was by attended Chekuri, Vondrak, and Zenklusen very recently. So interesting question as we saw, can we gap some of the -- bridge some of the gaps? And in some cases I told you in advance that we can. And also can simplify, for example, the computational fractional solutions from non-monotone functions can we improve the result or, in other words, can we get nice guarantees somehow? So what we will see in this talk, so already told you that we will see a linear-time tight algorithm for the unconstrained problem. And we will also see a simple way of computing fractional solutions. And the advantages of this method is that first of all, it will be a single algorithm that works both for the monotone and non-monotone. So essentially there's no distinction any more between the two cases. It will give improved results for the monotone case and also give improved and nice results for the non-monotone cases. Again, everything with single algorithm. 
So no needs to distinguish between simulated and annealing and continuous, greedy and so on. And for example, as I mentioned a few applications, immediate applications of the second step are information type results for submodular welfare with any number of players or submodular max-SAT. Or this is the guarantee we get, 1 over E for the non-monotone case when this improves, for example, these two results immediately. So again, as in approximation algorithms, if you improve the so sever or how you compute fractional solutions, everything improve essentially all the results. So these are just two well studied examples. So if there are no more questions about this introduction, I'll start with the unconstrained submodular maximization problem. So first let's see why the greedy approach fails? So why do we look at greedy? Had because greedy was useful in the monotone case. So we saw that it gives one-half in the discrete setting, a greedy one-half approximation. I'm telling this is what is called the continuous greedy algorithm, which I'll mention at the end of the talk. So it gave tight result in the monotone setting. So let's see why it fails, completely fails in the non-monotone case? So this is a very simple example we have, N plus 1 elements in the ground set. And let's say we have a V and U on up to UN and we have an indicator function whether the subset contains V. And this function is of course submodular. And we have an indicator function for FUI, which says whether UI's in the subset and V is not in the subset. And this indicator function is also submodular. And the objective is just non-negative combination of those but would put a slightly larger weight on these function. So what the greedy will do now -- so there's nothing. And which element is best to choose? Chooses V because it gains 1 plus epsilon. And once it chooses V, it is done. It can't gain anything more. And what the optimal solution, just choose all the Us. So there's a gap of N. 
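The bad example for greedy can be reproduced in a few lines. This is a minimal sketch under stated assumptions: the names `make_bad_instance` and `greedy`, the default `eps`, and the stopping rule (stop when no element has a strictly positive marginal) are choices made for this illustration; the talk only describes the objective and what greedy does on it.

```python
def make_bad_instance(n, eps=0.01):
    """Ground set {v, u1, ..., un} with
    f(S) = (1 + eps) * [v in S] + sum_i [u_i in S and v not in S]."""
    ground = ["v"] + [f"u{i}" for i in range(1, n + 1)]

    def f(S):
        has_v = "v" in S
        v_term = (1 + eps) if has_v else 0.0
        # The u_i indicators only count when v is absent.
        u_term = 0 if has_v else sum(1 for e in S if e != "v")
        return v_term + u_term

    return ground, f


def greedy(ground, f):
    """Start from the empty set; repeatedly add the element with the largest
    strictly positive marginal value, and stop when there is none."""
    S = set()
    while True:
        best, best_gain = None, 0.0
        for e in ground:
            if e in S:
                continue
            gain = f(S | {e}) - f(S)
            if gain > best_gain:
                best, best_gain = e, gain
        if best is None:
            return S
        S.add(best)


if __name__ == "__main__":
    ground, f = make_bad_instance(10)
    picked = greedy(ground, f)
    all_us = {e for e in ground if e != "v"}
    print("greedy picks", picked, "with value", f(picked))
    print("all the u's have value", f(all_us))
```

Greedy takes v first because its marginal is 1 + eps, after which every u has marginal zero, so the ratio between the optimum and the greedy value grows linearly with n.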
So actually it means that the greedy approach is meaningless in this case. At least in the combinatorial setting. So now let's see a very -- it looks very simple and yet extremely crucial are these observation. So let's say we have some greedy algorithm that achieves something for the unconstrained submodular optimization problem. Then we can trick it. Instead of giving the algorithm access to F, we give it value oracle access to the complement of F. So what is the complement of F? Just the value of F on the complement of the subset we've given it. An algorithm doesn't know that. So if the algorithm has any hope in achieving something, then why start with an empty solution and gain elements slowly? You can start with everything you start dropping elements, right? Because it's a complement of F. So this is probably why the greedy doesn't make any sense. So why -- in the sense very asymmetric. And know that the optimal value of the complement of F has the exact same value and optimum is actually the complement of the original optimum. So everything is just mirrored. And these are the two obvious questions we ask. Why start with nothing and where you can start with everything and start dropping things. So let's start with the first attempt and see if we can do something. So this is the vertices of the hypercube are the possible solutions. So let's start with two solutions. So two sets, the empty one and the full one. And now let us look at the first element let's say this direction. So delta 1 is just the change in the value of the objective once we move this point here, which is equivalent of choosing the first element. And delta 2 is the change in the value of the function once we remove the first element. So it's moving from here to here. So now what do you do? Just greedily choose the best option. Let's say delta 1 is bigger, so we move this points here. Now, we look at the second element and let's say delta 2 is bigger so we move the upper point there. 
And in this case, let's say delta 1 is bigger again, and these two points collide. And let's say this is the output. So this is extremely simple. And this is actually the algorithm. So just make one pass over the elements, I from 1 to N. And this is the definition, the formal definition, of delta 1 and delta 2, and if delta 1 is better, then choose that element. So S just remembers what the algorithm chose so far. And if not, then removing that element means not adding it to S, so just do nothing. So this is the formal way to write the geometric interpretation you saw before with the drawing. And surprisingly this very simple algorithm gives you a one-third approximation. So it's not the one-half we want, but it's a kind of very natural extension of the greedy approach. But in a sense, it utilizes two evolving sets and the symmetry property. Of course this runs in linear time. And we can prove, with Moran Feldman and Seffi Naor, that this gives you one-third. So how does the proof go? I'll just go over the overview of the analysis. It's not very complicated. But it will take a few minutes. So because we're not really in the greedy setting, we're not going to bound how much we gain in each step; we're actually going to see that we do not lose much. And the amount we lose will depend on how much we have gained. So when I say how much we lose, the question is lose where? So we will lose here. So what do we have here? So let's say SI is just the elements the algorithm chose up to the end of iteration I, and opt I is just the elements of opt from the last N minus I elements. So these two sets are always disjoint, right? This is a subset of the first I elements, and this is a subset of the last N minus I elements. And the creature we are going to look at is SI union opt I, the union of these two. So at I equals 0 this is just the optimum, right? We haven't done anything. And as the algorithm progresses, at the end we get to the output.
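The one-pass algorithm with two evolving sets described above can be sketched as follows. The function name, the tie-breaking rule (add the element when the two changes are equal) and the small path-graph cut instance are assumptions of this sketch; `a` and `b` play the roles of delta 1 and delta 2, and `X` is the set S that remembers what the algorithm chose.

```python
def deterministic_double_greedy(ground, f):
    """One pass over `ground`, keeping a growing set X and a shrinking set Y.

    a = change in f when the element is added to X      (delta 1)
    b = change in f when the element is removed from Y  (delta 2)
    The better of the two moves is taken; ties go to adding (an assumption).
    """
    X = set()
    Y = set(ground)
    for u in ground:
        a = f(X | {u}) - f(X)
        b = f(Y - {u}) - f(Y)
        if a >= b:
            X.add(u)
        else:
            Y.discard(u)
    # After the last element the two sets have collided, so X == Y.
    return X


def cut_value(edges):
    """Cut function of an undirected graph, used here as a non-monotone example."""
    def f(S):
        return sum((u in S) != (v in S) for u, v in edges)
    return f


if __name__ == "__main__":
    f = cut_value([(0, 1), (1, 2), (2, 3)])
    S = deterministic_double_greedy([0, 1, 2, 3], f)
    print(S, f(S))
```

Each element costs two calls for `a` and two for `b`, so the number of value-oracle queries is linear in the size of the ground set.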
So this is just an artificial creature you look at in order to analyze the algorithm. So this loses. So you start from A 0 and you end it with AN, and you lose somewhere along the way. We started with opt, and you end with the algorithm, the value of the output of the algorithm. And the heart of the analysis is just to bound the loss in two consecutive steps of this thing. And how you bound it. So this case it means we chose the element. And in this case we show that the loss is upper bounded by delta 1, which is exactly how much we gained in this step. This is the step we did if the algorithm chose the Ith element. And otherwise we gain again the change in the value of the function of what the algorithm did in this case, which is not to choose the element. So when you sum everything up, just get one-third approximation. Intuitively we see that the total loss from here to here is the total change that both problems had along the entire execution of the algorithm. And this -- when you look at the rates of the change, it gives you one-third approximation. So now look -- let's look at a very special case. So this is just a reminder of what delta 1 and delta 2 is. So first of all, you can prove that always the sum of these two is non-negative and just in submodularity for this. So it means at least one of the steps you will not lose. So if, let's say, 1 the -- the first option is beneficial and the second one is not, then of course it is obvious, at least intuitively, that we want to choose the element. And the other case where it is -- we lose by adding the element and gaining by dropping it, then of course dropping it is equivalent to not adding it. But let's say both are beneficial. And not only let's say -- let's make the question even harder and let's say not all both are beneficial of the same amount. So it's exactly the same change. So what do we do then? So like everything in life, when you have two options and they both look the same, so just flip a coin. 
So what we're going to do is, in this case where both changes are beneficial, we're going to choose randomly. And of course the probability will be proportional to the ratio between these two. So this is the algorithm. So this is exactly the same algorithm. These are the two cases from before. If the second one is non-beneficial, choose the element. If the first option is non-beneficial then don't add it, so it does -- I mean, do nothing. But if both are beneficial, just add the element with this probability. And this is the new algorithm. And quite surprisingly, once you add -- you turn this algorithm essentially into a random process, and this time Niv also joined the gang, you can prove that this gives you a tight one-half approximation. So in a sense -- yes? >>: [inaudible]. >> Roy Schwartz: In expectation. So if you're okay with an expected value it's fine; if not, you can just run it a few times. But it's highly concentrated. And this is in expectation. For example, as in max-cut, in the Goemans-Williamson algorithm, the value of the cut is in expectation. It's one-half -- at least one-half of the optimum in expectation, and it also runs in linear time. So I'm not going to go over the proof overview of this algorithm. But I will actually show what is called the continuous counterpart of this algorithm, which will also give a few intuitions on the third part of the talk, which involves fractional solutions to submodular problems. So in order to define what is continuous or fractional, we need to actually extend the submodular function, because it is a set function. It is defined only over the vertices of the hypercube. So now we want to define it over the entire hypercube. So we are going to use what is called the multilinear extension, so there's this expression here, but it has a very simple probabilistic interpretation. Here a fractional point gives every element a value. So define the distribution in the following way.
Each element chooses itself independently with this probability. This gives you a distribution over subsets. Just take the expected value of the submodular set function over this distribution. So this is exactly what is written here. So note that if X is not [inaudible] but an integral vector, then the distribution has only one outcome, with probability 1. So in this sense it is an extension; it actually extends the function. This is not the only extension. There are other extensions which are useful in other settings. For example, the [inaudible] extension and so on. But I won't go into this. So this is the new objective, the multilinear extension. And what is the continuous counterpart? I'll just show it with the picture. So again we start with these two points and we look at the change. But let's say this time both are beneficial, so instead of choosing randomly we'll just go to some point in the middle that depends on the proportion that previously determined the probability. So we get here and there. And now we look at the change by moving from here to here. But now look, these are the marginal values according to the multilinear extension, not the original submodular set function. And again, let's say this option is beneficial, so we move it here. And again, let's say both are beneficial here. So we end at some fractional point in the middle. And this is the continuous counterpart. So it's not an equivalent algorithm, but intuitively it is what you can call the continuous counterpart. So how does the analysis go here? So this is exactly the same thing that was written before, but instead of S we have a fractional vector holding the fractional values the algorithm chose up to the end of the Ith iteration. And this is defined exactly as before. So, again, this is the iteration and we bound the change in two consecutive elements.
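The probabilistic definition of the multilinear extension given earlier in this passage can be written out directly. The sketch below is only an illustration under stated assumptions: the function name `multilinear_extension` and the dictionary representation of the fractional point `x` are hypothetical, and the exact enumeration over all subsets is exponential, so it is only meant for tiny ground sets (in practice one would estimate the expectation by sampling).

```python
from itertools import combinations


def multilinear_extension(f, ground, x):
    """Exact F(x) = E[f(R)], where each element u joins the random set R
    independently with probability x[u].

    f      : set function taking a frozenset
    ground : iterable of elements
    x      : dict mapping each element to a value in [0, 1]
    """
    ground = list(ground)
    total = 0.0
    for r in range(len(ground) + 1):
        for subset in combinations(ground, r):
            s = frozenset(subset)
            # Probability that the random set R equals exactly s.
            prob = 1.0
            for u in ground:
                prob *= x[u] if u in s else 1.0 - x[u]
            total += prob * f(s)
    return total
```

At an integral point the distribution has a single outcome, so the function returns the value of the original set function, which is the sense in which it is an extension.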
But now, instead of the total movement of the points, because we are in the continuous setting rather than, intuitively, in a random process in the combinatorial setting, we bound it by the average change in the value of these points. This is exactly delta 1 and this is delta 2, and we actually bound it by the average instead of the total. And now you actually get a lower loss. It means that the average -- not distance, but change in value -- of the points is actually the amount you gained. So we actually meet in the middle, which is one-half. And formally you just need a telescoping sum, but intuitively this is exactly what you get. So quite surprisingly this is a very simple algorithm that settles the approximability of this kind of basic submodular maximization problem. It's very simple, runs in linear time and gives you the tight result. So this finishes -- so I'm not going to prove these things. But this is essentially the heart of the proof: you just define this creature and bound the loss you get with it along the execution of the algorithm. So now we see that continuous interpretations can be nice. Of course there's a proof for the randomized combinatorial algorithm as well. But we've seen that the proof of the continuous counterpart is also quite easy. And now we get to the third part, which also involves fractional solutions or continuous algorithms. And as I said, we'll see how to compute better fractional solutions to submodular maximization problems. So just recall all the problems we have. So we settled this problem. And now we will see actually all these applications in one shot, essentially. So the first question is how to formulate a relaxation of a submodular maximization problem. So we have the multilinear extension.
So, as in any approximation algorithm, just find a polytope in the hypercube that contains the feasible family of solutions and maximize the multilinear extension, this time over all points in the polytope. So this is a very simple way, once you have the multilinear extension, to formulate the relaxation. The problem is that the objective now, the multilinear extension, is not convex and it's not concave. So this usually is kind of problematic because you can't use some off-the-shelf solver or something. This function doesn't have a very nice shape. But as we will see, it has some properties that still make it possible to compute good fractional solutions despite the fact that it's not convex and it's not concave. So let's look at what is called the continuous greedy algorithm. And this is Vondrak's algorithm. So let's say this is our polytope, and what do I mean by continuous greedy? So it's greedy: let's say this dot here starts with nothing. So it's all zeros. And let's look at the marginal values of all elements. We have element I. So the marginal value is just adding that element to the current point X and seeing the change in the value of the function. And again note that we're looking now at the multilinear extension, not the original submodular set function. So we look at the marginal values according to the multilinear extension. And now once we have these weights we can actually find the point in the polytope that maximizes this as a linear function. So intuitively, why do we do this? Because, as I'll just mention, the multilinear extension, extremely locally, is almost linear in directions which are parallel to the axes. So if we're just going to move slowly in this direction, it makes sense to find the point in the polytope that maximizes this. So this is kind of a replacement for the gradient in a sense. So we find Y and just move X.
We just move a small delta step in the direction of Y. And now the marginal weights are different because we're at a new fractional point X. So we have a new Y. And again we move a small delta step there. And again and again we calculate each time the new Y and move each time a small step until we decide to stop. So this is a geometric interpretation of Vondrak's continuous greedy algorithm. So if you just want to recall the algorithm, remember this picture. It's always easier. This is the formal definition. The important thing to note here is that intuitively it is easier to think of the algorithm as running in continuous time. So actually the time starts at zero and then goes delta, 2 delta, 3 delta, until we stop. So it will be easy to talk about time when running the algorithm. And as we said, each time compute the marginal values according to the multilinear extension, find the best point of the polytope, move in that direction and update the time. And of course we stop at time 1 because then we are guaranteed to have a convex combination of points in the polytope, right? And if we have a convex combination, then we are feasible. So this guarantees we have a feasible solution. So as we said, it is convenient to think of the time as moving in jumps of delta. It gives a convex combination, and it was first proposed by Vondrak in the context of the submodular welfare problem. So what did Vondrak prove? So this is not exactly the original statement. But it can be written this way, and it will be convenient in our setting. So if F is monotone and P is down-monotone -- so what do I mean that the polytope is down-monotone? So since we are in a maximization problem this is essentially a packing problem, right? So you can always choose less and still be feasible, because the objective is to maximize something. So this is what I mean by a down-monotone polytope.
Then if you terminate the algorithm at time T you get a feasible solution whose value is at least 1 minus E to the power of minus T times the optimal value. You get a fractional solution. >>: [inaudible] exactly the amount of what delta is? >> Roy Schwartz: So I'm hiding some of the details, because you can't choose delta to be 1; delta needs to be small enough, and there are also minus little O of 1 losses here. And in some cases the little O of 1 losses can be omitted. So there are additional tricks. For example, in the case of a matroid they can be omitted. For knapsack constraints we don't know if they can be omitted. But I think, for example, in the matroid setting it's enough to choose 1 over N squared or 1 over N cubed as delta. I don't remember exactly. So it's not like 2 to the minus N. So it's polynomially small, but it's not extremely small. So that will be enough. So I didn't tell you in the beginning of the talk, but some of the details I'm hiding and [inaudible], so as long as you don't ask questions, they will be hidden there. So here -- yes? >>: So the [inaudible] extension is a function of R? >> Roy Schwartz: Yes. >>: The subset R [inaudible]. >> Roy Schwartz: What do you mean subset? Oh, the hypercube essentially, yes. So you need to be contained in the hypercube, otherwise it doesn't have any meaning. >>: So R is the hypercube? Is that what -- >> Roy Schwartz: No [inaudible]. >>: [inaudible]. >> Roy Schwartz: Oh, yeah. I mean, when [inaudible] yeah. R is just summing over all -- it's not the calligraphic R. Maybe it's not the right choice of notation. But it means that R is the random set according to the distribution defined by the fractional values, as I described before. So there are a lot of technical details here, but essentially this is what it means. And the problem is that this fails for non-monotone functions. So the fact that F is monotone here is important.
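The continuous greedy loop described above (compute marginal weights under the multilinear extension, find the best point of the polytope for those weights, move a delta step, stop at time 1) might be sketched like this. Everything concrete here is an assumption made for illustration: the polytope is the cardinality polytope {y in [0,1]^n : sum(y) <= k}, picked only because its linear maximization step is trivial; the multilinear extension is computed by exact enumeration, which only works for tiny n; and the names `multilinear`, `marginal_weights`, `best_vertex_cardinality`, and `continuous_greedy` are hypothetical.

```python
from itertools import product


def multilinear(f, x):
    """Exact F(x) = E[f(R)] by enumerating all 0/1 outcomes (tiny n only)."""
    n = len(x)
    total = 0.0
    for bits in product((0, 1), repeat=n):
        prob = 1.0
        for xi, b in zip(x, bits):
            prob *= xi if b else 1.0 - xi
        if prob > 0.0:
            total += prob * f(frozenset(i for i in range(n) if bits[i]))
    return total


def marginal_weights(f, x):
    """w_i = F(x with coordinate i raised to 1) - F(x)."""
    base = multilinear(f, x)
    return [multilinear(f, x[:i] + [1.0] + x[i + 1:]) - base
            for i in range(len(x))]


def best_vertex_cardinality(w, k):
    """Maximize w . y over {y in [0,1]^n : sum(y) <= k}:
    take the k largest strictly positive weights."""
    order = sorted(range(len(w)), key=lambda i: w[i], reverse=True)
    chosen = {i for i in order[:k] if w[i] > 0}
    return [1.0 if i in chosen else 0.0 for i in range(len(w))]


def continuous_greedy(f, n, k, steps=100):
    """Original update x <- x + delta * y, run from time 0 to time 1."""
    x = [0.0] * n
    delta = 1.0 / steps
    for _ in range(steps):
        w = marginal_weights(f, x)
        y = best_vertex_cardinality(w, k)
        # min(...) only guards against floating-point overshoot past 1.
        x = [min(1.0, xi + delta * yi) for xi, yi in zip(x, y)]
    return x
```

Because the final point is an average of `steps` vertices of the polytope, it is a convex combination and therefore feasible, which is the reason given in the talk for stopping at time 1.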
And as I said before, Chekuri et al. gave ways to solve the multilinear relaxation we saw for non-monotone cases, but there you use simulated annealing techniques and you don't get nice guarantees. Nice numbers, I mean. So let's look again; the solution I'm going to show is extremely simple. So let's see what's going on here. So the algorithm at each step computes the marginal values according to the multilinear extension. These are the weights. And it finds the best point of the polytope according to these weights as a linear objective. So where is the monotonicity used in the proof at all? If it's not used, then it should work also for non-monotone functions. So the only place it is used is just to show that the marginal values are upper bounded by the partial derivative in the direction we are looking at. Why is this true? Because the function is monotone, so the multilinear extension is also monotone. So this is non-negative. And once you divide by this, then you can only increase it. There are no negative numbers here. And of course you can divide by this because if you fix all coordinates and just change one coordinate the function is linear, essentially. This is why it is called the multilinear extension. So I haven't proved it, but it's like a one-line proof. So because the derivative is linear, this behaves like this. So the question is can we do better -- so what is actually going on here is that intuitively we would like to use the derivative, the gradient, and not the marginal values. So how can we correct this? So we know that the marginal value is actually the derivative times one minus XI; this is just because it is linear. It is multilinear. So we are just going to change the update step, right?
We are going -- instead of adding delta times Y in the [inaudible] of I, we're going to correct this in order to mimic the effect of the gradient or the derivative. So this seems intuitive enough, and this is essentially the algorithm we get. This is the only change. So this might look like only a technical change we're making to the algorithm, but remarkably it has a very deep effect. So what we can prove -- again this is with Moran and Seffi -- is that once we make this tiny change to the algorithm, first of all, the algorithm works for non-monotone objectives as well. And we can prove the following. So if you terminate the algorithm at time T, if you are not monotone, you have this guarantee; and if you're monotone you have this guarantee, 1 minus E to the power of minus T, as before. So this is kind of surprising because it tells you that the original continuous greedy, Vondrak's algorithm, is kind of wasteful, or the step size is very wasteful. Why? Because even in the monotone case, the more you gain, the more value you can have, because everything is monotone, right? And we actually do smaller steps. We physically move the point less because we have this correction factor. But the rate at which we gain in the [inaudible] of this solution is exactly the same. So we move less and gain the same. So it essentially means that there is something wasteful in the original algorithm. Then probably the right way to correct it is what we did. And this is of course a very straightforward way. I'm not sure time will permit me to show the overview of the proof for the non-monotone case. You can actually prove that it starts working in the non-monotone setting as well. So let's see now. So this is the guarantee we have. So what happens in the non-monotone case? So we know that if we stop at time T we get T times E to the power of minus T. So the best T is T equals 1, right? This maximizes it, and you get 1 over E.
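The corrected update described above, where the step in coordinate i is scaled by one minus XI, can be sketched as a small variation of the continuous greedy loop. As before, this is a hedged illustration and not the authors' implementation: the cardinality polytope, the exact enumeration of the multilinear extension, and the names `multilinear` and `measured_continuous_greedy` are assumptions made for the example.

```python
from itertools import product


def multilinear(f, x):
    """Exact F(x) = E[f(R)] by enumerating all 0/1 outcomes (tiny n only)."""
    n = len(x)
    total = 0.0
    for bits in product((0, 1), repeat=n):
        prob = 1.0
        for xi, b in zip(x, bits):
            prob *= xi if b else 1.0 - xi
        if prob > 0.0:
            total += prob * f(frozenset(i for i in range(n) if bits[i]))
    return total


def measured_continuous_greedy(f, n, k, steps=100):
    """Corrected update: x_i <- x_i + delta * y_i * (1 - x_i).

    Assumes the polytope {y in [0,1]^n : sum(y) <= k}; f need not be monotone.
    """
    x = [0.0] * n
    delta = 1.0 / steps
    for _ in range(steps):
        base = multilinear(f, x)
        # Marginal weights under the multilinear extension.
        w = [multilinear(f, x[:i] + [1.0] + x[i + 1:]) - base for i in range(n)]
        # Best vertex for these weights: k largest strictly positive weights.
        order = sorted(range(n), key=lambda i: w[i], reverse=True)
        chosen = {i for i in order[:k] if w[i] > 0}
        # The (1 - x_i) factor is the only change from the original update.
        x = [xi + delta * (1.0 - xi) if i in chosen else xi
             for i, xi in enumerate(x)]
    return x
```

One visible effect of the correction: each time a coordinate is chosen, the gap 1 - x_i shrinks by a factor (1 - delta), so after running to time 1 no coordinate exceeds 1 - (1 - delta)^(1/delta), which is roughly 1 - 1/e. The point is dominated by the convex combination the uncorrected update would have produced, matching the "we move less" remark.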
And if T is 1, then we are guaranteed to be feasible. Why? Because we are dominated by a convex combination, essentially. If we stop at T equals 1, the convex combination dominates us, so we are dominated by a feasible solution, and the polytope is down-monotone, so we're still feasible. We're something less than a convex combination. So this is fine. And note that this is kind of surprising because earlier in the talk I showed you that the greedy approach fails for non-monotone functions. So if it fails for the unconstrained case, of course it fails for all the constrained cases. But there the subtle difference was that we were in the combinatorial setting. So once we look at the greedy approach in the continuous setting with the right algorithm, it actually works. So this is probably why it wasn't examined before, because people knew that the greedy fails for non-monotone functions. But the point is it fails only in the greedy setting for non-monotone functions. >>: [inaudible]. >> Roy Schwartz: In the combinatorial setting, yeah. Sorry. So what is going on now in the monotone case? So here the situation is a little bit tricky. So as I told you -- so that gives the one over E for the non-monotone applications, but what about the submodular welfare problem or the submodular max-SAT? I said that we improved the results and got something tight. So how do we do this? So again, we gain at the same rate. And here we wish to end at the latest time we can, because the larger T is, the better we are, right? The more we execute the algorithm, because it's monotone, the more we gain, the better solution we have. So the key insight is that we advance less compared to the original continuous greedy algorithm, because we do smaller steps. So the obvious question is can we stop at times which are greater than 1 and still be feasible?
So intuitively this means that the previous algorithm would actually go out of the polytope; that is essentially what would happen, because it would be more than a convex combination. But here we advance less, so can we stop at a later time and gain in the value? But the situation is a little bit tricky because the correction factor is different for each element and it's different at each step, so it's not clear. And apparently the property that determines the optimal stopping time is what you might call the density of the polytope. So the polytope is just a packing polytope. So all these are non-negative. We have, let's say, N constraints, each saying that some non-negative combination of variables is at most some non-negative number; all packing constraints. So what is the worst constraint? So for each constraint we can look at the right-hand side divided by the sum of the coefficients of the variables. Don't forget that these are all 0-1. Either you choose something or you don't. So the most constraining constraint in the polytope is the one that minimizes this ratio. And this is what you might call the density of the polytope. And you can actually prove, no matter how the algorithm does the steps, that the solution is feasible as long as the stopping time is this expression that depends on the density of the polytope. And when you plug this into the result you actually get this approximation. So what happens now, for example, for the submodular welfare with two players? So in this case the density of the polytope is just one-half, because all the constraints are that each element can go to one of two players. So when you model the problem as a matroid, you just give each element in the welfare problem two copies in the ground set, one for the first player and one for the second player, and you just want to distribute them.
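The density described above is easy to compute for a packing polytope given as A x <= b. The sketch below also plugs the density into a stopping time, but note that the transcript does not record the expression shown on the slide: the formula T = -ln(1 - d) / d (ignoring lower-order terms) is an assumption, recalled from the published version of this line of work, and the function names `density`, `stopping_time`, and `monotone_ratio` are hypothetical.

```python
import math


def density(A, b):
    """d(P) = min over constraints i of b_i / sum_j A[i][j],
    for a packing polytope A x <= b with non-negative entries."""
    return min(bi / sum(row) for row, bi in zip(A, b) if sum(row) > 0)


def stopping_time(d):
    """ASSUMED formula (not stated in the transcript): T = -ln(1 - d) / d,
    with lower-order terms dropped. Only defined here for 0 < d < 1."""
    if not 0.0 < d < 1.0:
        raise ValueError("this sketch only handles densities strictly between 0 and 1")
    return -math.log(1.0 - d) / d


def monotone_ratio(d):
    """Plug the stopping time into the monotone guarantee 1 - e^(-T)."""
    return 1.0 - math.exp(-stopping_time(d))
```

Under that assumption, two players (one constraint per item over its two copies, so density one-half) gives `monotone_ratio(0.5)`, which evaluates to 1 - 1/4 = 3/4, and density 1/K gives 1 - (1 - 1/K)^K.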
So when you look at all the applications -- so that was for two players; if you have K players you get 1 over K, and this gives you exactly information-theoretic tight results for the welfare problem with K players, for any K, and for submodular max-SAT. And as I said before, for the non-monotone cases it gives you a nice guarantee of 1 over E. So I didn't mention -- so as I said before, usually the heart of the problem in these types of questions is how to find good fractional solutions. The rounding is not an issue here. There's the Ageev and Sviridenko method, what is called pipage rounding, for rounding fractional solutions over matroids, so we don't lose anything. Here I'm lying a little bit: in the case of knapsack constraints you lose some epsilon. So because if you put the linear objective, it's like getting an optimal solution. So there's the epsilon of the F betas here. But these are all just technical details. So the surprising thing is that with a very simple and probably correct change to the algorithm we can make it work also for the non-monotone setting and get all these information-theoretic tight results quite simply. So I guess I don't have time for the proof overview of the non-monotone case. I'll just tell you the phases of the proof. It's not very complicated. So if X is the current position of the algorithm and Y is the direction we move in, the first step is to show that -- so this actually means how much we gain by doing one step from time T to time T plus delta, and we show that we gain -- so delta is of course, it's linear, but we gain something that depends on what happens if you move in the direction of opt. So if you are currently at this position and you would have moved in the direction of opt, this is how much you would have gained. But we move in a better direction, we choose the best one, and so on. So we prove this. And this is where all the little O terms come from.
And then we just bound this. And here we actually use the Lovasz extension, which is really nice. And then you just obtain the recursive formula and you are done. So the proof is not extremely complicated. Short proofs are always nice if you have them. So this is roughly the proof. So let's just wrap things up. So what did we see? So the first thing we saw is that just this very simple symmetry observation, and the fact that we can have two evolving sets, gives you a tight linear-time algorithm that essentially resolves the approximability of the unconstrained submodular maximization problem. And also we saw that essentially there's no computational difference between non-monotone and monotone objectives in the fractional setting, or in the continuous setting. So there's no need to distinguish -- the proof of course is different. But the algorithm is the same algorithm. And essentially the bottom line is that the greedy does work for non-monotone objectives, but it only works in the continuous setting and not in the discrete setting. So there are of course tons of open questions and ongoing projects. The first open question is whether 1 over E is the right answer now for computing fractional solutions with a non-monotone objective. So this is the first time there's a nice number. And it's not only a nice number, but it's better than the previous not-nice numbers. So the question is whether this is tight. And remember that the hardness result for a single matroid, for example, is this 0.498 something. Which is also very interesting because it took a lot of time to get a hardness which is below one-half, the Vondrak paper. So we have no idea whether this is tight. We've tried a little bit. But we don't know. So this is a very interesting, important question, and it essentially says what you can do over a single matroid, because in the rounding there we don't lose anything.
Also, there are several other projects that use ideas I mentioned in this talk. So I mentioned also maximization over the intersection of K matroids. So this captures K-set-packing, K-dimensional-matching and so on. The problem is that the techniques that are known for these types of problems are all combinatorial and all local search. And there are gaps even in the linear objective case, not only the submodular setting. So this problem is still open in the usual sense of approximation. And we don't know whether our continuous tools work here. And here the problem -- so we can get a fractional solution. But this time the rounding doesn't work well. So it's not a single matroid. So even for K equals 2, there's a gap. We don't know how to close it. So two other important questions: for example, can the techniques used here be used for problems which are not submodular? So I mentioned max-SAT a few times and generalized-assignment, which is a special case of the matroid constraint. So we have a few intuitions, and probably once I go back home this is one of the things we are going to start doing. So can we actually improve one of these two basic problems using the continuous techniques, or even the symmetry properties that we saw in the unconstrained problem, which also hold in the usual max-SAT problem? So can we use this to get better results? And also another interesting problem, and there is some advancement on this from two or three weeks ago. So we've talked about matroids. But what happens with bases of matroids? So even if you look at the uniform matroid and say you want to choose half the elements, this captures maximum bisection, maximum directed bisection in graphs, and apparently -- so we know that the continuous greedy can work in the non-monotone setting. However you don't get a convex combination of points. You get something less.
And completing it, doing this small jump from something that is dominated by a convex combination to a convex combination, because you want a base and not an independent set, might cost you a lot. And it can't be done. So actually we use symmetry properties here. So we have improved results on these, but only for a uniform matroid; for a general matroid, we don't know yet what to do there. So this is a partial list of, I hope, interesting problems. Thank you. This is it. [applause]. >>: First off, I think this is a very impressive [inaudible]. >> Roy Schwartz: Thank you. >>: And also, I think [inaudible] the continuous algorithm you demonstrated, I think this is [inaudible]. >> Roy Schwartz: No. >>: If you know [inaudible] almost exact; the only difference is that you use FX with one [inaudible] increment. It's -- >> Roy Schwartz: Uses marginal values instead of the gradient or the derivatives? >>: Yeah. It's [inaudible] it's kind of [inaudible] continuous optimization. It's the exact [inaudible]. >>: [inaudible] if you look at it. >> Roy Schwartz: So but it was in the context of linear objectives or any -- >>: [inaudible]. >> Roy Schwartz: Yeah, but [inaudible] but the point here, for example, Vondrak's proof. So he was the one who suggested the continuous greedy. The point in the monotone setting was to show that this works with non-linear objectives. The objective there is also not convex, not concave; it's the multilinear extension. So it might be the same algorithm. But for example -- I guess that Vondrak's proof is quite different because the objective has a completely different shape. >>: [inaudible] the algorithm looks like. >> Roy Schwartz: Oh, actually it's a very simple, very natural suggestion. So -- >>: Very natural [inaudible]. >> Roy Schwartz: Yes. So it's not surprising that it was used, yeah, a few times.
>>: [inaudible] greedy approximation, people have some -- it's also people discover that this is also [inaudible] so it will be interesting. >> Roy Schwartz: So [inaudible] again and again and again. [brief talking over]. >> Roy Schwartz: Here the ->>: Is non trivial. >> Roy Schwartz: Oh. >>: [inaudible] but I just point out that the algorithm framework looks like this one. >> Roy Schwartz: Yes. So essentially if you get something an algorithm that is conceptionally simple and you can prove something that is always better, so if you ever simple solutions that work, of course that's always better. Any other questions? >> Yuval Peres: Thank you. >> Roy Schwartz: Thank you. [applause]