22868 >> Ivan Tashev: It's my pleasure to present Ngoc... to give a talk about probabilistic special modeling capable and...

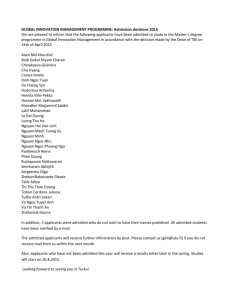

advertisement

>> Ivan Tashev: It's my pleasure to present Ngoc Q.K. Duong here, who is going to give a talk about probabilistic spatial modeling and how to apply it to source separation. He took his Bachelor's degree in his native Vietnam and his master's degree in Korea, and he is going to finish his Ph.D. at INRIA in France. That alone is an interesting path in research, most of it in the area of audio signal processing. So without further ado, you have the floor. >> Ngoc Q.K. Duong: Okay. Yes. Thank you for the introduction, and good morning, everyone. First I'll briefly talk about my background. I'm Ngoc Q.K. Duong, and I'm Vietnamese. For four and a half years I studied for a Bachelor's degree in electrical engineering at the Post and Telecommunication Institute in Vietnam. Then I spent almost one and a half years working as a system engineer in industry, mainly on Polycom video conferencing products, both audio and audiovisual. Then I moved to South Korea for two years to pursue a master's degree in electrical engineering, mainly in signal processing. After getting the degree, I worked [inaudible] as a research engineer at a [inaudible] company, which focuses on sound and speech technology transferred to [inaudible] industry. And then, before really finishing there, I got funding for a Ph.D. and moved to France, to INRIA, the French national research institute, [inaudible], to pursue my Ph.D. [inaudible] it will be October of this year, so four more months: exactly three years of Ph.D. That is briefly my background. Today I'm happy to present my Ph.D. work, probabilistic spatial modeling of reverberant and diffuse sources applied to audio source separation. Please feel free to interrupt me at any time with questions or comments. Okay. Here we go.
So the problem we're looking at is the cocktail party effect, where many sound sources are mixed together, in a meeting room, for instance, or in a patio, which prevents people from understanding. For instance, here's a real recording I made in a meeting room at INRIA. [recording]. Okay. He's the director of INRIA. We agree it's very difficult to understand, especially for a speech recognizer, with lots of background noise and interference like that. So our goal is to separate the sources from the recorded signal, which is a mixture. This problem is known as blind source separation, or BSS. BSS has lots of practical applications, for instance in hearing aid systems and in speech recognition, which you are very familiar with, and also in robotics or music information retrieval, where people try to separate different components like [inaudible] or the speech component to perform some music information retrieval task. Considering the recording environment, in reality we have two types of environment: one is anechoic and the other one is reverberant. An anechoic environment corresponds to a perfect studio or an outdoor recording, whereas a reverberant environment corresponds to a meeting room or a [inaudible]. For instance, if you record a mixture in an anechoic environment, you will hear quite clear sounds like this. [recording]. >> Ngoc Q.K. Duong: You can hear the voices clearly. But if you do the same thing in a reverberant room, what you will hear is this. [recording]. >> Ngoc Q.K. Duong: You hear a lot of echo, and you feel that this one is actually very difficult to process. The reason for this difference is that in an anechoic environment you only record the direct path from the source to the microphone, without any reflection, while in a reverberant environment you have a lot of sound reflections on the way from the source to the microphone. This is a nice picture from the cafeteria at INRIA.
So as you hear, and as you see from here, it's very difficult to model exactly the acoustic path from the source to the microphone in a reverberant environment. Source separation there remains very challenging, while state-of-the-art approaches already give good results in the anechoic case. That's why in my Ph.D. work I focus more on modeling reverberant environments, which have more practical applications. Okay. Now, if you look at state-of-the-art approaches, there are two kinds of information we can exploit. The first is to model and exploit spatial information, that is, the spatial position of the sources. The other is to model and exploit spectral cues, which relate to the spectral information of the sources. In more detail, as spatial cues you can imagine phase and intensity differences. For instance, look at an anechoic recording environment: if the source is here, closer to microphone 1, the signal at microphone 1 will be larger than at microphone 2, so you have a simple proportional amplitude between microphone 1 and microphone 2. If the source moves to position B, which is closer to microphone 2, the spatial cue changes so that microphone 2 receives a larger signal than microphone 1. This very simple example gives you the basic idea of spatial cues. For spectral cues, if you look at the spectrum of speech, you can exploit [inaudible] its smoothness, since the spectrum here is very smooth over the frequency range, and its sparsity, since at a lot of points the coefficients are close to zero. If you look at the state of the art in source separation, there is much work on spectral cues based on statistical models like GMMs, HMMs, or nonnegative matrix factorization, and actually very little work on spatial cues, most of it based on deterministic models.
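The phase and intensity differences described above can be made concrete for a single time-frequency bin. The sketch below is an illustration rather than anything shown in the talk: it assumes two microphones whose complex STFT coefficients `x1` and `x2` are already available, and the helper name `interchannel_cues` is hypothetical.

```python
import cmath
import math


def interchannel_cues(x1: complex, x2: complex) -> tuple:
    """Level difference (dB) and phase difference (rad) of mic 1 relative to mic 2
    for one time-frequency bin (hypothetical helper, for illustration only)."""
    level_diff_db = 20.0 * math.log10(abs(x1) / abs(x2))
    # The phase of x1 * conj(x2) is the phase of mic 1 relative to mic 2.
    phase_diff = cmath.phase(x1 * x2.conjugate())
    return level_diff_db, phase_diff


# Source closer to mic 1: mic 2 receives half the amplitude and lags by 0.3 rad.
x1 = 1.0 + 0.0j
x2 = 0.5 * cmath.exp(-0.3j)
ild, ipd = interchannel_cues(x1, x2)
print(f"level difference: {ild:.2f} dB, phase difference: {ipd:.2f} rad")
# level difference: 6.02 dB, phase difference: 0.30 rad
```

Swapping the two arguments flips the sign of both cues, which is the "position B" situation in the talk, where the source is closer to microphone 2.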
For instance, in an anechoic recording, the deterministic model encodes the source position by the phase and intensity differences. This is far from the actual characteristics of a reverberant environment, and also of diffuse background noise, where you cannot model the spatial information of the sources deterministically. So in my Ph.D., looking at that problem, we switch from the deterministic model to a probabilistic modeling framework for spatial cues. That is our main contribution. Then we design a general architecture for model parameter estimation and source separation. Then we propose several probabilistic priors over the spatial position of the sources and over their spectral information, and [inaudible] the potential of the proposed framework in various practical settings that I'll show later in the simulations. Here is a summary of the contributions, and I will address each of them later on. Okay. So, given that very general overview, here is the content of my presentation. In the next part, I will focus on the general framework and parameterization, where [inaudible] the general Gaussian modeling framework, the spatial covariance parameterization, and the source separation architecture for the general case. Then I move to the center of the problem, which is how to estimate the model parameters. We have a framework and a parameterization, but it is very important to estimate the parameters in order to perform the separation. Then I will present several probabilistic priors that help enhance source separation. And finally I will come to some conclusions. Okay. So now let's look at the problem in a more mathematical way; we have to formulate it. The original sources are denoted by s_j, where j indexes the sources in the room, and what you observe at the microphones is not actually the source itself.
It's the recorded signal [inaudible], and c_j is the recorded source image, meaning the contribution of source j at the microphones. The purpose of source separation is, given the observed mixture, to estimate the source images c_j. Since we have a mixture of several sources, what you really observe at the microphone array is the mixture x(t), an I-by-1 vector where I is the number of microphones, and x(t) is the sum of the c_j(t) for j from 1 to J, where J is the total number of sources. So the problem is: given x, estimate the c_j. Let me emphasize that we are not trying to estimate the original sources; we try to estimate the source images. Okay. So first, the modeling framework. Most approaches use a deterministic model for the spatial cues, which relies on two main assumptions. The first is the point source assumption, where the acoustic path from the source to the microphones is modeled by the mixing vector h_j, so what you observe at the microphones, c_j, is the convolution of the original source s_j with the mixing filter h_j. This is a convolution in the time domain. If you assume that the STFT window length is much larger than the length of h_j, you get the narrowband approximation, where the convolution in the time domain is approximated by a complex-valued multiplication in the frequency domain. Given those two basic assumptions, most state-of-the-art approaches build their source separation systems. But in practice these two assumptions do not hold, for a couple of reasons. For instance, sources are not static: when I talk I'm not fixed like this; sometimes I move, sometimes I move my head, so we cannot model the path by a deterministic mixing vector h_j. Also, with diffuse reverberation, that is, a lot of reverberation, modeling by a single mixing vector may not be enough. For that reason we switch to a probabilistic framework, and here it is.
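Under the two assumptions above, each STFT bin of the mixture is a sum of source images, and each image is the source coefficient scaled by a frequency-dependent mixing vector. A minimal sketch for one bin (n, f), assuming I = 2 microphones and J = 3 sources with made-up values; the helper names `source_image` and `mix` are hypothetical.

```python
def source_image(h_f: list, s_nf: complex) -> list:
    """Narrowband approximation: c_j(n, f) ~= h_j(f) * s_j(n, f), an I x 1 vector."""
    return [h_i * s_nf for h_i in h_f]


def mix(images: list) -> list:
    """Mixture at one bin: x(n, f) = sum over j of c_j(n, f)."""
    num_mics = len(images[0])
    return [sum(c[i] for c in images) for i in range(num_mics)]


# Illustrative mixing vectors h_j(f) (2 mics each) and source coefficients s_j(n, f).
h = [[1.0 + 0.0j, 0.6 - 0.2j], [0.8 + 0.1j, 1.0 + 0.0j], [0.5 + 0.5j, 0.7 - 0.1j]]
s = [0.9 + 0.1j, -0.4 + 0.3j, 0.2 - 0.6j]

images = [source_image(h_j, s_j) for h_j, s_j in zip(h, s)]
x = mix(images)
print(x)
```

Separation is the inverse problem: recover every entry of `images` from `x` alone, which is underdetermined here because there are more sources than microphones.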
So in our proposed framework, we model the source image c_j as a zero-mean Gaussian random variable with covariance matrix Σ_j. Here is the form of the Gaussian probability density; it's simple. Let me emphasize a bit why we use Gaussian modeling here. In source separation, many people use a Laplacian distribution for their sources, but instead of a Laplacian we use a Gaussian here, for a couple of reasons. First, from the physical acoustics point of view, reverberation is generally modeled as Gaussian. Second, it is easy to handle computationally: as I will show later, with Gaussian modeling we get closed-form updates for the parameters, which is good. And we showed, of course, that it works. So this is a very important point. Okay. Furthermore, what you have is this: zero mean and an unknown covariance Σ_j, and in our framework we factorize Σ_j into two parameters, v_j and R_j. Here v_j is the time-varying source variance, which encodes the spectro-temporal power of the source, and R_j is what we call the spatial covariance matrix, which encodes the spatial information of the source. By spatial information we mean both the spatial direction of the source and the reverberation in the room. So all the spatial information of the sources is encoded in R_j, and all the spectral information of the sources, which can be modeled by a GMM or NMF, for example, is encoded in v_j. So you separate it into two components, and both are combined in the covariance matrix Σ_j. >>: Can you go back to the previous slide? So the dimension of the vector here is just frequency? >> Ngoc Q.K. Duong: The vector here, c?
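The factorized Gaussian model can be written down for a single time-frequency bin. This sketch assumes the circularly-symmetric complex Gaussian density commonly used for STFT coefficients (the talk only says "Gaussian"), two microphones, and hypothetical helper names and values; it is not the speaker's implementation.

```python
import math


def scale(v: float, R: list) -> list:
    """Sigma_j = v_j * R_j: scalar spectral power times a 2 x 2 spatial covariance."""
    return [[v * R[i][k] for k in range(2)] for i in range(2)]


def complex_gaussian_logpdf(c: list, Sigma: list) -> float:
    """log N_c(c; 0, Sigma) = -I*log(pi) - log det(Sigma) - c^H Sigma^{-1} c, with I = 2."""
    (a, b), (d, e) = Sigma
    det = a * e - b * d
    inv = [[e / det, -b / det], [-d / det, a / det]]
    # Quadratic form c^H Sigma^{-1} c; real-valued when Sigma is Hermitian positive definite.
    quad = sum(c[i].conjugate() * inv[i][k] * c[k] for i in range(2) for k in range(2))
    return -2 * math.log(math.pi) - math.log(det.real) - quad.real


# Hypothetical values for one source j at one time-frequency bin (n, f).
R_j = [[1.0, 0.3 + 0.2j], [0.3 - 0.2j, 1.0]]  # spatial covariance (Hermitian)
v_j = 2.0                                      # source variance at (n, f)
c_nf = [0.5 + 0.1j, -0.2 + 0.4j]               # source image at (n, f)
print(complex_gaussian_logpdf(c_nf, scale(v_j, R_j)))
```

Keeping v_j and R_j separate means a spectral model (GMM, NMF) only has to produce the scalar v_j, while the I x I matrix R_j carries all the spatial information.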
>>: You have n and f. When you say matrix -- is frequency the dimension of the matrix? >> Ngoc Q.K. Duong: No, the dimension of the matrix is the number of microphones. If you have two microphones, you have a two-by-two matrix. c here is a vector: let's say I have I microphones, so c is an I-by-1 vector. You have one source here, and what you observe at the I microphones is an I-by-1 vector. >>: So no frequency depends on the next one, the adjacent one, right? >> Ngoc Q.K. Duong: No, not really. Here we model each time-frequency point independently. >>: Right. That's what I'm saying. We're making the assumption that what happens at this frequency and at the next frequency is independent. >> Ngoc Q.K. Duong: Yes. >>: Σ_j is separate for each frequency but not [inaudible]. >> Ngoc Q.K. Duong: Yes, true, in general. Later on I'll make a less strict assumption where I allow some dependencies across frequency points. But for the general framework, let me first assume independence and model each frequency point like this. Okay. So, again, v_j is a scalar and R_j is an I-by-I square matrix, where I is the number of microphones. So in the stereo case you have a two-by-two matrix, for each time-frequency point (n, f) and each source j. Is that clear? Okay. In most of the following we will assume that R_j is time-invariant, so there is no time index here. The reason is that we don't deal with the very challenging case of moving sources; we assume static sources [inaudible], so R_j, which models the spatial information, is time-invariant. I will come back to the time-varying case later in my presentation. But at this point, that's it.
This assumption is made by most state-of-the-art approaches, where the spatial location of the sources is modeled by a mixing vector that is time-invariant. Okay. So here, for instance, the spatial information is modeled by a time-invariant h_j(f) that depends only on f, and we make the same assumption. As I said before, our work mainly focuses on modeling the spatial cues, which means we focus on the model for R_j, and in the next slide I'll present the parameterizations of R_j. Okay. Let's look again at the state-of-the-art approaches for R_j in an anechoic recording, where there's no reverberation. There, R_j can be computed directly from the mixing vector. For instance, in an anechoic environment, if you know the source and microphone positions, knowledge from room acoustics allows you to compute the mixing vector from the source to the microphones directly, like this, where the superscript "an" refers to the anechoic environment. The spatial covariance matrix R_j is then computed as h_j h_j^H, and the reason I call it rank-1 is that it is the product of two vectors, so the matrix has rank 1. Okay. If you look at the popular parameterization based on the narrowband approximation I presented before, c_j is approximated by the product of h_j and the original source. If you compute the covariance matrix of c_j, Σ_j = E[c_j c_j^H], it reduces to this form, where this part is a scalar, v_j, which encodes the spectro-temporal information of the source, and the spatial covariance matrix R_j is h_j h_j^H, again a rank-1 matrix. So what I'm saying in this slide is that whether you consider an anechoic or a reverberant recording environment, the state-of-the-art parameterization of R_j is a rank-1 matrix in both cases.
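The rank-1 construction R_j = h_j h_j^H can be checked numerically. This sketch assumes a free-field point-source mixing vector with gain 1/r and delay r/c per microphone (the exact normalization used in the talk is not shown, so 1/r is an assumption), a speed of sound of 343 m/s, and hypothetical distances.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def anechoic_mixing_vector(distances_m: list, f_hz: float) -> list:
    """Assumed free-field model: attenuation 1/r and propagation delay r/c per microphone."""
    return [cmath.exp(-2j * math.pi * f_hz * r / SPEED_OF_SOUND) / r for r in distances_m]


def rank1_covariance(h: list) -> list:
    """R_j = h_j h_j^H, the outer product of the mixing vector with its conjugate."""
    return [[h_i * h_k.conjugate() for h_k in h] for h_i in h]


# Hypothetical source-to-microphone distances of 1.2 m and 1.3 m, at 1 kHz.
h = anechoic_mixing_vector([1.2, 1.3], f_hz=1000.0)
R = rank1_covariance(h)
det = R[0][0] * R[1][1] - R[0][1] * R[1][0]
print(abs(det))  # close to 0 up to rounding: a rank-1 2 x 2 matrix is singular
```

The vanishing determinant is the practical limitation the talk points to: a rank-1 R_j says every observation of the source lies along one direction in microphone space, which reverberation violates.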
So what we do is that we don't want a rank-1 matrix; we want a full-rank matrix, with more free parameters to better model the reverberation. So we look at what we call the full-rank direct+diffuse parameterization, where we exploit knowledge from room acoustics. This model is partly new: it was considered in the context of source localization before, but this is the first time it is considered in the context of source separation. Okay. If you assume that the direct path from the source to the microphones and the reverberation are uncorrelated, the covariance is the sum of the covariance of the direct path, which is computed here from the mixing vector, and the covariance of the diffuse reverberant part. So the underlying assumption is that the direct path and the reverberant part are uncorrelated, where h_j^an is computed as before. If you look at the state of the art, the state-of-the-art model is this one, and in the full-rank parameterization we add the contribution of the reverberation. Okay. >>: I have a question here, if you go back to the previous slide. In the lower equation, some of your channel characteristics are now being captured only in the covariance, in statistics over the expectation of the product. That's going to lose something: it's not the entire channel characterization, only some of its characteristics, in expectation. My question is, when you go to the next slide you're saying we're now going to open up this matrix to be full rank. Do you have some intuition for why covariance is a good means of capturing the characteristics of the reverberant channel? Because it's not 100 percent; it's capturing them in expectation. What do you lose in that representation? >> Ngoc Q.K. Duong: Okay.
What I said before is that most state-of-the-art approaches use a deterministic model of the spatial information through the mixing vector. If you plug that deterministic model directly into our Gaussian modeling framework, which is our proposed framework, that is, if you bring in the state-of-the-art parameterization directly, you get the rank-1 model. >>: I understand that part. But what I'm saying is that here you're going to expand it by using full rank, right? There's still the question of why the covariance structure is the right way to represent reverberation. Is there enough information in the covariance to really tell you about the reverberant channel? >>: Because the question is whether it's Gaussian or not. If it is, then that's probably fine. But maybe it isn't. >>: I was also wondering what's being lost when you turn this into an expectation quantity over -- >>: But if it is Gaussian, then you're just [inaudible] -- you should not be adding that term. Maybe some other combination. >>: That's right. >>: But it may not be Gaussian; we don't know. >>: Yes. >> Ngoc Q.K. Duong: Okay. I understand your question as why we need full rank and why we need more parameters in the covariance matrix. >>: That's not really my question. It's okay, I can ask you later, too. Sorry. I'll say it again, but you don't necessarily need to answer it now; maybe it will become clearer in your talk. My question is: is the Gaussian approximation a good approximation of what's actually happening? >> Ngoc Q.K. Duong: Okay. I would say Gaussian is a good way. I pointed out several reasons why we consider a Gaussian on a previous slide. Of course, you could consider another one, a Laplacian for instance, but it may be more difficult to estimate the parameters later on.
But I haven't tried this. >>: My point is, if it turns out that it's not very Gaussian, then adding more parameters to a Gaussian representation won't necessarily help. But if you have a strong argument for why it is Gaussian -- >> Ngoc Q.K. Duong: Okay. Now I understand your question. In physical acoustics, if you look at acoustics textbooks, most people in the physical acoustics community model reverberation as Gaussian. I mean, they don't model both parts as Gaussian: the direct path is not Gaussian. But the diffuse reverberant part is really modeled as Gaussian. So that is one of the reasons we consider it. >>: It's staged: as it bounces off the walls, the speech signal distribution basically moves from the sharper [inaudible] and becomes more and more Gaussian, and the tail is completely Gaussian, pretty much an infinite number of [inaudible]. But between the direct path and the tail, it goes from the sharper distribution, and this is what is actually missing there. >> Ngoc Q.K. Duong: Yeah. I'm sorry for that. For example, if you look at the model for the original source itself, s -- okay, let me come back to this one. If you look at the model for the source here, it's obviously not Gaussian; it's more like a Laplacian. But now you're looking at the model for its image at the microphones. There's a lot of reverberation, so it could have a less sharp distribution. >>: Okay. Thanks. >> Ngoc Q.K. Duong: Let's stop here. This model was considered in the context of source localization in 2003, in the first paper I saw. Now we bring it into the context of source separation and see how it performs there. >>: So the second matrix -- sorry. >> Ngoc Q.K. Duong: So, yeah, if [inaudible] is satisfied and if you know [inaudible], then you know everything.
Knowledge from room acoustics allows you to compute Ω and σ directly. For instance, if the room is rectangular and you know the [inaudible] coefficient, the reverberation time, and the area, you can compute the proportion between the reverberant part and the direct path. And it's very nice that this covariance matrix here is like a sinc function. This has been proved already. So it's kind of deterministic: if you know everything, you have this model. >>: So the second -- all I could think of is a 1954 paper, I can't find it, I believe. Where did you get the second one? >> Ngoc Q.K. Duong: This one? >>: Yeah. >> Ngoc Q.K. Duong: From a textbook. >>: Okay. >> Ngoc Q.K. Duong: It's a very old textbook, from the '60s or something, where it's proved already. And this equation is also written -- it's my mistake, I should mention a reference here -- in Marco Wire [phonetic] [inaudible]. >>: I think his paper [inaudible] it's the second, I think. >> Ngoc Q.K. Duong: Marco Wire [phonetic], so easy to model -- >>: That's an earlier paper. The 1954 one is the computation of the [indiscernible] covariance matrix, but no one uses it. >> Ngoc Q.K. Duong: That's why it's not really new, but it's new in the context of source separation, so I consider it here. But really this is not what I want; I'm just moving from the rank-1 model to the one I want. What I really want: here you see that it is full rank, but these two terms are constrained. You have to know everything, and the contribution of this part and the contribution of this part are too constrained. What we want is to relax all the constraints and represent R_j as a truly full-rank matrix. Okay. So what does this look like? In the state-of-the-art rank-1 models, whether rank-1 anechoic or rank-1 convolutive, the spatial covariance matrix is a product of two vectors; we say a rank-1 matrix.
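The direct+diffuse model adds a diffuse-field term to the rank-1 direct-path covariance, R_j = h_j h_j^H + σ_rev² Ω, where for two omnidirectional microphones spaced d apart the ideal diffuse-field coherence is the sinc function sin(2πfd/c)/(2πfd/c) mentioned above. The sketch treats σ_rev² as a given number (the talk says it can be derived from room acoustics, but that formula is not shown), and the direct-path vector, the speed of sound, and the helper names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def diffuse_coherence(f_hz: float, spacing_m: float) -> float:
    """sin(x)/x with x = 2*pi*f*d/c: ideal diffuse-field coherence between two omni mics."""
    x = 2 * math.pi * f_hz * spacing_m / SPEED_OF_SOUND
    return 1.0 if x == 0 else math.sin(x) / x


def direct_plus_diffuse(h: list, sigma2_rev: float, f_hz: float, spacing_m: float) -> list:
    """R_j = h h^H + sigma_rev^2 * Omega for two microphones."""
    w = diffuse_coherence(f_hz, spacing_m)
    omega = [[1.0, w], [w, 1.0]]
    return [[h[i] * h[k].conjugate() + sigma2_rev * omega[i][k] for k in range(2)]
            for i in range(2)]


# Hypothetical direct-path vector and reverberant power; 20 cm spacing as in the talk's setup.
h = [cmath.exp(-0.4j) / 1.2, cmath.exp(-0.9j) / 1.25]
R = direct_plus_diffuse(h, sigma2_rev=0.3, f_hz=1000.0, spacing_m=0.2)
det = (R[0][0] * R[1][1] - R[0][1] * R[1][0]).real
print(det > 0)  # True: the diffuse term makes the matrix full rank
```

Apart from σ_rev², everything here is fixed by geometry, which is why this model needs so few parameters yet is, as the speaker notes, "too much constrained".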
What I propose in my work is to represent R_j by an unconstrained matrix, where we release all the constraints. So you see across the slides: this one is very constrained, this one is less constrained, and this one, which we propose here, is really unconstrained, so we have more parameters to model the reverberation. Okay. So now we have the Gaussian modeling framework and a parameterization of the spatial covariance matrix, and it's time to design the source separation architecture. Okay. Look at it: you observe x(t), and you transform it into the time-frequency domain to get x(n, f). Given the Gaussian framework, the parameters you need to estimate are the source variances of each source and the spatial covariance matrix of each source. Once you have estimated these, you can perform multichannel filtering to estimate c_j, and then you convert back to the time domain to get what we want. Okay. For the multichannel filtering, there's an equation here: if you know v_j and R_j for all time-frequency points and all sources, this is simply the equation. But let me emphasize that here we assume the sources are uncorrelated, so the covariance matrix of x is the sum of the covariance matrices of each source. This uncorrelatedness assumption is weaker than independence. So this part and this part are ready. What we want now is this part: how to estimate v_j and R_j. This will be our focus. Okay. But before actually estimating these parameters, let me first demonstrate the potential of the proposed framework in oracle and semi-blind settings. In oracle parameter estimation, all the parameters here are estimated from the known source images. This means it provides an upper bound on source separation performance: if you know everything and compute the parameters, it shows you the upper bound.
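Under the zero-mean Gaussian model with uncorrelated sources, the minimum mean-square-error estimate of each source image is the multichannel Wiener filter ĉ_j = v_j R_j Σ_x⁻¹ x, with Σ_x = Σ_k v_k R_k. The equation on the slide is not reproduced in the transcript, so this is a sketch of that standard filter for one bin and two microphones, with hypothetical parameter values and helper names.

```python
def inv2(A: list) -> list:
    """Inverse of a 2 x 2 complex matrix."""
    (a, b), (d, e) = A
    det = a * e - b * d
    return [[e / det, -b / det], [-d / det, a / det]]


def mat_vec(A: list, x: list) -> list:
    """2 x 2 matrix times 2 x 1 vector."""
    return [A[i][0] * x[0] + A[i][1] * x[1] for i in range(2)]


def wiener_estimates(x: list, variances: list, spatial_covs: list) -> list:
    """c_hat_j = v_j R_j Sigma_x^{-1} x, where Sigma_x = sum_k v_k R_k (uncorrelated sources)."""
    sigma_x = [[sum(v * R[i][k] for v, R in zip(variances, spatial_covs)) for k in range(2)]
               for i in range(2)]
    y = mat_vec(inv2(sigma_x), x)  # Sigma_x^{-1} x, shared by all sources
    return [mat_vec([[v * R[i][k] for k in range(2)] for i in range(2)], y)
            for v, R in zip(variances, spatial_covs)]


# Hypothetical parameters for J = 3 sources at one bin (n, f), two microphones.
R = [
    [[1.0, 0.5 + 0.2j], [0.5 - 0.2j, 0.8]],
    [[0.7, -0.3 + 0.1j], [-0.3 - 0.1j, 1.0]],
    [[0.9, 0.1 - 0.4j], [0.1 + 0.4j, 0.6]],
]
v = [1.0, 0.5, 2.0]
x = [0.8 - 0.3j, 0.2 + 0.5j]

estimates = wiener_estimates(x, v, R)
for j, c_hat in enumerate(estimates):
    print(j, c_hat)
```

Because the filters sum to the identity, the estimated source images always add back up to the observed mixture; the parameters only decide how each bin is shared among the sources.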
So I just want to show that the upper bound on source separation performance of the proposed framework is higher than the upper bound of the state-of-the-art approaches. If we succeed, then later on we will try to estimate the parameters blindly. That is the purpose of this experiment. In the semi-blind setting, we estimate the spatial parameters from the known source images but estimate the source parameters blindly. I will skip the details and present them later on, but the parameters are estimated in the maximum likelihood sense using the EM (expectation-maximization) algorithm. We evaluate the source separation performance using well-known criteria like signal-to-distortion ratio (SDR), signal-to-interference ratio (SIR), and so on. Are you familiar with these evaluation metrics? >>: Signal-to-distortion ratio and [inaudible] factorization. >> Ngoc Q.K. Duong: Okay. For these criteria, the estimated signal is factorized as the sum of several components: the true source signal, plus some artifacts, plus interference from the other sources. So the signal-to-interference ratio is the ratio between the true source signal and the contribution of the other sources in this factorization. The signal-to-distortion ratio measures the overall distortion: it is the ratio of the true source to all the other distortions. And the signal-to-artifact ratio measures the ratio between the signal and the artifacts, the musical noise for instance. So SDR measures the overall performance of the system, while these measure specific aspects. >>: So you're trying to estimate the actual signal or a filtered version of the signal? >> Ngoc Q.K. Duong: A filtered version of the signal. >>: I have a question about the number of parameters here.
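Once an estimate has been decomposed into target, interference, and artifact components, the three ratios described above are energy ratios in decibels. This sketch covers only that last step: it assumes the decomposition is already given (evaluation toolkits such as BSS Eval compute it with least-squares projections, which are not shown here), and the short signals and helper names are hypothetical.

```python
import math


def energy(sig: list) -> float:
    """Sum of squared magnitudes of a signal."""
    return sum(abs(v) ** 2 for v in sig)


def bss_ratios(s_target: list, e_interf: list, e_artif: list) -> tuple:
    """SDR, SIR, SAR in dB for an estimate already split as s_target + e_interf + e_artif."""
    distortion = [i + a for i, a in zip(e_interf, e_artif)]
    target_plus_interf = [t + i for t, i in zip(s_target, e_interf)]
    sdr = 10 * math.log10(energy(s_target) / energy(distortion))
    sir = 10 * math.log10(energy(s_target) / energy(e_interf))
    sar = 10 * math.log10(energy(target_plus_interf) / energy(e_artif))
    return sdr, sir, sar


sdr, sir, sar = bss_ratios([1.0, 0.0, 0.0, 0.0], [0.0, 0.1, 0.0, 0.0], [0.0, 0.0, 0.1, 0.0])
print(f"SDR {sdr:.2f} dB, SIR {sir:.2f} dB, SAR {sar:.2f} dB")
# SDR 16.99 dB, SIR 20.00 dB, SAR 20.04 dB
```

SDR is lower than both SIR and SAR because it counts interference and artifacts together, which is why the speaker calls it the overall measure.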
So the covariance matrix is full rank and unconstrained: N squared over 2 parameters for each covariance matrix, right? And then you have that separately for each frequency band, right? >> Ngoc Q.K. Duong: I'll show that later on in my slides. Thank you for that. Okay. Yeah. So, as I said, these are well known. Here are the results in two cases. This is the average over ten speech [inaudible] with different [inaudible]; the microphone spacing is 20 centimeters and the distance from the sources to the microphones is 1.2 meters. We compare our proposed full-rank unconstrained parameterization with the full-rank direct+diffuse model, which is partly new as I presented before, with the state-of-the-art rank-1 convolutive and rank-1 anechoic models, and with two well-known baseline approaches, binary masking and l0-norm minimization. And these are the results we have. Regarding your question about the number of parameters: of course, the full-rank unconstrained model has more parameters than the others, because you have three parameters for each source at each frequency point. If you multiply by the number of frequency points and by the number of sources, three, you get the total number of parameters to be estimated. For rank-1 convolutive, which is the most used, we have about half the parameters, because it is rank 1, so there are only two. And it's very interesting that the full-rank direct+diffuse model has very few parameters. >>: Rank 1 would be the square root, right? Is the 3,000 coming from the filter length of the convolutive model, the estimation of the convolutive filter? Because if you have a rank-1 matrix versus a full-rank matrix, it's the square root of the number of parameters, right, not half? Rank 1 is just a vector -- >> Ngoc Q.K. Duong: Okay.
Now, if you consider the state of -- >>: When you say convolutive, is that the number of filter taps you're estimating for the filter response, or why is it so large? >> Ngoc Q.K. Duong: No, no. Okay. For instance, here I use 1,024 taps for the filter, so we need to estimate the positive half of that, which gives 513, the number of frequency bands. We multiply 513 by the three sources. And in the stereo case, each mixing vector is two-by-one. So it's 513 times three times two, and we get this number; for the full rank you get that one. >>: How many microphones? >> Ngoc Q.K. Duong: Two microphones are used. >>: So is this a real recording or is it simulated? >> Ngoc Q.K. Duong: It is simulated. >>: Using the image method? >> Ngoc Q.K. Duong: Yes, the image method. >>: What's the RT60 of the room? >> Ngoc Q.K. Duong: It is 250 milliseconds. >>: Okay. >> Ngoc Q.K. Duong: Yeah. >>: What's the sampling rate for this? >> Ngoc Q.K. Duong: It's 16 kHz. >>: How long a reverberation can you handle with a thousand taps, given the limitation of the [inaudible] approximation? >> Ngoc Q.K. Duong: Okay. So at 16,000 and with only 1,000 taps, it's like one sixteenth or so. >>: [inaudible] milliseconds. >>: Much less than 250. >>: So you're past where the reverberation goes down [inaudible] dB, and that explains the [inaudible] numbers there. >> Ngoc Q.K. Duong: Okay. Again, so we have 16 -- >>: 16 kilohertz, 1,000 samples. It's a sixteenth of a second, [inaudible] milliseconds. This is roughly -- >> Ngoc Q.K. Duong: Okay. >>: What you have is the RT60, which is where the reverberation goes down by 60 dB. So maybe you're taking the span of the reverberation where it goes down by 20 dB. >> Ngoc Q.K. Duong: That's our purpose, because we need to take a short filter compared to the actual reverberation; if you take [inaudible] it has no meaning. >>: If you have [inaudible]. >> Ngoc Q.K. Duong: A misunderstanding here.
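The arithmetic in this exchange can be worked through with the numbers stated in the talk (1,024-sample window, 16 kHz, RT60 of 250 ms). The decay figure assumes an ideal exponential reverberation tail, which loses 60 dB per RT60 by definition; that idealization and the helper name are assumptions, not something the speaker stated.

```python
def stft_summary(window_len: int, fs_hz: float, rt60_s: float) -> tuple:
    """Frequency bins, window duration (s), and dB of decay of an ideal
    exponential reverberation tail within one window (60 dB per RT60)."""
    num_bins = window_len // 2 + 1  # DFT bins from 0 Hz to Nyquist for a real signal
    window_s = window_len / fs_hz
    decay_db = 60.0 * window_s / rt60_s
    return num_bins, window_s, decay_db


bins, win_s, decay_db = stft_summary(1024, 16_000, 0.250)
print(f"{bins} bins, window {win_s * 1000:.1f} ms, decay within window {decay_db:.1f} dB")
# 513 bins, window 64.0 ms, decay within window 15.4 dB
```

Under this idealization the window spans roughly the first 15 dB of the decay, in the same range as the audience's "goes down by 20 dB" estimate, so the narrowband approximation cannot capture the full 250 ms tail.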
>>: [inaudible] work on it anymore. >> Ngoc Q.K. Duong: Okay. So, yeah, here are the results for the oracle. For the oracle, of course, you have much larger numbers, because everything is estimated from the known source images, and the separation performance is degraded in the semi-blind case, but overall you can see that the proposed full-rank unconstrained model outperforms the others. >>: So you said your matrices were number of microphones by number of microphones. Here they're just two-by-two. >> Ngoc Q.K. Duong: Yeah. >>: So then the explosion in parameters is very small, because N squared is just four, as opposed to, say, ten mics. So I'm just wondering: could you break down the 4,617, where those parameters are coming from for the full-rank case? >> Ngoc Q.K. Duong: For the [inaudible] microphone case? >>: No, no, just for your case. I just want to know the breakdown of the 4,617, where those parameters are coming from. >> Ngoc Q.K. Duong: In terms of spatial parameters? >>: Just what are the -- because you broke it down for the rank-1 convolutive one, you said 512 taps, times -- >> Ngoc Q.K. Duong: You want -- >>: In this case. >> Ngoc Q.K. Duong: Okay. For this one we also have 513 frequency bands, and you also have three sources. Let me emphasize that we are focusing on the underdetermined case, where we use fewer microphones than sources, which is much more challenging. That's why I used stereo for three sources. So, coming back: you have 513, you multiply by three sources, and you multiply by three, the number of distinct entries of each two-by-two Hermitian matrix, for each point. So you get that. But what we were really happy and surprised about is this model: with very few parameters it provides quite good results, a bit below the state of the art here, but it uses very few parameters. Let me explain why it is eight. If you look here, what you need to know is the microphone spacing, which is one parameter.
And you need to know the distance from each source to each microphone. So if you have two microphones and three sources, you have six parameters, right? Six parameters for the distances, and the microphone spacing makes seven. And then you need to know the reverberation time, and that makes eight. >>: So are you estimating the room parameters as well in those figures? >> Ngoc Q.K. Duong: No. For that I assumed they are known. Yeah. >>: But you could estimate those in principle? >> Ngoc Q.K. Duong: That is one piece of future work I will mention, yeah. It would be nice if we could estimate those parameters and incorporate them. But for now, I assume we know ->>: So actually, if you look at the upper table, the full-rank unconstrained and the full-rank direct+diffuse are fairly close. It's only when you do the estimation ->> Ngoc Q.K. Duong: Yeah, yeah. >>: -- that all of them -- >> Ngoc Q.K. Duong: Yeah. That is really amazing. It means that if we know everything exactly, this model is quite good at handling the reverberation part. >>: Even before you do this future work of trying to estimate those, it might be interesting just to see how sensitive those results are to small perturbations in the room estimate. Because if it's robust to ->> Ngoc Q.K. Duong: I see what you mean. When I presented at a conference, some people asked me about that variation. But given the short time for my thesis, I haven't had much time to investigate it; I have been investigating this other part instead. In France we have almost two and a half years for research and half a year to write the thesis, so I actually have only two years to do these things. This is shorter than in the U.S. But you're right. Okay. Let me continue. So now I have shown that, for the upper bound on source separation, you get good performance. So let's move to the real parameter estimation.
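The parameter breakdown discussed above can be reproduced with a few lines of arithmetic. This sketch counts complex-valued entries once. For the full-rank model it counts only the distinct entries of each Hermitian matrix, meaning the diagonal plus the upper triangle. That convention is our assumption. It does reproduce the quoted 4,617 (513 × 3 × 3), whereas counting all four entries of each 2×2 matrix would give 6,156. The eight-parameter count follows the speaker's two-microphone breakdown. The ten-microphone line is a hypothetical case illustrating the audience's concern about larger arrays.

```python
def n_frequency_bins(fft_size: int) -> int:
    """Non-negative frequency bins of a real-signal FFT (DC up to Nyquist)."""
    return fft_size // 2 + 1


def rank1_param_count(n_bins: int, n_sources: int, n_mics: int) -> int:
    # one complex mixing vector of length n_mics per source and frequency bin
    return n_bins * n_sources * n_mics


def full_rank_param_count(n_bins: int, n_sources: int, n_mics: int) -> int:
    # one Hermitian n_mics x n_mics matrix per source and bin; only the
    # diagonal and upper triangle are free: n_mics * (n_mics + 1) / 2 entries
    return n_bins * n_sources * n_mics * (n_mics + 1) // 2


def direct_diffuse_param_count(n_sources: int, n_mics: int) -> int:
    # two-microphone case from the talk: every source-to-mic distance,
    # plus the microphone spacing, plus the reverberation time
    return n_sources * n_mics + 1 + 1


bins = n_frequency_bins(1024)
print("bins:", bins)
print("rank-1 convolutive:", rank1_param_count(bins, 3, 2))
print("full-rank unconstrained:", full_rank_param_count(bins, 3, 2))
print("full-rank direct+diffuse:", direct_diffuse_param_count(3, 2))
print("full-rank, hypothetical ten mics:", full_rank_param_count(bins, 3, 10))
```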
>>: Don't we want to hear them, then? >> Ngoc Q.K. Duong: Okay. Nice. [laughter] We have about 15 minutes more. Okay. Here is just one specific case, which I ran a long time ago. But recently, while writing my thesis, I tested on a very large database and got similar results with different source-to-microphone distances and different reverberation times. They're all there. For instance, you have this [recording]. Okay. There are three sources. And for the rank-1 convolutive case, the state of the art, one voice: [recording]. Okay. And in our case, you hear: [recording] Okay. Again: [recording]. >>: Full rank in the thesis. >> Ngoc Q.K. Duong: Okay. Yeah, you have more. [recording]. So in this one, you have lots of musical noise, right? Those are artifacts, which is what SAR measures, and you have more musical noise. >>: So in the oracle results, what you mean by oracle is just the geometry? >> Ngoc Q.K. Duong: It means we know everything; we know the sources. >>: You know the whole signal. >> Ngoc Q.K. Duong: We know the whole signal, yes. >>: Not just the signals. [laughter]. >> Ngoc Q.K. Duong: It gives an upper bound on performance. >>: I know the signals. >> Ngoc Q.K. Duong: Okay. [laughter]. That's why I didn't want to put it out here, because it assumes I know everything. But I just want to show what happens if the parameters are estimated very well, I mean, if they are known. So we showed that. >>: In the lower case, the geometry is known but not the source images, right? >> Ngoc Q.K. Duong: Yeah, yeah. Now ->>: I have one question about this. Do your figures of merit depend on frequency as well? >> Ngoc Q.K. Duong: Yeah. >>: It seemed like for the full-rank unconstrained, most of the signal to -- most of the interference was low frequency. >> Ngoc Q.K. Duong: Yeah. >>: That there wasn't a lot of leakage of high frequencies from the other sources. >> Ngoc Q.K. Duong: Uh-huh.
>>: Is that -- why would it -- first of all, is that truly happening, and what's causing it? Why would the low frequencies leak more than the high frequencies from the interfering sources? >> Ngoc Q.K. Duong: Of course, for speech sources most of the energy lies in the low frequencies, right? So, of course, in the low frequency range you have more interference; I mean, you can hear more interference, because the mixture itself has more energy in the low frequency range, right? And mathematically I don't see a difference between low and high frequencies in my framework, because we treat them equally and apply the same parameterization in all frequency bins. >>: The interference when you played the full-rank unconstrained wasn't low-volume male speech; it was muffled male speech, where the low frequencies were leaking through. So have you looked into what could be causing that in your framework, or -- >> Ngoc Q.K. Duong: Actually, I didn't look closely at the spectrum. Does that really affect a speech recognition system or something? >>: Yeah. >> Ngoc Q.K. Duong: Okay. Okay. Thank you for that. I will look at it more. Or maybe it is just one specific case, because we have three sources and I played only one of them. When you hear another source, maybe it's different; I don't know. But for this one, it sounded even to me. >>: A similar thought: maybe you haven't done this analysis, but the particular microphone spacing is going to make some difference. You are not going to have the same estimation capability at different frequencies for a given aperture. That might be where the limitations are coming from. >>: Either that or the framework ->>: Yeah, exactly. >> Ngoc Q.K.
Duong: On that frequency resolution, let me present it on this slide, because I also have some work on frequency resolution where, instead of the [inaudible] transform, which provides a linear resolution, I use a more acoustically motivated representation that provides more resolution in the low frequency range. I'll present it later in this talk. So let me move on. Okay. So now we move to the next part, where I present a general framework to estimate the parameters from the mixture. Okay. Here is the structure. I chose expectation-maximization, EM, which is well known for the Gaussian mixture model. And EM, as you may know, is very sensitive to initialization, so we need to design a good parameter initialization scheme. And in the maximum likelihood case, since we estimate the parameters independently in each frequency bin, there may be a permutation problem, so later on we need to solve it. What is new in this framework is that in most signal processing approaches, the input for parameter estimation is the signal x itself. But in our case we consider as input the empirical mixture covariance, sigma-hat x, instead of the signal itself. I will explain this later. So here we have several blocks for blind parameter estimation, and we also consider parameter estimation in the maximum a posteriori sense, which, okay, is a mathematical term: in this case we have some prior knowledge about the parameters, so we can estimate them jointly and we don't need to solve the permutation problem. So this is the general block diagram, and next I will present each step in detail. For these two steps, I just exploit existing methods, which are not our main contribution. Our main work focuses on these three parts. So here is the parameter initialization.
I mainly rely on the work of Winther [phonetic] presented in [inaudible], which applies hierarchical clustering to the mixture in each frequency bin after some [inaudible] and amplitude normalization. For the sake of time, let me skip this slide, because it's not our main contribution; it's just for parameter initialization. Okay. I'll explain more about the empirical mixture covariance, and why we use sigma-hat x as the input instead of the signal itself. So, okay, we need to estimate v_j and R_j using this relation for sigma x. But in practice sigma x is not observed. What we really observe is the empirical mixture covariance, sigma-hat x, and we compute sigma-hat x as the average over the neighboring time-frequency points, like this. This can be interpreted as [inaudible]. Later on I will present results with the equivalent rectangular bandwidth (ERB), a more acoustically motivated time-frequency representation. And why do we want to estimate the parameters from sigma-hat x instead of the signal itself? Because, compared to x, sigma-hat x provides information about the interchannel correlation, and it takes into account the neighboring frequencies, as you mentioned before. So we slide this window and rely on the neighboring time-frequency points. >>: Wait. Go back. So you're mixing between neighboring channels and neighboring frames here, is that what you're saying? >> Ngoc Q.K. Duong: Not channels, only time-frequency regions. >>: No, I understand. But time-frequency regions. >> Ngoc Q.K. Duong: Yeah. >>: So this is time n and this is frequency. So it gives the same frequency bin, one frame back. >> Ngoc Q.K. Duong: Yeah. >>: And the previous frame, plus the two neighboring frequencies. >> Ngoc Q.K. Duong: Yeah, in the general case. If you set the window length to two, you have two-by-two. I tested several cases with different window lengths [inaudible], and I found that two-by-two provides the best performance, so we said okay.
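The local empirical covariance described above can be sketched as a weighted average of the outer products x x^H over a neighborhood of each time-frequency point. This is a minimal sketch under our own assumptions, not the speaker's implementation:

- The kernel is centred and odd-sized, for simplicity. The talk reports that a two-by-two neighborhood worked best, which would need an off-centre kernel.
- The 3×3 weights below are purely hypothetical.
- Neighbours that fall off the grid are ignored and the remaining weights are renormalised.

```python
import numpy as np


def local_mixture_covariance(X, kernel):
    """Weighted local average of x x^H around every time-frequency point.

    X      : complex STFT of the mixture, shape (F, N, I) = (bins, frames, channels)
    kernel : non-negative weights, shape (2*Kf + 1, 2*Kn + 1), centred on (f, n)
    returns: array of shape (F, N, I, I)
    """
    F, N, I = X.shape
    kf, kn = kernel.shape[0] // 2, kernel.shape[1] // 2
    outer = X[..., :, None] * X[..., None, :].conj()  # x x^H at every point
    cov = np.zeros((F, N, I, I), dtype=complex)
    for f in range(F):
        for n in range(N):
            acc = np.zeros((I, I), dtype=complex)
            total_weight = 0.0
            for df in range(-kf, kf + 1):
                for dn in range(-kn, kn + 1):
                    ff, nn = f + df, n + dn
                    if 0 <= ff < F and 0 <= nn < N:  # ignore neighbours off the grid
                        w = kernel[df + kf, dn + kn]
                        acc += w * outer[ff, nn]
                        total_weight += w
            cov[f, n] = acc / total_weight  # renormalise so weights sum to one at edges
    return cov


# hypothetical 3x3 kernel: centre weight 1, neighbours weighted 0.5
kernel = np.array([[0.5, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 0.5]])
rng = np.random.default_rng(0)
X = rng.standard_normal((8, 10, 2)) + 1j * rng.standard_normal((8, 10, 2))
Sx_hat = local_mixture_covariance(X, kernel)
print(np.linalg.matrix_rank(Sx_hat[3, 4]))  # a single x x^H would have rank 1
```

A single outer product x x^H always has rank 1. Averaging several of them over a neighborhood gives a full-rank estimate, and this is what carries the interchannel correlation information mentioned in the talk.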
This one is the center point, and this one contributes half of that, so you have a shaped window. Okay. And here is the general expectation-maximization algorithm. It includes an E-step and an M-step. In the E-step we estimate the multichannel [inaudible] and the estimated covariance of each source image, sigma-hat c_j. I will skip the details. In the M-step, the parameters v_j and R_j are updated iteratively, like this. The update of v_j depends on R_j, and the update of R_j depends on v_j. This is in the maximum likelihood sense. In the maximum a posteriori sense, v_j and R_j can be estimated by closed-form updates that include the contribution of the likelihood and the contribution of the prior, which I will present later, where I introduce some [inaudible] parameter to take into account ->>: You estimate both of these, v and R, simultaneously? >> Ngoc Q.K. Duong: Yeah, because we don't know these two parameters, right? Yeah. >>: From the same amount of data? >> Ngoc Q.K. Duong: Yes, from the same amount of data. >>: Because v, in theory, you could learn with a lot more data; it's nothing spatial, right? >> Ngoc Q.K. Duong: Yes, I see. There are many approaches that learn v_j. But to learn v_j, the training data should be close to the isolated data. In the general source separation context, when some people walk into a room and talk, you don't really have training data. Also, the state of the art offers many models for v_j: you can represent v_j with an NMF model or a Gaussian mixture model, and train that model to get an estimate of v_j. But here, because my framework focuses on R_j, we assume that there's no prior information on v_j. >>: But v_j is indexed by n and f, so it's a ton of parameters, right? >> Ngoc Q.K. Duong: Uh-huh. >>: As many as the [inaudible] length? >> Ngoc Q.K. Duong: Yes. If you do not put any prior information on it, yes, it has tons of parameters.
But if you put some model on v_j, for instance an NMF model, then v_j would be some activations times some spectral patterns, so you have fewer parameters. >>: Are you doing this optimization separately for -- >> Ngoc Q.K. Duong: Yes, it is done separately for each source and each frequency. >>: But not each frame. You're tying this across the entire input. >> Ngoc Q.K. Duong: Yes, because here R is computed as a sum over all frames, normalized by v_j. Yeah. Okay. Is that okay? Yes. For the permutation problem, let me skip it, because I just reproduce the work of [inaudible] in Japan, which exploits the DOA, the direction of arrival of the sources, to solve the permutation problem. Okay. So here are some results we get. We use the average SDR, which measures overall distortion, over 10 simulated speech mixtures. We compare the full-rank unconstrained model with the rank-1 convolutive case and with the baseline approaches, binary masking and L1 minimization. At this stage we don't include the other models, like full-rank [inaudible], because we already showed that they have a lower performance upper bound, so at this stage of the research I only consider the full-rank unconstrained model. Here are the results for a very low reverberation time of 50 milliseconds. You will see that at low reverberation, rank-1 convolutive and full-rank perform the same, because the reverberation is very low. But as we move to more reverberant rooms, our proposed parameterization clearly outperforms the rank-1 convolutive model and the baseline approaches. >>: Are the sources [inaudible]? >> Ngoc Q.K. Duong: No, it's really blind. >>: Static source locations, or are they moving? >> Ngoc Q.K. Duong: The source locations are fixed, but we don't know them. Yeah. For instance, now you hear the sounds at 250 milliseconds. [recording]. So rank one: [recording]. Okay. For our proposed approach: [recording].
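To make the E-step and M-step described above concrete, here is a minimal sketch of maximum likelihood EM for the full-rank model Sigma_x(n) = sum_j v_j(n) R_j in a single frequency bin. It uses unconstrained source variances and takes sigma-hat x as input. The update formulas follow the form we understand from the published full-rank framework: multichannel Wiener gains, posterior source-image covariances, and closed-form updates of v_j and R_j. Treat them as an assumption rather than a transcription of the speaker's code.

Three further simplifications belong to this sketch alone:

- Initialization is random rather than by hierarchical clustering.
- There is no permutation alignment across frequency bins.
- There is no prior.

```python
import numpy as np


def em_full_rank(Sx_hat, n_sources, n_iter=50, seed=0):
    """ML EM for Sigma_x(n) = sum_j v_j(n) R_j in ONE frequency bin.

    Sx_hat    : empirical mixture covariances, shape (N, I, I)
    n_sources : number of sources J (assumed known)
    returns   : v with shape (N, J) and R with shape (J, I, I)
    """
    rng = np.random.default_rng(seed)
    N, I, _ = Sx_hat.shape
    J = n_sources
    eye = np.eye(I)
    # random positive initialisation (the talk uses hierarchical clustering)
    v = rng.uniform(0.5, 1.5, size=(N, J))
    A = rng.standard_normal((J, I, I)) + 1j * rng.standard_normal((J, I, I))
    R = A @ A.conj().transpose(0, 2, 1) + 1e-3 * eye
    for _ in range(n_iter):
        # E-step: source and mixture covariances, multichannel Wiener gains,
        # and posterior second-order statistics of each source image
        Sc = v[:, :, None, None] * R[None]                    # (N, J, I, I)
        Sx_inv = np.linalg.inv(Sc.sum(axis=1))                # (N, I, I)
        W = Sc @ Sx_inv[:, None]                              # (N, J, I, I)
        Sc_hat = (W @ Sx_hat[:, None] @ W.conj().transpose(0, 1, 3, 2)
                  + (eye - W) @ Sc)
        # M-step: closed-form updates, v_j(n) given R_j, then R_j given v_j
        R_inv = np.linalg.inv(R)
        v = np.real(np.trace(R_inv[None] @ Sc_hat, axis1=2, axis2=3)) / I
        R = (Sc_hat / v[:, :, None, None]).mean(axis=0)
        R = 0.5 * (R + R.conj().transpose(0, 2, 1))           # symmetrise rounding error
    return v, R


# synthetic, noise-free statistics for an underdetermined case: 3 sources, 2 mics
rng = np.random.default_rng(1)
N, I, J = 100, 2, 3
B = rng.standard_normal((J, I, I)) + 1j * rng.standard_normal((J, I, I))
R_true = B @ B.conj().transpose(0, 2, 1) + 0.1 * np.eye(I)
v_true = rng.uniform(0.1, 2.0, size=(N, J))
Sx_hat = np.einsum("nj,jab->nab", v_true, R_true)
v_est, R_est = em_full_rank(Sx_hat, n_sources=J)
```

Both M-step updates are exact conditional maximizers, so each iteration should not decrease the likelihood. Even so, the estimates are only defined up to a scaling between v_j and R_j and up to a source ordering.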
>>: I thought I had heard results from rank-1 convolutive mixtures that were somewhat better than this. >> Ngoc Q.K. Duong: In terms of? >>: From other researchers. >> Ngoc Q.K. Duong: In terms of interference, artifacts, or overall? >>: Overall. >> Ngoc Q.K. Duong: Okay. We can hear it again. [recording]. >>: Just my recollection of some companies here ->>: Maybe they weren't in a reverberant room. >>: That's probably the biggest difference, yes. >>: So which depends ->>: How reverberant. >>: Go back one second: what level were you showing those samples at? >> Ngoc Q.K. Duong: 2,000 -- I'm sorry, 250. >>: Okay. >>: So maybe that's the difference, yes. It's possible. >> Ngoc Q.K. Duong: But even for this one, I tested many cases, and full rank clearly performed better. I don't know. >>: I'm saying that in what I've heard at other conferences, the separation was much better, but perhaps there was much less reverberation. >> Ngoc Q.K. Duong: Yeah. >>: Because there they look almost the same. >> Ngoc Q.K. Duong: At low reverberation time it's almost the same. But as I said before, our results get better in reverberant environments. >>: But at lower reverberation times they do as well as yours. >> Ngoc Q.K. Duong: Yes. And recently, for the thesis, I tested a larger number of datasets with different scenarios, and I observed that rank 1 even performed better at low reverberation time. >>: It was even better than 10 dB. Maybe at 50 milliseconds, better than 10 dB. That's my recollection. >>: 17. >>: I'm sorry? >>: 17. You said [inaudible] this is what they used when they -- >>: Like the guys from ATR: the demos I listened to sounded better than 10 dB to me. I know that reverberation is ->>: They have different definitions. >>: Did you use headphones when you listened to them? >>: I tried both. >> Ngoc Q.K. Duong: Okay. So this is one case. >>: I have a question for the room. Was it -- I'm not familiar with the data you're using for this.
And to me both examples sounded unintelligible. I was maybe able to pick out two or three words from either the rank-1 convolutive or the full-rank unconstrained. So the difference doesn't really seem useful at 250. Is this the biggest difference that you see with this technique, or are we going to see better results? >> Ngoc Q.K. Duong: Yes, if you look at the numbers, you do see differences, but here, as I mentioned, I played one specific case. >>: Here I see a 2 dB difference at 250 milliseconds. >> Ngoc Q.K. Duong: Okay. It's like one dB or something. >>: One dB. Quantitatively they're different, but qualitatively I found them both unintelligible. Do you have some experience showing a domain where you use this technique and the qualitative results are positive? >> Ngoc Q.K. Duong: Okay. I will show you later on. But let me emphasize that in the source separation community, one dB of SDR is really a large improvement. If you look at performance year after year, approaches improve by something like 1.3 or 1.5 dB of SDR, so it's not easy to get one dB. >>: This is a significant change. >> Ngoc Q.K. Duong: Yeah, yeah. Let me emphasize that this is really a significant change. And on this slide I show some more results compared with state-of-the-art approaches. In the source separation community we have an evaluation campaign, organized every one and a half years by a steering committee associated with a source separation conference, known as the [inaudible] conference. For this campaign, the organizers provide common data, the participants use their algorithms to separate the files and submit the results, and the organizers evaluate the performance. The data is blind: the participants don't know anything about it, they send back the wave files, and the results are evaluated by the organizers.
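Since this exchange turns on what a one dB SDR gain means, here is a simplified SDR: the energy of the true source image divided by the energy of the total estimation error, in dB. It is an illustration only. The BSS Eval criteria used in the evaluation campaigns additionally decompose the error into spatial distortion, interference and artifact components (ISR, SIR, SAR), and this sketch does not reproduce that decomposition. The test signal is a hypothetical stand-in.

```python
import numpy as np


def simple_sdr_db(reference, estimate):
    """SDR in dB: energy of the reference over energy of the total error."""
    reference = np.asarray(reference, dtype=float)
    error = np.asarray(estimate, dtype=float) - reference
    return 10.0 * np.log10(np.sum(reference ** 2) / np.sum(error ** 2))


t = np.arange(16_000) / 16_000.0
s = np.sin(2 * np.pi * 220.0 * t)  # hypothetical stand-in for a true source image
noise = np.random.default_rng(0).standard_normal(t.size)
print(simple_sdr_db(s, s + 0.1 * noise))

# a 1 dB SDR gain means the error energy shrinks by a factor of 10**(-0.1)
print(10 ** (-1 / 10))
```

Under this definition a one dB improvement removes roughly a fifth (about 21%) of the error energy. That helps explain why it counts as a substantial step in the benchmark numbers even when two examples sound similar.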
And we participated in the [inaudible] 2000 -- recently it's already 2010 -- and we compared with five state-of-the-art approaches: for instance, [inaudible] from NTT in Japan, well known for source separation; one from Columbia University, with Dan Ellis, so very famous; and [inaudible] from Tokyo University. So they are all state-of-the-art approaches. And here I test on the dataset with five-centimeter microphone spacing, and here are the results we have, with three sources and two sources, compared to the state-of-the-art approaches. >>: So those were all the entries? All of those numbers? >> Ngoc Q.K. Duong: Yeah. I compared to all of them. >>: At 250 milliseconds it looks like the key [phonetic] method is 3.7 versus your 3.8. It's close to ->> Ngoc Q.K. Duong: Here? >>: Yeah. >> Ngoc Q.K. Duong: Yeah, sure. I don't [inaudible] claim that I outperform everything; this is really hard. And let me also emphasize that I used five-centimeter spacing, and with a smaller microphone spacing our method does even better, because our method needs to solve the permutation problem. If you have a large microphone distance, you have a lot of phase wrapping, which degrades performance a lot. That's why I chose five centimeters. But the permutation is not the main goal of our approach. >>: So are these your implementations of their algorithms, or is this what they published? >> Ngoc Q.K. Duong: Yeah, yeah, they tested on the data and submitted the results themselves, not me. I mean, we all submitted to the same organizer. So that's another thing. And actually, in 2010 I also participated; I didn't put the results here, but let me summarize. In 2010 the NTT group outperformed the others. And there were two other entries that outperformed this method, one from EPIPI [phonetic] in Switzerland and one from LSA [phonetic], but these two use our framework. That means they used exactly the full-rank model I proposed here in 2009, but they put more constraints on the source variances.
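The phase-wrapping remark can be quantified with the usual spatial aliasing rule. For two microphones spaced d apart, the interchannel phase difference of a plane wave is unambiguous for every direction of arrival only up to f = c / (2d). Above that frequency, DOA-based permutation alignment can be misled. The sketch assumes c = 343 m/s. The 1 m spacing is an illustrative wide setting chosen for contrast, not a figure from the talk.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound at room temperature


def max_unambiguous_freq_hz(mic_spacing_m, c=SPEED_OF_SOUND_M_S):
    """Highest frequency at which the interchannel phase difference of a
    plane wave stays within [-pi, pi] for every direction of arrival,
    i.e. where half a wavelength still spans the microphone spacing."""
    return c / (2.0 * mic_spacing_m)


nyquist_hz = 16_000 / 2
for spacing_m in (0.05, 1.0):  # 5 cm from the talk; 1 m as an illustrative wide spacing
    f_max = max_unambiguous_freq_hz(spacing_m)
    share = min(f_max, nyquist_hz) / nyquist_hz
    print(f"{spacing_m} m spacing: no phase wrapping below {f_max:.0f} Hz "
          f"({share:.0%} of the 0-8 kHz band)")
```

With 5 cm spacing, the phase is unambiguous up to about 3.4 kHz, well into the speech band. With 1 m spacing, wrapping already starts below 200 Hz.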
So here the source variances are free; I don't use any model for v_j. But those two approaches use a [inaudible] model of v_j and a Gaussian model for v_j, and they get better performance. So it's useful. Yeah. >>: One other question, you may have said this before, but in all these cases the number of sources is known, right? >> Ngoc Q.K. Duong: Yeah, sure. >>: It's always specified. >> Ngoc Q.K. Duong: Yes. Estimating the number of sources remains a challenge, I think. >>: But in all these experiments ->> Ngoc Q.K. Duong: It's known. Okay. So, regarding the earlier question about frequency resolution: I compared the short-time Fourier transform (STFT) representation, where the frequency bandwidth is the same across the whole frequency range, with the ERB, which is more acoustically motivated and provides narrower bands in the low frequency range, where most of the energy lies. Our results show that the ERB performs better than the short-time Fourier transform. Why? The difficulty in estimating the model parameters is that there is a lot of time-frequency overlap between sources. If the sources are really sparse in the time-frequency domain, it is easier to estimate the parameters, right? But if they really overlap, it is more difficult. With the ERB we have narrower bands in the low frequency range, so we have less overlap. That's why it outperforms the short-time Fourier transform, and these results were published in our ICA paper in 2010. Okay. [inaudible]. Now, for the sake of time, I'll move quickly to another good contribution, which is defining some probabilistic priors. Up to now I have presented a general framework where the parameters are estimated in the maximum likelihood sense, without knowing anything, right? But in practice, in many cases, for instance speech enhancement in your car, you may know exactly the position of the driver and the co-driver.
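To illustrate the frequency-resolution contrast above, this sketch places band centres uniformly on the ERB-rate scale and evaluates the ERB bandwidth, using the Glasberg and Moore (1990) formulas. Their spacing grows with frequency, whereas STFT bins are spaced uniformly. The frequency range and the number of bands are hypothetical. The speaker's actual ERB filterbank design is not reproduced here. How ERB bands compare bin-for-bin with a particular STFT depends on how many bands are used.

```python
import numpy as np


def erb_bandwidth_hz(f_hz):
    """Equivalent rectangular bandwidth at f_hz (Glasberg and Moore, 1990)."""
    return 24.7 * (4.37 * np.asarray(f_hz, dtype=float) / 1000.0 + 1.0)


def erb_number(f_hz):
    """ERB-rate scale: number of ERBs below f_hz."""
    return 21.4 * np.log10(4.37 * np.asarray(f_hz, dtype=float) / 1000.0 + 1.0)


def erb_centre_frequencies(f_min_hz, f_max_hz, n_bands):
    """Centre frequencies equally spaced on the ERB-rate scale (inverse of erb_number)."""
    e = np.linspace(erb_number(f_min_hz), erb_number(f_max_hz), n_bands)
    return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37


centres = erb_centre_frequencies(50.0, 8000.0, 32)  # hypothetical: 32 bands, 50 Hz to 8 kHz
print("STFT bin spacing, 1,024-point FFT at 16 kHz:", 16_000 / 1024, "Hz at every frequency")
print("ERB centre spacing, lowest and highest bands:", np.diff(centres)[[0, -1]].round(1), "Hz")
print("ERB bandwidth at 100 Hz and 4 kHz:", erb_bandwidth_hz([100.0, 4000.0]).round(1), "Hz")
```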
Or in a formal meeting, the position of each delegate is fixed. That means that in such situations the geometry is known, so you can exploit this knowledge to enhance source separation. With that motivation, we designed the spatial location prior. Okay. Let me also consider another one. Up to now I have assumed that R_j(f) is time invariant. But if you consider moving sources, for instance if I talk like this and keep moving, or if you consider [inaudible], then R_j should not be time invariant, right? In that case we should consider time-varying spatial positions of the sources, and we can design a continuity prior. It means we assume that R_j is time varying, but that it has some structure over time. That's why we designed a spatial continuity prior, and these two priors were published in two different ICASSP papers this year. Okay. Or consider the spectral information of the sources. For instance, the spectrum of a drum is quite smooth over frequency, while the spectrum of a harmonic instrument, like a piano, shows harmonicity and is smooth over time. Given that, you can also design a spectral continuity prior to enforce smoothness over time or over frequency. So these are a series of priors we consider in order to enhance source separation in specific settings where we know something. Let me detail the spatial prior. Here is the model you have seen before, the full-rank direct+diffuse model. We tested this model on a large dataset and found that it's quite good at approximating the mean of the spatial covariance matrices. It means that if you have a lot of source positions, the mean follows this model. But in practice, R_j varies around the mean. That's why we define a distribution for R_j, the inverse Wishart prior, where the mean of R_j is given by this model, and we need to estimate or learn the variance.
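The spatial location prior can be sketched in two parts, both under our own stated assumptions.

The first part builds a prior mean R_mean from a direct-plus-diffuse covariance for two microphones. It combines a rank-1 direct-path term, with spherical attenuation and propagation delay, and a diffuse term with sin(x)/x interchannel coherence. This is the form we understand from the published direct+diffuse model. The exact scaling constants may differ, and the reverberant power is a hypothetical free input rather than being derived from the RT60.

The second part plugs that mean into a MAP M-step for R_j. It assumes a complex inverse-Wishart density proportional to det(R)^-(m+I) exp(-tr(Psi R^-1)), with Psi = (m - I) R_mean so that the prior mean is R_mean. The degrees of freedom m control how tightly R_j stays near that mean. The normalization used in the speaker's papers may differ.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound


def direct_diffuse_covariance(freq_hz, dists_m, mic_spacing_m, reverb_power):
    """2x2 spatial covariance: a a^H (direct path) + reverb_power * Omega (diffuse).

    dists_m      : distances from the source to microphone 1 and 2
    reverb_power : hypothetical free input for the diffuse (reverberant) power
    """
    dists = np.asarray(dists_m, dtype=float)
    # direct path: spherical attenuation and propagation delay to each mic
    a = np.exp(-2j * np.pi * freq_hz * dists / SPEED_OF_SOUND_M_S) / (np.sqrt(4 * np.pi) * dists)
    direct = np.outer(a, a.conj())
    # diffuse-field coherence of two omni mics: sin(x) / x with x = 2*pi*f*d / c
    # (np.sinc(u) computes sin(pi*u) / (pi*u))
    coherence = np.sinc(2.0 * freq_hz * mic_spacing_m / SPEED_OF_SOUND_M_S)
    omega = np.array([[1.0, coherence], [coherence, 1.0]])
    return direct + reverb_power * omega


def map_spatial_covariance(Sc_hat, v, R_mean, dof):
    """MAP M-step update of one source's R_j in one frequency bin.

    Maximises  -(dof + I) log det R - tr(Psi R^-1)                  (prior)
               - sum_n [ log det R + tr(R^-1 Sc_hat[n]) / v[n] ]    (EM term)
    whose maximiser is  R = (Psi + sum_n Sc_hat[n] / v[n]) / (dof + I + N).

    Sc_hat : (N, I, I) E-step covariances of this source image
    v      : (N,) source variances v_j(n)
    R_mean : (I, I) prior mean, e.g. the direct+diffuse covariance
    dof    : prior degrees of freedom (> I); larger means a tighter prior
    """
    N, I, _ = Sc_hat.shape
    psi = (dof - I) * R_mean  # gives prior mean Psi / (dof - I) = R_mean
    data = (Sc_hat / v[:, None, None]).sum(axis=0)
    return (psi + data) / (dof + I + N)


# hypothetical geometry: source 1.00 m and 1.05 m from two mics 5 cm apart, at 1 kHz
R_mean = direct_diffuse_covariance(1000.0, [1.00, 1.05], 0.05, reverb_power=0.01)
print(np.linalg.eigvalsh(R_mean))  # both eigenvalues positive: the diffuse term makes it full rank
```

Larger dof pulls the MAP estimate toward R_mean. As dof approaches I, the update approaches the plain maximum likelihood average, up to the normalization.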
That way we know the distribution, and we can estimate the parameters in the maximum a posteriori (MAP) sense, right? Let me go a little faster and just give you the basic idea. We also get closed-form updates in the MAP sense. And here are some results. Here I just want to show that if we have some knowledge about the geometric setting, we get better performance than with the blind initialization in the maximum likelihood sense, right? Okay. We consider the spatial continuity prior in the context of music source separation, where the music -- okay. We [inaudible] a probability distribution for R_j where the mean at the current time frame depends on the value at the previous time frame, so we have a continuity constraint. Now I come back with experiments to show some practical cases where our framework provides good performance. For instance, a music mixture can be considered as a mixture of a harmonic component and a percussive component, and in the MIR (music information retrieval) community people try to separate the harmonic component from the drum component in order to perform other music information retrieval tasks. We applied our framework with the continuity prior, obtained results for multichannel harmonic and percussive separation, and compared them with the existing single-channel HPSS proposed by the group at the University of Tokyo. Okay. And here are the results we get. Here is, for example, one music mixture. [music]. Okay. Here is the harmonic component we separated. [music]. Here's the percussive component. [music]. Okay. If you compare with the state of the art for the percussive component, you will hear: [music]. So there are a few differences. Because of the last question, I think for speech it's difficult. But I have a collaboration with a group at the University of Tokyo where we try to exploit this framework in the context of music information retrieval. Yeah.
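The harmonic/percussive split in this experiment rests on the observation that harmonic components are smooth along time and percussive components are smooth along frequency. The sketch below illustrates that idea with a different and much simpler single-channel technique, median-filtering HPSS (FitzGerald, 2010). It is neither the University of Tokyo HPSS method nor the speaker's multichannel framework with continuity priors. The window length, mask exponent and toy spectrogram are all hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_smooth(S, length, axis):
    """Median filter with an odd window `length` along one axis (edge-padded)."""
    half = length // 2
    pad = [(0, 0)] * S.ndim
    pad[axis] = (half, half)
    padded = np.pad(S, pad, mode="edge")
    windows = sliding_window_view(padded, length, axis=axis)  # window is the last axis
    return np.median(windows, axis=-1)


def hpss_soft_masks(S, length=17, power=2.0):
    """Soft harmonic/percussive masks for a magnitude spectrogram S (freq x time)."""
    harmonic = median_smooth(S, length, axis=1) ** power    # smooth along time
    percussive = median_smooth(S, length, axis=0) ** power  # smooth along frequency
    total = harmonic + percussive + 1e-12                   # avoid 0 / 0 in silence
    return harmonic / total, percussive / total


# toy spectrogram: one steady partial (a row) plus one click (a column)
S = np.zeros((64, 64))
S[20, :] = 1.0  # harmonic: constant over time
S[:, 40] = 1.0  # percussive: broadband at one instant
mask_h, mask_p = hpss_soft_masks(S)
print(mask_h[20, 10].round(3), mask_p[50, 40].round(3))
```

Multiplying the mixture STFT by each mask gives the two component estimates. The speaker's approach instead models the components probabilistically and uses the stereo spatial information as well.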
So here, when you hear a lot of artifacts in the [inaudible] result, you may not hear the actual one. I'll play it again. [music]. >>: That might not be the important thing. If the goal is to find features for MIR kinds of things, you might care about tempo and [inaudible] and stuff like that. The acoustic artifacts matter if humans are listening to the output. Another question I have in this case: are you manually defining the priors for the different types of sources here, or are you estimating them from data? Where are they coming from? >> Ngoc Q.K. Duong: For the prior, there are only two parameters. One is the mean, and one is the variance. The mean is well defined, and the variance I chose manually. >>: Okay. >> Ngoc Q.K. Duong: But I observed that the results are not very sensitive to the choice of the variance. >>: To some extent ->> Ngoc Q.K. Duong: Yeah. If it varies between 10 and 100, it's okay. But of course, okay, we could learn it from training data. >>: Is the source a real stereo recording? >> Ngoc Q.K. Duong: A stereo recording. >>: Stereo, or monophonic, single channel? >> Ngoc Q.K. Duong: For the single-channel method, we separate the source in each stereo channel separately. >>: So you did it on each of the stereo channels, and then for each of the results? >> Ngoc Q.K. Duong: Yeah. So this is not a fair comparison, I would say, because I compared the multichannel -- >>: With the single channel. Yours is the multichannel one. >> Ngoc Q.K. Duong: I would say it's not fair, but as a state-of-the-art approach I didn't find a multichannel one, because for music separation most people work on a single channel and exploit models of the spectro-temporal structure of the sources in order to separate them. So, yeah, I tried to look for a state-of-the-art multichannel method, but I didn't find one. Okay. So here's the conclusion. In this work we introduced a probabilistic spatial model for reverberant and diffuse sources.
And we proposed an unconstrained parameterization of the spatial covariance matrices, which overcomes the narrowband assumption to a certain extent and better accounts for reverberation, as shown in the experimental results. We considered the local empirical covariance, sigma-hat x, for parameter estimation, which provides additional information. And recently we proposed several probabilistic priors, which enhance separation in certain contexts where we know some information, yeah. Okay. For future work, we can consider fully blind source separation that exploits the spatial location prior. This is very related to the earlier question about the full-rank [inaudible] direct+diffuse model: even for this prior we need to know the mean, which means we need to know those matrices, so it would be good to find a way to estimate parameters like the source DOAs and the reverberation time [inaudible]. We can also consider using the proposed framework in other fields of signal processing, for instance in MIR, which is what we are doing now. And recently I found that some people already use our framework. For instance, Hitachi told me they use our modeling in their acoustic canceling system, and Anarchi [phonetic] also combined our approach with time-frequency masking to enhance source separation. >>: Is this the [inaudible] 2011 paper? >> Ngoc Q.K. Duong: Yeah. Because these two are from industry, they consider practical applications. So they use the idea, but not the same estimation technique, because ours requires more time; they combined it with their approach and used a faster parameter estimation. We can also consider the proposed framework in spatial audio, where we analyze and render the spatial [inaudible], yeah. Okay. I think that is the end of my presentation, and here are some references you can see. >> Ivan Tashev: Any more questions? >> Ngoc Q.K. Duong: Thank you very much. [applause].
>> Ngoc Q.K. Duong: Thank you very much for your attention.