>> Prasad Tetali: Welcome back, welcome back to the... Impractical sad at the timely. I was asked to...

advertisement

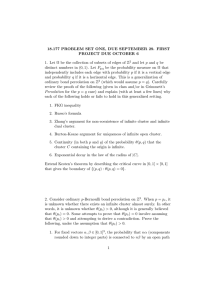

>> Prasad Tetali: Welcome back to the afternoon session. [inaudible] I was asked to introduce the next two speakers. It's a real honor and a pleasure for me to be here. I want to thank the theory group at Microsoft Research, particularly Jennifer and Christian, for having me visit several times; without that I don't think I would have gotten to know Oded Schramm. I didn't know him all that well, apart from playing soccer with him several times at lunchtime, so I guess I'm an Oded groupie in some ways, which is why I'm here. One of the first times I met him, he told me that he had used a result of mine on hitting times via electrical resistances, and I was pleased to hear that. But after hearing everything this morning, I'm regretting not having asked him for his proof of my theorem. [laughter]. I did work with him briefly, for a couple of days, on a problem he had asked me about. I asked him why he was interested in it, since it was something I had thought about, and he said Mike Freedman had e-mailed him, and "you know, I like to help Mike," and gave his characteristic smile. [laughter]. I think Mike will talk about some of the later work tomorrow. It's a pleasure for me to introduce Olle Haggstrom from Chalmers University. He's going to speak about percolation.

>> Olle Haggstrom: Thank you. This is the title of my talk: Percolation, Mass Transport and Cluster Indistinguishability. Wendelin hinted in his talk at the crucial role Itai played in recruiting Oded to probability theory, and this is the first page of one of the first papers that came out of that collaboration: "Percolation beyond Zd, many questions and a few answers" by Benjamini and Schramm, a 1996 paper in Electronic Communications in Probability. This turned out to be a very influential paper.
It is a rough outline of a research program, with many very interesting questions and conjectures and also some really nice results, but it's the conjectures that get us going. Here is a selection of other papers by Oded and coauthors in this field. Shortly after the Benjamini-Schramm paper, they joined forces with Russ Lyons and Yuval Peres, and these two papers are part of the outcome. There are further papers, and the one I will focus on most in this talk is a result from the Lyons-Schramm paper on indistinguishability of percolation clusters. Towards the end of the talk I will try to prove that result to you, or at least outline the proof. Finally, there is this manuscript of mine, which surveys much of the work done by Oded and his coauthors in this field. So this is about percolation beyond Zd, but for context we need to recall what happens on Zd. We are talking here about IID bond percolation: you take every edge of the lattice, throw it out with probability 1 - P and keep it with probability P, independently of all other edges, and you look at the connectivity structure of what remains. One of the central results is that in this setting there is almost surely at most one infinite cluster. For D = 2 this goes back to Harris in 1960, and Aizenman, Kesten and Newman first proved it for higher dimensions in '87. Shortly after the Aizenman-Kesten-Newman paper, Burton and Keane gave a different proof of uniqueness of the infinite cluster. It's a very nice proof, and I'm convinced it belongs in what Erdős called The Book, the book containing all the best proofs of mathematical results. Let me say very briefly how their proof goes. It's a proof by contradiction.
General ergodic-theoretic considerations show that the number of infinite clusters is almost surely a constant for a given P, and this constant in fact has to be zero, one, or infinity: if it were anything else, like seven, you could connect two or three or more of these clusters by a local modification and get fewer infinite clusters with positive probability, contradicting the almost sure constancy. So the only case you have to rule out is having infinitely many infinite clusters. If there are infinitely many infinite clusters (in fact three or more suffices), then you can find a box which with positive probability is intersected by three of them, and by locally modifying the configuration you can show that the following picture also has positive probability: these three infinite clusters connected to each other by paths in such a way that there is a single vertex in the middle, which we call an encounter point. It has the property that if you remove it, you disconnect these pieces from each other. So provided that uniqueness fails, there will exist encounter points. I'm having some technical problems here; my slides are too big. Yeah. Okay. The picture works anyway. Thank you. So when there are encounter points, there will be a positive density of them, and they are connected to each other in a kind of binary tree structure, because there can be no cycles in their interconnections: a cycle would contradict the definition of an encounter point. Binary trees have boundaries of the same order of magnitude as their insides. Since the number of encounter points inside a box of side N grows like its volume, the number of points in infinite clusters on the boundary of the box also has to grow like the volume, like N to the D. But the boundary itself grows only like N to the D minus one, which is a contradiction. So you can't have infinitely many infinite clusters. This idea is going to turn out to be useful beyond the Zd setting as well.
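The counting step above (a tree-like structure has at least as many exits as branch points) rests on an elementary fact about finite trees. As a minimal sketch of that fact only, not of the percolation argument itself: in a finite tree with n >= 2 vertices the handshake identity sum(deg) = 2(n - 1) gives #leaves = 2 + (sum of deg - 2 over vertices of degree >= 3), so a tree with b branch points has at least b + 2 leaves. The random-attachment construction and the name `tree_degree_counts` are illustrative assumptions.

```python
import random


def tree_degree_counts(n, seed=0):
    """Build a random tree on n >= 2 vertices by random attachment and return
    (number of leaves, number of branch points, total excess degree)."""
    rng = random.Random(seed)
    deg = [0] * n
    for child in range(1, n):
        parent = rng.randrange(child)  # attach to an earlier vertex, so no cycles
        deg[child] += 1
        deg[parent] += 1
    leaves = sum(1 for k in deg if k == 1)
    branch_points = sum(1 for k in deg if k >= 3)
    excess = sum(k - 2 for k in deg if k >= 3)  # how far branch points exceed degree 2
    return leaves, branch_points, excess


for n in (10, 100, 1000):
    leaves, branch, excess = tree_degree_counts(n, seed=n)
    # Handshake lemma: leaves == 2 + excess, hence leaves >= 2 + branch.
    print(n, leaves, branch, leaves == 2 + excess)
```

In the Burton-Keane setting the "leaves" correspond to exits through the boundary of the box, which is why a positive density of encounter points forces a boundary of the same order as the volume.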
When we go beyond Zd, we still have to make some assumptions on the graphs in order to prove pretty much anything. One class of graphs emphasized by Benjamini and Schramm is the transitive graphs. A graph is transitive if for any two vertices there is a graph automorphism mapping one vertex onto the other, so the graph looks the same from every vertex. There is a natural generalization, quasi-transitive graphs, which basically means that there are only finitely many kinds of vertices. Examples include Zd, of course, regular trees, which have been much studied, various tilings of the hyperbolic plane, and so on. Once you have examples of transitive graphs, you can take Cartesian products and get further examples. Let me show a picture. There are many hyperbolic tilings; this one, I guess you can't see it, so here is its planar dual. So this is the planar dual of the regular tiling of hexagons, and I think Ken Stephenson showed the corresponding circle packing in his talk. These hyperbolic tilings are examples of so-called non-amenable graphs. So what is amenability? First we define the isoperimetric constant of the graph as this ratio, where S is any finite vertex set in the graph and ∂S is the exterior boundary of S; we count the vertices in each, so this is a kind of surface-to-volume ratio. Then we take the infimum of this ratio over all finite S, and that's the isoperimetric constant: in a sense the smallest achievable surface-to-volume ratio, or more precisely the infimum of such ratios over all finite subsets of the graph. The graph is said to be amenable if this isoperimetric constant is zero, as in the Zd lattice, which is witnessed by taking bigger and bigger cubes. It's non-amenable if this constant is positive, as happens for regular trees, hyperbolic tilings and many other examples.
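To make the surface-to-volume definition concrete, here is a small sketch that computes |∂S| / |S|, with ∂S the exterior vertex boundary, for cubes in Zd and for balls in the 3-regular tree. The tree is realized, as an illustrative choice, as the Cayley graph of the free product Z2 * Z2 * Z2, and all helper names are hypothetical. For the cube {0, ..., n-1}^2 the ratio is exactly 4/n, which tends to zero (amenable), while for balls in the tree it stays above 1. A finite computation like this only illustrates the infimum; it does not compute it.

```python
from itertools import product


def boundary_ratio(S, neighbors):
    """|dS| / |S|, where dS is the exterior vertex boundary of the finite set S."""
    boundary = {w for v in S for w in neighbors(v)} - S
    return len(boundary) / len(S)


def zd_neighbors(v):
    """Nearest neighbours of v in the lattice Z^d (v is a d-tuple of ints)."""
    return [v[:i] + (v[i] + s,) + v[i + 1:] for i in range(len(v)) for s in (-1, 1)]


def cube(n, d):
    """The box {0, ..., n-1}^d in Z^d."""
    return set(product(range(n), repeat=d))


def tree_neighbors(w):
    """Neighbours in the 3-regular tree seen as the Cayley graph of Z2 * Z2 * Z2:
    vertices are words over {0, 1, 2} with no letter repeated twice in a row, and
    multiplying by generator a cancels a trailing a or appends it."""
    return [w[:-1] if w and w[-1] == a else w + (a,) for a in range(3)]


def tree_ball(r):
    """All reduced words of length <= r, i.e. the ball of radius r around ()."""
    ball, frontier = {()}, {()}
    for _ in range(r):
        frontier = {u for w in frontier for u in tree_neighbors(w)} - ball
        ball |= frontier
    return ball


for n in (5, 10, 20):
    print("Z^2 cube", n, boundary_ratio(cube(n, 2), zd_neighbors))  # equals 4/n
for r in (2, 4, 8):
    print("tree ball", r, boundary_ratio(tree_ball(r), tree_neighbors))  # above 1
```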
If we go back to the Burton-Keane argument, it's clear that it goes through whenever G is quasi-transitive and amenable. The Benjamini-Schramm paper contains a conjecture that is a kind of converse: what they suspected, and what is probably true, is that whenever a graph is quasi-transitive and non-amenable, you can find values of P producing infinitely many infinite clusters. The conjecture is actually a little stronger than that. We can define two different critical values: PC is the usual critical value for the onset of infinite clusters, and PU is the infimum over all P such that we almost surely get a unique infinite cluster. The conjecture of Itai and Oded is that whenever G is quasi-transitive and non-amenable, PC is strictly less than PU. This has been established in many cases, but the full conjecture is still open. Having such a regime with infinitely many infinite clusters gives rise to several questions that don't arise in the Zd case, and this is what brings much of the excitement to the field of percolation on these more exotic graph structures. The one result I want to focus on here is cluster indistinguishability, from the paper by Russ and Oded. We focus on graphs having this non-uniqueness phase, and when there are many infinite clusters you can ask: are they all of the same kind, or can there be infinite clusters of different kinds? Clearly we need to make precise what we mean by different kinds. One property that obviously can differ between infinite clusters is containing some prespecified vertex, but that's not the kind of property we're interested in. The natural properties to look at are so-called invariant properties.
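For reference, the two critical values described above can be written as follows, using standard notation introduced here for convenience (PC and PU of the talk written as p_c and p_u, and P_p for the law of IID bond percolation with parameter p):

$$p_c = \inf\{p : \mathbf{P}_p(\text{there is an infinite cluster}) = 1\}, \qquad p_u = \inf\{p : \mathbf{P}_p(\text{there is exactly one infinite cluster}) = 1\}.$$

In this notation the Benjamini-Schramm conjecture reads: if G is quasi-transitive and non-amenable, then p_c < p_u.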
A property of infinite clusters can be identified with a Borel measurable subset of {0, 1} to the edge set. We call a property invariant if it's invariant under the action of the automorphism group: if an infinite cluster in a percolation configuration has the property and we move the entire configuration by a graph automorphism, then the moved cluster still has the property. The Lyons-Schramm theorem says that if we do IID bond percolation on a non-amenable unimodular transitive graph (I'll explain in a moment what unimodularity means), then for any invariant property A, almost surely either all infinite clusters have property A or none of them do. That's the indistinguishability of infinite clusters. To explain how the proof goes, we have to backtrack a little to the first of the Benjamini-Lyons-Peres-Schramm papers in this list. They considered a setting broader than the IID setting I've been talking about so far, namely automorphism-invariant percolation: the distribution of what happens on a part of the graph doesn't depend on where in the graph you are, i.e., it is invariant under graph automorphisms. It turns out that a condition called unimodularity is relevant for the study of such percolation processes. So what is unimodularity? Here is the definition. First, the stabilizer of a vertex is the set of automorphisms that fix that vertex. The graph is said to be unimodular if for any two vertices U and V in the same orbit of the automorphism group, applying the stabilizer of U maps V onto the same number of vertices as we get when we interchange the roles of U and V. So this is a kind of symmetry.
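In symbols, with notation introduced here for convenience: write Γ_u = {γ ∈ Aut(G) : γu = u} for the stabilizer of u and Γ_u v = {γv : γ ∈ Γ_u} for the orbit of v under it. Then G is unimodular if

$$|\Gamma_u v| = |\Gamma_v u| \quad \text{for all } u, v \text{ in the same } \mathrm{Aut}(G)\text{-orbit}.$$

As an illustration, consider a tree with a fixed end in which every vertex has two children and one parent, and assume (as in the non-unimodular example discussed next) that every automorphism preserves the distinguished end. If v is the parent of u, automorphisms fixing v can swap its two children, so |Γ_v u| = 2, while automorphisms fixing u also fix its ancestors, so |Γ_u v| = 1, and the symmetry fails.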
Unimodularity holds in all the reasonable examples you might think of, including Cayley graphs, which are automatically unimodular, unless you specifically try to construct graphs that are not unimodular. That's my experience, anyway. But there are non-unimodular examples, and here's a basic one. Take the binary tree and fix an end, i.e., a path going off to infinity in the tree, and add an edge from every vertex of the tree to its grandparent. The resulting graph is transitive but not unimodular. The reason is that in this construction every vertex has two children but only one parent, and that asymmetry is the source of the non-unimodularity. A basic technique introduced in the Benjamini-Lyons-Peres-Schramm paper (they were very generous in crediting an earlier paper of mine for inspiration, but it's really they who understood what's going on) is the mass-transport technique. The idea is that you take a non-negative function of three variables, namely two vertices and a percolation configuration, which is invariant under the diagonal action of the automorphism group. We think of this function as the amount of mass transported from one vertex to another given the percolation configuration: every vertex looks around at the percolation configuration and decides how much mass to send to each other vertex depending on what it sees, and the rule has to be automorphism invariant. The mass-transport principle, formulated here, says that if the graph is unimodular, then the expected amount of mass received at a vertex equals the expected amount of mass sent away from it. To see that you need unimodularity, or some condition, you can look at Trofimov's graph and consider the mass transport where every vertex sends unit mass to each of its two children. Then every vertex sends mass 2 but receives only mass 1.
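In formulas, with f(u, v, ω) the mass sent from u to v and o a fixed vertex, the principle reads E[Σ_v f(o, v, ω)] = E[Σ_v f(v, o, ω)]. On a finite transitive graph this is immediate, since total mass sent equals total mass received, which makes it easy to check exactly. The sketch below does so on the cycle Z_n under IID site percolation with density p, using a hypothetical transport rule in which each vertex sends unit mass to the first open site clockwise. The rule, the function names and the use of site percolation are assumptions made for illustration; in the talk's setting of infinite unimodular graphs the principle is a theorem, not a bookkeeping identity.

```python
from fractions import Fraction
from itertools import product


def target(u, omega):
    """Hypothetical transport rule: u sends unit mass to the first open site at
    clockwise distance 1, 2, ..., n (distance n is u itself); None if all closed."""
    n = len(omega)
    for k in range(1, n + 1):
        w = (u + k) % n
        if omega[w]:
            return w
    return None


def expected_sent_and_received(n, p):
    """Exact E[mass sent from 0] and E[mass received at 0] on the cycle Z_n under
    IID site percolation with density p, by enumerating all 2^n configurations."""
    sent = received = Fraction(0)
    for omega in product((0, 1), repeat=n):
        k = sum(omega)
        weight = p**k * (1 - p) ** (n - k)  # probability of this configuration
        if target(0, omega) is not None:
            sent += weight
        received += weight * sum(1 for u in range(n) if target(u, omega) == 0)
    return sent, received


sent, received = expected_sent_and_received(8, Fraction(1, 3))
print(sent, received, sent == received)
```

Both expectations equal 1 - (1 - p)^n, the probability that at least one site is open; the equality comes from rotation invariance of the measure and of the rule.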
But on unimodular graphs, mass is preserved in this way. Here's the proof of the mass-transport principle in the Cayley graph case, just to show you that it's no more than a three-line calculation; in the general case you need a little bit more, but it's still not particularly complicated.

>>: People were in the middle of reading the proof.

>> Olle Haggstrom: Yeah, yeah, but [laughter]. Okay. Here's a toy example to show you the power of the mass-transport method. I'll call an infinite cluster slim if it consists of a single naked infinite path with no branches on it: it has a single vertex where it begins, and then it goes off to infinity. The question is: is it possible to construct an invariant percolation on G that contains slim infinite clusters? On Zd the answer is no, and the reason is basically part of the Burton-Keane argument. If there are slim infinite clusters, their end points appear with positive density, and each end point inside a box connects to some point on the boundary of the box, with different end points giving rise to different infinite clusters. So again we get the contradiction between volume and surface. This argument works on amenable graphs, but in the non-amenable case you have to think about something else. In fact, in Trofimov's example, slim infinite clusters can occur. To construct them, each vertex tosses a coin to decide whether to link to its left child or to its right child, and this produces a percolation configuration consisting entirely of slim infinite clusters. So maybe we can't say anything in the non-amenable setting? In fact, if you restrict to unimodular graphs, the existence of slim infinite clusters has probability zero, and this is very easy to prove if you know the mass-transport method.
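The coin-tossing construction can be sketched on a finite piece of the tree. In this minimal sketch, which is a truncation and not the infinite graph, vertices of a binary tree are labelled heap-style (root 1, children 2v and 2v + 1), each vertex above the bottom level opens the edge to one uniformly chosen child, and each cluster is traced downward from its top vertex (a vertex whose parent did not choose it). Every cluster comes out as a single unbranched path; in the infinite graph these paths never end, which gives slim clusters. The root is an artificial top created by the truncation, the grandparent edges are simply never opened, and the function names are hypothetical.

```python
import random


def slim_percolation(depth, seed=0):
    """Each vertex on levels 0..depth-1 opens the edge to one uniformly chosen child.
    Vertices are labelled heap-style: root 1, children of v are 2v and 2v + 1."""
    rng = random.Random(seed)
    return [(v, 2 * v + rng.randrange(2)) for v in range(1, 2 ** depth)]


def cluster_paths(depth, open_edges):
    """Trace each cluster from its top vertex (no open edge to its parent) downward."""
    n = 2 ** (depth + 1) - 1                  # vertices on levels 0..depth
    down = dict(open_edges)                   # at most one open edge to a child
    chosen = {child for _, child in open_edges}
    tops = [v for v in range(1, n + 1) if v not in chosen]
    paths = []
    for top in tops:
        path = [top]
        while path[-1] in down:
            path.append(down[path[-1]])
        paths.append(path)
    return paths


paths = cluster_paths(6, slim_percolation(6, seed=1))
print(len(paths), max(len(p) for p in paths))  # 2**6 clusters; the root's path is longest
```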
Here's how it goes. Define a mass transport where every vertex sitting in a slim infinite cluster sends unit mass to the end point of its cluster; no other vertex sends anything. Clearly the expected mass sent from a vertex is at most one. Now, if slim infinite clusters do exist, then some vertices, namely the end points, receive infinite mass, so the expected mass received is infinite. We get a strict inequality between expected mass sent and expected mass received, contradicting the mass-transport principle. So that was the warm-up example. Yes?

>>: You proved that you wouldn't have probability when you did not see [inaudible] clusters?

>> Olle Haggstrom: I showed that with probability one there won't be any slim infinite clusters.

>>: Oh, I see. All right. But it seems that slim infinite clusters are extremely unlikely because of branching. Is that a correct intuition?

>> Olle Haggstrom: Because of --

>>: [inaudible] it seems very unlikely that with a branch at every point along --

>> Olle Haggstrom: No, no, no, because here I can define the percolation process in such a way that there is no branching going on.

>>: [inaudible].

>> Olle Haggstrom: This was not IID percolation.

>>: Okay.

>> Olle Haggstrom: I just required the percolation to be automorphism invariant.

>>: So it's just some probability measure function [inaudible].

>> Olle Haggstrom: Yes.

>>: [inaudible].

>> Olle Haggstrom: Yeah. Automorphism invariance. Okay. So now for the real thing: cluster indistinguishability, from the Lyons-Schramm paper. How many minutes do I have? I think I started five minutes late.

>> Prasad Tetali: Seven minutes.

>> Olle Haggstrom: Yeah. Okay. There's a lot of ground to cover here, and I've organized it in five steps.
I'll go right on to the first step, which is the existence of what they call pivotal edges. What's a pivotal edge in a percolation configuration? It's an edge which is closed, i.e., off, but with the property that if you turned it on, it would change the status of an infinite cluster with respect to the invariant property we're looking at. So fix an invariant property A, and assume, for contradiction, that infinite clusters with property A and with its negation coexist. We claim that in this case there will also be pivotal edges around. If there are infinite clusters with property A and with A complement within unit distance of each other, then including the edge between them clearly changes the status of one of these infinite clusters, because they merge into a single cluster. In the general case these clusters sit at some finite distance from each other, and you can do the same thing via a local modification argument. That's the first step. Next, there is something called delayed random walk on the infinite cluster, which is a crucial tool here. We define a random walk starting at a given vertex that moves only along edges present in the percolation configuration. It's almost simple random walk, except that it has a holding probability: it stays put with probability equal to the proportion of its incident edges in the graph that are closed. One way to describe it: it picks a G-neighbor uniformly at random, moves to that neighbor if the edge to it is open, and otherwise stays put. The crucial lemma here is that the percolation configuration as seen from the random walk is stationary: the way the percolation looks from the vertex where the walker stands is a stationary process in time.
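The step rule of the delayed random walk is short to write down. A minimal sketch, assuming the square lattice Z^2 as a stand-in for G (the talk's setting is a non-amenable unimodular graph, but the rule is the same on any bounded-degree graph) and a configuration given as a set of open edges; the function names are hypothetical. It only implements the walk; it does not check the stationarity lemma.

```python
import random


def z2_neighbors(v):
    """The four lattice neighbours of v = (x, y) in Z^2 (stand-in for the graph G)."""
    x, y = v
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


def edge(u, w):
    """Undirected edge as an unordered pair."""
    return frozenset((u, w))


def transition_probs(v, open_edges, neighbors=z2_neighbors):
    """One-step law of the delayed walk from v: each G-neighbour is proposed with
    probability 1/deg, and the move happens only if that edge is open, so the
    holding probability equals the fraction of closed edges at v."""
    nbrs = neighbors(v)
    probs = {}
    for w in nbrs:
        dest = w if edge(v, w) in open_edges else v
        probs[dest] = probs.get(dest, 0.0) + 1 / len(nbrs)
    return probs


def delayed_walk(start, open_edges, steps, rng, neighbors=z2_neighbors):
    """Sample a path of the delayed random walk with the given number of steps."""
    path = [start]
    v = start
    for _ in range(steps):
        w = rng.choice(neighbors(v))   # propose a uniform G-neighbour
        if edge(v, w) in open_edges:   # move only along open edges
            v = w
        path.append(v)
    return path


# Toy configuration: a single open edge between (0, 0) and (1, 0).
open_edges = {edge((0, 0), (1, 0))}
print(transition_probs((0, 0), open_edges))  # holds with 3/4, moves with 1/4
print(set(delayed_walk((0, 0), open_edges, 100, random.Random(0))))
```

Because the walk never crosses a closed edge, it stays inside the open cluster of its starting point.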
I'm not going to formalize or prove this; I'll just mention that it's essentially a mass-transport argument. Let me move on to the next step, which is the existence of encounter points, and plenty of them, in this setting. It's the same notion as in the Burton-Keane proof: a vertex with three disjoint paths to infinity that would fall into different components if we removed the vertex. Consider the mass transport in which every vertex in an infinite cluster with encounter points sends unit mass to the closest encounter point, with some tie-breaking rule, or by splitting the mass equally in case of a tie. This first shows that any infinite cluster with encounter points has infinitely many of them, because otherwise one or more of the encounter points would receive infinite mass while every vertex sends at most unit mass, which gives the usual contradiction to the mass-transport principle. So once a cluster has an encounter point, it has infinitely many. Combining this with local modification, you get that every infinite cluster has infinitely many encounter points. The idea is: suppose this infinite cluster has no encounter point; then by local modification we can get this picture, and the mass transport I just described will send infinite mass from this part of the cluster to this point. So every infinite cluster has infinitely many encounter points. The main reason for doing this is that once there is such an abundance of encounter points, it's intuitively clear that the delayed random walk on the cluster should be transient, because of the tree-like structure that the encounter points create.
In fact, you can deterministically construct non-invariant percolation processes that have these encounter points and make the random walk recurrent. But because of the invariance here, there are ways to show that the walk is transient. There are various ways to do this, and I'll omit the details; I'll be happy to describe them one-on-one to anyone interested, or you can turn to Russ if you want the full story. Finally, we have all the ingredients and want to put things together, so we apply local modification to a pivotal edge. What they do is run delayed random walks starting at a point here. What I forgot to mention about the delayed random walk is that you can extend it to negative times by running two copies of it, one going forwards in time and one going backwards. If we have these two different infinite clusters and this pivotal edge, then local modification allows us to turn that edge on and still get something that happens with positive probability. We then get, with positive probability, random walk trajectories that in positive time run off in this direction and in negative time run off in the other direction. Now look at the stationary process in time indicating whether the random walk sees property A or property A complement. Taking time averages backwards in time you converge to one thing, and taking time averages forwards you see something else, and basic ergodic theory tells you that this is impossible. I'm cheating here, as some of you may have noticed, because when I turn on this edge, I change the property of one of these infinite clusters, so it looks as if the argument doesn't work.
But if you replace property A by some finite-range property as seen from the random walker, you get an approximation argument that does work. So this argument in fact gives the desired contradiction from the coexistence of infinite clusters of different types. Finally, I should mention that the result of Russ and Oded was formulated in great generality: if you inspect the proof we sketched here, it works under various modifications. For instance, the IID assumption I insisted on here can be weakened to automorphism-invariant percolation with the extra property of insertion tolerance, also known as positive finite energy, meaning that an edge always has positive conditional probability of being present given the configuration on all other edges. Also, whether we look at bond or site percolation turns out to be irrelevant, and transitivity of the graph can be weakened to quasi-transitivity. That concludes my presentation. Thank you for your attention. [applause].

>>: Time for questions.

>>: Well, I just had a comment. I think Olle is much too modest in describing his own role. First, he was the one who introduced not only the mass-transport technique into percolation but also the technique of using delayed simple random walk in percolation. Also, he and Yuval had earlier proved a partial result on indistinguishability under the additional hypothesis of monotonicity of the property.

>>: In the non-unimodular case.

>> Olle Haggstrom: Yes?

>>: Can you give an example where indistinguishability fails for IID percolation?

>> Olle Haggstrom: Yes. That Trofimov example in fact works.

>>: [inaudible].

>> Olle Haggstrom: Yes. Because if you do IID percolation in Trofimov's example, every infinite cluster is going to have an uppermost vertex, and you can look at the degree of this vertex in the cluster. That's an invariant property.

>>: Mike?
>>: During your talk I was thinking about the Cayley graph for Sol geometry, you know, which is an example where the growth is exponential but it's still [inaudible] truth. I was wondering whether the hypothesis of non-amenability is related to [inaudible], or were there [inaudible]? It seems like the [inaudible] argument is destroyed if the --

>> Olle Haggstrom: No.

>>: No?

>> Olle Haggstrom: In fact, amenability is the crucial property for it. You don't have to look at balls, growing balls; you just have to pick some sequence of sets that witnesses the amenability, and their argument will work.

>>: I see. Okay.

>>: In the interest of time, let's move on. [applause].

>> Prasad Tetali: We'll go ahead with the next talk. The next speaker is one of the organizers and the manager of the theory group, Yuval Peres, and he's going to talk about an unpublished gem: Connectivity Probability in Critical Percolation.

>> Yuval Peres: Good afternoon. Let's see, turn this on. Good afternoon. I'm going to tell you about a theorem which is really a lemma, but it's one of the really striking ideas I heard from Oded. He never published it because he felt the lemma had great promise, and he wanted to actually realize that promise before he released the lemma into the world. But he told some of us about it, and I'd like to share it with you. There are already some nice applications of the lemma. The setup is the one Olle described in his talk. G is non-amenable, and assume it's a Cayley graph: you have an underlying discrete group with a finite set of generators, and you connect two elements of the group whose ratio is a generator. Or you can think of the more general setup Olle described, the transitive unimodular case; everything works in both, so take whichever setup you like. For those who haven't worked in this setting, it's good to focus on some examples.
So think of the hyperbolic tessellations that Olle showed in his talk, or of one concrete example we can all visualize: the product of a tree, say a regular tree, with the integers. You can think of a copy of the tree in each horizontal plane, with every tree connected to the trees above and below it. For such G, this lemma is related to the question, open in general, whether G as above implies that PC, the critical parameter for having an infinite cluster, is less than PU, the critical parameter for having a unique infinite cluster. This is still open, and there are various cases where it is known. In fact, before this theory existed, before the time of Oded's paper, there was a paper by [inaudible] and Newman; the notions of PC and PU were not yet formally defined, but they did show, in effect, this inequality for certain trees times Z. Benjamini and Schramm extended it and also proved PC < PU for planar non-amenable graphs. And there's a paper by Igor Pak and Nagnibeda where they prove it for powers: given any non-amenable graph, you can pass to a power of that graph, connecting vertices at distance at most K in the original graph, and for a large enough power you get PC < PU. This uses some insights from the Benjamini-Schramm paper. But the conjecture is still open, and to me one of the most promising avenues is the following lemma that Oded found. From statistical mechanics we are used to the signature of criticality being polynomial decay, rather than exponential decay, of, say, connectivity probabilities.
But think of the degenerate example of a tree: consider for a moment percolation on a regular tree T. On the tree PU is just one, so it's a degenerate example for this theory; in the interesting examples PU is less than one, but on the tree PU equals one. You also see on the tree that for every P less than one, connectivity probabilities decay exponentially. For instance, on the 3-regular tree PC equals one-half, yet at PC, or even above PC, you don't have polynomial decay but still exponential decay: if you take two vertices and ask for the chance that they're connected, the probability decays exponentially in their distance. The idea is that this is a signature of the fact that PC is not the right critical parameter for connectivity; PU is. Remember that PC is defined as the critical parameter for having infinite clusters, but infinite clusters can sometimes explore only a small part of the space, and that's why connectivity probabilities here decay exponentially. Oded's lemma, which I want to tell you about, is that for such G (a non-amenable Cayley graph, or transitive unimodular) we have the following kind of exponential decay: the probability, at PC, of a connection from a vertex X0 to a vertex XK is at most lambda to the K, with lambda less than one. I'll identify lambda for you in a moment. Now, what are these points X0 and XK? They're not just any two points. X0 you can fix at the identity of the group, or any fixed vertex, but XK is the position of simple random walk on the Cayley graph after K steps. So you consider simple random walk on this graph, and the probability statement is in a joint probability space.
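Two formulas summarize this part, in notation introduced here for convenience. On a tree there is a unique path between two vertices, so under IID bond percolation with parameter p

$$\mathbf{P}_p(u \leftrightarrow v) = p^{\,d(u,v)},$$

which decays exponentially in the graph distance d(u, v) for every p < 1, including p_c = 1/2 on the 3-regular tree. Oded's lemma, as stated in the talk, is an analogous bound along a random walk: with (X_k) simple random walk from a fixed vertex X_0, independent of the percolation,

$$\mathbf{P}\big(X_0 \leftrightarrow X_k \text{ in bond percolation at } p_c\big) \le \lambda^{k}, \qquad \lambda < 1,$$

where, as identified later in the talk, λ is the spectral radius of the random walk.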
We do critical percolation on the graph with parameter PC, exactly the critical parameter for having infinite clusters, and independently we run the random walk for K steps. So here is X0 and here is XK. XK is at distance at most K, but in fact on these graphs we know that the random walk escapes at linear speed, so it is at distance linear in K. We ask: what's the chance that these two points are in the same PC cluster? The answer is that this decays exponentially. This is a pretty good indication, though not yet a proof, that PC is less than PU. Something is still missing; the motivation for Oded to prove this was to get to PC < PU, and I think he never published it because the last step, going from this to PC < PU, was still missing. But he sent the proof to a few of us by e-mail, and it literally made me fall off my chair. It was a very short paragraph, because we knew all the tools involved, but it was such an astounding combination of these tools. Let me tell you about these tools; some of them you've seen in Olle's talk. Non-amenable graphs are defined, as Olle told you, by the isoperimetric or expansion constant, the ratio of surface to volume, being bounded below. But for probability there's a useful equivalent characterization, Kesten's theorem. Kesten proved it for groups, and his theorem is more general than the version I'll state. For G a Cayley graph: G is non-amenable if and only if the spectral radius, defined as the lim sup of the Nth root of the probability of returning to the starting point, is less than one. In fact I can use any target vertex G here; it doesn't matter which G I fix.
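The linear escape rate is easy to see on a regular tree, where the distance from the start is itself a Markov chain: from distance 0 the walk must step out, and otherwise it moves away with probability (d - 1)/d and back with probability 1/d. A minimal simulation sketch, using the d-regular tree as an assumed example graph and a hypothetical function name; the drift (d - 2)/d, which is 1/3 for d = 3, is the speed the ratio should approach.

```python
import random


def tree_distance_after(k, degree=3, seed=0):
    """Distance from the start after k steps of simple random walk on the
    degree-regular tree, simulated through the distance chain."""
    rng = random.Random(seed)
    dist = 0
    for _ in range(k):
        # at the start every neighbour is farther away; elsewhere only one is closer
        if dist == 0 or rng.random() < (degree - 1) / degree:
            dist += 1
        else:
            dist -= 1
    return dist


k = 20_000
print(tree_distance_after(k) / k)  # close to (degree - 2) / degree = 1/3
```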
So we take the probability of reaching some vertex g in N steps, starting from some fixed point 0, and we take the Nth root of that. So basically we're asking: is there exponential decay or not? And λ is less than one if and only if G is non-amenable. So again, the exponential decay of the return probability is equivalent not to exponential growth but to non-amenability. Okay. So that's the -- >>: [inaudible]. >> Yuval Peres: Yes. And Oded's theorem works with the same λ. Okay. But here there is no percolation at all. This is just a fact about random walk going back to 1959, basically Kesten's thesis. Okay. So that's one tool that comes in. A second tool is a theorem already quoted in Olle's talk, from the paper by Itai, Russ Lyons, myself and Oded: in G as above, say a non-amenable Cayley graph, we have θ(p_c) = 0. Here θ is the probability that a specific vertex is in an infinite cluster, and that probability is zero; in other words, at p_c there is almost surely no infinite cluster of open edges. So we're doing bond percolation here at the critical parameter p_c, and it is a famous open problem whether this is true in Z^d for intermediate values of d, or in any transitive graph. But this is a class of cases where it's known: for all non-amenable Cayley graphs, and more generally all non-amenable transitive unimodular graphs, θ(p_c) is zero. I won't recall the proof of this; I'm just going to use it as a tool, because again, when Oded sent his e-mail, this was after we had proved this result.
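Kesten's criterion can be seen numerically. The sketch below is an illustration only, not part of Oded's argument: it computes the exact return probability p_n(o, o) of simple random walk on the d-regular tree (a non-amenable Cayley graph for d ≥ 3) by tracking only the distance from the root, and compares p_n^{1/n} with the known spectral radius 2√(d−1)/d. For contrast it does the same on Z, which is amenable and where p_n^{1/n} tends to one. The function names are ours, and because of polynomial correction factors the n-th roots converge slowly, so the numbers are only approximate.

```python
import math


def tree_return_prob(d, steps):
    """P(walk is back at the root after `steps` steps) on the d-regular tree.

    Exact computation via the distance-from-root chain: from the root every
    step moves away; from distance k >= 1, one of the d edges leads back.
    """
    dist = [1.0]  # dist[k] = P(current distance from the root is k)
    for _ in range(steps):
        new = [0.0] * (len(dist) + 1)
        for k, p in enumerate(dist):
            if p == 0.0:
                continue
            if k == 0:
                new[1] += p
            else:
                new[k - 1] += p / d
                new[k + 1] += p * (d - 1) / d
        dist = new
    return dist[0]


def z_return_prob(steps):
    """P(simple random walk on Z is back at 0 after `steps` steps)."""
    if steps % 2:
        return 0.0
    n = steps // 2
    # binom(2n, n) / 4^n, computed in log space to avoid overflow
    log_p = math.lgamma(2 * n + 1) - 2 * math.lgamma(n + 1) - steps * math.log(2)
    return math.exp(log_p)


rho_tree = 2 * math.sqrt(2) / 3  # spectral radius of the 3-regular tree
est_tree = tree_return_prob(3, 1000) ** (1 / 1000)
est_z = z_return_prob(1000) ** (1 / 1000)
print(f"3-regular tree: {est_tree:.4f} (limit {rho_tree:.4f})")
print(f"Z:              {est_z:.4f} (limit 1)")
```

On the tree the n-th root settles visibly below one, while on Z it creeps up toward one, matching the dichotomy in Kesten's theorem.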
And the third tool actually goes back further: it's Olle Häggström's theorem, which was one of the earliest uses of mass transport in percolation, in his paper on automorphism invariant percolation on trees. Olle had several theorems in that paper; the one I need here is the following. Remember that our statement is about independent percolation, but as a tool we use a result about more general invariant percolations, where invariant means invariant under all automorphisms. So these are just measures on random subgraphs, with no independence assumption, but invariant under automorphisms. So take an invariant percolation on a regular tree T, and let's give it a name, say ω. If we know that almost surely there are no infinite clusters in this percolation, then that forces the expected degree of a vertex in the percolation (and since the graph is transitive, it doesn't matter which vertex I take) to be at most two. Okay. So however you build the percolation on the tree, it has to be invariant under all automorphisms for this to hold. And here again there is Trofimov's example, which is a counterexample to the theorem; of course it doesn't satisfy one of the hypotheses [inaudible]. Take a binary tree with a distinguished end, so a three-regular tree with a distinguished end. Because you have a distinguished end, you have a notion of levels of the tree, and now you just remove every level with probability one in a thousand, independently of each other. This process cuts the graph into finite clusters, but the expected degree will be close to three. But again, that's a non-unimodular example.
So if you stick to unimodular examples and do percolation on trees which is invariant under all automorphisms, then finite clusters force the expected degree to be at most two. Okay? So that's the last tool. And let me just show you the proof, because it's yet another cool application of mass transport, and one of the earliest ones. So here's the proof. We're assuming all the clusters are finite. Each vertex x takes its degree deg_ω(x) and sends it out to its cluster, dividing it uniformly. So the random mass transport m(x, y, ω) is the degree of x divided by the size of the cluster of x if y is in the cluster of x, and zero otherwise. Okay. So that's the mass transport. Now let M(x, y) be the expectation of the mass transport. Then the total mass sent out from a vertex is just its expected degree: when we sum over the cluster, the denominator cancels and we get the degree, and then we take an expectation, so we just get the expected degree. That's the expected mass going out. But what's the expected mass coming in to a vertex? Well, let's first look at the mass that comes in to a vertex: it is exactly the sum of the degrees in its cluster divided by the size of the cluster. So the expected mass coming in is the expectation of the sum of the degrees, which is twice the number of edges in the cluster, divided by the size of the cluster. >>: [inaudible] over x -- >> Yuval Peres: So I'm summing over y. Here the mass is sent from y to x. Okay. Sorry. I switched to keep you awake. So we're looking at this ratio. But we're in a tree, and in any finite tree the number of edges is less than the number of vertices, so this ratio is at most two. Okay. The ratio inside this expectation is at most two.
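The bookkeeping in this proof can be checked on a finite example. The sketch below is only an illustration under simplifying assumptions: a finite random tree stands in for the infinite regular tree, and independent edge deletion stands in for the invariant percolation ω. On a finite graph, total mass sent and total mass received balance trivially, so this does not exercise unimodularity; it only shows the two per-vertex quantities being compared. Each vertex x sends deg_ω(x)/|C(x)| to every y in its cluster. The function name and parameters are ours.

```python
import random
from collections import defaultdict


def mass_transport_demo(n=200, p=0.7, seed=1):
    """Mass transport on a finite forest, in the style of Häggström's proof.

    Assumptions for this sketch: a random recursive tree on n vertices stands
    in for the regular tree, and keeping each edge independently with
    probability p stands in for the invariant percolation omega.
    """
    rng = random.Random(seed)
    # Vertex v > 0 attaches to a uniformly chosen earlier vertex.
    tree_edges = [(v, rng.randrange(v)) for v in range(1, n)]
    open_edges = [e for e in tree_edges if rng.random() < p]

    # Union-find to identify the clusters of the open subgraph.
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    deg = [0] * n
    for a, b in open_edges:
        parent[find(a)] = find(b)
        deg[a] += 1
        deg[b] += 1

    clusters = defaultdict(list)
    for v in range(n):
        clusters[find(v)].append(v)

    sent = [0.0] * n
    received = [0.0] * n
    size = [0] * n
    for members in clusters.values():
        for x in members:
            size[x] = len(members)
            share = deg[x] / len(members)  # m(x, y) for every y in C(x)
            for y in members:
                sent[x] += share
                received[y] += share
    return deg, sent, received, size


deg, sent, received, size = mass_transport_demo()
```

Every vertex sends out exactly its degree, while it receives 2(|C| − 1)/|C|, which is less than two because each cluster is a finite tree. On the infinite unimodular tree, the mass transport principle equates the expectations of these two quantities, which is where the bound of two comes from.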
So by mass transport, since these two quantities are equal, we get that the expected degree is at most two. And you saw that we need unimodularity for that [inaudible] in Trofimov's example -- yes? >>: [inaudible]. Contradicted your -- >> Yuval Peres: Again, what you do is you remove every level of this tree -- >>: [inaudible]. >> Yuval Peres: With probability epsilon. That is automorphism invariant when you restrict to automorphisms that keep the levels. But that's not the -- >>: Expected degree in each cluster [inaudible]. >> Yuval Peres: So this -- >>: [inaudible]. >> Yuval Peres: Okay. Right, that's a good point. So let's do this in Trofimov's example: we connect every vertex to its grandparent, so we add those extra edges. All right. So that proves this tool for you. So the last thing I want to do is just tell you how these get put together. All of these are tools that we in the business knew very well. But how does this give the statement? Okay, I'm going to have to erase this. The proof was just a one-paragraph e-mail given these tools, but still, finding that paragraph is something. So he says the following. Let's draw a tree. This is going to be a regular tree, but of high degree: fix the number K; the degree of this tree is going to be the integer part of λ^{-K}. So every vertex has ⌊λ^{-K}⌋ neighbors. It's a regular tree. Okay? And we need to define (again, we're in the middle of proving the lemma) an invariant percolation on this tree, and we are going to do it using a tree-indexed random walk on the group. So here is the root of the tree; call it 0. It is going to be mapped to a point X_0, which you can think of as the identity of the group.
And then for every vertex v we're going to have a variable X_v, obtained by running K steps of the random walk: if v and w are neighbors, with w a child of v, then X_w is obtained from X_v by running K steps of simple random walk on the group G. So we have the tree, and we're defining a random mapping from the tree to the group using this random walk. If you trace any path in this tree, you're just going to see a simple random walk; every edge of the tree corresponds to K steps of the walk. Okay? But if I look at the walk as it branches from this vertex v, the walk going here and the walk going here move independently. Okay? So think of every edge in the tree as corresponding to a path of length K, and all these paths are glued together independently to define a random mapping from the tree to the group. Now, what is the percolation? We say the edge vw of the tree (here is v, here is w) is open in this percolation ω if and only if the points X_v and X_w are connected at level p_c in G. Remember, our key object is independent percolation in the group. So if X_v and X_w are in the same p_c cluster, then vw is open. This defines a percolation on the tree, and it's easy to see that it is an automorphism invariant percolation on the tree. Okay? Now, why did we choose this degree for the tree? Because this is basically the largest degree we can take and still keep this tree-indexed walk transient. Suppose you want the expected number of visits of this walk to a specific vertex g, that is, the sum over all tree vertices v of the probability that X_v equals g, where X_v is this tree-indexed walk. You can obtain this just from simple random walk, by summing over N the number of vertices at level N of the tree, okay.
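The random map from the tree into the group can be sketched as follows. This is an illustration under stated assumptions: the graph G is given by a hypothetical `neighbors` function, the tree is truncated at a finite depth, and the branching `b` plays the role of ⌊λ^{-K}⌋. Every tree edge is replaced by K steps of simple random walk, and different branches use fresh, independent steps. The names are ours, and the percolation ω itself is not simulated here.

```python
import random


def tree_indexed_walk(neighbors, x0, b, K, depth, seed=0):
    """Map a b-regular tree, truncated after `depth` levels, into a graph G.

    neighbors(x) must return the list of neighbours of x in G (hypothetical
    interface). Tree vertices are encoded as tuples of child indices, with
    the root as (). The returned dict sends each tree vertex v to X_v.
    """
    rng = random.Random(seed)

    def k_steps(x):
        # K steps of simple random walk on G, using fresh randomness.
        for _ in range(K):
            x = rng.choice(neighbors(x))
        return x

    image = {(): x0}
    frontier = [()]
    for level in range(depth):
        next_frontier = []
        for v in frontier:
            # The root has b children; every other vertex has b - 1 (plus its parent).
            for c in range(b if level == 0 else b - 1):
                w = v + (c,)
                image[w] = k_steps(image[v])  # independent of sibling branches
                next_frontier.append(w)
        frontier = next_frontier
    return image


# Example with G = Z, purely for illustration.
X = tree_indexed_walk(lambda x: [x - 1, x + 1], 0, b=4, K=3, depth=3)
```

Along any single ray of the tree this is just simple random walk observed every K steps; only the branching, and hence the choice of b, decides whether the whole tree-indexed walk is transient.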
Well, this sum equals the sum over N of the number of vertices at level N of the tree times the probability that simple random walk, started from this fixed vertex, is at g after the corresponding number of steps. We can certainly bound the growth of the tree: ⌊λ^{-K}⌋ minus one is the number of children at each level. The first level is a little different, so I throw in a factor of two for safety. And then I take this to the Nth power, so that bounds the size of the Nth level, with this factor of two. For the probability, we have to put X_{NK}, because level N of the tree corresponds to NK steps of the walk, so we get λ^{NK}. Right? And because of this we know that the sum is finite, so the expected number of visits of this tree-indexed walk to any vertex is finite. So the walk is transient: along any path, it visits every finite set of vertices only finitely often. Okay. So what does that tell us? It tells us that this invariant percolation has only finite clusters. Think about what an infinite cluster would mean: a sequence of vertices, all connected at p_c, going off to infinity. That would give us an infinite p_c cluster, which we know doesn't exist. So it follows that ω has only finite clusters. Okay. So that's the key point, combining the transience of this walk with the finiteness of the clusters at p_c: if you had an infinite cluster in ω, so an infinite path of open edges in ω, it would yield an infinite collection of vertices of the tree, all of whose images are connected in the same p_c cluster. But these images are going off to infinity, so you would get an infinite p_c cluster in G, and we know those don't exist. So in ω there are only finite clusters. But now recall Olle's theorem that I proved to you before.
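Written out, the transience bound reads as follows. We write b = ⌊λ^{-K}⌋ and T_N for level N of the tree, assume K is large enough that b ≥ 2 (so that the level sizes |T_0| = 1 and |T_N| = b(b−1)^{N−1} are both at most 2(b−1)^N), and use, as the speaker does, the fact that p_n(x_0, g) ≤ λ^n for simple random walk on a Cayley graph. The last step uses (b−1)λ^K ≤ (λ^{-K}−1)λ^K = 1 − λ^K.

$$
\sum_{v\in T}\mathbf P(X_v=g)
=\sum_{N\ge 0}|T_N|\,p_{NK}(x_0,g)
\le \sum_{N\ge 0} 2\,(b-1)^N\lambda^{NK}
\le 2\sum_{N\ge 0}\bigl(1-\lambda^K\bigr)^N
=\frac{2}{\lambda^K}<\infty .
$$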
This means that the expected degree in ω of any vertex is at most two. But what is this expected degree? We can easily calculate it: it is exactly the degree of the tree, ⌊λ^{-K}⌋, times the probability that X_0 and X_K are connected. If you go back to the definition, the expected degree of a vertex is exactly the number of tree edges at that vertex times this probability. So this tells you that this probability is at most 2/⌊λ^{-K}⌋, which is already exponential decay. In the very last half minute I want to get to the actual statement. Oded left this last step out of his e-mail because it was too obvious, but let me include it here anyway. How do we get the clean exponential decay? We just use a multiplicativity property: if we take the probability that X_0 is connected to X_K to the power L, this is at most the probability that X_0 is connected to X_{KL}. To connect X_0 to X_{KL} it suffices to connect X_0 to X_K, then X_K to X_{2K}, and so on, and using the FKG inequality you get this inequality. But then the same argument applied to KL tells you that this is at most 2/⌊λ^{-KL}⌋. Now if you take Lth roots of both sides and let L go to infinity, you get the desired statement. Thanks for your attention. [applause]. >>: You missed something obvious. So why is it that this doesn't imply that p_c is less than p_u? What's -- >> Yuval Peres: Well, maybe it does. But the problem -- okay. The problem is that we don't know anything about the length of the paths that connect.
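The closing argument can be summarized in three lines; the notation is ours. The first line is Häggström's bound applied to ω at the root, whose ⌊λ^{-K}⌋ tree edges are each open with probability **P**(X_0 ↔ X_K). The second is the multiplicativity step the speaker attributes to FKG; our gloss is that on a Cayley graph the conditional connection probability for each block of K steps depends only on that block's increment, and the increments are independent. The third takes L-th roots.

$$
\lfloor\lambda^{-K}\rfloor\,\mathbf P(X_0\leftrightarrow X_K)=\mathbf E\bigl[\deg_\omega(0)\bigr]\le 2
\quad\Longrightarrow\quad
\mathbf P(X_0\leftrightarrow X_K)\le \frac{2}{\lfloor\lambda^{-K}\rfloor},
$$

$$
\mathbf P(X_0\leftrightarrow X_K)^L\le \mathbf P(X_0\leftrightarrow X_{KL})\le \frac{2}{\lfloor\lambda^{-KL}\rfloor},
$$

$$
\mathbf P(X_0\leftrightarrow X_K)\le \lim_{L\to\infty}\Bigl(\frac{2}{\lfloor\lambda^{-KL}\rfloor}\Bigr)^{1/L}=\lambda^K .
$$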
So what's the natural approach here? Suppose p_c equals p_u. Then for very far away points the probability of connecting would stay positive, and then you change the probability a little bit. The problem is that we don't have good control, in supercritical percolation, of the length of the paths connecting. So even though you look at points that are at distance K, it's possible that the only connections are extremely long, and that defeats the trivial argument. >>: If you had a unique cluster, then you take X_0 and X_K; they each have a positive chance of being in the unique cluster, and it's positively correlated, so there's a positive chance they're both in the unique cluster. >> Yuval Peres: Right. >>: So how come that doesn't already contradict exponential decay? >> Yuval Peres: Again, remember that we already know that at p_c itself there is no infinite cluster, certainly not a unique one. So the problem is you have to change p, right? >>: Oh, I see. Okay. Never mind. I see. >> Yuval Peres: So, well, maybe you'll think a little more and maybe you'll [laughter]. As I said, this looks [inaudible]; we haven't been able to complete it for the original purpose, but recently [inaudible] has found new applications of the idea in this proof, to actually derive [inaudible] for various new graphs. But I won't get into that. >>: So if you knew exponential decay for any points at distance K, then you would know that [inaudible]. >> Yuval Peres: No. [laughter]. >>: Okay. [inaudible] during the break and [inaudible] 3:30. Thank you. [applause]