21665 >> Nikhil Devanur Rangarajan: It's my pleasure to introduce...

advertisement

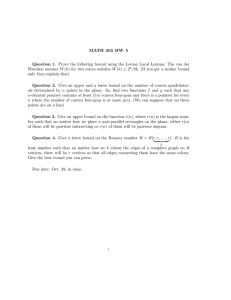

>> Nikhil Devanur Rangarajan: It's my pleasure to introduce Deeparnab Chakrabarty, who is a postdoc at UPenn. He was also a postdoc at Waterloo, and before that he graduated from Georgia Tech. Deeparnab works on approximation algorithms, algorithmic [indiscernible], and many other things. Today he's going to tell us about universal and differentially private Steiner tree and other things.

>> Deeparnab Chakrabarty: Thanks, Nikhil. It's a fairly short talk, so stop me whenever things need clarifying. Okay. So what are universal and differentially private algorithms? Essentially, an algorithm takes an input, does some computation on it, and outputs a solution. It's assumed that the algorithm has complete access to the input, and we worry about things like efficiency: how much time it spends on that input. But often we need to revisit this assumption of complete access, and universal and differentially private algorithms are two ways in which this comes up. First, part of the input might not be known to the algorithm, and yet it has to come up with a solution that performs well once the unknown part is revealed. So the same solution should work well for all instantiations of the unknown input. I'll make this precise as we go, but that is what universal algorithms capture: the solution works no matter what that part of the input is. The second way you might not have complete access is that the input is controlled by different agents. The fact that agents control the input puts a restriction on the algorithm, because it has to make sure the agents have some incentive to actually give their input to the algorithm. Both situations restrict the algorithm's behavior, and the question we are looking at is how these restrictions affect the performance of algorithms.
So let's take an example. We have an undirected network; think of a distance function on these points that is a metric, so it is symmetric and satisfies the triangle inequality. The input is a set of clients among these nodes, marked by X, and there is a green root. The algorithm's goal is to connect all the clients to the root. If I know who the clients are, this is the Steiner tree problem, a well-studied optimization problem with good algorithms, namely constant-factor approximations. So this problem is understood quite well. The universal setting is when we don't know the actual clients beforehand, and yet we must return a solution such that, when the actual set of clients shows up, the solution induced on those clients is still good with respect to them. What do I mean? Since I don't know who the clients are, one possible universal solution is a spanning tree: a tree containing all possible clients. When I learn the actual set of clients, I look at the induced tree, the union of the tree paths from the clients to the root, and that is the cost I pay on this set of clients. This induced tree might not be the optimal Steiner tree for those clients, so the performance of the algorithm on a client set is defined as the cost of the induced tree divided by the optimum. That's the factor you lose. The master tree we return is called alpha-approximate if, no matter what set of clients comes up, the cost you pay is within alpha times the optimum.
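The induced cost of a master tree, and the ratio that defines alpha-approximation, can be computed in a few lines. This is a minimal sketch under assumed conventions, not code from the talk: the master spanning tree is given as hypothetical `parent` pointers toward the root, `weight[v]` is the length of the tree edge from v to its parent, and the optimum Steiner cost needed for the ratio is supplied by the caller (computing it exactly is NP-hard in general).

```python
def induced_tree_cost(parent, weight, root, clients):
    """Cost of the union of master-tree paths from each client to the root.

    parent[v] is v's parent in the master spanning tree (the root has no entry);
    weight[v] is the length of the tree edge (v, parent[v]). Edges shared by
    several clients are paid for only once.
    """
    paid = set()  # vertices whose parent edge is already in the induced tree
    for v in clients:
        # Climb toward the root, stopping early at an already-paid edge:
        # every ancestor of a paid vertex has been paid as well.
        while v != root and v not in paid:
            paid.add(v)
            v = parent[v]
    return sum(weight[v] for v in paid)


def universal_ratio(parent, weight, root, clients, opt_cost):
    """Performance on one client set: induced cost divided by the optimum."""
    return induced_tree_cost(parent, weight, root, clients) / opt_cost


if __name__ == "__main__":
    # Toy master tree rooted at 0: vertices 1 and 4 hang off the root, 2 and 3 off 1.
    parent = {1: 0, 2: 1, 3: 1, 4: 0}
    weight = {1: 1, 2: 1, 3: 2, 4: 5}
    print(induced_tree_cost(parent, weight, 0, {2, 3}))  # edges 2-1, 3-1, 1-0 -> 4
```

The universal guarantee asks that this ratio stay at most alpha for every client set; the sketch only evaluates it on one set at a time.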
So this is the universal Steiner tree problem, where the solution concept is a spanning tree on all possible clients. For the Steiner tree problem there is also a stronger solution concept. Instead of returning a spanning tree that connects everything to the root, I return, for every vertex in the graph, a path to the root. This diagram might be a little confusing, because it shows all the paths as disjoint. That need not be the case, since the paths go through other vertices, these yellow vertices. So for every vertex I return a path, and when a set of clients X comes up, I take the union of their paths, call it P(X), and pay the cost of that union. My performance is the ratio of this cost to the optimum. This is a stronger solution concept, because a tree already induces paths, namely the unique tree path from each vertex to the root. Now I'm saying you're allowed to deviate from those tree paths.

>>: Is the solution concept restricted to a particular representation? You could say, okay, give me... any sequence of [inaudible]...

>> Deeparnab Chakrabarty: So you have to be... well, we'll consider these two natural, quote/unquote, solution concepts, but there could be other ways of representing a universal solution. It's not clear to me, at least, what all the possible ways of realizing that are. Of course, in the extreme you don't need to return anything at all: every time a client set comes up, the "induced solution" could just compute the Steiner tree. So I'm not going to define formally what an induced solution is, but these two concepts are natural and have been studied in the literature. They're natural because computing the induced solution takes much less time than actually computing the optimum. Okay.
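For the path solution concept, the cost is that of the union P(X) of the assigned paths. The sketch below is illustrative only: the `paths` and `edge_len` encodings are assumptions, and the toy instance shows that the assigned paths need not come from a single tree.

```python
def induced_path_cost(paths, edge_len, clients):
    """Cost of the union P(X) of the root paths assigned to the clients.

    paths[v] is the vertex sequence of v's path, starting at v and ending at
    the root. edge_len maps frozenset({a, b}) to the length of edge {a, b}.
    An edge used by several clients' paths is paid for once.
    """
    used = set()
    for v in clients:
        p = paths[v]
        used.update(frozenset(e) for e in zip(p, p[1:]))
    return sum(edge_len[e] for e in used)


if __name__ == "__main__":
    edge_len = {
        frozenset(("a", "r")): 3,
        frozenset(("b", "a")): 1,
        frozenset(("b", "r")): 5,
        frozenset(("c", "b")): 2,
    }
    # b goes straight to the root r, while c's path detours through b and a,
    # so these paths are not the root paths of any single tree.
    paths = {"a": ["a", "r"], "b": ["b", "r"], "c": ["c", "b", "a", "r"]}
    print(induced_path_cost(paths, edge_len, {"b", "c"}))  # 5 + 2 + 1 + 3 = 11
```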
So for this path solution, the algorithm's collection of paths is alpha-approximate if, no matter which clients come up, the ratio of what you pay to the optimum is at most alpha. That's Steiner tree. For the traveling salesman problem (TSP), the setting is very similar: a set of clients shows up, but now instead of a tree connecting them you want a tour, and you want to minimize the cost of the tour. What solution concept do we study? I don't know the set of clients beforehand, so what we return is a master tour, starting from the green root and visiting all the cities. The way this was motivated: there's a postman who starts from the post office, which is the root, and has to deliver letters to addressees, but every day a different set of people get mail. He doesn't want to compute a different route every day; there's one master route. There's something subtle here that I should point out. The master route just identifies an ordering of the vertices. When a set of clients shows up, he visits them in the order the master route says. So if X comes up, this is the route he takes, because that's what the ordering says. While going from here to here, he does not traverse all these intermediate edges; he goes straight from here to here. So it's not the union of edges anymore: he shortcuts, and the cost of that shortcut tour is what he pays. Once again, the master tour is alpha-approximate if, no matter which clients show up, the cost of the induced tour is at most alpha times the optimum. The question is how small alpha can be. That's universal problems. Oh, I should also say that instead of returning one master tour deterministically, the algorithm can return a randomized solution. Take the tree problem, for instance.
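The shortcutting rule for universal TSP can be made concrete as follows. This is a minimal sketch with hypothetical names (`master_order`, `dist`); the metric here is Euclidean only for the toy example.

```python
import math


def induced_tour_cost(master_order, dist, root, clients):
    """Cost of the tour induced on `clients` by a master ordering.

    Start at the root, visit the clients in the order they appear in
    master_order (skipping all other vertices, i.e. shortcutting), then
    return to the root. dist(u, v) is the underlying metric.
    """
    rank = {v: i for i, v in enumerate(master_order)}
    stops = [root] + sorted(clients, key=rank.__getitem__) + [root]
    return sum(dist(u, v) for u, v in zip(stops, stops[1:]))


if __name__ == "__main__":
    coords = {"r": (0, 0), "A": (1, 0), "B": (1, 1), "C": (0, 1)}

    def d(u, v):
        return math.dist(coords[u], coords[v])

    # B is not a client today, so the postman goes straight from A to C.
    print(induced_tour_cost(["A", "B", "C"], d, "r", {"A", "C"}))  # 2 + sqrt(2)
```

Note that the cost is computed from the metric between consecutive clients, not from the edges of the master tour, which is exactly the "he shortcuts" point above.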
I return a distribution on trees. So instead of returning one spanning tree (this is the first thing we study), I return a distribution, and whenever a set of clients comes up, I look at the expected cost that I pay and its ratio to the optimum. Those are randomized universal problems. So that was universal. Now here's the newer definition, where the clients are concerned about privacy: they are clients, but they don't want others to know that they are clients. That bit of information is what they want to keep private. Let me again give an example. The set of clients is X, and now the algorithm knows who the clients are. But it is constrained so that the solution it returns should not leak information about X: it should not let anyone infer whether a given vertex is in X or not. For instance, if the algorithm takes X and returns the optimal Steiner tree...

>>: The leaves?

>> Deeparnab Chakrabarty: The leaves are all clients, so anyone looking at it will at least learn that bit of information for sure. So that is not an allowed solution here. So what is allowed? What is the notion of privacy? Intuitively, the universal Steiner tree solution should be private, because the universal solution did not even look at who the clients were; it returned a master solution. After I return the master solution, the clients take their paths to the root along the tree, or, in the stronger solution concept, along the paths I returned, and they pay whatever cost they pay. So the notion we'll have is that universal is a stronger restriction than private; we'll come to what "private" means in a minute. Okay. So how do we capture privacy?
We will do this using the notion of differential privacy due to Dwork et al. Differential privacy actually came out of statistical data analysis: you want to know how many entries in a database have a certain field equal to one, and you want to return that count without revealing which entries were one. In 2009 there was a paper by [inaudible] Gupta and others which asked how combinatorial optimization problems like Steiner tree can be captured by the differential privacy notion, and we're going to follow their solution concept. It is the following: we preserve privacy via randomization. What does that mean? When the algorithm takes a set of clients X, it returns not one Steiner tree but a distribution on Steiner trees, which I'll call D_X. Privacy is captured by requiring that if two client sets X and X' are close, then the distributions the algorithm returns are also close.

>>: What do you mean by "close"?

>> Deeparnab Chakrabarty: Yeah. Here is what I mean by close. Differential privacy has a parameter epsilon, and it says that if X and X' differ in one vertex, so one client is in one set and not the other, then the probability that a tree T is returned under D_X is within a factor of 1 ± epsilon of the probability that it is returned under D_X'.

>>: Does that mean the supports are the same?

>> Deeparnab Chakrabarty: Yes, it means that the supports are the same. In fact, we're going to infer something from that: no matter what X is, the algorithm must return spanning trees.
This is because when X consists of all the vertices, the algorithm must return feasible Steiner trees connecting all of them, that is, spanning trees. Since the support is always the same, the algorithm returns spanning trees every time. There's a relaxation of this called (epsilon, delta)-privacy, where you are allowed a multiplicative 1 + epsilon and an additive delta, and then the supports need not be the same because of that delta. I think our lower bounds go through even with the additive delta, but I'd have to think about it, so let's stick with the pure notion. So since the supports are the same, and this is what I want to emphasize, whoever the set of clients is, the algorithm returns a distribution on spanning trees. In particular, it returns the same kind of solution that a universal algorithm returns, except that this distribution depends on X. Okay. So that's the definition. We'll use the mild assumption that epsilon is small, so I'll replace 1 + epsilon by e^epsilon; that's just a technicality that will be handy. So that's what a differentially private algorithm does: it has access to the set of clients X, it returns a distribution on spanning trees depending on X, and it is constrained so that if X changes by one vertex, the probability of each tree changes by at most a factor of e^epsilon in either direction. That is the definition of a differentially private algorithm for Steiner tree, and it's essentially the same for TSP: you return a distribution on tours, and when one client changes, the probability of each tour changes by at most a factor of e^epsilon in either direction. The question we want to answer is how these constraints affect performance: how small can alpha be for an alpha-approximation? Okay. So what was known? Let's look at upper bounds first.
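The pointwise e^epsilon condition between D_X and D_X' can be checked directly when both distributions are finite. This is an illustrative sketch only; encoding each tree as a `frozenset` of edges and the function name are assumptions.

```python
import math


def is_pointwise_eps_dp(dist_x, dist_x_prime, eps):
    """Check the pointwise epsilon-DP condition for one pair of neighbours X, X'.

    dist_x and dist_x_prime map each output (e.g. a spanning tree encoded as a
    frozenset of edges) to its probability under D_X and D_X'. The condition
    e^-eps <= Pr_X[T] / Pr_X'[T] <= e^eps forces the two supports to be equal.
    """
    support = {t for t, p in dist_x.items() if p > 0} | {
        t for t, p in dist_x_prime.items() if p > 0
    }
    bound = math.exp(eps)
    for t in support:
        p, q = dist_x.get(t, 0.0), dist_x_prime.get(t, 0.0)
        if p == 0.0 or q == 0.0:  # supports differ: the ratio is unbounded
            return False
        if p > bound * q or q > bound * p:
            return False
    return True
```

An algorithm is epsilon-differentially private when this holds for every pair of client sets differing in one vertex; the helper checks only a single pair.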
First, an observation: if I have an alpha-approximate universal Steiner tree algorithm, then I have a 2·alpha-approximate universal algorithm for TSP. You take whatever tree is returned and order the vertices by depth-first search order. That loses a factor of two, and it's not hard to see that even for a subset X of clients, the induced tour costs at most twice the induced tree. So an upper bound for Steiner tree implies an upper bound of the same order for TSP, and conversely, a lower bound for TSP implies a lower bound for Steiner tree. This uses the fact that the Steiner tree solution is a spanning tree, or a distribution over spanning trees. It does not work for the stronger solution concept, where every vertex gets a path to the root, so a TSP lower bound does not necessarily imply a lower bound for the path solution for Steiner tree. Forget this for now; we'll come back to it at the very end of the talk. All I want to say is that a lower bound for TSP implies a similar lower bound for Steiner tree. Now, upper bounds for Steiner tree: again I want a distribution on spanning trees such that for any set of clients I don't pay much more than the optimum. This basically follows from an embedding result due to Fakcharoenphol, Rao, and Talwar, which says that any metric can be embedded into a distribution over trees where each edge is distorted by at most O(log N) in expectation. If you think about it, this is quite strong: for any set of clients, the optimal Steiner tree is also distorted by at most O(log N) in expectation. So returning this distribution as the universal solution gives an O(log N)-approximate universal Steiner tree, because even individual edges are distorted by at most that much. And again, O(log N) for Steiner tree implies O(log N) for TSP.
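The depth-first-order reduction from a universal tree to a universal tour can be checked on a small example. This is a sketch under stated assumptions: the tree edges have lengths equal to metric distances, the helper names are hypothetical, and the factor two comes from shortcutting the Euler tour of the induced subtree, which visits the clients in the same relative order as the DFS of the whole tree.

```python
import math


def dfs_order(children, root):
    """Preorder (depth-first) listing of the master tree's non-root vertices."""
    order, stack = [], [root]
    while stack:
        v = stack.pop()
        if v != root:
            order.append(v)
        # Push children reversed so they are visited in their listed order.
        stack.extend(reversed(children.get(v, [])))
    return order


def induced_tree_cost(parent, dist, root, clients):
    """Total length of the union of tree paths from the clients to the root."""
    used = set()
    for v in clients:
        while v != root and v not in used:
            used.add(v)
            v = parent[v]
    return sum(dist(v, parent[v]) for v in used)


def induced_tour_cost(order, dist, root, clients):
    """Visit the clients in master order from the root and return, shortcutting."""
    rank = {v: i for i, v in enumerate(order)}
    stops = [root] + sorted(clients, key=rank.__getitem__) + [root]
    return sum(dist(u, v) for u, v in zip(stops, stops[1:]))


if __name__ == "__main__":
    coords = {0: (0, 0), 1: (2, 0), 2: (3, 1), 3: (3, -1), 4: (-1, 2), 5: (-2, 3)}
    parent = {1: 0, 2: 1, 3: 1, 4: 0, 5: 4}
    children = {0: [1, 4], 1: [2, 3], 4: [5]}

    def d(u, v):
        return math.dist(coords[u], coords[v])

    order = dfs_order(children, 0)
    X = [2, 5]
    print(induced_tour_cost(order, d, 0, X), 2 * induced_tree_cost(parent, d, 0, X))
```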
What was quite remarkable is the following. The notion of universal algorithms was introduced by Jia et al. in 2005, and they showed that there is a deterministic solution, a single spanning tree, which gives a polylogarithmic approximation. It's known that a single tree cannot approximate all distances edge by edge, but if you only need to connect to a single root, you can return one deterministic tree that achieves a polylogarithmic approximation. This was improved a year later by Gupta et al. to an O(log² N) approximation. So O(log N) can be done by a randomized solution, and O(log² N) deterministically. And you cannot do much better: there is an Ω(log N) lower bound for Steiner tree. This follows because if you can solve the universal problem, you can solve the online problem, where terminals arrive one by one and you have to connect each to the root as it arrives, and that problem is known to have an Ω(log N) lower bound. So this comes from the online algorithms world, and it closes the gap up to a log N factor, deterministic versus randomized. For TSP, however, Jia et al. gave only a constant lower bound and asked what the right answer is. In 2006, [inaudible] et al. proved a lower bound of (log N / log log N)^(1/6), so the answer cannot be a constant. What's interesting is that this lower bound holds even if the points are in the Euclidean plane, which makes it a very interesting result. The conjecture was that the answer should be Ω(log N) for TSP as well. So for TSP you can get O(log N) randomized and O(log² N) deterministic, and the conjecture was that you cannot do better than Ω(log N). Okay. So that's the known story for universal algorithms. Privacy, as I said, is a newer notion.
All that was known is that universal algorithms are private, so an O(log N) approximation for universal gives O(log N) for private. No better algorithms were known, and no better lower bounds. In fact, this work started with a question by [inaudible], who asked whether Steiner tree admits a constant-factor differentially private algorithm. So what are our results? The first is an observation, and the observation has consequences. The observation is that universal algorithms are private, so upper bounds for universal imply upper bounds for privacy. What we show is a sort of converse: if you can prove a strong lower bound for universal algorithms (I'll come to what "strong" means in a minute), then it implies a lower bound for privacy. And we prove a strong Ω(log N) lower bound for universal TSP, which implies a strong lower bound for universal Steiner tree, which in turn implies Ω(log N) lower bounds for privacy. That's the result, and I'll tell you the whole thing in half an hour. I should mention that there was independent work, a few months before ours, at APPROX 2010, which proved an Ω(log N) lower bound for universal TSP. But it's not strong; they did not care about privacy, and their methods don't seem to give a strong bound, at least as far as I can see. I'll mention this work again when I show the lower bound. For the rest of the talk, I'll show the conversion observation, which follows basically from the definitions, and then the lower bound. So what are strong lower bounds? Let's recall what a lower bound for universal TSP means by definition.
It means that for any distribution D on tours, there is a set of clients such that the expected cost paid by the algorithm is at least alpha times the optimum cost. That's the definition. Call it a good event when the tour drawn from your distribution has induced cost at most 2·alpha times the optimum. A standard lower bound argument only needs the good event to have probability at most a half: then, with probability at least a half, you pay more than 2·alpha times the optimum, so your expected cost is at least alpha times the optimum (the same averaging that underlies Markov's inequality). So all an ordinary lower bound gives you is that the good event happens with probability at most a constant, like a half. What we call a strong lower bound is the following: for a parameter epsilon, the good event, that the cost of your induced tour is at most alpha times the optimal tour, happens with much smaller probability than a half, a probability that decays exponentially in the size of the client set. So let me emphasize: a normal lower bound only says the good event happens with probability at most a half or some constant, while a strong lower bound says that, for the given parameter epsilon, it happens with probability exponentially small in the size of the client set. We'll see in a minute why I need the strong version. Let me now show you why strong lower bounds imply privacy lower bounds. Privacy says that if X and X' differ by one client, the probability of each tour you return changes, but only by a factor of e^epsilon. So I'm going to do a hybrid argument; you can guess what it is. I first feed the algorithm the empty set and see what distribution on tours it returns.
I treat that distribution on tours as a universal solution, because it is just a distribution over master tours. And I know that for any distribution on tours I have this strong lower bound. So there is a set of clients Y such that the probability of returning a good tour for Y is exponentially small in the size of Y. That's one part. Then I give this set of clients Y to the algorithm. Look at the good event, where the returned tour is cheap compared to the optimum. Under the empty set, this event had exponentially small probability. When I add the clients of Y one at a time, this probability can increase, but by how much? Each added client increases it by a factor of at most e^epsilon, so overall by a factor of at most e^(epsilon·|Y|). But the good event started with a much smaller probability than that, so even after adding Y it still has small probability: the distribution cannot change pointwise by much, so you really get beaten, and you return a bad tour with probability at least a half. It's a simple observation, but it says that if you prove a strong lower bound for a universal problem, you automatically get a lower bound for the private problem. And this is quite general: there's nothing about trees or tours in it; it connects universal algorithms with private algorithms. None of the previous lower bounds were strong. We give strong lower bounds for TSP and for Steiner tree with the stronger (path) solution concept, and the lower bound is quite simple. So in the remaining 15 minutes I'll give almost the whole proof of the universal TSP lower bound. What do I want to do? I want a metric space such that, no matter what distribution on tours you give, I can give you a set of clients on which your success probability is exponentially small. How am I going to do this?
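The averaging step and the hybrid argument can be written out as a short derivation. The notation is ours, not the speaker's: c_T(Y) is the induced cost of tour T on client set Y, OPT(Y) the optimal tour cost, G_alpha(Y) the good event c_T(Y) <= alpha·OPT(Y), and beta > 0 a hypothetical rate constant in the strong lower bound. The sketch assumes epsilon < beta.

```latex
% Ordinary lower bound: a constant bound on the good event suffices.
\Pr_{T\sim D}\bigl[G_{2\alpha}(Y)\bigr] \le \tfrac12
\;\Longrightarrow\;
\mathbb{E}_{T\sim D}\bigl[c_T(Y)\bigr]
  \ge 2\alpha\,\mathrm{OPT}(Y)\cdot\Pr_{T\sim D}\bigl[\neg G_{2\alpha}(Y)\bigr]
  \ge \alpha\,\mathrm{OPT}(Y).

% Strong lower bound with rate \beta: for every distribution D on tours there is Y with
\Pr_{T\sim D}\bigl[G_{\alpha}(Y)\bigr] \le e^{-\beta |Y|}.

% Hybrid: X_0 = \emptyset,\ X_i = X_{i-1}\cup\{y_i\},\ X_k = Y. Summing the pointwise
% e^{\varepsilon} bound over the tours in the event G_\alpha(Y) gives, at each step,
\Pr_{T\sim D_{X_i}}\bigl[G_{\alpha}(Y)\bigr] \le e^{\varepsilon}\,\Pr_{T\sim D_{X_{i-1}}}\bigl[G_{\alpha}(Y)\bigr].

% Applying the strong bound to the fixed distribution D_\emptyset (which does not depend on Y):
\Pr_{T\sim D_{Y}}\bigl[G_{\alpha}(Y)\bigr]
  \le e^{\varepsilon |Y|}\,\Pr_{T\sim D_{\emptyset}}\bigl[G_{\alpha}(Y)\bigr]
  \le e^{-(\beta-\varepsilon)|Y|}.

% If |Y| \ge \ln 2/(\beta-\varepsilon), this is at most 1/2, hence
\mathbb{E}_{T\sim D_Y}\bigl[c_T(Y)\bigr] \ge \tfrac{\alpha}{2}\,\mathrm{OPT}(Y).
```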
First, let's assume for now that the algorithm returns one tour, i.e., it is deterministic; you'll see how to make it work for randomized algorithms. So the algorithm returns a single tour that is supposed to be alpha-approximate. Here's the idea. Suppose I can find two classes of vertices, red vertices and blue vertices, satisfying the following properties: reds are close to reds, meaning there's a short path connecting the red vertices; blues are close to blues, so the blues are connected by a short path; but each red vertex is very far from each blue vertex. So in the metric, the red vertices form one clump and the blue vertices form another, and every red vertex is far from every blue vertex. And suppose I can show that no matter what tour you return, your tour alternates red, blue, red, blue quite often. This proves my lower bound, because the optimal tour pays the large red/blue distance only once: it covers all the reds, jumps to the blues, covers all the blues, and goes back. Your tour pays the red/blue distance every time it alternates. That's the general idea I want to capture. So how are we going to do it? Using expanders. The metric space is the shortest-path metric of a constant-degree expander. What is an expander? An expander is a d-regular graph, where d is a constant, with the following property: there is a constant c such that for any subset S of at most N/2 vertices, the number of edges leaving S is at least c times |S|. Another way of saying it is that the normalized second eigenvalue of the adjacency matrix is bounded away from one.
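The edge-expansion definition can be evaluated by brute force on tiny graphs. This is only an illustration of the definition (exponential time); the Petersen graph is our choice of example, not one from the talk. An expander family keeps this quantity above a constant c as N grows, whereas a cycle C_n has expansion 2/floor(n/2), which tends to zero.

```python
from fractions import Fraction
from itertools import combinations


def edge_expansion(adj):
    """Brute-force edge expansion: min over |S| <= n/2 of |E(S, V - S)| / |S|.

    adj maps each vertex to a list of neighbours (undirected, simple graph).
    Exponential time, so only meant for tiny graphs.
    """
    vertices = list(adj)
    best = None
    for k in range(1, len(vertices) // 2 + 1):
        for subset in combinations(vertices, k):
            s = set(subset)
            boundary = sum(1 for u in s for w in adj[u] if w not in s)
            ratio = Fraction(boundary, k)
            if best is None or ratio < best:
                best = ratio
    return best


def petersen_graph():
    """3-regular Petersen graph: outer 5-cycle, inner pentagram, and spokes."""
    adj = {v: [] for v in range(10)}

    def add(a, b):
        adj[a].append(b)
        adj[b].append(a)

    for i in range(5):
        add(i, (i + 1) % 5)          # outer cycle on 0..4
        add(5 + i, 5 + (i + 2) % 5)  # inner pentagram on 5..9
        add(i, 5 + i)                # spokes
    return adj
```

For the Petersen graph the minimum is attained by the outer 5-cycle: five spokes leave five vertices, giving expansion 1.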
All I need is the following: the diameter of the expander is O(log N), and this crucial property. I pick a subset S of vertices of size delta·N, a constant fraction (say 90 percent of the vertices), and I do a random walk: I pick a vertex uniformly at random, move to a uniformly random neighbor, and repeat T times. In an expander, this basically looks like picking these T vertices independently and uniformly at random from the graph. More precisely, the probability that the T-step random walk stays entirely inside S is not delta^T, which is what it would be for independent samples, but at most (delta + beta)^T, where beta < 1 is a constant depending on the expander. So it is exponentially small in T as long as delta + beta < 1. That's the property we're going to use: random walks escape small sets. So here is the lower bound. The metric is the shortest-path metric of a constant-degree expander. What are the red and blue vertices? They are two independent T-step random walks, where T is around (log N)/4: pick a vertex at random and take a short walk, then pick another random vertex and take another short walk. By construction, the red vertices are within distance T of each other, since they lie on one walk, and similarly blue is close to blue. Why are the red vertices far from the blue vertices? Because I'm not walking too far. If the two starting vertices are far enough apart, then all the vertices on the two walks are far apart too. And why are the two starting vertices far apart? Because it's a constant-degree graph: the number of vertices within distance (log N)/3 of a given vertex is much smaller than N, something like N^(1/3). So if you pick two random vertices, the distance between them is Ω(log N) with high probability.
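The confinement probability for a random walk, the quantity bounded by (delta + beta)^T above, can be computed exactly on small graphs by propagating probability mass only inside S. This is a sketch for intuition, with hypothetical names; it computes the exact probability and does not itself prove the expander bound.

```python
from fractions import Fraction


def confinement_probability(adj, S, T):
    """Exact probability that a T-step random walk never leaves S.

    The start vertex is uniform over all n vertices and each step moves to a
    uniformly random neighbour. All T + 1 visited vertices must lie in S.
    """
    n = len(adj)
    # mass[v] = Pr[walk is at v after t steps and has stayed inside S so far]
    mass = {v: Fraction(1, n) for v in S}
    for _ in range(T):
        nxt = {v: Fraction(0) for v in S}
        for u, p in mass.items():
            share = p / len(adj[u])
            for w in adj[u]:
                if w in S:  # mass that steps outside S is discarded
                    nxt[w] += share
        mass = nxt
    return sum(mass.values(), Fraction(0))
```

As a sanity check, on the complete graph K_n with |S| = s the answer is (s/n)·((s-1)/(n-1))^T, since every step stays inside S with probability (s-1)/(n-1).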
If the walks have length log N divided by a suitably large constant, they never meet, and they stay far apart as well. So to conclude: if I take a red random walk and a blue random walk of length T = c·log N for a suitably small constant c (say 1/100), then with high probability every red vertex is far from every blue vertex. I needed three properties: reds are close to reds, blues are close to blues, and reds are far from blues. Those parts didn't use expansion, only constant degree. I also need that no matter what tour you take, there is a lot of red, blue, red, blue alternation, and that uses the fact that the graph is an expander. Why does alternation occur? Here's how to show it. Take your tour and split it into some k = Θ(log N) contiguous chunks. If I show that the red walk hits three-quarters of these chunks and the blue walk hits three-quarters of the chunks, then at least half of the chunks contain both reds and blues, and therefore I have alternation. So I have to show that, with high probability, a random walk on an expander hits three-quarters of the chunks.

>>: What's the order of choosing here?

>> Deeparnab Chakrabarty: My random walk does not even depend on the tour. I choose my clients by random walk, then you come with your tour, but you're oblivious to my choice.

>>: [inaudible]...

>> Deeparnab Chakrabarty: I'm going to show it for any fixed tour...

>>: Won't the tour depend on your choice?

>> Deeparnab Chakrabarty: No, the tour cannot depend on my choice. The tour does not know who the clients are; it's universal. What could happen is that my clients depend on the tour. See, I'm trying to prove a lower bound, so I'm allowed to look at your tour and then choose your clients. But I'm not even doing that.
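The counting step behind "chunks with both colors give alternation" can be sketched as follows. The helper names are hypothetical and the red and blue sets are assumed disjoint. Each chunk that contains both a red and a blue client forces at least one color switch between two consecutive clients inside that chunk, and since chunks are disjoint intervals of the tour, these switches are distinct.

```python
def chunks(tour, k):
    """Split the master tour into k contiguous pieces of (nearly) equal size."""
    n = len(tour)
    return [tour[i * n // k:(i + 1) * n // k] for i in range(k)]


def color_switches(tour, red, blue):
    """Number of red/blue changes along the tour induced on red | blue."""
    colors = ["r" if v in red else "b" for v in tour if v in red or v in blue]
    return sum(1 for a, b in zip(colors, colors[1:]) if a != b)


def mixed_chunks(tour, red, blue, k):
    """Number of chunks containing at least one red and one blue client."""
    return sum(
        1
        for c in chunks(tour, k)
        if any(v in red for v in c) and any(v in blue for v in c)
    )
```

By inclusion-exclusion, if each color hits at least 3k/4 of the k chunks, then at least 3k/4 + 3k/4 - k = k/2 chunks are mixed, so the induced tour switches colors at least k/2 times.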
I want to argue against randomized algorithms, with very low success probability, so I'm saying something much stronger. That's the strong lower bound: I form my clients independently of your tour. Your tour obviously does not see my clients, since it's just a tour, but my clients are also independent of your tour.

>>: So actually the order is: first the algorithm fixes the tours, and then you pick the clients...

>> Deeparnab Chakrabarty: I don't even look at the tour. I could, but I don't. Okay. So I want to show that if you take a random walk, then with high probability it hits three-quarters of the chunks. Why? If it didn't, then there would be some set of three-quarters of the chunks to which the walk is confined, so the walk would be confined to a set of about 3N/4 vertices. But I know that the probability that a random walk is confined to a set of size delta·N, with delta = 3/4 here, is exponentially small. In fact, it's so small that even after a union bound over all choices of chunks, I still get an exponentially small probability. And that's the proof: alternation happens. Once alternation happens, OPT pays about 2T for following the red walk and then the blue walk, plus at most three times the diameter to get from the root to the reds, jump to the blues, and come back, so OPT is O(log N). The algorithm alternates Ω(log N) times and pays Ω(log N) for each alternation, so it pays Ω(log² N). Hence alpha = Ω(log N). That's for a fixed tour, but if you do the calculation...

>>: Is this already the strong lower bound?

>> Deeparnab Chakrabarty: This is the strong lower bound, because the probability that the algorithm does well is exponentially small in T. T is the length of the walks, which is Θ(log N), and the number of clients is also Θ(log N).
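A rough version of the calculation just described, written out under assumed constants: an N-vertex d-regular expander with diameter D = O(log N) whose walks stay in a set of relative size delta with probability at most (delta + beta)^T, with delta = 3/4 and delta + beta < 1; red walk R and blue walk B of length T = c·log N; the master tour split into k chunks. The constants c, k, and the separation rho are placeholders, not values given in the talk.

```latex
% R misses more than k/4 chunks  =>  R is confined to a union of at most 3k/4 chunks,
% i.e. about (3/4)N vertices. Union bound over the at most 2^k choices of chunks:
\Pr\bigl[R \text{ hits fewer than } 3k/4 \text{ chunks}\bigr] \le 2^{k}\,(\delta+\beta)^{T}.

% Same for B. If both hit at least 3k/4 chunks, at least 3k/4 + 3k/4 - k = k/2 chunks
% contain both colours, so the induced tour switches colour at least k/2 times.

% Separation (triangle inequality, d(r_0, b_0) = \Omega(\log N) w.h.p.):
\rho = \min_{r\in R,\ b\in B} d(r,b) \ge d(r_0,b_0) - 2T = \Omega(\log N).

% Costs:
\mathrm{ALG}(R\cup B) \ge \tfrac{k}{2}\,\rho = \Omega(\log^2 N),
\qquad
\mathrm{OPT}(R\cup B) \le 2T + 3D = O(\log N).

% So the ratio is \Omega(\log N), provided k = \Theta(\log N) satisfies
% k\ln 2 < T\ln\tfrac{1}{\delta+\beta}; then the failure probability
% 2\cdot 2^{k}(\delta+\beta)^{T} is e^{-\Theta(T)}, and |R\cup B| \le 2(T+1).
```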
So it's exponentially small in epsilon times the size of X, i.e., exponentially small in the size of the client set. So it is strong. Right, as in...

>>: e to the minus epsilon |X|, right?

>> Deeparnab Chakrabarty: Yes.

>>: And |X| is log N.

>> Deeparnab Chakrabarty: |X| is log N.

>>: N to the epsilon?

>> Deeparnab Chakrabarty: No, no, no. The strong lower bound wants the probability to be e^(-epsilon·|X|), and my lower bound gives (delta + beta)^T, which is some constant to the power log N. So there are constants involved: it will not work for every epsilon, but it works for some constant epsilon. So that's it for the universal TSP strong lower bound. It implies an Ω(log N) privacy lower bound, and an Ω(log N) lower bound for universal Steiner tree and differentially private Steiner tree. It does not quite imply a lower bound for the path solution; for that you have to do something more. In the earlier argument, any constant-degree expander with a suitable beta works. Now we need an extra property: the expander should have high girth, meaning no short cycles; in fact the girth should be Ω(log N). Such expanders exist: the Ramanujan graphs. In fact, the proof of Gorodezky et al., which also got Ω(log N), was on these Ramanujan graphs. So how do we use high girth? Here's the solution: a collection of paths. I do one single random walk on the expander, and the vertices I visit are my set of clients. Suppose this is an edge of the random walk, from here to here. If the path from this vertex to the root and the path from that vertex to the root both avoid this edge, then the two paths together with the edge contain a cycle, so the two paths have total length Ω(log N), because every cycle has length at least the girth. So what I need to worry about is the probability that the walk's edges are exactly these path edges.
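The girth requirement can be checked on small graphs with a breadth-first search from every vertex. This is a standard technique, offered as a sketch rather than code from the talk. In the argument above, if the walk edge {u, v} lies on neither P(u) nor P(v), then P(u), P(v), and that edge contain a cycle through {u, v}, so the two paths together have at least girth - 1 edges.

```python
from collections import deque


def girth(adj):
    """Length of the shortest cycle in a simple undirected graph (inf if acyclic).

    Runs a BFS from every vertex. A non-tree edge (u, w) seen from root s closes
    a closed walk of length dist[u] + dist[w] + 1 that contains a cycle, and for
    a root on a shortest cycle this value equals the girth. O(n * m) time.
    """
    best = float("inf")
    for s in adj:
        dist, parent = {s: 0}, {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w], parent[w] = dist[u] + 1, u
                    queue.append(w)
                elif parent[u] != w:  # skip the tree edge back to u's parent
                    best = min(best, dist[u] + dist[w] + 1)
    return best
```

For example, the complete graph K_4 has girth 3, the cycle C_7 has girth 7, and a path has no cycle at all; Ramanujan graphs are the kind of family where this value grows like log N.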
And again, I'm doing a random walk, so with high probability most of the walk edges are not these, quote/unquote, first edges coming out of the vertex. So that implies this omega log N. So that's it. So what are the take-home points? There is a strong connection between universal and private algorithms. One direction is clear; the other direction is not so difficult. And this is pretty general, so it might work for other classes of problems. Although I should confess that, for universal problems apart from Steiner tree and TSP, nothing else has been very successful. There's a set cover problem, but it gives bad lower bounds. So for Steiner tree and TSP, theta log N is the answer for both universal and private. That sort of answers [inaudible]'s question, and again, expanders are something that one should try. More? >>: So [inaudible] is that priorities are universal? >> Deeparnab Chakrabarty: Yes. >>: And you showed that private is as [inaudible]. >> Deeparnab Chakrabarty: No, so I show that if you prove a strong lower bound for universal, then you get lower bounds for private. >>: Yeah. >> Deeparnab Chakrabarty: Which is, yeah, just staring at the definition. If you show that good things happen in universal only with probability going down exponentially with the size of the client set, then that implies, by definition, a lower bound for privacy. It's nothing; it's just an observation. But it's a good observation in the sense that I don't really need to bother about what differential privacy is while proving a lower bound. I just prove a lower bound which is strong for universal. It's one way. >>: Again, how are universal and online connected? >> Deeparnab Chakrabarty: So universal is simpler than online. If I have a universal algorithm, I have an online algorithm, because whenever a client comes, I look at where the universal algorithm connects it, and I connect it the same way. >>: Universal is the harder. >> Deeparnab Chakrabarty: Harder than online.
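The reduction from universal to online can be sketched as follows, under stated assumptions: the universal solution is modeled, hypothetically, as a fixed spanning tree given by parent pointers, so each vertex's route to the root is decided before any client is seen, and edges have unit weight. The function names `universal_root_paths` and `online_from_universal` are illustrative, not from the talk.

```python
def universal_root_paths(parent, root):
    """Hypothetical universal Steiner tree: a fixed spanning tree given by
    parent pointers. Each vertex's route to the root is fixed in advance."""
    def path_of(v):
        edges = []
        while v != root:
            edges.append(frozenset((v, parent[v])))  # undirected edge
            v = parent[v]
        return edges
    return path_of


def online_from_universal(path_of, arrivals):
    """Online Steiner tree (unit edge weights assumed): when a client
    arrives, buy whichever edges of its precomputed path are not bought yet."""
    bought = set()
    costs = []  # total cost after each arrival
    for client in arrivals:
        bought.update(path_of(client))
        costs.append(len(bought))
    return bought, costs


if __name__ == "__main__":
    # Root 0; tree edges 1-0, 2-1, 3-1, 4-0.
    path_of = universal_root_paths({1: 0, 2: 1, 3: 1, 4: 0}, root=0)
    bought, costs = online_from_universal(path_of, [2, 3, 4])
    print(costs)  # → [2, 3, 4]
```

After each arrival the online solution is exactly the universal solution for the clients seen so far, so any guarantee the universal solution has for every client set also holds at every step of the online run. And since the vertex-to-path map never looks at the clients, publishing it reveals nothing about them, which is presumably the "clear" direction of the universal/private connection.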
Both harder than online, harder than [inaudible]. >>: But in the TSP case, online clients can come in the wrong order. >> Deeparnab Chakrabarty: In the TSP case, what is online TSP? Online is only for Steiner tree, where it makes sense. >>: For TSP, one can say it changes with some other location. >> Deeparnab Chakrabarty: Exactly, yeah. So, yeah. That's that. >>: And weaken the definition of privacy? >> Deeparnab Chakrabarty: So the weaker one is this plus delta. And actually what I said was a weaker definition anyway. What I said here is that the probability of returning a given Steiner tree does not change by more than a factor of one plus epsilon. The actual definition is: you fix a collection of trees, and the probability that you return something from that collection also does not change by more than a factor of one plus epsilon. These are the same if you go point to point: the point-to-point version implies this. But if I have a delta, an additive delta, and my set is of size K, the delta gets multiplied by K. So even if I allow a delta additive error when the supports are not the same, the delta has to be really tiny. It has to be smaller than one over the number of trees. And if delta is so tiny, then I think you are still in trouble, because it does not buy you that much. But that's differential privacy. Differential privacy is, in my opinion, a strong privacy constraint. So either weaker notions of privacy, which go away from differential -- sure, that could be. In fact, that's probably something one can think about. Then, what do we need out of privacy? So, okay, going back even further, I find this privacy notion hard to explain for these [inaudible] optimization problems, apart from Steiner tree with the path solution. If you think about it, it does not make sense for either Steiner tree or TSP, because you're returning collections of trees. How is the solution set? Is it printed out on a board?
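The point-to-point versus collection-level comparison can be written out as a short standard calculation. The notation here is assumed, not from the talk: A is the algorithm, X and X' are client sets differing in one client, S is a collection of K trees, and the pointwise guarantee is taken with factor (1 + epsilon) and additive delta per tree.

```latex
\Pr[A(X)\in S] \;=\; \sum_{\tau\in S}\Pr[A(X)=\tau]
\;\le\; \sum_{\tau\in S}\Bigl((1+\varepsilon)\Pr[A(X')=\tau]+\delta\Bigr)
\;=\; (1+\varepsilon)\Pr[A(X')\in S] \;+\; K\delta .
```

With delta = 0 the pointwise condition gives the collection-level one directly; with an additive delta the collection-level error is K delta, which is meaningful only when delta is much smaller than 1/K.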
How do the clients know what the solutions are? So it's tricky ground. I think it's not meant for combinatorial [inaudible] problems. I don't know. It's a general definition. Every [inaudible] defines a new way of defining privacy. >> Nikhil Devanur Rangarajan: All right. Thanks. >> Deeparnab Chakrabarty: Thank you.