>> Yuval Peres: Good afternoon. We're delighted to... here. Some almost 45 years ago he wrote the...

advertisement

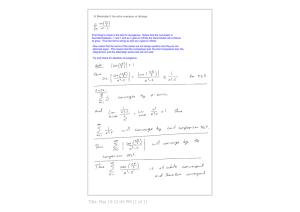

>> Yuval Peres: Good afternoon. We're delighted to have Krishna Athreya here. Almost 45 years ago he wrote the bible on branching processes. Even though there has been a lot of further work on the topic, it still looks state of the art today, and many, many of its results were simply optimal, so there's nothing better to do. And yet he has continued and has had a lot of impact on the area since. In particular, he'll tell us something about branching processes today. Please. >> Krishna Athreya: Thank you. Thank you, Yuval, for asking me to speak here. This is really nice. I want to talk about a problem called coalescence, and I'll talk about coalescence in branching trees. So here is the very simple version of the problem. Take a rooted binary tree. You start with one vertex at time 0, it splits into exactly two vertices, each of those in turn splits into two, and so on. So at level 0 there is exactly one vertex, at level 1 there are two, at level 2 there are four, $2^2$, and so on, and you can guess that at level $N$ there are going to be $2^N$ vertices. Now suppose you choose two vertices from level $N$ by what is known as simple random sampling without replacement: there are $2^N$ vertices available, so there are $\binom{2^N}{2}$ pairs you can choose, and you choose one of those pairs with equal probability. Then you trace their lines back in time until they meet, and call the generation where the lines meet $X_N$. Clearly the lines will have met by the time you reach the root, but they could meet well before that. So $X_N$ is going to be a random variable. The tree itself is deterministic, but I injected randomness by my sampling procedure.
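For small $N$ this sampling experiment can be checked by brute force. The Python sketch below is illustrative and not from the talk: it labels the $2^N$ leaves $0, \dots, 2^N - 1$ so that a vertex's ancestor at level $k$ is obtained by dropping its last $N - k$ binary digits (a labelling convention assumed here), enumerates all $\binom{2^N}{2}$ equally likely pairs, and compares $P(X_N < K)$ with the closed form $(1 - 2^{-K})/(1 - 2^{-N})$ that the calculation in the talk arrives at. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def meeting_generation(i: int, j: int, n: int) -> int:
    """Generation at which the lines of distinct leaves i and j (at level n) meet.

    Leaves are labelled 0 .. 2**n - 1 and the ancestor of leaf i at level k is
    i >> (n - k), so two leaves share ancestors exactly as long as their leading
    binary digits agree.
    """
    return n - (i ^ j).bit_length()


def brute_force_cdf(n: int, k: int) -> Fraction:
    """Exact P(X_n < k) by enumerating every unordered pair of distinct leaves."""
    pairs = list(combinations(range(2 ** n), 2))
    favourable = sum(1 for i, j in pairs if meeting_generation(i, j, n) < k)
    return Fraction(favourable, len(pairs))


def closed_form_cdf(n: int, k: int) -> Fraction:
    """(1 - 2^-k) / (1 - 2^-n), for 1 <= k <= n."""
    return (1 - Fraction(1, 2 ** k)) / (1 - Fraction(1, 2 ** n))


if __name__ == "__main__":
    n = 6
    for k in range(1, n + 1):
        # exact, closed form, and the geometric limit 1 - 2^-k as n -> infinity
        print(k, brute_force_cdf(n, k), closed_form_cdf(n, k), 1 - 2.0 ** -k)
```

Exact rational arithmetic via `Fraction` makes the comparison an equality rather than a tolerance check; the last printed column is the $N \to \infty$ limit.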
So the questions that we want to ask are: what is the distribution of $X_N$, and what happens to it as $N$ goes to infinity? This is actually very easy to answer, in the following sense. $X_N$ can be anywhere from $0, 1, 2, 3, \dots$ up to $N - 1$: I'm looking at the $N$th generation, so the meeting place must be at most $N - 1$, and it can go all the way down to 0. Now suppose I want $X_N$ to be less than $K$. At level $K$ I have $2^K$ vertices. Each of these individuals has time $N - K$ left, so each has $2^{N-K}$ descendants by time $N$. The event $X_N < K$ happens exactly when the two sampled vertices have different ancestors at level $K$: I choose two of the $2^K$ vertices at level $K$, look at their descendants, and choose one descendant from each, which gives $2^{N-K} \cdot 2^{N-K}$ choices. So the number of favorable ways of producing $X_N < K$, divided by the total number of choices $\binom{2^N}{2}$, gives the exact probability $P(X_N < K) = \binom{2^K}{2}\, 2^{N-K}\, 2^{N-K} \big/ \binom{2^N}{2}$ for $K = 1, 2, \dots, N$. This is indeed equal to $\frac{2^K(2^K - 1)}{2}\, 2^{2(N-K)}$ divided by $\frac{2^N(2^N - 1)}{2}$, and the 2 in the denominator cancels the 2 in the numerator. >>: [inaudible] so $X_N$ less than [inaudible], so -- >> Krishna Athreya: Right. $X_N$ is less than $K$. >>: So you want to first choose the common -- >> Krishna Athreya: So what I do is I go to level $K$. There are $2^K$ vertices, I choose two of them, and I look at their numbers of descendants at the future time $N$, which is $N - K$ generations later. That's what this calculation is. Now I can pull out $2^K$ from $2^K - 1$, which leaves $1 - 2^{-K}$, and pull out $2^N$ from $2^N - 1$, which leaves $1 - 2^{-N}$. And then I have $2^K \cdot 2^K = 2^{2K}$ in the numerator.
That $2^{2K}$ cancels against $2^{2(N-K)}$, leaving $2^{2N}$, which cancels the $2^{2N}$ in the denominator, and the 2s cancel. All of it cancels, and what is left is $P(X_N < K) = (1 - 2^{-K})/(1 - 2^{-N})$. So this is the exact probability, and it converges as $N$ goes to infinity to $1 - 2^{-K}$. So we have shown that $X_N$ has an exact distribution which is approximately geometric, and in the limit it is a geometric random variable. Now, clearly what we did for the binary tree can be done for any [inaudible] tree, as long as the tree is deterministic. Well, since I have worked in branching processes for a long time, as Yuval said, a natural question is what happens if the tree is not deterministic but a purely random tree. So the question we want to ask is: what if the rooted tree that you have is a Galton-Watson tree, or, to be honest with the Australians, a BGWBP, a Bienaymé-Galton-Watson branching process? Now, you remember how that is generated. You are given a probability distribution $\{p_j\}_{j \ge 0}$, with $p_j \ge 0$ and $\sum_j p_j = 1$. Then you generate a doubly indexed family $\{\xi_{n,j} : j \ge 1,\ n \ge 0\}$ of i.i.d. random variables with distribution $\{p_j\}$. Then you define the population size in the $(n+1)$st generation to be the number of children born to the individuals in the $n$th generation: $Z_{n+1} = \sum_{j=1}^{Z_n} \xi_{n,j}$. So if there are a certain number of individuals in the $n$th generation, each one of them produces children according to this distribution, and $\xi_{n,j}$ is the number of children born to the $j$th parent in the $n$th generation. This works if $Z_n$ is positive. If $Z_n$ is 0, the population is already gone, there's nobody to produce anything, so it stays at 0. So 0 is what is called an absorbing barrier: once you hit 0, you're stuck at 0. Otherwise you can go on.
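A standard consequence of this construction (not spelled out in the talk) is that $q_n = P(Z_n = 0 \mid Z_0 = 1)$ satisfies $q_0 = 0$ and $q_{n+1} = f(q_n)$, where $f(s) = \sum_j p_j s^j$ is the offspring generating function, so the extinction probability in the theorem that follows can be approximated by iterating $f$. A minimal Python sketch, assuming the offspring law is supplied as a finite list `p` with `p[j]` equal to $p_j$ (a hypothetical input format); the function names are illustrative:

```python
def offspring_pgf(p, s):
    """f(s) = sum_j p_j s^j for a finite offspring law p = [p_0, p_1, ...]."""
    return sum(pj * s ** j for j, pj in enumerate(p))


def offspring_mean(p):
    """M = sum_j j p_j."""
    return sum(j * pj for j, pj in enumerate(p))


def prob_extinct_by(p, n):
    """q_n = P(Z_n = 0 | Z_0 = 1), from q_0 = 0 and q_{k+1} = f(q_k)."""
    q = 0.0
    for _ in range(n):
        q = offspring_pgf(p, q)
    return q


if __name__ == "__main__":
    for p in ([0.5, 0.25, 0.25], [0.25, 0.25, 0.5]):
        print("mean", offspring_mean(p), "P(extinct by n = 500)", prob_extinct_by(p, 500))
```

For `p = [0.25, 0.25, 0.5]` (mean 1.25) the iterates approach the smaller root $1/2$ of $f(s) = s$, while for `p = [0.5, 0.25, 0.25]` (mean 0.75) they approach 1, in line with the classification by the mean.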
So then the following is a well-known theorem. It goes back to Galton himself, and then to [inaudible] and Watson, who produced a wrong solution: the probability that $Z_n = 0$ for some $n \ge 1$, given $Z_0 = 1$, is equal to 1 if the mean number of children produced per parent is less than or equal to 1 (excluding the trivial case $p_1 = 1$), and is strictly less than 1 if this mean is greater than 1. So that's a very well-known result: the mean number of children produced has a role to play in whether the tree survives for an indefinite time or terminates in a finite time. There are many more results, and here is a classification, where $M$ is the mean, the quantity there. $1 < M < \infty$ is called supercritical, $M = 1$ is called critical, and $M < 1$ is called subcritical. And I'm going to discuss one more case, which has not been discussed in my book or in any other book: the case $M = \infty$, called [inaudible], the explosive case. I'll talk about that aspect. All right. So now let me first describe the results for the cases which have been well studied in the literature. Take a supercritical branching process, and let's assume that there is no extinction, that is, $p_0 = 0$. Here is the first result that we can state. Theorem 1: let $p_0 = 0$, so there is no extinction, and let the mean number of children born to one parent be finite. Since I'm picking only two individuals at random from the $N$th generation, and that generation is not going to be dead but growing very rapidly, the sampling always makes sense. Then consider the probability that the coalescence time $X_{N,2}$, the generation number at which the lines of these two randomly chosen individuals meet, is less than $K$, conditioned on the tree itself.
So, in other words, remember there are two sources of randomness. The tree itself is randomly generated, and then I'm injecting randomness by sampling on the vertices. Suppose the tree is generated in the next room, and you bring the tree here and then do the sampling; that is what this conditioning means. Given the tree $T$, you look at the quantity $P(X_{N,2} < K \mid T)$. Its limit as $N$ goes to infinity exists, and it is a function $\pi_K(T)$ of $K$ and the tree, for $K = 1, 2, \dots$. What is more, for almost all trees this is a proper distribution: $\pi_K(T)$ converges to 1 as $K$ goes to infinity. So, in particular, the limiting distribution of $X_{N,2}$ exists, and it depends on the tree. And of course, by the bounded convergence theorem, the unconditional limit also exists: the probability that $X_{N,2} < K$, averaged over all trees, converges, because the conditional probability is bounded by 1, so $P(X_{N,2} < K)$ converges to $\pi_K = E[\pi_K(T)]$. So this is Theorem 1, when $M$ is finite but greater than 1: the supercritical case. If I have time, I will indicate the proof for you. Then let's look at the critical case. Here is a problem. In the critical case, we know that the tree is going to die out with probability 1. But I want to do some sampling. I'm a mathematician, so I will assume that the tree is not dead; that is, I'm conditioning on an event whose probability is actually going down to zero. I'm not the first one to do this. [inaudible] did this. [inaudible] did this way back in the early '40s. So the critical case is $M = 1$. Of course $M = 1$ is possible with $p_1 = 1$, but that's a purely deterministic tree: everybody produces exactly one child, and we want to rule that out. So let's assume $p_1 < 1$; if $p_1 = 1$, there is no extinction.
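Theorem 1 is a statement conditional on the tree, and a small simulation can make that two-stage randomness concrete: grow one tree, then sample many pairs from its $N$th generation. The Python sketch below is an illustration under assumptions chosen here, not the talk's method: the offspring law is a finite list `p` with $p_0 = 0$, all function names are hypothetical, and $P(X_{N,2} < K \mid T)$ is estimated by Monte Carlo over pairs drawn without replacement.

```python
import random


def grow_tree(p, n, rng):
    """parents[g][i] = index (in generation g-1) of the parent of individual i of generation g."""
    parents = [[]]  # generation 0: the root, which has no parent
    size = 1
    for _ in range(n):
        counts = rng.choices(range(len(p)), weights=p, k=size)  # offspring of each parent
        generation = [parent for parent, c in enumerate(counts) for _ in range(c)]
        parents.append(generation)
        size = len(generation)
    return parents


def meeting_generation(parents, i, j):
    """Trace individuals i != j of the last generation back until their lines meet."""
    g = len(parents) - 1
    while i != j:
        i, j = parents[g][i], parents[g][j]
        g -= 1
    return g


def tree_conditional_cdf(parents, k, pairs, rng):
    """Monte Carlo estimate of P(X_{N,2} < k | tree), pairs sampled without replacement."""
    size = len(parents[-1])
    if size < 2:
        raise ValueError("need at least two individuals in the last generation")
    hits = sum(meeting_generation(parents, *rng.sample(range(size), 2)) < k for _ in range(pairs))
    return hits / pairs


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):  # three independent trees with p_0 = 0 and mean 1.5
        tree = grow_tree([0.0, 0.5, 0.5], 14, rng)
        print([round(tree_conditional_cdf(tree, k, 4000, rng), 3) for k in range(1, 6)])
```

Each printed row approximates $\pi_1(T), \dots, \pi_5(T)$ for one tree; the rows typically differ from tree to tree, which is the dependence on $T$ in the theorem. With the deterministic law `[0.0, 0.0, 1.0]` the same code reproduces the binary-tree value $P(X_N < 1) \approx 1/2$.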
The same: everybody produces one child, and so on and so forth. Okay. In this case, what is your intuition about $X_{N,2}$? Well, we have to condition on the fact that at time $N$ the population is not extinct and, in fact, has at least two individuals. Fortunately, [inaudible] proved a very beautiful result long ago, and it is the following. It requires a finite second moment hypothesis. So let me quote the result for you. Suppose $M = 1$, $p_1 < 1$, and the offspring variance $\sigma^2$ is finite. If all three of these conditions hold, then the survival probability is going to die like $1/N$, and the population size, given survival, is going to be of order $N$. Precisely, number one: the probability that $Z_N$ is positive, that the process is not extinct, has to go to 0, but $N$ times that probability converges to $2/\sigma^2$ as $N$ goes to infinity. This is something that [inaudible] had established. Number two: if the process is not extinct, how big is the population? Roughly speaking, of order $N$, which is distinctly different from the supercritical case, where the population explodes at a geometric rate. It turns out that $P(Z_N/N \le x \mid Z_N > 0)$ converges to $1 - e^{-2x/\sigma^2}$ for any $x > 0$. So he proved this result. >>: [inaudible]? >> Krishna Athreya: Hmm? >>: The second one is [inaudible]. >> Krishna Athreya: Yeah, well, [inaudible] was his student. It was not -- the results are [inaudible]. So we did some research, and eventually we had [inaudible]. But you are right. Well, again, there are two [inaudible]. >>: Right. >> Krishna Athreya: So we have to be [inaudible] which one. I thought I'll [inaudible].
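Both limits can be checked numerically for one concrete critical law. The Python sketch below assumes binary splitting, $p_0 = p_2 = 1/2$ (an illustrative choice with $M = 1$ and $\sigma^2 = 1$, not taken from the talk). For this law the survival probability $u_n = P(Z_n > 0)$ obeys $u_{n+1} = u_n - u_n^2/2$, which follows from $P(Z_{n+1} = 0) = f(P(Z_n = 0))$ with $f(s) = (1 + s^2)/2$, so $n u_n$ should approach $2/\sigma^2 = 2$. For the conditional law of $Z_n/n$ the sketch simulates the process directly, using that here $Z_{n+1} = 2 \cdot \mathrm{Binomial}(Z_n, 1/2)$. Function names are hypothetical.

```python
import math
import random


def survival_probability(n):
    """u_n = P(Z_n > 0) for binary splitting (p_0 = p_2 = 1/2), via u_{k+1} = u_k - u_k**2 / 2."""
    u = 1.0  # u_0 = 1 because Z_0 = 1
    for _ in range(n):
        u -= u * u / 2
    return u


def simulate_z(n, rng):
    """One draw of Z_n for binary splitting started from Z_0 = 1."""
    z = 1
    for _ in range(n):
        if z == 0:
            break  # 0 is absorbing
        # Each of the z individuals has 2 children with prob. 1/2, else none;
        # Binomial(z, 1/2) is the number of one-bits among z fair random bits.
        z = 2 * bin(rng.getrandbits(z)).count("1")
    return z


def conditional_cdf(n, x, runs, seed=0):
    """Empirical P(Z_n / n <= x | Z_n > 0) from `runs` independent processes."""
    rng = random.Random(seed)
    survivors = [z for z in (simulate_z(n, rng) for _ in range(runs)) if z > 0]
    return sum(z / n <= x for z in survivors) / len(survivors)


if __name__ == "__main__":
    n = 10_000
    print("n * P(Z_n > 0) =", n * survival_probability(n))  # should be near 2 / sigma^2 = 2
    print("P(Z_50/50 <= 0.5 | Z_50 > 0) ~", conditional_cdf(50, 0.5, 50_000),
          "vs limit", 1 - math.exp(-2 * 0.5))  # exponential limit with sigma^2 = 1
```

At $n = 50$ the empirical conditional probability is still some distance from the limit $1 - e^{-1} \approx 0.632$, partly because $Z_n$ only takes even values for this law; the agreement improves as $n$ grows.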
So then it turns out that in the critical case there is one more result that we need, which was not proved by [inaudible] but which I proved a few years ago, and that is the following. Here is time 0 and here is time $N$. Suppose at time $N$ the population is not extinct; we condition on that event, which has very small probability. Then go to level $K$. The population is not extinct by time $N$, therefore it cannot be extinct at time $K$. There are a large number of individuals at that time, say $Z_K$, and look at the lines of descent initiated by each one of them. Each line has a certain number of individuals left at time $N$; some of the lines could be dead, some are not. So now look at the following process: $B_N$ is the collection of values $Z^{(K,i)}_{N-K}/(N-K)$ over those $i = 1, \dots, Z_K$ with $Z^{(K,i)}_{N-K} > 0$, where $Z^{(K,i)}_{N-K}$ is the size at time $N$ of the line started by the $i$th individual at level $K$. In other words, I go to level $K$, where there are $Z_K$ individuals, and each of them initiates a line of descent. I look at the population size at time $N$ in each of those lines, divide it by $N - K$, and keep only those lines that are not extinct by time $N$. This is a random collection of points on the nonnegative half-line, so it's what is called a point process. Question: what is happening to this point process? And I show the following (you can put my name on it if you like); this is the third result, for $B_N$ conditioned on $Z_N \ge 2$, or $Z_N \ne 0$, that's good enough too. This is a point process, so you have to go to the space of point processes and talk about weak convergence in the space of point processes. You can use the Laplace functional, and so on. >>: Fixing $K$, or is $K$ also varying? >> Krishna Athreya: Yeah, that's a good point. $K$ has to go to infinity and $N$ has to go to infinity. So let me write it down.
Let $N$ go to infinity and $K$ go to infinity such that $K/N$ goes to $u$, where $0 < u < 1$; so $K$ has to be roughly of the same order as $N$. Okay? Suppose that happens. Then, and this is the third result, $B_N$ conditioned on $Z_N$ being nonzero converges in distribution to a random point process. Its points are nonnegative quantities, and the limit is $\{\eta_1, \eta_2, \dots, \eta_{N_u}\}$, where the $\eta$'s are exponentially distributed and the number of points $N_u$ has a certain well-defined distribution. Let me write down the precise result, because it is very useful and comes up in another context also. It is not in this paper; it is in this paper. Yeah. I don't remember where it is. There it is. $B_N$ converges to the point process $\{\eta_1, \eta_2, \dots, \eta_{N_u}\}$, where the $\eta_i$ are independent, exponentially distributed random variables and $N_u$ is geometric with parameter $u$. So you generate a sequence of i.i.d. exponential random variables, then generate an independent geometric random variable $N_u$ with parameter $u$, and keep only the first $N_u$ of these random variables. This is a random point process on the nonnegative half-line, and that's what $B_N$ converges to. It's not easy to prove; it requires the Laplace functional. There is a book by this mathematician, what is his name? I keep forgetting. I will give the reference. Kallenberg. >>: Kallenberg. >> Krishna Athreya: Kallenberg has a very nice method, the Laplace functional method, to prove this, so you have to use that. So here, in this case, you look at $X_{N,2}$ conditioned on nonextinction: the population is not extinct.
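The limiting point process is easy to sample, and it leads directly to the formula for $H$ that the talk writes down next. The Python sketch below rests on two assumptions made here, not stated in the talk: the geometric law is taken as $P(N_u = j) = (1 - u)u^{j-1}$, $j \ge 1$, the convention under which $H(0+) = 0$ and $H(1-) = 1$; and $\phi$ is read as the chance that two individuals drawn from the total mass $\sum_i \eta_i$ fall in the same line, namely $\sum_i \eta_i^2 / (\sum_i \eta_i)^2$. Because $(\eta_1, \dots, \eta_j)/\sum_i \eta_i$ is uniform on the simplex (Dirichlet$(1, \dots, 1)$), $\phi(j) = 2/(j + 1)$, and summing the geometric series gives $H(u) = 1 - 2(1-u)\,(-\log(1-u) - u)/u^2$, which the Monte Carlo estimate should match. All function names are hypothetical.

```python
import math
import random


def sample_limit_points(u, rng):
    """One draw of {eta_1, ..., eta_{N_u}}, with P(N_u = j) = (1-u) u^(j-1) and eta_i i.i.d. Exp(1)."""
    n_u = 1
    while rng.random() < u:
        n_u += 1
    return [rng.expovariate(1.0) for _ in range(n_u)]


def same_line_fraction(points):
    """sum eta_i^2 / (sum eta_i)^2: probability that two independent picks, each choosing
    point i with probability eta_i / sum(eta), choose the same point."""
    total = sum(points)
    return sum(x * x for x in points) / (total * total)


def h_monte_carlo(u, samples, seed=0):
    """Estimate H(u) = 1 - E[phi(N_u)] by sampling the limiting point process."""
    rng = random.Random(seed)
    mean_phi = sum(same_line_fraction(sample_limit_points(u, rng)) for _ in range(samples)) / samples
    return 1.0 - mean_phi


def h_closed_form(u):
    """1 - E[phi(N_u)] with phi(j) = 2 / (j + 1), summed in closed form, for 0 < u < 1."""
    return 1.0 - 2.0 * (1.0 - u) * (-math.log1p(-u) - u) / (u * u)


if __name__ == "__main__":
    for u in (0.1, 0.5, 0.9):
        print(u, round(h_monte_carlo(u, 20_000), 4), round(h_closed_form(u), 4))
```

The closed form behaves like $u/3$ near $u = 0$ and tends to 1 as $u \to 1$, consistent with $H$ being an honest, nontrivial distribution on $[0, 1]$.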
Now for $X_{N,2}$: you take two individuals at random in the $N$th generation, trace their lines back, and see when they meet. The question is what is happening to $X_{N,2}$. Notice that in the supercritical case $X_{N,2}$ is way back in time. Here it is not way back in time; it is roughly of order $N$: $P(X_{N,2}/N < u)$ converges to some function $H(u)$, with $H(0+) = 1$, $H(1-) = 1$, and $H$ absolutely continuous on $(0, 1)$. So there is a proper distribution function $H$ such that $X_{N,2}/N$ converges in distribution to it. >>: $H(0+)$ is 1? >> Krishna Athreya: Well, $X_{N,2}/N$ has to be between 0 and 1, so the random variable that it converges to lives on $[0, 1]$. >>: Yeah, but $H(0+)$ is 0 -- >> Krishna Athreya: It is 0. Sorry, not 1. Of course. Thank you. I meant 0. So $H$ is an honest distribution sitting on $[0, 1]$, and it is a nontrivial distribution. So the coalescence takes place somewhere in the middle of the interval: it's neither close to the present nor close to the distant past. >>: Can you write down what $H$ is? >> Krishna Athreya: Yes, I can write it down. Well, you asked for it, so I will write it down, but it's a really complicated thing. It is going to involve this random variable: $H(u) = 1 - E[\phi(N_u)]$, where $N_u$, remember, is a geometric random variable with parameter $u$, and $\phi(j) = E\big[\sum_{i=1}^{j} \eta_i^2 \big/ \big(\sum_{i=1}^{j} \eta_i\big)^2\big]$, where $\eta_1, \eta_2, \dots, \eta_j$ are i.i.d. exponential(1) random variables. So the formula for $H$ is not very simple; it comes from this point process idea. Now, notice that this quantity is an interesting problem in itself. Take $X_i$ i.i.d. random variables; so this is a problem -- >>: [inaudible] use a different pen. >> Krishna Athreya: This one is -- hmm?
>>: Maybe you want to use a different pen. That pen is too -- >> Krishna Athreya: All right. Okay. So I have the cover here. So here is an interesting problem. Let $X_i$ be i.i.d. positive random variables, and look at $\sum_{i=1}^{n} X_i^2 \big/ \big(\sum_{i=1}^{n} X_i\big)^2$. The behavior of this sequence was studied by a number of people at Bell Labs, for example, long ago. Notice that if the random variables have a finite second moment, the numerator is going to be like $n$ and the denominator like $n^2$, so the whole thing goes to 0. But if the $X_i$ have heavy tails, if $X_i$ is in the domain of attraction of a stable law of order $\alpha < 1$, then the numerator and denominator are roughly of the same order of magnitude, and the ratio does not go to zero. That kind of thing comes up here. But here there's no problem, because the $\eta_i$ are i.i.d. exponential(1) random variables, so everything is okay: $\phi(j)$ dies off at most like $1/j$, and that's enough to make $H$ a proper distribution. So in the supercritical case, coalescence takes place way back in time. In the critical case, coalescence takes place neither close to the infinite past nor close to the present, but somewhere in the middle. And then there is the so-called subcritical case, where $M$ is less than 1. So, Theorem 3, the subcritical case. Here $M$ is less than 1; remember, $M$ is the mean number of children produced per parent, and if it is less than 1, the population just dies out. So again there is the other condition, and under it, consider the probability that $N - X_{N,2}$ is less than $K$, conditioned on $Z_N$ being positive (in fact at least 2, so that two individuals can be sampled). So the coalescence is very close to the present. It is not way back in time.
Remember, in the subcritical case, the number of individuals alive at time $N$, given survival, is going to be a proper random variable. So this probability converges to $\pi_K$ for every $K$ with $1 \le K < \infty$, and $\pi_K$ goes to 1 as $K$ goes to infinity. So, to summarize, we have the following situation so far. If the mean is greater than 1 and the population is not extinct, coalescence is going to be way back in time. If the mean is 1 and the population is not extinct, the coalescence is going to be somewhere in the middle, roughly of order $uN$, where $u$ is somewhere between 0 and 1. And if $M$ is less than 1, the coalescence is going to be very close to the present. So now I want to consider one more case, the explosive case, and then I would like to apply these arguments to what are called branching random walks. So, the explosive case. The explosive case is when $M$ is infinite: each person produces a random number of children whose mean is infinite. But it turns out that I need one more hypothesis, in addition, on the tail probability $\sum_{j > x} p_j$. It goes to 0 as $x$ goes to infinity, but I want it to go to 0 reasonably slowly: $\sum_{j > x} p_j \sim x^{-\alpha} L(x)$, with $L$ a slowly varying function and $0 < \alpha < 1$. Slowly varying means that $L(cx)/L(x)$ goes to 1 as $x$ goes to infinity, for every $0 < c < \infty$. For example, $\log x$ is slowly varying, but no nonzero power of $x$ is: $\sqrt{x}$ is not slowly varying as $x$ goes to infinity. So that's my hypothesis about the distribution $\{p_j\}$.
If this is so, then, assuming $p_0 = 0$, so that no extinction at all is possible, again in this case the coalescence is very close to the present: the probability that $N - X_{N,2}$ is less than $K$ converges to $\pi_K$, and $\pi_K$ goes to 1 as $K$ goes to infinity. In other words, in a rapidly growing population, coalescence takes place very close to the present. In a reasonably growing population, the supercritical case, coalescence takes place very close to the beginning. In the critical case, it's somewhere in the middle. In the subcritical case, it is again close to the present. Now, in the explosive case something else interesting happens. Here is a [inaudible] result. This is due to P. L. Davies, a Dutch mathematician, and David Grey, an English mathematician, and it's the following. Assume $M = \infty$ and $\{p_j\}$ belongs to the domain of attraction of a stable law of [inaudible], namely this condition; this is a necessary and sufficient condition, proved by Feller, for $\{p_j\}$ to be in the domain of attraction of a stable law. If this is the case, then take two individuals and have them produce branching processes independently of each other: one of them will wipe out the other. It goes as follows. The population size of the $(n+1)$st generation is $Z_{n+1} = \sum_{j=1}^{Z_n} \xi_{n,j}$: the population is made up of the individuals produced by the people in the $n$th generation. Now, these random variables are independent and identically distributed, and independent of $Z_n$. So we divide by $Z_n^{1/\alpha}$ and multiply by $Z_n^{1/\alpha}$, and take logs on both sides. Calling $V_n = Z_n^{-1/\alpha} \sum_{j=1}^{Z_n} \xi_{n,j}$ for the moment, we get $\log Z_{n+1} = \log V_n + \frac{1}{\alpha} \log Z_n$.
Multiplying by $\alpha^{n+1}$, that in turn gives $\alpha^{n+1} \log Z_{n+1} = \alpha^{n+1} \log V_n + \alpha^{n} \log Z_n$. I can go down the line, and since $Z_0 = 1$ it becomes $\alpha^{n+1} \log Z_{n+1} = \sum_{j=0}^{n} \alpha^{j+1} \log V_j$. Remember, $\alpha$ is less than 1, so $\alpha^j$ is a geometric sequence and $\sum_j \alpha^j$ converges, while the $V_j$ are essentially identically distributed, or converge in distribution to something. So this would suggest that the series converges. It takes some more work to show that this quantity converges. And if that is so, this is a theorem. Let me write it down as a theorem; what I said is an indication of the proof, but it's not really a proof yet. Theorem: let $M = \infty$ and $\sum_{j > x} p_j \sim x^{-\alpha} L(x)$, with $L$ slowly varying at infinity and $0 < \alpha < 1$. Then $\alpha^n \log Z_n$ converges with probability 1 to a random variable, say $U$, and $P(U = 0) = 0$; $U$ is a continuous random variable. This says basically, on an intuitive basis, that $\alpha^n \log Z_n$ is approximately $U$. If that is the case, $\log Z_n$ is approximately $\alpha^{-n} U$. Since $\alpha$ is less than 1, $1/\alpha$ is bigger than 1; call it $\rho$, so $\log Z_n \approx \rho^n U$. Then the population size $Z_n$ is like $e^{U \rho^n}$. That means the population grows unlike the [inaudible] case: it is super-exponential. Well, we know the offspring distribution doesn't have a finite mean, so this is not that much of a surprise. But here is the interesting thing.
Suppose you start one line of descent and I start one line of descent, both with the same offspring distribution. Then $Z_n^{(1)}$ is going to be like $e^{U_1 \rho^n}$ and $Z_n^{(2)}$ like $e^{U_2 \rho^n}$. $U$ has a continuous distribution, so $U_1$ cannot be equal to $U_2$. This means that if I take the ratio of these two, it is going to be like $e^{(U_1 - U_2)\rho^n}$, which goes either to plus infinity or to 0. So one will wipe out the other. This may explain why the coalescence is very close to the present: if you take two individuals, very likely they are coming from the, you know, same ancestor. That's a sort of proof; it's an economist's proof, not a mathematician's proof, but that's roughly what the idea is. So now I've described some basic results for what's called coalescence. Coalescence here is the problem of when two lines of descent meet, going back in time. Now I want to give an application of some of this, so you can see where to go; there are a number of directions. You can think about what happens for continuous-time processes, for two-type branching processes, [inaudible] branching processes, and so on. Lots of things. So if you have good students, put them on it. But I want to go in a slightly different direction and talk about what are called branching random walks. This is the problem. I kept it outside for too long. Okay. Branching random walks. What is a branching random walk? Well, it's a branching process plus a random walk imposed on it. You start with the ancestor at time 0, and this ancestor has a random number of children. The ancestor is located at some site $x_0$. But the children migrate from where the parent is and go to new places: they go to $x_0 + X_{1,1}$, $x_0 + X_{1,2}$, and so on up to $x_0 + X_{1,Z_1}$.
Here $Z_1$ is the number of children born to this parent. Okay? And then the children of these children do the same thing: they migrate from where their parent lives to a new place, and so on. So let $\zeta_N$ be the collection of actual positions of all the individuals in the $N$th generation, $X_{N,1}, X_{N,2}, \dots, X_{N,Z_N}$. This is the point process generated by the positions of the $Z_N$ individuals in the $N$th generation. Question: what is going to happen to this? This question makes sense only if the population survives, so let's assume that $p_0 = 0$ and $1 < M < \infty$ for the moment, and ask what happens to this point process as $N$ goes to infinity. There are two sources of randomness: the tree itself is random, and the jumps are also random. So we need to make some assumptions about the jump distribution; I've already mentioned my assumptions about the tree structure. So let $p_0 = 0$, and consider the random vector $(X_{1,1}, X_{1,2}, \dots, X_{1,Z_1})$. Suppose I make a simple assumption: its components are all identically distributed with mean zero and finite variance. That's not such a restriction: [inaudible] subtract off the mean, and $E X_{1,1}^2 = \sigma^2$ is finite. So now the question is, what is going to happen to the empirical distribution? What I'm looking at is $\frac{1}{Z_N} \sum_{i=1}^{Z_N} \mathbf{1}(X_{N,i} \le x)$, the proportion of individuals to the left of $x$. So here is the idea for analyzing this: you have a large number of individuals located all over the place, and you want to know what happens to the empirical distribution of the points on the real line, or whatever space you are in.
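The empirical distribution just described is straightforward to simulate, and the theorem stated next predicts that its value at $\sigma y \sqrt{N}$ approaches $\Phi(y)$. The Python sketch below works under illustrative assumptions not taken from the talk: binary splitting ($p_2 = 1$), displacements that are independent standard normal steps even among siblings ($\sigma = 1$), and hypothetical function names. With Gaussian steps each individual's position is exactly $N(0, N)$, so the average of the proportion over many trees equals $\Phi(y)$; the theorem says more, namely that for a single large tree the proportion itself is close to $\Phi(y)$, and the spread across trees shows how far that is from true at small $N$.

```python
import random
from statistics import NormalDist, mean, pstdev


def brw_positions(p, n, rng, sigma=1.0):
    """Generation-n positions: each child sits at its parent's position plus an independent N(0, sigma^2) step."""
    positions = [0.0]  # the ancestor starts at x_0 = 0
    for _ in range(n):
        nxt = []
        for x in positions:
            k = rng.choices(range(len(p)), weights=p)[0]  # number of children of this individual
            nxt.extend(x + rng.gauss(0.0, sigma) for _ in range(k))
        positions = nxt
    return positions


def fraction_left(positions, y, n, sigma=1.0):
    """Proportion of individuals located at or to the left of sigma * y * sqrt(n)."""
    threshold = sigma * y * n ** 0.5
    return sum(x <= threshold for x in positions) / len(positions)


if __name__ == "__main__":
    rng = random.Random(0)
    n, y = 12, 1.0
    fractions = [fraction_left(brw_positions([0.0, 0.0, 1.0], n, rng), y, n) for _ in range(20)]
    print("mean over trees", mean(fractions), "spread", pstdev(fractions), "Phi(y)", NormalDist().cdf(y))
```

Most of the tree-to-tree spread comes from the first few steps, which all individuals share; since those steps are a vanishing fraction of $N$ steps, their effect disappears after dividing by $\sqrt{N}$.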
Now, notice that if I pick two individuals at random from this collection and trace their lines back, they're going to meet way back in time, which means that they have a lot of time to evolve independently. That means their positions are going to be like ordinary random walks, and independent as well. And therefore you can look at the empirical distribution, namely this particular quantity. So this is a theorem. Under these hypotheses, look at the number of individuals located to the left of $\sigma y \sqrt{N}$, divided by the total number of individuals $Z_N$: $\frac{1}{Z_N} \sum_{i=1}^{Z_N} \mathbf{1}(X_{N,i} \le \sigma y \sqrt{N})$. This is the proportion of individuals in the $N$th generation who are located to the left of $\sigma y \sqrt{N}$. Not surprisingly, this converges to the standard normal distribution function $\Phi(y)$, in probability as well as in mean square. The reason is that if I take two individuals at random and trace their lines back, they're going to meet way back in time, so essentially their lines are developing independently. >>: Is this also almost sure? >> Krishna Athreya: Well, yeah, that's a little bit harder, but yes, it holds almost surely. So this is one result. There are some variations of this: if you don't want to assume a finite second moment but assume that the displacements are in the domain of attraction of a stable law, then there is a corresponding version, but I will not go into that. One last result that I want to state is what happens in the explosive case. So, again, take the branching random walk with $p_0 = 0$, $M = \infty$, and $\{p_j\}$ in the domain of attraction of a stable law of order $\alpha$ [inaudible]. In this case, notice what is happening.
If we take two individuals at random from this collection, their lines of descent are going to meet much sooner, very close to the present. So they have a large portion in common, and only a small portion which is not common, and that part is going to get wiped out by the denominator $\sqrt{N}$. So what happens is this; this is the theorem. Assume again that $E X_{1,1} = 0$ and $E X_{1,1}^2 = \sigma^2$ is finite. Look at the number of individuals to the left of $\sigma y \sqrt{N}$ divided by $Z_N$, and consider this as a stochastic process indexed by $y$ over the whole real line. For each fixed $y$, this converges to a random variable. I want to claim that the process converges to $\{\delta_y : -\infty < y < \infty\}$, where $\delta_y = \mathbf{1}(G \le y)$ is the indicator function of the event that a standard normal random variable $G \sim N(0, 1)$ is at most $y$. And here I have deliberately left open in what sense I mean this. Well, this is a family of random variables indexed by $y \in (-\infty, \infty)$, and it converges for every finite collection $y_1, y_2, \dots, y_k$. But these processes live in the [inaudible] space on $(-\infty, \infty)$, so it would be nice to prove that the convergence takes place in the [inaudible] topology as well. For that, [inaudible] convergence plus tightness is required. I have not been able to prove tightness yet, but I've been able to prove the finite [inaudible] convergence. So this converges in distribution to $\delta_y$, where to get $\delta_y$ you go to the next room, generate a standard normal random variable, bring it here, and then construct the trajectory. Notice that if I give you the value of $G$, the trajectory is going to be zero for a long time and then jump up to 1. So I have proved convergence in distribution for any finite number of coordinates $y_1, y_2, \dots, y_k$.
But I would like to prove that this is tight as well, which I have not been able to do. >>: Then this is the number of particles that have moved less than sigma [inaudible], and they have this explosive -- >> Krishna Athreya: Yeah. So the -- remember, the coalescence takes place very close to the present. That means the -- yeah. So this is the super -- >>: Close to the -- coalescence happens close to the -- >> Krishna Athreya: Present. That means that much of the trajectory -- the individuals have a lot of things in common. Only the last bit is going to be different. All right. So let me see. What time do I have? >>: 4:20. >> Krishna Athreya: I'm supposed to stop now, right? >>: Yeah. Well -- you can take a few more minutes if you -- >> Krishna Athreya: Okay. So let me -- where is the original paper? Right here. Here we are. There are many, many directions you can go in. For example, one direction would be what happens in the two-type case or a multi-type case. In the multi-type case, you choose the two individuals at random at the Nth level. They could belong to two different types, and their common ancestor could be of yet another type. What would happen to these things? And then you can ask what happens if individuals live a random length of time; in other words, continuous-time branching processes. And then there is a problem that my brother's son [inaudible], who is a probabilist as well, also at the Statistical Institute in Bangalore, and [inaudible] and I worked on. We considered the following problem. You have a branching process, and each individual has a certain random length of life. And during their lifetime they move according to a Markov process. So this is a branching random walk, or rather a branching Markov process, as it were, and then we again look at the distribution of the point process generated, and there are some results.
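The branching Markov process just described can be made concrete with a small simulation. This is a sketch under our own assumptions, not the model or the results of the paper mentioned: lifetimes are exponential with rate `rate` (the talk only says they are random), the Markov motion is standard Brownian motion, and the offspring law `p` defaults to binary splitting. `branching_markov_positions` is a hypothetical helper; it returns the positions of the individuals alive at time `t_end`, which is one realization of the point process Athreya refers to.

```python
import numpy as np


def branching_markov_positions(t_end, p=(0.0, 0.0, 1.0), rate=1.0, rng=None,
                               max_pop=100_000):
    """Positions at time t_end of a branching Markov process, started from one
    individual at 0 at time 0.

    Assumptions (ours, for illustration): lifetimes are Exp(rate); during its
    life an individual moves as a standard Brownian motion; at death it is
    replaced by j children with probability p[j], all starting at the parent's
    final position.
    """
    rng = np.random.default_rng() if rng is None else rng
    alive = []                          # positions of individuals alive at t_end
    stack = [(0.0, 0.0)]                # pending individuals: (birth time, birth position)
    while stack:
        birth, x = stack.pop()
        death = birth + rng.exponential(1.0 / rate)
        end = min(death, t_end)
        # Brownian displacement over [birth, end] is N(0, end - birth).
        x_end = x + np.sqrt(end - birth) * rng.standard_normal()
        if death >= t_end:
            alive.append(x_end)         # still alive at t_end
            continue
        k = rng.choice(len(p), p=p)     # number of children at death
        stack.extend([(death, x_end)] * int(k))
        if len(stack) + len(alive) > max_pop:
            raise RuntimeError("population exceeded max_pop")
    return np.array(alive)


if __name__ == "__main__":
    pts = branching_markov_positions(5.0, rng=np.random.default_rng(7))
    print(len(pts), "individuals alive at t = 5; mean position", pts.mean())
```

Exponential lifetimes keep the bookkeeping simple; a general lifetime distribution would only change the line that draws `death`.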
And there we -- I -- that paper was written long before I did this work on coalescence. So we have results only in the finite-mean case; the infinite-mean case is open, and a number of problems are open. I have listed a whole bunch of open problems in that paper, which I wrote for a conference in Spain about six months ago, so I could describe them to you. But there I will stop. >> Yuval Peres: Thank you very much. [applause] >> Yuval Peres: So are these papers -- >> Krishna Athreya: Yes. >> Yuval Peres: Are those papers on the arXiv, or where can -- >> Krishna Athreya: I don't know how to do that, but it is -- >> Yuval Peres: On your Web page? Where can you find it? >> Krishna Athreya: Yeah, my son was able to find all of them there. You know, I told him -- he did something, and then out showed up all these papers. But I don't know how to do it myself, but -- >> Yuval Peres: Well, then we should -- >> Krishna Athreya: -- he made -- do you have copies of all my papers or not yet? >> Yuval Peres: [inaudible] Yeah, you can download them. Find them online, you can find them. >> Krishna Athreya: Yeah, actually, so on the last page of this there are references, and I can at least write down the references so you can take a look. By the way, there's a beautiful paper by Laggal [phonetic], extension theory and random trees, where they also talk about the coalescence problem. And that appeared in Statistics or Stochastic Processes and their Applications. And there's another paper by [inaudible] Lambert, coalescence times for branching processes, [inaudible] probability. And then [inaudible], the evolving beta coalescence, in [inaudible] probability. And then there's a bunch of papers of mine. So the references are here. >> Yuval Peres: [inaudible] find it. >> Krishna Athreya: All right. Okay. >> Yuval Peres: Thanks. >> Krishna Athreya: Okay. Thank you. >> Yuval Peres: Let's thank Krishna. [applause]