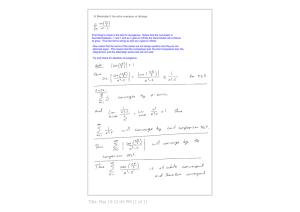

>> Sebastian Bubeck: So today I am very happy to introduce Ewain Gwynne, a brilliant young probabilist from MIT working with Scott Sheffield, who has been an intern here this summer with David and me. He will tell you about our joint project on first passage percolation. >> Ewain Gwynne: Thanks. So today I am going to be talking about a variant of the Eden model, or equivalently of first passage percolation, where edges are weighted by a positively homogeneous function. This is also called the Pólya aggregate model. This is based on joint work with Sebastian Bubeck. I am going to start by introducing the unweighted Eden model and the first passage percolation model, and then I am going to talk about the particular model that I will consider in this talk. So the Eden model is a random growth model on $\mathbb{Z}^d$. It's important in a variety of fields because it can be used to describe things like the growth of bacterial colonies, lichens, and mineral deposits, the diffusion of information through a network, and so forth. To define this model, we start by sampling an edge uniformly at random from the set of all edges of $\mathbb{Z}^d$ which are incident to the origin, and we let $\tilde{A}_1$ be the singleton set consisting of this one edge. Then inductively, if for some positive integer $n$ the set $\tilde{A}_{n-1}$ has been defined, we sample an edge $e_n$ uniformly from the set of all edges which are incident to $\tilde{A}_{n-1}$ but which are not already contained in $\tilde{A}_{n-1}$, and we let $\tilde{A}_n = \tilde{A}_{n-1} \cup \{e_n\}$. So maybe this is what it looks like after 2 stages, and after $n$ stages we get a big cluster of edges, and we add 1 more at each stage. First passage percolation is another random growth model, which in some sense can be viewed as a continuous-time reparameterization of the Eden model. This model is a little bit more complicated to describe, but it is also more tractable mathematically.
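The edge-by-edge growth rule just described can be sketched in a few lines. This is a minimal illustration for $d = 2$, not the code behind the simulations shown in the talk; the helper names (`neighbors`, `edge`, `eden_cluster`) are hypothetical, and the candidate edges are recomputed from scratch at every stage, which is simple but quadratic in $n$.

```python
import random

def neighbors(v):
    """The four lattice neighbors of a vertex v = (x, y) in Z^2."""
    x, y = v
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]

def edge(u, v):
    """Represent an undirected edge as a sorted pair of endpoints."""
    return (u, v) if u <= v else (v, u)

def eden_cluster(n, seed=None):
    """Run n stages of the (unweighted) Eden model on the edges of Z^2.

    At each stage, pick uniformly among the edges that touch a vertex of the
    current cluster but are not yet in the cluster. At stage 1 the only
    cluster vertex is the origin, so the first edge is incident to 0.
    """
    rng = random.Random(seed)
    cluster = set()
    vertices = {(0, 0)}
    for _ in range(n):
        # Candidate edges: incident to the cluster, not yet selected.
        candidates = sorted({edge(u, w) for u in vertices for w in neighbors(u)} - cluster)
        e = rng.choice(candidates)
        cluster.add(e)
        vertices.update(e)  # both endpoints join the vertex set
    return cluster

cluster = eden_cluster(200, seed=0)
```

Because the candidates include every unselected edge touching the cluster, an edge joining two cluster vertices can be chosen, so the cluster can contain cycles, as discussed in the exchange below.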
So to define this model, we start with a collection $(X_e)$, indexed by the edges $e$ of $\mathbb{Z}^d$, of i.i.d. exponential random variables with parameter 1. For a path $\eta$ in the integer lattice, by which I mean a connected sequence of edges, we let the passage time of $\eta$, which we call $T(\eta)$, be the sum of $X_e$ over all of the edges $e$ in $\eta$. For a vertex $v$ of the integer lattice, we define $T(0,v)$, the passage time from 0 to $v$, to be the minimum of $T(\eta)$ over all paths $\eta$ which connect 0 to $v$. Then we define a growing family of subgraphs of the integer lattice, which we call $A_t$, as follows: for each $t$, we let the vertex set of $A_t$ be the set of all vertices $v \in \mathbb{Z}^d$ whose passage time from 0 is less than or equal to $t$, and we let the edge set of $A_t$ be the set of all edges of $\mathbb{Z}^d$ which lie on paths started from 0 whose passage time is less than or equal to $t$. So this gives us a growing family of subgraphs of the integer lattice, parameterized by the positive real numbers. And it turns out that first passage percolation is equivalent to the Eden model in the following sense. Suppose that for each positive integer $n$ we let $t_n$ be the smallest $t$ for which $A_t$ has at least $n$ edges. Then $(A_{t_n})_{n \geq 1}$ is another growing family of edge sets, parameterized by the positive integers, and it has exactly the same law as the Eden model clusters $(\tilde{A}_n)_{n \geq 1}$. This is just an easy consequence of the memoryless property of the exponential distribution. So one of the most fundamental theorems about... Yes? >>: I have a question on this slide. [indiscernible]. >> Ewain Gwynne: No, they don't form a tree, because you are just selecting uniformly from the set of all edges that are adjacent to the current cluster that you haven't selected yet. So in this picture, maybe instead of selecting this red edge we could select this edge here, and then we would form a cycle. >>: Okay, so it's not the edge that connects to the outside?
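Since $T(0,v)$ is a shortest-path distance with random edge lengths, the clusters $A_t$ can be computed with Dijkstra's algorithm. The sketch below is a hypothetical illustration (the name `fpp_passage_times` and the box restriction are assumptions, not from the talk): it works on the box $[-R,R]^2$, which only approximates passage times near the boundary of the box, and samples each $X_e$ lazily the first time the edge is examined. The optional `rate` argument anticipates the weighted variant, where edge $e$ gets an exponential variable with parameter $\mathrm{wt}(e)$.

```python
import heapq
import random

def fpp_passage_times(R, rate=lambda e: 1.0, seed=None):
    """Passage times T(0, v) for first passage percolation on the box [-R, R]^2.

    Each edge e gets an independent Exponential(rate(e)) weight X_e, and T(0, v)
    is the minimum over paths from 0 to v of the sum of X_e along the path.
    Paths leaving the box are ignored, so this approximates the Z^2 quantity.
    """
    rng = random.Random(seed)
    weights = {}

    def weight(u, v):
        e = (u, v) if u <= v else (v, u)
        if e not in weights:
            weights[e] = rng.expovariate(rate(e))  # sampled once per edge
        return weights[e]

    dist = {(0, 0): 0.0}
    heap = [(0.0, (0, 0))]
    done = set()
    while heap:
        t, u = heapq.heappop(heap)
        if u in done:
            continue  # stale heap entry
        done.add(u)
        x, y = u
        for v in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if max(abs(v[0]), abs(v[1])) > R:
                continue
            nt = t + weight(u, v)
            if nt < dist.get(v, float("inf")):
                dist[v] = nt
                heapq.heappush(heap, (nt, v))
    return dist

T = fpp_passage_times(20, seed=0)
A_t_vertices = {v for v, tv in T.items() if tv <= 5.0}  # vertex set of A_t at t = 5
```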
>> Ewain Gwynne: No, it just has to be some edge which has not been hit yet. So one of the most basic theorems about first passage percolation was originally proven by Richardson, with various strengthenings and generalizations proven by Cox and Durrett and by Kesten. It says that there is a deterministic, compact, convex set $\mathbf{A}$, which is symmetric about the origin, such that the first passage percolation clusters converge to $\mathbf{A}$ in the following sense. For each $t$, it holds, except on an event of probability decaying faster than any power of $t$ (which is what I mean by the notation $o_t^\infty(t)$ here), that the vertex set of $A_t$ is approximately equal to $t\mathbf{A} \cap \mathbb{Z}^d$. So although we can get very strong statements about the convergence of the FPP clusters toward $\mathbf{A}$, we know almost nothing about the set $\mathbf{A}$ itself beyond the fact that it is compact, convex, and symmetric about 0. It is not even known, for example, whether or not it is a Euclidean ball, except in dimension greater than or equal to 35. So this is a very poorly understood object, but it's not hard to simulate the set $\mathbf{A}$ by just running a simulation of the Eden model. So here is such a simulation, which was generated by Sebastian using MATLAB, and as you can see the limit shape appears to look like a Euclidean ball, but maybe it's off by a little bit. So it's approximately a Euclidean ball, but it's conjectured that it's not exactly a Euclidean ball. So in this talk I am going to be interested in the following natural variant of the Eden model. Suppose we have a weight function, which we call $\mathrm{wt}$, on the set of all edges of $\mathbb{Z}^d$, so each edge $e$ is assigned a weight $\mathrm{wt}(e)$, and suppose we do the standard construction of the Eden model, except that at each stage, instead of selecting the next edge $e_n$
uniformly, we select each candidate edge $e$ with probability proportional to $\mathrm{wt}(e)$ from among all of the edges which are incident to the previous cluster and not yet in it. As in the case of the ordinary Eden model, this weighted variant of the Eden model is equivalent to a weighted variant of first passage percolation where we have independent exponential random variables on the edges, but instead of all having parameter 1, the parameter of each exponential random variable is given by the weight of the corresponding edge; again, this is just a consequence of the memoryless property of the exponential distribution. So in this talk I am going to focus on the weighted Eden model where the edge weights are given by a function which is positively homogeneous of degree $\alpha$, for some real number $\alpha$. There are a number of reasons for considering this particular variant of the weighted Eden model. First, it's just a natural generalization of the standard Eden model, both for intrinsic mathematical interest and because, in the situations that the Eden model can be used to model (remember, like the growth of mineral deposits, the spread of information, and so forth), it is natural to consider what happens if you allow the growth rate to depend both on the distance to the origin and on the direction in which you are growing. Additionally, it turns out that diffusion limited aggregation, or DLA, which is another important random growth model, is actually equivalent to a weighted variant of the Eden model on a $d$-ary tree where, instead of having weights which depend polynomially on the distance to the origin, we have weights which depend exponentially on the distance to the root. This model was studied by Aldous and Shields and by Barlow, Pemantle, and Perkins.
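The memoryless argument rests on a standard fact: among independent exponential clocks with parameters $w_1, \dots, w_k$, clock $i$ rings first with probability $w_i / \sum_j w_j$, and after it rings the remaining clocks are again fresh exponentials. A quick Monte Carlo check of the first part is sketched below; it is an illustration only, and the function names are hypothetical.

```python
import random

def first_to_ring(rates, rng):
    """Index of the first of independent Exponential(rates[i]) clocks to ring."""
    clocks = [rng.expovariate(r) for r in rates]
    return min(range(len(rates)), key=clocks.__getitem__)

def winner_frequencies(rates, trials=100_000, seed=0):
    """Empirical frequency with which each clock rings first."""
    rng = random.Random(seed)
    counts = [0] * len(rates)
    for _ in range(trials):
        counts[first_to_ring(rates, rng)] += 1
    return [c / trials for c in counts]

# Expected to be close to rates[i] / sum(rates) = [0.125, 0.25, 0.625].
freqs = winner_frequencies([1.0, 2.0, 5.0])
```

In the weighted Eden model the clocks belong to the candidate edges around the current cluster, so the next edge is chosen with probability proportional to $\mathrm{wt}(e)$.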
And finally, recently in the computer science literature, a variant of the weighted Eden model with positively homogeneous edge weights, which is the particular model that we are considering in this talk, was proposed as a protocol for spreading a message while obscuring its source. So these are some reasons to be interested in the model considered in this talk. Now, to formally describe the situation I'm going to consider for the rest of the talk, we fix some real number $\alpha$ and a Lipschitz continuous function $f_0$ from the Euclidean unit sphere to the positive real numbers, and we let $f(z) = |z|^\alpha f_0(z/|z|)$, where $|z|$ is the Euclidean norm of $z$. So $f$ is locally Lipschitz and positively homogeneous of degree $\alpha$. For example, we could take $f$ to be the $\alpha$-th power of some norm on $\mathbb{R}^d$. >>: Is it positively homogeneous because $\alpha$ appears without a minus sign [indiscernible]? >> Ewain Gwynne: No, by positively homogeneous I mean that $f(cz) = c^\alpha f(z)$ for every constant $c > 0$. A function which was homogeneous in the signed sense would also have to satisfy a relation for negative constants, for example $f(-z) = -f(z)$ when $\alpha = 1$, and that's not the case here. >>: [inaudible]. >> Ewain Gwynne: Right, so we consider positively homogeneous rather than signed homogeneous, but $\alpha$ can be positive or negative. So in this talk we are going to consider the weighted FPP model where the weight of each edge $e$ is given by the value of $f$ at the midpoint of that edge, and we call this model $f$-weighted FPP. Note that in the case when $f$ is identically equal to 1, so that $\alpha = 0$, we just get the standard FPP model. And in the case where $d = 1$, so we are just working on the line, and $f(z) = 1/|z|$, we get the Pólya urn model.
So in some sense our model can be viewed as a vast generalization of the Pólya urn model, and this is the source of the name Pólya aggregate. >>: [inaudible]. >> Ewain Gwynne: Yes, sorry, it should be plus one here [i.e., the exponent should be $+1$, so $f(z) = |z|$]. So now that we have introduced the model, I can state the main results of my project with Sebastian. First, in the case where $\alpha < 1$, for any choice of the function $f$ we get a deterministic limit shape in the same sense as for unweighted FPP. We have some simulations in this case. If we take $\alpha = -2$ and $f(z) = |z|_1^{-2}$, the $\ell^1$ norm to the power $-2$, then our limit shape looks like a slight rounding of the $\ell^1$ unit ball. And if we take $\alpha = 0$ and $f(z) = (|z|_1/|z|)^3$, the ratio of the $\ell^1$ norm to the Euclidean norm, cubed, then the restriction of $f$ to the Euclidean sphere, which we called $f_0$, is big in the diagonal directions but smaller in the horizontal and vertical directions, and we get this kind of four-leaf clover shape. All right. So that's what happens in the case when $\alpha < 1$. In the case where $\alpha \geq 1$, things are a bit more complicated, and we need to introduce new notation to describe our results. If $\alpha > 1$ (the slide should say strictly greater, not greater than or equal to), there almost surely exists a finite $t$ for which $A_t$ is infinite, and we denote the smallest such $t$ by $\tau_\infty$. So $\tau_\infty$ is in some sense the time that it takes for the FPP clusters to get to infinity. This is easy to verify and will be an easy consequence of some of the estimates which I will describe later in the talk. >>: [inaudible]. >> Ewain Gwynne: Sorry, what? >>: [inaudible]. >> Ewain Gwynne: So it's an easy estimate to prove.
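Two of the definitions above can be sanity-checked in code. The sketch below builds $f(z) = |z|^\alpha f_0(z/|z|)$ from a given $f_0$, evaluates an edge weight at the edge's midpoint, and checks positive homogeneity for a constant $c > 0$, using an arbitrary non-symmetric $f_0$ chosen only for illustration (so $f(-z) \neq f(z)$ in general). It then revisits the $d = 1$ example with exponent $+1$, $f(z) = |z|$, as corrected in the exchange above: if the cluster covers $[-L, R]$, the two boundary edges have midpoints $R + \tfrac12$ and $-L - \tfrac12$, so the next edge is added on the right with probability $(R + \tfrac12)/(L + R + 1)$, a Pólya urn started from half a ball of each colour. All helper names are hypothetical.

```python
import math
from fractions import Fraction

def make_f(alpha, f0):
    """Return f(z) = |z|**alpha * f0(z / |z|) for z != 0 (|.| is the Euclidean norm)."""
    def f(z):
        r = math.hypot(*z)
        return r ** alpha * f0(tuple(c / r for c in z))
    return f

def edge_weight(f, u, v):
    """Weight of the lattice edge {u, v}: f evaluated at the edge's midpoint.

    Midpoints of lattice edges have a half-integer coordinate, so z != 0.
    """
    return f(tuple((a + b) / 2 for a, b in zip(u, v)))

# Positive homogeneity f(c z) = c**alpha f(z) for c > 0 (illustrative f0).
f = make_f(2.5, lambda w: 1.0 + w[0] ** 2)
z, c = (0.3, -1.2), 4.0
assert math.isclose(f((c * z[0], c * z[1])), c ** 2.5 * f(z))

def extend_right_probability(L, R, alpha=1):
    """d = 1, f(z) = |z|**alpha, cluster = [-L, R]: chance the next edge is on the right."""
    right = Fraction(2 * R + 1, 2) ** alpha  # |midpoint| of the edge {R, R + 1}
    left = Fraction(2 * L + 1, 2) ** alpha   # |midpoint| of the edge {-L - 1, -L}
    return right / (left + right)

# With alpha = 1 this is a Polya urn started from half a ball of each colour.
for L in range(5):
    for R in range(5):
        assert extend_right_probability(L, R) == Fraction(2 * R + 1, 2 * (L + R + 1))
```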
So our first main result in the case when $\alpha > 1$ is the following: for each $\alpha > 1$ there exists some norm $\nu$ on $\mathbb{R}^d$, which may depend on $\alpha$, such that if we take our weight function to be $f = \nu^\alpha$, then almost surely all but finitely many vertices of the cluster $A_{\tau_\infty}$ are contained in some Euclidean cone of opening angle less than $\pi$. So here is a simulation in the regime where $\alpha > 1$. This is with $\alpha = 5$ and $f(z) = |z|^5$, the Euclidean norm to the power $\alpha$. As you can see, we start here and we just go off to infinity in one direction. So this is very different from the case where $\alpha$ is less than or equal to 1. Note, though, that the Euclidean norm is not the norm that we actually prove the result for; the purpose of this picture is just to illustrate what happens in this case. >>: Do you think it's true for the Euclidean norm? >> Ewain Gwynne: Yes, we do think it is true for the Euclidean norm, but it's not one we can actually prove it for. >>: [inaudible]. >> Ewain Gwynne: But not for all norms, as you will see from our next theorem, which says that for any choice of the function $f_0$ (which, remember, is the restriction of $f$ to the unit sphere), there is some small positive constant $c$, depending on $f_0$, such that for any $\alpha$ between 1 and $1 + c$, almost surely the set of vertices of $\mathbb{Z}^d$ not in $A_{\tau_\infty}$ is finite. So all but finitely many vertices of $\mathbb{Z}^d$ are almost surely hit, and in particular the cluster is not contained in a cone of opening angle less than $\pi$. In particular, this tells us that in Theorem 2 we cannot take the same norm for every value of $\alpha$. For example, if we took the Euclidean norm, there would have to be an $\alpha$ slightly bigger than 1 for which the cone containment result is not true. So here we have a simulation in this regime where $\alpha$ is slightly bigger than 1.
As you can see, it doesn't appear to have a deterministic limit shape, because it is growing at different rates in different directions, but on the other hand it is growing at a positive rate in every direction, so it's not going to be contained in some cone. All right. So here is a summary of the results, and I just want to point out that in each of the three results the corresponding object is a deterministic functional of the function $f$ and the standard FPP limit shape $\mathbf{A}$. So even though we don't know $\mathbf{A}$ explicitly, if we did know what $\mathbf{A}$ looked like, we could give an explicit description of the limit shape in the case where $\alpha < 1$, of the norm in the case when $\alpha > 1$, and of the constant $c$ for a given $f_0$. Now that we have stated the main results, we can proceed to the proofs. >>: [indiscernible]. >> Ewain Gwynne: No, we do not know that. It remains an open problem whether, if you fix a norm, the cluster is almost surely contained in a cone for large enough $\alpha$. >>: Can you flash the results again from the previous slide? >>: [inaudible]. >> Ewain Gwynne: It gets to infinity in finite time, so it's always true that if you fix some vertex, then with positive probability there is a path to that vertex with passage time less than the time to infinity. So you are never going to have... Things can always fluctuate by a finite amount. So now for the proofs of our results. The main idea of our proofs is that we are going to approximate $f$-weighted FPP passage times by a certain deterministic metric, which depends on $f$ and on the standard FPP limit shape $\mathbf{A}$. To define this metric, we first define a norm $\mu$, which is just the norm on $\mathbb{R}^d$ whose closed unit ball is $\mathbf{A}$. So $\mu(z)$ is the smallest $r$ for which $z \in r\mathbf{A}$. This gives a norm because $\mathbf{A}$ is compact, convex, and symmetric about 0.
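For a concrete picture of $\mu$: if a convex body is written as $\{y : |\langle a, y\rangle| \le 1 \text{ for all } a \in N\}$ for a finite set $N$ of vectors spanning $\mathbb{R}^d$, then its gauge is $\mu(z) = \max_{a \in N} |\langle a, z\rangle|$, because $z \in r\,\mathbf{A}$ exactly when every $|\langle a, z\rangle| \le r$. Since the true $\mathbf{A}$ is not known explicitly, the sketch below uses stand-in polygons (the $\ell^1$ and $\ell^\infty$ unit balls) purely for illustration; `gauge` is a hypothetical helper.

```python
def gauge(normals):
    """Gauge (Minkowski functional) of A = {y : |<a, y>| <= 1 for all a in normals}.

    For such a compact, convex, origin-symmetric A,
    mu(z) = min{r >= 0 : z in r * A} = max over a of |<a, z>|.
    """
    def mu(z):
        return max(abs(sum(ai * zi for ai, zi in zip(a, z))) for a in normals)
    return mu

# Stand-in shapes (the true FPP limit shape is not known explicitly):
mu_l1 = gauge([(1, 1), (1, -1)])   # A = l1 unit ball, so mu = l1 norm
mu_box = gauge([(1, 0), (0, 1)])   # A = l-infinity unit ball, so mu = max norm
```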
Now, suppose we are given a piecewise linear path $\gamma$ from some interval $[0,T]$ to $\mathbb{R}^d$. We say that $\gamma$ is parameterized by $\mu$-length if for each $t \in [0,T]$, the sum of the $\mu$-lengths of the linear segments that $\gamma$ has traced up to time $t$ is equal to $t$. Okay, so it travels $t$ units of $\mu$-length in $t$ units of time. For such a path $\gamma$, we define the $D$-length of $\gamma$ to be $\int_0^T \frac{dt}{f(\gamma(t))}$. And for $z, w \in \mathbb{R}^d \setminus \{0\}$, we define $D(z,w)$ to be the infimum over all piecewise linear paths $\gamma$ from $z$ to $w$ of the $D$-length of $\gamma$. This $D$ is a metric on $\mathbb{R}^d \setminus \{0\}$, and it extends to a metric on all of $\mathbb{R}^d$ if $\alpha < 1$. It has the following scaling property: for any $z, w \in \mathbb{R}^d$ and any $r > 0$, $D(rz, rw) = r^{1-\alpha} D(z,w)$. This is just an easy consequence of the formula for the $D$-length. All right. The reason why we are interested in this metric $D$ is the following lemma, which is going to play a crucial role in the proofs of our main theorems. What it says is that if we are given any stopping time $\tau$ for the filtration generated by the FPP clusters and any positive integer $m$, then, except on an event of probability which decays faster than any power of $m$, it holds for each vertex $v \in \mathbb{Z}^d$ which is not in the time-$\tau$ cluster and has Euclidean norm proportional to $m$ that $T(0,v) - \tau$ is approximately equal to the $D$-distance from $v$ to $A_\tau$, in the following precise sense. We get these exponents, which tell us how close the approximation is, and then we have an error which is some negative power of $m$. I'm not going to give a fully detailed proof of the lemma because it's a bit technical, but I am going to give a heuristic argument for why we should expect it to be true. So suppose we are given a vertex $u$ in the time-$\tau$ cluster $A_\tau$.
Then we observe that near $u$, our clusters grow like standard unweighted FPP, scaled by $f(u)$ and translated by $u$. By the limit shape result for standard FPP, the limiting shape of this process is the ball of radius $f(u)$ centered at $u$ in the norm $\mu$, which is just $f(u)\mathbf{A} + u$. So for each small $\epsilon > 0$, it holds with high probability that $A_{\tau+\epsilon}$ is approximately equal to the union over all vertices $u \in A_\tau$ of the $\mu$-ball of radius $\epsilon f(u)$ centered at $u$, intersected with $\mathbb{Z}^d$. This is because we just apply this observation and then take the union over polynomially many vertices: the limit shape result holds except on an event of probability decaying faster than any power of $m$, and there are only polynomially many vertices with distance to the origin proportional to $m$, so this approximation holds with high probability. Now, given a positive number $t$, we fix some small $\epsilon$, break up the interval from $\tau$ to $\tau + t$ into increments of size $\epsilon$, and use the definition of the metric $D$; we find that $A_{\tau+t}$ is approximately equal to the $D$-ball of radius $t$ around the vertex set of $A_\tau$, intersected with $\mathbb{Z}^d$. We are just averaging the function $f$ over increments whose size is measured in $\mu$-length. So given any vertex $v$ as in the statement of the lemma, we find that the time it takes to get from $A_\tau$ to $v$ is approximately the $D$-distance from $v$ to $A_\tau$, and that's why the lemma should be true. This picture illustrates what's happening: if we start with a set $A_\tau$, which is this set in blue, then after time $\tau$ it's going to grow like the $D$-balls centered at $A_\tau$.
So if $\alpha > 1$, which is the regime shown in this picture, then it's going to grow faster when we are further away from the origin and slower when we are closer to the origin. All right. So now that we have our main lemma, we can move on to the proofs of the main theorems. First we are going to consider the case where $\alpha < 1$, which, as you may recall, is where we are claiming that there is a deterministic limit shape for the FPP clusters. When $\alpha < 1$, each point of $\mathbb{R}^d$ lies at finite $D$-distance from the origin, so we can extend $D$ to a metric on all of $\mathbb{R}^d$. In particular, the ball of radius 1 centered at 0 in the metric $D$, which we call $\mathbf{B}$, is well defined, and by scaling we note that $\mathbf{B} = r^{-1/(1-\alpha)} B_D(0,r)$, where $B_D(0,r)$ is the $D$-ball of radius $r$ centered at 0. It turns out that $\mathbf{B}$ is in fact the limiting shape of $f$-weighted FPP in the following sense. We take exponents $\chi$ and $\tilde{\chi}$, which give us our rates of convergence. Then for any $t$, it holds, except on an event of probability decaying faster than any power of $t$, that the vertex set of $A_t$ is approximately equal to $t^{1/(1-\alpha)}\mathbf{B} \cap \mathbb{Z}^d$. So this is essentially the same as the limit shape result in the case of unweighted FPP, just with somewhat different exponents. So to prove this theorem we proceed as follows... >>: Do you think the fluctuations are actually of different order, or is it just that the exponents in the proof are different? >> Ewain Gwynne: So the fluctuations here will actually be of different order, but the exponents are probably not optimal. The reason for this is that these exponents come from the exponents in the limit shape result for standard FPP, and those fluctuation exponents are not expected to be optimal. >>: But do you think... never mind.
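The $D$-length and its scaling can be checked numerically. The sketch below approximates the $D$-length of a polyline with the midpoint rule, parameterizing each segment by $\mu$-length. As stand-ins (assumptions, since $\mathbf{A}$ is unknown) it takes $\mu$ to be the Euclidean norm and $f(z) = |z|^\alpha$, checks that scaling a path by $r$ multiplies its $D$-length by $r^{1-\alpha}$ (which is what gives $D(rz, rw) = r^{1-\alpha}D(z,w)$), and compares a radial segment from 1 to 4 with $\alpha = 1/2$ against the exact value $\int_1^4 s^{-1/2}\,ds = 2$. In $d = 1$, where $\mathbf{A} = [-1,1]$ for rate-1 exponentials, the same computation gives $D(0,x) = |x|^{1-\alpha}/(1-\alpha)$ for $f(z) = |z|^\alpha$, so $\mathbf{B} = [-(1-\alpha)^{1/(1-\alpha)}, (1-\alpha)^{1/(1-\alpha)}]$.

```python
import math

def d_length(points, f, mu, steps=2000):
    """Midpoint-rule approximation of the D-length of the polyline through `points`.

    Each segment p -> q is parameterised by mu-length, so it contributes the
    integral of 1 / f(gamma(t)) over t in [0, mu(q - p)].
    """
    total = 0.0
    for p, q in zip(points, points[1:]):
        diff = [b - a for a, b in zip(p, q)]
        h = mu(diff) / steps          # mu-length of one sub-interval
        for k in range(steps):
            frac = (k + 0.5) / steps  # sub-interval midpoint, as a fraction of the segment
            total += h / f([a + frac * d for a, d in zip(p, diff)])
    return total

# Stand-ins (assumptions): mu = Euclidean norm, f(z) = |z|**alpha.
alpha = 0.5
mu = lambda v: math.hypot(*v)
f = lambda z: math.hypot(*z) ** alpha

path = [(1.0, 0.0), (1.0, 1.0), (-0.5, 2.0)]
r = 3.0
scaled = [(r * x, r * y) for x, y in path]
ratio = d_length(scaled, f, mu) / d_length(path, f, mu)  # should equal r ** (1 - alpha)
```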
>> Ewain Gwynne: So for each positive integer $n$, we let $\tau_n$ be the smallest $t$ for which $A_t$ is not contained in the $D$-ball of radius $n$ centered at 0. So if this, in blue, is the $D$-ball, then $\tau_n$ is just the first time the cluster is not contained in it. We know that for any $\tilde{n} > n$, the $D$-distance from the $D$-ball of radius $n$ to the boundary of the $D$-ball of radius $\tilde{n}$ is $\tilde{n} - n$, obviously. So by the $D$-approximation lemma, $\tau_{\tilde{n}} - \tau_n$ is approximately equal to $\tilde{n} - n$, except on an event of probability decaying faster than any power of $n$, provided that $\tilde{n}$ is proportional to $n$, say $(1+\epsilon)n \leq \tilde{n} \leq \epsilon^{-1} n$. This means that with high probability the vertex set of $A_{\tau_{\tilde{n}}}$ contains everything which is at $D$-distance slightly less than $\tilde{n} - n$ from $A_{\tau_n}$. All right. So if at time $\tau_n$ our cluster $A_{\tau_n}$ contains most of the points of the $D$-ball of radius $n$, then at time $\tau_{\tilde{n}}$ our cluster also contains most vertices of the $D$-ball of radius $\tilde{n}$. So by induction, our proof will be complete provided we can show that with high probability there is some $n_0$ for which $A_{\tau_{n_0}}$ is not too different from the $D$-ball of radius $n_0$. For this we can just use some basic estimates for exponential random variables: essentially, fix some path between two vertices and estimate the sum of the exponential random variables along that path. We can find some $\epsilon > 0$, which does not depend on $n_0$, such that with high probability the vertex set of $A_{\tau_{n_0}}$ contains each vertex of $\mathbb{Z}^d$ in the ball of radius $\epsilon n_0$ centered at the origin, and such that $\tau_{n_0}$ is between $\epsilon n_0$ and $\epsilon^{-1} n_0$. So things are off by at most a constant factor with high probability when $n_0$ is large. [The error term on the slide should actually be $o_{n_0}^\infty(n_0)$, because it's a probability; $o_{n_0}(n_0)$ doesn't exactly make sense.] But because $n_0$ is fixed, this error of order $\epsilon^{-1} n_0$ is dominated by $n$ when $n$ is large. So it doesn't matter where we started, because once $n$ is large enough, the error from the starting position is dominated by $n$. And it turns out this is enough to prove the theorem. So this is how we get the limit shape result for $\alpha < 1$. Next I am going to turn my attention to the result which I call Theorem 3 above, because it's easier to prove than Theorem 2. It says that for any fixed $f_0$ (recall that $f_0$ is the restriction of $f$ to the unit sphere), if we take $\alpha$ a little bit bigger than 1, then almost surely $A_{\tau_\infty}$ contains all but finitely many vertices of $\mathbb{Z}^d$. To prove this, we first need to quantify how close to 1 $\alpha$ needs to be. Given $z$ and $w$ on the boundary of the Euclidean unit ball, we let $\Gamma_\delta(z,w)$ be the set of piecewise linear paths from $z$ to $w$ whose linear segments have length at most $\delta$ and have endpoints on the Euclidean unit sphere. And we let $\lambda$ be the $D$-circumference of the Euclidean unit sphere, defined by $\lambda = \lim_{\delta \to 0} \max_{z,w} \inf_{\gamma \in \Gamma_\delta(z,w)} (D\text{-length of } \gamma)$. So essentially what we are doing is this: given points $z$ and $w$ on the unit sphere, we want to find the $D$-distance from $z$ to $w$ considering only paths which are, in some sense, contained in the Euclidean unit sphere. Of course you can't have a piecewise linear path contained in the sphere, so you take piecewise linear paths which are almost contained in the sphere, and you take the infimum of the $D$-lengths of all of those; this gives you the distance within the sphere from $z$ to $w$. Then we take the maximum of this distance over all points $z, w$ in the sphere, and we take the limit as $\delta$ goes to 0. So this quantity can be interpreted as the $D$-circumference of the unit sphere. All right. Is this okay for everybody?
>>: These paths go in the ball, right? They are not inside the sphere? >> Ewain Gwynne: Well, the endpoints of all of the linear segments will be on the sphere, and the linear segments will be short. >>: [indiscernible]. >> Ewain Gwynne: Well, they are going to have to be contained in the Euclidean unit ball, by convexity. >>: In the ball, okay. >> Ewain Gwynne: Yes. >>: So this looks kind of like a diameter instead of a circumference. >> Ewain Gwynne: Well, the diameter I would think of as the $D$-distance between two points without any constraints on the paths. But here we are requiring the paths to stay near the sphere, so it's really the distance around the sphere, not the distance [indiscernible]. >>: Okay, that's what I was asking. [indiscernible]. >>: [indiscernible]. >> Ewain Gwynne: Right, but they are piecewise linear paths, all of the segments have length less than $\delta$, and the endpoints are contained in the sphere. >>: [indiscernible]. >> Ewain Gwynne: Yes, so the path is very close to being contained in the sphere, but not quite, because it has to be piecewise linear. >>: [indiscernible]. >> Ewain Gwynne: It's half the circumference, right. >>: And are we assuming $\alpha$ is strictly greater than 1? >> Ewain Gwynne: Yes. >>: But there's a typo in this slide title. >> Ewain Gwynne: Oh sorry, it's between 1 and $1 + c$. It can be equal to 1. >>: It can be equal to 1. >> Ewain Gwynne: It can be equal to 1, I'm sorry, and this makes sense. It just has to be greater than or equal to 1. So the important point about this quantity $\lambda$ is that it's positive and that it depends only on $f_0$ (and on $\mathbf{A}$, through $\mu$), not on $\alpha$, because the function $f$ is identically equal to $f_0$ on the Euclidean unit sphere. So now we are going to prove the theorem.
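In $d = 2$ the definition of $\lambda$ can be made concrete. As $\delta \to 0$, the $D$-length of a path hugging the unit circle tends to $\int \mu(\gamma'(\theta))/f_0(\gamma(\theta))\,d\theta$ (the path is parameterized by $\mu$-length and $f = f_0$ on the circle), and since backtracking only adds length, the distance within the circle between two points is the shorter of the two arcs. The sketch below discretizes the circle and maximizes over pairs of grid points; it is an approximation under these assumptions, and `d_circumference` is a hypothetical name. With $\mu$ Euclidean and $f_0 \equiv 1$ it returns half the Euclidean circumference, $\pi$, consistent with the remark that $\lambda$ is half the circumference.

```python
import math

def d_circumference(mu, f0, n=720):
    """Approximate lambda in d = 2 by discretising the unit circle into n arcs."""
    h = 2 * math.pi / n
    # cum[k] = D-length of the counterclockwise arc from angle 0 to angle k * h.
    cum = [0.0]
    for k in range(n):
        th = (k + 0.5) * h
        tangent = (-math.sin(th), math.cos(th))  # unit-speed tangent to the circle
        point = (math.cos(th), math.sin(th))
        cum.append(cum[-1] + h * mu(tangent) / f0(point))
    total = cum[-1]
    best = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            arc = cum[j] - cum[i]                    # one way around from point i to point j
            best = max(best, min(arc, total - arc))  # keep the shorter way around
    return best

euclid = lambda v: math.hypot(*v)
lam = d_circumference(euclid, lambda p: 1.0)  # approximately pi
```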
So it's not hard to show that the $D$-distance from the Euclidean ball of radius $n$ to infinity is at least $b(\alpha-1)^{-1} n^{1-\alpha}$, where $b$ is some small constant which does not depend on $\alpha$ but may depend on $\mathbf{A}$ and $f_0$. For each positive integer $n$, we let $\sigma_n$ be the first time that $A_t$ is not contained in the Euclidean ball of radius $n$. So this is similar to the times $\tau_n$ on the previous slides, but now we are using Euclidean balls. >>: Sorry, what is $(\alpha-1)^{-1}$ if $\alpha$ is below 1? >> Ewain Gwynne: Um, infinity. In the case when $\alpha = 1$, the $D$-distance from anything to infinity is infinite, so in the case where $\alpha = 1$ it's kind of a trivial statement. >>: Okay, sorry. >> Ewain Gwynne: It's hard to be precise about all of the little details. So by the $D$-approximation lemma we get that, except on an event of probability decaying faster than any power of $n$, $\tau_\infty - \sigma_n$ is not too much smaller than $b(\alpha-1)^{-1} n^{1-\alpha}$. That is the $D$-distance to infinity, so the time to get to infinity is approximately the $D$-distance to infinity. On the other hand, any two points of the integer lattice which are in the Euclidean ball of radius $n+1$ minus the Euclidean ball of radius $n$ lie within $D$-distance slightly more than $\lambda n^{1-\alpha}$ of each other. This follows from the definition of $\lambda$ together with the scaling property of the metric $D$. So if $\lambda < b(\alpha-1)^{-1}$, then the time it takes our clusters to get around the boundary of the ball of radius $n$ is smaller than the time it takes them to get to infinity. This means that with high probability the cluster absorbs every vertex in $B_{n+1} \setminus B_n$ after time $\sigma_n$ but before time $\tau_\infty$, and this holds except on an event of probability decaying faster than any power of $n$.
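The comparison at the heart of this argument is elementary, and one admissible choice of $b$ can be derived in a few lines. In the derivation below, $R_{\mathbf{A}} := \max_{y \in \mathbf{A}} |y|$ and $F := \max f_0$ are notation introduced here, not from the talk, and the resulting $b$ is one valid constant, not necessarily the one used in the paper. If $\gamma$ runs from the sphere of radius $n$ to infinity and is parameterized by $\mu$-length, then $\mu(\gamma') = 1$ gives $|\gamma'| \le R_{\mathbf{A}}$, so $\gamma$ spends at least $ds/R_{\mathbf{A}}$ units of time at radii in $[s, s+ds]$, while $f(\gamma) \le F|\gamma|^\alpha$.

```latex
\begin{align*}
\int_0^\infty \frac{dt}{f(\gamma(t))}
  &\ge \int_0^\infty \frac{dt}{F\,|\gamma(t)|^{\alpha}}
  \ge \frac{1}{F R_{\mathbf{A}}} \int_n^\infty \frac{ds}{s^{\alpha}}
  = \frac{1}{F R_{\mathbf{A}}} \cdot \frac{n^{1-\alpha}}{\alpha - 1},
  \qquad \text{so } b = \frac{1}{F R_{\mathbf{A}}} \text{ works;} \\
\lambda\, n^{1-\alpha} < \frac{b\, n^{1-\alpha}}{\alpha - 1}
  &\iff \lambda(\alpha - 1) < b
  \iff \alpha < 1 + \frac{b}{\lambda}.
\end{align*}
```

So the argument yields Theorem 3 with $c = b/\lambda$, which depends only on $f_0$ and $\mathbf{A}$, as claimed.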
So in particular, the probability of the complement of this event is summable, so we can apply Borel-Cantelli to get that almost surely this is true for all large enough $n$, which implies that almost surely the cluster $A_{\tau_\infty}$ contains all but finitely many vertices of $\mathbb{Z}^d$, and that's what we wanted. So here's a picture: we look at the time that the cluster exits the Euclidean ball of radius $n$. The time it takes to get to infinity after that is at least $b n^{1-\alpha}/(\alpha-1)$, and the time that it takes to get around the boundary of the ball is about $\lambda n^{1-\alpha}$. So if the second is smaller than the first, then the cluster hits all the vertices of $\mathbb{Z}^d$ near the boundary of the ball before it gets to infinity. So that concludes the proofs of Theorems 1 and 3. Now I am going to turn my attention to Theorem 2, which says that if $\alpha > 1$ then there exists a norm such that, if we take $f$ to be the $\alpha$-th power of that norm, then almost surely all but finitely many vertices of $A_{\tau_\infty}$ are contained in a cone of opening angle less than $\pi$. Note that by Theorem 3 we can't take an arbitrary choice of norm; there has to be some dependence of the norm on $\alpha$, at least when $\alpha$ is close to 1. In order to define the particular norm that we are going to consider, we need to introduce a couple more quantities, which depend on the standard FPP limiting shape $\mathbf{A}$. First, I let [indiscernible] bar be the smallest $r$ for which $\mathbf{A}$ is contained in the Euclidean ball of radius $r$... >>: What is known about that? >> Ewain Gwynne: Absolutely nothing. >>: Really? >> Ewain Gwynne: Well, I think there may be some very trivial bounds on it, like as $d$ goes to infinity it grows at a rate that is at most $d/\log d$, or something like that. And I think that's probably the best that's available.
So this quantity is not very well understood at all. Likewise, we let $\mathbf{X}$ be the set of $x$ on the boundary of $\mathbf{A}$ for which $|x|$ is equal to [indiscernible] bar. So $\mathbf{X}$ is the set of these blue points, which are at maximum Euclidean distance from the origin. Again, almost nothing is known about this set $\mathbf{X}$, but it's a well-defined object. It may be the entire boundary of the Euclidean ball if $\mathbf{A}$ is actually equal to a Euclidean ball, but probably it's not. >>: Maybe you can say that if you knew more about $\mathbf{X}$ then... >> Ewain Gwynne: Yes, so if we knew more about $\mathbf{X}$, then we would be able to say more about the norm that we are going to define shortly. >>: [inaudible]. >> Ewain Gwynne: Yes, the main obstacle to proving this for the Euclidean norm or the $\ell^1$ norm or something like that is really that we don't know very much about $\mathbf{A}$, and therefore we don't know very much about the metric $D$, and therefore we don't have very good estimates for the FPP passage times in that case. So now we are going to fix some $x \in \mathbf{X}$ and define three planes. The first, $P_x$, is the $(d-1)$-dimensional hyperplane which is perpendicular to $x$ and passes through $x$. $P_{-x}$ is the $(d-1)$-dimensional hyperplane which is perpendicular to $x$ and passes through $-x$. And $P_x^0$ is the hyperplane perpendicular to $x$ passing through the origin. Note that because $P_x$ intersects the Euclidean ball of radius [indiscernible] bar only at $x$, it also intersects $\mathbf{A}$ only at $x$. So here is an illustration in the case when $d = 2$: these hyperplanes are just lines, and the two on the ends intersect $\mathbf{A}$ only at a single point. Now we fix a set $Q \subset P_x^0$. So $Q$ is a $(d-1)$-dimensional set, and we require that $Q$ is compact, convex, and symmetric about 0, and that $Q$ contains the intersection of the Euclidean ball of radius [indiscernible] bar with $P_x^0$.
And for a given $s > 1$ we let $\mathcal{Q}_s$ be the $s$-stretching of $Q$, by which we mean the set obtained from the following operation. Say we start with $Q$, which is depicted here as a red line because we are in dimension 2, so $Q$ has dimension 1. Then we stretch it by a factor of $s$ in the direction perpendicular to $x$, and we stretch it by 1 in the direction parallel to $x$, so that it extends from the plane $P_{-x}$ to the plane $P_x$. This gives us a $d$-dimensional set, a kind of cylindrical shape oriented in the direction parallel to $x$, and we call that set $\mathcal{Q}_s$. It is easy to see that $\mathcal{Q}_s$ is compact, convex, and symmetric about 0, so we can define a norm $\nu_s$ whose closed unit ball is $\mathcal{Q}_s$. This norm $\nu_s$ is the one for which I am going to prove the statement of the theorem. So, in particular, for each –. >>: So for any $x$ one has such [indiscernible]? >> Ewain Gwynne: Yes, right, there is a norm for any choice of $x$. >>: And any $s$? >> Ewain Gwynne: And any $s$. >>: And any $Q$. >> Ewain Gwynne: Right, so this is a family of norms. You can actually also get a family of functions which are not norms, defined in a similar way; I am not going to talk about this in the talk, but it is in the paper, and it gives a slightly wider class of choices of $f$ than what I describe here. So the more quantitative version of theorem 2 is the following: for each $\alpha > 1$ there exists an $s > 1$, depending on $\alpha$, such that the following holds. Take $f(z) = \nu_s(z)^\alpha$ for this choice of $s$, and let $\mathcal{K}$ be the union over all $r > 0$ of $r$ times the intersection of $\mathcal{Q}_s$ with the plane $P_x$, so that $\mathcal{K}$ is contained in some Euclidean cone of opening angle less than $\pi$. Then almost surely either all but finitely many vertices of $A_{\tau_\infty}$ are contained in $\mathcal{K}$, or all but finitely many vertices of $A_{\tau_\infty}$ are contained in $-\mathcal{K}$.
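A small Python sketch can make $\nu_s$ and the cone $\mathcal{K}$ concrete. It rests on two assumptions not fixed by the talk: $Q$ is taken to be the $(d-1)$-dimensional Euclidean ball of radius $\rho \ge |x|$ inside $P_x^0$ (which meets the requirement that $Q$ contain the ball of radius $|x|$ intersected with $P_x^0$), and $\mathcal{Q}_s$ is read as the cylinder $sQ + [-1,1]\,x$. Writing $z = t x + y$ with $y \perp x$, the gauge is then $\nu_s(z) = \max(|t|, |y|/(s\rho))$, and $\mathcal{K}$ is the cone $\{t > 0,\ |y| \le s\rho\, t\}$. All function names are hypothetical.

```python
import math


def _dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def split_along(z, x):
    """Write z = t*x + y with y perpendicular to x; return (t, |y|)."""
    t = _dot(z, x) / _dot(x, x)
    y = [zi - t * xi for zi, xi in zip(z, x)]
    return t, math.sqrt(_dot(y, y))


def nu_s(z, x, s, rho):
    """Gauge of the cylinder s*Q + [-1, 1]*x, where Q is assumed to be the
    Euclidean ball of radius rho (rho >= |x|) inside the hyperplane P_x^0."""
    t, y_len = split_along(z, x)
    return max(abs(t), y_len / (s * rho))


def in_cone_K(z, x, s, rho):
    """True if z lies in K, the union over r > 0 of r * (Q_s intersected with P_x)."""
    t, y_len = split_along(z, x)
    return t > 0 and y_len <= s * rho * t


def cone_opening_angle(x, s, rho):
    """Full opening angle of K under the assumptions above; always below pi."""
    return 2 * math.atan(s * rho / math.sqrt(_dot(x, x)))
```

With $x = (1, 0)$, $s = 2$, $\rho = 1$, the point $(1, 2)$ lies on the edge of $\mathcal{K}$ while $(1, 3)$ and $(-1, 0)$ do not. Increasing $s$ widens the cone, but its opening angle stays below $\pi$ because the face $\mathcal{Q}_s \cap P_x$ is bounded and sits at distance $|x|$ from the origin.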
So this is noteworthy for a couple of reasons. First, we get an explicit description of the cone, and you can see what it looks like from the set $\mathcal{Q}_s$: it’s just the cone whose boundary lines pass through the boundary of the intersection of $\mathcal{Q}_s$ with the plane $P_x$. Furthermore, $A_{\tau_\infty}$ has to go off to infinity in one of these two directions; it can never go off to infinity in one of these other directions. So the set of possible center lines of the cone is not the entire set of possible lines: it has to be this direction or this direction. >>: Ah wait a second, this direction depends on $x$, right? >> Ewain Gwynne: Right. >>: There could have been an $x$ at one of those other places. >> Ewain Gwynne: Right, but the norm depends on $x$. So we are fixing an $x$ and we define a norm –. >>: [indiscernible]. >> Ewain Gwynne: So the reason why we chose this particular norm is that with this choice of norm we can get sharp estimates for the metric $D$ even without knowing anything at all about the set $\mathbf{A}$. In particular, we can show that for each $q > 1$ the D-distance from $\partial\mathcal{Q}_s$ to $q\,\partial\mathcal{Q}_s$ is equal to $(1-q^{1-\alpha})/(\alpha-1)$. Furthermore, if we have a point $z \in \partial\mathcal{Q}_s$ and a point $w \in q\,\partial\mathcal{Q}_s$ which lie at minimal D-distance from each other, then $z$ has to be in one of the two planes $P_x$ or $P_{-x}$, and $w$ is just equal to $z$ translated by $\pm(q-1)x$. So here is a picture. The inner box is $\mathcal{Q}_s$ and the outer box is $q\mathcal{Q}_s$. If we have a point $z$ on the boundary of the inner box and a point $w$ on the boundary of the outer box at minimal D-distance from each other, then $z$ has to be on one of these two green lines, which are the intersections with the planes $P_x$ and $P_{-x}$, and $w$ has to be the translation of $z$, in the direction parallel to $x$, onto the red line.
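A quick consistency check on the distance formula: if one assumes (an assumption here, not a definition quoted in the talk) that $D$ charges a path $\gamma$ the amount $\int \mu_{\mathbf{A}}(\gamma'(t))/f(\gamma(t))\,dt$, where $\mu_{\mathbf{A}}$ is the norm whose unit ball is $\mathbf{A}$, then the straight segment from $x$ to $qx$ already has exactly the quoted length. It uses $\mu_{\mathbf{A}}(x) = 1$, since $x \in \partial\mathbf{A}$, and $\nu_s(rx) = r$, since $x \in \partial\mathcal{Q}_s$:

$$
\int_1^q \frac{\mu_{\mathbf{A}}(x)\,dr}{f(rx)}
= \int_1^q \frac{dr}{\nu_s(rx)^{\alpha}}
= \int_1^q r^{-\alpha}\,dr
= \frac{1-q^{1-\alpha}}{\alpha-1}
\;\xrightarrow[\;q\to\infty\;]{}\; \frac{1}{\alpha-1}.
$$

The nontrivial part of the claim in the talk is that no other pair of points does better; the limit $1/(\alpha-1)$ matches the $n^{1-\alpha}/(\alpha-1)$ form of the time-to-infinity bound at $n = 1$.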
So in particular $w$ has to be on one of the two red lines. >>: If $z$ and $w$ are –. >> Ewain Gwynne: Yes, if $z$ and $w$ are at minimal distance from each other. The reason why this is important is that if we take this picture and rescale everything by $1/q$, so that the outer box is mapped to the inner box, then the red lines are mapped to proper subsets of the green lines. So if you have a point on $q\,\partial\mathcal{Q}_s$ which lies at minimal distance from $\mathcal{Q}_s$ and you rescale by $q^{-1}$, you end up with a point on $\partial\mathcal{Q}_s$ which again lies on one of the green lines, that is, again in the part of the boundary where minimal distances are achieved. This is crucial for our proof of the theorem, which is going to be an induction argument. To set up this induction argument, for each positive integer $n$ we let $\tau_n$ be the smallest $t$ for which $A_t$ is not contained in $n\mathcal{Q}_s$. So to prove the first theorem we used D-balls, for the proof of the next theorem we used Euclidean balls, and for the proof of this theorem we are using $\nu_s$-balls. Almost surely there is a unique vertex $u_n$ of $A_{\tau_n}$ which is not in $n\mathcal{Q}_s$, and we let $G_n$ be the event that $u_n/\nu_s(u_n)$ is contained in one of the two planes $P_x$ or $P_{-x}$. In other words, $A_{\tau_n}$ exits the set $n\mathcal{Q}_s$ at a point which is close to one of these two green lines. Now take $\tilde n$ a little bit bigger than $n$. If the event $G_n$ occurs then, except on an event of probability decaying faster than any power of $n$ (in fact this is a conditional probability given everything up to time $\tau_n$), we have that $\tau_{\tilde n} - \tau_n$ is at most $(n^{1-\alpha} - \tilde n^{1-\alpha})/(\alpha-1)$ plus a small error. This just comes from the D-approximation lemma, because we know the D-distance from the set $n\mathcal{Q}_s$ to the set $\tilde n\mathcal{Q}_s$.
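The one-step bound combines the formula for the D-distance between $\partial\mathcal{Q}_s$ and $q\,\partial\mathcal{Q}_s$ with rescaling by $n$. Assuming the metric scales as $D(nz, nw) = n^{1-\alpha} D(z, w)$, which is what the degree-$\alpha$ homogeneity of $f$ suggests and which reproduces the expression in the talk, the main term is $n^{1-\alpha}\,(1-(\tilde n/n)^{1-\alpha})/(\alpha-1) = (n^{1-\alpha}-\tilde n^{1-\alpha})/(\alpha-1)$. The Python sketch below checks that identity and chains the one-step main terms along a geometric sequence $n, 2n, 4n, \dots$ (a hypothetical choice of sequence), where they telescope to $n^{1-\alpha}/(\alpha-1)$. It ignores the small error terms, which the actual argument must also sum.

```python
def radial_distance(q, alpha):
    """D-distance from the boundary of Q_s to q times that boundary, as quoted
    in the talk: (1 - q**(1 - alpha)) / (alpha - 1), for q > 1 and alpha > 1."""
    return (1 - q ** (1 - alpha)) / (alpha - 1)


def step_bound(n, n_tilde, alpha):
    """Main term of the bound on tau_{n_tilde} - tau_n, obtained by rescaling
    the unit picture by n (assumed scaling D(n z, n w) = n**(1 - alpha) D(z, w))."""
    return n ** (1 - alpha) * radial_distance(n_tilde / n, alpha)


def chained_bound(n, alpha, ratio=2.0, steps=200):
    """Sum of step_bound along the hypothetical sequence n, ratio*n, ratio**2*n, ...
    The sum telescopes, so it approaches n**(1 - alpha) / (alpha - 1) from below."""
    total, m = 0.0, float(n)
    for _ in range(steps):
        total += step_bound(m, ratio * m, alpha)
        m *= ratio
    return total
```

For instance, with $\alpha = 2$ and $n = 10$ the chained bound is, up to rounding, $0.1 = 10^{1-\alpha}/(\alpha-1)$, the main term of the bound $\tau_\infty - \tau_n \le n^{1-\alpha}/(\alpha-1)$ plus a small error.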
So by a second application of the D-approximation lemma we get that each point which lies at D-distance bigger than this quantity from $n\mathcal{Q}_s$ is not contained in $A_{\tau_{\tilde n}}$. But we know from our deterministic estimates for $D$ that anything which is not on one of these two red lines lies at bigger D-distance from the set $n\mathcal{Q}_s$ than the points on the red lines. So nothing which is more than a small error away from these two red lines is going to be contained in $A_{\tau_{\tilde n}}$, provided the event $G_n$ occurs. This means that if $G_n$ occurs then with high probability $G_{\tilde n}$ also occurs, because we have to hit the boundary of the next ball at a point which is in one of these two good faces. So if $G_{n_0}$ occurs for some large $n_0$, then with high probability $G_n$ also occurs for all $n$ a little bit bigger than $n_0$. By summing over an appropriate sequence of $n$’s tending to infinity, we get that with high probability, if $G_{n_0}$ occurs, then for each $n \geq (1+\epsilon)n_0$ we have $\tau_\infty - \tau_n \leq n^{1-\alpha}/(\alpha-1)$ plus this small error. Now if we make $s$ big enough and $q_0$ big enough, depending on $\alpha$, then for each $q$ between $q_0$ and $2q_0$ the D-distance from the part of $qn\,\partial\mathcal{Q}_s$ which is not contained in one of the two planes to $n\,\partial\mathcal{Q}_s$ is bigger than $n^{1-\alpha}/(\alpha-1) + \delta n^{1-\alpha}$ for some fixed $\delta > 0$ which doesn’t depend on $n$. So by the D-approximation lemma, no point in this part of $qn\,\partial\mathcal{Q}_s$ is ever hit by $A_{\tau_\infty}$. So here’s a picture: here’s $qn\mathcal{Q}_s$, and here’s $n\mathcal{Q}_s$. If $G_{n_0}$ occurs for some $n_0$, which may be much less than $n$, then with high probability nothing on these two red lines is ever going to be hit by $A_{\tau_\infty}$. This means that if $G_{n_0}$ occurs for some large $n_0$, then in fact all but finitely many vertices of $A_{\tau_\infty}$ are going to be contained in the union of $\mathcal{K}$ and $-\mathcal{K}$, because this holds for every large enough $n$. Now there are two pieces left. The first is to show that $G_{n_0}$ in fact occurs with high probability when $n_0$ is large. This is a similar situation to what we dealt with in the $\alpha < 1$ proof, but here, in order to deal with it, we need to make $s$ a little bit larger. By making $s$ big enough we can arrange that every point of $\partial\mathcal{Q}_s$ is closer, in the metric $D$, to the part of $q_*\,\partial\mathcal{Q}_s$ which is contained in the two planes than it is to the part which is not contained in the two planes. Then the D-approximation lemma implies that $G_{n_0}$ is going to occur with high probability even if $G_{n_0/q_*}$ does not occur. So here is the picture: if $s$ is very big, then everything on the boundary of the inner box is closer to the green part of the outer box than it is to the blue part of the outer box. That means that no matter where we hit the inner box, it holds with high probability that we hit the outer box for the first time on one of the green lines, which means that $G_{n_0}$ occurs. This shows that almost surely all but finitely many vertices of $A_{\tau_\infty}$ are contained in $\mathcal{K} \cup (-\mathcal{K})$. But there is still one problem left: we could worry that all but finitely many vertices of $A_{\tau_\infty}$ are contained in the union of these two sets, but there are infinitely many in each, so it’s not actually contained in one of the two sets. So we still have to rule out the possibility that it goes to infinity at the same time in both directions. Let $E$ be the event that this happens, and let $\sigma^+$, respectively $\sigma^-$, be the first time that $A_t$
intersects $\mathcal{K}$ in an infinite set, respectively the first time that $A_t$ intersects $-\mathcal{K}$ in an infinite set. On the event $E$ it holds that $\sigma^+ = \sigma^- = \tau_\infty$, because it gets to infinity at the same time in both directions. Furthermore, for some large $n$ it holds that $A_{\tau_\infty} \setminus A_{\tau_n}$ is contained in $\mathcal{K} \cup (-\mathcal{K})$, because all but finitely many vertices are contained in that union; let $E_n$ be the event that $E$ occurs and this is the case for a given fixed integer $n$. So if $E$ occurs with positive probability, then for some deterministic $n$ the event $E_n$ occurs with positive probability. But on the other hand, the random variables $\sigma^+$ and $\sigma^-$ are conditionally independent given $\mathcal{F}_{\tau_n}$ and the event $E_n$, because they depend on distinct collections of independent exponential random variables. So if $\sigma^+ = \sigma^- = \tau_\infty$ on the event $E_n$, then the conditional law of $\tau_\infty$ given $\mathcal{F}_{\tau_n}$ has to have an atom with positive probability. And it turns out that this cannot be the case for any stopping time $\tau$, by a fairly straightforward probabilistic argument which I am going to skip for the sake of time; I can explain it afterwards if people are interested. Then I am just going to end by mentioning some open problems related to this model. First, we show that for each $\alpha > 1$ there is some norm $\nu$ for which we have this cone containment result, but it remains an open problem to classify, for a given $\alpha$, which choices of $f$ are such that $A_{\tau_\infty}$ is almost surely contained in a cone of opening angle less than $\pi$. In particular, if we are given a fixed norm, does it hold for large enough $\alpha$ that we have the containment with that particular choice of norm?
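Returning briefly to the step in this passage from "$\sigma^+ = \sigma^-$ with positive probability" to an atom: it rests on the standard Fubini computation for independent random variables. If $X$ and $Y$ are conditionally independent given $\mathcal{F}$, with conditional laws $\mu_X$ and $\mu_Y$, then

$$
\mathbb{P}(X = Y \mid \mathcal{F}) \;=\; \int \mu_X(\{y\})\,\mu_Y(dy),
$$

which vanishes unless $\mu_X$ has an atom. Taking $X = \sigma^+$, $Y = \sigma^-$ and conditioning on $\mathcal{F}_{\tau_n}$ and $E_n$, a positive conditional probability that $\sigma^+ = \sigma^-$ forces an atom in the conditional law of $\sigma^+$, which coincides with $\tau_\infty$ on that event. The argument ruling such an atom out is the part skipped in the talk.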
And it’s possible that you can do a little bit better than our result without knowing any more information about the set $\mathbf{A}$, but I would expect that a full solution of this problem would require more information about the standard FPP limiting shape $\mathbf{A}$. Here is another interesting question: is it true that for any choice of $f$, either $A_{\tau_\infty}$ is almost surely contained in a cone of opening angle less than $\pi$, or $A_{\tau_\infty}$ almost surely contains all but finitely many vertices of $\mathbb{Z}^d$? We know that both cases are possible for some choices of $f$, but there may be other choices of $f$ for which neither of these is true. For example, maybe there are some choices of $f$ for which $A_{\tau_\infty}$ has a spiral shape, or $A_{\tau_\infty}$ is contained in a cone with positive probability but not almost surely. You would need to rule out this kind of thing to answer this problem. Then, in the case where $\alpha = 1$, do we ever get a deterministic limit shape, and if not, do we ever get a random limit shape? Also, what happens if we take edge weights which are not positively homogeneous? For example, we can consider periodic edge weights, or edge weights which are large in some spiral-shaped region but small in the complement of that region, to try to force the limit shape to actually have a spiral shape or something like that. There are many more open problems, a total of 15 listed in the paper, which will be posted to the arXiv soon. So here is the list of our references, and that’s it. >> Sebastian Bubeck: Thank you. [Applause] >> Sebastian Bubeck: Any questions or comments? >>: So you mentioned that not much is known about this set $\mathbf{A}$, but I am wondering if there are any conjectures about what it should be? >> Ewain Gwynne: So I don’t think there is a conjecture about exactly what $\mathbf{A}$ should be. One significant conjecture is that $\mathbf{A}$ is strictly convex, meaning that it doesn’t have any corners or flat faces.
This is still not known; if you could prove it, that would be a very significant result about first passage percolation. >>: Does it make sense to talk about what happens after time $\tau_\infty$? >> Ewain Gwynne: It does. Eventually everything in $\mathbb{Z}^d$ will be hit, because everything is connected to the origin by some path, but not immediately. >>: [indiscernible]. >> Ewain Gwynne: I think in the case when $\alpha$ is bigger than 1 it can hit every end point. >>: You cannot give an [indiscernible] bound, but it will happen. >> Ewain Gwynne: Yeah, I think if $\alpha > 1$ then almost surely everything is hit in finite time. >>: So you could imagine that at each time greater than $\tau_\infty$ there is a ray to infinity in certain directions within the set, but not in other directions. So you have a set of directions in which you can reach infinity; it’s kind of expanding, and eventually it becomes all directions. >> Ewain Gwynne: I think maybe you could have a picture like that, but the one disadvantage of considering what happens after $\tau_\infty$ is that it has no relation to the Eden model anymore. >>: [indiscernible]. >> Ewain Gwynne: Yeah, there are still some interesting questions about what happens after time $\tau_\infty$. >>: [indiscernible]. >> Ewain Gwynne: Because in the Eden model you are adding one edge at each step. >>: [indiscernible]. >> Ewain Gwynne: Okay, yeah, maybe you could. >>: [indiscernible]. >> Ewain Gwynne: No, in the case where $\alpha$ is bigger than 1. >>: No, the Eden model itself. >>: [indiscernible]. >>: So just a basic thing about $\alpha = 1$: I think my confusion was just that in the case $\alpha = 1$, $\tau_\infty$ is infinity, is that right? >> Ewain Gwynne: Yes. >>: And $A_{\tau_\infty}$ is always $\mathbb{Z}^d$. >> Ewain Gwynne: Yes. >>: It would be interesting [indiscernible]. >>: Meaning what?
>> Ewain Gwynne: I think after time $\tau_\infty$ every time is an explosion time in that sense, because you are always going to be adding infinitely many vertices in every increment of time after –. >>: Let’s define an explosion time as a time at which a ray from the origin to infinity becomes accessible that it’s –. >> Ewain Gwynne: So if you start out at time $\tau_\infty$ you have this cone shape here, so does a ray like that count? How far away from the cone do you have to be? >>: It has to be not in the closure of what was accessible at previous times. >> Ewain Gwynne: Um, maybe; maybe there is something interesting to be said here. Are there any other questions? >> Sebastian Bubeck: Let’s thank Ewain again. [Applause]