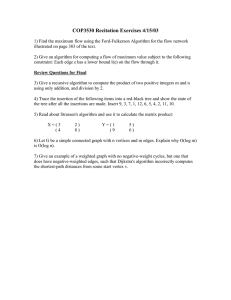

>> Kamal Jain: Hello. My name is Kamal Jain. Today we have a guest, Shayan Oveis Gharan, and sorry if I mispronounce your name. He came here to interview as a fellowship candidate, but he thought he could give a talk since he had some time, so I think it's a talk prepared on short notice. For the people on camera, or watching later: he recently proved a breakthrough result on a long-standing problem, the asymmetric traveling salesman problem, which could be considered one of the central problems in approximation algorithms. So Shayan will explain his new ideas here.

>> Shayan Oveis Gharan: Thank you. Okay. So today I'm going to talk about the asymmetric traveling salesman problem, and I'll try to tell you some of our main ideas for this problem. Here's an outline of the talk. First I will define the traveling salesman problem and give you a brief history of it. Then I will define the concept of thin trees and its relation to ATSP. And, finally, I'll discuss some of the main ideas in our approximation algorithms for ATSP. Let's start with the problem definitions. The traveling salesman problem in its general form has a simple definition. Suppose we have a set of cities with some cost between each pair of them, and a traveling salesman who wants to visit each of these cities exactly once. So we want to find the shortest tour that visits each vertex exactly once. This problem was proved to be NP-complete by Karp in 1972, and it was one of the first problems to be proved NP-complete. The traveling salesman problem in its general form is very hard to deal with, so we'll relax it a little bit. In ATSP, we have a set V of vertices and a nonnegative cost function, and we're looking for the shortest closed tour that visits each vertex at least once.
We have replaced "exactly once" with "at least once" here. For example, consider this graph. The cost of each edge in this graph is one, and as you see, the tour I plotted in red is a tour of cost 12, and it visits some vertices more than once. Given this relaxation, you can assume that the cost function satisfies the triangle inequality, i.e., this inequality. You can do that simply by taking the shortest path distance from U to V as the cost of UV. In fact, the symmetric traveling salesman problem, which is the well-known traveling salesman problem (we'll call it STSP here), is a special case of ATSP. The main difference is that in that problem the cost of going from U to V is equal to the cost of going from V to U. There has been quite a lot of work on solving TSP. This is, in fact, one interesting instance: this tour was found on over one million cities throughout the world, and its cost is within only six percent of the optimum. So heuristics work very well for this problem. But here we'll focus on approximation algorithms. TSP has a lot of applications in various areas. Here is one application in genome sequencing. In genome sequencing there's a process called shotgun sequencing, which divides the DNA into a set of short fragments, and the question is how to assemble these short fragments to obtain the original DNA. In fact, this is an optimization problem: we want the reconstructed DNA to be as short as possible in length. If you think about it, you'll find out that this is in fact the minimum superstring problem. In the superstring problem, you have a set of strings and you're looking for a superstring of the smallest length such that each given string is a substring of the superstring, and you can convert that problem to ATSP. Here these five vertices correspond to five different strings.
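The reduction to a cost function that satisfies the triangle inequality, mentioned in this part of the talk, replaces each cost by a shortest path distance. A minimal sketch of that step, assuming the instance is given as a dense cost matrix with `INF` for missing arcs (the function name `metric_closure` and the three-vertex example are illustrative, not from the talk):

```python
from itertools import product

INF = float("inf")

def metric_closure(cost):
    """Return shortest-path costs d[u][v] for a directed cost matrix (Floyd-Warshall)."""
    n = len(cost)
    d = [row[:] for row in cost]
    for v in range(n):
        d[v][v] = 0
    # k is the outermost index: allow paths through intermediate vertices 0..k.
    for k, u, v in product(range(n), repeat=3):
        if d[u][k] + d[k][v] < d[u][v]:
            d[u][v] = d[u][k] + d[k][v]
    return d

# Hypothetical instance: a directed 3-cycle 0 -> 1 -> 2 -> 0 with unit costs.
example = [
    [0, 1, INF],
    [INF, 0, 1],
    [1, INF, 0],
]
closure = metric_closure(example)
```

The closure stays asymmetric (going from 0 to 1 costs 1, going back costs 2), which is the feature that separates ATSP from STSP.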
A, T, G, and C are the DNA bases. We're looking for this superstring, where each of these strings is a substring of it, and this tour is the optimum tour. One difference is that here what we're looking for is the maximum ATSP. Note that the cost of going, for example, from this vertex to this vertex is the longest suffix of this string that is equal to a prefix of the other. Max ATSP can be approximated within a constant factor, so this problem can be approximated within a constant factor. Let me briefly define what I mean by an approximation algorithm. Suppose that we have a minimization problem. We say that an algorithm is an alpha-approximation if it always produces a solution whose cost is within a factor alpha of the optimum. The main difficulty is that when we're looking for an approximation algorithm, it is hard to find the actual optimum; otherwise we would just find the optimum. So if it's hard to find the optimum, how can we compare the cost of our solution with the optimum? Typically we use a lower bound on the optimum solution, and for that we'll use a linear programming relaxation. The linear programming relaxation always gives you a lower bound for the minimization problem. So here are the known results for both symmetric TSP and ATSP. You can see there's a lot of work, and I've only mentioned a small portion of it. This is the best constant factor approximation algorithm for STSP, which is the 3/2-approximation by Christofides in 1976. On the other hand, for ATSP there are a number of works, like four works here, but as you see, only the constant in front of the log n has improved. So they all give an order of log n approximation in general. But for symmetric TSP, there is quite a lot of work on special cases where the metric is defined on a special graph.
In particular, polynomial time approximation schemes have been found, first for metrics defined on unweighted planar graphs, then for metrics defined on weighted planar graphs, and finally for weighted bounded genus graphs.

>>: Are they for STSP, too?

>> Shayan Oveis Gharan: This is for TSP. There aren't any special results for ATSP on bounded [indiscernible] graphs. So here are our main results. We finally broke the log n barrier. We give the first order of log n over log log n approximation algorithm for general metric ATSP. This is joint work with Asadpour and Saberi from Stanford and Michel Goemans and Aleksander Madry from MIT. Moreover, we give the first constant factor approximation algorithm for shortest path metrics defined on unweighted bounded genus graphs. This is joint work with [inaudible]. So as you see, similar to symmetric TSP, where the approximation factors are much better for the special cases, here you have a similar thing for ATSP, where our approximation factor is constant for this special case. Okay? Before I tell you our approximation algorithm, I need to define the Held-Karp relaxation, because I want to compare our solution with it. This is the definition. The Held-Karp relaxation was proposed by Held and Karp in the 1970s, and it gives a lower bound on the optimal solution of ATSP. Here's some notation: for a set S, delta minus of S is the set of edges pointing into the set, and delta plus of S is the set of edges pointing out of it. A fractional solution of this relaxation is a vector x with two properties, and in fact it is a fractional one-flow. The first inequality says that the size of each cut is at least one. The second says that the amount of incoming flow to each vertex is equal to the amount of outgoing flow, and both are equal to one.
So it is in fact a flow, as you see, because the amount incoming is equal to the amount outgoing, and it is a one-flow because the total flow on the outgoing edges of each vertex is one. Also remember that delta of S is the union of these two sets: it is all the edges in this cut. If you look, you'll find out that the optimal solution of ATSP is in fact an integral one-flow, not a fractional one. It satisfies both of these constraints integrally. This is why this linear program is a relaxation of ATSP, and therefore its optimal value is a lower bound on the optimum of ATSP. Moreover, an optimum solution of the relaxation can be computed efficiently using the ellipsoid algorithm. The integrality gap is defined as the maximum ratio of the cost of the optimal integral solution to the cost of the optimal fractional solution. There is also quite a lot of work on the integrality gap for both STSP and ATSP. As you see, there is this gap here for symmetric TSP, and even improving it by an epsilon factor would be a great result. For ATSP, Carr [indiscernible] conjectured that the integrality gap is equal to 4/3, the same as for symmetric TSP. But then [indiscernible] Goemans [indiscernible] found a set of counterexamples showing that the integrality gap is at least two. So it's open whether the integrality gap is constant or not. Here's a simple example that shows that the integrality gap is at least one and a half. This is the same graph I showed before. The cost of the minimum tour is 12. But if you consider the half-integral solution in this graph, you'll see that it is in fact a fractional solution of the Held-Karp relaxation, and its cost is eight. So the integrality gap of this instance is one and a half. And if you play with it, you can find a family of examples where the integrality gap converges to 2 as n goes to infinity. Okay. So let's try to work on the approximation algorithm.
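For reference, the Held-Karp relaxation as described verbally above can be written out as follows. This is a reconstruction from the spoken description, not a copy of the slide; the symbols $c$, $x$, $A$ (the arc set) and the shorthand $x(F) = \sum_{a \in F} x_a$ are notational assumptions.

```latex
\begin{aligned}
\min \quad & \sum_{a \in A} c(a)\, x_a \\
\text{s.t.} \quad & x(\delta^{+}(S)) \ge 1 && \forall\, \emptyset \ne S \subsetneq V, \\
& x(\delta^{+}(v)) = x(\delta^{-}(v)) = 1 && \forall\, v \in V, \\
& x_a \ge 0 && \forall\, a \in A.
\end{aligned}
```

With this notation, the example in the talk has an integral optimum of cost 12 and a half-integral fractional solution of cost 8, so the gap on that instance is $12/8 = 3/2$.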
First I will go through thin trees: I'll define them and tell you their relation to ATSP. So what's a thin tree? A thin tree is a spanning tree that has a fractionally small number of edges in each cut. In particular, we say that a spanning tree is (alpha, sigma)-thin if it satisfies both of these inequalities. The first says that the number of edges of our tree in any cut is at most alpha times the size of that cut. Remember that delta of S is all the edges of this cut, and x of delta of S is the sum of the fractions of all the edges of this cut. So I want my tree to have a relatively small number of edges in this cut. Moreover, the cost of my tree is within a sigma factor of the cost of x. If our tree has both of these properties, I say it's (alpha, sigma)-thin. So is the definition clear? There is this observation, which says that if you have an (alpha, sigma)-thin tree with respect to an optimal solution of the Held-Karp relaxation, then it is possible to find an integral Eulerian tour based on this thin tree whose cost is at most two alpha plus sigma times the cost of x. Remember that x was an optimal solution of the Held-Karp relaxation, so its cost is a lower bound for ATSP, and therefore this tour is within a factor two alpha plus sigma of the optimal solution of ATSP. I will not prove this observation for you, but the proof is mainly a max-flow min-cut argument: all you need to do is find the minimum cost Eulerian augmentation of this tree, and for that we use the fractional solution of the Held-Karp relaxation. Okay. So here's an overview of our algorithm. It has some similarities with the well-known Christofides algorithm for symmetric TSP. Suppose that this is a fractional solution of the Held-Karp relaxation and this is our thin tree, these green edges. What I need to do is add some edges to this tree so that the resulting graph becomes Eulerian. Sometimes I have to add multiple copies of an edge.
So here are the corollaries. First of all, if we had a polynomial time algorithm that finds a (constant, constant)-thin tree with respect to an optimal solution of the Held-Karp relaxation, it would give a constant factor approximation algorithm for ATSP. Moreover, even if we cannot find such a tree but can prove its existence, this implies a constant integrality gap for ATSP. So even the existence of such a thin tree would be a great result. Let me show you an example of what these thin trees are. Suppose we have this graph, where the cost of each edge is one, and suppose that I consider the half-integral solution. This is a fractional solution of the Held-Karp relaxation. Suppose we have these two trees. It turns out that this one has better thinness. Why is that true? Consider this cut, which separates these vertices from the rest of the graph. This tree has two edges in this cut, and this one has four edges. Now let's see the size of the cut. Because the fraction of each edge is a half, the size of this cut is two for both of them. So this tree is (1, 4/5)-thin, but this one is (2, 4/5)-thin. The 4/5 is related to the cost of our tree versus the cost of the graph. This is alpha and this is sigma. If you look, this star-shaped tree has a fractionally small number of edges in many cuts of the graph. For example, if you consider the cut that separates this vertex, this tree has two edges, but this one has only one edge. But you can see there's a very bad cut for this tree, so its thinness will be much worse than that of the other [indiscernible]. If you look at this example, you'll find that the direction of the edges doesn't have anything to do with the thin tree. If I drop the directions, I will have the same thinness, because in the definition of the thin tree I haven't used the direction. So this is a great result.
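The thinness comparison between two trees can be checked by brute force on small graphs. The following is a minimal sketch, assuming an undirected graph on vertices `0..n-1` with fractional edge values stored in a dictionary; the function name `thinness` and the K4 instance are illustrative and are not the graph from the slides. It enumerates all cuts, so it is only usable for tiny examples.

```python
from fractions import Fraction
from itertools import combinations

def thinness(n, weights, tree):
    """Smallest alpha with |tree ∩ δ(S)| <= alpha * weights(δ(S)) for every cut S."""
    best = Fraction(0)
    for size in range(1, n):  # every proper non-empty vertex subset
        for subset in combinations(range(n), size):
            inside = set(subset)
            crossing = [e for e in weights if (e[0] in inside) != (e[1] in inside)]
            cut_weight = sum(weights[e] for e in crossing)
            tree_edges = sum(1 for e in crossing if e in tree)
            best = max(best, Fraction(tree_edges) / cut_weight)
    return best

# Hypothetical instance: K4 with value 2/3 on every edge (fractional degree 2).
weights = {e: Fraction(2, 3) for e in combinations(range(4), 2)}
star = {(0, 1), (0, 2), (0, 3)}
path = {(0, 1), (1, 2), (2, 3)}
star_alpha = thinness(4, weights, star)
path_alpha = thinness(4, weights, path)
```

On this instance the star is worse than the path because of the single cut around its center, which mirrors the remark that a star-shaped tree can look thin in many cuts and still have one very bad cut.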
We can drop the directions and keep this thinness property. If you drop the directions, what you get is a fractionally 2-edge-connected graph. Because, remember, x was a fractional one-flow: the amount on the outgoing edges of each set was at least one, and the amount on the incoming edges was at least one. So if you drop the directions, you get a fractionally 2-edge-connected graph. Moreover, you can consider either a fractional graph or an integral graph. You can convert the fractional graph to an integral graph simply by multiplying the fraction of each edge by some large number k. For example, in this graph, if you multiply every fraction by 2, you'll get this graph. So what we need to do is either find an (alpha, sigma)-thin tree in a fractionally 2-edge-connected graph, or an (alpha over k, sigma over k)-thin tree in a k-edge-connected graph. Remember, if we multiply everything by k, we get a k-edge-connected graph, but then we need an (alpha over k, sigma over k)-thin tree instead of an (alpha, sigma)-thin tree, because we multiplied everything by k. We can also do one more simplification: we can get rid of the cost function. Why is that true? Let me give another definition for these thin trees. I say that a spanning tree is alpha-thin, not (alpha, sigma)-thin, only alpha-thin, if it satisfies only the first inequality: it has at most an alpha fraction of the edges of any cut of the graph. Suppose this is what we can find. I'll show you that we can get rid of the cost function. How? Consider a k-edge-connected graph, and suppose it has a (C over k)-thin tree for some constant C. We can extract this thin tree from our k-edge-connected graph, and because this tree has a small number of edges in any cut, the graph will still be highly connected. So we can do this again: find a thin tree in the new graph and extract it. If we keep doing that, after some time we'll have a bunch of thin trees, and we take the tree with the smallest cost. That will give a good sigma for us.
So all I need to do is find an alpha-thin tree. I need only this inequality, for all 2 to the n cuts. To recap, I need either to find an alpha-thin tree in a fractionally 2-edge-connected graph or an (alpha over k)-thin tree in a k-edge-connected graph. If I can solve either of these problems, I will get an alpha approximation algorithm for ATSP. Okay. So from now on I will only focus on how to find these thin trees. Let's think about it. For ATSP, we first had a problem in the directed domain. Then we found this notion of thin tree, and we converted the problem from the directed domain to the undirected domain. But if you think about it, you'll find out that finding these thin trees is not an easy problem. In fact, even if you have a graph and you know the best thin tree in this graph and you know its thinness, proving this thinness to someone else is a difficult problem. Currently the best approximation we know for measuring the thinness is a log n approximation. In fact, this thinness problem does not seem to be in NP, so it seems to be a very hard problem. At least ATSP was in NP, so this seems to be much harder. But as you will see, in some cases we can measure the thinness with some tools, and that leads us to approximation algorithms for ATSP. So here are our algorithms. First I will show you a deterministic algorithm. This deterministic algorithm works for shortest path metrics defined on unweighted bounded genus graphs. It gives a (constant over k)-thin tree with respect to a k-edge-connected bounded genus graph, and for the certificate I will use properties of dual graphs. Also, when we were working on this algorithm, we found out that a mathematician called Luis Goddyn conjectured that any k-edge-connected graph contains a (constant over k)-thin tree. If his conjecture is true, then the integrality gap of ATSP would be constant. Then I will present a randomized algorithm. This will work for general metric ATSP.
It will find an order of log n over log log n thin tree with respect to a fractionally 2-edge-connected graph. The first algorithm finds (constant over k)-thin trees with respect to a k-edge-connected graph, but here it is with respect to a fractionally 2-edge-connected graph. So the first will give us a constant factor approximation for ATSP, and the second will give us an order of log n over log log n approximation. First I'll discuss the deterministic algorithm and then the randomized one. So okay. We're good. Next I will show you this constant factor approximation algorithm for finding thin trees in bounded genus graphs. Let me define what I mean by a bounded genus graph. An orientable surface of genus gamma is simply a sphere with gamma additional handles. For example, this surface is a surface of genus three. And we say that a graph has genus gamma if it can be embedded on a surface of genus gamma such that its edges don't cross each other. For example, the genus of a planar graph is zero, since it can be embedded on the sphere, and you'll find that the genus of the complete graph is of order n squared. As an example, if you have a city with many one-way streets and a few bridges or underpasses, its genus is bounded, or small, because you can easily embed it on a small genus surface by replacing each bridge or underpass with a handle. So this is our main theorem. We give a constant factor approximation algorithm for ATSP on metrics defined by weighted bounded genus graphs. Our approximation factor is of order square root of gamma times log of gamma, where gamma is the genus of the graph. So even if gamma is not a constant but is sufficiently small, we get a good approximation. In fact, if gamma is like order of log n, we get an order of square root of log n approximation algorithm. Okay. Trivially, our algorithm implies a constant integrality gap of the Held-Karp relaxation for bounded genus graphs, because it goes through thin trees. And it's a bit more general.
In fact, even if the input instance of ATSP does not have bounded genus, if we can find an optimal solution of the Held-Karp relaxation that can be embedded on a surface of bounded genus, then again we can use this algorithm. So we do not need the input graph to have bounded genus; we only need the fractional solution to have bounded genus. Here is the overview of the proof. I will mainly prove our algorithm for planar graphs, and I'll tell you some of the ideas for the extension to nonplanar graphs. For planar graphs, I'll first tell you an unsuccessful attempt, then our certificate for measuring the thinness of a tree, and then our thin tree selection algorithm. For that I will use the concept of threads, which I'll define. So let's start with the unsuccessful attempt. Suppose we have this graph and we're looking for a thin tree in it. This graph is 5-edge-connected. So what's a [indiscernible] certificate? Suppose we have this tree, in green edges. One very simple idea is to charge each tree edge to its parallel edges. You can prove that a cut contains this edge if and only if it contains its parallel edges too. So this implies that this tree is 1/5-thin. Okay. So let's see if we can generalize this. The answer is yes, but it will not work for every planar graph. Suppose we have this graph, and consider this cut, which has four edges. Because I'm looking for a spanning tree, I need to select at least one of the edges of this cut. But none of the edges of this cut has any parallel edges, so I cannot use the previous idea. Instead, what I'm going to do is first select these green edges. In the first step I will not have any problem: I can select these green edges and charge them to their parallel edges. So an idea might be: after we select these edges, what if we contract them and try to solve the problem recursively? That seems to work.
So let's try to do that. If we do that, we get this graph. Again, we can select these green edges, charge them to their parallel edges, and then contract them, and finally select this edge. If you look, you'll find that these edges make a spanning tree. Why? Because each time we contract a set of parallel edges, we select one of them as an edge of our tree, so the selected subgraph will be spanning. You might expect the final tree to be 1/4-thin, because each time we charge a tree edge to its parallel edges and it has three parallel edges. Unfortunately, that's not the case. This is the final graph, and this is the tree I selected. If you consider this cut, our tree has three edges in this cut, but the size of the cut is 7. So at best it's 3/7-thin. And why did that happen? Consider this edge, which was selected in the last step of our algorithm. We charged this edge to these three edges. But this cut contains only this edge; none of the edges we charged it to is in the cut. So our charging method works only in the first step; once we contract edges, it no longer works. But in the rest of this proof I will show you that the same algorithm works with a bit of modification. Okay. So let's get to our certificate. Let me tell you how we are going to measure the thinness of our tree. We'll use a simple observation about planar graphs: in a planar graph, the dual of any minimal cut is a cycle, and vice versa, the dual of a cycle in the dual graph is a minimal cut in the original graph. For example, in this graph the dual of this cut in blue is this red cycle. So if I want to prove the thinness of my tree, all I need to do is measure its thinness on the minimal cuts, and for that, all I need to do is measure its thinness on the cycles of the dual graph.
If my tree has a fractionally small number of edges in each cycle of the dual graph, it will be thin. So how can I do that? Suppose that I can select a tree where the pairwise distance of its edges in the dual graph is at least d. This in fact implies 1/d thinness. Why is that true? Because our tree can have at most a 1/d fraction of the edges of each cycle in the dual graph. For example, suppose that this is a cycle in the dual graph and these are the edges of the tree. In the worst case my tree has at most two edges in the cycle.

>>: [indiscernible].

>> Shayan Oveis Gharan: The distance is the length of the shortest path between the edges of the tree, measured in the dual graph.

>>: Between the edges or?

>> Shayan Oveis Gharan: Between the edges in the dual. I'm considering my tree and looking at its edges in the dual graph. If they're far apart, at distance d from each other, my tree will have at most a 1/d fraction of the edges of any cycle, as you see in this example. And because it has at most a 1/d fraction of each cycle, it will have at most a 1/d fraction of each cut of the original graph. So all I need to do is find a set of edges that are far apart in the dual and that also make a connected spanning subgraph in the original graph. Let's see that it's true in this example. Remember I showed that this tree is 1/5-thin. Let's see our thinness certificate. The dual is plotted in red here. The selected edges have distance 5 in the dual graph, so this certifies that the thinness of our tree is 1/5. Next I will tell you our thin tree selection algorithm: how we can find these far apart edges. For that I will use threads. What is a thread? A thread is a path, you may think of it as a long path, all of whose internal vertices have degree 2. If you look, you'll find that the dual of a thread is a set of parallel edges.
Suppose that I have a thread like this one, and this edge, its middle edge, is the only edge of this thread that I've selected as an edge of my thin tree. Then no matter what the other edges of my thin tree are, the distance of this edge from the rest of them will be at least d over 2, where d is the length of the thread. Suppose the length of the thread is 5 here. Then the distance of this edge from the rest of the edges will be at least 5 over 2. So what would the algorithm be? Each time, find a thread in the dual graph, pick its middle edge as an edge of our thin tree, and then delete the thread, and do this recursively. And that's exactly our algorithm: iteratively find long threads in the dual, add the middle edge of each to our thin tree, and delete the thread. Note that, interestingly, the algorithm works with the dual graph, but we'll show that it selects a thin tree in the original graph. So let's go to the proof. First of all, I need to show that the selected edges are far apart. This is true by the definition of a thread, as I said before. I also need to prove that the selected edges make a connected spanning subgraph. Why is that true? The reason is simple. The dual of a thread is a set of parallel edges. So when I'm deleting the thread edges in the dual, I'm in fact contracting these parallel edges in the original graph. If you simulate our algorithm in the original graph, each time you take a set of parallel edges, select one of them as an edge of the thin tree, and then contract them, and you do it again. This is the algorithm I described before, but this time I pick the edge of my thin tree smartly. And, trivially, the set of selected edges makes a connected spanning subgraph, as before. It turns out that if you select smartly like this, it gives you a thin tree.
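The basic step of the selection algorithm, finding a thread and taking its middle edge, can be sketched as follows. This is only an illustration under simplifying assumptions that are not from the talk: the graph is a simple graph given as adjacency lists (a real planar dual is usually a multigraph), no connected component is a bare cycle, and the names `find_threads` and `middle_edge` are hypothetical.

```python
def find_threads(adj):
    """Return maximal threads as vertex lists; all internal vertices have degree 2."""
    threads = []
    seen = set()
    for start in adj:
        if len(adj[start]) != 2 or start in seen:
            continue
        seen.add(start)
        path = [start]
        # Walk away from `start` in both directions until a vertex of degree != 2.
        for side, neighbour in enumerate(adj[start]):
            prev, cur = start, neighbour
            while len(adj[cur]) == 2 and cur not in seen:
                seen.add(cur)
                if side == 0:
                    path.insert(0, cur)
                else:
                    path.append(cur)
                a, b = adj[cur]
                prev, cur = cur, (b if a == prev else a)
            # `cur` is now an endpoint of the thread.
            if side == 0:
                path.insert(0, cur)
            else:
                path.append(cur)
        threads.append(path)
    return threads

def middle_edge(path):
    """Return the middle edge of a thread given as a vertex list."""
    edges = list(zip(path, path[1:]))
    return edges[(len(edges) - 1) // 2]

# Hypothetical graph: hubs A and B joined by a long path, a short path, and an edge.
adj = {
    "A": ["p1", "q1", "B"],
    "p1": ["A", "p2"],
    "p2": ["p1", "p3"],
    "p3": ["p2", "B"],
    "q1": ["A", "B"],
    "B": ["p3", "q1", "A"],
}
threads = find_threads(adj)
longest = max(threads, key=len)
chosen = middle_edge(longest)
```

The full algorithm from the talk would run this on the dual graph, delete the thread after picking its middle edge, and repeat; that loop and the planar dual construction are omitted here.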
Also, there is a simpler proof, which says this algorithm selects at least one edge from every cycle of the dual graph. The proof is easy: because each time you pick a thread, and all the internal vertices of a thread have degree two, the algorithm must select at least one edge of each cycle of the dual graph. Now, because the dual of any minimal cut is a cycle, our algorithm selects at least one edge from every cut. Okay. Great. So the only thing I haven't proved yet is the existence of these threads, and it turns out that's really easy. You can prove that a planar graph of girth g contains a thread of length g over 5. If you do the calculations, this implies that our tree will be order of 1 over g-star thin, where g-star is the girth of the dual. Remember, our algorithm was working with the dual graph, finding threads in the dual, so the tree is order of 1 over g-star thin. So the only thing I need to show is that g-star is large, and this is in fact the case. For a planar graph, high edge connectivity implies high girth of the dual graph, because the dual of any minimal cut is a cycle and the dual of any cycle is a minimal cut. Because the minimal cuts have size at least k, the girth of the dual is at least k. So g-star is at least k and we get an order of 1 over k thin tree. In fact it is 10 over k for planar graphs. So, yes, this is our thin tree selection algorithm. As for extending this idea to nonplanar graphs, I will not tell you much about it; I will just tell you the main idea. For nonplanar graphs the dual can have very small cycles. It's not the case that if our graph is highly connected then the girth of the dual is large. In fact, the dual can contain very small cycles. But fortunately we can get rid of these small cycles, and the idea is that we can somehow delete their edges. The main idea of the algorithm is that we consider the small cycles and delete their edges from our original graph.
If we do that, because they're small, they will not disturb the connectivity of our graph much, and we can convert our graph to a planar graph and everything will be fine. But there are some tricks. Okay. So these are some of the ideas of our constant factor approximation algorithm. Next I will focus on our order of log n over log log n approximation algorithm. Are there any questions? Okay. So let's move on. Again, remember that in this case we are giving a randomized algorithm. It works for general metric ATSP. It takes a fractionally 2-edge-connected graph as input, and it looks for an order of log n over log log n thin tree. Let's start with a simple observation. Suppose that this vector y is the vector of our fractionally 2-edge-connected graph. If you look, you will find out that this vector y is in fact not too far from a tree itself. If we multiply it by 1 minus 1 over n, what we get is a fractional spanning tree, that is, a vector that lies in the spanning tree polytope of our graph. Why is that true? Because y is fractionally 2-edge-connected, if we multiply it by 1 minus 1 over n, the fractional size of any cut is still at least 1. Moreover, if you do the calculations, you'll find that the sum of all the fractions of the edges in y is n; this follows from the properties of the Held-Karp relaxation. So when you multiply by 1 minus 1 over n, the sum of the fractions of the edges will be n minus 1, and it will be a fractional spanning tree. So all we need to do is take the fractional spanning tree z and find a spanning tree T that is thin with respect to z, I mean, thin with respect to z across every cut. Note that previously we were looking for a tree that is thin with respect to y. But because z is equal to y up to a small factor, if we find a tree that's thin with respect to z, everything is fine. So let me start with an unsuccessful attempt.
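The scaling argument above can be spelled out as a short calculation. This is a sketch under the assumption that $y$ is the undirected version of a Held-Karp solution, so that $y(\delta(v)) = 2$ for every vertex and $y(\delta(S)) \ge 2$ for every cut; $E(S)$ denotes the edges with both endpoints in $S$, a symbol not used in the talk.

```latex
\begin{aligned}
z &= \Bigl(1 - \tfrac{1}{n}\Bigr)\, y, \\
y(E) &= \tfrac{1}{2} \sum_{v \in V} y(\delta(v)) = n
  \quad\Longrightarrow\quad z(E) = n - 1, \\
y(E(S)) &= \tfrac{1}{2}\bigl(2|S| - y(\delta(S))\bigr) \le |S| - 1
  \quad\Longrightarrow\quad z(E(S)) \le |S| - 1
  \qquad \forall\, \emptyset \ne S \subsetneq V, \\
z(\delta(S)) &\ge 2\Bigl(1 - \tfrac{1}{n}\Bigr) \ge 1 \qquad (n \ge 2).
\end{aligned}
```

The first two consequences are the standard constraints of the spanning tree polytope, which is why $z$ can be treated as a fractional spanning tree.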
Suppose that I round each edge independently at random, each edge with its own probability: if the edge has a fraction of z_e, I select it with probability z_e. If I do that, the expected number of selected edges across any cut is equal to the size of that cut. Suppose, for example, this is one of the cuts. What I need is a subgraph that is spanning, so I need this selection algorithm to select at least one edge across every cut. But if you want that, you need the expected size of the cuts to be at least order of log n. Otherwise there will be some cuts none of whose edges are selected by the algorithm. But if you have this, the thinness of our tree cannot be better than order of log n. So that gives log n thinness. What we're going to do instead of just independently rounding the edges is to select a tree T from some specific distribution on spanning trees that preserves the marginal probabilities. That is, the probability of selecting an edge e is equal to z of e, where z is our vector. If you have such a distribution, then again the expected number of edges of our tree in any cut is equal to the size of that cut. But here we have something more: because we are sampling a spanning tree, it has at least one edge in every cut. It is connected, so we don't have any problem there. The only thing we need to prove is concentration for this equality, and only in the right tail: we need to prove that the number of edges of our tree is not too large in any cut. Previously we had to prove concentration on the left side, too. Now, because our tree is spanning, it has at least one edge in each cut, so we do not need concentration in the left tail; we need concentration only in the right tail, and we have 2 to the n of these inequalities.
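Why independent rounding fails on small cuts can be seen with a one-line calculation: the probability that no edge of a cut is selected is the product of (1 - z_e) over the edges of the cut. A minimal sketch, with the function names `prob_cut_missed` and `expected_cut_size` and the sample cuts chosen purely for illustration:

```python
from fractions import Fraction
from math import prod

def expected_cut_size(cut_values):
    """Expected number of selected edges when edge e is kept with probability z_e."""
    return sum(cut_values)

def prob_cut_missed(cut_values):
    """Probability that independent rounding selects no edge of the cut."""
    return prod(1 - z for z in cut_values)

# Hypothetical cuts: two edges of value 1/2, and four edges of value 1/2.
small_cut = [Fraction(1, 2)] * 2
larger_cut = [Fraction(1, 2)] * 4
missed_small = prob_cut_missed(small_cut)
missed_larger = prob_cut_missed(larger_cut)
```

A cut of fractional size 1 is missed a quarter of the time here, so the result is often disconnected; sampling a spanning tree with the same marginals removes this failure mode entirely, which is the point made in the talk.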
So this is why we can get a better approximation: we only want concentration in the right tail, and the Chernoff bound for the right tail gives us a better bound compared to the Chernoff bound for the left tail. So let's see how we can get concentration. Previously, because we were sampling the edges independently at random, we had independence, and we could use Chernoff-type bounds. But here we cannot, because we are sampling a tree and the edges are not selected independently. So we need to use negative correlation. If you do not know the definition of negative correlation, we say that a sampling procedure has negative correlation if the selection of an edge into our tree, for example, can only decrease the probability of selecting other edges. In particular, for any set F of edges, the probability that all of these edges are selected in our tree is less than or equal to the product of their marginals. So remember that if you had independence, then you would have equality here. But here, because you have negative correlation, you get an inequality. In fact this is all we need to have Chernoff-type bounds. If we have negative correlation, then we can prove the general Chernoff-type bounds. And this is the idea. So currently there are three possible approaches to sample our random spanning tree while having negative correlation and while having the probability of selecting each edge equal to its marginal. The first is the maximum entropy distribution used in our paper. It was previously defined by Amin and Arash [phonetic] for selecting a random matching. There's also the pipage rounding idea from the work of Calinescu, Chekuri, Pál, and Vondrák.
And in fact they did not prove exactly this negative correlation, but they proposed a randomized algorithm, and this negative correlation can be derived from their proofs. Recently, after our paper, Chekuri, Vondrák, and Zenklusen found a new approach called randomized swap rounding. This approach is purely combinatorial, and its running time is better than the others. In fact, it's more general. I won't show you how they generalize it. However, all of these algorithms give us negative correlation and therefore concentration bounds. So suppose that we have negative correlation and these concentration bounds. Let's see what our Chernoff bound will be. In fact, the probability that the number of edges of our tree in a cut is more than log n over log log n times the size of the cut is less than or equal to n to the minus a constant times the size of that cut. And this is in fact where the log n over log log n comes from. Because these Chernoff-type bounds are for the right tail, if you want a deviation of log n over log log n, the probability will go to n to the minus a constant. So by this inequality we have concentration for one cut. What we need is concentration for all the 2 to the n cuts. For that we'll use Karger's bound. Karger proved that in any graph there are at most n to the 2K cuts of size at most K times the minimum cut. Suppose we have a graph, a weighted graph. The number of cuts of size at most K times the minimum cut is at most n to the 2K. We'll use this bound through a union bound argument. As I said before, because we have a fractionally 2-edge-connected graph, its minimum cut is roughly one or two. So the number of cuts of size, say, between K minus 1 and K is at most n to the K. Now multiply this n to the K by this n to the minus constant times K from the tail bound.
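The union bound being set up here can be written out as a short worked calculation. This is a sketch under assumptions that are not stated exactly in the talk: $c$ stands for the unspecified constant in the tail bound, $\beta = O(\log n / \log\log n)$ is the thinness factor, the minimum cut of $z$ is taken to be roughly 2, and cuts are grouped by size into the ranges $(k-1, k]$.

```latex
% Right-tail bound for a single cut S (c is the unspecified constant from the talk):
\[
\Pr\bigl[\, |T \cap \delta(S)| > \beta \, z(\delta(S)) \,\bigr] \le n^{-c\, z(\delta(S))} .
\]
% Karger's bound with minimum cut roughly 2: a cut of size at most k is at most
% k/2 times the minimum cut, so there are at most n^{2 (k/2)} = n^{k} such cuts.
\[
\#\{\, S : z(\delta(S)) \le k \,\} \le n^{k} .
\]
% Union bound over the size ranges (k-1, k], each cut in the range has size > k-1:
\[
\Pr[\text{some cut is not } \beta\text{-thin}]
  \;\le\; \sum_{k \ge 2} n^{k} \cdot n^{-c (k-1)}
  \;=\; \sum_{k \ge 2} n^{\,c - (c-1) k}
  \;\le\; 2\, n^{\,2 - c}
  \qquad (n \ge 2,\ c \ge 2).
\]
```

The last step is a geometric series with first term $n^{2-c}$ and ratio $n^{-(c-1)} \le 1/2$, so any constant $c > 2$ makes the failure probability vanish as $n$ grows.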
These two will cancel out. It turns out you can make this constant c large enough just by placing some factor in front of the log n over log log n. So you can easily prove that with high probability our tree will be log n over log log n thin in all of the cuts of size about L, for each L. So if you use a union bound over all the cuts, you'll find that our tree will be log n over log log n thin with high probability. So let me briefly tell you where this log n over log log n comes from. I mean, let me show you an example where we cannot do better than log n over log log n. The example is simple. Consider the complete graph on n vertices and suppose the fraction of each edge is 1 over n. In this graph, suppose that we're not looking to prove anything for the left tail; we're only looking to prove something for the right tail. Suppose that we select the edges uniformly and independently at random, but it turns out this will be something like [indiscernible]. In fact, for any vertex, because the fraction of each edge is 1 over n, the size of the cut around it is 1. So the probability that a selected edge of our tree is incident to this vertex is about 1 over n. So if you think about it, we select about n edges, n minus 1 edges, and each time there is a 1 over n probability that the edge is incident to one specific vertex. So it is like a balls and bins problem where vertices are bins and edges are balls, and there's a 1 over n probability that an edge is incident to a specific vertex. It is known that in balls and bins, with n balls and n bins, with high probability there's a bin that contains about log n over log log n balls. So in fact, if you select our edges uniformly and independently at random, there will exist a vertex with degree about log n over log log n with high probability.
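The balls and bins picture above is easy to try numerically. The following is a minimal sketch, not part of the talk: it throws n balls into n bins uniformly and independently and compares the fullest bin with log n over log log n. The function names `bin_loads`, `max_load`, and `reference_scale` are made up for this illustration, natural logarithms are an arbitrary choice, and the comparison only shows the growth rate, not the constant in front of it.

```python
import math
import random
from collections import Counter


def bin_loads(n, rng):
    """Throw n balls into n bins uniformly and independently; return ball counts per bin."""
    return Counter(rng.randrange(n) for _ in range(n))


def max_load(n, rng):
    """Number of balls in the fullest bin (hypothetical helper for this sketch)."""
    return max(bin_loads(n, rng).values())


def reference_scale(n):
    """log n / log log n with natural logs; only meaningful for n >= 3."""
    return math.log(n) / math.log(math.log(n))


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs agree
    for n in (100, 1000, 10000):
        trials = [max_load(n, rng) for _ in range(10)]
        average = sum(trials) / len(trials)
        print(f"n={n}: average max load {average:.1f}, "
              f"log n / log log n = {reference_scale(n):.2f}")
```

In the analogy from the talk, bins are vertices and balls are selected edges, so the fullest bin plays the role of the vertex whose degree cut is least thin.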
In fact, even if you only consider the cuts that separate a single vertex from the rest of the graph, you cannot do better than log n over log log n. In fact, these are the worst cuts in this example. So there is a generalization by Chekuri, Vondrák, and Zenklusen. They generalize the idea to any matroid, not just spanning trees. Suppose that we have a fractional solution in the matroid polytope, and we're looking to sample a base of that matroid while preserving the marginal probabilities. They prove that we can do that while getting negative correlation, and therefore we have Chernoff-type bounds for any linear combination of the variables. So in fact their algorithm works for various problems, and they derive log n over log log n approximations for a number of problems. But you still cannot do better than log n over log log n, because of the right tail of the Chernoff bounds. As I said before, their algorithm is purely combinatorial, and their proof is much shorter than ours. You can find it on Vondrák's home page. Okay. So any questions? Let's conclude. So here we first considered the problem in the directed domain. Then we tried to simplify it: we converted it to another problem in the undirected domain. Although the problem in the undirected domain seems to be much harder, as you see we have found good results for it. So I mean, trying to get rid of the directions seems to be a very good idea. Also, we've shown some interesting relations between ATSP and graph embedding problems, dual graphs, planar graphs and these sorts of things. And, finally, we proved that the integrality gap of ATSP is constant for shortest path metrics defined on bounded genus graphs. The interesting point is that the Charikar, Goemans, and Karloff integrality gap example is planar. So in fact they proved that the integrality gap of ATSP on planar graphs is at least two.
Therefore, at least for planar graphs we know that the integrality gap is constant. It's bounded below by 2 and above by 22, as we prove. For nonplanar graphs, if they have bounded genus, still everything is okay. But if they do not have bounded genus, so for general metrics, it is still open whether the integrality gap is constant or not. So let me tell you some open problems for future directions. First of all, is it possible to generalize our ideas to find constant-thin trees for general metrics, not only for bounded genus graphs? Moreover, is it possible to get a PTAS for ATSP on planar graphs? We have a PTAS for symmetric TSP on planar graphs, but it is hard to find a PTAS for problems in the directed domain. There are a lot of difficulties. But it's a nice problem to work on. Moreover, here we proved that Goddyn's conjecture, that any k-edge-connected graph contains a constant over k thin tree, implies a constant integrality gap for ATSP. Also, there is a conjecture by Jaeger on nowhere-zero flows. I will not tell you the details, but this is a well-known conjecture on nowhere-zero flows. It says that any 4k-edge-connected graph has a nowhere-zero 2 plus 1 over k flow. You can search for it on the Web. It turns out that Goddyn's conjecture implies some version of the Jaeger conjecture which has not been proved. This suggests that Goddyn's conjecture is a very hard problem. Anyway, these are the directions we know, but it's open whether any other direction is true, whether, for example, we can prove Goddyn's conjecture from a constant integrality gap of ATSP. If that's true, at least we know that although this thin tree problem is a hard problem, it is something like ATSP, and we lose nothing by reducing our problem to thin trees. Also, some of the other directions between these problems are very interesting.
>>: [indiscernible] approach at the most you want to -- >> Shayan Oveis Gharan: Yeah, there exist nowhere-zero 6-flows for 2-edge-connected graphs. But the Jaeger conjecture asks: if we increase the connectivity to some very large number, can we get 2 plus epsilon for a very small epsilon or not? A nowhere-zero 2 plus epsilon flow. >>: Epsilon? >> Shayan Oveis Gharan: 2 plus epsilon. >>: What does epsilon mean? >> Shayan Oveis Gharan: Pick any epsilon. Suppose you pick any epsilon. And I should show that. >>: No [indiscernible] integral. >> Shayan Oveis Gharan: You can define it for fractional graphs, too, by allowing the fraction of each edge to be between 1 and 1 plus epsilon. They are similar. This Jaeger conjecture asks if you can find -- so, for example, if the graph has a nowhere-zero 2 plus epsilon flow, it is very nearly an Eulerian graph. >>: There's a much bigger conjecture. So Tutte's conjecture is that if there is a four-connected graph, you have a three -- >> Shayan Oveis Gharan: This Tutte conjecture is a special case of the Jaeger conjecture, for k equals 1. >>: But you don't have to do epsilon. There's still a conjecture -- I let you pick the constant with the [indiscernible]; can you show that in a thousand-connected graph you can find a nowhere-zero three-flow? >> Shayan Oveis Gharan: This is still open. >>: So you don't even have to talk about epsilon or anything. >> Shayan Oveis Gharan: But at least for planar graphs you can do that, or for bounded genus graphs. If the connectivity is sufficiently large we can get 2 plus epsilon. >>: Planar graph. >> Shayan Oveis Gharan: Our algorithm implies this. Also, there are various works, purely on the Jaeger conjecture, that prove that the Jaeger conjecture is true for bounded genus graphs. But our algorithm is a bit more general because it proves the Goddyn conjecture for these graphs, which is more general. Okay. Thank you. [applause]