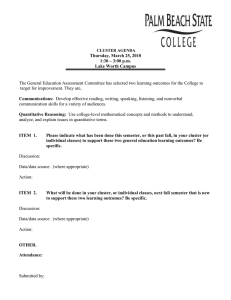

Future of Scientific Computing

Marvin Theimer
Software Architect
Windows Server High Performance Computing Group
Microsoft Corporation

Supercomputing Goes Personal

| | 1991 | 1998 | 2005 |
|---|---|---|---|
| System | Cray Y-MP C916 | Sun HPC10000 | Shuttle @ NewEgg.com |
| Architecture | 16 x Vector, 4GB, Bus | 24 x 333MHz UltraSPARC II, 24GB, SBus | 4 x 2.2GHz x64, 4GB, GigE |
| OS | UNICOS | Solaris 2.5.1 | Windows Server 2003 SP1 |
| GFlops | ~10 | ~10 | ~10 |
| Top500 # | 1 | 500 | N/A |
| Price | $40,000,000 | $1,000,000 (40x drop) | < $4,000 (250x drop) |
| Customers | Government Labs | Large Enterprises | Every Engineer & Scientist |
| Applications | Classified, Climate, Physics Research | Manufacturing, Energy, Finance, Telecom | Bioinformatics, Materials Sciences, Digital Media |

Molecular Biologist’s Workstation

- High-end workstation with internal cluster nodes
- 8 Opteron, 20 Gflops workstation/cluster for O($10,000)
- Turn-key system purchased from a standard OEM
- Pre-installed set of bioinformatics applications
- Run interactive workstation applications that offload computationally intensive tasks to attached cluster nodes
- Run workflows consisting of visualization and analysis programs that process the outputs of simulations running on attached cluster nodes

The Future: Supercomputing on a Chip

- IBM Cell processor
  - 256 Gflops today
  - 4 node personal cluster => 1 Tflops
  - 32 node personal cluster => Top100
- Intel many-core chips
  - “100’s of cores on a chip in 2015” (Justin Rattner, Intel)
  - “4 cores”/Tflop => 25 Tflops/chip

The Continuing Trend Towards Decentralized, Dedicated Resources

- Grids of personal & departmental clusters
- Personal workstations & departmental servers
- Minicomputers
- Mainframes

The Evolving Nature of HPC

(Columns: Scenario, Focus)

Departmental Cluster
- Scenario (conventional):
  - IT owns large clusters due to complexity and allocates resources on a per-job basis
  - Users submit batch jobs via scripts
  - In-house and ISV apps, many based on MPI
- Focus:
  - Scheduling multiple users’ applications onto scarce compute cycles
  - Cluster systems administration
  - Manual, batch execution
- Diagram label: IT Mgr
Personal/Workgroup Cluster
- Scenario (emerging):
  - Clusters are pre-packaged OEM appliances, purchased and managed by end-users
  - Desktop HPC applications transparently and interactively make use of cluster resources
- Focus:
  - Desktop development tools integration
  - Interactive applications
  - Compute grids: distributed systems management
- Diagram label: Interactive Computation and Visualization

HPC Application Integration
- Scenario (future):
  - Multiple simulations and data sources integrated into a seamless application workflow
  - Network topology and latency awareness for optimal distribution of computation
  - Structured data storage with rich meta-data
  - Applications and data potentially span organizational boundaries
- Focus:
  - Data-centric, “whole-system” workflows
  - Data grids: distributed data management
- Diagram label: SQL

Exploding Data Sizes

- Experimental data: TBs to PBs
- Modeling data:
  - Today: 10’s to 100’s of GB per simulation is the common case
    - Applications mostly run in isolation
  - Tomorrow: 10’s to 100’s of TBs, all of it to be archived
    - Whole-system modeling and multi-application workflows

How Do You Move A Terabyte?*

| Context | Speed (Mbps) | Rent ($/month) | $/Mbps | $/TB Sent | Time/TB |
|---|---|---|---|---|---|
| Home phone | 0.04 | 40 | 1,000 | 3,086 | 6 years |
| Home DSL | 0.6 | 70 | 117 | 360 | 5 months |
| T1 | 1.5 | 1,200 | 800 | 2,469 | 2 months |
| T3 | 43 | 28,000 | 651 | 2,010 | 2 days |
| OC3 | 155 | 49,000 | 316 | 976 | 14 hours |
| OC 192 | 9600 | 1,920,000 | 200 | 617 | 14 minutes |
| FedEx | 100 | | | 50 | 24 hours |
| LAN setting: 100 Mbps | 100 | | | | 1 day |
| LAN setting: Gbps | 1000 | | | | 2.2 hours |
| LAN setting: 10 Gbps | 10000 | | | | 13 minutes |

*Material courtesy of Jim Gray

Anticipated HPC Grid Topology

- Islands of high connectivity
  - Simulations done on personal & workgroup clusters
  - Data stored in data warehouses
  - Data analysis best done inside the data warehouse
- Wide-area data sharing/replication via FedEx?
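The Time/TB and $/TB Sent figures in the "How Do You Move A Terabyte?" table follow from simple arithmetic on the link speed and monthly rent. The following is a minimal Python sketch of that arithmetic, not part of the original slides. It assumes 1 TB = 10^12 bytes, a 30-day billing month, and a link used at its full rated speed; the function and constant names are illustrative.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: 30-day billing month
BITS_PER_TB = 8 * 10**12            # assumption: 1 TB = 10^12 bytes


def seconds_per_tb(speed_mbps: float) -> float:
    """Time to push one terabyte through a link running at its rated speed."""
    return BITS_PER_TB / (speed_mbps * 1e6)


def dollars_per_tb(speed_mbps: float, rent_per_month: float) -> float:
    """Share of the monthly rent used up while one terabyte is in transit."""
    return rent_per_month * seconds_per_tb(speed_mbps) / SECONDS_PER_MONTH


# A few rows from the table: (context, speed in Mbps, rent in $/month).
links = [("Home phone", 0.04, 40), ("T1", 1.5, 1200), ("OC 192", 9600, 1_920_000)]
for name, mbps, rent in links:
    hours = seconds_per_tb(mbps) / 3600
    print(f"{name}: {hours:,.1f} hours/TB, ${dollars_per_tb(mbps, rent):,.0f}/TB")
```

Under these assumptions the sketch reproduces the table's leased-line figures, for example about $3,086 per terabyte over a home phone line and about 2.2 hours per terabyte on a Gbps LAN.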
(Diagram labels: Data warehouse, Workgroup cluster, Personal cluster)

Data Analysis and Mining

- Traditional approach:
  - Keep data in flat files
  - Write C or Perl programs to compute specific analysis queries
- Problems with this approach:
  - Imposes significant development times
  - Scientists must reinvent DB indexing and query technologies
  - Have to copy the data from the file system to the compute cluster for every query
- Results from the astronomy community:
  - Relational databases can yield speed-ups of one to two orders of magnitude
  - SQL + application/domain-specific stored procedures greatly simplify creation of analysis queries

Is That the End of the Story?

(Diagram labels: Relational Data warehouse, Workgroup cluster, Personal cluster)

Too Much Complexity

- 2004 NAS supercomputing report: O(35) new computational scientists graduated per year
- Parallel application development:
  - Chip-level, node-level, cluster-level, LAN grid-level, WAN grid-level parallelism
  - OpenMP, MPI, HPF, Global Arrays, …
  - Component architectures
  - Performance configuration & tuning
  - Debugging/profiling/tracing/analysis
- Domain science
- Distributed systems issues:
  - Security
  - System management
  - Directory services
  - Storage management
- Digital experimentation:
  - Experiment management
  - Provenance (data & workflows)
  - Version management (data & workflows)

(Diagram labels: Relational Data warehouse, Workgroup cluster, Personal cluster)

Separating the Domain Scientist from the Computer Scientist

- Domain scientist: abstract workflow
  - (Interactive) scientific workflow, integrated with collaboration-enhanced office automation tools
- Computational scientist: abstract concurrency
  - Parallel domain application development
- Computer scientist: concrete workflow and concrete concurrency
  - Parallel/distributed file systems, relational data warehouses, dynamic systems management, Web Services & HPC grids

Example:
- Write scientific paper (Word)
- Collaborate with co-authors (NetMeeting)
- Record experiment data (Excel)
- Individual experiment run (Workflow orchestrator)
- Share paper with co-authors (SharePoint)
- Analyze data (SQL Server)

Scientific Information Worker: Past and Future

Past
- Buy lab equipment
- Keep lab notebook
- Run experiments by hand
- Assemble & analyze data (using stat pkg)
- Collaborate by phone/email; write up results with LaTeX
- Metaphor: Physical experimentation
  - “Do it yourself”
  - Lots of disparate systems/pieces

Future
- Buy hardware & software
- Automatic provenance
- Workflow with 3rd party domain packages
- Excel & Access/SQL Server
- Office tool suite with collaboration support
- Metaphor: Digital experimentation
  - Turn-key desktop supercomputer
  - Single integrated system