>> Michael Freedman: So very recently Microsoft Research created... QuArC group, which Krysta Svore leads. And we're very...

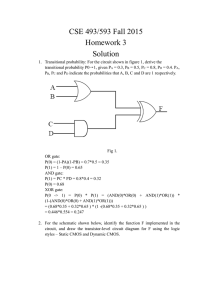

advertisement

>> Michael Freedman: So very recently Microsoft Research created a new group, the QuArC group, which Krysta Svore leads. And we're very pleased to have her here today to tell us about some of the research done in that group by herself and her collaborators. Thank you. >> Krysta Svore: Thanks, Mike. Okay. So yesterday we had a very brief tutorial on quantum computing. A big part that we didn't discuss is the need to break down a unitary into fault-tolerant pieces, that is, operations that we can actually perform on a quantum device. This comes up a lot in the quantum chemistry algorithms, where we have controlled phase rotations that ultimately we need to decompose. So today I want to spend just 25 or 30 minutes talking about this decomposition process and its cost. Ken, in his talk yesterday, showed a slide about this with the stars and the different methods: the cost of decomposing a single-qubit unitary. Today we'll dig a little deeper into that. I'm going to present a new method, really recent work that I did this summer with my intern Guillaume Duclos-Cianci, who is David Poulin's student. So this is going to be about how to implement an arbitrary single-qubit rotation. The problem we're exploring is this: we have some single-qubit unitary U, and we need to decompose it into fault-tolerant pieces, because almost any quantum device is going to have to use some form of error correction. (Hopefully topological devices won't require error correction, but almost any other device will.) As Ken mentioned yesterday, we know how to do the Clifford gates within a code space, and also the T gate. So we want to decompose into a basis that we know how to protect. And we want to do this decomposition in a manner that minimizes the number of ancilla qubits we need and, of course, minimizes the length of the sequence we produce.
So we want a short, small-depth circuit that replaces the original unitary. And of course we also want a small-width circuit, so there are some tradeoffs here. In Solovay-Kitaev decomposition, one basis we can use is the H, T basis: the Hadamard gate plus the pi over 8 gate, which is a pi over 4 rotation about the Z axis. The cost I'm going to use for these protocols is not the length of the decomposition sequence we produce; I'm actually going to count the number of T gates. That's because the T gate is a non-Clifford gate. It's not free in our codes: it costs a lot more to implement a T gate in practice than to implement the Clifford gates, okay? Typically we have to do something like magic state distillation, and it could cost 15, or 15 squared, or 15 cubed qubits to produce a single state for the T gate. So the resource is the T gate. With Solovay-Kitaev, this is work that I did with Alex Bocharov, who is here in the room: we came up with a method to improve Solovay-Kitaev decomposition for the H, T basis, and these are the results. First of all, I should say that with Solovay-Kitaev, the length of the sequence, or the number of T gates you get out, scales as log to the C of one over epsilon. The best C we had seen in practice, up to a few months ago, is typically around four, and the constants there can be large. In this work with Alex, C comes down a little bit, but in practice we gained several orders of magnitude improvement in the length of the sequence, so you need, say, thousands and thousands fewer T gates in the sequence. That's for the H, T basis, and it uses no additional qubits. That's worth noting, because in the protocol I'm going to tell you about, we are using some resource states.
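For readers who want to check the gate identities used throughout, here is a minimal NumPy sketch of the H, T basis (an illustration, not code from the talk). It assumes the convention Rz(phi) = exp(-i phi Z / 2). Under that convention T = e^(i pi/8) diag(e^(-i pi/8), e^(i pi/8)), which is a pi/4 rotation about Z up to a global phase and is also where the name "pi over 8 gate" comes from. The helper `equal_up_to_phase` is a hypothetical name introduced here.

```python
import numpy as np

# Single-qubit gates in the computational basis {|0>, |1>}.
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)   # Hadamard (Clifford)
S = np.diag([1, 1j])                            # phase gate (Clifford)
T = np.diag([1, np.exp(1j * np.pi / 4)])        # pi/8 gate (non-Clifford)


def rz(phi):
    """Z rotation, using the convention Rz(phi) = exp(-i * phi * Z / 2)."""
    return np.diag([np.exp(-1j * phi / 2), np.exp(1j * phi / 2)])


def equal_up_to_phase(a, b, tol=1e-12):
    """True if unitaries a and b differ only by a global phase.

    For d x d unitaries, |tr(a^dagger b)| <= d, with equality exactly
    when b = e^{i alpha} a for some real alpha.
    """
    overlap = np.trace(a.conj().T @ b)
    return abs(abs(overlap) - a.shape[0]) < tol
```

With these definitions, `equal_up_to_phase(T, rz(np.pi / 4))` holds and `T @ T` equals `S`, so two T gates give a Clifford gate even though one T gate does not.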
But of course, to do all these T gates you need resource states as well, which is why we cost the T gate: the T count. So this is a plot very similar to what Ken showed yesterday, but plotted slightly differently. On the bottom we have the accuracy epsilon to which we want the sequence to approximate our original single-qubit unitary. On the vertical axis we have the T count, the resource cost we're looking at. Both axes are on log scales. The method with Alex that I just mentioned is this blue line here. This purple line is the Dawson-Nielsen implementation of Solovay-Kitaev, which has been the state of the art for many years, at least seven maybe, if you're not doing brute-force lookup. Brute-force lookup takes exponential time, so you can get something closer to this if you allow exponential time. But if you only have polynomial time, the best was this purple line. The method Alex and I worked on brings the resource count down by several orders of magnitude, and at the same time, for a given budget (say I can only afford a thousand T gates), you get much better precision in the approximation to the unitary. These other methods, this blue and this green one, are two of the methods Ken mentioned yesterday. This is Cody Jones' work and others'. One uses a technique called phase kickback, which is like phase estimation, and the other is the programmable ancilla rotation technique. So you can see there are tradeoffs in which curve you'll want to use, depending on what your accuracy needs to be. What I'm going to talk about today is this red line, which is the work Guillaume and I have done, and I'll show you how it works. >>: [inaudible].
>> Krysta Svore: Which one? >>: Well, the -- >> Krysta Svore: The red or the blue? >>: Well, I think it's pale lavender. >> Krysta Svore: Oh, this one [laughter]. >>: Yeah. >> Krysta Svore: So for this one, you can do a brute-force search. You can create a lookup table of the minimal-T-count sequences, up to a certain length. So we produce a database of a certain size containing all of the minimal-T-count circuits. Then we use the Dawson-Nielsen algorithm, which is commutator-based; the sequence length grows by a factor of five at each level of recursion. We plug our database into that algorithm, and that's where you get this improvement. And there are more tricks in there that we can -- >>: [inaudible] also be interesting to have on that slide is the theoretical best, which we know roughly. >> Krysta Svore: Sorry. It sits right here, actually. The theoretical best is C equal to one, and it follows pretty much where my laser pointer is going. Yeah. So the solution I'll talk about today is the red curve there, and we're going to use state distillation techniques to achieve the rotation. So we're not going to decompose into a long sequence; we're actually going to use resource states to produce the rotation we need. This trick has been used in the past. And of course we hope to use fewer resource states (or, if you want to think about it in terms of T count, a lower T count) than these Solovay-Kitaev-style methods. Whoops. Sorry. When I talk about accuracy here, for small angles the distance basically becomes the difference between the rotation angles, and we're talking about really small angles in a lot of cases. So how do we implement, in general, a Z rotation using a resource state? This technique is not new. We did not come up with it; it's probably been known since the late '90s.
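The "C around four" figure can be traced to the structure of the Dawson-Nielsen recursion. Each level multiplies the sequence length by five, while the error improves roughly as eps_n ~ const * eps_{n-1}^(3/2), so the length scales as log^(ln 5 / ln 1.5)(1/eps), about log^3.97(1/eps). The sketch below only evaluates those two rates; the base accuracy `eps0` and prefactor `const` passed to the hypothetical helper `sk_levels` are illustration values, not figures from the talk.

```python
import math

# Dawson-Nielsen form of Solovay-Kitaev: level n builds
#   U_n = V_{n-1} W_{n-1} V_{n-1}^dagger W_{n-1}^dagger U_{n-1},
# i.e. five level-(n-1) sequences, so the length grows 5x per level,
# while the error shrinks roughly as eps_n ~ const * eps_{n-1} ** 1.5.
LENGTH_FACTOR = 5
ERROR_EXPONENT = 1.5


def sk_exponent():
    """Exponent c in length = O(log^c(1/eps)) implied by the two rates."""
    return math.log(LENGTH_FACTOR) / math.log(ERROR_EXPONENT)


def sk_levels(eps0, target_eps, const=1.0):
    """Recursion levels, and the length multiplier 5**levels, to reach target_eps.

    eps0 (accuracy of the base lookup table) and const (prefactor in the
    error recursion) are hypothetical inputs; convergence needs
    const * eps0 ** 0.5 < 1.
    """
    if const * eps0 ** 0.5 >= 1:
        raise ValueError("recursion does not converge for these parameters")
    eps, levels = eps0, 0
    while eps > target_eps:
        eps = const * eps ** ERROR_EXPONENT
        levels += 1
    return levels, LENGTH_FACTOR ** levels
```

For example, starting from a base accuracy of 0.1 with `const=1.0`, reaching 1e-10 takes six levels, so the base sequence is multiplied in length by 5^6 = 15625.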
This is what we use to implement a T gate, for example. Here we have a resource state, this Z of theta, which I'll define in a moment, and we have our state psi. We want to apply a rotation to psi, but we don't want to do it by applying the gate directly. We're going to do it by consuming a resource state. And we want to do it using only Clifford gates, these cheap gates, if you will: Clifford gates and measurement within the circuit itself. Okay? So the state psi comes in. We apply a controlled-NOT gate. (You can also use this to do an X rotation, but I'm going to go through the case of performing a Z rotation.) So you apply a controlled-NOT gate and then you measure. Depending on the measurement outcome, you'll either apply the angle or the negative of that angle. Here the resource state we're using is zero plus e to the i theta one, and the incoming state is some arbitrary single-qubit state. Now we can step through the circuit. We start in this state, then we apply the controlled-NOT gate, which results in this state here, and then we measure. When we measure, we get out either this state or this state, which is equivalent to applying theta or minus theta. So this is a probabilistic application of the rotation: we apply theta or minus theta, each with probability one half. >>: Why is [inaudible] this resource cheaper than applying [inaudible]? >> Krysta Svore: It depends on your device, on the fidelity. It's not cheaper if you have extremely high fidelity for applying this rotation, because then you don't really need error correction. Maybe your algorithm is only a couple of gates long; you don't need super high fidelity to make it through that circuit with a successful result, right?
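The circuit just described can be simulated directly with state vectors. The NumPy sketch below makes one wiring assumption that the talk leaves to the slides: the data qubit psi is the control of the CNOT, the resource (|0> + e^(i theta)|1>)/sqrt(2) is the target, and the resource is then measured in the Z basis. Under that assumption, outcome 0 leaves diag(1, e^(i theta)) psi and outcome 1 leaves diag(1, e^(-i theta)) psi up to a global phase, each with probability one half. Function names are hypothetical.

```python
import numpy as np


def rz_phase(theta):
    """diag(1, e^{i theta}): the Z rotation the gadget aims for, up to global phase."""
    return np.diag([1, np.exp(1j * theta)])


def z_rotation_gadget(psi, theta, rng):
    """Apply diag(1, e^{+/- i theta}) to psi by consuming one resource state.

    Qubit order is (data, resource). Returns (output_state, applied_sign),
    where applied_sign is +1 for outcome 0 and -1 for outcome 1.
    """
    resource = np.array([1, np.exp(1j * theta)]) / np.sqrt(2)
    state = np.kron(psi, resource)            # amplitudes of |data, resource>
    cnot = np.array([[1, 0, 0, 0],            # control = data, target = resource
                     [0, 1, 0, 0],
                     [0, 0, 0, 1],
                     [0, 0, 1, 0]])
    state = cnot @ state
    amps = state.reshape(2, 2)                # amps[data, resource]
    p0 = np.sum(np.abs(amps[:, 0]) ** 2)      # probability resource reads 0 (= 1/2)
    outcome = 0 if rng.random() < p0 else 1
    out = amps[:, outcome] / np.linalg.norm(amps[:, outcome])
    return out, (1 if outcome == 0 else -1)
```

Only a CNOT and a measurement touch the data qubit, which is the point of the construction: the non-Clifford part lives entirely in the resource state.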
But in general our circuits, as we were discussing yesterday, have order N to the fourth, or 10 to the 15, operations. So we're going to have to error-correct and protect the operations, because we know we can't perform them with high enough fidelity. So in this case we break it down into components that we know we can protect with error correction. We know we can protect measurement and controlled-NOT; these are in the Clifford group, and they're easy to protect. Does that help? >>: Just as an extra comment: the thing which I think is key for quantum error correction is that you have to not allow yourself arbitrary rotations. Because if you did, you'd just be like an analog computer, and you couldn't correct that. But we still have to do arbitrary rotations. >> Krysta Svore: Right. >>: So you have these state [inaudible]. >> Krysta Svore: Okay. So one of our favorite non-Clifford gates is the pi over 8 gate. Let's look at how it works within the scheme I just showed you. As we saw yesterday, the pi over 8 gate is the rotation about the Z axis by pi over 4. I'm going to call the resource state an H state. The resource state is this state here, which sits on the Bloch sphere right here. I can convert this H state into the resource state from the previous slide: applying HSHX to the H state gives the Z of theta state that I had in the previous circuit diagram. So I have this resource state, and I can go through the circuit just as we did on the previous slide. You'll achieve either the application of T or the opposite, which is actually T adjoint. What's nice about the T gate is that it's deterministic to fix this.
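The conversion from the H state to the Z-rotation resource state, and the S fix-up for a T-adjoint outcome, can both be checked numerically. This sketch assumes the H state is the +1 eigenstate of the Hadamard gate, cos(pi/8)|0> + sin(pi/8)|1>, and reads "HSHX" as an operator product, so X acts first. The helper `same_state` is a hypothetical name.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
S = np.diag([1, 1j])
X = np.array([[0, 1], [1, 0]])
T = np.diag([1, np.exp(1j * np.pi / 4)])

# H-type magic state: the +1 eigenstate of the Hadamard gate.
h_state = np.array([np.cos(np.pi / 8), np.sin(np.pi / 8)])

# Resource state for the Z-rotation gadget with theta = pi/4.
a_state = np.array([1, np.exp(1j * np.pi / 4)]) / np.sqrt(2)


def same_state(u, v, tol=1e-12):
    """True if normalized states u and v are equal up to a global phase."""
    return abs(abs(np.vdot(u, v)) - 1) < tol


# Operator product HSHX: apply X first, then H, then S, then H.
converted = H @ S @ H @ X @ h_state
```

Both checks come out as expected: `converted` matches `a_state` up to phase, and `S @ T.conj().T` equals `T`, which is why a T-adjoint outcome can be repaired with a single Clifford S gate.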
In general, if I accidentally apply minus theta, the next application of the circuit has to apply 2 theta, hoping that I get plus 2 theta so that I'm back to the theta I originally wanted. For the T gate, because S, being the matrix diag(1, i), satisfies S T adjoint equals T, I can fix up my circuit: if I apply minus theta here, I fix it just by applying S right after. This is a really nice trick for T gates; it is not true in general. >>: S is Clifford? >> Krysta Svore: S is Clifford, correct, yes. >>: This is what [inaudible]. >> Krysta Svore: Yeah. So this follows my rule of using only Clifford operations in my circuit. Thanks, Alex. >>: So what's the T cost of making H? >> Krysta Svore: Oh, actually I think the next slide says that I'm making some assumptions. Perfect segue. To obtain the H state, we have to use magic state distillation, or at least that's a technique we know we can use to get the H state. There are several protocols. I'm not reviewing how they work today, because we don't have a lot of time, but there are three great protocols for doing this. One is a really recent protocol by Meier, Eastin, and Knill, based on the [[4, 2, 2]] error-detecting code. Then there's the more standard one we all typically think of, the 15-qubit protocol by Bravyi and Kitaev. There you create 15 fairly noisy copies of the H state, go through an error-decoding scheme, and are left with one H state that is much cleaner than the original 15. Depending on your base fidelities for all the Clifford operations in that circuit, you might have to do this a few times, so it might cost 15 to the N, where N is set by your fidelities. And then there's a more recent protocol by Bravyi and Haah that looks very promising as well and should have slightly better scaling than the others.
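To see where "15 to the N" comes from, here is a back-of-the-envelope sketch of repeated 15-to-1 distillation. It assumes the standard leading-order error suppression of the Bravyi-Kitaev 15-qubit protocol, p_out ~ 35 p_in^3, and idealises away Clifford noise and the chance of rejecting a batch. The input error rates in the example are hypothetical.

```python
def rounds_15_to_1(p_in, p_target):
    """Rounds of 15-to-1 distillation, and raw H states per output, to reach p_target.

    Uses p_out ~ 35 * p_in**3 per round (leading order only) and ignores
    Clifford-gate noise and batch rejection (idealised assumptions).
    Returns (rounds, raw_states_per_output, final_error).
    """
    if 35 * p_in ** 2 >= 1:
        raise ValueError("p_in is above the distillation threshold (about 0.17)")
    p, rounds = p_in, 0
    while p > p_target:
        p = 35 * p ** 3      # one more level of 15-to-1 distillation
        rounds += 1
    return rounds, 15 ** rounds, p
```

For a hypothetical raw error of 1e-3, one round gives about 3.5e-8, and reaching 1e-12 takes two rounds, i.e. 15^2 = 225 raw H states per distilled state.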
So, again, we are assuming here that we have one of these methods to purify our H states. Even for Solovay-Kitaev, just to do a T gate you also have to use these methods for each H state, so this is a fair assumption: the same cost. And of course we assume perfect Clifford operations and measurements. So I just reviewed all this. For notation, I'm going to call the H state with pi over 8 as its angle H0. Now look at this circuit, which is very similar to what we just saw. I take in two H states, then I perform a controlled-NOT and a measurement. What happens? If we step through the controlled-NOT, this is the resulting state. What's interesting is what happens when we measure. If we measure zero, this is the resulting state, and the probability of measuring zero is given by this formula here. When we measure one, this is the resulting state. When we calculate the probabilities of these two outcomes, they are not equal: the probability of measuring zero in this circuit is three quarters, and the probability of measuring one is one quarter. I'll give my definitions on the next slide, but what's actually happening is this: if I measure zero, I move to a state I'm going to call H i plus one, and when I measure one, I move to a state I'm calling H i minus one. With those definitions, we move to one of these states every time. You can think of this as moving up or down a ladder of these H resource states. I can solve exactly for what these thetas are.
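The ladder formulas themselves are on the slides. Assuming H-type states are written cos(theta)|0> + sin(theta)|1> and the measured qubit is the CNOT target, a short calculation gives: outcome 0 yields tan(theta') = tan(theta_a) tan(theta_b), i.e. cot(theta') = cot(theta_a) cot(pi/8) when the second input is H0, and outcome 1 yields cot(theta') = cot(theta_a) / cot(pi/8). With two H0 inputs this reproduces the 3/4 and 1/4 probabilities quoted above, and a down step from H0 lands on theta = pi/4, a stabilizer state. The sketch below checks these relations numerically. It is a derivation under the stated conventions, not code from the paper.

```python
import math

THETA0 = math.pi / 8   # H0 = cos(pi/8)|0> + sin(pi/8)|1>


def ladder_theta(i):
    """Angle of H_i, assuming cot(theta_i) = cot(pi/8) ** (i + 1)."""
    return math.atan(math.tan(THETA0) ** (i + 1))


def ladder_step(theta_a, theta_b=THETA0):
    """CNOT from cos a|0> + sin a|1> (control) to cos b|0> + sin b|1> (target),
    then measure the target in the Z basis.

    Returns (p0, angle_if_0, angle_if_1) for the surviving control qubit:
      outcome 0 leaves cos a cos b|0> + sin a sin b|1>  (tan = tan a * tan b)
      outcome 1 leaves cos a sin b|0> + sin a cos b|1>  (tan = tan a / tan b)
    """
    ca, sa = math.cos(theta_a), math.sin(theta_a)
    cb, sb = math.cos(theta_b), math.sin(theta_b)
    p0 = (ca * cb) ** 2 + (sa * sb) ** 2
    return p0, math.atan2(sa * sb, ca * cb), math.atan2(sa * cb, ca * sb)
```

For i of at least 1, `ladder_step(ladder_theta(i))` moves to `ladder_theta(i + 1)` or `ladder_theta(i - 1)`, with an up-probability between 0.75 and cos^2(pi/8), about 0.854.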
And I know which resource states I'm producing. So I can use this circuit to produce a whole set of new resource states, new states that I can use to perform non-Clifford rotations. This circuit uses purely H states. What's nice is this: imagine I start with two H0s. On the first step of the ladder, I either get H1 or I toss the output. If I get H1, I feed H1 back in together with one more H0 state, and now I go up to H2 with high probability. Then I feed that back and go up to H3 with high probability, and so on. So I'm creating a whole set of H i states, and I have a higher probability of moving up the ladder than down. Pictorially, I feed in two H0 states and move up the ladder with a given probability, and with high probability I continue to move up. What's that probability as we move along the ladder? The probability of moving up is always between 75 and 85 percent. So if I need to reach some high H i state, I have a really good chance of making it there, and it costs me only one H0 resource state at each step on the ladder. >>: Sorry. >> Krysta Svore: So why is this useful? Recall the original circuit I showed: I had some resource state coming in, and I could use it to directly apply that angle to a state psi. So what angles can I apply using these H states? The H states sit at all of these red points, at these different angles. That lets me perform these different rotations around the Z axis, just by preparing the H i I need and then applying that rotation using the Z circuit I showed earlier. But we don't need something exact; we need something approximate.
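How many H0 states does it take to climb to a given rung? The Monte Carlo sketch below simulates the biased walk just described. It assumes the same ladder convention as above, tan(theta_i) = tan(pi/8)^(i+1), which gives an up-probability of cos^2(theta_i) cos^2(pi/8) + sin^2(theta_i) sin^2(pi/8). Each step consumes one H0, and a failed first step (H0 plus H0 giving a stabilizer state) is tossed and restarted from a fresh H0. Function names are hypothetical.

```python
import math
import random

THETA0 = math.pi / 8   # H0 = cos(pi/8)|0> + sin(pi/8)|1>


def p_up(i):
    """Chance that H_i plus one H0 yields H_{i+1} (measurement outcome 0).

    Assumes tan(theta_i) = tan(pi/8) ** (i + 1).
    """
    t = math.atan(math.tan(THETA0) ** (i + 1))
    return (math.cos(t) * math.cos(THETA0)) ** 2 + (math.sin(t) * math.sin(THETA0)) ** 2


def h0_cost_to_reach(n, rng):
    """Simulate the biased ladder walk until H_n is held; return H0s consumed.

    Stepping down from H0 gives a stabilizer state, which is discarded,
    and the walk restarts from a fresh H0.
    """
    consumed = 1          # the first H0 we start from
    level = 0
    while level < n:
        consumed += 1     # each rung consumes one more H0
        if rng.random() < p_up(level):
            level += 1
        elif level > 0:
            level -= 1
        else:             # H0 + H0 -> stabilizer state: toss it, take a fresh H0
            consumed += 1
    return consumed
```

Averaging `h0_cost_to_reach(n, random.Random(seed))` over many runs gives an empirical offline cost per rung, consistent with the walk drifting upward because every up-probability is at least 3/4.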
So we can use this scheme as follows. If our target angle sits here, we can start to approximate it: maybe I use this state to get partway there, and then I have this gap, so I use a small angle to get the rest of the way. I repeat, repeat, repeat, and pretty soon I'm really close to the angle I need. I use some number of H states to get there, but I can achieve an arbitrary rotation in that manner. So the protocol is: decide what accuracy epsilon I need; pick a target rotation angle close to the angle I'm looking for; distill the H i state that yields that rotation by running the ladder; apply it; check how far I am; and repeat until I'm close enough. >>: You have the 85 percent chance of doing [inaudible] but so if you had [inaudible] to the ladder, that's [inaudible]. >> Krysta Svore: Correct. Yeah. I'm going to show the cost, the predicted -- >>: It's not a big setback if you walk down a step; you still have a good chance of [inaudible]. >> Krysta Svore: Yeah, so you might -- >>: You don't throw it away? [brief talking over]. >> Krysta Svore: Exactly. Yes, it's a biased walk. Even if I step down the ladder, the next time I'm likely to step up. So I'm going to consider two different costs for the scheme. Whoops, I'm sorry. The online cost is the number of T gates I'm actually applying to my state psi, right? And the offline cost is the number of H states I use to produce the states that I apply to psi. Does that make sense? I'm calling these online and offline because the resource states could be prepared offline, in parallel, in a factory. So that doesn't cost me circuit depth in terms of my computation. Okay?
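The repeat-until-close loop can be sketched as a greedy search over a menu of available rotation angles, where each application of the Z-rotation circuit applies +phi or -phi with probability one half. The menu here is an assumption for illustration: angles 2*theta_i with tan(theta_i) = tan(pi/8)^(i+1), treating each ladder state H_i as giving a Z rotation by 2*theta_i after Clifford corrections (so H0 gives pi/4, the T gate). The paper's actual angle set and selection rule may differ.

```python
import math
import random


def ladder_angles(levels=60):
    """Hypothetical menu of available Z-rotation angles, largest first.

    Assumes ladder state H_i has tan(theta_i) = tan(pi/8) ** (i + 1) and that,
    after Clifford corrections, it drives a Z rotation by 2 * theta_i.
    """
    t0 = math.tan(math.pi / 8)
    return [2 * math.atan(t0 ** (i + 1)) for i in range(levels)]


def approximate_rotation(target, eps, angles, rng, max_steps=10_000):
    """Greedy repeat-until-close loop; returns (applied_angle, steps).

    Each step picks the available angle closest to the remaining residual and
    applies it with the probabilistic gadget: +phi or -phi, each with p = 1/2.
    """
    residual = target
    steps = 0
    while abs(residual) > eps:
        if steps == max_steps:
            raise RuntimeError("did not reach the requested accuracy")
        phi = min(angles, key=lambda a: abs(a - abs(residual)))
        direction = math.copysign(1.0, residual)   # rotate toward the target
        outcome = 1 if rng.random() < 0.5 else -1   # gadget measurement result
        residual -= direction * outcome * phi
        steps += 1
    return target - residual, steps
```

A failed step roughly doubles the residual, but a successful one shrinks it by a larger factor, so on average the residual decreases geometrically. That is the same biased behaviour the speaker describes for the ladder itself.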
For the scheme I just presented, here are the C values. Remember, the best you can do is C equal to 1. For the online cost, C is roughly 1.23. So we've gone from something around three and a half to four down to 1.23, which is a really nice improvement. The offline cost, again, can be incurred in the factory, so we care a little less about it, but it is roughly 2, about 2.22. >>: [inaudible]. >> Krysta Svore: Offline is my cost in the factory: how many H states my factory has to produce to achieve the online rotation. The online cost is the number of H states actually used in the logical computation. For example, we use the Z circuit to apply the rotation. That takes in some H state, and we might have to do it many times; I talked about compressing this angle -- >>: [inaudible] ultimately using the [inaudible] uses in some online application? >> Krysta Svore: Right. A given H state, say H10, took additional H states to reach, because I've [inaudible] H0 to get up the ladder. But I can do all of that in a factory, so it's an offline cost: I can prepare those in advance. >>: Okay. >> Krysta Svore: So I don't want to count the H0s in the online cost; I just want to count how many H i's I'm using. >>: Okay. >> Krysta Svore: Here we have the accuracy versus the cost in H resource states. The solid line is the mean, and then we have the deviation about the mean. So on average, these are the C values we get. We have some enhancements to this protocol, which I'll step through very quickly. There are other ways to produce a ladder of states. This is the circuit for another possible ladder: again it uses only H0 resource states as input, plus Clifford gates and measurement, and it outputs a different state, given down here, again with a high probability of moving up.
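The C values quoted here are slopes read off log-log plots of cost against accuracy. One simple way to estimate such an exponent from (epsilon, cost) pairs is a least-squares fit of log(cost) against log(log(1/epsilon)), sketched below with NumPy's `polyfit`. The data in the usage example is synthetic, generated from an assumed C only to show that the fit recovers it; it is not data from the talk.

```python
import numpy as np


def fit_exponent(eps, cost):
    """Fit cost ~ A * log2(1/eps) ** c by least squares in log-log space.

    Returns (A, c). Requires every eps < 0.5 so that log2(1/eps) > 1.
    """
    x = np.log(np.log2(1.0 / np.asarray(eps, dtype=float)))
    y = np.log(np.asarray(cost, dtype=float))
    c, log_a = np.polyfit(x, y, 1)   # slope, intercept
    return float(np.exp(log_a)), float(c)
```

For instance, synthetic costs `3.0 * np.log2(1 / eps) ** 1.23` over `eps = np.logspace(-2, -12, 11)` give back A = 3.0 and c = 1.23. With real, noisy cost data the fitted c is only as good as the range of accuracies sampled.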
Here's another one, and another one; you can turn to the paper for more details. These give a denser ladder of states. Recall that before, I just had these red points as the angles I could achieve; now you can see a denser set of achievable angles. We can see how this improves our cost. Using our denser ladder of states, C goes to 1.04 for the -- sorry, I flipped these graphs, apologies; this label goes with this one. So C goes to 1.04 for the online cost and 1.64 for the offline cost. This denser ladder gives us much better resource scaling. >>: I wasn't going to split these into offline and online costs [inaudible]. If I did everything online, do these numbers add, or should I [inaudible]? Suppose I didn't make this distinction and said, look, I'm just going -- >> Krysta Svore: You just want the total cost. I think you want to add -- well, these are in the -- let's see. >>: I think it's 2.68, or should I [inaudible] the total -- >> Krysta Svore: I think it's [inaudible]. [brief talking over]. >> Krysta Svore: Okay. Now we can minimize the online cost further and bring it down to a constant. This is a trick similar to what Cody Jones did in his work. Instead of doing the approximation step by step (apply an angle, then the next, then the next until I'm close), which requires several online steps, we try to apply the closest angle up front by pre-preparing that state. I may or may not get it: I may get theta or minus theta, but with 50 percent probability I get theta. If I get minus theta, I take one more step and apply 2 theta. So you can imagine that in advance I pre-prepare theta and 2 theta, just in case I fail, and with pretty high probability I'm going to succeed. That's what's on the slide: the expected online cost goes to 2, that is, two steps.
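Why the expected online cost is 2: if each attempt independently succeeds with probability 1/2, the number of attempts is geometric with mean sum over k of k/2^k = 2. The sketch below also shows which angles must be pre-prepared under the doubling strategy described here (theta, then 2 theta, then 4 theta, and so on), since a failed attempt at angle a applies -a and leaves a residual of 2a. The cap `k` on pre-prepared states and the function names are hypothetical.

```python
import random


def catch_up_angles(theta, k):
    """Angles to pre-prepare for one target rotation: theta, 2*theta, 4*theta, ..."""
    return [theta * 2 ** j for j in range(k)]


def apply_with_catch_up(theta, k, rng):
    """Try the pre-prepared angles in order until one succeeds.

    Each attempt applies +a or -a with probability 1/2. Returns
    (net_angle_applied, attempts_used), or (net_angle, None) if all k
    attempts fail and a fallback scheme would be needed.
    """
    net = 0.0
    for attempt, a in enumerate(catch_up_angles(theta, k), start=1):
        sign = 1 if rng.random() < 0.5 else -1
        net += sign * a
        if sign == 1:          # success: the earlier failures are exactly undone
            return net, attempt
    return net, None
```

After j - 1 failures the net angle is -(2^(j-1) - 1) theta, so a success at attempt j adds 2^(j-1) theta and lands exactly on theta.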
So now the expected online cost is 2; sometimes it obviously takes more. The offline cost remains C equal to 1.64. So how does this compare to more traditional Solovay-Kitaev? Here I've considered just Z rotations, and I have accuracy epsilon versus this resource cost. The full line here is Solovay-Kitaev in the Dawson-Nielsen style, the original purple curve from the earlier plot. And these are our offline and online costs. You can see that the scaling is clearly much better for this protocol, for most accuracies. If you need an epsilon larger than about 10 to the minus 4, you can use Solovay-Kitaev. But for anything where you need really high accuracy, you would want to use this protocol. And you can use this for any rotation: we know any rotation can be done with two X rotations and a Z rotation, so we can use this protocol for both the X and the Z rotations, and therefore for any arbitrary rotation. Here's the scaling for an arbitrary rotation. It crosses over at a slightly different accuracy, so you'd use it for a slightly different range of accuracies, but the scaling is still a lot better. And again, you can use the online trick I mentioned, where the online cost is just 2, but here I'm graphing the worst case, I suppose. And in the QFT -- >>: [inaudible]. >> Krysta Svore: Solovay-Kitaev with Dawson-Nielsen. >>: But not your -- >> Krysta Svore: Yeah. I'm pretty sure this graph is -- I might have grabbed the wrong one. This might be ours, but I'm pretty sure it's Solovay-Kitaev with the Dawson-Nielsen implementation. I'll double-check. >>: [inaudible]. >> Krysta Svore: Well, it's still pretty close to this. >>: So the [inaudible] check point is to look at the [inaudible] minus 8 [inaudible] 3,000 gates there.
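The claim that any single-qubit rotation needs only X and Z rotations is the Euler decomposition U = e^(i delta) Rx(alpha) Rz(beta) Rx(gamma). A minimal NumPy sketch of computing those angles follows. It first finds Z-X-Z angles for an SU(2) matrix written as [[a, -b*], [b, a*]], then uses H Rz H = Rx and H Rx H = Rz to turn them into the X-Z-X form. The helper names are hypothetical, and the rotation convention Rz(phi) = exp(-i phi Z / 2) is an assumption.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)


def rz(phi):
    return np.diag([np.exp(-1j * phi / 2), np.exp(1j * phi / 2)])


def rx(phi):
    c, s = np.cos(phi / 2), np.sin(phi / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])


def zxz_angles(u):
    """Angles (alpha, beta, gamma) with u = e^{i delta} Rz(alpha) Rx(beta) Rz(gamma)."""
    v = u / np.sqrt(np.linalg.det(u))          # strip global phase -> SU(2)
    a, b = v[0, 0], v[1, 0]                    # v = [[a, -conj(b)], [b, conj(a)]]
    beta = 2 * np.arctan2(abs(b), abs(a))      # cos(beta/2) = |a|, sin(beta/2) = |b|
    total = -2 * np.angle(a)                   # alpha + gamma
    diff = 2 * (np.angle(b) + np.pi / 2)       # alpha - gamma
    return (total + diff) / 2, beta, (total - diff) / 2


def xzx_angles(u):
    """Angles with u = e^{i delta} Rx(alpha) Rz(beta) Rx(gamma).

    Conjugating by H swaps the roles of X and Z, so decompose H u H as Z-X-Z.
    """
    return zxz_angles(H @ u @ H)
```

Each X rotation can itself be run as H Rz H, so the whole decomposition reduces to Z rotations plus Clifford gates, which is what lets the distillation protocol handle arbitrary rotations.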
So it looks like our -- >> Krysta Svore: Oh, it might be ours. Yeah. It must be ours, because -- >>: This is all [inaudible] T gate, right? >> Krysta Svore: Yes. It's ours. So this is the quantum Fourier transform. If we implement the rotations required for the quantum Fourier transform, here's a sampling of three rotations we would use in that circuit, at three different accuracies, three different epsilon values, comparing the Solovay-Kitaev cost to these different costs; again, the online cost can be set to 2 if you like. The prime indicates that we're using the enhanced, denser ladder. You can see the dramatic improvements in resource cost. Look at pi over 16 out at 10 to the minus 12: with Solovay-Kitaev decomposition you're looking at 30,000 T gates. In this case, you're preparing roughly a thousand offline, and then it's a cost of two online. So this is a really big improvement, and that's true for any rotation. So in conclusion, what I've shown is a distillation-based, resource-state-based alternative to Solovay-Kitaev. It has nice improvements in scaling in terms of resource cost and also in terms of accuracy, so it's easier to achieve more precise rotations as well. Here are the numbers we've already discussed. And again, you can use the online trick to get a constant online cost, which is great. I didn't mention that the errors are very well behaved in this scheme as well; we did study how the errors behave. What we're looking at right now is how we might decrease the distillation cost, because the ladder itself has some distillation property to it. So you might be able to feed in an even noisier H state and still achieve a fairly clean H i state, which could save us some additional cost as well.
And of course another thing to look at is when we would use these different schemes. It would be great to have a compiler that says: I'm going to use this scheme when I need this rotation to this accuracy, and so on, so we could choose between the different schemes. It would also be great to look at this for other bases. So hopefully this gave an introduction to circuit decomposition. Thank you for listening. [applause]. >>: [inaudible]. >> Krysta Svore: Yes? >>: You still suffer from this 50 percent chance of getting the wrong [inaudible]. >> Krysta Svore: Uh-huh. >>: I know in the Jones paper he says you pick some K, you try K times, you punt, and then [inaudible]. >> Krysta Svore: Right. >>: So is that also your plan? >> Krysta Svore: Yeah. When you do the online, the cost -- >>: Yeah. >> Krysta Svore: Yeah. So far that's what we were thinking with this scheme: you could pre-prepare two or three or four or ten states, but choose some threshold, and if you haven't succeeded by then, switch to the other scheme that has the higher online cost. >>: Say we take Matthias' Hubbard model and add arbitrary couplings. What's nice about it is that we can do all these rotations in parallel, but -- >> Krysta Svore: Oh, yeah, now you're going to be waiting -- >>: Yeah. I'm just curious about the time depth you lose. You must lose something with system size. So if I have a million sites on my [inaudible]. >> Krysta Svore: Okay. You're going to lose, but the alternative is a huge cost. >>: But -- >> Krysta Svore: I mean, all of these schemes are probabilistic. Right. Online time. All of these schemes are probabilistic, and they're all orders and orders of magnitude better than doing Solovay-Kitaev, where you pre-compile your exact circuit and the gates.
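For the question about cutting off after K tries: assuming independent attempts that each succeed with probability 1/2, the chance that all K pre-prepared states fail is 2^-K, so a circuit with N such rotations expects about N 2^-K fallbacks to the higher-online-cost scheme. The sketch below picks the smallest K that keeps that expectation under a chosen budget. The million-rotation figure in the example is hypothetical, echoing the million-site question.

```python
import math


def fallback_probability(k):
    """Chance that all k pre-prepared attempts fail, each succeeding w.p. 1/2."""
    return 0.5 ** k


def smallest_k(n_rotations, max_expected_fallbacks):
    """Smallest k >= 1 with n_rotations * 2**-k <= max_expected_fallbacks."""
    k = math.ceil(math.log2(n_rotations / max_expected_fallbacks))
    return max(k, 1)
```

For a million rotations and a budget of about one expected fallback, K = 20 suffices, because 10^6 / 2^20 is roughly 0.95. The required K grows only logarithmically with circuit size.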
So you don't ever -- well, in some cases you might still do that, because maybe it's hard to implement a factory; you still need a T gate, that is, an H state factory. But I think in general you're better off preparing in your factory, in advance. You could imagine you have a bunch of H states available at any given time point, and if you're wrong, maybe you add a little extra depth. But even if you end up having to wait in your circuit, I think it's still going to be a lot better than doing the Solovay-Kitaev sequence. >>: I guess I'm just -- >> Krysta Svore: Where it's parallelized from the beginning; you already know the time steps. >>: I guess I'm concerned that I have to cut off at some point, so there's some probability that happens. >> Krysta Svore: Right. It will happen -- [inaudible] it will happen when separating [inaudible]. So in terms of the actual time of the [inaudible], I don't know, it's not clear to me how much that -- >> Krysta Svore: Well, in that algorithm, what happens if you -- these are tiny rotations. What happens if you simply miss one? >>: I think what's funny is that it's not -- I mean, you rotate the other way. So I guess you could skip one once in a while, for sure. >> Krysta Svore: Yeah. I think -- >>: [inaudible] David was talking about. >> Krysta Svore: Yeah. I think it's worth exploring when to use each protocol and how this probabilistic scheme affects you. >>: [inaudible]. >>: Because you're doing so many millions of Solovay-Kitaev [inaudible], make sure the average comes out to the angle you want. It won't make any difference to the result. >> Krysta Svore: Yeah. >>: Just to add a comment to this: perhaps there's a solution to this coin-toss problem. There's a notion called forced measurement. Actually, [inaudible] in a different context has a paper on the arXiv from just a week ago.
So there's an adiabatic idea where you can deform the Hamiltonian of the system to drive the ground state from where it is initially to, you know, a fixed outcome. That produces a result on the ground state which is like [inaudible], except without the probabilistic aspect. So it's possible there's some kind of forced-measurement scheme that solves this coin-toss problem. So we should think about that. >> Krysta Svore: Yeah. That would be great. >>: Other questions? >> Krysta Svore: Yeah. >>: You have the definition of [inaudible] exactly on the slides, but [inaudible]. >> Krysta Svore: Oh, you want to see the -- >>: Are they transcendental, these thetas that you find? >> Krysta Svore: [inaudible]. >>: I mean, they're not particular rational combinations [inaudible], are they? >> Krysta Svore: No. Yeah. >>: Well, the table was only the red ones, though. >> Krysta Svore: Oh, I'm sorry, that one doesn't have a table. >>: He wants a table of the original ones. >> Krysta Svore: Sorry. I know which one. This one. >>: There you go. >>: No. >> Krysta Svore: No? >>: No? >> Krysta Svore: Oh, this is the theta -- >>: The previous slide. >> Krysta Svore: Previous. This? >>: Really? >> Krysta Svore: I think now we're -- >>: That was the only. [brief talking over]. >>: The very bottom. >> Krysta Svore: Oh, I thought -- sorry. >>: The cotangent of theta is cot [inaudible]. >> Krysta Svore: You want [inaudible]. [brief talking over]. >> Krysta Svore: For achieving the table. Yeah. This is how we solve for the thetas. >>: That's it. Yeah. >>: [inaudible] [laughter]. >> Krysta Svore: Okay. >> Michael Freedman: More questions? So actually I just wanted to comment. This is really beautiful work. It seems to me, if I understood correctly, that you didn't solve the problem that so many people were thinking about; instead, you worked around the problem.
Because I think the way people visualized this problem of improving Solovay-Kitaev was to find a more systematic way of exploring the group out to a certain radius. And you just said, well, forget about multiplying in the group, let's do a measurement-based protocol, right? >> Krysta Svore: Yeah. Well, we started this thinking we wanted to improve magic state distillation schemes and then realized, boy, you don't -- we can actually use a similar scheme to achieve any rotation. >>: You have very beautiful, beautiful stuff. >> Krysta Svore: Thanks, Mike. >> Michael Freedman: Thank you. [applause]. >> Michael Freedman: So, last speaker of the first session: Dave Wecker. I'm very proud to introduce one of our chief architects of LIQUi|>. Is this a LIQUi|> tutorial? >> Dave Wecker: Yeah. >> Michael Freedman: Thank you, David. >> Dave Wecker: So this is going to be quick, so I apologize for things flashing by. The idea is just to give you a sense of the system. This is usually more like an hour-and-a-half talk with follow-on. This is why we handed out the paper yesterday, for people to look at the overview. But to be honest, there's a 150-page user's manual. There's a large API document set and so forth. So we'll just give you a taste of what the simulation looks like. We did have some basic goals. We wanted a simulation environment that makes it easy to create complicated quantum circuits. We wanted the simulation to be as efficient as possible, with as large a number of entangled qubits, and sets of them, as possible. I'll give you some statistics along the way here. Circuits should be re-targetable. This isn't just a stand-alone simulator. We want to be able to send this down to other back ends, be they classical or quantum, so this thing has a life of its own over time. We don't want it to just be a closed system that we throw away. And we want to provide multiple simulators targeting different tradeoffs.
Because there are lots of different things you want to do, lots of different resources, and you should not have to figure that out up front. You should be able to design a circuit and then try it in different ways. And we want maximum flexibility. So we start with a language. In our case the top-level language is F#. For any of you who know ML or Caml or OCaml, this is our version. It's a functional programming language. The main reason it was chosen is that it's compact: it's very easy to write a lot of complicated things very tightly, and it's very provable what you can do in it, since it's functional. So there are lots of ways we can optimize the compilation. However, you're not stuck there. There's a scripting language, so if you want to just use this from an interpreted level, if you want to submit to a cloud, if you want to do things in various ways other than sitting in a programming environment, you can do that. You can come from C#, or actually any other programming language that can link to a DLL on Windows. So you don't have to sit in F#, although the simulator itself is written in it. And all of these compile into gates. Gates are basically functions. They're subroutines. They call each other. You can define new gates that contain other gates inside of them. And all of this gets compiled down to be run on simulators. And I say simulators in the plural because we currently have three different ones. There's a universal simulator, which will let you do any quantum circuit you can come up with. Currently in 32 gigabytes of memory you can do about 30 qubits. No restrictions. Fully entangled, any way you want. There's a stabilizer simulator that lets you do tens of thousands of qubits. Of course, you're trading off here: you have to stay within the Clifford group. This is basically the equivalent of CHP from Aaronson. And there's a Hamiltonian simulator, for when we want to get down into the physics. We'll talk about that a little bit more, since this group will care.
We have a fairly flexible Hamiltonian simulator that's extensible. This gets sent down to one or more runtimes. The client runtime: run this on your laptop. A service, which lets you run this across any number of machines in an HPC cluster or on your LAN, doesn't matter; it will self-install across your LAN. It's a benevolent virus, if you want to think of it that way. It actually installs itself as a Windows service across all the machines you tell it to. It will then distribute your work, send it out to all of them, bring the results back, give them to you, and then shut itself down. And you don't have to do any install, as long as you have privileges on the machines you're allowed to talk to. And then there's the cloud version: run it up in Azure. Go to the web, submit the script, and it compiles it, runs it, and sends your results back. But there's another path. These same functions, instead of being compiled into code, can be compiled into circuits. Circuits are a data structure. Now you have a data structure you can manipulate, and there are a large number of tools that let you work with these circuits. Optimization tools let you do anything from re-laying out the circuit to substituting information. You can also do things, say, for Hamiltonians, where we break everything down into a single unitary and then operate on a decimated version of the unitary that understands the physics, so we can make the matrices much smaller. Quantum error correction: I'm not going to actually show examples today because I don't have time, but you can take any circuit and say apply this QECC algorithm, and it will rewrite the circuit and then simulate it for you. For example, Steane is built in, but you can put anything in there that you like. Solovay-Kitaev: for the work that Krysta was talking about, you can say take all my rotations and replace them all with my favorite replacement. Let's take a look at the gate costs.
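The circuit-as-data idea, and a QECC rewrite pass over it, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not LIQUi|>'s API: the circuit is a plain list of `(gate, qubits)` tuples, the names `transversal_rewrite` and `gate_costs` are hypothetical, and the code is assumed to be the [[7,1,3]] Steane code, in which H, X, Z and CNOT can be applied transversally (one physical gate per qubit of each code block). Syndrome extraction and non-transversal gates such as T are deliberately left out.

```python
from collections import Counter

BLOCK = 7                               # physical qubits per logical qubit (Steane code)
TRANSVERSAL = {"H", "X", "Z", "CNOT"}   # gates treated as transversal in this sketch

def transversal_rewrite(circuit):
    """Replace each logical gate by BLOCK physical copies, one per code-block position."""
    out = []
    for name, qubits in circuit:
        if name not in TRANSVERSAL:
            raise ValueError(f"{name} is not transversal in this sketch")
        for k in range(BLOCK):
            # logical qubit q lives on physical qubits BLOCK*q .. BLOCK*q + 6
            out.append((name, tuple(BLOCK * q + k for q in qubits)))
    return out

def gate_costs(circuit):
    """Count gates by name: the kind of statistic you look at after a rewrite."""
    return Counter(name for name, _ in circuit)

logical = [("H", (0,)), ("CNOT", (0, 1)), ("Z", (1,))]
physical = transversal_rewrite(logical)
print(gate_costs(physical))   # Counter({'H': 7, 'CNOT': 7, 'Z': 7})
print(physical[7])            # ('CNOT', (0, 7))
```

Because the circuit is ordinary data, a Solovay-Kitaev-style substitution would be the same kind of pass: walk the list and swap each rotation for a gate sequence.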
You can get statistics out of it, and you can run the circuits. Export: since it's a data structure, you can now send it somewhere else. You have two general choices for back ends. Classical, where you have a supercomputer: you want to go out somewhere with lots and lots of processing and do linear algebra. Or you have a quantum machine and you want to use this as a front end, to be able to define circuits and compile them down to the machine. Yes, that doesn't exist yet. I see Brian smiling at me. But I'm an architect. My idea is to make things not be obsolete over time, so I try to make sure it will last at least 10 years. And rendering: you want to draw pretty diagrams, you want to do things with the circuit so that you can publish and show what you've done. All of this is extensible, so users themselves can do what they want on top of it. I should give a little bit of syntax for F#, because you're going to see some going by. Parentheses don't mean function calls; they're just grouping. Arrays are accessed with a dot between the array and the index. Lists use square brackets with semicolons. Function calls use white space between the arguments. Output can be piped between functions, so I can take f of a and b and pipe it to g as the argument that goes into g. The empty argument is just two parens. And then we've implemented our own operators, some very simple ones: the typical complex math you'd like to be able to do, multiplication, Kronecker products of vectors and matrices, and the like. So all of that is very, very tight. You just say M star bang M and you've done a Kronecker product between two matrices. And you can map a gate to a list of qubits. You can also map it with a parameter. This lets you say CNOT this qubit with all these other qubits and map it across, or measure all of these qubits, and do it in one operation.
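For readers who don't know F#, the Kronecker product, piping, and mapping a gate across qubits have simple Python analogues. This sketch uses plain lists so it needs only the standard library. The names `kron`, `pipe` and `map_gate` are hypothetical and say nothing about how the LIQUi|> operators are actually defined.

```python
from functools import reduce

# Matrices are plain lists of rows.
I = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]

def kron(a, b):
    """Kronecker product: entry (i*p + k, j*q + l) is a[i][j] * b[k][l]."""
    return [[x * y for x in row_a for y in row_b]
            for row_a in a for row_b in b]

def pipe(value, *functions):
    """Rough analogue of F#'s `value |> f |> g`: apply functions left to right."""
    return reduce(lambda acc, f: f(acc), functions, value)

def map_gate(gate_name, qubits):
    """Hypothetical helper: one (gate, qubit) entry per qubit in the list."""
    return [(gate_name, q) for q in qubits]

XI = kron(X, I)   # X on the first (most significant) qubit, identity on the second
print(XI[2])      # [1, 0, 0, 0]: |10> receives the amplitude of |00>
print(pipe([X, I, I], lambda ms: reduce(kron, ms), len))  # 8: the 3-qubit operator is 8 x 8
print(map_gate("M", [0, 1, 2]))  # [('M', 0), ('M', 1), ('M', 2)]
```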
And you can take any general syntax that you like; if you hand it to bang bang, you get a legal list of qubits back. We'll see examples in some of the code. It's just shorthand for quickly operating on qubits. So let's do teleport. I'm not going to go through the actual algorithm; I think everyone in the room has seen it too many times. But this is the simplest thing I could define. Let's define an EPR function. It takes a list of qubits, and it's a Hadamard on the head of the qubits and a CNOT on the first two. By convention, every gate just eats as many qubits off the list as it needs. This way you can have a large number of qubits and just hand them to the gate; the gate takes the list as an argument and pulls qubits off the front. If I ask the system to render it, this is what you get out. So no other code is necessary. You just write the function. If I want to write the full teleport, again it takes a list of qubits. I'm going to give names to the first three in the list so I can use them. Take EPR of qubits one and two. CNOT them, Hadamard them, measure qubit one, and do a binary-controlled X gate, with qubit one as the control and qubit two as the qubit we're operating on. Likewise the binary control of the Z gate. That's the entire function. There's what it looks like when you ask the system to render it. Done. I can put a little more in. I have some dummy gates, like a label gate. Map it across, and now I've got labels sitting on the diagram. It's not that pretty, so I can say we have a circuit now; let's fold it together, and now everything lines up nicely and we can see where the parallelization can be done in the algorithm. Yes? >>: Sorry, Dave. So how do you -- what if I wanted to do this CNOT upside down, or -- >> Dave Wecker: Change the order of the qubits. It's just whatever order the qubits happen to be handed in. Think of it as wires.
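The teleport function just described can be mirrored in a small state-vector sketch. This is plain Python standing in for the F# shown in the talk, and every helper name is hypothetical. Qubit k is taken to be bit k of the basis-state index, qubit 0 carries the message, and qubits 1 and 2 hold the EPR pair. The classical corrections follow the talk: X on qubit 2 controlled by qubit 1's measurement, and Z controlled by qubit 0's.

```python
import math
import random

S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]

def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (bit q of the basis index)."""
    new = state[:]
    for i in range(len(state)):
        if not (i >> q) & 1:            # i has qubit q = 0; j is its partner with q = 1
            j = i | (1 << q)
            a, b = state[i], state[j]
            new[i] = gate[0][0] * a + gate[0][1] * b
            new[j] = gate[1][0] * a + gate[1][1] * b
    return new

def apply_cnot(state, control, target):
    """Flip the target bit of every basis state whose control bit is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

def measure(state, q, rng):
    """Projective measurement of qubit q; returns (bit, collapsed state)."""
    p1 = sum(abs(a) ** 2 for i, a in enumerate(state) if (i >> q) & 1)
    bit = 1 if rng.random() < p1 else 0
    norm = math.sqrt(p1 if bit else 1 - p1)
    return bit, [a / norm if ((i >> q) & 1) == bit else 0j
                 for i, a in enumerate(state)]

def epr(state, a, b):
    """Hadamard on a, then CNOT a -> b: turns |00> into a Bell pair."""
    return apply_cnot(apply_1q(state, H, a), a, b)

def teleport(alpha, beta, rng):
    """Send alpha|0> + beta|1> from qubit 0 to qubit 2; return qubit 2's amplitudes."""
    state = [0j] * 8
    state[0], state[1] = alpha, beta    # message on qubit 0, qubits 1 and 2 in |0>
    state = epr(state, 1, 2)
    state = apply_cnot(state, 0, 1)
    state = apply_1q(state, H, 0)
    m0, state = measure(state, 0, rng)
    m1, state = measure(state, 1, rng)
    if m1:                              # binary-controlled X on qubit 2
        state = apply_1q(state, X, 2)
    if m0:                              # binary-controlled Z on qubit 2
        state = apply_1q(state, Z, 2)
    base = m0 | (m1 << 1)               # qubits 0 and 1 are now fixed to (m0, m1)
    return state[base], state[base | 4], (m0, m1)

print(teleport(0.6, 0.8j, random.Random(1)))
```

For every pair of measurement outcomes, the two corrections undo exactly the Pauli operator that the outcome leaves on qubit 2, so it ends up holding the original amplitudes.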
I can hand any of these to any wires and it will figure out where everything goes. It should also be noted that it's extremely efficient. It doesn't actually expand the unitaries out across the wires. It keeps everything small: a CNOT never gets bigger than a CNOT. Whether I'm applying it to wire zero and wire 37 doesn't matter; everything still stays as tiny as possible. If the state isn't entangled, it also doesn't make that any bigger; it keeps together only the substates that are entangled. So the use of memory is very, very efficient. We'll show you some complicated circuits; we've got some with millions of gates in them. If I put a harness of just regular language code around it, it just looks like a function. The language doesn't know any different. And if I hand it the qubits, initialize one to a random state, run it, and look at the output, here you can see we teleported the same state all the way through, and the bits on the end show whether we measured a zero or a one, to apply the X or the Z. Likewise, the entire system can be run from scripting. I just wanted to show what a script looks like. It's not that important, other than that there's a little bit of harness for what you need: libraries, load the script, and, if this is run interactively, call the routine you defined. So it's the same source language; you don't have to learn two different languages. But it can be run in several different ways. If you ask the F# interpreter to run it, it will run it and exit. You can also tell it you want to use the script, which will run it but then stay in the interpreter. Now all the variables are available, and I can sit there interactively working with the code I just generated. Or I can run it through the top-level executable, and now we compile it. We actually compile all of your code into a DLL, and then you can call whatever routine you want. And since you compiled it, later you can load it if you don't want to compile it again.
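The point about a CNOT staying the size of a CNOT, even between wire 0 and a distant wire, can be illustrated by applying a 4x4 matrix directly to the relevant groups of four amplitudes. This is a sketch of the general technique, not LIQUi|>'s internals, which the talk doesn't describe at this level. `apply_2q` is a hypothetical name, and the state here is one dense vector, so the sketch does not model keeping unentangled substates separate. Swapping the two wire arguments gives the upside-down CNOT asked about in the question above.

```python
def apply_2q(state, gate, q_hi, q_lo):
    """Apply a 4x4 gate to qubits (q_hi, q_lo) of a dense state vector.

    Only the 4x4 matrix is stored; the 2^n x 2^n operator is never built.
    Basis order inside the gate is |q_hi q_lo> = |00>, |01>, |10>, |11>.
    """
    new = state[:]
    mask = (1 << q_hi) | (1 << q_lo)
    for base in range(len(state)):
        if base & mask:
            continue                    # visit each group of four once, from its |00> member
        idx = [base, base | (1 << q_lo), base | (1 << q_hi), base | mask]
        amps = [state[i] for i in idx]
        for r in range(4):
            new[idx[r]] = sum(gate[r][c] * amps[c] for c in range(4))
    return new

# Control is the first qubit argument, target the second.
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

n = 12
state = [0j] * (1 << n)
state[1 << 11] = 1                      # basis state with only qubit 11 set
out = apply_2q(state, CNOT, 11, 0)      # control on wire 11, target on wire 0
print(out.index(1))                     # 2049: qubits 11 and 0 are now both set
```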
And now you just have a DLL that's loaded into the executable, so it's very efficient. There are a lot of built-in gates. This is just a small list to give an example of what they look like. Standard gates that we've all seen, parameterized gates, and then pseudogates that get more interesting: they're nonunitary, and they let you bring qubits back to life once you've measured them. Metagates like binary control. And then things that wrap other gates: adjoint takes any other unitary and gives you its adjoint. Controlled gates. Controlled-controlled gates. A wrap gate is a metagate that lets you say take all these gates and consider them a new gate. So, for instance, a QFT can become a gate. Now you can say in your diagrams what level you want to see on output. Do I want to go all the way down to the bottom, do I want to go up to the QFT, do I want to see a modular adder, do I want to see Shor's algorithm as one gate? And you can lay them all out that way. Transversal is an example of a quantum error correcting gate: take any gate and do its transversal version. And then the Hamiltonian gates. None of these are baked in; I'll show you that on the next slide. But, you know, you can wrap these together and call them inside of each other. So here I'm doing a measurement on the head of the qubits and a binary control of the adjoint of a T gate on those qubits, where the first qubit was measured. You can get these very, very tight and easy to work with. This is a CNOT. When I say it's not baked in, I mean you can redefine anything you want in the system or create your own. So I could override this gate. In this case CNOT has a name, some help text if you want, and a sparse matrix that defines where the elements are, and how to draw it: draw a line from wire zero to wire one, go to wire zero and put a closed circle, go to wire one and put an O-plus. So you can put in all the drawing instructions you want, and there are boxes and labels and all sorts of things you can do. But all of those were functions.
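The adjoint and controlled wrappers are easy to picture as operations on matrices. This is a minimal sketch that assumes gates are small dense matrices stored as lists of rows and that the new control qubit becomes the most significant index bit. `adjoint`, `controlled` and `matmul` are hypothetical names, not the system's gate definitions.

```python
import cmath

def matmul(a, b):
    """Plain matrix product of lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))] for r in range(len(a))]

def adjoint(u):
    """Conjugate transpose, i.e. the 'adjoint' wrapper applied to any unitary."""
    n = len(u)
    return [[complex(u[c][r]).conjugate() for c in range(n)] for r in range(n)]

def controlled(u):
    """Block matrix diag(I, U): apply U only when the new control qubit is 1.

    Wrapping twice gives a doubly controlled gate.
    """
    n = len(u)
    top = [[1 if r == c else 0 for c in range(2 * n)] for r in range(n)]
    bottom = [[0] * n + list(u[r]) for r in range(n)]
    return top + bottom

T = [[1, 0], [0, cmath.exp(1j * cmath.pi / 4)]]
X = [[0, 1], [1, 0]]

toffoli = controlled(controlled(X))
print(toffoli[6], toffoli[7])   # [0, 0, 0, 0, 0, 0, 0, 1] [0, 0, 0, 0, 0, 0, 1, 0]
print(matmul(T, adjoint(T)))    # the identity, up to floating-point rounding
```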
I said you can also go the other way and make circuits. Circuit compile says take that teleport function with these qubits and give me a circuit back. When I dump it, I get back out: okay, teleport had a Hadamard, a CNOT, another CNOT, this Hadamard, and these are the measurements and the binary controls. And since it's a data structure, I can dump this in any format I want. Whether I want to put it out in straight matrix format, hand it off to Matlab or my favorite linear algebra package, or emit it as controls for a piece of hardware, it doesn't matter. It's just data at that point. We can actually do some fairly sophisticated things very easily. So here's just an entanglement test. You notice that when we measure, we get out all zeros or all ones, because everybody's entangled. The actual code is: apply a Hadamard to the first qubit, and remember it. For everybody in the tail, CNOT the first qubit with it. That gave us the whole middle. And then finally map a measurement across all the qubits. And this is pretty efficient. The numbers in the brackets are the seconds it took to operate, .04 seconds or whatever the numbers were. This was, I think, on my laptop. Here's a slightly more sophisticated version where we grouped these so we can do them in parallel. If I say fold it, they're brought down into a folded form, and you can see what the parallelization looks like. I'm not going to go through the actual code, because I do some tricks here on purpose to show some things you can do in F#. But it's very good at list manipulation and very good at data structure manipulation, so it's fairly tight. And here's 20 qubits; it took two seconds per run. Fully entangled. And this is running out on my cluster. There are 24 hardware threads on each machine. You'll notice the machines are anywhere from 90 to 100 percent in most cases, with all 24 threads in use.
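The entanglement test maps directly onto a short simulation: a Hadamard on the first qubit, CNOTs from it to every qubit in the tail, then a measurement of everything. This Python sketch (function name hypothetical) samples full measurement outcomes from a dense state vector. It won't reproduce the exact counts reported in the talk, only the fact that every shot comes out all zeros or all ones.

```python
import math
import random

def ghz_counts(n, shots, seed=0):
    """H on qubit 0, CNOT from qubit 0 to every other qubit, then measure all.

    Returns a dict mapping measured bitstrings to how often they occurred.
    """
    dim = 1 << n
    state = [0.0] * dim
    state[0] = 1.0
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(dim):                # Hadamard on qubit 0 (bit 0 of the index)
        if not i & 1:
            a, b = state[i], state[i | 1]
            new[i], new[i | 1] = s * (a + b), s * (a - b)
    state = new
    for t in range(1, n):               # CNOT from qubit 0 to each qubit in the tail
        new = state[:]
        for i in range(dim):
            if i & 1:
                new[i ^ (1 << t)] = state[i]
        state = new
    probs = [a * a for a in state]      # amplitudes stay real in this circuit
    rng = random.Random(seed)
    counts = {}
    for outcome in rng.choices(range(dim), weights=probs, k=shots):
        key = format(outcome, f"0{n}b")
        counts[key] = counts.get(key, 0) + 1
    return counts

print(ghz_counts(10, 1000))
```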
This is running through: it did a thousand runs of 22 entangled qubits in three and a half minutes. And just to show, out of a thousand runs, 489 got all zeros and 511 got all ones, so our random number generator seems to work pretty well. Shor's algorithm. Everybody publishes four bits, you know, factoring 15. That's 8,200 gates. This is a higher-level view showing the modular multipliers as we go through. This uses Beauregard's algorithm. We've done 13 bits: we factored the number 8189, to show you how far we can go. That's about half a million gates. And that's running on one machine, each one of these. So when I say we're distributing, these are ensembles. Each run stays on one machine, because it's just too expensive to distribute the actual computation. But this is under 32 gigabytes of memory, running on anything from your laptop to your server. And by the way, it takes about five days. To give an example, this is the modular adder. The actual code that generates it looks like: do the controlled-controlled add, do an inverse add, do an inverse QFT. This is the actual F# code that generated it: do the QFT, do the controlled add, the CC add, do the inverse QFT, clear the ancilla that you used, and finally do the QFT and the final add. So you can build up a library of all these subroutines that you use, and it becomes very clean and very easy to work with. To show actual performance, this is factoring numbers from 45 to 8189: the numbers factored and the amount of time it took. The blue line at the top is the first version of the simulator we did, the optimized version. Then V2, and now V3 is in green. You notice we went from three years down to five days to do that 8189. So a lot of performance work has been done. This is a very efficient implementation, and I'm proud of it. >>: What causes the break in the green line? >> Dave Wecker: I have to switch algorithms. I'm using a memory-intensive algorithm in this one.
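In Shor's algorithm only the period finding needs the quantum circuit (Beauregard's construction, in the talk). Turning a period into factors is classical. In this sketch a brute-force loop stands in for the quantum order-finding step, so it illustrates the post-processing only and says nothing about the simulated gate counts or run times above. Function names are hypothetical.

```python
from math import gcd

def order(a, n):
    """Smallest r > 0 with a^r = 1 (mod n), by brute force.

    On a quantum computer, this is the step that phase estimation replaces.
    """
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def shor_classical(n, a):
    """Classical post-processing of Shor's algorithm for base a; None means retry."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g            # lucky guess: a already shares a factor with n
    r = order(a, n)
    if r % 2:
        return None                 # odd order: try another base
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                 # a^(r/2) = -1 (mod n): try another base
    p = gcd(y - 1, n)               # y is a nontrivial square root of 1 mod n
    return p, n // p

print(shor_classical(15, 7))        # (3, 5): the order of 7 mod 15 is 4
```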
I run out of the 32 gigabytes of memory, so I switch algorithms at that point. So let's talk a little bit about Hamiltonians. I left out the stabilizer simulator completely since, again, time. But I think everybody here knows what's involved: if you know CHP, it's basically an equivalent implementation. The Hamiltonian simulator: one version does adiabatic simulation. It has a built-in simulator for spin-glass models. Everything that we've seen published by D-Wave we've tried implementing, and sure enough, we can simulate it and we get their results, which is always a good thing. There's a sample; every one of these has samples built in. We have a ferromagnetic sample where you build ferro chains and anti-ferro chains, clamp the ends or not, and just anneal them to see how they do. But, again, we're a circuit model. So we're actually building circuitry, with rotations in X, rotations in Z for the clamps on the ends, and double rotations for the joint terms. And so this is all just a simple circuit that we can then optimize and work with in the circuit model. We also added a decoherence model and an entanglement entropy measurement. So this is random measurement errors, and you can also do single bit-flip and phase errors. And this is, for example, an annealing schedule going up to the right: as we anneal through, you see the log of the error probability and then the entanglement entropy, depending on where the error happens. So you can get an idea of your probability of getting a correct result and where the system goes. A lot of the things I'm now showing as results are from papers in progress. They should be coming out in the reasonable future, depending on how we get things done. >>: [inaudible] Hamiltonian, what was the largest N you can simulate? >> Dave Wecker: Again, I can do 30 qubits in 32 gig of memory, so I can go up to 30 qubits here.
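The talk doesn't say how the entanglement entropy is computed. For the simplest case, one qubit against the rest of a pure state, the reduced density matrix is 2x2 and its eigenvalues have a closed form, so this sketch needs no linear algebra library. The function name is hypothetical, and a general bipartition would need a proper eigensolver.

```python
import math

def single_qubit_entropy(state, q):
    """Von Neumann entropy (in bits) of qubit q's reduced density matrix.

    rho = [[p0, c], [conj(c), p1]] comes from tracing out every other qubit.
    With trace 1, its eigenvalues are 1/2 +- sqrt(1/4 - det(rho)).
    """
    p0 = p1 = 0.0
    c = 0j
    for i, amp in enumerate(state):
        if (i >> q) & 1:
            p1 += abs(amp) ** 2
        else:
            p0 += abs(amp) ** 2
            c += amp * state[i | (1 << q)].conjugate()
    det = p0 * p1 - abs(c) ** 2
    disc = math.sqrt(max(0.0, 0.25 - det))   # clamp tiny negative rounding errors
    entropy = 0.0
    for lam in (0.5 + disc, 0.5 - disc):
        if lam > 1e-15:                      # 0 * log(0) is taken as 0
            entropy -= lam * math.log2(lam)
    return entropy

s = 2 ** -0.5
print(single_qubit_entropy([s, 0, 0, s], 0))  # Bell pair: 1 bit
print(single_qubit_entropy([1, 0, 0, 0], 1))  # product state: 0 bits
```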
And as you double your memory, I can add a qubit. It's not a system restriction; it's the amount of memory you have. Alex has a 192-gig machine, so you get a few extra qubits. But, you know, you've got to get very big to get anywhere much larger. >>: 30 qubits [inaudible]. >> Dave Wecker: Yes. Yes. Sorry. We do a little work on traveling salesman. >>: [inaudible] memory requirement: say 30 qubits is 10 to the ninth? >> Dave Wecker: Uh-huh. >>: And then -- >> Dave Wecker: They're complex: double-precision complex numbers. >>: Double-precision complex. So that's 16 bytes, right -- >> Dave Wecker: Right. >>: So it should be 16 gigabytes? >> Dave Wecker: Right. >>: Then you have a one -- >> Dave Wecker: You have an input and an output vector, and you've got a matrix [inaudible] in between, the products of products in between. So, yeah, and we can actually fit that in, so the full operation fits. We talked a little bit about doing distributed, being able to go to the disk, but the speeds get ridiculously slow. There's no point. And it's more the feeling that there is a reason you need a quantum computer to go to lots of qubits. So 30 is enough, you know, on an average machine, for people to do the experiments they need to do, to prove that when they had enough qubits they could get what they wanted. Someone did ask me, if we had a petabyte store in the back end of the cluster, could we do 45 qubits, because it would fit. Then I did the math for what one gate operation would cost, and everyone stopped asking. This is Matthias' Hamiltonian he gave me for traveling salesman. We're encoding edges here. The problem is that without higher-order constraints, even though we get the green answer, which is the right answer (this is just six cities), the system says no, no, no, you want a salesman on the east coast and a salesman on the west coast, because there's no constraint to keep it from breaking into separate loops. So they're all closed loops.
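The memory arithmetic from the exchange can be checked directly: a state vector of n qubits holds 2^n double-precision complex amplitudes at 16 bytes each. This sketch counts bytes for the vectors only, not any other working memory.

```python
BYTES_PER_AMPLITUDE = 16        # one double-precision complex number: two 8-byte floats
GIB = 2 ** 30

def state_vector_bytes(n_qubits):
    """Bytes needed for one dense state vector of n_qubits."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

print(state_vector_bytes(30) / GIB)        # 16.0 GiB for one vector
print(2 * state_vector_bytes(30) / GIB)    # 32.0 GiB for an input plus an output vector
print(state_vector_bytes(45) / 10 ** 15)   # about 0.56 petabytes for one 45-qubit vector
```

Each extra qubit doubles every figure, which is why doubling the memory buys exactly one more qubit.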
But now you have two of them. There's nothing that says it has to be a single loop. So you then have to start adding higher- and higher-order constraints every time you break things. But we're able to do eight cities, which is 28 qubits. So that gives you an idea of the simulation side: we can do eight-city traveling salesman. We also looked at adding more sophisticated terms to the Hamiltonian. For instance, by flipping pairs of edges at a time instead of single edges, we're able to get a doubling of speed. And usually this is where the papers stop, the ones that say, look, we figured out how to double, you know, the rate of getting answers to traveling salesman. Well, unfortunately it's four times the cost when you actually work out the gate cost of adding that term, so there's no point. But it's the type of thing the papers are working on: showing actual gate depth, showing actual costs for doing these sorts of things. >>: Do you have a sense of how this compares to code which is [inaudible] Hamiltonian simulations and you [inaudible]? >> Dave Wecker: We're terrible. And we know it. But that's not the point. >>: But what is the relative order of magnitude -- >> Dave Wecker: We'll show you [inaudible], and Matthias will give you some of that too, I think, in comments. I see him waiting. Everybody's seen the graph; I won't talk about it again. I won't say why it's good to do quantum chemistry; I think that's why everybody is here. This is H2. This is from the Whitfield, Biamonte paper; I should say Whitfield et al. But this is an automatic rendering from the system of what it looks like. This is an example of our results. The blue dots are the outputs from the simulator. It should be noted that generating the whole thing took about a minute on 20 machines in the cluster. So, again, it is pretty good at what it does. I should also mention that the X is what Whitfield got, or published in the paper. We don't agree completely. You notice we're a little bit lower for the blues.
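The edge encoding uses one qubit per possible edge, so n cities need n(n-1)/2 qubits, which gives the 28 qubits quoted for eight cities. The sketch below, with hypothetical function names, also shows the failure mode described: a set of edges can give every city exactly two edges (degree 2) and still form two separate loops. It does not reproduce the Hamiltonian itself, which the talk doesn't give.

```python
def edge_qubits(n_cities):
    """One qubit per undirected edge between cities: n(n-1)/2 qubits."""
    return n_cities * (n_cities - 1) // 2

def count_loops(n_cities, chosen_edges):
    """Count the closed loops formed by edges in which every city has degree 2."""
    adj = {c: [] for c in range(n_cities)}
    for a, b in chosen_edges:
        adj[a].append(b)
        adj[b].append(a)
    if any(len(nbrs) != 2 for nbrs in adj.values()):
        raise ValueError("degree-2 constraint violated")
    seen, loops = set(), 0
    for start in range(n_cities):
        if start in seen:
            continue
        loops += 1                      # an unvisited city starts a new loop
        stack = [start]
        while stack:
            city = stack.pop()
            if city not in seen:
                seen.add(city)
                stack.extend(adj[city])
    return loops

# Six cities as two triangles ("east coast" and "west coast"): every city has
# degree 2, so purely local constraints are satisfied, but this is not one tour.
two_triangles = [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3)]
one_tour = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]
print(edge_qubits(6), edge_qubits(8))                           # 15 28
print(count_loops(6, two_triangles), count_loops(6, one_tour))  # 2 1
```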
And actually Matthias did an exact solution, and that's the red circle. So we actually found a couple of mistakes in the paper that we fixed. And there are mistakes in every paper; that's okay. >>: [inaudible]. >> Dave Wecker: Say again? >>: I'll let James -- >> Dave Wecker: You'll let him know. Oh, good. He also sent me the wrong data. But that's a separate -- a grad student had done some work on it, and it was the wrong version. There was just no way of knowing. This is why we need data provenance and version control in academic work. But anyway, all of this is doable by script. You don't actually have to write any code. We have a general fermionic simulator that lets you sit there and say, okay, here's the information. From here down is actually the data coming out of a traditional quantum chemistry simulator, so these are all the constants. And you can tell it things like: I actually want a single unitary out. I don't want to do a full circuit; I want to collapse the whole thing down, use one matrix, and run the whole thing. You can also tell it: I want to decimate the matrix to physically realizable values, for instance keeping parity the same and conserving angular momentum. If I'm looking for a ground state, I assume half the electrons are spin up and half are spin down, so we can decimate the rows and the columns of the matrix. And all of that's in here, which gets away from quantum computer simulation and back to actual Hamiltonian simulation. So, thanks to Matthias, we actually got something that isn't too bad. But you're no longer simulating what a quantum computer would do. So I've got to be careful how much effort I want to put there, versus how much I want to put into this being a quantum computer simulator, right? But this makes it very flexible. You can just, from the script level, do whatever you can fit into 30 qubits. Here's water as an example. So we get some fairly reasonable results.
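Decimating by conserved quantities shrinks the matrix to one symmetry sector. Assume the usual minimal-basis (STO-3G) count for water: 7 spatial orbitals, hence 14 spin orbitals, and 10 electrons. A sector with 5 spin-up and 5 spin-down electrons then has C(7,5)^2 = 441 basis states, which matches the 441 by 441 matrix quoted for water in the talk. The talk's 2^15 figure for the full matrix may include qubits beyond the 14 spin orbitals; this sketch counts only the orbital space, and the function name is hypothetical.

```python
from math import comb

def sector_dimension(n_spatial, n_up, n_down):
    """Basis states with fixed numbers of spin-up and spin-down electrons."""
    return comb(n_spatial, n_up) * comb(n_spatial, n_down)

# Water in STO-3G (assumed): 7 spatial orbitals = 14 spin orbitals, 10 electrons.
print(2 ** 14)                    # 16384: every occupation pattern of 14 spin orbitals
print(comb(14, 10))               # 1001: conserving the electron count only
print(sector_dimension(7, 5, 5))  # 441: also fixing 5 spin-up and 5 spin-down
```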
You know, it's different from what either Hartree-Fock or DFT gives us. However, when we compare against an eigensolver solution, again thanks to Matthias, at 100 degrees for the bond angle and 1.9 for the bond length, we get -- we were only asking in this case for 14-bit accuracy, and we got 14-bit accuracy. So we're right on the mark of where we're supposed to be. Of course, as has been brought out before, this is 34,000 gates to do water. And then 2 to the 14th repetitions to get 14-bit accuracy. Of course, to get that you need a Trotterization of about 2 to the 10th. And then there are 50 samples, because you don't always get the ground state; you've got to try about 50 times to make sure you got the ground state at every point. And then there are 546 points to make a 3D graph here. That gets us out to about 10 to the 16th gate operations. This is without error correction and without Solovay-Kitaev. So let's talk about that a little bit. Before I do: I couldn't simulate this on the simulator as a circuit. There's just no way I could pull this off in my lifetime. So now we do the tricks we talked about. We conserve electrons, we conserve total spin, we make up spins equal down spins. And our 2 to the 15th by 2 to the 15th matrix becomes 441 by 441. And since we have a single matrix, of course, raising it to 2 to the K really becomes K multiplications of the matrix by itself. So now I can do this in minutes instead of years or decades. But if we take a look at the real numbers, given the size of water in a very small basis, STO-3G, at the bottom, and we now add the Solovay-Kitaev rotations and the quantum error correction (the bottom of the graph fell off, but that's okay), we wind up at around 10 to the 18th operations necessary. If we look at ferredoxin, which is what IARPA has been doing, I get up to about 10 to the 23rd operations. And this is not surprising; this is where Matthias started yesterday morning. So this is not what I would be trying to solve.
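The 10^16 estimate is the product of the factors listed, and the speed-up from having a single matrix comes from repeated squaring. This sketch shows both. It takes the factors from the talk and treats them as independent multipliers, which is our reading of the talk; `matpow_by_squaring` is a hypothetical name.

```python
def matmul(a, b):
    """Plain matrix product of lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))] for r in range(len(a))]

def matpow_by_squaring(u, k):
    """Return U^(2^k) with k squarings instead of 2^k - 1 multiplications."""
    for _ in range(k):
        u = matmul(u, u)
    return u

# Factors quoted for water in STO-3G, before error correction.
gates_per_step = 34_000   # gates in one pass of the circuit
precision = 2 ** 14       # repetitions for 14-bit phase estimation
trotter_steps = 2 ** 10
samples = 50              # repeats to be sure of hitting the ground state
grid_points = 546         # geometries for the 3D surface
total = gates_per_step * precision * trotter_steps * samples * grid_points
print(f"{total:.2e}")     # 1.56e+16, roughly 10^16

print(matpow_by_squaring([[1, 1], [0, 1]], 10))  # [[1, 1024], [0, 1]]
```

The shear matrix makes the squaring easy to check by eye: its n-th power is [[1, n], [0, 1]], so ten squarings give n = 1024.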
This is more to prove that my dog actually barks or talks, not how well he talks. Okay? Speaking of the Hubbard model from yesterday, we've also lately been working on cuprates. So this is what a circuit looks like for, I think, one plaquette. We're doing two plaquettes currently. And we have circuits for permutations and basis changes and the like for kinetic energy measurement. Matthias previously published work in the area, and we get the same results; we're able to reproduce them. And now we've built this version where we can adiabatically prepare the plaquettes, join them adiabatically, and then do phase estimation on them. We hand them over to the phase estimator, and it gives us the energy. We can bring it back and change the Hamiltonian, like the couplings in it. Now you're close; you can get adiabatically close to something else and do it again, and the like. So anyway, there's a lot of work going on in this area. And I'm out of time, so thank you very much. [applause]. >> Michael Freedman: So, time for some questions. Yes. >>: So I wanted to follow up. I mean, this is all very interesting. I was just curious: taking out, you know, the physics constraints of symmetries and [inaudible] number, your architecture always seems more general, in the sense that it's set up to do many, many other things than just simulate time evolution of the Hamiltonian. So compared to code that would just simulate time evolution of the Hamiltonian but didn't take advantage of any special symmetries, what's the overhead of your architecture? You know, is it minimal, or -- >> Dave Wecker: Are you asking, if I'm just doing circuit simulation, how much overhead there is because it's general? >>: Yes. >> Dave Wecker: None. >>: There's nothing -- >> Dave Wecker: None. It's a matrix-vector multiplier. >>: [inaudible]. >> Dave Wecker: Say again?
>>: [inaudible]. >> Dave Wecker: No, I was answering the opposite question. Right. I was talking about looking at it as a circuit simulation. If I'm doing it, like you said, as a Hamiltonian simulator, then yes, a factor of 8 at least. >>: 8 compared to the hard-coded, combined-matrix version where you just optimize it [inaudible]? >>: What do you mean by hard-coded -- >> Dave Wecker: They're going to do -- >>: Code: vectorized, optimized code for [inaudible], for example [inaudible]. >> Dave Wecker: Yes. >>: So say now if you had, like -- it's an exact diagonalization thing, but you applied it to do time evolution, that would be 8 times, and order -- >>: It's about 8 times faster if we were to do that. >>: And how much is to do with, like, a [inaudible], like the choice of language and, you know, [inaudible]? >> Dave Wecker: Some. This is a managed language. I don't have control of the bits at the bottom, right? >>: Right. >> Dave Wecker: I have no SSE, I have no AVX; you know, I can't use the nice vectorizing instructions. Now, on the other hand, I can link in your favorite linear algebra library and use it. I'm trying not to, on purpose, because we're trying to make a stand-alone useful tool that you can run on your laptop without needing a license for MKL or whatever you prefer to use. But we already have that available in the system; that's actually how we do our eigenvalue solver for entropy and the like. We link in a linear algebra package when you need it. So if you said, gee, I really need fast Hamiltonians or fast simulation, we could link it in and talk to it. In fact, I've been asked to do that a couple of times, and I keep fighting it, because our point is to do circuit simulation for quantum computers. And if we're within a factor of, say, an order of magnitude of the hand-coded version, I'm fine with that. Right. I mean, this is a garbage-collected language. There are a lot of tricks going on here to get it this fast.
>>: [inaudible] how much is due to, you know, implementation overhead after you guys got involved, and how much is due to the choice of F# and a managed language? >> Dave Wecker: I'd say -- oh, boy. I don't have a good answer. >>: [inaudible]. >> Dave Wecker: Probably half and half. >>: [inaudible] the coupling [inaudible] might be more the scripting features [inaudible] than the language. That's my guess. >> Dave Wecker: Yeah. The language isn't hurting you that badly. It's a very good optimizing compiler. But there are certain tricks I can't do; I can't get my hands on it. Yeah? >>: [inaudible] try to -- >> Dave Wecker: Oh, that graph was putting the bonds in all different directions. >>: Equilibrium. >> Dave Wecker: Well, the equilibrium was the point where it all came down; we showed the equilibrium, yeah. >>: [inaudible]. >> Dave Wecker: Oh, yeah. I'll bring it back. >>: [inaudible]. >> Dave Wecker: Well, we did both. >>: Oh, you did both? >> Dave Wecker: Yes. That's why one is bond length and the other is angle. >>: [inaudible]. >> Dave Wecker: And so you can see. And this was the minimum that we found. And at a specific point I compared it with Matthias's result. I also actually have graphs of Hartree-Fock and DFT versus this, but I didn't have time to put them up here. >>: You implemented these. >> Dave Wecker: Uh-huh. >>: [inaudible] do you have any methods in here that are beyond that, so [inaudible] some algorithm on 5 qubits? >>: [inaudible]. >>: Standard implementations of that plug in here. We haven't worked in that space as much to improve the methods yet. But that's our next step. [brief talking over]. >>: Decomposition. I mean, there are two or three methods. The cosine [inaudible] [laughter]? >> Michael Freedman: I just think this is an amazing amount of work, and Dave showed it to us in a very short amount of time. And I'm always impressed with people who actually accomplish things. I just like to think about things.
So let's thank David. [applause]