>> Krysta Svore: So I'd like to welcome Clare... assistant professor at Keio. And she's going to talk...

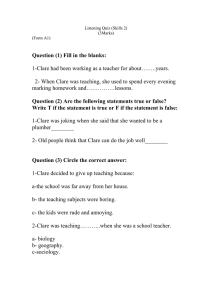

advertisement

>> Krysta Svore: So I'd like to welcome Clare Horsman here today from Keio University. She's an assistant professor at Keio, and she's going to talk to us about quantum picturalism for topological quantum computing. So, Clare.

>> Clare Horsman: Thank you, and thank you for having us all and letting us talk surface codes ad nauseam, ad infinitum, actually. So what I'm going to talk about today is yet another instantiation of the surface code: a way of linguistically representing the surface code. Rather than circuits or matrices, this is going to be a high-level language that we can use for representing the braids and the defects within the surface code. This is work I did when I had just arrived at Keio. It uses a particular form of pictorial quantum language called quantum picturalism that has come out of Oxford recently. It's all based on category theory. You'll be very pleased to know I am not going to teach you category theory. I can if you like --

>>: Others have tried.

>> Clare Horsman: Others have tried. But the great thing about this way of doing it is that it's quantum mechanics in pictures. And the pictures are guaranteed to -- they're a proof algebra. So you can manipulate pictures instead of equations. And everybody suddenly gets much happier when you say that. So what am I going to talk about? First off, I'll give a brief introduction to the 3D surface code model. I've got a few extra slides I just put in this lunchtime, so we'll go through the different types of surface code and where they fit in with what Shota (phonetic) talked about this morning and what I talked about with some of you yesterday about the planar code. There's a whole family of surface codes. And I'll also say a little bit about how they relate to the anyonic topological model, because these are all closely related.
And one of the things I would like to see at some point is the use of this language within the anyonic topological model, because I think it should work natively there, the same way it does with the surface code. Then I'll start talking about quantum picturalism, so quantum mechanics in pictures. And I love this. It's a process algebra, which means there are some slightly nonintuitive aspects to it, because you're representing processes rather than qubits, but that is what makes it easy to map to the surface code, as we'll see. And I'll go through the work that I did, which was showing that you can use quantum picturalism as this native language for the surface code. It had been suspected that it was. Raussendorf, Harrington, and Goyal, when they invented this in their original paper on the 3D surface code, said, you know, this kind of looks like quantum picturalism, but we don't know how to make that formal. So what I did in this work, and the paper's up in New Journal of Physics, is make that formal. So we now have an exact isomorphism between the geometry of the quantum picturalism flow diagrams and the geometry of the defects within a 3D quantum computer. That means you can use picturalism, as I want to use it, as a compiler language for the surface code, because you have a 1:1 correspondence between the geometries. Rather than manipulating circuits or matrices or anything at a low level like that, you can manipulate the quantum picturalism diagrams at a high level and then immediately use those as almost VLSI-type layout diagrams for the surface code. That also gives you, and I'll talk about this at the end, another way to optimize, because within this language you have a whole sequence of rewrite rules. You can automate this rewriting, and these rewrites give you a parameter space to optimize over. So let's dive in. 3D cluster state topological quantum computing: what's that?
Instead of doing what Shota was talking about this morning, having a two-dimensional system that evolves over time where you measure the qubits, reinitialize them, and then measure them again, the three-dimensional code uses strings of, if you like, single-shot qubits. You measure, say, a photon, and it's gone. You can't use it in the 2D surface code, because you can't reinitialize it. You can use it in the 3D code, because you only ever need to measure. You don't need to reinitialize. You don't need to perform stabilizer operations. The third dimension in the 3D cluster model stands in for time. Instead of having a two-dimensional system that evolves over time, you have a three-dimensional fixed system, and we'll see in detail how that works. This is the fundamental unit within the 3D model. Every point is a qubit, almost certainly a photon. The lines between them are controlled-zed operations. You tile this in all three dimensions, and you have this huge three-dimensional cluster state. So this is a highly entangled state, the same way as in the two-dimensional case you have a highly entangled two-dimensional system, where the entanglement comes because you've measured all the syndromes.

>>: [inaudible].

>> Clare Horsman: That's just differentiating between -- it's to make it easier to see. The white and the red are identical. They're just photons; you entangle them. So let's do a brief digression into all the different types of surface code. This is a two-dimensional planar surface code. These will be made out of qubits that you can initialize, measure, and reinitialize: solid-state qubits. This is your syndrome qubit, and these are your data qubits. You're going to measure the syndromes exactly as Shota said this morning. With the planar surface code, it's one qubit per surface, which of course raises the question of how you then interact them. That's another story that I'm not going to get into today. That was the first surface code that came up.
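The unit cell described here (every point a qubit, every line a controlled-zed) can be enumerated in a few lines. This is a minimal sketch, assuming the usual Raussendorf-Harrington-Goyal layout: cube corners at even integer coordinates, one qubit on each edge midpoint and each face centre, and a CZ bond between every pair of qubits at distance 1. The third coordinate is treated as simulated time, and `unit_cell` is a hypothetical name.

```python
from itertools import product


def unit_cell():
    """Qubit sites and CZ bonds of one cubic cell of an RHG-style 3D cluster.

    Assumed convention: cube corners at even coordinates in {0, 1, 2}^3; a qubit
    sits on each edge midpoint (one odd coordinate) and each face centre (two
    odd coordinates); a CZ bond joins every pair of qubits at distance 1.
    """
    qubits = [p for p in product(range(3), repeat=3)
              if sum(c % 2 for c in p) in (1, 2)]
    sites = set(qubits)
    bonds = []
    for p in qubits:
        for axis in range(3):
            # Step +1 along one axis only, so each bond is counted once.
            q = tuple(c + (1 if k == axis else 0) for k, c in enumerate(p))
            if q in sites:
                bonds.append((p, q))
    return qubits, bonds


qubits, bonds = unit_cell()
layers = [sum(1 for q in qubits if q[2] == t) for t in range(3)]  # t = simulated time
print(len(qubits), len(bonds), layers)  # 18 24 [5, 8, 5]
```

Under these assumptions the cell has 18 qubits and 24 CZ bonds, and slicing it along the time axis gives layers of 5, 8 and 5 qubits. Tiling then amounts to extending the coordinate ranges.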
One surface, one logical qubit. This is another type of two-dimensional surface code. You have a single defect in the middle, which is where you're not enforcing that syndrome: you don't measure the syndrome of these four qubits. So you have a degree of freedom in your lattice, and you have a logical qubit. If you look here, these are your logical operators. You have to operate on all of these qubits simultaneously to perform a logical operation. You can't change the degree of freedom held in this surface except along these rings or chains, and the chains go between the defect in the middle and the edge. So, again, this is one qubit per surface. How do you get more than one qubit on the surface? You use two defects per qubit. Then your logical operators are defined between the defects rather than between a defect and the edge of the lattice. So you can have a big, big, big lattice with lots of these defect pairs in it. And then you braid them: you move them around each other, and you intertwine their logical operators. When you intertwine the logical operators of encoded qubits, you've performed a gate. That's what a two-qubit gate is in this type of surface code. What the 3D code does is take this one and, for each qubit, each data qubit and each syndrome qubit, substitute in the third dimension, so this way, in and out of the board, a line of entangled qubits. Instead of a single qubit evolving over time, you have a sequence of entangled qubits, where each one stands in for that qubit at a given time. The 3D model proceeds by measuring two-dimensional faces of this huge three-dimensional cluster, one for each time step. You can think of the 2D case as watching a movie. In the three-dimensional case, you've printed out all of your movie stills and stacked them up. Each one of them is a time step.
But instead of just having a single screen, you need all of these stills to give you the same motion and the same interactions. The reason we want the difference between the 2D and the 3D is how we're going to implement this. Like I said, we would implement the 2D code on something like quantum dots or flux qubits: anything you can keep there, where measuring doesn't destroy them. You can reinitialize them. You can measure them over and over again. You can't do that with photons, so you can't have an optical two-dimensional surface code. If you're measuring the syndrome, it's gone. You do a CNOT operation, and the data qubits are gone. So instead, you create all of the entanglement that you need for syndrome measurement beforehand: you create this massive three-dimensional entangled structure, and then you consume it as you go. Rather than creating the entanglement as you go, the entanglement for the syndrome measurements is created beforehand, and then all you do is measure.

>>: Is that the same, then, as [inaudible]?

>> Clare Horsman: It's a one-way computation. What you're doing in the one-way model is teleporting from one time slice to another. When you measure a time slice, you teleport to the next one, and that gives you your progression over time. It's entirely a measurement-based system. You prepare your cluster state and consume your resource down. You create defects by measuring in the zed basis; that removes qubits from the cluster. You create -- well, you measure out the rest of the lattice in the X basis. So at each time step you have a complete readout of what your measurements were, and from those you can reconstruct syndromes and stabilizers, find your errors, and do error matching. Austin's code that Shota was using can handle both 2D and 3D. The matching is very, very similar: rather than having measurements at different times, you simply have different two-dimensional slices of measurements.
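To make "teleporting from one time slice to the next" concrete, here is a minimal two-qubit sketch in Python with NumPy. It is an illustration under stated assumptions, not code from the talk: a qubit holding a state is joined by a CZ to a fresh |+> qubit, and the first qubit is then measured in X. The state reappears on the second qubit as X^m H applied to the input, where m is the measurement outcome; that X^m byproduct is the kind of thing the classical measurement record lets you keep track of. `one_way_step` is a hypothetical name.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
X = np.array([[0, 1], [1, 0]])
CZ = np.diag([1, 1, 1, -1])
PLUS = np.array([1, 1]) / np.sqrt(2)
MINUS = np.array([1, -1]) / np.sqrt(2)


def one_way_step(psi, outcome):
    """Entangle psi with a |+> qubit via CZ, then measure psi's qubit in X.

    outcome 0 projects qubit 1 onto |+>, outcome 1 onto |->.
    Returns the normalised post-measurement state of qubit 2.
    """
    state = CZ @ np.kron(psi, PLUS)        # qubit 1 = psi, qubit 2 = |+>
    bra = PLUS if outcome == 0 else MINUS
    # Reshape to (qubit 1, qubit 2) and contract qubit 1 with <bra|.
    out = bra.conj() @ state.reshape(2, 2)
    return out / np.linalg.norm(out)


psi = np.array([0.6, 0.8j])
for m in (0, 1):
    expected = np.linalg.matrix_power(X, m) @ H @ psi
    print(m, np.allclose(one_way_step(psi, m), expected))
```

Chaining this step along a line of qubits is the one-dimensional version of consuming the cluster slice by slice; the 3D code does the same thing across whole 2D faces.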
So this is a schematic way of thinking about that similarity. Here is the 2D case. This would be a zed syndrome measurement: four qubits on a face, and you do control-nots between the data qubits and the syndrome qubit, which gathers your syndrome for those four qubits. And then at the next time step, you're measuring the X syndrome. So you're measuring these two, and there will be another two up there. And then at the next time step you're measuring your zed syndromes again. So if you look at just a tiny piece of the lattice, this is what you have over time, Time 1, Time 2, Time 3, as you go through zed, X, zed.

>>: Can you explain a little bit more of the semantics of the arrows?

>> Clare Horsman: Oh, the arrows --

>>: [inaudible] X and Z [inaudible].

>> Clare Horsman: Yeah, the arrows are just the control-nots, in whichever way around you want. It's not anything other --

>>: The arrows point to the control --

>> Clare Horsman: No, the arrows point to the syndrome qubit. In this case --

>>: Which is the controlling one?

>> Clare Horsman: No. They're different. The X ones -- so for the zed syndromes, you have to control on the zed syndrome X. So the X --

>>: [inaudible] X the opposite of the two.

>> Clare Horsman: Yes. One -- one --

>>: [inaudible].

>> Clare Horsman: You get bit flip errors on the zed --

>>: [inaudible].

>> Clare Horsman: -- syndrome.

>>: Well, you -- you pick up your bit flip errors on the zed syndrome measurements, which means this is control-target and this is control-target.

>>: [inaudible] control-target is [inaudible].

>> Clare Horsman: Yes, but they're different ways around.

>>: [inaudible].

>> Clare Horsman: On the X, this syndrome is the control and the data is the target. On the zed, this syndrome is the target and [inaudible] the control. That's all. So we have these three schematically at three different times.
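The two syndrome types can be checked with a small state-vector simulation. This sketch assumes the textbook convention, since the exchange above leaves the orientation somewhat muddled: for a zed syndrome, each data qubit controls a CNOT onto an ancilla prepared in |0>; for an X syndrome, an ancilla prepared in |+> controls a CNOT onto each data qubit and is rotated back with a Hadamard before readout. Either way, the probability of reading the ancilla as 1 is the parity of the four data qubits in the corresponding basis. All function names are hypothetical.

```python
import numpy as np

N = 5    # qubits 0-3: the four data qubits on one face; qubit 4: the syndrome qubit
ANC = 4


def bit(i, q):
    """Value of qubit q in basis index i (qubit 0 is the most significant bit)."""
    return (i >> (N - 1 - q)) & 1


def basis(bits):
    v = np.zeros(2 ** N)
    v[int("".join(str(b) for b in bits), 2)] = 1.0
    return v


def apply_cnot(state, control, target):
    new = state.copy()
    for i in range(2 ** N):
        if bit(i, control):
            new[i ^ (1 << (N - 1 - target))] = state[i]
    return new


def apply_h(state, q):
    s = 1 / np.sqrt(2)
    new = np.zeros_like(state)
    for i in range(2 ** N):
        j = i ^ (1 << (N - 1 - q))
        new[i] += s * state[i] * (-1) ** bit(i, q)  # |b> -> (|0> + (-1)^b |1>)/sqrt2
        new[j] += s * state[i]
    return new


def prob_syndrome_one(state):
    return sum(abs(state[i]) ** 2 for i in range(2 ** N) if bit(i, ANC))


def z_syndrome(data_bits):
    """Zed syndrome: each data qubit controls a CNOT onto the ancilla (in |0>)."""
    state = basis(list(data_bits) + [0])
    for d in range(4):
        state = apply_cnot(state, control=d, target=ANC)
    return prob_syndrome_one(state)


def x_syndrome(data_signs):
    """X syndrome: the ancilla (in |+>) controls a CNOT onto each data qubit.

    data_signs[k] = 0 puts data qubit k in |+>, 1 puts it in |->.
    """
    state = basis(list(data_signs) + [0])
    for q in range(4):
        state = apply_h(state, q)          # |0>, |1>  ->  |+>, |->
    state = apply_h(state, ANC)            # ancilla   ->  |+>
    for d in range(4):
        state = apply_cnot(state, control=ANC, target=d)
    state = apply_h(state, ANC)            # rotate back before reading out
    return prob_syndrome_one(state)
```

For example, `z_syndrome([1, 0, 1, 1])` gives 1.0 (an odd number of ones), while `x_syndrome([1, 0, 0, 1])` gives approximately 0 (an even number of |-> states). The direction of the CNOTs is exactly the difference discussed above: data controls for zed, syndrome controls for X.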
And in the three-dimensional case, we would have this unit element, with these five qubits at the back, these five at the front, and these eight in the middle, all joined by controlled-zed operations. So you can see structurally how they relate to each other. That's our unit cell. It's exactly what I showed on the previous slide, and that's why we have this as the unit in the 3D cluster.

>>: [inaudible] I'm confused now. [inaudible] zed and who is X in the three dimensions?

>> Clare Horsman: It doesn't -- well, I would normally talk about zed as the simulated time, so think of that as going into and out of the board. What we do, as I said before, is measure out individual qubits. If we wanted to make a defect in this lattice, we would measure this qubit and this qubit, and that would punch a hole through the lattice. One of those holes together with the edge of the lattice supports a logical qubit. If we want more than one qubit per lattice, we need a pair of holes, exactly as in the 2D case. All the measurements we do are either X or zed [inaudible] measurements, and we gather our syndromes from those measurements. So you don't punch a hole in the lattice first and then come along and measure. At every time step you have a measurement pattern that says: right where my hole is, I measure zed, and everywhere else I measure X. So creating the defects and measuring your syndromes both happen at the same time. We're doing the equivalent of syndrome measurement. And then, just as in the 2D case, you braid: you move one defect around another in a given pattern. We'll see more of that in a bit. Everyone happy? You all know everything about the 3D surface code now.

>>: So to braid in 3D, you need to run this [inaudible]?

>> Clare Horsman: You need what, sir?

>>: [inaudible].

>> Clare Horsman: A pair of tubes supports a single qubit. Then you move one of the tubes from your second defect around and in between the two.
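A measurement pattern over a tiled lattice is then just a map from each qubit to a basis: zed inside the defect, X everywhere else. In this minimal sketch the lattice uses an assumed RHG-style convention (qubits on cube edges and faces, last axis as simulated time), and the defect is a hypothetical straight tube one unit cell wide running along simulated time. A real braid would use tubes whose cross-section and separation are set by the code distance and whose paths wind through the lattice.

```python
from itertools import product


def lattice_qubits(nx, ny, nt):
    """Qubit sites of an RHG-style cluster spanning nx * ny * nt unit cells.

    Assumed convention: cube corners at even coordinates, qubits on edges
    (one odd coordinate) and faces (two odd coordinates); the last axis is
    simulated time.
    """
    return [p for p in product(range(2 * nx + 1), range(2 * ny + 1),
                               range(2 * nt + 1))
            if sum(c % 2 for c in p) in (1, 2)]


def measurement_pattern(qubits, in_defect):
    """Measure zed inside the defect (removing those qubits), X everywhere else."""
    return {q: ("Z" if in_defect(q) else "X") for q in qubits}


def tube(q):
    """Hypothetical defect: the column of cells with 2 <= x, y <= 4, for all t."""
    return 2 <= q[0] <= 4 and 2 <= q[1] <= 4


pattern = measurement_pattern(lattice_qubits(3, 3, 2), tube)
print(sum(b == "Z" for b in pattern.values()))  # 31 qubits measured in zed
```

The 31 zed measurements are the 11 faces and 20 edges of the two stacked cells that form the tube; every other qubit is measured in X, and both kinds of measurement happen in the same pass, as described above.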
That's braiding. It starts looking very similar to all the space-time anyon pictures that you get. I don't know how much you've gone into the connection between the two, but basically this pattern of interactions between these qubits and this geometry simulates the [inaudible] Hamiltonian. It's an exact -- it's almost like we're doing an emulation of the [inaudible] Hamiltonian. We are emulating anyons. However, it's an awful lot easier, because we can do it in things like photons and quantum dots. We don't have to go off and try to find fractional quantum Hall effects. So that's the connection between these two: we're simulating the anyonic Hamiltonian rather than going into nature and finding something that actually works with that Hamiltonian. This is showing how we put together this unit cell. Like I said, you tile it in all three dimensions and you get your lattice. And this is part of a braid. Everywhere within this block, you're measuring out in zed, taking it out of the cluster. Everything outside, you measure in X. Again, the size of your defect here is determined by the code distance, which is determined by how long you want the computer to run and at what accuracy. The code distance here is the distance around one of these defects. It's also the same as the distance between the defect pair. That's your code distance. You create these tubes from singular points in exactly the same way as in the 2D case. So if you want to start, say, in the zero state, you initialize everything -- well, you're always initializing all of your qubits in the plus state, because you're trying to build a cluster state. So you take one, measure it in zed, and that gives you a zero. Then you expand out the region that you've measured in zed, and then you turn a corner like this, and you end up with a horseshoe shape. So if we've got simulated time going like that, you start off with this horseshoe. So you start measuring.
And the first time you hit it, you have a long band of measurements in zed. The second time, it striates into two, and that's how you create your defect pair. If you're doing singular state injection, you simply inject the state right at the beginning, before you spread out. So it all works analogously to the 2D code. What we have for an algorithm, then, is a collection of braiding patterns: a collection of these tubes wandering around in this lattice and braiding each other. It's a three-dimensional tube diagram, like that. So this is a CNOT.

>>: [inaudible].

>> Clare Horsman: Yeah, can we -- this --

>>: Ah.

>> Clare Horsman: Can we kill the lights? Thank you.

>>: I didn't do anything.

>> Clare Horsman: Yes, I know. I was thanking whoever can obviously hear me. We're measuring out faces here at each time, and time is going that way. So you start off with one, two, three qubits and a braid. The reason why you do the CNOT in exactly this fashion is slightly complicated, and we can get on to that later. Essentially this is your control, and this is your target. Everywhere that's shaded you're measuring out in zed. Everywhere else, all of these little dots, each one a single photon, you measure in X at your measuring bank here as it comes at you. You just measure through the cluster like that, and that gives you a CNOT. By the time you've finished, your control qubit and your target qubit, which are still propagating through, are now braided. Thank you. Can we have lights? Thank you. So that's what one CNOT looks like at quite a large distance. I mean, we've got, what, one, two, three, four, five, six, seven, eight. So distance 32. So, braiding. When we go to a full algorithm, how tractable do you think this is going to be? I'm going to show you a tiny part of the distillation circuits for singular state injection for the 3D code.
Between about 75 and 90 percent of the braids required for 3D algorithms, and it's the same for 2D, are distillation circuits, to distill your singular states. That is a tiny fraction of the braids you need to distill one T-gate state. You want to optimize that by hand? You want to give the quantum computer the instructions to measure in that pattern by hand? Look at that. If I hadn't told you what it was, would you know what it was? How can anybody eyeball that and tell me, oh, yeah, that's a distillation circuit, these are all CNOTs? If one of these is wrong, how can you tell? We don't have the tools. We need to develop the tools, and that's why I want to develop the language that I'm going to present now, because this is too complicated to do by hand. So that was the bad news. Now the good news: we don't have to do it by hand. We want to optimize. We want to design. We want to pick out whether something's wrong. We need a compiler. We need a high-level language for these braid patterns. We need something that we can automate, something that we can program, something we can send to a computer and say, tell me what this braid pattern does. Something where you can design braid patterns for algorithms and say --

>>: Asking the computer what a braid pattern does looks to me like a decompiler [inaudible] can be a hardware problem [inaudible] compiler. Maybe you can get by without a decompiler or not.

>> Clare Horsman: Well, we need something that translates between braid patterns and circuits.

>>: From circuits to braid patterns.

>> Clare Horsman: And vice versa.

>>: [inaudible].

>> Clare Horsman: Because I want to know: I've got a braid pattern; is it right? Is it giving me the correct answer? Also, say I do something slightly strange with optimization. I give it to some sort of genetic algorithm, and it spits back something that looks really weird. How do I know it's right?
How do I know what I've optimized to is actually the correct algorithm and isn't suddenly giving me garbage or doing illegal moves?

>>: In the integrated circuit world, this is called LVS.

>> Clare Horsman: Yes. Very similar to that. What we want is those tools for quantum.

>>: In particular [inaudible] this case, often what it amounts to is verifying that one pattern you constructed is equivalent to another pattern that you already have.

>> Clare Horsman: Yes.

>>: That's a classic optimization problem.

>> Clare Horsman: And all of these things, because they map so easily to the classical problems, we know they're NP. We know they're NP-hard or complete. We're not going to solve these exactly. We need the tools; we need to get it good enough. And we don't even have the slightest tools at the moment. We're not aiming for perfection in any of the optimization stuff; we just need better than what we have.

>>: So I have a crazy question here. Once you have a quantum computer, does NP stop you?

>> Clare Horsman: At the moment that is unknown and left up to people's intuition. I would say it's an interesting question whether we can get polynomial speedups of NP problems. I very much doubt that P equals NP, so I very much doubt we would get exponential speedups for those problems. But using a quantum computer to compile circuits for quantum computers would be a very good thing to do. So this is just what I was saying. These are the three things we want to use it for. Which set of gates is this braid pattern implementing? How do we get a set of different patterns that all implement the same thing, to optimize over? And let's do compilation. This is what I want to use as the language for a compiler: quantum picturalism, category theory in pictures. This work, as I said, came out of Oxford in the last five years. The first paper on this version of quantum mechanics in pictures is called "Kindergarten Quantum Mechanics."
And the head of the group, Bob, always goes on to say, I can teach quantum mechanics to ten-year-olds this way, I can teach quantum mechanics to five-year-olds this way, and they can do calculations that my undergraduate students can't. It's a very powerful way of thinking about quantum mechanics, and you don't need any equations. But as I said right at the beginning, it's a process algebra. So when we start looking at the elements in the calculus, even though it looks just like balls on sticks, each ball is a process that you do rather than a qubit. It's always worth bearing that in mind. Then you get to the 3D surface code, and those merge together, but we'll -- I wish to start you off unconfused, even if you become confused later. This is also verifiably a proof calculus for quantum mechanics: if you apply the rewrite rules to the graphical language and show that two diagrams are the same, you know that you would get the same result by working through the equations. I can give you references for that; there's a very nice paper by Peter Selinger. And this was my work, showing the topological and geometrical isomorphism between the flow and dependency lines in the process algebra of quantum picturalism and these tubes that you're trying to braid in the 3D model. So this is the paper reference. It's also in New Journal of Physics now. So any questions before we dive straight into quantum picturalism?

>>: When you say rewrite rules, what kind of [inaudible]?

>> Clare Horsman: Well, we'll go into that, because to present the system, I present the rewrite rules. It's not a large system. You don't need many rewrite rules to describe the whole of quantum mechanics, and you need even fewer to describe the 3D model; you only need a subset. So quantum picturalism can describe any quantum process.
You only need a subset of the rewrite rules in quantum picturalism to describe the 3D surface code, and that subset has some very nice properties, which we'll get to. So the idea that this has got anything whatsoever to do with the surface code seems, on the surface, strange: why would it? Why would this weird category theory stuff have anything to do with error correction? Actually, Raussendorf, Harrington, and Goyal sneak it in right at the end of their original paper, just before the acknowledgments. They mention a connection with the category-theoretic work of Abramsky and Coecke. They ask: is this the category-theory line of information flow? They didn't have the tools back then; this was 2007, and picturalism wasn't developed enough to make this connection. But now it is. So these are the fundamentals. Everything I'm going to show you corresponds to an element in the symmetric monoidal category with strong compact closure, which I'm sure means everything to you. You have rewrite rules on these elements. There have been a lot of ways down the years that people have tried to do quantum mechanics graphically. I don't know whether any of you have seen this one: Roger Penrose, 1977, Penrose's tensor calculus. This was Roger Penrose going off on one, going, hey, I can do quantum mechanics graphically, and people kind of scratched their heads going, this is slightly weird, but it actually spawned the entire category theory diagrammatics industry that we now have.

>>: Is it [inaudible]?

>> Clare Horsman: Yes. So this has a strong relationship to what I'm about to show you and actually relates, as we'll see eventually, to the horseshoe beginning of a braid. That's what his triangle maps onto. But quantum picturalism isn't the entire family that has come out of Penrose's tensor calculus; it's specifically the category theory diagrammatics. So, yet again, remember it's a process algebra.
And we see the dependencies: which processes depend on the output of other processes. If two processes are connected, that means they depend on each other. So let's dive in with an example. I don't want to take you straight into here's all the ways you start it and build it up; I just want to use it. I'm going to prepare a Bell state. So this is qubit preparation. Time flows down the page. Once we start getting into more than two dimensions, it gets fun; we'll see some four-dimensional diagrams later on.

>>: What do you mean, time flows down?

>> Clare Horsman: Process time.

>>: What does that mean?

>> Clare Horsman: That means, as we'll see in a bit, you have two qubits, you do this process, and if there were any more processes here, you do those.

>>: [inaudible].

>> Clare Horsman: It's what? Sorry?

>>: So it's dependence?

>> Clare Horsman: It's dependence, yes.

>>: And what about the arrow of time here? Or put another algebra in perspective [inaudible] field theory, where time plays a secondary role [inaudible].

>> Clare Horsman: Yes. That's not -- the formal diagrams aren't really process algebras, though.

>>: No, I understand.

>> Clare Horsman: Yeah. So every dot represents a process. These dots with no input are state preparations: with the green one you prepare a plus state, and with the red one you prepare a zero state. So there are our individual qubits. Now we're going to do a CNOT between them, and that's this. So we prepare, and then we perform these processes to do a CNOT. Your diagram for the EPR state is the preparation procedure: you prepare, and then use a CNOT. So this is the picturalism representation of the EPR pair, 00 plus 11. So you can start getting a flavor: we've got two different colors of dots that have something to do with the X basis and the zed basis, because the green was preparing in plus and the red was preparing in 0. And when you connect them together, that's a gate.
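The same preparation can be checked with ordinary linear algebra: a green node prepares |+>, a red node prepares |0>, and a CNOT gives (|00> + |11>)/sqrt(2). This minimal NumPy sketch assumes the green (|+>) qubit is the control, which is how the Bell-state circuit is usually drawn.

```python
import numpy as np

ket0 = np.array([1.0, 0.0])                   # red node: prepare |0>
ket_plus = np.array([1.0, 1.0]) / np.sqrt(2)  # green node: prepare |+>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

# Control = the |+> (green) qubit, target = the |0> (red) qubit.
bell = CNOT @ np.kron(ket_plus, ket0)

# Amplitudes on |00>, |01>, |10>, |11>: the EPR pair (|00> + |11>)/sqrt(2).
assert np.allclose(bell, np.array([1, 0, 0, 1]) / np.sqrt(2))
```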
So you're making each qubit dependent on the other.

>>: So there is a little ambiguity here. Your starting point was to say that all of those round shapes are processes.

>> Clare Horsman: Yes.

>>: But now you just called the CNOT a process, and there is no round shape for the CNOT here.

>> Clare Horsman: Yes. So this is state preparation. This is CNOT. You create an EPR pair by doing the state preparation and then the CNOT.

>>: So they're not -- the processes are not [inaudible] is a round shape?

>> Clare Horsman: Yes, it is. It's visualized by these two round shapes connected.

>>: Okay. I got it.

>> Clare Horsman: So each dot is a single-qubit process, although that's not strictly true, as we'll see later. So you start getting the idea. Why process algebras? A bit too many words on this slide. But we're trying to find what depends on what: where does the information go in an algorithm? Which qubits do you need to interact with which other qubits? That's why people started looking at these process algebras. Now, in the classical world, it's obvious that you use a process algebra for an information process. Information doesn't change unless you poke it. In the quantum realm, a lot of people think you can have nonlocal change of information: that if two things are entangled and you change this one, that one will automatically change. So how on earth can you have a process algebra if there's nothing connecting those two? You poke this one and that one changes. And that was the starting point for the category theorists. They said, we want to create a quantum process algebra. We want to be able to do quantum mechanics locally. Information flows. It doesn't jump from here to here; it flows directly between them. And it was actually a big deal that they showed you could do this. The only problem is that a lot of the time, if you interpret what I called process time as real time, you have information flowing backwards in time.
But we'll see how, once you use this to describe braiding patterns, that ceases to be a problem. And we have process rewriting, as I said. Now, all of these algebras that came out of Penrose's tensor calculus have normal forms, which means you rewrite, you rewrite, you rewrite, and you hit a normal form for that particular unitary. We have that in quantum picturalism as well, and that's very, very useful when we start applying the rewrite rules to braid patterns. We're not quite there yet. But we can get down to normal forms, and if two things have the same normal form, you know they're identical.

>>: That's just a proof that a normal form exists, not a recipe for actually getting it.

>> Clare Horsman: Correct. But --

>>: [inaudible].

>> Clare Horsman: Exactly. It's a big deal to have that. Okay. So, for example, this is the normal form of the EPR Bell state. We take what we produced before, apply rewrite rules that I'll get to in a bit, and create this. And this is demonstrably the normal form for the Bell state. That's just one example of a normal form. So here are some axioms. We're using, as I said, a subset of the complete quantum picturalism. We're using the X-zed category of observables, because this is the one that maps most directly to what we're doing in error correction. So we have essentially two flavors of processes, one in the zed basis and one in the Hadamard basis. These are our basis state preparations. If you want to prepare a zero state, it's a plain red one. If you want a one state, it's a red one with a pi phase. We've got all sorts of rewrite rules for how you move these phases through diagrams, but you need to keep track of these phases here to tell you which one of [inaudible] it is. And then we can do the opposite: we can measure. This is an input with no output; it's the measurement. It ceases being a qubit at that point. And the other fundamental element is a Hadamard box.
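The basis-state nodes can be written as matrices. This sketch assumes the standard ZX-calculus reading of the red/green calculus: a green node with m inputs, n outputs and phase alpha is the matrix |0...0><0...0| + e^{i alpha}|1...1><1...1|, and a red node is the same thing with a Hadamard box on every leg. Nodes are only defined up to a scalar, so they are compared up to proportionality. The checks confirm that a plain red node prepares |0>, a red node with phase pi prepares |1>, a plain green node prepares |+>, and a red node with an input but no output is the measurement effect <0|. The helper names are hypothetical.

```python
import numpy as np
from functools import reduce

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)


def kron_all(mats):
    """Tensor product of a list of matrices (1x1 identity for an empty list)."""
    return reduce(np.kron, mats, np.eye(1))


def z_spider(m, n, alpha=0.0):
    """Green node with m inputs and n outputs, as a (2**n, 2**m) matrix:
    |0...0><0...0| + exp(i*alpha) |1...1><1...1|."""
    t = np.zeros((2 ** n, 2 ** m), dtype=complex)
    t[0, 0] = 1
    t[-1, -1] += np.exp(1j * alpha)  # += also covers the 0-leg scalar case
    return t


def x_spider(m, n, alpha=0.0):
    """Red node: a green node with a Hadamard box on every leg."""
    return kron_all([H] * n) @ z_spider(m, n, alpha) @ kron_all([H] * m)


def proportional(a, b):
    """True if a == c * b for some non-zero scalar c (nodes are unnormalised)."""
    a, b = np.ravel(a), np.ravel(b)
    k = np.argmax(np.abs(b))
    c = a[k] / b[k]
    return abs(c) > 1e-9 and np.allclose(a, c * b)


ket0, ket1 = np.array([1, 0]), np.array([0, 1])
plus = np.array([1, 1]) / np.sqrt(2)

assert proportional(x_spider(0, 1), ket0)         # plain red node: prepare |0>
assert proportional(x_spider(0, 1, np.pi), ket1)  # red node with pi: prepare |1>
assert proportional(z_spider(0, 1), plus)         # plain green node: prepare |+>
assert proportional(x_spider(1, 0), ket0)         # red, input but no output: <0|
```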
And as you would expect, a Hadamard box allows you to go between red and green. So if you put a Hadamard box on the input of a green, it takes it to a red with Hadamards on the output. Or you could say a green with Hadamards on both its inputs and outputs becomes just a red. And, again, there are various rules. So a Hadamard box takes you from red to green. This is the first of the rewrite rules: cancellation rules. Two Hadamards one after the other are the same as doing nothing. Two reds one after the other, the same as one red; two greens, the same as one green. Now we have the most important rewrite rule of all. We have a couple of processes, and this works for red as well: you can combine their phases. If you're doing them sequentially, you can combine them into one process. You combine all the inputs and all the outputs into the inputs and outputs of one process with a combined phase. This is called the spider rule, for obvious reasons. And because Bob, the head of the group, has a thing about spiders. Don't ask.

>>: That particular spider?

>> Clare Horsman: Black widow. Yes. He has a large plastic black widow spider in his office, along with a large plastic rattlesnake.

>>: He has issues.

>> Clare Horsman: What?

>>: He has issues.

>> Clare Horsman: It was a very interesting place to work. So we can actually write out the equations for these green nodes. This is the equation for the spider. All of these are processes; they're projections. You put a qubit into them: you stick this as an operator on a state, and it tells you what the output is. It takes m zero inputs to n zero outputs, and m one inputs to n one outputs times the phase factor. That's just the way it works. And we can use this to build up gates. The first thing we can see from it is that the green node copies red nodes, and the red nodes copy green nodes. So if you input a red into a green, it splits it into two reds. If you input a red pi into a green, it splits it into two red pis.
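The spider equation and the rules above can be checked numerically. This sketch again assumes the standard ZX-calculus matrices for the green and red nodes (redefined here so the block stands alone); the phases `a` and `b` are arbitrary test values. It checks Hadamard cancellation, the spider rule (connected same-colour nodes fuse and their phases add, for any numbers of legs), and the copy rule (green copies red states, red copies green states).

```python
import numpy as np
from functools import reduce

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)


def kron_all(mats):
    return reduce(np.kron, mats, np.eye(1))


def z_spider(m, n, alpha=0.0):
    """Green node: |0...0><0...0| + exp(i*alpha) |1...1><1...1|, shape (2**n, 2**m)."""
    t = np.zeros((2 ** n, 2 ** m), dtype=complex)
    t[0, 0] = 1
    t[-1, -1] += np.exp(1j * alpha)
    return t


def x_spider(m, n, alpha=0.0):
    """Red node: a green node with a Hadamard box on every leg."""
    return kron_all([H] * n) @ z_spider(m, n, alpha) @ kron_all([H] * m)


def proportional(a, b):
    a, b = np.ravel(a), np.ravel(b)
    k = np.argmax(np.abs(b))
    c = a[k] / b[k]
    return abs(c) > 1e-9 and np.allclose(a, c * b)


a, b = 0.3, 1.1

# Cancellation: two Hadamard boxes in a row do nothing.
assert np.allclose(H @ H, np.eye(2))

# Spider rule: connected green nodes fuse into one green node, phases add.
assert np.allclose(z_spider(1, 1, b) @ z_spider(1, 1, a), z_spider(1, 1, a + b))
assert np.allclose(z_spider(1, 3, b) @ z_spider(2, 1, a), z_spider(2, 3, a + b))
# ...and the same holds for red nodes.
assert np.allclose(x_spider(1, 2, b) @ x_spider(2, 1, a), x_spider(2, 2, a + b))

# Copy rule: a green node copies red states, and a red node copies green states.
red0, red_pi, green0 = x_spider(0, 1), x_spider(0, 1, np.pi), z_spider(0, 1)
assert proportional(z_spider(1, 2) @ red0, np.kron(red0, red0))
assert proportional(z_spider(1, 2) @ red_pi, np.kron(red_pi, red_pi))
assert proportional(x_spider(1, 2) @ green0, np.kron(green0, green0))
```

Because the rules hold as matrix identities, any sequence of rewrites built from them leaves the represented linear map unchanged (up to scalars), which is the sense in which the pictures form a proof calculus.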
The copy rule is an interesting one. This is really useful in the surface code. You put a red into a green, and if the red is a state preparation, suddenly it's as if you prepared two reds, but they're unentangled. The green node here, you can think of it like this: if it was a red node, so invert the coloring, you'd do all of this in the Hadamard basis. And now we have gate operations. So these are the patterns for gates. This is the pattern for the CNOT. This is the pattern for the C zed. And this down here, if you really want to verify that's the correct pattern for the CNOT -- so this is where rail 1, rail 2 -- sorry. Rail 1, rail 2, rail 3. All I've done is write down the spider rule for each rail. And suddenly out pops a CNOT. It's kind of cute. You can either draw this picture, or you can do this math. It's up to you. And we do stabilizers in this. This is the reason I chose to look at the red/green calculus for the surface code, because we're always dealing with stabilizers. We're dealing with X stabilizers and zed stabilizers. The code works on a lattice of X stabilizers around each vertex and zed stabilizers around each face. So I wanted a pictorial representation of that. And this is our X operator and our zed operator. So we start thinking in terms of red is X and green is zed. And we'll see that once we get on to how the lattices look in this. We can start actually eyeballing stabilizers, which is not something you can do if you look at circuits, or at the matrices, or at the states of these things. You can actually pick off which ones are stabilizers. So we can verify what's a stabilizer by applying the rewrite rules that I've given you. So first I've started off with the EPR state and then I've added in the X X operator, applied the rewrite rules, applied the rewrite rules, and I've got back to the normal form of the EPR pair. That means X X is a stabilizer of the state. Same here. Zed zed. Rewrite, rewrite. Back to the normal form.
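As a sanity check on the CNOT and C zed patterns and on the stabilizer argument, this sketch composes spider matrices directly. It assumes the standard ZX forms of the two patterns (a green 1-to-2 spider on the control joined to a red 2-to-1 spider on the target; for C zed, two greens joined through a Hadamard box), each of which comes out as the gate times 1/√2. It then builds the EPR pair and confirms that X X and zed zed leave it unchanged, the matrix counterpart of rewriting back to the normal form. Helper names are hypothetical.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.diag([1, -1]).astype(complex)

def kron_all(mats):
    out = np.eye(1, dtype=complex)
    for m in mats:
        out = np.kron(out, m)
    return out

def green(n_in, n_out, phase=0.0):
    """Green spider: |0...0><0...0| + e^{i*phase} |1...1><1...1|."""
    m = np.zeros((2 ** n_out, 2 ** n_in), dtype=complex)
    m[0, 0] += 1
    m[-1, -1] += np.exp(1j * phase)
    return m

def red(n_in, n_out, phase=0.0):
    """Red spider: green spider with a Hadamard on every leg."""
    return kron_all([H] * n_out) @ green(n_in, n_out, phase) @ kron_all([H] * n_in)

# CNOT pattern. Wires in the middle of the diagram are ordered (control, link, target).
cnot_diagram = np.kron(I2, red(2, 1)) @ np.kron(green(1, 2), I2)
# C zed pattern: the link passes through a Hadamard box into a green spider.
cz_diagram = np.kron(I2, green(2, 1)) @ np.kron(np.kron(I2, H), I2) @ np.kron(green(1, 2), I2)

CNOT = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)
CZ = np.diag([1, 1, 1, -1]).astype(complex)

# Both diagrams equal their gates once the dropped scalar sqrt(2) is restored.
assert np.allclose(np.sqrt(2) * cnot_diagram, CNOT)
assert np.allclose(np.sqrt(2) * cz_diagram, CZ)

# EPR pair from the pattern, then the stabilizer check done by brute force.
bell = CNOT @ np.kron(H, I2) @ np.array([1, 0, 0, 0], dtype=complex)

def is_stabilizer(op, state):
    return np.allclose(op @ state, state)

print(is_stabilizer(np.kron(X, X), bell))  # True
print(is_stabilizer(np.kron(Z, Z), bell))  # True
print(is_stabilizer(np.kron(X, Z), bell))  # False
```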
That shows zed zed is a stabilizer too. Again, quantum mechanics in pictures. Not an equation in sight apart from that one. >>: So [inaudible] which vertex you started writing on [inaudible]. >> Clare Horsman: The entire rewrite system -- I was going to say this at the end, but I might as well say it now -- the subset of the rewrite system we use for the 3D code is fully confluent. It doesn't matter. You just do it as greedily as you want, grab any node, start rewriting. Doesn't matter. You'll always ->>: [inaudible]. >> Clare Horsman: Yeah. And you'll get ->>: [inaudible]. >> Clare Horsman: Always get the same. Whichever rewrite rules you start from, you'll always hit the same normal form. So let's do this in the 3D model, and cluster states in quantum picturalism. Another reason I use the zed-X calculus is that cluster states have a really, really nice form in it that was known beforehand. You have a two-dimensional cluster state here, just put in this form so we can see it, and the process diagram for it is a two-colored diagram in an extra dimension. And we always see this: when you go from an N-dimensional physical system, because you need to add in a process time dimension, your process diagram has N plus 1 dimensions. So this is a three-dimensional plot. You can think of this as time -- process time going into the board. And it goes up one dimension. We can do 3D. So these are the 4D diagrams I promised you. So that's your physical layout. This is your red/green layout. So that's the front face, the middle, the back face, and then process time is in the fourth dimension, whichever one you want to say that is. >>: I'm curious to see on the previous slide how the [inaudible]. >> Clare Horsman: What do you mean? >>: It's against the law of [inaudible] so the gray one [inaudible] and then suddenly increase because [inaudible]. >> Clare Horsman: So the gray one. Sorry. This arrow shouldn't be there. That doesn't mean rewrite. Yeah. But forget that arrow.
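Confluence is what makes "rewrite greedily, in any order" safe. The toy below is a deliberately tiny rewrite system on a single wire with only three rules (fuse adjacent same-colour spiders, cancel two adjacent Hadamards, drop a phase-free spider); it illustrates the idea and is not the rule set used for the 3D code. Every rule shortens the wire, so rewriting terminates, and random rule orders all land on the same normal form. In this toy encoding, adding a naive colour-change rule (Hadamard, green, Hadamard becomes red) would break confluence: H H g H can end as either g H or H r. That mirrors why only a carefully chosen subset is confluent.

```python
import random
from fractions import Fraction as F

# A single wire is a list of tokens, read left to right in process time:
#   ("g", p) green spider, ("r", p) red spider, ("H", None) Hadamard box.
# Phases p are Fractions in units of pi, always kept modulo 2.

def redexes(wire):
    """Every place where one of the three toy rules can fire."""
    found = []
    for i, (kind, phase) in enumerate(wire):
        if kind in ("g", "r") and phase == 0:
            found.append(("drop_identity", i))  # a phase-free 1->1 spider is a plain wire
        if i + 1 < len(wire):
            nxt = wire[i + 1][0]
            if kind == nxt == "H":
                found.append(("cancel_hh", i))  # H followed by H is the identity
            elif kind == nxt and kind in ("g", "r"):
                found.append(("fuse", i))  # spider rule: same colours merge, phases add
    return found

def apply(wire, rule, i):
    if rule == "drop_identity":
        return wire[:i] + wire[i + 1:]
    if rule == "cancel_hh":
        return wire[:i] + wire[i + 2:]
    kind, p = wire[i]
    q = wire[i + 1][1]
    return wire[:i] + [(kind, (p + q) % 2)] + wire[i + 2:]

def normal_form(wire, seed):
    """Rewrite in a random order (fixed by seed) until no rule applies."""
    rng = random.Random(seed)
    while True:
        options = redexes(wire)
        if not options:
            return tuple(wire)
        wire = apply(wire, *rng.choice(options))

wire = [("g", F(1, 2)), ("g", F(1, 2)), ("H", None), ("H", None), ("g", F(1, 2)),
        ("r", F(0)), ("r", F(1)), ("r", F(1, 2)), ("H", None), ("g", F(1, 4))]
results = {normal_form(wire, seed) for seed in range(100)}
print(len(results))  # 1: every rewrite order reaches the same normal form
```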
The gray one is the physical cluster state layout, the geometry of the physical cluster. And then this is how you write it in the picturalism, and this is an equivalent form. >>: Yes, there is no arrow [inaudible]. >> Clare Horsman: There is no arrow. No arrow. Yeah, there's no arrow here. >>: Okay. >> Clare Horsman: So it suddenly starts looking very nice. Now when we look at the 3D lattice for topological computing, we start seeing structures. We start, for instance, seeing the primal and the dual lattice. If you go back to the original element in the cluster state that I showed, if you tile that, the whole cluster is self-similar with a spacing of half a lattice. So you have essentially two intertwined lattices, primal and dual. And we can see those in picturalism as red-faced and green-faced cells. So this would be a cell on the primal lattice, faced with red front and back. And this, at half a lattice spacing in each direction, is a cell on the dual lattice, faced with green. So suddenly we have a very neat way of showing dual and primal. Logical qubits, as I said at the beginning, are holes in this lattice. And this is what you get. So what I've done here is take two of these cells back to back and measure out the central qubits there. I performed rewrites, and this is what you get. So this, again, is a four-dimensional process diagram. And you can start seeing where your stabilizers are. Because these blue ones are the stabilizers of the lattice. They're not angles. I've just marked which ones are the stabilizers of the lattice. So you can start, as I said, picking them off by sight. A ring of green: it's a zed stabilizer. A ring of red: an X stabilizer. A ring of red: an X stabilizer. A ring of green: a zed stabilizer. >>: So these purple boxes you don't actually need for the sets, just to show you where [inaudible]. >> Clare Horsman: Yes.
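The primal and dual cells can be checked against the stabilizer formalism. This sketch assumes the standard Raussendorf, Harrington and Goyal lattice in doubled integer coordinates (a convention chosen here for convenience): qubits sit on primal edges (one odd coordinate) and primal faces (two odd coordinates), and cluster bonds join qubits one step apart. Multiplying the cluster stabilizers X_f ∏ Z_n over the six faces of a primal cube, or of a dual cube shifted by half a lattice spacing, leaves a pure X operator because every zed appears twice.

```python
from collections import Counter

# Doubled integer coordinates for a cubic lattice: primal vertices sit at all-even
# points, primal cube centres (dual vertices) at all-odd points. Qubits live on
# primal edges (exactly one odd coordinate) and primal faces (exactly two).

def is_qubit(p):
    return sum(c % 2 for c in p) in (1, 2)

def neighbours(p):
    """Qubits one step away along an axis; for a qubit these are its cluster bonds."""
    for axis in range(3):
        for step in (-1, 1):
            q = list(p)
            q[axis] += step
            q = tuple(q)
            if is_qubit(q):
                yield q

def cell_stabilizer(centre):
    """Multiply K_f = X_f * prod(Z_n for n in neighbours(f)) over the six qubits
    around `centre`. Returns (X support, zed support left after cancellation)."""
    faces = list(neighbours(centre))
    z_count = Counter()
    for f in faces:
        for n in neighbours(f):
            z_count[n] += 1
    return set(faces), {q for q, k in z_count.items() if k % 2}

primal_x, primal_z = cell_stabilizer((1, 1, 1))  # primal cube centred at (1, 1, 1)
dual_x, dual_z = cell_stabilizer((0, 0, 0))      # dual cube, half a spacing away
print(len(primal_x), sorted(primal_z))  # 6 []
print(len(dual_x), sorted(dual_z))      # 6 []
```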
This is to show -- so when you look at -- this is the correlation surface that supports a logical qubit. And you can start seeing it by hand. >>: [inaudible] middle, right? >> Clare Horsman: Yes. And what you can do is start adding in stabilizers, and you can see by inspection that they cancel in the middle. So it's a very simple way of seeing what the stabilizers of the state are, which is otherwise an incredibly complicated thing to find. So we need two more elements to show that when you punch a hole in the lattice, what you get is topologically equivalent to one of these process diagrams. First, we need to get rid of one of those pesky dimensions. I said we started doing four-dimensional diagrams, but in the end we want to say this is geometrically the same as the three-dimensional system. How do we get rid of the extra dimension? This is where the measurement of the cluster comes in. When you measure any cluster state, you take this open process graph, so it's got these legs here that you can add extra things to. I've added in measurements, these green dots are measurements in X, to all my qubits, performed the rewrite rules, and this is what you get. And you can do this to the entire cluster. And you go from something with legs and Hadamards all over the place to simply a two-colored N-dimensional process diagram. Number two: at the moment, in all of these diagrams, I've got the processes involved in each individual qubit. And what I'm trying to show is that this single qubit line here can be represented by one line in a process algebra. So how do we go from that multiqubit process diagram to a single-qubit process diagram? Again, we just perform rewrite rules. So what I did is take the unit cell, put it into a more schematic form, put it into red/green, it's a four-dimensional diagram, put in the measurements, rewrite it, and suddenly out pops a single line.
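The smallest instance of measuring out the middle of a cluster is a three-qubit line. The sketch below (plain state vectors, hypothetical function names) measures the middle qubit in X: post-selecting the plus outcome leaves exactly the EPR pair on the two end qubits, a two-qubit version of "out pops a single line", while the minus outcome leaves the EPR pair with an extra X on one side, the kind of byproduct that the π phases in the diagrams keep track of.

```python
import numpy as np

plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
minus = np.array([1, -1], dtype=complex) / np.sqrt(2)

def linear_cluster(n):
    """|+>^n followed by CZ on each neighbouring pair; qubit 0 is the leftmost factor."""
    state = plus
    for _ in range(n - 1):
        state = np.kron(state, plus)
    idx = np.arange(2 ** n)
    bits = (idx[:, None] >> np.arange(n - 1, -1, -1)) & 1   # bits[:, k] = value of qubit k
    sign = (-1) ** np.sum(bits[:, :-1] * bits[:, 1:], axis=1)  # -1 for each CZ acting on |11>
    return state * sign

def measure_middle(state, outcome):
    """Post-select qubit 1 of a 3-qubit state on `outcome` and renormalise."""
    t = state.reshape(2, 2, 2)
    out = np.einsum("abc,b->ac", t, outcome.conj()).reshape(4)
    return out / np.linalg.norm(out)

bell = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
X = np.array([[0, 1], [1, 0]], dtype=complex)

cluster = linear_cluster(3)
print(np.allclose(measure_middle(cluster, plus), bell))                           # True
print(np.allclose(measure_middle(cluster, minus), np.kron(np.eye(2), X) @ bell))  # True
```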
So the defect strands, each defect tube, can now be represented by a single process line. And we can see our logical operators. So in the 3D model, logical zed is a ring of zed operators around the face of one of the defects. And this is it schematically: this is a double defect, created here, and this is the end, and we've put a ring of zeds around it and rewritten it. It rewrites to a zed operator on the single logical qubit. We can do the same with X: it rewrites from a chain between the two to a single X on a single logical qubit. The final one we can do is braiding. So you can think of the green qubits as on the dual lattice and the red as on the primal. And if you're simply doing a two-qubit braid for a CNOT, you go from primal to dual. Now, remember that very complicated CNOT I showed you right at the beginning? That's to go from a primal qubit input to a primal qubit output -- sorry, primal qubit control to primal qubit target. If you don't care that your target's on the dual, you just braid. You don't need the 3 plus braid. >>: When I first saw this paper, it seemed to be one of the greatest strengths of this, being able to convert to any basis you want at the time that you want. And I think it gains some efficiency even independent of its ability to represent things. It also, as a language, has its own write [inaudible]. >> Clare Horsman: Yes. You can pick what red and green mean. There are symmetries between them. But this is a rewrite of what happens. This is the normal form rewrite of what happens to qubit tubes being braided around, rewritten all the way down to single process lines. And it's exactly what you would expect. It's exactly a dual process being braided with the primal lattice. >>: [inaudible]. >> Clare Horsman: Sorry? >>: It's also an original drawing [inaudible]. >> Clare Horsman: Precisely.
You can create -- but this I created by going right back to the full lattice, making that a red/green process algebra, and rewriting down. This is what you get out. So it's a good check: if you can get the same thing more than one way, you know you're right. So that completed the proof that if we want to lay out braids from picturalism diagrams, all we have to do is look at it and say, okay, it's green, that means it's on the dual lattice; if it's red, it's on the primal, or vice versa; as long as you're consistent, it doesn't matter. And if there are lines between the two, we braid. Simple. You can take this and use it to lay out braids. Rather than manipulating braids, you can manipulate these diagrams. And these are all formal equivalence proofs. These aren't going to come back and bite you. There aren't corner cases lurking there where, in one case, you could rewrite and get something that's not the correct braid pattern. I shall be bold and say this is cast iron. One thing I've not gone into in great detail, which I want to look at at some point, is checking the equivalence with the two-dimensional surface code. Because in the 3D case you've essentially got your time dimension as a space dimension. It's there. It's the third dimension of the cluster. In the 2D case we're going to have to go to space-time diagrams to get the equivalence, and there may be some things lurking in there. I don't think so. But for the moment this is just provably equivalent for the 3D case. Now we can also start looking at error correction in this language. So in the 3D cluster, as I said, around each -- that's not actually correct. Okay. That slide came in from a 2D presentation. I do apologize. >>: Needs error correction. >> Clare Horsman: It does. Yes. It needs a bit flip somewhere. But suffice it to say, when you measure and you put your measurement results into a minimum-weight matching algorithm, that tells you where the errors are.
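To illustrate what "minimum-weight matching" means, here is an exhaustive sketch that pairs up syndrome defects so that the total Manhattan distance is as small as possible. It is exponential-time and ignores lattice boundaries and measurement-error weights; practical decoders use Edmonds' blossom algorithm instead. The defect coordinates in the usage example are made up.

```python
from functools import lru_cache

def manhattan(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))

def min_weight_matching(defects):
    """Exhaustively pair up an even number of syndrome defects so that the total
    Manhattan distance is minimal. Exponential time: for illustration only."""
    if len(defects) % 2:
        raise ValueError("this sketch ignores boundaries, so it needs an even number of defects")
    start = tuple(sorted(defects))

    @lru_cache(maxsize=None)
    def best(remaining):
        if not remaining:
            return 0, ()
        first, rest = remaining[0], remaining[1:]
        options = []
        for j, partner in enumerate(rest):
            cost, pairs = best(rest[:j] + rest[j + 1:])
            options.append((cost + manhattan(first, partner), ((first, partner),) + pairs))
        return min(options)

    return best(start)

# Hypothetical defect positions (space-time coordinates of flipped parity checks).
cost, pairs = min_weight_matching([(5, 6, 5), (0, 0, 0), (5, 5, 5), (0, 0, 3)])
print(cost)   # 4
print(pairs)  # (((0, 0, 0), (0, 0, 3)), ((5, 5, 5), (5, 6, 5)))
```

Each matched pair marks the ends of a likely error chain, which is what tells you which outcomes to flip.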
And so you then know which bits you need to flip, which means every time you do a measurement cycle, you're essentially post-selecting a given outcome. You want it to be zero. If it's not zero, you flip it to make it zero. Now, in all of these diagrams, when I've added in the measurement, I've post-selected onto the zero measurement result. I didn't tell you I was doing that. That gives you the normal form. And the first time I presented this in Oxford, someone stuck their hand up and said you've post-selected, this is rubbish, you know, you can do anything as long as you post-select. And that's where the error correction comes in. Error correction allows you to rewrite these diagrams with post-selection, because that's what you're doing. So that was a very neat thing. The only reason why this works is that you're error correcting. So we can show how our error correction is working. This shows that any time you error correct, you always get back to the normal form that I've been using. So take any combination: we're measuring out these four, and an even number of them have to be zero to have the correct parity. So here I've got two measured as one and two measured as zero. These π phases, when you rewrite, cancel out. As long as you've got an even number of them, they'll cancel out. So that's fine. So that enables us to say that as long as we're doing error correction, this is our normal form. So what ->>: So is -- would it be possible to -- maybe I'm not understanding. Is error correction just more rewrite rules really? >> Clare Horsman: No. Error correction is ->>: Could it be? >> Clare Horsman: Error correction is using -- so we've got our error correction. We've got our normal forms. We've got a compiler language. What are we going to do with it?
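The parity argument can be checked numerically. This sketch assumes, as a modelling simplification, that each measurement outcome of 1 contributes a green π phase on the same wire; fusing those phases with the spider rule gives the identity exactly when an even number of the outcomes are 1. With four outcomes, that is the same as an even number of zeros.

```python
import numpy as np
from functools import reduce
from itertools import product

def green_phase(phase):
    """One-input, one-output green spider: diag(1, e^{i*phase})."""
    return np.diag([1, np.exp(1j * phase)])

def fused_byproduct(outcomes):
    """Spider-fuse one pi phase per outcome equal to 1 (outcome 0 adds no phase)."""
    return reduce(np.matmul, (green_phase(np.pi * m) for m in outcomes), np.eye(2))

def parity_ok(outcomes):
    return sum(outcomes) % 2 == 0

# For every pattern of four outcomes, the fused phases vanish exactly when parity is even.
for outcomes in product((0, 1), repeat=4):
    assert np.allclose(fused_byproduct(outcomes), np.eye(2)) == parity_ok(outcomes)
```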
The first thing I want to talk about is how to automate this: how do we automate the rewrite rules so that you can program an algorithm into the red/green calculus and get it to rewrite to a whole slew of other red/green diagrams? And that gives you, for instance, a whole stack of things you can optimize over. You've got a whole set of red/green diagrams that correspond exactly to braid patterns that are not topologically equivalent. You can't necessarily continuously deform one red/green diagram into another. The rewrite rules break out of that. You can rewrite into things that aren't topologically equivalent. So that's one way that this can help optimization. And we can also do pattern checking. >>: If they're not equivalent, do they have the same topological strength? >> Clare Horsman: They implement the same algorithm, but topological equivalence means you can continuously deform the braids of one into the braids of the other. There's no requirement that two braid patterns implementing the same algorithm are topologically equivalent. They implement the same unitary, but you don't have to be able to find one by continuously deforming the other. And a lot of optimization strategies people have come up with involve continuously deforming your braid sequence, so this can kick you out of that. So it can give you another dimension to your search space. And I want to do ->>: That's interesting. You might have multiple implementations of the same process. >> Clare Horsman: Precisely. >>: [inaudible]. >> Clare Horsman: Precisely. >>: How interesting. >> Clare Horsman: Yes. >>: [inaudible] given your resource desire, or ->> Clare Horsman: That's entirely up to people like you who are doing cost functions and whatever. This just spits out options. Yeah. And also pattern checking. So I want a piece of software where you can give it a braid pattern and it will rewrite, rewrite, rewrite, and say, bing, this is your normal form algorithm.
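For small circuits, pattern checking can also be done by brute force, which is a useful cross-check on normal-form comparison. This sketch compares two unitaries up to a global phase using the fact that |tr(V†U)| equals the dimension d exactly when U = e^{iθ}V. The example uses the textbook identity CNOT = (I ⊗ H) CZ (I ⊗ H), not a braid pattern from the talk. The matrices grow as 2^n for n qubits, which is why rewriting to a normal form is the scalable route.

```python
import numpy as np

def same_up_to_global_phase(u, v, tol=1e-9):
    """For unitaries u, v of size d: |tr(v^dagger u)| = d exactly when u = e^{i*theta} v."""
    if u.shape != v.shape:
        return False
    d = u.shape[0]
    return abs(abs(np.trace(v.conj().T @ u)) - d) < tol

I2 = np.eye(2)
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
CNOT = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]])
CZ = np.diag([1, 1, 1, -1])

pattern_a = CNOT
pattern_b = np.kron(I2, H) @ CZ @ np.kron(I2, H)     # Hadamards around C zed on the target
reversed_cnot = np.kron(H, H) @ CNOT @ np.kron(H, H)  # control and target exchanged

print(same_up_to_global_phase(pattern_a, pattern_b))                 # True
print(same_up_to_global_phase(pattern_a, np.exp(0.7j) * pattern_b))  # True
print(same_up_to_global_phase(pattern_a, reversed_cnot))             # False
```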
>>: Well, you could certainly translate [inaudible]. >> Clare Horsman: Absolutely. >>: And then take them both in [inaudible] form, see if they're equal. That's not [inaudible]. >> Clare Horsman: And there's a tool out there that the Oxford guys have started developing that will help us do this. Quantomatic automates the rewrite rules. Apparently this was started by a couple of grad students who were fed up of doing rewrite rules by hand and getting them wrong. So this is a screen shot from Quantomatic. This is your process diagram that you're trying to rewrite. This is, I think, part of the Fourier transform, several rewrites down. These are the available rewrites. You can get it to apply all of them greedily, or only one, or subsets of rewrite rules. So one thing I'm doing at the moment is isolating the subset of rewrite rules used for the 3D cluster state model so we can actually have a module on this that is the 3D model rewrite rules. Because the full set of rewrite rules isn't confluent, but the subset that I'm using is. So I want to be applying just those rewrites. I don't want any other ones to nastily sneak in. So that is a very cool potential way to go forward, using Quantomatic. >>: So the complete set of rewrite rules doesn't give you a normal form, right? >> Clare Horsman: Correct. >>: That's unfortunate. >>: It does not? Or there's no recipe for getting them? >> Clare Horsman: It is not guaranteed to give normal forms. You can get into infinite rewrite ->>: Seems like there would be for the general case. >> Clare Horsman: Yeah, in the general case you can potentially get into infinite rewrite loops. >>: Has anybody done a gate to this [inaudible]? >> Clare Horsman: Um -- >>: Going the other way? >> Clare Horsman: Not yet. >>: Shouldn't be too hard. >> Clare Horsman: It shouldn't be too hard, no. I mean, at the moment with Quantomatic the problem is getting bodies using it, working on it. So I will wrap up there. Conclusions.
We've got a graphical language for the 3D surface code and hopefully for the 2D surface code as well. Braid ->>: [inaudible] pretty good, don't you think? >> Clare Horsman: For? [multiple people speaking at once]. >> Clare Horsman: Oh, yes, absolutely. I'm just saying I haven't proven this, so I can't say it with the same degree of certainty as the 3D case. And the proof -- yeah, the proof as it stands only works for 3D. But, yes, it almost certainly should work for 2D. So we have this topological isomorphism, which means that once we've got a red/green pattern, you can almost send that to the control computer for the measurement devices. What you need to know is your code distance, which tells you how big your defects are, and then you simply blow up the red/green diagram by that scale factor as your measurement pattern. Very, very simple once you get to that stage. And we can use this as a compiler language. >>: [inaudible] intermediate form maybe? >> Clare Horsman: Okay. I'm a physicist, not a computer scientist or a systems ->>: Yeah, I mean ->>: [inaudible] okay? >>: Yeah. Well, in intermediate generally [inaudible]. By the way, I have another quibble with your slide back there. VLSI [inaudible] circuit boards and it's used to make chips. It's no big deal. >> Clare Horsman: I stand corrected. >>: You're forgiven. >>: No, no, no, no problem. >> Clare Horsman: Yes. And finally, putting this all together into an automated tool is the way forward. And also putting this -- once you have an automated tool for this, it can start searching through algorithm space and come up with new algorithms. That's where I think I'll end. Thank you. [applause]. >>: [inaudible]. >> Clare Horsman: The category theory has hidden itself so well, hasn't it? So each of the elements in the calculus corresponds to an element within a category.
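The "blow up by the code distance" step can be pictured with a voxel layout. This is a hypothetical representation (a dict from unit-cell coordinates to "primal" or "dual"), not the paper's data format: each unit cell becomes a d x d x d block, so defect sizes and the gaps between defects both grow linearly with d. Real layouts tune defect circumference and separation separately; this only illustrates the scaling idea.

```python
from itertools import product

def blow_up(cells, d):
    """Scale a defect layout {(x, y, z): 'primal' | 'dual'} by the code distance d:
    every unit cell becomes a d x d x d block of cells of the same kind."""
    scaled = {}
    for (x, y, z), kind in cells.items():
        for i, j, k in product(range(d), repeat=3):
            scaled[(x * d + i, y * d + j, z * d + k)] = kind
    return scaled

# Hypothetical layout: a short primal defect and a dual defect one empty cell away.
layout = {(0, 0, 0): "primal", (0, 0, 1): "primal", (2, 0, 0): "dual"}
scaled = blow_up(layout, 3)
print(len(scaled))          # 81
print(scaled[(0, 0, 5)])    # primal
print((3, 0, 0) in scaled)  # False: the one-cell gap is now three cells wide
```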
And the fact that they have that structure enables you -- well, enabled Peter Selinger to prove that when you apply the rewrite rules, that's an exact algebraic equivalence between input and output. So when we apply the rewrite rules, we know it works. >>: [inaudible]. >> Clare Horsman: Oh, this is entirely the fruit of the category theory approach. The fact that these are elements within categories with that structure ->>: Category theory [inaudible] objects. >>: [inaudible] it has other [inaudible] theory applied. I've never seen [inaudible]. >>: It would be interesting to know what a universal [inaudible] universal object here, which is [inaudible]. >> Clare Horsman: Well, I think what you're asking for are -- because this is -- so this particular one is a category of observables, and the X and the zed observables are the two fundamental elements, so I think that's what you're wanting. I have quite a lot of references, if you want to go further into them after this. >>: [inaudible] somewhere on my laptop. >>: It's both on the arXiv and already published in New Journal of Physics, which is [inaudible] even if Microsoft didn't have a subscription [inaudible]. >>: We have to pay to that [inaudible] we've all read it actually. >> Clare Horsman: Okay. So the Selinger paper is referenced in that. Bob's "Kindergarten Quantum Mechanics" is referenced. The whole shebang. You can happily spend half a year going through the references there. >>: Basically what you do. >>: And the Quantomatic tools you presented there at the end, the very first thing [inaudible] start working with us is [inaudible]. >> Clare Horsman: Yes. >>: So do you have the diagram for the snapshot you showed in the beginning [inaudible]. >> Clare Horsman: No. I haven't got to that stage yet. But what I need -- I need the circuit ->>: The generators. >> Clare Horsman: Red/green generator into Quantomatic in order to do that. So I need a student to do that.
>>: So I wonder if at that level it's actually easier to analyze. >> Clare Horsman: It will be, because it will rewrite. >>: Collapse down. >> Clare Horsman: Yeah. And you'll be able to see, oh, yes, distillation circuit. >>: What are the applications [inaudible]? >> Clare Horsman: What I would hope is that your space-time anyon diagrams are equivalent to this. I would expect the same equivalence as there is for the defects. >>: You mean just the braids? >> Clare Horsman: Yeah. >>: Which means they could work in the subset perhaps? >> Clare Horsman: That's what I'm hoping. >>: If you can work in the subset, then you have a stronger normal form character. >> Clare Horsman: Yes. In all of this I'm trying to keep to that nice confluent subset where everything works. >>: What happens when you reverse all the arrows? That's another thing you do on a category. >>: Right. Exactly. >> Clare Horsman: Well, this is what I was saying about when they first came up with this as a way of describing quantum mechanics locally; people said, well, actually, yes, some of your arrows are going back in real time. But with the 3D model we have time laid out. We can have a braid doing this ->>: But that's why ->>: Yeah, but you can run it backwards. You can flip the arrows the other way and go back to the processes. >> Clare Horsman: If you wanted to. It would ->>: There's no forward, backward. It's just ->>: There's a line. >>: It's constraint of ->> Clare Horsman: Well, absolutely. But what -- what you have, a process time you're defining by [inaudible]. When I create this state, I do this, I get -- that's my input. And because it's a computation, you have defined inputs and outputs. >>: Yes. >>: The thing I'm interested in is what -- so it's pretty clear that the preparation and measurement guys ->>: Are endpoints. >>: -- are duals. >> Clare Horsman: Yes. Oh, absolutely. Every single work ->>: And it seemed like that should work that way too, I think.
>> Clare Horsman: Totally. Well, this is all unitary quantum mechanics. [multiple people speaking at once]. >> Clare Horsman: Yeah, it's all reversible. >>: Does this also answer the question we had earlier about whether this tells you when I become unentangled? Because you had an example of that. So can I watch the diagram flow and find out where I went from entanglement [inaudible]? >> Clare Horsman: Yes. Because if you're entangled, you depend; if you're unentangled, you don't. >>: Right. So this would tell me along the way, for simulation purposes, if it's useful. >>: Is that true? >> Clare Horsman: Yeah, because you don't -- well, if you don't have your variables depending, it could -- the problem is it might be classical correlation rather than quantum entanglement. So if they weren't joined, if they weren't dependent, you'd know for sure. If they were dependent, it could be that they're classically correlated. But if you said "not dependent, I'll treat them as unentangled," you'd be fine. >>: Well, in my earlier [inaudible] stuff we had the same case where if it specifically [inaudible] you may or may not know. >> Clare Horsman: Precisely. >>: You want the same thing that we all wanted for simulation purposes. [multiple people speaking at once]. >> Clare Horsman: It's really easy when it's not quantum. >>: [inaudible]. >> Clare Horsman: Yes. >>: Or you measured at the end of one of the lines he goes away and everybody else goes down. Yes. >> Clare Horsman: Yes. >>: [inaudible]. >> Clare Horsman: Well, there's -- there's -- no. Because what we call classical correlation is simply a subset of entanglement that can be simulated efficiently classically. >>: [inaudible]. >> Clare Horsman: Yeah. All of this is working in global quantum mechanics. Everything's quantum. There is no classical world. The classical world is a subset of the quantum world.
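The rule of thumb "not joined means not entangled, joined means maybe" is a connectivity check. This sketch assumes a hypothetical abstraction of a diagram as an undirected graph of numbered qubit lines and uses union-find to group them. Lines in different components are certified unentangled; a shared component gives no verdict, because the link may carry only classical correlation.

```python
def components(n_qubits, wires):
    """Union-find over qubit lines 0..n_qubits-1; `wires` lists (a, b) pairs joined in the diagram."""
    parent = list(range(n_qubits))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps the trees shallow
            i = parent[i]
        return i

    for a, b in wires:
        parent[find(a)] = find(b)
    groups = {}
    for q in range(n_qubits):
        groups.setdefault(find(q), set()).add(q)
    return list(groups.values())

def certainly_unentangled(n_qubits, wires, a, b):
    """True only when a and b lie in different components. False means 'might be
    entangled': the connection could still carry only classical correlation."""
    return not any(a in g and b in g for g in components(n_qubits, wires))

wires = [(0, 1), (1, 2), (3, 4)]  # hypothetical diagram: lines 0-1-2 joined, 3-4 joined
print(certainly_unentangled(5, wires, 0, 3))  # True
print(certainly_unentangled(5, wires, 0, 2))  # False: joined, so possibly entangled
```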
So there's no way of knowing whether any of these -- whether any of this entanglement is classically [inaudible] or not efficiently. >>: And then for the other logical [inaudible] logical CNOT [inaudible]? >> Clare Horsman: Well, you'd use state preparation for that. >>: So you allow state -- you change your state preparation? >> Clare Horsman: You change your state preparation and you put a teleportation circuit into your lattice. Any more questions? [applause]