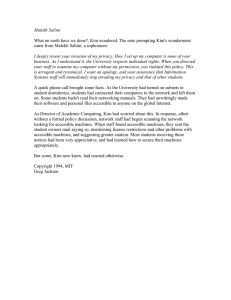

>> Rich Caruana: It is my pleasure to introduce Been Kim and have her here for a talk today. Let’s see, you graduated from MIT in the last year or so? >> Been Kim: Yeah this year, this summer. >> Rich Caruana: Okay, great, and you have been at AI2, the Allen Institute for Artificial Intelligence, for what, 6 months now? >> Been Kim: 2 months. >> Rich Caruana: 2 months, okay, so you are very new to the Seattle area. The weather will get better in 6 months. Been Kim has been doing research in interactive machine learning and she is going to talk about that today, so welcome. >> Been Kim: Thanks for the introduction and thanks for inviting me to speak at MSR here today. Today I am going to talk about my PhD work on interactive and interpretable machine learning for human-machine collaboration. A quick vision of my research is to harness the relative strengths of humans and machine learning models. Humans have years of accumulated domain expert knowledge that machine learning models may not have, whereas machine learning models are able to perform complex computations efficiently and precisely. The goal of this work is to have them work together in order to help humans make better decisions. To achieve that we need machine learning models that can intuitively explain their results to humans who may not be machine learning experts. We also need machine learning models that can incorporate human domain experts’ knowledge back into the system in order to leverage that. So my research objectives are developing machine learning models that are inspired by how humans think. The first part is inferring the decisions of humans: we first need to know what decisions humans have made in order to maybe make a better suggestion. So I built a model that could infer humans’ decisions from human team planning conversation for disaster response.
The next part is about building a machine learning model that can make sense to humans, clustering methods that could intuitively explain to humans what the results are trying to say. And finally to close this feedback loop I built a machine learning system that could incorporate human domain expert knowledge back to the machine learning system implemented and verified in a real world domain computer science education. I will talk about that today. So among these three portions I am going to focus on these last 2 sections of my thesis: make sense to humans and interact with humans. So let’s jump right into building machine learning models that could communicate intuitively to humans about its machine learning results. When you think about building machine learning models that could intuitively explain and communicate with humans you have to maybe first think about how humans think. There has been rich cognitive research that shows that the way that humans make these technical decisions are based on [indiscernible] based reasoning. Particularly if you are a skilled firefighter the way that you figure out what you are going to do with this new incident, maybe a new fire, is you think about all the previous incidents that you have dealt with, think about the closest example to your new case and apply a modified solution to this new case. So I argued that if we want to build a machine that could support better human decision making we need to represent the information in the way that humans think. >>: My intuition is exactly the opposite. So if I want to teach another human how to buy a car I could go and point to the color of that car or I could teach my feature, which is that you should look at the reliability record, you should [indiscernible], those are features. So I teach my feature, I don’t teach by example. You don’t teach by teacher. >> Been Kim: So imagine a case where it’s not a car anymore. Imagine a data point where you have thousands of features. 
Can you teach those thousand features and enumerate all the features to humans? It might be difficult, right. >>: [indiscernible]. >> Been Kim: I think that is a really good point. In fact the work that I do here is roughly what you are saying, pursuing sparsity. So an example with the features that are important, and those are the ones that I point out along with the example. >>: Oh I see, so you teach individual features plus an example. >> Been Kim: Yes. >>: So you still teach by feature, but you teach and do your feature by example? >> Been Kim: So an example with the key features that really matter. >>: I teach an example that supports this. If you want to teach a pigeon how to add, the first thing you do is put a piece of tape in the cage and you reward it for being in the right half. So you are teaching by example, but being in the right half of the cage is a feature. Once you have taught that you move to the tape, then you reward for pecking on the wall, and in some way each of these is a feature, but each of these features is taught by example. >> Been Kim: Right that’s true, a very similar idea, exactly. But this idea of being example based is really motivated by people studying humans, studying people making important, critical decisions. The way that humans’ brains work is by example, so the idea is to leverage that sort of rich cognitive research as a start. And leveraging examples and the intuitiveness of examples has been studied, it’s not new. In classical AI it is called case-based reasoning. Case-based reasoning has been applied to various applications successfully, but case-based reasoning always requires labels. So if you are trying to apply case-based reasoning to fix your car, for example, you need to know all the previous cases where you tried to fix your car in order to decide what solution is appropriate to fix your car right now. It also doesn’t scale very well to complex problems.
I think going back to your question, if fixing your car requires you to read pages and pages of documents it starts to lose that intuitiveness. And of course it’s not designed to leverage global patterns of the data. We do have machine learning models that can leverage global patterns of data, and the particularly related work is interpretable models. There are decision trees or sparse linear classifiers that do this. And what I mean, as I just said, is if you give me high dimensional data points then I am going to select the subset of the features that are important and give it to you so that we can scale to complex problems. My work is about combining these two by maintaining the intuitiveness using examples while leveraging global patterns of the data using machine learning, but pursuing sparsity so that we can scale to large complex problems. So our approach, we call it the Bayesian Case Model, and formally we leverage the power of examples, which we call prototypes, and subspaces, which are hot features, features that are important. This is just a way to say, “We have this complicated important thing that we would like to explain to you and we are going to explain it to you using examples.” Formally we combine Bayesian generative models with case-based reasoning. So let me explain to you how BCM works using examples. So this is a set of recipe data where a data point consists of a list of ingredients to cook the food. It’s not about instructions, but just a list of the ingredients that you need to cook it. If you look at it, can you cluster this data set into say 3 clusters? >>: Crepes. >> Been Kim: Crepes, yeah. >>: Meat. >> Been Kim: Meat? >>: Mexican meat. >> Been Kim: Mexican, yes and there is one more. >>: Strawberry. >> Been Kim: Yes so strawberry dessert things. So those are three clusters. So, one way to cluster this data set is a Mexican food cluster, a crepe cluster and a dessert cluster.
BCM is a clustering method that clusters this data in an unsupervised manner and at the same time tries to learn the best way to explain each cluster. So in this case someone said Mexican food, and we are lucky in this case because we somehow had a name to describe this cluster, some abstract higher level idea. But if you have worked with real data, often when we cluster data we don’t have a convenient name to describe a cluster. So instead I can explain this cluster to you as: the first cluster is like a taco. If you have eaten tacos you know what’s in there, but really the important ingredients that define this cluster as a group are salsa, sour cream and avocado. The second cluster is like a basic crepe recipe where the important ingredients are flour and egg. The last cluster is like a chocolate berry tart. The important ingredients are chocolate and strawberry. >>: So here is my comment on these two slides. >> Been Kim: Yeah? >>: You should do a user study where you can see whether people can understand this information faster than in the previous slide. This slide, to me, I see the clusters much more clearly. I understand them much faster and I didn’t have to read anything. >> Been Kim: I have a human experiment that –. >>: In the previous slide I couldn’t easily see the clusters, but once you put things together like this I can see the clusters. >> Been Kim: So the entire bird’s eye view of all the data points, that’s what you are saying? >>: This is better than the previous slide. >> Been Kim: The previous slide? >>: The one before. >> Been Kim: The one before? >>: Yes, that’s hard. >>: This is not good, this is a jumble. This is good and I see where the clusters are. >> Been Kim: Right, right. >>: The next one is not as good as the previous one for me. >> Been Kim: Oh interesting, so you would like to see all the data points? >>: If I see cluster B I know what it is, right. I don’t have to read anything.
I see them and instantly know what it is, and I see cluster A the same way without looking at the ingredients, without reading anything. I would rather see 5 examples of cluster B than 1 example and an explanation. >> Been Kim: Oh I see what you mean. You want to see multiple examples. >>: So I wonder what most people are like. Are they visual like this or are they methodical like you have on the next slide? >> Been Kim: I see, so this one is a way where I randomly selected examples, and you are saying that multiple examples are better than just one example and a subspace? >>: [indiscernible]. >>: My answer is counting things I can see, like the clouds and so on, but somehow I want to train something I can just spot rather than read through. >> Been Kim: So I have a human –. >>: But with recipes it would be interesting to see whether it is really true. I don’t know if it is actually true. Is it just me or do some people see it this way and other people really prefer to just see a few ingredients and read them rather than see these things? >>: That’s interesting because I prefer to read the ingredients. >>: The ingredients? >>: Yeah. >> Been Kim: I talk about this in the later slides where –. >>: [inaudible]. >> Been Kim: I talk about people who prefer a different way. Depending on what domain experts you are working with, people have different preferences, and the interactive system later in my talk is about what the different ways are. Not exactly in the way that you said, example versus distribution of the data, but how can we leverage what the domain expert prefers and bring it back to the machine learning system? But to answer your question briefly, and I will show you in the later slides, we have a human subject experiment to compare this sort of representation versus, not exactly like this, but non-example based methods. So instead of this I show you sort of a distribution of hot features of each cluster. So I will show you that in a bit.
So BCM performs joint inference on cluster labels and explanations, which are provided in the form of prototypes and subspaces. Formally we define a prototype to be the quintessential observation that best represents the cluster, and a subspace to be the set of important features characterizing the cluster. So there are two parts in BCM: clustering and learning explanations. Remember that in the inference these two happen simultaneously, but for the sake of explaining I will divide this into two parts. For the first, clustering part we leverage a widely used model called the admixture model. This is something that LDA uses as well. What the admixture model does, and it also allows flexibility in that way, is that it assigns multiple cluster labels to a data point as opposed to assigning one cluster label to one data point. So if you take this sort of new data point, say this is a Mexican inspired crepe, and I talked about this in front of my friend from France and he got a little offended like, “What are you talking about, a Mexican inspired crepe?” But if you have such a recipe then what the admixture model would do is assign the ingredients that belong to the Mexican cluster to cluster A and the other ingredients that belong to the crepe cluster to cluster B. So you can think about it as building a vector Z that has some A’s and some B’s. You can think about another example where we have a crepe that has chocolate and strawberry. So some of the ingredients, chocolate and strawberry, will belong to cluster C whereas the other ingredients, like the crepe itself, belong to cluster B. You can also think about normalizing this vector to get a cluster distribution vector. That’s the pi vector, it’s a common representation in the admixture model, the pi vector right here. And if you combine that with a supervised learning method you can use it to evaluate your clustering performance, and that’s exactly how it is done in the LDA paper and that’s exactly how we evaluate our model.
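As a minimal sketch of the normalization step described above, assuming a recipe whose ingredients have already been assigned cluster labels, the per-ingredient label vector z can be turned into the cluster distribution vector pi as follows. The function name `cluster_distribution` and the five-ingredient recipe are illustrative assumptions, not taken from the talk.

```python
from collections import Counter

def cluster_distribution(z, clusters):
    """Normalize per-feature cluster labels z into a distribution over clusters."""
    if not z:
        raise ValueError("z must contain at least one label")
    counts = Counter(z)
    # Clusters that never appear in z get probability 0.
    return [counts[c] / len(z) for c in clusters]

# Hypothetical Mexican-inspired crepe: two ingredients assigned to cluster A
# (Mexican), three to cluster B (crepe), none to cluster C (dessert).
z = ["A", "A", "B", "B", "B"]
pi = cluster_distribution(z, ["A", "B", "C"])
print(pi)  # [0.4, 0.6, 0.0]
```

The resulting vector sums to 1, so it can be read as the parameters of a multinomial over clusters or used directly as a feature vector.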
Thanks to a nice conjugate prior you have some control over how you want the cluster labels to be distributed using the hyperparameter alpha. The next part is the learning explanation part. We have prototypes and subspaces as a way to explain clustering results. First, the prototype in the generative story. Remember this is not the inference story, but the generative story. The prototype is simply drawn from a uniform distribution over the data points that you gave me. And this is the key portion of why BCM is intuitive, because if you are a doctor and you gave me patient data, patients that you have dealt with in the last decade maybe, then I will cluster them and the way that I will explain each cluster is using one of the patients that you dealt with. Subspaces are simply binary variables, 1 for important features and 0 for not important features. And together the prototype and subspace form this function g that feeds into sampling the phi latent variable, which describes the characteristics of clusters. So what is this function g? Well, function g is really simply a similarity measure and you can use any similarity measure that you want, but this is the similarity measure that we used. This looks complicated, but this is actually the simplest possible similarity measure that you can think of. It’s as if you have a feature that is in the subspace and you have the same feature value as your prototype, then you are going to score higher than other data points that don’t have that feature. So if I am a taco, well actually the prototype is a taco and I am a taco salad, I will share avocado and sour cream with my prototype so I will score higher than say a chocolate berry tart. >>: So go back to your N part, does that mean that you have a closed universe assumption, that we cannot accept new items in this model? >> Been Kim: So I will not create a new item. >>: You will not generate a new item in this model?
>> Been Kim: Yeah, and if I did, the assumption here is that it would lose the intuitiveness. You give me patient data and I would generate some fictional patient that you never dealt with. >>: So what is being generated in this model? I am a bit confused now. >> Been Kim: So you are familiar with the LDA generative model, right? >>: [inaudible]. >> Been Kim: So this is just the generative story of how I –. >>: But you are not sampling your documents, right? You are not sampling new patients? >> Been Kim: I am not sampling new patients, right. >>: Or are you saying you could generate new patients, but just take another set as the set for the purpose of getting prototypes? Like do you have that N, and that N is limiting how many items you can have in a system? >> Been Kim: I see, uh-huh, uh-huh. >>: Or is that not true? In your model you can actually produce item N plus 1. >> Been Kim: So the answer to that is yes and no. So I guess I can, it’s a generative model so you can generate a fictional prototype, but one of the points of this model is that doing so would decrease how intuitive this model could be. >>: Because in the end you use the generative model for interpreting the parameters, right? You are going to look at all the parameters and tell a story about it instead of fitting new items. That’s the purpose of the model, right? >> Been Kim: Right. >>: A comment on that. So generating examples is really dangerous in a complex domain because you will probably get some detail wrong. I mean in healthcare for example if you were to generate a patient and you got something wrong, like say you had a male that was pregnant. You know unless you had a sort of perfect generating structure you might make those kinds of mistakes and then experts will just immediately ignore everything you do because it is sort of nonsensical. >>: Sure in some ways –. >>: So using real examples is sort of safe because they must exist.
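The scoring idea behind the function g discussed above can be sketched in a few lines. This is a simplified stand-in, not the exact form used in BCM: it only counts the subspace features on which a data point agrees with the prototype, and the recipes, the `match_score` name and the ingredient lists are assumptions made up for illustration. Note that the prototype is always one of the observed data points, never a generated one.

```python
def match_score(point, prototype, subspace):
    """Count subspace features on which `point` agrees with `prototype`.

    Recipes are sets of ingredient names, so a feature's Boolean value is
    simply whether the ingredient is present in the set.
    """
    return sum((f in point) == (f in prototype) for f in subspace)

# Hypothetical data set of recipes with Boolean ingredient features.
recipes = {
    "taco": {"tortilla", "salsa", "sour cream", "avocado", "beef"},
    "taco salad": {"lettuce", "sour cream", "avocado", "beef"},
    "chocolate berry tart": {"flour", "chocolate", "strawberry"},
}
prototype = recipes["taco"]  # a real observation, not a fictional one
subspace = {"salsa", "sour cream", "avocado"}  # features marked important

scores = {name: match_score(r, prototype, subspace) for name, r in recipes.items()}
print(scores)
```

Under these assumptions the taco salad shares sour cream and avocado with the taco prototype and so outscores the chocolate berry tart, which matches none of the subspace features.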
>>: It’s the same for the LDA story; although it’s generative nobody in their right mind would actually generate new documents with LDA. It’s a matter of looking at the topics and then imagining the story as [inaudible]. >> Been Kim: Exactly, a really good example. So the g function is a similarity measure and you can use other similarity measures such as loss functions. It’s a pretty general model. You can pick a similarity measure that fits your application. So when I am working with interpretable models I can’t just show you that, “Here, this is a model; I clustered the data well, take it.” That’s not how it works. We have to answer all these questions in order to convince you that I built something interpretable. So first is a sanity check. You said that you learned prototypes and subspaces; does it learn intuitive prototypes and subspaces that make sense? Second, if that is true, interpretability is great, but we don’t really want to sacrifice performance for interpretability; does it maintain performance? And lastly, if the two are true, then can this really improve human understanding of the clustering results? So I will present BCM’s results by answering each of these questions. We ran BCM on two publicly available data sets. The first one is a recipe data set. This is from a computer cooking contest. One data point is a list of ingredients here. The first one is soy sauce, chicken, sugar, sesame seeds and rice. So it’s a list of ingredients as Boolean features, it’s making an Asian inspired chicken dish. So those are our data points. When I ran BCM on this recipe data we learned 4 clusters. The first one looks a lot like a pasta cluster; it selects “herbs and tomato in pasta” as its prototype and it learns oil, pasta, pepper and tomato as the important set of features. The second cluster, which looks a lot like a chili cluster, selects a generic chili recipe as its prototype and learns beer, chili powder and tomato as its subspace.
And if you are like one of my committee members who, when I showed this to her, was like, “Been, something is weird, beer should not be in chili. You have got to double check your model.” If you are wondering about the same thing I highly recommend that you start putting beer in your chili. It really makes a lot of things better. You can put it in your stir fry. Beer makes a lot of things better, like triple and quadruple better. We also have a brownie cluster and it selected a microwave brownie as its prototype, selecting baking powder and chocolate as its subspace. >>: So you are showing part of the words as part of the words from that particular recipe. >> Been Kim: That’s correct. >>: Then you just highlight the ones that are the most likely. >> Been Kim: Important ingredients that describe the cluster. >>: Is there any hierarchy to the clustering or is it one level? >> Been Kim: It is one level right now, but I think we can extend it to hierarchical clustering. >>: Can I ask something about your inference algorithm? >> Been Kim: How do I perform it? >>: Yes. >> Been Kim: Yeah it’s coming, but I perform Gibbs sampling. >>: You do have discrete variables here, right? >> Been Kim: I do. >>: You said it is easy to do sampling, like does it behave nicely? >> Been Kim: There is like some art to making Gibbs sampling work. It is about hyperparameters –. >>: [inaudible]. >> Been Kim: I found it to be okay and I kind of learned, this is not my first Bayesian generative model, I learned how to make it behave itself. But I think there are a lot of other inference techniques like variational inference that could also be applied to this model if I sit down and work out the conjugacy. >>: What about the naive approach where you just run your LDA or whatever on this data and then for every one of the clusters you find the recipe and just show that? >> Been Kim: Yes, that will come up in another example; in fact my human subject experiment explored it in that exact way.
It doesn’t pick the example, but it shows a list of ingredients that really looks a lot like a recipe. All right, another data set that I ran BCM on is handwritten digit data. This is USPS handwritten digit data and I am showing you 5 different clusters. On the right I zoomed in on one of the clusters. On the left the first row is showing how it learns the prototype as the Gibbs sampling iterations go on from left to right, and the lower row is showing the subspace. So you expect to see something that doesn’t make sense on the left and hopefully it will make more sense as you move to the right. The prototype looks like it’s learning the digit 7 and the subspace looks like a 7, which makes sense. So we were encouraged by these 2 experiments and we said, “Okay, what about the performance?” Yes? >>: I thought the prototype has to be one of those N examples, but why do you have [inaudible]? >> Been Kim: This one? >>: Yeah. >> Been Kim: Yeah, I get this question all the time; it actually is. So I double checked, it’s a number 1 in their example. It’s a handwritten digit data set. So if somebody put the pressure really low then it’s like a 1, it exists. Okay, what about performance? We compare this clustering performance with LDA because it uses the same admixture model to model the underlying data distribution. When we tested it on 2 different data sets, handwritten digits and 20 Newsgroups, and the green one on the top is BCM and the yellow one is LDA, we show that BCM is able to maintain performance and often it performs better than LDA. We also performed sensitivity analysis. There are a couple of hyperparameters that we can tweak. We just want to make sure that we didn’t just get lucky by hitting the right hyperparameters. So we tested within a range of values for different hyperparameters and show that the performance didn’t change significantly. >>: So just to clarify, this is classification, right? >> Been Kim: Yeah.
>>: So you are representing the object by its topic mixture weights as the new feature representation? >> Been Kim: Yes, exactly. >>: Okay so here you are saying that somehow this transformation maintains [indiscernible]? >> Been Kim: Yes, so I spoke about it briefly earlier, but I kind of went quickly. In the LDA paper the way that they tried to convince the readers that the clustering performance is good is that they use this cluster distribution as a new feature, so like you said, combined with an SVM to produce this sort of graph in a [indiscernible], and that’s exactly what we did. >>: Not very convincing anyway, but I understand what you are doing and it’s good you see that it’s [indiscernible]. >> Been Kim: Yeah I think it’s difficult to put your hand down on the clustering method right there. There are other methods like topic coherence or other measurements the topic modeling community came up with, and it’s subjective, it’s difficult and you don’t have a ground truth. Yeah, it’s a difficult problem. >>: So can you explain truthfully why this new model BCM is better than LDA? >> Been Kim: It’s coming, I have a pictorial example of that. >>: So in this case you have one prototype per class or per topic? >> Been Kim: Yes. >>: Since you are performing in this way because you are having a [indiscernible], why not have more than 1 per class? >> Been Kim: Yeah I think it would be a good idea to extend the model to have multiple prototypes. It just makes things more expensive when it comes to inference, but I totally agree; there are [indiscernible] working at Stanford where they learn multiple prototypes to cover different ranges of examples. I think that’s a great idea, but it’s something that I haven’t done. Yeah? >>: So what are the features to the SVM? >> Been Kim: So the features to the SVM are this pi vector that describes the cluster distribution. So I showed you the Z vector where it collects As, Bs and Cs. You normalize them to get the distribution vector. So like parameters for a multinomial distribution.
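The evaluation protocol described above (use each data point's pi vector as its feature vector, train a classifier, report accuracy) can be sketched as follows. The talk uses an SVM; to keep this sketch dependency-free it swaps in a simple nearest-centroid classifier, which is an assumption of this example and not the method from the talk. The pi vectors and labels are made-up illustrative values.

```python
def centroid(rows):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def fit(features, labels):
    """Nearest-centroid stand-in for the SVM: one mean pi vector per class."""
    by_label = {}
    for x, y in zip(features, labels):
        by_label.setdefault(y, []).append(x)
    return {y: centroid(rows) for y, rows in by_label.items()}

def predict(model, x):
    """Return the class whose centroid is closest in squared Euclidean distance."""
    def sqdist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(model, key=lambda y: sqdist(model[y]))

# Hypothetical pi vectors (cluster distributions) with known class labels.
train_pi = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0], [0.1, 0.8, 0.1], [0.0, 0.9, 0.1]]
train_y = ["mexican", "mexican", "crepe", "crepe"]
test_pi = [[0.7, 0.2, 0.1], [0.2, 0.7, 0.1]]
test_y = ["mexican", "crepe"]

model = fit(train_pi, train_y)
preds = [predict(model, x) for x in test_pi]
accuracy = sum(p == t for p, t in zip(preds, test_y)) / len(test_y)
print(preds, accuracy)
```

The point of the protocol is that if the learned cluster distributions are good low-dimensional summaries of the data, a downstream classifier trained on them should still be accurate.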
So it’s just like LDA. Okay so a pictorial example to answer your question, a pictorial example of why this might be true. Think about the posterior distribution of LDA and think about a level set where all the solutions on that level set score equally in the LDA model. LDA would pick any of these because they are equally good, but what BCM is trying to do is push the solution towards some other point that is equally good in terms of clustering, but also interpretable for humans, because in the posterior of BCM this point would score higher than this point. You can also think about the way that BCM characterizes the cluster as working as a really smart regularizer for this model. >>: So do you think human interpretability effectively creates a sort of margin? >> Been Kim: Yeah that’s how I intuitively understand why this might be the case, or another explanation that you briefly mentioned, because we are clustering around the examples maybe that gives rise to a better solution because it exists. >>: So you are using the same number of topics? >> Been Kim: As LDA, yeah. >>: I have a simple explanation for what was happening there; your LDA model cannot capture all the correlations in the data, but the data has those correlations and you are using the data in your model, so that’s why you are getting this sort of hybrid thing that captures these higher level correlations that are not common. >> Been Kim: Yes exactly, that as well. >>: That’s why I am saying multiple examples are even better. Then of course you have to compare with [indiscernible] approaches. >> Been Kim: Right, exactly. Yeah I normally learn how many examples do I need to learn the example? Yeah that would definitely be a good way to expand this work. All right. We talked about this, but I perform Gibbs sampling to do this. This is a good equation that I didn’t mean to walk you through where you can see that we see some bad functions by integrating functions.
We integrate out the phis and pis because we don’t need them, but we can forward generate those cluster distributions if we want to. So the last experiment that I did is: does this learned representation or explanation make sense to humans? One way to measure this is taking subjective measures. So you have people come in and you ask them whether they like explanation A versus B. A better way to do this in my opinion is taking an objective measure of human understanding by asking humans to be a human classifier. So we explain 4 to 5 clusters using the 2 different methods, BCM and LDA, then we give them a new data point and ask them to classify which cluster this new data point belongs to. We measure how accurately they can do that. When a cluster is explained by BCM it looks like a list of ingredients. We don’t give them the name of the dish because it would be too easy. When it is explained by BCM it makes a dish, it’s an example. When explained by LDA it is the top K ingredients for each of the clusters. So it looks a lot like it will make a dish, but it does not make a dish. So it is not an example. >>: Can you explain that again? I didn’t quite get it. >> Been Kim: How the clusters are –? >>: How they are presented. >> Been Kim: So this is an example, this example that I pointed out earlier. This is about some Asian chicken dish. These are the ingredients that make that dish, this is an example. >>: So this is a particular example [indiscernible]? >> Been Kim: Yes, whereas for LDA I will just select the top K ingredients of each cluster, where when you look at it, it looks a lot like a dish, but it does not make a dish. >>: I see. >>: It seems that there are 2 things going on there. So there is the prototype and there is the fact that you have a real example, right. So I can take LDA and then randomly sample a data point and then look at its [indiscernible] cluster and see if the person agreed with it.
>> Been Kim: Ah, randomly sample among the samples that belong to a particular cluster? >>: Yeah or just randomly sample a –. So the point is the prototype is special because it somehow maximally represents the cluster versus an arbitrary guy in the cluster. So both differences are good. >> Been Kim: Yeah. >>: [indiscernible]. >>: Or like perhaps salt is in everything so it’s not a good predictor, but if you don’t have salt then it doesn’t meet people’s perception of a recipe. >> Been Kim: Yeah true. There wasn’t a question, right? >>: Well so I am suggesting that maybe the comparison is a little unfair in the sense that you are giving BCM not only a prototype, but a real example that LDA doesn’t get. I could have an LDA prime that returns a real example as opposed to the top features. >> Been Kim: Right so we could compare with the same sort of thing, like a nearest neighbor example. >>: And I mentioned earlier, off the LDA you just pick prototypes. >> Been Kim: Right, right. >>: [inaudible]. >> Been Kim: Yeah we could do that. I think that the way that I didn’t want to add on to –. Yeah no, that could be a perfectly good baseline that we didn’t test. >>: You said something about your past dishes. >> Been Kim: Yeah. >>: Where do your truths come from? >> Been Kim: Where is it coming from? >>: Yeah. >> Been Kim: It is one of the data points from the data set that I mentioned earlier. >>: So it is one of those N things? >> Been Kim: It’s one of those N things yeah, because it’s a new example. As a classifier you take a new data point and you classify it. >>: What do you mean a new example? So my question is do you leave that point out when you are training your model? >> Been Kim: Oh I see, yeah of course, yeah. >>: So you actually have to train a whole bunch of models, leaving a bunch of dishes out? >> Been Kim: Why would I need that? >>: I am just asking you. Your specific dish, your test dish –. >> Been Kim: Would it be left out from –?
>>: It came from your original corpus, right, but when you trained your BCM model was that in the training set? >> Been Kim: No it shouldn’t be because then it would be –. >>: So what’s your protocol? Did you leave a bunch of dishes out? >> Been Kim: Oh I see yes, of course yeah. >>: So that’s what you did. >> Been Kim: Yeah, yeah, yeah. >>: How much did you leave out? >> Been Kim: I asked people 16 questions. So I think I left out about 20 dishes when I was doing clustering. >>: And the true label of the dish, how is that determined? >> Been Kim: These are all really good questions. So it is determined based on its name. So we had 2 independent human annotators and it has opened on human experiments and we sort of give them classes that they can label each dish to and they label them. If you get it as a human it is pretty obvious which cluster this would belong to. >>: Sorry to dwell on this, but the clusters are with respect to what the BCM model has covered, right? >> Been Kim: Uh-huh. >>: So have you compared against the pure computer system where you try all N items and just see where those guys fall, where those dishes [indiscernible]? >> Been Kim: So assuming that they would come up with some other clusters than what has been tested? >>: Right, okay I see the problem. We can talk about this offline. >> Been Kim: Okay, great. >>: So when people do this they are labeling, by your recipe, they are doing an admixture of the new recipe with respect to names and they are using name dish 1 and dish 2. >> Been Kim: Yeah so we don’t give them the actual name of the dish. >>: And how much time is spent analyzing this? There is a complexity here because now I as a human subject have to read the recipe and understand it. I do a lot of processing. So if I spend very little time I might be confused. I might be learning other tasks and the early examples I do wrong, but the later ones I do better.
Also if I am allowed to name these things I might do better. >> Been Kim: Interesting. >>: If I am asked to draw what the recipe is going to look like at the end and make the recipe I may do even better. So I don’t know how that’s –. >> Been Kim: I see. >>: It’s a [indiscernible] vector, but also it is kind of an interesting research question. >> Been Kim: Right, right. >>: So what are your experiences regarding that? I know that was not the target, but. >> Been Kim: Yeah, no it is a part of the experiment where you make sure that in terms of the ordering the later one you do better than the first one. We have the perfect Latin square; it’s just which question was presented earlier for 1 participant and some other participant so we can have a balanced impact. In terms of naming things we actually, in the first pilot study, struggled to set people to the same granularity of recipes. What I mean by that is when I show them the cluster they were thinking “Is it this holiday brownie or is this mint brownie?” That’s the kind of granularity they were thinking. So we were like, “No it’s not”. So we gave them categories of candidates including some of the ones that represented the tier as a cluster and some of the other things of the same level of hierarchy to prime them to think about in this sort of level of space. >>: [inaudible]. >> Been Kim: So when we did that we get, you probably already know this by now, we have 24 people, 384 classification questions and we got statistically significant results. What this is showing you is that this model learns reasonable prototypes and subspaces, maintains performance and it can improve human understanding for the task that we tested. So, moving onto the next part the closing; at the beginning of the talk I talked about how we want to leverage human domain expert knowledge back to the machine learning systems. 
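[Editor's sketch: the Latin square counterbalancing mentioned above can be illustrated with a cyclic construction, in which every question appears exactly once in every presentation position across the participant groups. The talk does not say how the study's square was built, so the cyclic layout and the name `latin_square_orders` are assumptions made purely for illustration.]

```python
def latin_square_orders(n_questions):
    """Return n cyclic presentation orders (hypothetical helper).

    Row r is the question order shown to participant group r.
    Each question index appears once per row and once per column.
    """
    return [
        [(r + c) % n_questions for c in range(n_questions)]
        for r in range(n_questions)
    ]


# Small example with 4 questions; the talk mentions 16 per participant.
orders = latin_square_orders(4)
for group, order in enumerate(orders):
    print(f"group {group}: {order}")
```

[With this layout, any learning effect from answering later questions is spread evenly over the questions instead of always favoring the same ones.]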
In this part of work I extended BCM in order to incorporate human domain expert knowledge and implemented this for a real-world application, the computer science education domain. So before going into detail, and I feel like this crowd would buy into this idea, let me convince you why interacting is important. So this is the data set that I showed you in the beginning of the talk. I showed you that one way to cluster this data, and we worked this out together, is Mexican food, crepe and chocolate berry tart. But if you are an owner of a restaurant and you are trying to hire a pastry chef the way that might be really useful for you to cluster your menu items might be this; savory food on the left and sweet food on the right. So when you are evaluating your candidate you want to make sure they can cook these things. What this is trying to tell you is that depending on what you are trying to do with these clustering results what is most useful could be different. I am arguing that trying to figure that out interactively is a good idea. And of course leveraging interactiveness to deliver better clustering or classification results has been studied. A lot of people at MSR have studied this idea. Some of them assumed that the users know machine learning, so we help them to better explore more parameter settings, assuming that they know how different hyperparameter settings would influence the performance, or some other work has assumed some simplified medium for communication. So users would internalize this medium to be something, communicate through this medium. And machine learning would take this medium, transform into something that makes sense to machines, but these two could be potentially different. The work that I’m suggesting here provides a unified framework where humans and machines can talk and communicate using the same exact medium, prototypes and subspaces. 
So we made BCM interactive and we called it iBCM, by making prototypes and subspaces and another node to be what we call interactive latent variables. These are like latent variables, but not quite because the values of these nodes are inferred not only from data, but also from feedback from humans. The key part why making an interactive system is difficult is because you want to balance what data is trying to say and what humans are trying to say. You don’t want to completely override what data is trying to say with what humans said, but at the same time you don’t want to completely neglect what the human is trying to say. So our approach is decomposing Gibbs sampling steps to adjust the feedback propagation depending on their confidence. And also since we are working with an interactive system we want to make sure that the response time is really quick. You can’t have them wait forever. So we accelerate the inference by rearranging the latent variables. At the higher level, without going too much into detail we first listen to users, then we propagate the user’s feedback to accelerate inference and then reflect the patterns of the data. We tested this in 2 different domains; the first one is an abstract domain and then I am going to show you the real-world domain. The abstract domain is useful because we have control over how we are going to make the distributions of the data to be. In this case we have generated this data such that there are multiple optimal ways to cluster the data. This is the interface that humans used to interact with our system. Each row is a cluster so I am showing you 4 clusters. The first column is a prototype so the second row says that the data point 74 is the prototype of this cluster and on the right I show you the features that it has along with subspaces. So if it has checkmarks it has got round patterns and it’s triangle and it’s yellow, but only these two features are in the subspaces. 
So it’s a triangle and it’s yellow and if you look on the right which shows other items in that group they all have triangles and are colored yellow. There are 2 things that users can do; you can click 1 of the checkmarks to change it to a star checkmark to include that feature into the subspace and you could click the star checkmark to make it a checkmark to exclude that feature from the subspace. Then you can also click any of these items to promote that item to replace the prototype of any of these clusters. >>: So the first cluster was the data over the round pattern start? >> Been Kim: Say that again. >>: Your first row is the beginning of a starred feature called “round pattern” which I assume is the not lines. >> Been Kim: Oh yeah, yeah. >>: [inaudible] has overridden the user’s feedback and said, “Oh well I am going to throw lines in there because that’s what the data says.” >> Been Kim: So maintaining that balance is difficult. That’s part of what this is trying to do. The way that we ran this experiment is that we first show the subjects this whole data set, randomly arranged, and then we asked them, “What is the way that you prefer to cluster this data set,” in order to sort of learn the inherent or underlying preference. Then we show the results from BCM. So essentially what this does is it selects one of the optimal ways to cluster this data. Then we asked subjects, “How is this matching with your preferred way to cluster?” And we collect that data according to [indiscernible] scale. Then we have subjects interact with iBCM. So they can select/un-select the subspaces from the prototype to make this cluster more like what they wanted. Then we ask the same question again to indicate how well the results match with what they want. 
We compare the answers collected in these two steps, we asked for 24 participants under 92 questions and subjects had expressed that they agreed a lot more strongly that final clusters matched with their preference after their interaction and that was statistically significant. So encouraged by this idea we now take this iBCM to a real-world domain, to education. >>: Did you test for the placebo effect here? I mean maybe they just like the final clusters because they contributed. >> Been Kim: I see, we collected –. >>: [indiscernible] a random cluster, I mean just noise to your cluster and say, “This is a response to what you have said.” Did they still prefer the new ones to the old ones? >> Been Kim: Because they just feel like they have interacted with it. >>: Yeah they are committed to it, invested in it. >> Been Kim: Interesting idea. So this might be slightly [indiscernible], but to answer your question we didn’t test that, the placebo effect. But one of the ideas of the interactive model is that often when users start to interact with interactive systems they don’t actually know what they want. As you interact with it you kind of learn, “Okay yeah, this is what I wanted,” and it’s like a way for them to interact with the system to figure out what they want as well. If the final clustering is really not what they wanted, and it’s just that simple placebo effect, I personally don’t think that would be the case, but it’s something that we could totally test. >>: Can you go back to the previous slide. I think I have a related question here. What do you mean by the first step? >> Been Kim: So the first step is really trying to fetch what humans wanted initially. What are their underlying distributions? >>: But to find the subject I am supposed to arrange those objects into clusters? 
>> Been Kim: I see, no we asked them, “What are the features you want each cluster to have?” Because there are only 6 features here we said, “Cluster 1 you wanted cluster to have line feature pattern and color.” >>: I see, I see, but what about what I just suggested? Maybe have the humans just free play and form clusters. Then you have an objective way to measure. >> Been Kim: Yeah, yeah, yeah, the way that I measured this p-value is just simply comparing these two, but you are right. If I had compared it with like the original then the placebo effect could be tested. But I looked at it, scanned it, and they are roughly similar, but I don’t have a number for you right now. >>: For the features that are predefined, you gave them the features? >> Been Kim: The features of the abstract data? >>: Yeah the circle random [indiscernible]. >> Been Kim: Yeah, yeah, because those are generated. >>: Have you thought about just letting them come up with features and just give them an empty table and say, “You fill in whatever you want,” and then other people using the features that somebody else has come up with and so on. >> Been Kim: That’s interesting, that would be interesting, but I don’t know how you would evaluate that. If you are using different features –. I guess if the only thing I am going to take is [indiscernible] scale then I can probably evaluate it, but that would kind of introduce another factor that defeats the purpose of using the abstract domain, because the point of using the abstract domain was that we have this clearly defined world where we only allow this many features. >>: [indiscernible]. >> Been Kim: No a completely different set. There is no overlap between those two, because the first people knew what I was doing. 
So I took the iBCM, encouraged by the previous results, to the real domain, and education is a particularly appropriate domain for iBCM and interactive machine learning because teachers have accumulated years of knowledge or maybe their philosophy of how an introductory Python programming class should be taught and we want to leverage that and deliver something that is useful for them. In particular the domain that we were looking at was how they create a grading rubric. So, exploring a spectrum of students’ submissions for homework problems. Currently what teachers do is they select randomly 4 to 5 assignments, they look at them and scan them in order to create the homework, but we know that if teachers can understand better the variation of this data they can provide better tailored feedback for the students and ultimately hopefully improve the education experience for the students. But it is difficult working with coding data because we don’t have the obvious features as we do for other types of domains. So we leverage a system that Elena Glassman and Rob Miller at MIT developed called OverCode which performs static and dynamic analysis on the code to extract the right features. So we use those. This is the system we built. On the left we have prototypes. I am showing you 3 clusters. The last one is blue because it is clicked. When it is clicked on the right it is showing you other items, other homework submissions that belong to that cluster. And these are from a couple of years of homework submissions that were collected from the MIT introductory Python class. The red rectangles are subspaces of course, the keywords. Humans can interact with the system using 2 things; similarly unselect/select subspaces. So make a keyword go inside of the red rectangle or not and also promote certain examples to be prototypes. So if a teacher likes this prototype then they could promote this to replace any of the prototypes. 
How we performed this experiment is comparing 2 interactive systems. We made the benchmark system interactive to keep the engagement level fair for both of the interactive systems. The first one is the iBCM system, the one I just told you about, and the second one is still interactive, but where subspaces and prototypes are preselected for the users. The way that this is generated is that we ran BCM with new random initial points. So it converges to different clusters, but it’s still optimal according to the internal metric of the clustering method. So when users click that button up there it just shuffles around and shows you a new cluster. We invited 12 teachers who previously taught the Intro to Python class at MIT and we told them that their job was to explore the full spectrum of students’ submissions and write down a discovery list. So any features or interesting things they find when they are looking through this large set of student submissions, to write them down. This is a video demonstration of how the teacher might use the iBCM system. So first the teacher goes to the first cluster and because “while” is in the subspace most of the submissions on the right have the “while” keyword in them. But the teacher scrolls down and finds that everyone used “while” until they find something interesting. Some person imported an [indiscernible]. If you are familiar with Python this is like importing a library. The teacher said like okay let’s take a further look at this by promoting this guy to a prototype and then indicate what’s interesting to the teacher. Then look at all the other people who imported a module. Somebody imported math, pi, [indiscernible] tools and then the teacher moves onto the next cluster and is checking through this until they find something interesting. This student is checking the length of these two vectors and depending on what teacher you are talking to some people think that’s a good idea and some people don’t think it’s a good idea. 
So the teacher goes through and updates that to the prototype and continues to investigate the student submissions. >>: So do I understand correctly that you have a fixed number of clusters, hence prototypes and if I found something potentially interesting I need to replace one of my existing [indiscernible]? >> Been Kim: Yes, yes this is a good question because that’s why the task for which we envisioned this tool could be used was more exploration. It’s not about clustering the data set. Once you explored and discovered things from the existing clusters our idea was that you can now forget about that cluster, update that with that to some other cluster, make it into a different cluster and continue to explore it. >>: Yeah I understand, this is essentially what we call “island finding”, finding new things. But I’m a little bit worried that because of your iBCM system would there be some coupling by the fact that I need to push down some existing clusters that might hide something. >> Been Kim: Yeah totally, totally, like I feel like we worked on this together or something. Yeah we had that effect. We started this and the way that we had overcome that issue, and I think it’s still a really important issue to overcome when you are working on interactive clustering methods, is that you need to explain how the system works. And explaining that to someone who doesn’t know machine learning is still a very challenging problem. They need to know that these two clusters interact. They share some information so if you make an entire cluster to suck up all the submissions of one particular type you are not going to get anything like that for the other clusters. >>: Another potential solution is to go to Bayesian nonparametrics. Instead of a fixed number of topics you can go the route of things like [indiscernible]. >> Been Kim: Right, but that doesn’t solve the problem of interacting clusters. 
That only shows you that it is going to be another cluster, that if there exists enough evidence I am going to create another cluster. >>: I can create a new cluster. I can construct a new one. >> Been Kim: Right, right, totally, yeah, yeah, but to explain the nature of the clustering method is very difficult. So the way that we did is we had trained these people to use the assisted behavior that you would expect and now they choose it. Yeah, good points. So we invited 12 subjects who previously taught the class, we showed them 48 problems and we asked them 15 [indiscernible] scale questions where 12 of them resulted in statistical significance. They fall into one of these categories over here. With iBCM they said they were more satisfied. They better explored the full spectrum of student submissions. They better identified important features to expand the discovery list. They thought that important features and prototypes are useful. So some other quotes from the participants: they said that iBCM enabled them to go in depth on how students could do. They found it useful particularly for large data sets where brute force would not be practical. And this is particularly encouraging because in the rising of [indiscernible] where teachers now have to teach not just hundreds, but thousands or maybe more students maybe this interactive system can help them to better explore student submissions and ultimately deliver better education experiences for the students. >>: But here you again have that same effect as before because if you just give them some clusters and they spend time using them as opposed to you give them an opportunity to play with the data and understand where clusters are going and come up with almost the same solution themselves. They might prefer that second path to the first path, which doesn’t mean the clustering itself is better, it means that exposing technology and making them understand the technology is what actually helps. 
>> Been Kim: I see, that’s the experiment that I did in the interactive experiment. That’s exactly what I did. This is a pre-clustered thing and this is something they can interact with. I asked which one they preferred and they preferred the first one. >>: Yeah, but then the question is, “Do they prefer this because this whole system helped them learn or did they prefer it because they ended up with better clusters?” I mean both are valuable. >> Been Kim: What do you mean by better clusters here? >>: So in the end when they create these new prototypes by moving things around they get a different set of prototypes than you would have gotten with BCM applied directly. >> Been Kim: True, but BCM –. >>: Or LDA and then choosing the best matching prototype. >> Been Kim: Right, but BCM is also optimal. All of these clusters are good; it’s just a matter of which one matches with your idea of clusters better? >>: But even that, the issue is that when you just provide the clusters I have no idea what the clusters are. When I do this I get an idea of what the clusters are. That doesn’t mean that –. >> Been Kim: I see just learning about the [inaudible]. >>: I mean we could have started with the clusters that you end up with, you run this experiment and end up with good prototypes for one teacher, but you give these prototypes to the next teacher and they go back to something else. If you run this in a circle like this they might just be going all over the place. They are all happier with the resulting clusters, not because these clusters are better for them, although that’s the illusion you get, but simply because by going through all of this, to [indiscernible], they start understanding the ecology. >> Been Kim: I see got it, got it. So this second tool kind of gives you that feel by giving you different ways to cluster the data. The users can see 5 different or 10 different ways to cluster the same data. That gives you an opportunity to learn. 
>>: But I think that’s where the value is rather than in getting the optimal clusters, maybe they are not getting the optimal clusters. They are just getting a set of clusters, but now they understand what they mean. >>: So related to this what is your quantitative measure for this result? >> Been Kim: It’s the 15 [indiscernible] scale types of questions, post questionnaire questions. >>: But this is done per subject, right. I am going to evaluate my own. So, maybe one solution is to do a cross-subject evaluation. I evaluate your resulting [indiscernible]. >> Been Kim: So we thought about this idea, but then there is another conflict that what we were trying to do here is to leverage that teacher’s philosophy of teaching. We have like 10 to 12 teachers coming into the room and they have really a completely different idea of what good homework assignments should be. Like the “assert” example some people think, “Oh yeah of course you have got to check the length of these two vectors,” but some teachers are like, “No you don’t do that, it’s a waste of a line. You just assume that given inputs are all already checked.” So these things are very different and if I ask you to check my results chances are it’s probably not ideal for you. >>: [inaudible]. >>: I just think like a teacher comes into your system and starts messing things around. So like the cat comes onto the mat and she is going to start moving the mat around, the mat is going to be exactly the same when she lies on the mat, but she thinks, “Well now it is better than it was before because I have done something to it.” >> Been Kim: My assumption is –. >>: What you do to it is not to “it”, you are doing something to yourself. You are preparing yourself to lie on the mat. >> Been Kim: Right, so my assumption in my work is that humans are better than that. Humans have some expert knowledge that we can really leverage in the system. That is an assumption in my work. 
>>: But it’s testable and either way it’s useful, even if that’s what happens, that the teacher gets used to this tool, that’s still useful. >> Been Kim: Right, how is it testable that you are doing? >>: I think that’s the answer actually. >>: You could test for coverage or recall of different features. So just using the tool collectively on many different features can be discovered. >> Been Kim: So these are things that we thought about by collecting the discovery list, what they wrote and how much of the coverage that is compared to like the Oracle set of features of the whole assumption. That’s all subjective, we have to somehow come up with the Oracle list which is subjective to begin with and being able to count when the teacher writes these features. The teacher might mean two different things and having that just really lined out one by one in a Boolean vector is really hard. So even calculating this absolute value, measuring this, is actually really hard to test. Okay so I talked about machine learning models that can make sense to humans by building the Bayesian Case Model that could provide intuitive explanations using examples. Then I extended this idea to be interactive, implemented in a real-world domain, a computer science introductory Python class, to show how this could be useful. I think there are a lot of really exciting things that can be done in interpretable and interactive machine learning models. One other thing that I worked with briefly and it deserves a lot more attention is visualization. I worked with this idea briefly when I was an intern at Google where I worked with software engineers whose job is to look at this very complex data on a daily basis and explore the spectrum, which is pattern data. I looked at different feature reduction methods and compared them to see what the ways are to represent the same data such that they could better explore the distributions of the data. 
Another thing that I think is very important and I think it goes back to our discussion earlier is looking at what is really your need in a specific domain? So one of the systems for the project that I worked with was working with a medical domain expert who looks at autism spectrum disorder data and for them a meaningful cluster is not just clustering that data, but really figuring out what are the features that distinguish 2 different clusters? What is the difference between A and B? What’s the difference between B and C to help hypothesis generation for example? So we built the model to learn those distinguishable features. Another thing that I think people like [indiscernible] at MSR have looked at is using this interactiveness as a way to help data scientists. To debug models and better explore hyperparameters. And now a lot of companies are hiring data scientists where their daily job is to look at this model, stare at this model, and look at what are the hyperparameters that are a better fit for my performance? How do I achieve that performance that I want? I think we can use the interactiveness to really make their job easier and more efficient. So I work at Ai2. At Ai2 we think one of the problems that will help us to take a step forward in AI is building a machine that can answer a fourth grade science exam. Here is an example of a fourth grade science exam, “In which environment would a white rabbit be best protected from predators?” The examples are: shady forest, snowy field, grassy lawn and muddy riverbank. When you look at this question as an adult hopefully you see that the teacher is trying to test the idea of camouflage. If you know that abstract concept called camouflage you might be able to apply that to answer this question. So what I am trying to do at Ai2 is: How do we learn these abstract concepts in an unsupervised manner from a large corpus? 
Once we detect this abstract concept then maybe we can answer these questions using this sort of abstract idea that humans had defined. And one of the key insights that I think will help us is that the learned graph that represents this abstract concept should make sense to humans in order for it to be a concept that humans had decided to define such as camouflage. This involves one of the first ideas that we are exploring which is using word embedding representation, this is just learning a mapping from a word to a point in a vector space, and using that sort of representation to learn the context of which it represents the camouflage. That extends the assumption that I had in my earlier work where given features to me, the data set has already interpretable features. It is no longer recipe or patient data, it is some feature that we don’t know what they are about. >>: [inaudible]. >> Been Kim: Oh this matrix is actually [indiscernible] representation of this particular question. I just put it there because –. >>: So the entire question is one point? >> Been Kim: Just one question. >>: So this entire question and then there are answers. So it is just the question without the answers that are being embedded? >> Been Kim: I see, so what I did here to make this matrix is I select a bag of words from the question, this is very preliminary, we are just starting to investigate and then I extended them with their neighbors in [indiscernible] space to kind of have a smoothing effect. So we are not only looking at rabbit. I am going to look at fox and raccoon. Then we cluster them with respect to rows and columns to learn that these are the sets of words that commonly co-occur and these are the contexts that commonly co-occur and then I am hoping to learn a graph out of it. >>: So is there sorting on the –? >> Been Kim: Yes. >>: They are sorted by? >> Been Kim: They are clustered. These are one cluster in a row. >>: Oh I see. 
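[Editor's sketch: the smoothing step described above, extending a bag of words with each word's nearest neighbors in an embedding space, could look roughly like the following. The 2-D vectors, the word list, and the helper `expand_with_neighbors` are invented for illustration; the talk does not name the embedding or the similarity measure, so cosine similarity is an assumption. The row and column clustering that follows in the talk is not shown.]

```python
import math

# Toy 2-D "embeddings", made up for this example only.
EMBEDDINGS = {
    "rabbit": (0.9, 0.1),
    "fox": (0.8, 0.2),
    "raccoon": (0.85, 0.25),
    "snowy": (0.1, 0.9),
    "icy": (0.15, 0.95),
    "muddy": (0.5, 0.5),
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def expand_with_neighbors(words, embeddings, k=2):
    """Append each word's k most similar vocabulary words (hypothetical helper)."""
    expanded = list(words)
    for word in words:
        if word not in embeddings:
            continue  # no vector available, so nothing to expand
        ranked = sorted(
            (other for other in embeddings if other != word),
            key=lambda other: cosine(embeddings[word], embeddings[other]),
            reverse=True,
        )
        for neighbor in ranked[:k]:
            if neighbor not in expanded:
                expanded.append(neighbor)
    return expanded


expanded = expand_with_neighbors(["rabbit"], EMBEDDINGS, k=2)
print(expanded)
```

[In this toy vocabulary the two nearest neighbors of "rabbit" are the other animals, which mirrors the rabbit, fox and raccoon example from the talk.]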
>> Been Kim: I like how attention is directly going to the matrix. What I think would be a really interesting topic to work on is that this deep learning has achieved much success in various fields and I think in my opinion one of the drawbacks of deep learning is that it is not interpretable. What we really want to do is for people to gain more insight into this powerful tool and ultimately really make better decisions. This part, looking at how we can assign or learn interpretability from such non-interpretable data, is another interesting research topic. >>: But we could make the argument that any model is interpretable in the sense that I can always remove the feature, retrain and compare the two models and now I have an explanation of the power of the feature. >> Been Kim: How would you compare 2 models? >>: Oh compare 2 models? >> Been Kim: You said you remove a feature and then you compare 2 models. How do you compare? >>: So I want to know the effect of that feature on this model. So I train it with and without the feature. If the performance goes down a lot then I know this feature is important. So now I can sort all the features. So even though I don’t know what’s happening inside I know the effect of all the features, which is a way to interpret the model. >> Been Kim: I see yeah, this is another idea that I actually thought about when you are working with like deep learning or neural nets. Removal [indiscernible] and see what that does in order to assign a meaning to it. I think that’s a good way to go about it, but in my opinion it is not direct enough. If you have thousands of neurons in your layer you are going to have to train thousands of models and training one model takes 4 weeks. >>: [inaudible]. >> Been Kim: If you have an idea about this –. >>: [inaudible]. We tried that with our splicing work. 
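[Editor's sketch: the remove-a-feature-and-retrain idea discussed above can be written as a small leave-one-feature-out loop. The nearest-centroid classifier, the toy data, and all function names are stand-ins chosen to keep the example self-contained; nothing here comes from the speakers' own systems. The loop retrains once per feature, which is exactly the cost raised in the discussion.]

```python
def train_centroids(X, y):
    """Fit a nearest-centroid classifier: one mean vector per class."""
    sums, counts = {}, {}
    for row, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(row))
        for j, value in enumerate(row):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def accuracy(centroids, X, y):
    """Fraction of rows whose closest centroid matches the true label."""
    def predict(row):
        return min(
            centroids,
            key=lambda c: sum((a - b) ** 2 for a, b in zip(row, centroids[c])),
        )
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def drop_feature(X, j):
    """Return a copy of X without column j."""
    return [row[:j] + row[j + 1:] for row in X]


def ablation_importance(X_train, y_train, X_test, y_test):
    """Retrain without each feature and report the drop in test accuracy."""
    base = accuracy(train_centroids(X_train, y_train), X_test, y_test)
    drops = []
    for j in range(len(X_train[0])):
        model = train_centroids(drop_feature(X_train, j), y_train)
        drops.append(base - accuracy(model, drop_feature(X_test, j), y_test))
    return base, drops


# Toy data: feature 0 separates the classes, feature 1 carries no signal.
X_train = [[0.0, 5.0], [0.2, 4.0], [1.0, 5.0], [0.8, 4.0]]
y_train = [0, 0, 1, 1]
X_test = [[0.1, 4.5], [0.9, 4.5]]
y_test = [0, 1]

base, drops = ablation_importance(X_train, y_train, X_test, y_test)
print(base, drops)
```

[A general caveat: when features are strongly correlated, dropping one of them may barely change accuracy because the others carry the same signal, so a small drop does not by itself show that a feature is unimportant.]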
We can predict how certain genes can be spliced, but now for biologists we want to interpret it and say, “Well this is what does that and this is what does that and so on.” It turns out that features are so correlated that we couldn’t get anything interpretable this way. You almost have to –. You should take derivatives but with something more complex and then you have to kind of interpret that too. So it is not as easy. >>: But basically when we say things are not interpretable I kind of object to this because there is a way to interpret what’s happening. There are things you can do. You can move things from train to test and that tells you a lot. You can train with or without the feature and that also tells you a lot. You can always remove a feature and then retrain without the first feature –. >>: Yeah if you want to spend a lot of time everything is interpretable, if you want to spend a ton of time. So maybe –. >>: No, no –. >>: [inaudible]. >>: [inaudible]. >>: Yeah, but once you hold out a feature what you get is you now have to hold out another feature. You have to then go back after you group the features and say, “Now how about this group of features versus that group.” It’s an interesting process and it becomes, we fail. In the end we just couldn’t do the interpretation of the new one. >>: [inaudible]. One thing you could do though, which is close to what you are already doing, is you can still do a case based kind of analysis of what [indiscernible]. We go somewhere into the internal representation, do Euclidean distance on the internal representation and then you can still find cases that are similar to each other, similar to a test example. And if you believe that the cases are intelligible to humans then you can explain the reasoning of the model by presenting these cases to humans. >> Been Kim: Yeah, yeah, that’s true. I think this comparing feature idea is for the exact reasons that you mentioned. 
For those listening in who can’t hear, you guys are exactly right. It quickly explodes when you try to enumerate all these questions, and because of this correlation you can’t really tease them apart. The exact meanings that is [indiscernible]. >>: But it’s not a bad place to start. >> Been Kim: It’s not a bad place to start. >>: It’s actually a complex research issue. It’s not easy. >>: [inaudible]. >>: Yes, that’s true. >>: [inaudible]. >>: That’s why I was thinking that example you had with circles, if you actually had an empty table that people added features [indiscernible]. Do they actually end up in [indiscernible] or not? >> Been Kim: That’s interesting, yeah. For the coding example I think they definitely come up with different features. >>: [inaudible]. >> Been Kim: For the coding experiment that I did, when I asked them to write down the discovery list, which is kind of identifying the features of the human, it was all over the place, very different. >>: [inaudible]. If they are all working on that shape problem and then as somebody adds a feature it populates everybody’s list and they see, “Okay I just click here instead of adding my own.” >> Been Kim: [inaudible]. >>: Then you start automatically raising some rows which nobody is using and so what happens? >> Been Kim: Yeah, that’s interesting. That’s more like a collaborative way of doing it. That will be a next step or maybe together. Okay, so the last thing I was going to say is that AI2 is just down the road in a new district. If you are interested and care about machine learning that would benefit humans I would love to chat. Thank you. [Applause] >> Rich Caruana: So feel free to yell, but if you have got another question or 2 we have got another 5 or 10 minutes. >>: I have got a question which is something you sort of skipped in the iBCM. You described the way to inject human interaction as a procedure in your Gibbs sampling. So what is the mathematical view of that? 
So changing the Gibbs sampling algorithm is a procedural thing [inaudible]. Is there an equivalent mathematical view to say that humans are essentially [inaudible]? What is that? >> Been Kim: It’s kind of providing a new data point, but it’s a more important data point than an original data point, for example. I don’t know if it’s mathematical, but that’s the intuition I am going for. If you have a data point and the data point is yelling at some pattern, if you are human and you don’t see the pattern that you are looking to see then it is kind of like creating another data point. And actually this view has been studied; I think there is a paper from MSR that views humans’ interactions as a set of new data points or like a curriculum of like a principal example. That’s sort of the view that I’m applying here. >>: Okay. >> Rich Caruana: All right, thank you again Been. >> Been Kim: Thank you guys. [Applause]
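The retrain-without-a-feature procedure debated in the Q&A above, and the objection that correlated features defeat it, can be illustrated with a small sketch. Everything in it is an assumption made for illustration rather than anything shown in the talk: the toy data, the nearest-centroid classifier, and the helper names `fit_centroids`, `predict`, `accuracy`, and `ablation_drop` are all hypothetical. Feature 1 is an exact copy of feature 0, and feature 2 is unrelated to the label.

```python
# Illustrative sketch only: the toy data and the nearest-centroid model are
# assumptions chosen for brevity, not the models discussed in the talk.

def fit_centroids(rows, labels):
    """Return {label: mean feature vector} for a nearest-centroid classifier."""
    centroids = {}
    for label in set(labels):
        members = [r for r, y in zip(rows, labels) if y == label]
        centroids[label] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def predict(centroids, row):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(row, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def accuracy(rows, labels, keep):
    """Retrain using only the feature indices in `keep`; score on the same data."""
    reduced = [[r[i] for i in keep] for r in rows]
    centroids = fit_centroids(reduced, labels)
    hits = sum(predict(centroids, r) == y for r, y in zip(reduced, labels))
    return hits / len(labels)

def ablation_drop(rows, labels, removed):
    """Accuracy with all features minus accuracy after removing `removed`."""
    all_features = list(range(len(rows[0])))
    kept = [i for i in all_features if i not in removed]
    return accuracy(rows, labels, all_features) - accuracy(rows, labels, kept)

# Toy data: feature 0 determines the label, feature 1 duplicates feature 0,
# and feature 2 is a fixed "noise" column unrelated to the label.
signal = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
noise = [0.3, -0.4, 0.1, -0.2, -0.3, 0.4, -0.1, 0.2]
rows = [[s, s, n] for s, n in zip(signal, noise)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

# Remove one feature at a time, then remove the correlated pair together.
single_drops = [ablation_drop(rows, labels, [i]) for i in range(3)]
group_drop = ablation_drop(rows, labels, [0, 1])

print("single-feature drops:", single_drops)
print("drop for features 0 and 1 together:", group_drop)
```

With this toy data, removing any single feature leaves accuracy unchanged, so one-at-a-time ablation rates even the informative feature as unimportant. Only removing the two correlated features together exposes their effect, which is the grouping step described in the discussion. Scoring on the training data keeps the sketch short; a real comparison would likely use held-out data.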