
>>: Hi, everyone. We might as well go ahead and get started. But before we jump into this next talk, I've been asked to give a couple of announcements. For starters, the session abstracts and speaker biographies are now online on our website.

>>: We can't hear you.

>>: Are we on now?

>>: Yes.

>>: Thank you. Thank you.

So again, two announcements. One is that the speaker biographies and the talk abstracts are now online on our website. And second, as many of you probably already know, those of you attending who are faculty will be given access to Azure for sample development and whatnot. If you've already registered through the website, you have access to this and instructions on how to get it. If you registered manually in the last week, after the registration site closed, please see somebody out at the desk and they'll get you on the list of people who can get access to Azure for evaluation purposes.

Okay. In the next 90 minutes we'll be telling you about Azure for research. My name is Roger Barga. My colleague Jared Jackson will be presenting with me. Other contributors to this talk include Nelson Araujo, Dennis Gannon, and Wei Lu.

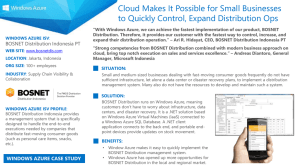

Our team is responsible for working with external researchers, developing services, and providing data sets for Azure for research. So our day job is really working with researchers to leverage our Cloud computing platform to advance research. We are the new kids on the block. Earlier today you heard the talk from Depoch on Amazon, and you also heard about Google. Relatively speaking, our Cloud platform is still in its formative stages, having been released less than a year ago.

So we are going to spend a fair amount of time, in fact the first 35 minutes, talking about Windows Azure, giving an overview of what it is, because we would like you to leave today understanding how our platform as a service differs from infrastructure as a service and where it's trending.

We will also talk a little bit about Microsoft and Cloud computing: how we see Cloud computing, not definitions, but the kind of investments we are making.

Then I'm going to turn it over to Jared, who is going to talk about research applications that we have built on Azure. He'll run some demos of real code running on Azure for some of the research services our team is starting to build, and then drop down and talk about how they are built, taking a closer technical look at Azure. And then we'll close by talking about our Cloud research engagement initiative, where our group is offering researchers access to Azure through government funding agencies.

And it's an international program. So I'll tell you what we are doing with the NSF and what our team is offering. But again, this is just part of an international program which is going to be extended to countries around the world. So let's jump in.

Microsoft and Cloud computing. Clearly, if you've gotten any sense from the talks today, it's that computing and science are tightly coupled.

In fact, we are building a scientific infrastructure, and a society, that is tightly coupled with computing infrastructure. If you look at the major advances that have taken place over the last ten or 15 years, you see that science and computing power are really tightly coupled. You can't help but ask yourself: with the advances taking place in Cloud computing, multicore computing, and, further in the future, quantum computing, what will future research look like? This is an area in which Microsoft is investing heavily, trying to understand it and to play an active role in it. And, of course, the Fourth Paradigm book has been mentioned several times; in it, people look at and envision what that future looks like. But the missing chapters of that book are the technology solutions that actually deliver the visions of those authors. So this is a very active area for us to be involved in.

And a slide that Dan Reed uses a lot in his presentations, which I happen to like, talks about the Sapir-Whorf hypothesis: that an individual's thinking and ability to reason are a function of the language they have. We can see analogs in scientific computing. The tools you have to compute with and the amount of data you have to manipulate change research; they affect the kinds of research questions you can ask and how you go about asking them. And again, we see many examples today: multi-core, ubiquitous sensors, clouds, cloud services. So you ask, what lessons can we draw, and what are probably going to be the big macro influencers in this space? You can't help but acknowledge the pull of economics. Even though you've heard some wonderful talks today about clouds, there has been very little talk about the economics. And look at how economics has shaped the way we've leveraged technology: Moore's Law really favored consumer commodities.

Commodity hardware. Economics drove enormous improvements in commodity hardware, and specialized mainframes and clusters faltered, no matter how superior they were. Because of the economic draw of commodity hardware, easy and very cheap to deploy, we changed our algorithms and the way we conduct research to take advantage of this economic trend. Today's economic drivers are many-core and multi-core processors, accelerators, and Cloud computing.

And so this really is going to drive change. You may have a better algorithm that works well on an MPI cluster today, but as cloud computing gains an economic advantage, you'll see researchers start to think about how to leverage that platform to carry out their research.

And in the process of doing that, be able to ask different questions.

And just, you know, for scale, this is very dated information from a paper that James Hamilton and others published on economies of scale. What you're seeing here in this column is the cost for a small data center for networking, for storage, and for administration. And this is the cost for a large-scale data center, our Hotmail services -- or MSN, excuse me. Then the actual costs, and the ratio of the costs, the cost savings. Those are incredible ratios of improvement in our data centers. And the real killer here is administration, where an individual admin may only be able to administer 140 machines in a midsize data center you might find at a university. But at cloud scale, we are trending well over a thousand servers per person, effectively reducing that high overhead.
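The administration row is simple arithmetic, and a short sketch makes the ratio concrete. It uses only the two figures quoted above (140 servers per admin versus roughly 1,000); the annual admin cost is a made-up illustrative number, and the function names are hypothetical.

```python
# Hypothetical sketch of the administration arithmetic quoted in the talk.
# 140 and 1,000 servers per admin come from the talk; the admin cost is made up.

def scale_ratio(small_dc: float, large_dc: float) -> float:
    """How many times more servers one administrator covers at cloud scale."""
    return large_dc / small_dc

def admin_cost_per_server(annual_admin_cost: float, servers_per_admin: float) -> float:
    """Administration cost attributed to each server per year."""
    return annual_admin_cost / servers_per_admin

MIDSIZE_SERVERS_PER_ADMIN = 140    # midsize, university-style data center
CLOUD_SERVERS_PER_ADMIN = 1000     # lower bound of "well over a thousand"
HYPOTHETICAL_ADMIN_COST = 100_000  # illustrative annual cost of one admin

ratio = scale_ratio(MIDSIZE_SERVERS_PER_ADMIN, CLOUD_SERVERS_PER_ADMIN)
midsize = admin_cost_per_server(HYPOTHETICAL_ADMIN_COST, MIDSIZE_SERVERS_PER_ADMIN)
cloud = admin_cost_per_server(HYPOTHETICAL_ADMIN_COST, CLOUD_SERVERS_PER_ADMIN)
print(f"{ratio:.1f}x more servers per admin; ${midsize:.0f} vs ${cloud:.0f} per server per year")
```

With these inputs it prints `7.1x more servers per admin; $714 vs $100 per server per year`; whatever the real salary, the per-server saving tracks the same ratio.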

And just as a matter of scale, this is just one of our data centers, a single one; it is 11.5 times the size of a football field. Patterson was talking earlier today about how many servers could potentially fit in one of these facilities. If you add up the raw compute, the raw disk, and the raw memory in any one of those facilities, it's actually greater than all that you would find in the Department of Energy facilities we use for research, which we think of as the technical computing backbone of our country. So the scale and the economics of these things are truly staggering.

And we've seen this picture before: the nice angled parking for the containers to back in. What they are really connecting to are these units, in blue in the lower right-hand corner, that bring power, cooling, and networking to any one of these containers. And the beauty of this is that within a few hours, certainly within a day, of backing in a container, all of the servers in that container, 2,500 to 5,000 of them, are up and running as compute power. So just the ability to deploy that many servers that quickly is really quite striking.

What people might not appreciate is just how fast this is unfolding.

Microsoft is already in its third generation of data center design, which is the Chicago container facility. We are doing away with a lot of the air-conditioned, climate-controlled computer rooms. We've now gone to containers with just a concrete shell.

We are actually driving and pioneering even more changes. This is Christian Belady and his data center tent, where they are moving data centers out into ambient temperatures and running them without cooling to try to bring the PUE, or power usage effectiveness, closer to one. You're not paying to cool the room; instead, you're trying to have a wider operational range.
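PUE is the ratio of total facility power to the power drawn by the IT equipment itself, so a value of 1.0 would mean no energy spent on cooling or power distribution. A minimal sketch, with hypothetical load figures chosen only to show why removing chillers pushes the number toward one:

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw

# Hypothetical loads, for illustration only.
IT_LOAD_KW = 1000.0                  # servers, storage, and network gear
CHILLED_KW = IT_LOAD_KW + 700.0      # conventional cooling and distribution overhead
AMBIENT_KW = IT_LOAD_KW + 150.0      # most mechanical cooling removed

print(round(pue(CHILLED_KW, IT_LOAD_KW), 2), round(pue(AMBIENT_KW, IT_LOAD_KW), 2))
```

Widening the operating temperature range attacks the numerator only; the IT load stays the same.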

This is Rick Raschow, it's not a very good picture, standing in front of a cluster made up of Atom processors, very low-energy processors, packed into a rack.

And in our fourth generation, and this is a mock-up of our fourth-generation data center, we are even doing away with the two- or three-hundred-million-dollar building, the shell, if you will. We are simply putting the containers themselves outside, stacking them without any shell around them, and selectively putting backup systems or batteries on individual containers to back them up.

So we don't have the large diesel generators. This will drive even more economies of scale and even more savings in the data center. So it's going to be an area of rapid innovation and improved cost savings over time.

But enough of that. We are going to be talking about benchmarks and performance, but if you were walking around in one of these data centers, you might ask, what's really different about one of these facilities relative to the data center we have at our university? So we picked a few things to contrast: node and system architectures, communication fabrics, storage systems, and reliability and resilience. Then we'll transition into programming models and talk about Azure.

First off, if you look at the node and system architecture of a data center relative to a cluster, there are really very few differentiating factors between them. There are Intel Nehalem processors, AMD Barcelona or Shanghai. There are multiple processors per node, per rack, and a big chunk of memory on the nodes. So at the processor level they are actually indistinguishable.

Where things start to get really interesting is the communication fabric. For HPC clusters, this is actually a graph from the TOP500. What you're seeing is, over time, what interconnect technology the TOP500 systems use. I realize this isn't easy to see, but you can download the slides. What we are seeing over time is basically Cray interconnects, Gigabit Ethernet, and InfiniBand. This is what you're going to find in a high-performance computing cluster. The bisection bandwidth is great, and you have an almost flat network, so point-to-point communication costs the same across the entire cluster and everybody can talk to everybody. Hence, programming models like MPI work very well: every node can take a little bit of work, do a little bit of computation, share it with others, and they continue to march along and perform the processing.

But it's quite a bit different in a data center. In a data center, we might have a rack of 20 to 40 processors, and that number is increasing rapidly. At the top of the rack, we'll find a top-of-rack gigabit Ethernet switch. At the second level we see ten-gigabit Ethernet, but it's heavily partitioned. In fact, there's a lot of oversubscription on this network, such that if all the machines try to talk to each other at a very fine granularity, the latency is going to be high and the collisions are going to be high. Even at the next layers, layer two and three, you have continued oversubscription, such that uniform point-to-point communication is no longer possible in these systems. Instead, you want to pull off a large piece of work and work on it for a long period of time before you have to go off that node to exchange information. Because again, if you're not under the same top-of-rack switch, the communication penalties are going to be high.
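Oversubscription is the ratio of worst-case demand below a switch to the uplink capacity above it, and the ratios compound across tiers. A minimal sketch; the port counts and link speeds are hypothetical, picked to resemble the rack described above rather than any specific Microsoft design:

```python
def oversubscription(ports: int, port_gbps: float, uplink_gbps: float) -> float:
    """Worst-case demand below a switch divided by its uplink capacity."""
    return (ports * port_gbps) / uplink_gbps

# Hypothetical tier 1: 40 servers at 1 Gb/s under one top-of-rack switch with 10 Gb/s up.
tor = oversubscription(40, 1.0, 10.0)         # 4:1
# Hypothetical tier 2: 20 racks' 10 Gb/s uplinks sharing 40 Gb/s toward the core.
agg = oversubscription(20, 10.0, 40.0)        # 5:1
end_to_end = tor * agg                        # ratios multiply across tiers
cross_rack_gbps_per_server = 1.0 / end_to_end # what each NIC gets if everyone talks at once

print(tor, agg, end_to_end, cross_rack_gbps_per_server)
```

Under these assumptions a server that gets its full 1 Gb/s inside the rack sees about 50 Mb/s when every server communicates across racks at once, which is why fine-grained, all-to-all exchange suffers.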

Which is why, during some of the questions today, people were asking about MPI codes. This is one reason they simply don't work well unless you coincidentally get everything under the same rack, and, as you'll see later, for reliability purposes we are deliberately going to try not to put them under the same rack. So there's a huge difference in the communication fabric. But again, we are coding around that to take advantage of commodity switches, commodity routers, et cetera.

And second, storage systems. In HPC, you might have local scratch space per node, but for the most part local storage per node is nonexistent. Instead, your secondary storage is a SAN or distributed file system, and you have petabytes of secondary storage.

In contrast, in the data center, much like the Google infrastructure you've been hearing about, you have terabytes of local storage per compute node, which makes for great local storage if you want to bring over a large piece of data to work with. Your secondary storage is a bunch of disks, and the answer there is going to be eventual consistency and replication; again, you're not seeing any SANs in these. And tertiary storage is absolutely nonexistent. You have no big tape backup. You have no ability to do checkpoints.

And moving on, reliability and resilience. In HPC, especially if you look at the trends over time, codes take periodic checkpoints because something is going to fail, and most of the codes are fairly fragile.

If something fails, you roll back to your last known good checkpoint, restore it, and continue on.

But over time, as we get more and more compute nodes, the mean time between failures, even while you're trying to boot the machine, is approaching zero. The checkpointing frequency has been increasing, and the I/O overhead, the time it takes to write a checkpoint, is going from 20 to 30 to 40 minutes. Checkpoints are simply not scaling.
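Why checkpointing stops scaling can be made concrete with Young's classic first-order approximation. This formula is not from the talk; it is swapped in here as a standard back-of-envelope model. With checkpoint cost C and mean time between failures M, the best interval is about sqrt(2·C·M), and the fraction of time lost is about sqrt(2·C/M). The approximation assumes C is much smaller than M, and the machine figures below are hypothetical.

```python
import math

def young_interval(checkpoint_cost: float, mtbf: float) -> float:
    """Young's first-order optimal time between checkpoints (same units as inputs)."""
    return math.sqrt(2.0 * checkpoint_cost * mtbf)

def overhead_fraction(checkpoint_cost: float, mtbf: float) -> float:
    """Approximate fraction of time lost, evaluated at Young's interval:
    time writing checkpoints (C / tau) plus expected rework (tau / 2M)."""
    tau = young_interval(checkpoint_cost, mtbf)
    return checkpoint_cost / tau + tau / (2.0 * mtbf)

# Hypothetical machines: checkpoint cost in hours, MTBF in hours.
for cost_h, mtbf_h in [(20 / 60, 24.0), (40 / 60, 8.0), (40 / 60, 2.0)]:
    print(f"C={cost_h * 60:.0f} min, MTBF={mtbf_h:g} h: "
          f"checkpoint every {young_interval(cost_h, mtbf_h):.2f} h, "
          f"~{overhead_fraction(cost_h, mtbf_h):.0%} of time lost")
```

As the checkpoint cost grows and the MTBF shrinks, the lost fraction climbs toward the point where the machine does little useful work, which matches the trend described above.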

In a data center, everything is loosely consistent. Ken Birman, of course, would like to convince us otherwise; he gave a great talk. But the system is designed to recover from failure automatically. Failures are going to happen, and the system is designed to pick up from failure and resume computation. So it's a very different model for resiliency and reliability.

And then finally, programming models and services, which leads us into what we are talking about today: Azure.

And this is kind of an extension of what David Patterson was talking about: you really see cloud programming models, and the systems that support them, as a spectrum today, with discrete points we can point out, like Amazon Web Services. There, your VM looks very much like the hardware you have today. There's no limit on your application model, but the user must implement other services to achieve scalability and failover.

At the other extreme, we have very highly constrained models with a heck of a lot of automation for the verticals that they are targeting.

And somewhere right here in the middle is Azure, which is our platform as a service, and that's what we'll be talking about for the next 30 minutes.

So let's start with Windows Azure, talk about a few fundamentals and the kinds of services it provides at run time, before looking at the demos.

So we start with a bunch of machines in a data center. And yes, they are probably going to come in through a container that's been backed up to one of those power supplies I showed you earlier. What differentiates Azure from other models is something called the fabric controller, which is highly available. It's a Paxos cluster, and the fabric controller owns all of the hardware inside the data center. It burns the hardware in from the moment it's backed in. It knows the performance profile of each individual piece of hardware, be it a CPU, a piece of memory, a router, or a load balancer. It understands what that performance profile is, and it's going to monitor it.

So the first thing it does is install an optimized hypervisor and then put in a host virtual machine, which is Windows Server 2008.

Then it installs guest VMs, which is where your code runs. It can install seven of them, or eight to be accurate, on an individual node; that's really a function of how many cores you have available. And as you'll see, we can slice up a node, a unit of computing resource, in various ways. At a minimum, we could give you one core, a slice of the CPU, which is a 1.5 to 1.7 gigahertz x64. You get your fair share of the memory, which right now is 1.7 gigabytes, a hundred-plus megabits per second of the network, which is a core's slice of the network, and local storage, which is again a fair slice, an equal slice of the disks that you have available.

Or you can say, I want a very large VM, in which case you get everything that's on that node: eight cores, 14 gigabytes of memory, and two terabytes of local storage. And on top of that we are going to be running the .NET Framework on Windows Server 2008 Enterprise Edition. That's the basics.
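The "fair slice" idea can be sketched as proportional division of one node. The node totals (8 cores, 14 GB, 2 TB) are the ones quoted above; the strictly proportional rule is an assumption for illustration, since the real service may reserve resources for the host VM (note that 14/8 = 1.75 GB, slightly above the 1.7 GB quoted).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Resources:
    cores: int
    memory_gb: float
    local_disk_gb: float

# Full node as described in the talk: 8 cores, 14 GB memory, 2 TB local disk.
NODE = Resources(cores=8, memory_gb=14.0, local_disk_gb=2000.0)

def fair_slice(node: Resources, cores: int) -> Resources:
    """Hypothetical model: a VM with k cores gets k/N of every node resource."""
    if not 1 <= cores <= node.cores:
        raise ValueError("core count must be between 1 and the node's core count")
    share = cores / node.cores
    return Resources(cores, node.memory_gb * share, node.local_disk_gb * share)

print(fair_slice(NODE, 1))
print(fair_slice(NODE, 8))
```

The one-core slice works out to 1.75 GB of memory and 250 GB of disk, and asking for all eight cores returns the whole node.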

But then we actually install the Azure platform. This is where things start to differ from just being able to put up a VM, be it Linux or Windows 2008, with your code inside of it. Instead, we are saying, look, we want you to tell us what the requirements of your code are. We want you to break it up and give us some hints about what it's supposed to do. And we'll be talking about this in two pieces: first compute, and second storage.

For the Windows Azure compute service, there's our fabric controller and the fabric you're running on, which is all the machines you need access to.

We differentiate your code into web roles and worker roles. Over time we'll see more roles introduced; a role is a way of packaging up your code and saying how you want it deployed and what you want it to be able to do. Your web role is really what's going to be talking to your customers. It's where you're going to run ASP.NET, WCF, any code that's going to talk to the outside world.

There's a load balancer that will be stuck in front of that. So if you have a very large web presence and want to support thousands of users, you're going to have multiple copies of these web roles interacting with the outside world, with a load balancer sitting in front of them that people can send HTTP requests to.

The back end is where the work gets done: the worker roles. That's where the main-line code goes. Standing between these is something called an agent, which we can talk about in the Q and A. That's what monitors the health of your installation, your code that's running on the VM, and sends that back to the fabric controller to let it know how things are running. It's monitoring the hardware and it's monitoring your software.

And this is where we'll throw in the pretty pictures of the queues.

Let me go ahead and just stretch this out.

So work comes into the web role. The first thing it's going to do is bundle the work up. It could partition the work and break it up into multiple units: you may have given it a large chunk of work, and what your web role may do is tease that apart into multiple chunks. Each one of those chunks would go into the queue as a separate request: this is work to be done. You'd have a farm of worker roles monitoring that queue looking for work to do, and the moment a piece of work appears in the queue, they'll pull it off and start executing it. And again, because they are separate, different instances running, you can scale the two out independently, depending on how compute intensive your work is or how chatty the external interface is. In fact, you can take this to a large extent and partition your main-line code into multiple stages with queues in between, because you may have a step in your pipeline that's very compute intensive, and you can partition that work out. You'll have tens of workers doing that divide and conquer of the work. Then you might have a final piece of work that glues all those results back together to make a complete result for the user. And you'll see that in some of the demo applications we'll show you later.
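The split, queue, work, and merge pattern just described can be sketched in-process. This is not the Azure API: Python's `queue.Queue` stands in for a durable Azure queue (it is neither durable nor shared across machines), threads stand in for worker role instances, and summing squares is a placeholder computation.

```python
import queue
import threading

def web_role_split(job: list, chunk_size: int) -> list:
    """Front end: tease a large job apart into independent chunks."""
    return [job[i:i + chunk_size] for i in range(0, len(job), chunk_size)]

def worker(work_q: queue.Queue, result_q: queue.Queue) -> None:
    """Back end: pull chunks until a None sentinel arrives, emit partial results."""
    while True:
        item = work_q.get()
        if item is None:
            break
        chunk_id, chunk = item
        result_q.put((chunk_id, sum(x * x for x in chunk)))  # placeholder computation

def run_job(job: list, chunk_size: int = 4, n_workers: int = 3) -> int:
    work_q: queue.Queue = queue.Queue()
    result_q: queue.Queue = queue.Queue()
    chunks = web_role_split(job, chunk_size)
    threads = [threading.Thread(target=worker, args=(work_q, result_q))
               for _ in range(n_workers)]
    for t in threads:
        t.start()
    for chunk_id, chunk in enumerate(chunks):
        work_q.put((chunk_id, chunk))
    for _ in threads:
        work_q.put(None)  # one stop signal per worker
    for t in threads:
        t.join()
    # Final stage: glue the partial results back together into one answer.
    partials = [result_q.get() for _ in range(len(chunks))]
    return sum(value for _, value in sorted(partials))

print(run_job(list(range(10))))
```

Because the front end and the workers only share the queue, `n_workers` can be changed without touching the splitting code, which is the independent scaling described above.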

But again, you have durable queues standing between them. The queues help you decouple the parts of the application so you can scale them independently. They also help with resource allocation. You can even have different priorities of users: for a high-priority paying customer, you might have a hundred workers responding to requests; for a freebie service, you may have just one worker responding. So again, you can break your work apart in different patterns, assign them different workers, and glue things together with queues.

And queues mask failures. Again, we are going to assume that failures are going to happen, either due to your own code or simply because we are moving workers around the data center; you have to design for failure.

The idea is that when a worker picks up a piece of work out of a queue, and this is a durable queue, the item doesn't really get deleted until the work gets completed. If this worker were to fail, the item will reappear in the queue after a specified period of time, and some other idle worker could pick it back up and resume computation against that piece of work. So again, it masks failures in our system. And more recently we even have TCP: we have IP connections between individual workers, so you don't have to go through queues. As you might imagine, writing to a durable queue is a very expensive operation; the overheads are high. If these workers need to exchange information, we now have point-to-point communication, but it's not durable, so you're exposing yourself: should something fail, you're going to lose that work.
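The "reappear after a specified period" behavior is usually called a visibility timeout. A minimal in-memory simulation, not the Azure storage client: the class and method names are hypothetical, time is passed in explicitly so the scenario is deterministic, and the receipt handling a real service uses is omitted.

```python
import itertools
from typing import Optional, Tuple

class DurableQueueSim:
    """In-memory stand-in for a queue with a visibility timeout (not the Azure SDK)."""

    def __init__(self, visibility_timeout: float) -> None:
        self.visibility_timeout = visibility_timeout
        self._ids = itertools.count()
        self._messages: dict = {}  # msg_id -> [body, visible_at]

    def put(self, body: str, now: float) -> int:
        msg_id = next(self._ids)
        self._messages[msg_id] = [body, now]
        return msg_id

    def get(self, now: float) -> Optional[Tuple[int, str]]:
        """Hand out the oldest visible message and hide it; it is NOT deleted."""
        for msg_id in sorted(self._messages):
            body, visible_at = self._messages[msg_id]
            if visible_at <= now:
                self._messages[msg_id][1] = now + self.visibility_timeout
                return msg_id, body
        return None

    def delete(self, msg_id: int) -> None:
        """Called by the worker only after the work is complete."""
        self._messages.pop(msg_id, None)

q = DurableQueueSim(visibility_timeout=30.0)
q.put("chunk-7", now=0.0)
claimed = q.get(now=1.0)       # worker A takes the item, then crashes without deleting
hidden = q.get(now=10.0)       # None: still invisible, so nobody else takes it
reclaimed = q.get(now=31.0)    # the timeout expired; worker B picks the item up
q.delete(reclaimed[0])         # worker B finishes and deletes it for good
```

The trade-off in the talk shows up directly: the item survives worker A's crash, at the cost of a durable write per message and a delay of up to one timeout before retry.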

Okay. So moving on to storage. There's actually one element missing here, but we are not talking today about [inaudible] Azure; we are just looking at Azure storage. Again, we have a load balancer sitting in front of all of the storage infrastructure. There's a REST API to all of these, in addition to a .NET library, which I think Jared will also go into more. We have blobs, queues, and tables. There's also the notion of drives, which are NTFS volumes built on top of blobs; it's an abstraction on top of blobs.

So what's different about these? HTTP requests come in. Blobs are ideal for storing a large image or a large video.

We have our drives, tables, and queues. Blobs are really just a very simple interface for storing named files along with their metadata.

There's a certain amount of metadata you can store with blobs, and Jared will go into more detail on blobs.

Drives are durable NTFS volumes. The beauty of drives is that if an individual worker writes to them, all the writes are backed by durable storage. So if the worker fails and another one comes up, you can immediately bring that state back from the drive.

Tables are not relational tables; they are entity-based tables, very similar to Bigtable, if you're familiar with that abstraction. They give you the ability to store large amounts of data in these tables and index them over their properties.
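An entity table stores schema-less property bags addressed by a key pair; Windows Azure tables use `PartitionKey` and `RowKey` for this. The sketch below is a hypothetical in-memory stand-in, not the Azure client library. It shows that two entities in the same table can carry different properties, and it implements property filtering as a plain scan within one partition.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

class EntityTableSim:
    """Hypothetical in-memory entity table; entities are keyed by (PartitionKey, RowKey)."""

    def __init__(self) -> None:
        self._partitions: Dict[str, Dict[str, dict]] = defaultdict(dict)

    def upsert(self, entity: dict) -> None:
        """Insert or replace an entity; every entity needs both key properties."""
        pk, rk = entity["PartitionKey"], entity["RowKey"]
        self._partitions[pk][rk] = dict(entity)

    def get(self, pk: str, rk: str) -> Optional[dict]:
        """Point lookup by the key pair."""
        return self._partitions.get(pk, {}).get(rk)

    def query(self, pk: str, predicate: Callable[[dict], bool]) -> List[dict]:
        """Filter one partition on any property (a simple scan in this sketch)."""
        rows = self._partitions.get(pk, {})
        return [rows[rk] for rk in sorted(rows) if predicate(rows[rk])]

table = EntityTableSim()
table.upsert({"PartitionKey": "run-1", "RowKey": "seq-001", "hits": 12})
table.upsert({"PartitionKey": "run-1", "RowKey": "seq-002", "hits": 0, "note": "no match"})
matched = [e["RowKey"] for e in table.query("run-1", lambda e: e["hits"] > 0)]
```

Unlike a relational table, nothing forces `seq-001` to have a `note` column; the schema lives in each entity.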

And queues, again, are reliable messaging infrastructure. And again, there are .NET and RESTful interfaces; I think I already said that. Data can be accessed either by Windows Azure apps or by external parties.

What's nice about that is you can cook data products on a regular basis: a request comes in, a data set comes in, you have the worker roles process the data and then put the results back in a blob or a table. Other applications that are not running on Azure can connect to the REST interface and pull down that data independently of going through Azure.
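Outside parties reach a blob through an ordinary HTTPS URL of the form `https://<account>.blob.core.windows.net/<container>/<blob>`. A minimal sketch that only builds the address; the account, container, and blob names are hypothetical, and a private container would additionally need an authenticated request, which is not shown.

```python
from urllib.parse import quote

def blob_url(account: str, container: str, blob_name: str) -> str:
    """Address of a blob in the Windows Azure blob service (names are percent-encoded)."""
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name)}"

# Hypothetical names for a data product cooked by worker roles.
url = blob_url("myresearchdata", "results", "run 42/summary.csv")
print(url)
```

A non-Azure client could then fetch a publicly readable blob with a plain HTTP GET, for example `urllib.request.urlopen(url)`, without going through any Azure compute.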

And then there's the development environment. You have a complete simulated development environment on your machine, on your workstation, such that it looks like you're writing to a blob, a table, or a queue. You can compile the application, build your worker roles and web roles, test your application locally, and then press the deploy button, which moves it into the cloud, into a staging area where you can test it out. Finally, when you're ready to go live, you can say, go ahead and make this cloud service live.

So you ask, what's the value add? I've partitioned my data, I've put my code into worker roles and web roles; what is Azure really adding for me? Well, the whole idea is that it's intended to be a platform that's scalable and available. Services are always running. We have to expect that there are going to be rolling upgrades and downgrades over time, and the service is intended to run throughout the year.

You may want to upgrade a portion of it, and faults could happen. Failure of any node is expected, so state has to be replicated. And services can grow very large, so we have to have some way of efficiently managing all of that state.

So one of the things we introduced in the fabric controller, and it's going to generalize over time, is a model where you tell the fabric controller exactly what you need: what is my SLA? The fabric controller then uses the hardware available to it to deploy your application accordingly.

So I think I've already said most of this. Again, it's a highly available Paxos cluster. But one of the things you can specify is fault domains. You can say, look, I realize this data center is large, and my service can't afford any failures, so I want my instances on independent fault domains.

That means I want you to put the instances of my worker roles and my web roles on orthogonal pieces of hardware throughout the data center: different power supplies, different networks, different clusters, everything. And you can specify exactly how many fault domains you want. A fault domain is defined in terms of the hardware, such as a compute node or a rack of machines. And when Azure decides how to deploy your service, and even as it manages the service over time while the system is running, it will instantiate it on different pieces of hardware throughout the data center.

So again, we can say, I want ten front ends, ten web roles, across two fault domains, and we guarantee that they're going to be on different, isolated sections of the data center.
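The ten-instances-across-two-fault-domains example can be sketched as a round-robin spread. The assignment rule here is an illustrative assumption, not the fabric controller's actual placement algorithm, and the instance names are hypothetical; it shows why losing one whole fault domain, such as a rack, still leaves half the front ends serving.

```python
from collections import Counter

def place(n_instances: int, fault_domains: int, update_domains: int) -> dict:
    """Hypothetical round-robin spread: instance i -> (fault domain, update domain)."""
    return {f"web{i}": (i % fault_domains, i % update_domains) for i in range(n_instances)}

def survivors(placement: dict, failed_fault_domain: int) -> int:
    """Instances still running if one whole fault domain goes dark."""
    return sum(1 for fd, _ in placement.values() if fd != failed_fault_domain)

placement = place(10, fault_domains=2, update_domains=5)
per_fault_domain = Counter(fd for fd, _ in placement.values())
print(per_fault_domain, survivors(placement, failed_fault_domain=0))
```

Fault and update domains are assigned independently here, mirroring the point that the two are orthogonal.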

Similarly, we can specify update domains. This is a service I expect to update throughout its lifetime, but I can't afford to bring my whole service down. In fact, I may need to have an old copy running throughout the entire year, or during a certain period of time while the new service is being deployed, tested, and even used by new users coming in. So you can specify how many update domains you want as well. This is orthogonal and complementary to fault domains.

What this means is that if you specify two update domains and later push a new version of your service, Azure will wait until activity quiets down in one update domain, stand up the new version of your service there, route new traffic and new requests to it, and wait until all the activity in the other update domain is quiet before continuing to roll out the update across all the domains.
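A rolling upgrade over update domains can be sketched as draining and upgrading one domain at a time while the rest keep serving. This is a deliberate simplification of the behavior described above: draining is instantaneous, and the function name and data shapes are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def rolling_upgrade(placement: Dict[str, int], current: Dict[str, str],
                    new_version: str) -> Tuple[Dict[str, str], List[int]]:
    """Upgrade one update domain at a time.

    placement maps instance name -> update domain. Returns the final versions and,
    for each step, how many instances kept serving while that domain was upgraded."""
    versions = dict(current)
    by_domain: Dict[int, List[str]] = defaultdict(list)
    for name, ud in placement.items():
        by_domain[ud].append(name)
    serving_during_step = []
    for ud in sorted(by_domain):
        draining = by_domain[ud]
        # Traffic goes only to instances outside the domain being upgraded.
        serving_during_step.append(len(placement) - len(draining))
        for name in draining:
            versions[name] = new_version
    return versions, serving_during_step

placement = {"web0": 0, "web1": 1, "web2": 0, "web3": 1}
versions, serving = rolling_upgrade(placement, {n: "v1" for n in placement}, "v2")
```

With two update domains, half the instances are always available during the rollout; more update domains shrink the slice that is offline at any moment.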

So again, it's giving you a lot of built-in functionality for how you manage your service, how you hide faults and how you isolate yourself from both updates and failure that might occur over time.

And I think this is just a long-winded explanation of how it does that and how it combines all those requests. And again, we can expect to see more SLAs you can specify when you deploy your service, the kind of behavior you want, perhaps even the ability to co-locate nodes under the same rack or within the same reachability district for performance. But that's not quite there yet.

Okay. And again, because there's an agent installed everywhere, the fabric controller is actually monitoring health. Some of that is the health of the hardware: is the network switch working properly? Are the router and the load balancer working properly? If it detects that the operating parameters are moving outside the normal envelope, it will start to actively migrate your services off that system. So if it detects that a role has died or is unhealthy, it can reboot your VM, your worker role, and restart your code. If it detects that the hardware is unhealthy, it will take it out of the whole array, out of the fabric, and start your service on a different part of the data center.

And if a failed node can't be recovered, it migrates your service to a completely different part of the data center.
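The recovery decisions just described can be summarized as a small policy function. This is a hypothetical sketch of the logic as stated in the talk, not the fabric controller's implementation; in particular the `max_restarts` threshold for giving up on in-place restarts is an invented parameter.

```python
from enum import Enum

class Action(Enum):
    NONE = "none"
    RESTART_ROLE = "reboot VM and restart role code in place"
    MIGRATE = "start the instance elsewhere in the data center"

def decide(role_healthy: bool, hardware_healthy: bool,
           restart_failures: int, max_restarts: int = 3) -> Action:
    """Hypothetical policy: bad hardware -> migrate; unhealthy role -> restart;
    a role that cannot be recovered by restarting -> migrate."""
    if not hardware_healthy:
        return Action.MIGRATE
    if not role_healthy:
        return Action.RESTART_ROLE if restart_failures < max_restarts else Action.MIGRATE
    return Action.NONE

print(decide(role_healthy=False, hardware_healthy=True, restart_failures=0))
```

Keeping hardware checks ahead of role checks reflects the ordering in the talk: a node the controller no longer trusts is taken out of the fabric regardless of how the code on it is doing.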

Now, FYI, something they are working on is the ability to deploy any VM on Azure, along with a wire protocol for writing an agent, so you can report the health of your VM back to the fabric controller and it will manage it for you as well.

So, some key takeaways from this first part. Cloud services do have some specific design considerations: always on, distributed state, large scale, fault tolerance. And just because you have a data center backing you up does not mean your application is going to automatically scale. So what Azure is trying to provide, in the form of worker roles, web roles, and the fabric controller, is a way for your application to scale automatically, and for the fabric controller to move things around or take the steps necessary on your behalf to ensure you have a highly available service, along with replicated state, so it can recover from failures for you automatically.

And again, it manages both your services and the underlying servers to mask underlying failures from you.

So with that, I'm going to turn it over to Jared to actually show you what we've been doing for building research services on top of Azure.

Excuse me.

>>: No problem.

So what we are going to do at this point is move away from the general description of the way Azure works and jump into some ways in which we've made it work in the past.

We've got four applications that we have put together, either in our group with Roger or in collaboration with some other groups here at Microsoft, like a scientific group in External Research, as well as some outside collaborators at the University of Washington and some other places.

We'll show demonstrations of the first two, and after the demonstrations I'll talk a little bit about how the other two work.

And then from there, once you get a sense of what Azure can do, I'll describe how we put those together, and hopefully, with that context in mind, we can move on to a deeper dive into how Azure works, so that if you wanted to try it yourself, you'd have some background in how the technology fits together.

So I'm going to jump out of PowerPoint at this point and move over to a demo. Right here. This is Azure Blast. Not everyone here is a biologist, I realize that. I'm not a biologist either. It's amazing how much biology I've had to learn at Microsoft Research; it's not something I expected. But the Blast program is something we didn't write. It's been around for a long time, a very well accepted algorithm for taking input sequences of proteins or DNA, or whatever they might happen to be, and comparing them against a database of known sequences. And it returns what it thinks are the most relevant hits.

It's not like a regular database lookup. It has to account for a lot of little things, like a few characters inserted here or a few taken away there. So it's a pretty involved algorithm, and it's definitely the standard that's used out there.

So we thought to ourselves, well, as the science gets larger and larger, wouldn't it be great if we could take this application as it exists and distribute it across a lot of different nodes? And that's what we've done. So this is a web interface; it's a web role on Azure.

Basically, you select an input file of some sort. In this case, it's a FASTA file. FASTA is a very common format for representing either DNA or protein sequences. In this case, these are DNA sequences. There are ten of them in this file; that's why it's called Pseudo Ten. So I tell it to look at that. BLASTP looks up proteins. BLASTN looks up DNA. BLASTX takes a DNA query, translates it, and compares it against proteins. So I'm going to switch that to BLASTX; that's what we are going to run. There are only ten sequences here, so it doesn't really make sense to use 25 computers on them, so I'm going to take that down to five. I can also select which database I want to use over here, and I'm going to point it to that one; I think it's the only one we have available. And you don't have to do this, but we've also allowed you to log in using your Live ID, so you can submit multiple jobs.
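For anyone who hasn't seen FASTA: each record is a header line starting with `>`, followed by one or more lines of sequence that may wrap. Below is a minimal parser sketch, assuming well-formed input; the records are made up, and this is not the parser Azure Blast itself uses.

```python
from typing import Iterable, Iterator, Tuple

def read_fasta(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (header, sequence) pairs from the lines of a FASTA file."""
    header, chunks = None, []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines between records
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)  # a sequence may wrap over several lines
    if header is not None:
        yield header, "".join(chunks)

example = """>seq1 hypothetical DNA fragment
ACGTACGTAC
GGTTAA
>seq2 another fragment
TTGACA
""".splitlines()

records = list(read_fasta(example))
print(len(records))  # 2
print(records[0])    # ('seq1 hypothetical DNA fragment', 'ACGTACGTACGGTTAA')
```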

Some of these jobs may take a long time, but you can then go back and look just for your jobs and see what the status is of them. I've already logged in, and I will call this our Cloud Futures Run.

So that's all the parameters we need, and I'm going to hit submit. What this does is take a message from the web role and put it in one of the Azure queues Roger talked about. It says at the bottom there that the request was submitted successfully. This is not a long-running job, so I can look at the jobs that are out there. Here is the status. There are some that have been completed. This one is currently running. I can go look at what is happening to this particular job. It's telling me that it has split the sequences into little tasks, several of them are up and running right now, and it hasn't completed yet. It's refreshing in real time there. Eventually, it will end up just like the other jobs here which have already completed. In fact, there it says it has already succeeded; this was a very small input sample. And it's now telling me up at the top here that we did get a result.

It's just a URL. It took the result that Blast would normally have given you had you submitted this ten-sequence input file to it, and stored it in a blob in Azure. This is the URL for that particular blob. So if I wanted to, I could just copy this URL, paste it into my browser, and you get what you expect: I said the output should be in XML, and that's what I got. This particular run isn't all that interesting, because you could have done it very easily with NCBI or EBI, which are the public, freely available versions of this. But imagine: something came up just three weeks ago or so when we were working with the Sage Group, a biology group at the University of Washington. They had just sequenced a new bacterium and didn't know much about it. They had 700,000 sequences that came out of their sequencing machine. They went to NCBI and tried to submit this job there, and every time they did, NCBI kicked them off after a few hours because they were blocking everybody else who wanted to use the service. There was no way they could get this done, and they had a deadline. So they heard about us, came to us, and said, look, can you do this for us? We were able to take their input, spread it out across 50 nodes in this case, and get it done overnight, basically. It was something that couldn't be done before. They had a one-week paper deadline, and they met it. So we were very proud of that.

Let's get back to the PowerPoint. So that's one very typical pattern, right? You have a web role with a web interface that passes work to a worker role, which does the work and reports back using data storage.

A whole different pattern is something we also did in conjunction with the University of Washington, not with their biology side but with oceanography. Hopefully you saw [inaudible]'s talk earlier; this actually came from work that one of his students is doing.

This is COVE. It's like Virtual Earth; it's like Google Maps, only instead of going over land, it goes underneath the ocean. So you take all the data that may come in from that guy in the rowboat sticking his head in the water, bring it up here, put it in their format, and it will visualize it.

What we've done is add a little bit of a service. It's almost transparent that Azure is working here. But what we are saying is that there may be some sort of data product you need to compute, and your computer might not be sufficient for that. It's great for visualizing and displaying the results, but the computation may need to happen as a much larger run with a lot more computing resources.

So we have a back-end service that we've written on top of Azure. We call it Ant Hill. What it does is allow you to submit compute jobs to us. You can look at the whole history; these are all the runs we've ever done for various jobs. If you want to start a new one, what it does here is list all the algorithms it knows how to run on the data that's available. I could pick "advect particles." Again, I'm not an oceanographer either, so I'm not sure what advect particles does, but it's a job that runs for a reasonable amount of time.

Once I've said I want to run this job, it tells me which nodes it knows are available. I can select one, or I can just say use whatever is available, and it will go up and the job is started. Again, you can track the status, or you can look at jobs that have already been completed. So if I go back to this one, there's the one I just launched, and there's one that's already been completed. I won't wait for this one to complete. But the other thing it does is register the data product that comes out of a particular job. There may be only one; in this case, there's only one. You can select that data product and say this is the one I want to see, and it will download it from its source on the web, and then COVE visualizes it. So that's the visualization of it.

It turns out that it's much better to have your own computer, with its graphics card, doing the visualization, and to have the intense computation happening up in the cloud, and this marries the two technologies.

All right. Back to the PowerPoint.

Another project that I didn't personally work on at all, but that has been very interesting, is the Azure MODIS project. In this one, there are some government satellites, put up I believe by NASA, that take visual imagery of the earth as they pass by, and the goal is to stitch all of that data together: hundreds and thousands of very high-density photographs taken from different angles and under different conditions, with the atmosphere and things like that, at the time of each snapshot. So it has to go through several iterations of computation on these images. You've got various computers bringing down the data, and it goes through collection, reprojection, derivation, and analysis until it comes up with something that makes sense to that particular scientist. And again, it's got a web-based front end. I don't know what these results happen to mean, but again, it's a way of taking something that's very computationally intense and bringing it to the scientist without them having to worry too much.

PhyloD is another biological example. This comes out of Dave Heckerman's work. It is probably the first application we worked on; this was well before Azure was even released, when it was just in its early stages.

It concerns HIV vaccination and AIDS. It turns out HIV mutates very heavily, even within a single host. Because of that, it's very difficult to come up with a vaccine, because a vaccine targets particular proteins generated by the virus, and the virus keeps generating different ones, even inside one person.

So what they do is take phylogenetic trees that describe the way the virus mutates, track that, and do an analysis to see which mutations are the most common and which of those viruses are fragile and which are not. A lot of these jobs are between 10 and 20 CPU hours, but the big ones were around 1,000 to 2,000 CPU hours, and it was able to reduce the wall-clock time in an almost linear fashion, so you could get the results almost right away.

All right. And yeah, this image on the right was actually featured on PILA, which in the biology world is big.

So let's talk about how these work. Azure Blast is staged. You have a worker role, and this is a very typical pattern inside Azure.

Basically, you have an initialization function. Sorry.

Anyway, you have a step where the program is deployed, and what that does is take a large database, the database you're trying to search in the Blast case, compress it, and send it up to the storage fabric in Azure. Then each of the workers available for you to run on takes the compressed data, decompresses it, and puts it locally onto that particular worker role. And we didn't rewrite the Blast program; we take the one that's actually used at NCBI and EBI, the one the scientists trust, and we copy that up as well.
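The deployment step can be sketched in a few lines. This is a minimal in-memory illustration, assuming a plain dictionary stands in for blob storage and `gzip` stands in for whatever compression the real service used; the blob name `blastdb/reference.gz` and both function names are hypothetical.

```python
import gzip

blob_store = {}  # hypothetical stand-in for Azure blob storage: name -> bytes

def stage_database(name: str, db_bytes: bytes) -> int:
    """Deployment step: compress the reference database once and upload it."""
    blob_store[name] = gzip.compress(db_bytes)
    return len(blob_store[name])

def worker_startup(name: str) -> bytes:
    """Run by each worker on start-up: fetch the compressed copy and decompress it.
    A real worker would write this to local disk; here it is just kept in memory."""
    return gzip.decompress(blob_store[name])

database = b">ref1\nMKVLAAGIVALLL\n" * 5_000  # toy, highly repetitive reference data
uploaded = stage_database("blastdb/reference.gz", database)
local_copies = [worker_startup("blastdb/reference.gz") for _ in range(3)]  # three workers
```

The design point is that the large transfer happens once, compressed, and every later search reads a local copy.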

So then the user comes along with their input and sends it off to a web role. That web role partitions it into small tasks that each worker role can handle. As the tasks are partitioned, it puts a message for each one on the queue Roger talked about, and the workers take those messages off the queue, do the work, and write the output back up to a blob.
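The web-role/worker-role split above can be sketched with an in-memory queue. This is a minimal sketch, assuming a `deque` stands in for the Azure queue and a dictionary for the output blobs; the batch size of 4, the job name, and the placeholder "search" are hypothetical (the talk mentions tasks of up to 35 sequences). A real deployment would likely keep large inputs in a blob and put only a reference in the message.

```python
import json
from collections import deque

def partition(sequences, batch_size):
    """Web role: split the query sequences into tasks of at most batch_size each."""
    return [sequences[i:i + batch_size] for i in range(0, len(sequences), batch_size)]

def enqueue_tasks(job_id, sequences, batch_size, queue):
    for n, batch in enumerate(partition(sequences, batch_size)):
        # One small message per task: which job, which task, which queries.
        queue.append(json.dumps({"job": job_id, "task": n, "queries": batch}))

def worker_step(queue, results):
    """Worker role: take one message, do the work, write the output."""
    msg = json.loads(queue.popleft())
    output = [f"hits-for-{q}" for q in msg["queries"]]  # placeholder for running Blast
    results[f'{msg["job"]}/task-{msg["task"]:04d}'] = output  # stand-in for an output blob

queue, results = deque(), {}
enqueue_tasks("cloud-futures", [f"q{i}" for i in range(10)], 4, queue)
while queue:
    worker_step(queue, results)
```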

Now, this particular result is just a lot of dots until you understand what's going on. We just did a project where we took ten million sequences. There's a recent nonredundant protein database at NCBI with ten million sequences in it, and what we wanted to do was take those ten million sequences one at a time and search each one against the entire database. Basically you're looking for the sequences that are most similar to each one, so you can find the clustering involved. That's a huge job. When we did a few computations and test runs, we figured it would take a little over ten years on one computer with eight cores. In order to do it, we couldn't even fit it in one data center, the way the allocations worked out with Azure, so we had to spread it over a few data centers. What you're looking at here is one third of the job in one of the data centers, and this is about one eighth of the work. The rows here are the computers we ran on; we had 62 in each of the clusters. And each dot is a task being completed, where a task is a partition, a small set of up to 35 sequences at a time. Now, the interesting thing about this is that these red points are where we had failures. We expected that; from time to time, something would go wrong. Fortunately, Azure picked up: you see the machines don't stop, and there are blue dots after the red dots. The Azure fabric did that for us and picked up from where it left off. We only had to rerun the tasks where these red dots were. The other interesting thing to us was that it was going along, and you can see how dense this is through here; it's running quite quickly. The partitions don't change at all, but you see it start spreading out a little bit more, and toward the end it gets dense again. So there are conditions out there that affect it, and we don't know exactly what they are. Maybe the data fabric itself is being used more heavily by other processes, or some sort of updates are being pushed through. But all in all, it's still a good result.
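One mechanism that lets unfinished tasks get picked up again is the queue's visibility timeout: a dequeued message is hidden rather than removed, and it reappears if the worker doesn't delete it before the timeout. The sketch below mimics that behavior in memory under a logical clock; `VisibilityQueue` and its methods are hypothetical and are not the Azure SDK, and whether this accounts for every recovery in the plot above is an assumption.

```python
class VisibilityQueue:
    """In-memory sketch: dequeued messages stay hidden for `timeout` ticks
    and become visible again unless the consumer deletes them."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.visible = []    # messages ready to hand out
        self.in_flight = {}  # message -> tick at which it becomes visible again

    def put(self, msg):
        self.visible.append(msg)

    def get(self, now):
        for msg, due in list(self.in_flight.items()):
            if due <= now:  # the worker never deleted it: hand it out again
                del self.in_flight[msg]
                self.visible.append(msg)
        if not self.visible:
            return None
        msg = self.visible.pop(0)
        self.in_flight[msg] = now + self.timeout
        return msg

    def delete(self, msg):
        self.in_flight.pop(msg, None)

q = VisibilityQueue(timeout=3)
for t in ["t0", "t1", "t2", "t3", "t4"]:
    q.put(t)

completed, attempts, crashed_once = [], {}, set()
clock = 0
while len(completed) < 5:
    msg = q.get(clock)
    clock += 1
    if msg is None:
        continue  # nothing visible yet; wait for a timeout to expire
    attempts[msg] = attempts.get(msg, 0) + 1
    if msg == "t2" and msg not in crashed_once:
        crashed_once.add(msg)  # simulate a worker dying mid-task: no delete()
        continue
    completed.append(msg)
    q.delete(msg)  # delete only after the output has been written
```

Only the task whose worker failed runs twice; everything else runs once, which matches "we only had to rerun where these red dots were."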

More interesting is what we found in one of the other data centers.

Now, you can see that from about here over, it was very similar to the one we saw before, probably a little more even. But what happened here? What we saw was that every single one of our computers failed at some point during the morning and then came back.

And then something else happened: I think the data center may have caught onto something, or an update was happening, and they decided to shut all our computers down at one time. So you see the vertical line where everything shut down. The nice thing is that everything recovered from that. But it does show you that there are external conditions out there that you just can't plan for. The power may have gone out at the center, or some critical system update may have happened. And we still haven't figured this out either, but some of our very last jobs took more than a day to complete. That may be something inside our own software; these results came in last week, so we are not entirely sure yet. We are still analyzing it.

Anyway, one lesson we learned working with these large data sets is that no matter what system you're working with, you always have to plan for failure, and failure can happen at any time: while you're deploying, in the middle of the run, or while you're writing the output to the store. To accommodate that failure, you have to listen to the log messages that come back from the fabric, and you also have to do your own logging, so that you know where the failures are and can focus your attention there when the job is done.

Test runs are your friend. This last computation probably cost over $20,000, and we ran a few test runs on very small subsets of the data and found lots of problems with our first theory of how we should run it. Those little test runs cost us a hundred dollars. I think we saved quite a bit by not having to run the full job three times at $20,000.

All right. But the great thing is that Azure, or whatever cloud technology you happen to be using, lets you get a lot of your work done in a lot less time. Azure PhyloD actually showed these results.

Basically, as you increased the number of instances, you decreased the wall-clock time pretty much linearly up to a certain point, at which point it pegs out. So there's an optimal number of computers you can run on. With Azure we found that with a hundred computers added to the run, you got a 94 times speedup, so you're getting close to linear speedup.
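The 94x-on-100-instances figure can be turned into an efficiency and an estimated serial fraction. This is a sketch using Amdahl's law and the Karp-Flatt metric as one simple model; the talk doesn't say what model, if any, the team used, and Amdahl's law only captures diminishing returns from a serial fraction, not the extra overheads (such as storage contention) that make real runs peg out.

```python
def efficiency(speedup: float, n: int) -> float:
    """Parallel efficiency: achieved speedup divided by the number of instances."""
    return speedup / n

def karp_flatt(speedup: float, n: int) -> float:
    """Experimentally determined serial fraction (Karp-Flatt metric)."""
    return (1 / speedup - 1 / n) / (1 - 1 / n)

def amdahl_speedup(serial_fraction: float, n: int) -> float:
    """Speedup Amdahl's law predicts on n instances for a given serial fraction."""
    return 1 / (serial_fraction + (1 - serial_fraction) / n)

f = karp_flatt(94, 100)
print(efficiency(94, 100))  # 0.94
for n in (100, 200, 400, 1000):
    # Predicted speedup keeps rising, but each doubling buys less.
    print(n, round(amdahl_speedup(f, n), 1))
```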

All right. I also wanted to point out that, unlike Azure Blast, the Azure Ocean project we are working on operates on a very different principle, a very different pattern. In this case we are using a registry-type system: you say that you have some sort of computation, and it doesn't have to fit any particular model other than that it works on some sort of data to produce some other data, and you register that in a central database. The jobs themselves, the computation, and all the data that comes out of it get registered in that central database. You can then apply this technology to any type of application you're prepared to write.
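The registry pattern can be sketched in memory. In this minimal illustration the class, method, and product names are all hypothetical, not Ant Hill's actual interface: an algorithm only has to turn data into data, and each run registers its output, with provenance, as a new data product that a client such as a visualizer could look up later.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Algorithm = Callable[[List[float]], List[float]]

@dataclass
class ComputeRegistry:
    """Hypothetical central registry of algorithms and the data products they produce."""
    algorithms: Dict[str, Algorithm] = field(default_factory=dict)
    products: Dict[str, dict] = field(default_factory=dict)

    def register_algorithm(self, name: str, fn: Algorithm) -> None:
        self.algorithms[name] = fn

    def register_product(self, product_id: str, data: List[float],
                         algorithm=None, inputs=()) -> None:
        self.products[product_id] = {"data": data, "algorithm": algorithm,
                                     "inputs": list(inputs)}

    def run(self, algorithm: str, input_id: str) -> str:
        output = self.algorithms[algorithm](self.products[input_id]["data"])
        product_id = f"{algorithm}({input_id})"
        # Record provenance with the result so clients can find and trace it later.
        self.register_product(product_id, output, algorithm, [input_id])
        return product_id

reg = ComputeRegistry()
reg.register_product("salinity-sample", [34.1, 34.5, 35.0])  # made-up readings
reg.register_algorithm("demean", lambda xs: [x - sum(xs) / len(xs) for x in xs])
pid = reg.run("demean", "salinity-sample")
```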

All right. I'm probably taking up a lot of time, so what I'm going to do is talk a little bit more about the fundamentals of Azure. We've talked about this already, and Roger brought it up: there are three fundamental types of storage inside Azure: queues, tables, and blobs. And on top of blobs, there are drives.

And on top of this, and outside the scope of this talk since we didn't have time to go into it, there is also SQL Azure, so you can get a relational database and work with it in Azure as well.

Each of these is accessible through very well-known patterns. You can use REST, just doing HTTP or secure HTTPS. You can use .NET APIs to get at them, or you can use something more like an NTFS drive.

When you create an Azure account, you basically sign in with an ID that identifies you. Then you have the flexibility to choose where you want your services to run, based on the data center and what we call a geocenter. You may say you want it to run in the United States, or for one reason or another you may prefer the European or Asian servers. You can choose those things.

Once you've done that, you can choose to have both compute accounts and storage accounts. Your capacity is tied to your account: you have up to a hundred terabytes per storage account.

And when you first sign up, you have a limit of five storage accounts, so that gives you 500 terabytes of data. You can request more, but as we said earlier, if you want more than that, they are going to contact you to make sure you're really going to use it. Otherwise, they don't want to get over-provisioned.

So how does this work? If you want to write some data, you have an account. Inside your account you can create as many containers as you want, so long as you stay within the amount of data you have, that one hundred terabytes. Inside those containers you can have as many blobs as you want, and each one of those has a unique ID: a name which is readily accessible through a REST API. So if I have this picture one that I want to access, all I have to do is type in the account name, .blob.core.windows.net, slash the container name, slash the blob name. Now, there aren't multiple levels of containers; you can't have a container which contains a container. But the interesting thing is that blobs in Azure allow a slash in their name, so you can fake multiple levels of containers by calling your blob, say, myfamily/pic1. The URL then makes it look like there's more structure than there is, but there's really just one level of blob containers.
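The naming scheme and the "fake folders" trick can be sketched locally. The account, container, and blob names below are made up, and `list_virtual_dir` only emulates a prefix listing over names you already have; it does not call the storage service.

```python
from urllib.parse import quote

def blob_url(account: str, container: str, blob_name: str, scheme: str = "https") -> str:
    """Build the address of a blob: account host, then one container, then the blob name.
    Slashes inside the blob name are kept, so it reads like a folder path."""
    return f"{scheme}://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"

def list_virtual_dir(blob_names, prefix: str):
    """Emulate a 'folder' listing: containers can't nest, so filter names by prefix."""
    return sorted(n for n in blob_names if n.startswith(prefix))

names = ["myfamily/pic1.jpg", "myfamily/pic2.jpg", "work/slide1.png"]
print(blob_url("myaccount", "photos", names[0]))
# https://myaccount.blob.core.windows.net/photos/myfamily/pic1.jpg
print(list_virtual_dir(names, "myfamily/"))
# ['myfamily/pic1.jpg', 'myfamily/pic2.jpg']
```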

So that covers blob containers. The other important thing is that you can associate metadata with your account, with the container, and with every single blob. So if you want to add name/value pairs saying, if it's a picture, what's in that picture, you're perfectly welcome to do that. The limit is that you only get eight kilobytes of metadata. So what most people tend to do is say: this picture has information about it, but I've stored that in an Azure table, so go look at this table and this entry. Eight kilobytes is plenty of room for that.
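The "keep a pointer in the metadata, keep the details in a table" approach can be sketched as follows. The size accounting is an assumption (it counts UTF-8 bytes of names and values, which may not match the service's exact rule), a dictionary stands in for the table, and the partition name `photos` and metadata keys are hypothetical.

```python
METADATA_LIMIT = 8 * 1024  # bytes, the per-blob limit quoted in the talk

def metadata_size(meta: dict) -> int:
    # Assumption: approximate the size as the UTF-8 bytes of every name and value.
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8")) for k, v in meta.items())

def build_metadata(blob_name: str, details: dict, table: dict,
                   limit: int = METADATA_LIMIT) -> dict:
    """Keep small descriptions inline; otherwise store them as a table entity
    and leave only a pointer to that entity in the blob's metadata."""
    if metadata_size(details) <= limit:
        return dict(details)
    key = ("photos", blob_name)  # hypothetical (partition key, row key)
    table[key] = details
    return {"details_partition": key[0], "details_row": key[1]}

table = {}
small = build_metadata("pic1", {"subject": "beach"}, table)
large = build_metadata("pic2", {"notes": "x" * 10_000}, table)
```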

There are two types of blobs. There's the block blob, which is targeted at streaming reads and writes and has a limit of 200 gigabytes.

There is also the page blob, which is somewhat new. It's more for random read/write scenarios, and it has a bigger limit: one terabyte.

So really, there is one more level of abstraction below the blob: you can break a blob down into smaller chunks, called blocks, when you upload it. But when somebody goes to download it, they get the whole blob.

So let's illustrate how this might work. Say you have a ten-gigabyte movie you want to put up on Azure. You create a blob; we'll call it blob.movie. Then you have all these blocks, and one by one you put those blocks into your blob until you reach the end and all of your blocks are uploaded. Now, at this particular point, your blob hasn't actually been created. All the data is up in Azure, but it's just a bunch of little chunks, and nobody can access your blob; there's just a placeholder there. What you do then is say: okay, now take all the blocks I've put up, put the whole thing together, and make it accessible. Why did they do it that way? Well, in a lot of cases, especially in the scientific world, a very large data set comes from a lot of computation done across many different nodes. With this format, you can have all those compute nodes sending up their data at once. Then you can check whether some of those uploads failed, and decide whether to skip them or have them redone.

And you don't have to do it in sequential order. So it's quite useful for being efficient in the face of failures and for getting things done out of order.
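The upload-then-commit flow can be modeled in memory. The real REST operations are Put Block and Put Block List, but the class and method names below are hypothetical, and one simplification is deliberate: after a commit, this model forgets the uploaded blocks rather than letting a later commit reuse them.

```python
class BlockBlobSketch:
    """In-memory model of 'upload blocks in any order, then commit a block list'."""

    def __init__(self):
        self.uncommitted = {}  # block id -> bytes; not visible to readers
        self.committed = None  # the readable blob, once a block list is committed

    def put_block(self, block_id, data):
        # Blocks may arrive in any order, from any worker; re-sending an id replaces it.
        self.uncommitted[block_id] = data

    def commit(self, block_ids):
        missing = [b for b in block_ids if b not in self.uncommitted]
        if missing:
            # A failed upload shows up here: re-send those blocks, then commit again.
            raise KeyError(f"blocks not uploaded: {missing}")
        self.committed = b"".join(self.uncommitted[b] for b in block_ids)
        self.uncommitted.clear()  # simplification: blocks are not reusable after commit

    def read(self):
        if self.committed is None:
            raise FileNotFoundError("blob has not been committed yet")
        return self.committed

movie = BlockBlobSketch()
chunks = {f"blk-{i:03d}": bytes([65 + i]) * 4 for i in range(3)}  # toy data: AAAA, BBBB, CCCC
for block_id in reversed(list(chunks)):  # upload out of order, as parallel workers might
    movie.put_block(block_id, chunks[block_id])

try:
    movie.read()
    visible_before_commit = True
except FileNotFoundError:
    visible_before_commit = False  # nothing is readable until the commit

movie.commit(sorted(chunks))  # assemble the blocks in the intended order
```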

So here are some details about that. I'll let you go back and refer to those later.

Page blobs, on the other hand, have a larger limit. You say what size you want your page blob to be, and it allocates that. Whereas in block blobs each block can be a variable size, the pages are a fixed size, and you read and write them in whatever random order you want.

So here we go. Maybe the first thing we want to do is write those few pages. Then, if you want to write again, you've got the other data, and you saw that where it overlapped, it wrote right over the pages you had written before. You can clear pages out and keep going until you have the data you're looking for. Now, once all those operations are done, you might be asking: okay, which parts of my data are actually valid, where have I written?

You can query through the .NET APIs to get the ranges that are valid, and then ask for the data in those ranges.
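The write, overwrite, clear, then "which ranges are valid?" sequence can be sketched with a toy model. Azure page blobs use 512-byte pages; everything else here (class and method names, the exclusive-end range format) is a hypothetical modeling choice, not the .NET API mentioned in the talk.

```python
PAGE = 512  # page blobs are written in fixed 512-byte pages

class PageBlobSketch:
    def __init__(self, size):
        if size % PAGE:
            raise ValueError("size must be a multiple of the page size")
        self.size = size
        self.pages = {}  # page index -> bytes; absent pages were never written or were cleared

    def write(self, offset, data):
        if offset % PAGE or len(data) % PAGE or offset + len(data) > self.size:
            raise ValueError("writes must be page-aligned and within the blob")
        for i in range(len(data) // PAGE):  # overlapping writes simply replace pages
            self.pages[offset // PAGE + i] = data[i * PAGE:(i + 1) * PAGE]

    def clear(self, offset, length):
        if offset % PAGE or length % PAGE:
            raise ValueError("clears must be page-aligned")
        for i in range(offset // PAGE, (offset + length) // PAGE):
            self.pages.pop(i, None)

    def valid_ranges(self):
        """Merge written pages into (start, end) byte ranges, end exclusive."""
        ranges = []
        for i in sorted(self.pages):
            start = i * PAGE
            if ranges and ranges[-1][1] == start:
                ranges[-1] = (ranges[-1][0], start + PAGE)
            else:
                ranges.append((start, start + PAGE))
        return ranges

    def read(self, start, end):
        """Read a page-aligned byte range; unwritten pages read back as zeros."""
        return b"".join(self.pages.get(i, bytes(PAGE)) for i in range(start // PAGE, end // PAGE))

blob = PageBlobSketch(8 * PAGE)          # allocate 8 pages up front
blob.write(0, b"a" * (3 * PAGE))         # pages 0-2
blob.write(2 * PAGE, b"b" * (2 * PAGE))  # pages 2-3, overwriting page 2
blob.clear(PAGE, PAGE)                   # clear page 1
blob.write(6 * PAGE, b"c" * PAGE)        # page 6
print(blob.valid_ranges())  # [(0, 512), (1024, 2048), (3072, 3584)]
```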

And the most interesting aspect of this is that they've actually mapped an NTFS file system onto it. So on your worker roles, you can allocate a page blob and treat its chunks as representing files.

Different sizes and offsets within the blob might represent the starts of different files, but now you've got a way of overwriting those, putting them in different places, and having them always redundant, backed up, replicated, and ready to go at any point.

Anyway. All right. So since you've got those two different types of blobs, you need to know when to use which one. Normally it depends on how you access the data: whether you want streaming, whether you want random reads and writes, whether you want it used as a drive in your worker roles, et cetera.

Now, snapshots are another interesting aspect. This is also provided by the data storage fabric. If you have a blob out there and you want to make little changes to it, but you're not sure whether those changes are something you want to commit to, you can take snapshots of your data. A snapshot is what you would expect: it's like taking a picture of the data, putting it over here, and having a copy that just points to the other one. The nice thing is that now you have different copies you can work with, and you're not paying for the extra data. You're paying for the one set of data where it's stored, and you can put different blocks on top of that. So in this case, I take a snapshot of my original data and I try changing something in the middle of it, or I try appending onto the end of it.

I can keep going like that until I say, oh yes, that's basically what I want to do, and then I take another snapshot of that. Basically I've got three sets of data here, but I'm really only paying for the blocks which are unique. And with the second snapshot, I can do the same thing: I can add on to it and say, in the end, that's the one I really wanted after all, and promote it to be the original blob. So this is a very handy function when you're working with data that's going to change and you still want to be able to go back and have a history.
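Why snapshots are cheap can be shown with a toy model in which a blob is a list of block references and a snapshot copies the references, not the bytes. This is a sketch under that assumption; the class, method names, and "promote" behavior are hypothetical illustrations of the billing idea described in the talk, not the storage service's implementation.

```python
import itertools

class SnapshotBlob:
    """Toy model: snapshots share unchanged blocks with the live blob."""

    def __init__(self, blocks):
        self._next_id = itertools.count()
        self.store = {}  # block id -> bytes
        self.current = [self._put(b) for b in blocks]
        self.snapshots = []  # each snapshot is a frozen tuple of block ids

    def _put(self, data):
        block_id = next(self._next_id)
        self.store[block_id] = data
        return block_id

    def snapshot(self):
        self.snapshots.append(tuple(self.current))
        return len(self.snapshots) - 1

    def replace_block(self, index, data):
        self.current[index] = self._put(data)

    def append_block(self, data):
        self.current.append(self._put(data))

    def promote(self, snap_id):
        self.current = list(self.snapshots[snap_id])  # make a snapshot the live blob again

    def read(self, refs=None):
        refs = self.current if refs is None else refs
        return b"".join(self.store[i] for i in refs)

    def billed_bytes(self):
        # Only blocks referenced by the blob or a snapshot count, and each counts once.
        live = set(self.current).union(*self.snapshots)
        return sum(len(self.store[i]) for i in live)

blob = SnapshotBlob([b"AAAA", b"BBBB", b"CCCC"])
s0 = blob.snapshot()            # the original data
blob.replace_block(1, b"XXXX")  # change something in the middle
blob.append_block(b"DDDD")      # append to the end
s1 = blob.snapshot()            # this looks like what I want
blob.append_block(b"EEEE")      # keep experimenting on top of it
print(blob.billed_bytes())      # 24: six unique 4-byte blocks, not 12 + 16 + 20
blob.promote(s1)                # decide s1 was the one after all
```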

All right. Azure Drive. We are finding this to be a very handy function in scientific applications as well. For instance, when we were working with the Blast application, we didn't change the Blast application at all. It doesn't have any knowledge whatsoever of Azure blobs as a place to get its data from, but it does know how to work with the file system. So if you can map the blobs that are already in the Azure storage fabric into something that looks like a file system, then any legacy application, whether you wrote it in Fortran, Perl, Python, or anything else that can run on a Windows Server system, can now access what looks like a file system but is actually hooked up to Azure storage in the background.

Azure also provides a Bigtable-style implementation. So that's tables, the second type of storage after blobs, and then we have queues.

Basically, it is just a way of writing key/value entities where there's a partition key and a row key, and these are the only things that are indexed. It does very quick lookups based on those keys, the partition and the row. But if you want to do something more complicated, like a SQL statement doing complicated joins across tables, you'll find that it's not very efficient.
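The difference between a key lookup and everything else can be sketched with a toy table. `PartitionKey` and `RowKey` are the real property names in Azure tables; the class, its methods, and the job/task entities are hypothetical, and the point is only that a filter on a non-key property has to visit every entity.

```python
class TableSketch:
    """Toy model of a key/value table: entities are keyed by (PartitionKey, RowKey),
    and only that key is indexed."""

    def __init__(self):
        self.partitions = {}  # partition key -> {row key -> entity}

    def insert(self, pk, rk, **props):
        self.partitions.setdefault(pk, {})[rk] = dict(props, PartitionKey=pk, RowKey=rk)

    def get(self, pk, rk):
        # Point lookup on the full key: two dictionary probes, whatever the table size.
        return self.partitions[pk][rk]

    def query_partition(self, pk):
        # Everything in one partition, in row-key order.
        rows = self.partitions.get(pk, {})
        return [rows[rk] for rk in sorted(rows)]

    def scan(self, predicate):
        # A filter on any non-key property has to touch every entity.
        return [e for rows in self.partitions.values() for e in rows.values() if predicate(e)]

runs = TableSketch()
runs.insert("job-cloud-futures", "task-0001", status="succeeded", seconds=41)
runs.insert("job-cloud-futures", "task-0000", status="failed", seconds=7)
runs.insert("job-demo", "task-0000", status="succeeded", seconds=3600)
print(runs.get("job-demo", "task-0000")["seconds"])  # 3600
```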

The data model stores entities in partitions and rows.

All right. So the very last thing I wanted to show you is that we have been looking, as a group, at a lot of different best practices. We've been measuring things and running benchmarks. What is the performance like? Say I have an application that runs against a data set with a lot of partitions, and every worker is always accessing the same blob. How many workers can I have accessing that same blob at the same time? That's a question you may want to ask.

We've been trying to answer exactly those questions, and we put together a list of things which should be useful. So if you do decide to give Azure a try, you may want to take a look at these best practices, and after that, take a look at a couple of websites we've put up. The first is our group's website, which has a lot of interesting pointers to resources for learning what Azure is about, some presentations and samples, and some scenarios. The second one has our benchmarks as well as a lot of the best practices we've learned, things we really wish we had known when we started doing development. Hopefully it will be helpful to you as well.

All right. With that I'll go back to Roger.

>>: Thanks, Jared. Let me go back to Jared's slide for just a second. This has only been released very recently: AzureScope, which is in fact a benchmark suite. Jared has covered a lot of information on Azure here. As you can imagine, with a platform you're not familiar with, if you're thinking about how to move your application onto it, you're going to have a lot of questions. So we built the AzureScope benchmark suite, which has microbenchmarks and end-to-end benchmarks. We also asked some of our friends in the community for their best practices and their advice on benchmarks, and added those to the suite, and we certainly welcome your feedback on new benchmarks to add. We are going to be rolling this benchmark out across different data centers because, given how fast this technology is moving, different data centers run differently. So you can ask: if I'm running in this data center, what performance can I expect? And the best practices Jared summarized are a sample of what we have on the site, many of which are backed up by actual benchmarks.

So I'm going to close the talk by talking about cloud research engagement, because I started by saying the role of our team is to support researchers in leveraging the cloud for their research. That's kind of a vague, broad statement, so I'll tell you a little bit about what my team does. So yes, we are building the services we've shown you, we are releasing them as open source, and we think this is just the start of the tools we are going to be building and providing to researchers to leverage the cloud.

Our team is really part of an international engagement program where we are offering cloud resources, access to Azure, over the next several years to researchers in academic communities, and we are backing up this offering with a technical team, our team, to help researchers get their applications up and running on Azure so that they can be successful.

And we are really trying to articulate, as a community, which research workloads and which applications run well on the cloud, and what should really stay on the cluster, because as a community there are still a lot of open questions, and the answers are going to vary from community to community. So we are looking for partners to explore this.

And part of our charter is to lower the barrier to entry. There are a lot of unknowns about moving to the cloud. So what can we as a team do to provide tutorials, code accelerators, and best practices? And our team is actively involved in trying to support policy change.

Today, with NSF, it's easy to get $200,000 or half a million dollars to buy a cluster, as opposed to buying time on a cloud, so how can we effect policy change? And we've got a lot of work to do inside the company as well.

If you had a chance to listen to Depoch's talk today, researchers can send Amazon data sets they'd like to have uploaded. So we have to educate our own company on what kind of services we need to provide to make our cloud useful to researchers. Because, and we could have a longer conversation about this over a drink tonight, research workloads and the web services that .NET and Azure are targeted for are not necessarily compatible. You usually have to bring the two together.

Data sets. We are going to provide select reference data sets on Azure. Data on the cloud is a lot like free puppies: you have to maintain it and support it. So we are waiting for community pull. What we are trying to do is find communities that say, yes, if this data set were available, we would use it; then the investment is worthwhile, because people would actually use the data to support their research. But what we are focusing on and investing in right now are tools that help you get your data up into the cloud seamlessly, uploading it from client to cloud. Later we can talk about which reference data sets make sense for a community.
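The talk doesn't say how these upload tools work. As a minimal sketch of one common approach, assuming block-style uploads like Azure's block blobs (stage fixed-size blocks, then commit the ordered list of block IDs), the Python below uses a hypothetical in-memory store in place of the real service. `InMemoryBlobStore` and `upload_in_blocks` are illustrative names, not part of any Azure SDK, and the 4 MiB default block size is an arbitrary choice for the example.

```python
import base64
import math


class InMemoryBlobStore:
    """Hypothetical stand-in for a cloud blob service (not a real Azure API).

    Mirrors the block-blob idea: blocks are staged first, then committed
    as an ordered list to form the final blob.
    """

    def __init__(self):
        self.staged = {}     # (blob_name, block_id) -> bytes
        self.committed = {}  # blob_name -> bytes

    def put_block(self, blob_name, block_id, data):
        # Stage one block; it is not visible as part of the blob yet.
        self.staged[(blob_name, block_id)] = data

    def put_block_list(self, blob_name, block_ids):
        # Assemble the blob from staged blocks in the order given.
        self.committed[blob_name] = b"".join(
            self.staged.pop((blob_name, bid)) for bid in block_ids
        )


def upload_in_blocks(store, blob_name, data, block_size=4 * 1024 * 1024):
    """Split `data` into fixed-size blocks, stage each, then commit them."""
    block_ids = []
    n_blocks = max(1, math.ceil(len(data) / block_size))
    for i in range(n_blocks):
        chunk = data[i * block_size:(i + 1) * block_size]
        # Azure requires equal-length, base64-encoded block IDs per blob;
        # zero-padding the index mimics that convention.
        block_id = base64.b64encode(f"{i:06d}".encode()).decode()
        store.put_block(blob_name, block_id, chunk)
        block_ids.append(block_id)
    store.put_block_list(blob_name, block_ids)
    return len(block_ids)
```

Staging blocks separately means a failed block can be re-sent on its own instead of restarting a large upload from the beginning, which is one reason this pattern suits big research data sets.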

And services. We are just starting to scratch the surface, and we are starting to reach out to external cloud registrants to ask which services would make a community much more empowered. I'll talk about that in a second.

And we are really asking the question: at what point can we build enough services, put up enough research data, and provide enough accelerators that we can stand back and the community will take it and go forward from there? What does it take to catalyze the community? We don't want to keep making investments only to have the activity stop the day we stop working with the community. How far do we have to push it before the community can take it to the next level? And we are also asking ourselves which Microsoft products we should make cloud friendly for researchers to use, which helps me transition into the next thing.

This is something Ed talked about in his keynote this morning: the Branscomb Pyramid (the text on this slide got messed up). If you look at the research community as a pyramid, at the very top you have people who have supercomputers. They are not going to need any access to the cloud. At the second level of the pyramid, you have people who have clusters and support teams, and again, they are well prepared to carry out large-scale research. But for the rest of us, who don't have access to this kind of capability, how can we make them just as empowered to carry out research, even if it's just for a few days? That's really our focus. A lot of this community uses laptops or small desktop workstations, and they are collecting data at furious rates. Now what can they do with it? What kind of tools can we offer them so that they can use the cloud to actually analyze that data and do something interesting with it? It's really about analyzing the data.

It's not about blobs or how fast this runs relative to a cluster. It's about how fast they can get their research done without spending as much time as they currently spend writing software. And we think that this could represent a paradigm shift for research, for two reasons. One is that, yes, as Dave Patterson talked about in his talk today, you give people surge capability for those brief periods of time when they need more than the cluster down the hall. But it also broadens participation. So many of the university groups we talk to simply don't have large clusters and capabilities. Take the work with Sage that Jared told you about earlier: for the few days the group we worked with used it, the total bill was $200, and they got a publication. They won't need a cluster or a BLAST service for months, so how can we give them tools to carry out research like that, and have funding agencies fund those kinds of workloads rather than making them justify the expense of buying a cluster, which they may or may not get?

Our take on this is that we are looking at how to use the cloud as an intellectual amplifier for the clients and tools that researchers are already using, which is why we like the COVE example: again, it's a tool that the community built. How can we make the cloud invisible, so that researchers are just using the client, specifying some research, and the work gets done? And of course one of the best tools we have is Excel. As we heard in Ed's talk earlier today, given the sheer volume of data in both business and science that starts in Excel, how can we amplify that with the power of the cloud? We are looking at research add-ins that can move the data from Excel up to the cloud and show you libraries of functions that you can run against that data up in the cloud. Again, the cloud's presence is really invisible except for the capability it offers the user. We really don't want users to have to think about whether they are running MapReduce libraries on the back end or invoking R or MATLAB scripts; all they see are buttons that represent the kinds of analysis they want to perform over the data. In fact, they may be manipulating a petabyte of data, but they are just viewing it through a window in Excel. Our team is also looking at how we can provide data visualization capabilities, and how we can provide provenance, so that if you processed the data and brought it back to your client, we can explain how that data got created. And how can you have collaboration spaces in the cloud, so you can publish that data and others can analyze it and share their insights with you?
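The add-in design described here, where the user sees only named analyses and never the back end, can be sketched as a registry that maps button labels to functions. This is a hypothetical Python illustration, not the team's actual add-in: `ANALYSES`, `analysis`, `available_buttons`, and `run_analysis` are made-up names, and the functions run locally rather than in the cloud so the sketch stays self-contained.

```python
from statistics import mean, median

# Hypothetical registry: button label -> analysis function.
# In the scenario described, these would run in the cloud; here they
# run locally so the example is self-contained.
ANALYSES = {}


def analysis(label):
    """Decorator that registers a function under a user-facing label."""
    def register(func):
        ANALYSES[label] = func
        return func
    return register


@analysis("Mean")
def _mean(values):
    return mean(values)


@analysis("Median")
def _median(values):
    return median(values)


@analysis("Range")
def _range(values):
    return max(values) - min(values)


def available_buttons():
    """The labels a client (e.g. a spreadsheet add-in) would show the user."""
    return sorted(ANALYSES)


def run_analysis(label, values):
    """Dispatch by label; the caller never sees which back end ran it."""
    return ANALYSES[label](values)
```

Replacing a local function with one that submits a cloud job would not change what the user sees, which is the sense in which the cloud stays invisible.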

We also have our engagement program. We recently announced it, and the call for proposals is currently out through the NSF. We are offering the NSF substantial access to Windows Azure. The NSF gets to choose the projects and complement them with NSF funding for the researchers to use the cloud in their research. And we'll be doing this for the next three years.
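The allocation figures quoted for this program (20 million core hours per year; 200 TB of triply replicated storage and 1 TB per day of ingress or egress per project) can be put in perspective with some back-of-the-envelope arithmetic. This is a sketch under assumptions the talk does not spell out: the core-hour figure is spread over a full 365-day year (whether it is per project or program-wide is not stated), "triply replicated" means three full copies, and the 1 TB/day transfer limit is the only constraint on loading data.

$$\frac{20{,}000{,}000\ \text{core-hours}}{365 \times 24\ \text{hours}} = \frac{2 \times 10^{7}}{8760} \approx 2283\ \text{cores running around the clock}$$

$$200\ \text{TB} \times 3\ \text{copies} = 600\ \text{TB of raw capacity behind each project's quota}$$

$$\frac{200\ \text{TB}}{1\ \text{TB/day}} = 200\ \text{days to fill a project's storage at the maximum ingress rate}$$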

And the researchers have access to our team, and they also have first-class support from Azure should their service fall over and they need technical support. Our current offering, which will probably grow over time as costs fall, is 20 million core hours per year, 200 terabytes of triply replicated storage per project, and one terabyte per day per project for ingress or egress (again, that can change over time), plus tier one support for Azure. And this is an international program; what I've described is simply what we are offering here in the U.S. Discussions are under way in Europe and Asia. We are not ready to make any announcements yet, but the intention is to have similar engagements in other countries, through government agencies, letting them choose the projects.

What we hope to see from this, on our website (Jared showed the URL earlier), is write-ups of the use cases. What did people use Azure for in their research? Where is the code? How can you download it? How can you build on what people have already built? We are also rounding up tutorials, programming guides, and other information on Windows Azure, which has a separate page on our website. The benchmark suite is also available from this site, as are the tools and services, which is code we are writing for both the client and the service if you want to stand it up for your own research project, and frequently asked questions, which are the common questions people ask when they first come to Azure and are trying to understand how to leverage it for their research. And over time we will add code accelerators, tutorials, programming samples, et cetera, that we use to help support the community as well.

So this is what we hope will be our community presence, helping to support researchers as they start to dabble with Azure. And of course there is an e-mail alias you can send us e-mail at; if it's a great question, we will not only provide the answer but also the code we used to solve it, and put that up in the FAQ so the community can see what other people are asking about as well.

So that's pretty much all we wanted to cover in our tutorial, other than answering your questions.

>>: I have had a [inaudible].

>>: Bertram.

>>: [inaudible] Would you care to compare the Azure tables with the Bigtable that was presented in the Google talk earlier this afternoon?

>>: Birds of a feather. Very similar. I mean, yeah.

>>: Hi there. Did I pick it up right that it's possible to run native libraries on these [inaudible]?

>>: Yes. Yes, it is. It supports both managed and unmanaged code, so you can run native libraries. And it has been announced that soon Azure will be running Windows Server 2008 VMs. You'll be able to download the VM to your local machine, install everything you need yourself, and push the differencing disk with all the code you've installed, everything you baked in yourself, up to Azure, and it will manage that for you. So you can install pretty much anything you want, and it will stand it up as a worker node VM.

>>: Suppose someone uploaded several blobs with raw data. Is there a model for sharing these blobs between different applications, a more efficient way than using the standard APIs?

>>: I'm thinking drives and snapshots, or -- go ahead, Jared.

>>: I don't know how [inaudible]. Let me know if I'm not answering your question. There are storage accounts, and then there are your compute accounts. Your worker nodes and your web roles come off of your compute account, and then you have your storage account, where you're storing all of your blobs. Now, all of your worker nodes have access to any of your blobs in the storage account, so by their very nature the blobs are very accessible. You can also set a flag on them as to whether they are public or private. If they are private, then basically only your worker nodes and your web roles can get at that particular data. If they are public, however, then anybody with, you know, a web browser can point a URL at it.

>>: [inaudible].

>>: It's at the blob level, although you can actually set the bit for public or private at the container level as well.
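As a toy model of the access rule Jared describes (your own web and worker roles can read any blob in your storage account, while anonymous requests from a browser succeed only when the data is public), here is a Python sketch. The classes are hypothetical and are not the Azure storage SDK; for simplicity the public/private switch is modeled only at the container level, and comparing a raw key stands in for real request authentication, where the service checks a signature computed with the account key rather than receiving the key itself.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Toy model of a storage container (hypothetical, not the Azure SDK)."""
    public: bool = False
    blobs: dict = field(default_factory=dict)  # blob name -> bytes


@dataclass
class StorageAccount:
    """Toy model: one account key shared by the owner's web and worker roles."""
    account_key: str
    containers: dict = field(default_factory=dict)  # name -> Container

    def read(self, container_name, blob_name, key=None):
        container = self.containers[container_name]
        # Callers holding the account key (your own roles) can always read.
        # Anonymous callers (e.g. a browser hitting the URL) need a public container.
        if key != self.account_key and not container.public:
            raise PermissionError(f"{container_name}/{blob_name} is private")
        return container.blobs[blob_name]
```

With this model, `read("raw", "a.csv", key=...)` succeeds for your own roles, while the same call without the key raises `PermissionError` unless the container is public.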

>>: Any more questions?

>>: Is there any [inaudible] of code to -- [inaudible].

>>: So the question, if I got it correctly, was whether there are any plans or preparations for providing Azure to academia so that students can experiment with it and use it. Our academic outreach team is working aggressively to make that available. There are already different offerings, and I know there are people here we can put you in contact with, through which academics can get Azure to use in their classrooms for students.

Jim, do you have something you can add to that?

>>: [inaudible]

>>: Krishna has a talk tomorrow, and he's also identified himself as the session chair, so you can tag him or come to his talk tomorrow.

>>: Tomorrow from 2:30 to 4:00 is the academic Azure focus session, so yes, we will address some of those questions there. We'll also be announcing tomorrow morning that everybody here will be getting an access token for Azure that's good for a month, so that you can take it and test drive it. And in tomorrow's session we'll walk through, step by step, setting it up and all of that. So little blocks. So thank you for that.

>>: [inaudible].

>>: Absolutely. The question was what the token means. Basically, it's a user name and a password that we've set up for everyone here that allows you to log into Azure and get access to compute and storage. For compute you get 20 instances, which is exactly the same account you get when you sign up with a credit card.

Storage is the same as the accounts mentioned there: you get five storage accounts and a hundred terabytes per storage account. So exactly the same ones. Thank you.

>>: [inaudible]

[laughter.]

>>: So the question was whether it's only for one month, or whether we can go beyond that. And I guess that falls back to what Roger mentioned: we are basically working on a strategy. This is, if you will, a way to test drive it, and we'll hopefully have a segue into a fuller, longer-term plan. We'll talk about that again; I don't want to take a lot of Q and A time here. We'll talk about it in our session tomorrow evening.

>>: You can be guaranteed a lot of people care about making that happen so we'll work on it.

>>: Can I follow up, too, about putting in native code? In the existing way that Azure works, there's a managed API. So basically, when your worker is ready to start, it gives you just one little function, the start method, to begin with. The way that we've done it, and the way that I think is very common in science applications, if you have a Fortran program or a script of some sort you want to distribute, is to bring it up into Azure in the storage account. Then, when your worker node starts, it copies the executable from that storage account, puts it right there on the worker role, and lets it go from there: you just say start, and whatever you've written before, native or not, will work. It's a very easy pattern. Yeah?
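The staging pattern Jared describes (copy the program out of storage when the worker starts, then launch it) can be sketched in Python. This is an illustration under stated assumptions, not the Azure worker-role API: a local folder stands in for the storage account, and `stage_executable` and `on_start` are hypothetical names for the copy step and the start hook. The `launcher` parameter lets you prefix an interpreter for a script, or leave it empty for a compiled binary such as a Fortran program.

```python
import shutil
import stat
import subprocess
from pathlib import Path


def stage_executable(storage_dir, name, work_dir):
    """Copy an executable from the 'storage account' to the worker's local disk.

    `storage_dir` is a hypothetical local folder standing in for blob storage.
    """
    work_dir = Path(work_dir)
    work_dir.mkdir(parents=True, exist_ok=True)
    local_path = work_dir / name
    shutil.copyfile(Path(storage_dir) / name, local_path)
    # copyfile does not copy permission bits, so set the execute bit
    # explicitly (this has no effect on Windows).
    local_path.chmod(local_path.stat().st_mode | stat.S_IXUSR)
    return local_path


def on_start(storage_dir, name, work_dir, args=(), launcher=()):
    """Hypothetical start hook: stage the program, then run it as a child process."""
    exe = stage_executable(storage_dir, name, work_dir)
    return subprocess.run(
        [*launcher, str(exe), *args],
        capture_output=True, text=True, check=True,
    )
```

Because the worker only copies and launches the file, the same hook works whether the program is native code or a script, which is what makes the pattern easy to reuse.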

>>: [inaudible] oh, sorry. I didn't catch that.

>>: We'll have to get the microphone over to you. Please go ahead. Sorry.

>>: Real quick question. We didn't talk about SQL Azure.

>>: Yeah.

>>: What is SQL Azure? Is Analysis Services a component of SQL Azure? What's the difference between SQL Azure and SQL Server? I mean, you know, what are the BI capabilities at this point in time on the cloud?

>>: Yeah. So BI -- the short answer is that the difference between SQL Azure and SQL Server right now is size and access to the master DB. Size is currently offered at one gigabyte and ten gigabytes, going to 50 gigabytes initially, and you have no access to the master DB. They are adding spatial support in the next couple of months, and SQL Server Analysis Services is coming right along behind it as well. So again, they are pulling more and more of the functionality of SQL Server through. They are also building something called Dallas, which you will hear about tomorrow if you're able to attend: a data market, a way of discovering data, including other researchers' data, and being able to mash it up. But again, SQL Azure is new, and they are pulling more and more functionality through, but it will be there. Okay.