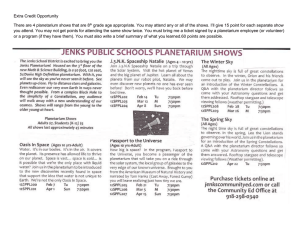

>> Jonathan Fay: I'm Jonathan Fay, principal architect on Microsoft WorldWide Telescope. We have a special guest here today, Tony Butterfield from the Houston Museum of Natural Science, who is going to show us some very cool stuff they've been working on. He does production for their full-dome shows, which involve not only their planetarium but also visualizing Earth and environmental concepts and things like that. They've been groundbreaking in the planetarium field for quite a long time, including the first full-dome digital planetarium system, back in 1998, and they were the first Sky-Skan full-dome digital installation. They do video production that is used not only for their own in-house shows but also distributed worldwide. Their program also makes use of an adjunct program that Tony's boss runs, which distributes inflatable digital planetariums to classrooms all over the U.S., I believe.

>>: And worldwide.

>> Jonathan Fay: And worldwide, yes. So the shows are done not only in bricks-and-mortar planetariums but also in these inflatables that go all over, and it's really completely changed the way people think about planetariums. Anyway, without further ado, this is Tony Butterfield.

>> Tony Butterfield: Thank you very much. My name is Tony, and I work at the planetarium at the Houston Museum of Natural Science. The Houston Museum is one of the largest museums in the country, and the planetarium is one of the most progressive in the world. What I'm going to talk about today is some of the changes we have contributed to the industry over the past 20 years; I've played a big role in pushing the technology to the next level. When I started in the planetarium field over 20 years ago, there was just a big machine in the middle of the room, a bunch of baby food jars used for special effects, and a lot of slide projectors.
Back then, in 1991, we were working with a whopping powerful system of Apple IIe and 486 computers. I thought it would be fun to take a look back at how animation started 20 years ago. Over the years, I've tried to help push new technology along in the planetarium field; I'm like the beta guy who always has the prerelease version. In preparing this presentation, I got lucky and found the commercial that changed my life for the better, that helped me get started, and that ultimately impacted the industry and what you see in the theater today. What's fun about these old commercials is the graphical interface and the speed performance. So I put this together just to have a little fun looking back. [video] Transition. Digital effect. Digital effect. Graphic. Graphic. Title. Animation. Animation. What you're about to see was created entirely with a Video Toaster from NewTek. Remember how it used to be in science? They called it the paradigm shift. Yeah. Paradigm? What's that? One historical moment, the sun revolves around the earth. Yeah. The next moment, enter Copernicus, and voila: the earth revolves around the sun. Shows you how things can change. Right now, this very moment, as we speak, a paradigm shift of equal magnitude has grabbed the world of video. A paradigm shift. And the force behind the shift, we at NewTek call the Video Toaster. Hey, that's a hot name. Yeah, and we assure you, once you've seen what our Toaster can do (I'm ready), the world of video will never look the same again. [music] Yeah. It took a long time. What, it took 40 years for the computer to evolve from the garage to a desktop tool. But with the Video Toaster, it happened as quick as a snap. We're not talking evolution here. We're talking revolution. Yeah. See, just add it to a VCR and an editor and you have a full-blown production screen.
This is what I call a video revolution. That's just what it is. And here's another reason why: LightWave 3D. LightWave. With LightWave you have the excitement of three-dimensional graphics. How does it work? Easy. You begin by creating wireframe models with the LightWave Modeler. Yeah. Then you use the LightWave renderer to give them three-dimensional life. Hey, that looks real. It's real, all right, real easy. You're the director. The next Walt Disney. You position objects, camera, and lights just by sliding the mouse. Then, when you're ready, LightWave will animate your scene just like this. Or like this. Neat. This. Crazy. Or this. Yeah. And all this is included in the price of the Toaster? You bet. It's every piece of equipment you need to have real broadcast television, combined into one device with one easy-to-use interface. No wonder I've heard so much about this. Now you know why. It's changing the world of video forever. You see, when NewTek created the Video Toaster, our goal wasn't to be as good as; it was to be better. It wasn't just evolution. It was revolution. Elite. A shift. Yeah, a paradigm shift. So buckle up your seatbelt, Jack, open up your mind, and let the Video Toaster take you places you've never been before.

>> Tony Butterfield: Well, that was a lot of fun. I had already been working in the planetarium field when I saw that, and the very first animation you saw was about astronomy. From that point on I was hooked. At the end of that video there was a still of one of the frames being rendered out. It took six minutes and 49 seconds to render that one frame, and I quickly found out that it takes a long time to make animation. Ever since then there's been a quest to render faster and faster. At one point there were even tricks like hooking up a DEC Alpha to the workstation: the workstation did all the graphics and sent all the render jobs out to the Alpha.
I have another short video that pokes fun at trying to solve that problem, because a lot of times when we're rendering animations, even 20 years later, there's a lot of hurry up and wait. So I wanted to show this to you. [video] If you've followed along the course, you're now the proud owner of a dedicated 3-D graphics station costing only $65,000. Trust us when we say you'll be indebted to it for many years to come. Today, we'll take a look at the many exciting pastimes you can explore while waiting for images to render. Through the years, professional 3-D animators have become pioneers in exciting fields such as dictionary proofreading and chess by mail. However, few things can match the exotic thrill of learning a foreign language. [Foreign language] I'm waiting for the machine to make a picture. [Foreign language] My machine costs more than a house. [Foreign language] Patience is a virtue. [Crashing sound] [Beeping] [Music]

>> Tony Butterfield: So even back then, it was a slow start to getting any kind of animation out there. Talk about backing up your stuff: how many of you have your files backed up from 20 years ago? I was pretty impressed when I tripped across my very first animation. It's ten seconds long, and it took a week to render. I found most of the frames, so it stops a little bit short. After getting the software, I wanted to contribute to the planetarium industry by making scientifically accurate animations, and creating our own in-house animations was how we made that possible. Back then, animations were recorded on a single-frame recording VCR. You would render one frame, the VCR would back up and record 1/30th of a second, and then we would wait and wait for the next frame, and that went on for weeks. That was a whole lot of fun. That was 20 years ago, when I first started. But to see how we got where we are today, I want to take us back and look at the history of planetariums.
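The single-frame workflow described above is easy to put rough numbers on. The sketch below assumes 30 frames per second (one recorded frame per 1/30th of a second of tape, as described) and, purely for illustration, reuses the 6 minutes 49 seconds quoted earlier for one Video Toaster frame. Real per-frame times varied from scene to scene, so this is not a reconstruction of the week-long render mentioned above; the function names are hypothetical.

```python
FPS = 30  # one recorded frame per 1/30th of a second of video


def frames_needed(seconds: float, fps: int = FPS) -> int:
    """Number of individual frames a clip of the given length requires."""
    return round(seconds * fps)


def total_render_seconds(seconds: float, seconds_per_frame: float, fps: int = FPS) -> float:
    """Total render time, assuming every frame takes the same time to render."""
    return frames_needed(seconds, fps) * seconds_per_frame


per_frame = 6 * 60 + 49  # 6 min 49 s, the single-frame time quoted in the talk
clip = 10                # a ten-second animation
frames = frames_needed(clip)
hours = total_render_seconds(clip, per_frame) / 3600
print(f"{frames} frames, about {hours:.1f} hours of rendering")
```

This prints `300 frames, about 34.1 hours of rendering`: under these assumptions, a ten-second clip is already more than a day of rendering, not counting the time the VCR spends backing up and recording each frame.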
Planetariums have been around for thousands of years, part of mankind's quest to recreate the nighttime sky. In the beginning there were celestial globes that depicted the stars and mechanical models that recreated the motions of the planets. Shortly after World War I, a German company named Zeiss combined the two and created what we know as the classical planetarium. The classical planetarium is probably the one you went to in third grade, with a big machine in the middle of the room. Chicago had the first planetarium in the United States to get one of these expensive machines, shortly followed by New York, LA, and the other major cities. They were super expensive, so a part-time planetarium employee, Armand Spitz, decided to create a low-cost version, known as the Spitz planetarium. Thousands of these were sold because they were the Volkswagen of the planetarium industry; if you go to most cities around America, chances are you're going to come across a Spitz planetarium. As for the digital universe, by the 1980s the technology had advanced enough that we started to introduce computers into the planetarium theater. A military defense contractor named Evans & Sutherland, which specialized in aircraft simulation, had a small division that put together the first computer-generated star field. It used a high-resolution, high-voltage CRT underneath a fisheye lens, and it allowed you, for the first time, to look at the stars from somewhere other than the surface of the earth. This really changed the way educators could teach students about the stars and the relationship of the earth to the cosmos. The problem was that it was all wireframe. That's cool, and you can do a lot of fun things with wireframe, but it would be 15 more years before we'd get solid objects. Eventually you had planetariums running two or more systems at the same time.
Ultimately, one project had both a digital star machine and a top-of-the-line optical star machine. After a while it was just a matter of how much stuff you could cram into a planetarium: more baby food jars, more spaghetti colanders, more slide projectors, and more video projectors. At that point in time, video projectors were the size of a small Volkswagen. They would put them up on steel pan-tilt mounts, and it was like trying to program a car to look in a certain direction on the dome. The thing about it was that it got us thinking about producing content in the full-dome format, but we were using dozens of separate systems all dancing at the same time to pull off the full-dome experience. If you went to a planetarium, chances are you went to a laser show. If you were near a big city, your last trip to the planetarium was probably to see a Led Zeppelin or Pink Floyd show. Laser shows were a very important part of the planetarium industry, because Friday and Saturday night revenue could pay for all of your expenses for the rest of the week. The laser show industry used the planetarium as a venue to develop its technology, and we embraced that in the planetarium field, ultimately creating the first full-dome laser system, which could display a laser graphic image (not video) across the whole dome. So we had all these different systems going at one time or another, and from a production point of view it was really complex trying to keep all those balls up in the air. [Music] I produced quite a few laser shows, and I actually found them a little more complicated than video, because you have to hit every note of the music and every tempo change. There's so much more complexity going on, and if it's not synced right, everybody knows it.
Basically, these were cel animations cycled through quickly, so they all had to be drawn out ahead of time, digitized, and then reprojected with a laser projector. We did a lot of cool things combining lasers with educational programs, because lasers could do color and the Digistar could only draw a green wireframe. We used a combination of the two to tell the story and make it a more engaging experience. The Omniscan, like I said, was the full-dome laser system, and it would be combined with other equipment to create the same experience. Right about that time digital video was becoming more commonplace, and the first digital disk recorders were introduced. That's the same concept everybody knows today from TiVo: recording a video sequence onto a hard drive and having it play back. That was a big breakthrough, because you didn't have to print to a single-frame recording VCR, and if you wanted to make edits, just like typing a paper, you would just cut and paste. I was one of the first people to use a disk playback system, and we were sitting around at a conference and I said, you know, someday we're going to be shipping shows around on these hard drives. It will be easy to share content from city to city, because then you won't have to worry about how many baby food jars they have for the special effects. What we have today is basically multiple channels of those disk playback systems all combined together at one time. As we got more sophisticated with the technology, we also had to mature in our production styles. Not only did we have lasers going in all parts of the dome, we had analog projections made with paintings, rotating pieces of glass, or shower curtain, plus slide projectors. But the whole concept of how to produce for the full dome was starting to take shape.
One of the first things I pointed out was that as you move in, under, and around things, you have to proportionally change the point of view of the object. Up until that point everything was edge-on, and it didn't quite work right. The idea is that if you were to go underneath a planet, you should be able to see the bottom of the planet. Since I could make the animations, it was easy to incorporate into the show. Then came full-dome video: the ability to take all those different video projectors and combine them into an edge-blended system. There were earlier attempts that didn't quite make it, but in 1998 the technology became available, and we were the first to install it, at the Houston Museum. What we have is a multi-channel edge-blended system. Depending on the location, it might be six or eight projectors, and some places have tried 12, but the whole point is that no single high-resolution video projector can cover the surface of the dome, so to gain resolution we have to combine multiple projectors. This is what we call a dome master. It's basically a fisheye-format projection. In this format, the bottom of the image, below the satellite dish, is what we call the front of the room. Imagine you're sitting in your chair in the planetarium: the satellite dish is at the front of the room, the east on this image is the left side of the room, and the back of the room, if you were to turn around, is at the top of the image where it says north. This is the standard format we use to trade content with other planetariums, and it allows big productions to be shared with small cities while maintaining the quality, and the value, of the production. These are some more examples of the dome master fisheye format.
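The orientation described above (front of the room at the bottom of the image, back at the top, left side on the left) can be made concrete as a mapping from a direction on the dome to a pixel in a square dome master. This is a minimal sketch under stated assumptions: it uses an equidistant ("angular") fisheye, where distance from the image center grows linearly with angle from the zenith, which is the common dome master convention; the function name, the azimuth convention (0 = front, 90 = right, 180 = back, 270 = left), and the 4096-pixel size are illustrative choices, not something specified in the talk.

```python
import math


def dome_master_pixel(azimuth_deg: float, altitude_deg: float, size: int) -> tuple[float, float]:
    """Map a dome direction to (x, y) pixel coordinates in a square dome master.

    Assumed (hypothetical) conventions:
      * azimuth 0 = front of the room, 90 = right, 180 = back, 270 = left
      * altitude 0 = dome edge (springline), 90 = zenith
      * equidistant fisheye: radius grows linearly with angle from the zenith
      * image origin at the top-left corner, y increasing downward
    """
    if not 0.0 <= altitude_deg <= 90.0:
        raise ValueError("altitude must be between 0 and 90 degrees")
    half = size / 2.0
    r = (90.0 - altitude_deg) / 90.0 * half  # 0 at the zenith, `half` at the dome edge
    az = math.radians(azimuth_deg)
    x = half + r * math.sin(az)  # right side of the room -> right of the image
    y = half + r * math.cos(az)  # front of the room -> bottom of the image
    return x, y


print(dome_master_pixel(0, 0, 4096))   # front edge of the dome: (2048.0, 4096.0)
print(dome_master_pixel(0, 90, 4096))  # zenith, the center of the image: (2048.0, 2048.0)
```

Because the mapping is purely angular, the same function works for any dome master resolution. Only `size` changes.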
We can take panoramas, stitch them together, and have them properly distorted. On the right-hand side, we've even gone so far as having a fisheye lens on the space station, and the astronauts regularly send us pictures so that we can incorporate them into educational programs. Later on I'm going to talk about how our next project combines all those years' worth of astronaut photography into a show. One thing that has stayed consistent over the years, be it high-tech digital or old-fashioned analog, is the debate from one institution to another over what's called live versus taped. Fifty years ago, it was a live operator with a pointer standing in the middle of the planetarium, giving a lecture and answering questions from the audience. In contrast to live, you had a prerecorded soundtrack that played the same music and the same presentation each time. The advantage is that the school kids saw the same thing each time and could be consistently tested, to make sure they were all exposed to the same material. Today that debate has carried forward: not live versus taped, but real-time through a graphics image generator versus prerendered in production. The style and the method of conveying the information vary from institution to institution. That's the debate that will never end. The gap between the big planetariums that had full-dome systems and everyone else got wide. The big cities, which had the money to buy the big systems, were quickly leaving behind medium-sized cities, museums, and educational institutions, so we received a grant to create the first portable full-dome system. This is an example of an inflatable dome, much like the one out in the hallway. Our very first portable full-dome system used a fisheye lens and a video projector, and since we had a surplus of slide projector stands sitting around, we strapped it to one of those. That was the first portable digital video projector.
That was all the way back in 2004 and 2005. The problem there is that the glass is insanely expensive. That lens alone is $16,000, and it's married to the design of that projector, so as soon as the projector becomes obsolete, you're stuck with an expensive piece of glass. Plus, we would send these out on the road each day with part-timers to school gymnasiums, and if they dropped it... you've got an expensive package in a small space. So even though it was an affordable alternative to the multimillion-dollar full-dome planetarium, it was still an expensive purchase. It became obsolete very fast, and we tried to come up with something better. In 2001 I had seen an alternative to the fisheye, but you've got to remember that back then we were still dealing with NTSC video, and any time you take standard-definition video and stretch it over 50 feet, you see scan lines and pixels. So although putting an image up on the dome that way was mathematically feasible, we didn't have the source material to deliver to it. What I saw in 2001 was a demonstration of bouncing a video image off a spherical mirror, which, much like with telescopes, takes advantage of the cost savings of using a mirror instead of a lens to put the graphics up on the dome. It turns out that what I call a warped fisheye image is basically, in software, a 360-degree fisheye turned back on itself. Many software packages these days can create a fisheye image; we just need the portion that covers the dome. Since then we've made a portable, self-contained system that you can take on an airplane or in the back seat of a small car, and you're not married to a piece of optics. In this picture you see a standard prosumer home theater video projector, a spherical mirror, and right about here should be a flat mirror.
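The talk doesn't say how the warped fisheye pre-distortion is computed. One common way to reason about a projector-and-spherical-mirror setup is to trace a ray from each projector pixel, reflect it off the mirror, and see where it lands on the dome. The sketch below shows only the reflection step for a convex spherical mirror, using the standard reflection formula r = d - 2(d·n)n. The geometry (positions, radius) is hypothetical, the flat fold mirror and the dome intersection are omitted, and this is not the museum's software.

```python
import math

Vec = tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def reflect_off_sphere(origin: Vec, direction: Vec, center: Vec, radius: float):
    """Trace one projector ray to a convex spherical mirror (hypothetical geometry).

    Returns (hit_point, reflected_direction), or None if the ray misses.
    """
    d = normalize(direction)
    oc = sub(origin, center)
    b = dot(oc, d)                      # half the linear coefficient of the ray-sphere quadratic
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                     # ray passes beside the mirror
    t = -b - math.sqrt(disc)            # nearest intersection along the ray
    if t <= 0:
        return None                     # mirror is behind the projector
    hit = add(origin, scale(d, t))
    n = normalize(sub(hit, center))     # outward surface normal at the hit point
    reflected = sub(d, scale(n, 2.0 * dot(d, n)))
    return hit, reflected


# A ray aimed off-center is bent away from the axis: this is how a convex
# mirror spreads a small projected image over a wide area.
print(reflect_off_sphere((0.5, 0.0, -5.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0), 1.0))
```

In a full warping tool, the reflected ray would then be intersected with the dome, and the inverse of that pixel-to-dome mapping is what the pre-distorted content has to encode.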
Basically, the optical path is this: the light comes out of the video projector, off the flat mirror, and then hits the curved mirror. We predistort the content so that when it hits the curved mirror, the curved mirror straightens it back out on the dome. That is a huge breakthrough and a cost savings, because you can swap out the projectors (the mirror doesn't care), and if you bang up the mirror you can swap that out pretty easily. So it's off-the-shelf technology. It's easy to do. And it's something people can relate to: just as in astronomy there are advantages to refractor telescopes and advantages to reflector telescopes, we're incorporating that same idea into a low-cost portable solution. This is an example of the opening of one of our shows in what I call the warped fisheye format. [video] The dead center front of the room is right around the word "full," and as you move along the bottom of the image, this is the front of the room, this is the left side of the room, this is the right side of the room, and all this back here is the back of the room. This is 16 by 9, so it can be played off a Blu-ray player or edited in standard off-the-shelf home editing software. You don't need a special format or a special aspect ratio. [video] ...energy destruction turned into it. For the most powerful events in the air, over the ocean, and even from the sun, we reserve the title Force 5. [video]

>> Tony Butterfield: When you see that in the dome, it's pretty scary. I wanted to stop right here, because this also introduces some of the other things we've been doing in Houston. In addition to astronomy, we've been expanding into almost every other science.
The most obvious is earth science, looking back at the earth. In this part we tell a whole story about how ocean currents and wind currents affect the weather, but the whole point is that the planetarium is not just for looking at the stars but also for teaching earth science and the other sciences. We've had to do this just to survive in our own institution: because it's a major institution, we have competition within our own building. We have an IMAX theater, a butterfly center, two or three exhibits, and a couple of gift shops. So we're not just trying to get visitors to come to our building; once we get them there, we're even fighting to get them to pay for a ticket to one of our shows. This is a collection of some of the movie posters that I brought with me. We have learned that because we are low man on the totem pole when it comes to marketing and budgeting, we have to go along with what the museum is doing. So if the museum gets a big exhibit, we'll produce a show that corresponds with it, to take advantage of what they're doing and ride along on that marketing train. I don't know if the Titanic exhibit has made it to museums in this part of the country, but we had the Titanic exhibit, so we made a planetarium show that went along with it. During the International Polar Year, we worked with polar scientists and glacier scientists to create a show that helped us learn about ice on our planet so we could better understand ice on other planets. That was called Ice Worlds. One of our most successful shows of all time is one of those one-hit wonders where you're just shocked it happened. It's called Earth's Wild Ride, it's now translated into 18 different languages, and it's shown around the world. Basically, it's the story of a grandfather on the moon with his grandkids, looking at the earth.
He's telling the grandkids what it's like to be on the earth, why it's green, why it's white, and the way it used to be. So it's a story, and it's been the most successful. We're making an updated sequel to it next year, and I'll talk a little bit about that. Our biggest project has been getting ready for the demise of the planet. The end of the world is coming, and so we prepared for that. [video]

>> Tony Butterfield: And yes, we do sacrifice some of the... [video] It's an intern; that's where they all go. We actually had a lot of stress over the sacrifice scene. We showed it to a lot of board members and special discussion groups. Do you show a sacrifice to kids at a museum? Well, if you're going to be truthful to the culture of the people of that time period and show what it was like back then, keeping the sacrifice in would be accurate to the context of the people. But if you've got little kids, do you really want to explain to them that they're scooping out a bleeding heart and showing it to everybody in the audience? So we are offering that show in two versions. It opened two weekends ago, and overnight it tripled our weekend attendance, so that might be our next all-time big hit. The end of the world is only going to happen in the United States. If you actually talk to the people in the know in Central America, or to Maya people, the Maya believe in time basically looping, kind of like when we go from winter to spring and things start over and bloom and blossom again. It's just a repeating cycle, one cycle after another, for them. If they were here, they'd actually be out partying and encouraging it to happen, the sooner the better. So that's the American media for you. Now I want to talk about what's going on now. With the hardware, it's been hurry up and wait. Well, now the software is finally catching up. A lot of the high-end 3-D packages now have either plug-ins or camera rigs with the built-in capability to render in a fisheye format. And more software applications are purposely including the fisheye format in their design so they can display into the dome. One of the best pieces of software we've been working with so far is the WorldWide Telescope project, with the help of Jonathan. I started working with him a couple of years ago on what it might look like, not as a desktop version but as a version for educators and planetarium operators. Since I'm a content creator, I've been bugging him like crazy for tools to make stuff really fast. I've started incorporating WorldWide Telescope into my production workflow, and already, in two shows, I've been able to quickly add content with its help. There are a couple of other software packages worth mentioning.
As I said before, there's live versus taped, or real-time versus rendered. One of the areas we're aggressively looking into on the real-time side was made possible by an affordable game engine called Unity 3D. With Unity 3D we've been able to distort the graphics to properly match the dome, and we've created an educational game that goes along with the Mayan planetarium show. Students can watch the production, learn about the people, and then, as a team, go in with assignments and task cards and explore the city of Tikal in real time. The old problem of who gets to drive has been eliminated, because you work as a team: somebody's the geologist, somebody's the biologist, somebody's the navigator, everybody has a job, and you have to find certain things on your task card. So that's how we're using Unity 3D as a game engine to quickly generate full-dome graphics. It has also allowed me to previsualize complex scenes very fast. We were able to put a placeholder scene, created entirely in Unity 3D, into our Mayan show to see if it was going to work. Once it passed inspection, we sent it off to the render farm to be ray traced and properly textured. Full-dome production still has a long way to go, and it's starting to get the attention of other film producers. Capturing a full-dome image in live action is a little challenging. There's a higher-than-HD camera called the RED, and people have tried putting a fisheye lens on the front of it. It's a huge waste of pixels, because the circular fisheye image covers only part of the rectangular sensor, and you pay a lot per pixel. One thing I did was get what's called the Ladybug, a camera system with six cameras pointing in different directions: five along the horizon and one straight up. This brings up a whole new area of cinematography challenges.
Where do you put the camera crew when the camera sees everything around it? Well, in this case I had to be the camera crew, underneath the camera. With a couple of batteries, a backpack, and my camera system, I walked around Central America, and everybody thought I worked for Google Earth or something. We have looked at it as a way to set up a scene, and we used it for the first time in the Mayan production. It's awfully expensive, and it has some shortcomings. One of the other alternatives... oh, this is a fun shot. I got up in the wee hours of the morning to watch the sunrise at this temple, and these girls walked up right in the middle of my time-lapse. So I said hi and was nice to them, but I'm hiding there under the camera, and they're like, "What's that?" So that scene is not in the show. But this is what the Ladybug captures. I poked something on this screen. If I hit it again... oh. The Ladybug, like I said, produces a 2:1 latitude-longitude image, and the only blind spot is underneath it; that's why it's black on the bottom. Once we get it out of the Ladybug format and into the production pipeline, it works well, because we can composite CGI elements in the foreground and use the footage as a background. It's a first attempt at using real-world photography in a full-dome production, and there's more to come. Just a brief note (I don't have a picture of it here): as you saw, we take a video projector and bounce an image off the mirror. I've done some testing of reversing that process, putting an HD or higher-than-HD camera in place of the projector so it captures what comes off the mirror. Because that's 16 by 9 and already in the distorted format, you could quickly edit together your show, play it back through your media player, and have the proper format. You have 30 frames per second, and it's high resolution.
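Getting footage out of a 2:1 latitude-longitude image and into a dome master is, at its core, a per-pixel remapping: for every dome master pixel, work out which direction it represents and sample the source image in that direction. The sketch below is a generic nearest-pixel lookup, not the Ladybug SDK or the museum's pipeline. It assumes an equidistant fisheye dome master (front of the room at the bottom, left side on the left) and a source whose column 0 faces the front of the room and whose top row looks straight up; all names and conventions are hypothetical.

```python
import math


def dome_to_latlong(x: float, y: float, dome_size: int,
                    src_width: int, src_height: int):
    """For a dome master pixel (x, y), return the (u, v) pixel to sample in a
    2:1 latitude-longitude source image, or None outside the dome circle.

    Assumed (hypothetical) conventions:
      * dome master: equidistant fisheye, zenith at the center, front of the
        room at the bottom, left side of the room on the left
      * source: column 0 faces the front of the room, longitude increases
        toward the right side of the room, row 0 looks straight up
    """
    half = dome_size / 2.0
    dx, dy = x - half, y - half
    r = math.hypot(dx, dy) / half          # 0 at the zenith, 1 at the dome edge
    if r > 1.0:
        return None                        # corner of the square image, off the dome
    altitude = 90.0 * (1.0 - r)            # degrees above the springline
    azimuth = math.degrees(math.atan2(dx, dy)) % 360.0  # 0 = front, 90 = right
    u = azimuth / 360.0 * src_width
    v = (90.0 - altitude) / 180.0 * src_height  # only the upper half is ever sampled
    return u, v


print(dome_to_latlong(2048, 4096, 4096, 8000, 4000))  # front edge of the dome
```

With these conventions, a hemispherical dome only ever samples the upper half of the lat-long image, so the black blind spot underneath the camera never reaches the dome.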
And I think that's a good way to go. As with any toolbox, some tools suit some scenes better than others: the fisheye format was more suitable than the mirror in some scenarios, and the Ladybug might be better in others. There's no single camera that can solve all your cinematography problems; you need a toolbox so that, as a producer, you can pick and choose what to draw upon. Here are some of the software tools being used in our current production. I've quickly come to enjoy the Microsoft ICE panorama stitching program, which takes pictures shot from roughly the same spot and stitches them together really fast. WorldWide Telescope, as I mentioned, lets us image content and, with its frame output capability, quickly get it reviewed, edited, or expanded on. Microsoft Photosynth is the... what I'm using it for: the astronauts who took pictures for us inside the space station are now taking the database of images of the outside of the space station and running it through this process. As I'm modeling and setting up the scenes, I'm able to look at the space station from different points of view that are in registration. That's helped me out a lot on my current project. Then there are a couple of other high-end panorama stitching programs, your standard Photoshop and After Effects, and I'm a LightWave fan. One of the things I really want to leave you with is the astronauts who have volunteered their time to take pictures on the space station. Their time is so choreographed that they have to get a note to go to a baseball game. When we came to them with the idea of taking a fisheye lens into orbit, taking pictures for us, and sending them back down to help teach children what it's like to be in space, it had to be on their own free time, if they wanted to do it. We started out with 100-speed film in 2007.
Since then we've been using high-resolution camera bodies. This picture on the right is actually a good shot. This is another example; it's actually a panorama in the fisheye format, but there are a couple of key astronauts in there who have taken a huge number of pictures inside and outside the space station: Mike Bear and Jeff Williams. I think Jeffrey Williams is one of the key people who did the Photosynth collection. Astronaut Scott Parazynski is on our museum board, and a few years ago, when they had the crisis in orbit where the solar panel ripped apart, he was the one who got sent out to fix it with a set of cufflinks. That was the mission on which they were going to take all of our pictures, but since they had the emergency to deal with, I ended up with 19 good ones. We were happy with 19 good ones, and from there we kept encouraging them to take more. This, on the left-hand side, is down at NASA. It's part of their training facilities that are eventually going to be given away. We took down one of our portable planetariums, like the one you see in the hallway, and let them take pictures and then look at them in the dome format. What we also figured out was that it's about the same size as what was going to be the Constellation capsule. So we started to write a grant to teach kids about the future of the space program and the Constellation spacecraft. We submitted the grant. We got awarded the grant. And then a week later NASA cancelled the Constellation program. So now we have a grant to teach about the future of space and space exploration but no vehicles to talk about. These are some more astronauts who eventually went up a couple more times with three of our fisheye lenses, and hopefully in the next week they'll take some more from the Cupola, which is the new window that looks down on the earth. This is another -- this is Nicole Stott.
She went up and was really helpful at taking pictures for us and sending them back down. But every couple of months everything changes around. So when you're trying to teach kids what it's like to be in the space station, it's always changing. Vehicles are coming. Vehicles are going. The inside is getting rearranged. I went to look for where the bathroom is, and the bathroom has moved to another part of the space station; now Robonaut is sitting where the bathroom used to be. We've finally got enough pictures to see how things have changed, before and after, and to teach the kids. So that's our next project: to teach about human space flight, going into orbit, and then beyond orbit to the moon. This is a preproduction version of a scene for our next project, going to a lunar base at the south pole of the moon. Just to remind you, when we're looking at this, the front of the room is down here, directly overhead is right here, to the left is over here, and behind you is way up here. So when we produce our content, the action goes in what I call the sweet spot, about 35 degrees up, because when you're sitting in your chair, you get a field of view of about 100, 120, or 130 degrees wide and 80 or 90 degrees tall. Now, we don't currently do stereo projection in our planetarium. Stereo projection has been done in several different ways. It's very impressive, but much like in Hollywood, the verdict is still out on whether it's worth the extra trouble to render everything twice. Some of the newest technology involves rendering 8K. That's a circular rendered image that's 8,000 pixels by 8,000 pixels, and that's a lot of rendering time, even on today's computers. Stereo means you have to have two channels, and that's like two sets of 4K, which still adds up to the same amount of render time. So it's fun to watch. It's engaging. It's impressive.
But is it really worth the extra cost to render it out, given the limited funds there are for some of these projects? It's still kind of up in the air. Most of our projects are funded in whole or in part by grants, so we have to cover certain scenes in the show that are paid for by a given grant. We end up recycling some pieces from previous shows and editing them together with new shows. Get new grants to do old stuff. So we do the most in Houston with the least amount of money. And this is our current production. That's about it for an introduction to the digital planetarium, the portable planetarium that's out in the hallway, and how we've come from a ball with holes poked in it and light shining out, to a big mechanical machine in the middle of the room, to these digital projectors that let us create these amazing images. This is one of my favorite photos that we got back from the Cupola. It's a little bright, but the fisheye lens is looking at a Soyuz spacecraft. The tail of the orbiter is off to the side. And, oh, there's another shot where they put the fisheye lens closer to the window, and then we can see it all at once. The problem is, what we wanted to do was a complete orbit of the earth with the camera, and that would mean taking maybe a frame every five seconds. But they can't take high-capacity cards into space, so they have a shoebox of eight-gig cards or so. You can't get many frames at 27 megapixels or 21 megapixels and have a worthwhile sequence, because anytime you take the card out, you bump it, and it's never the same. So we're kind of -- >>: Is there a reason? >> Tony Butterfield: X-rays. They get damaged, so they can't be flight rated. The high-capacity cards can't be flight rated because you take the chance of not getting any of your data, so it's better to get a lot of your data spread all over the place than maybe not get your data at all. >>: The technique?
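The card-swap problem can be put in rough numbers. The following is a minimal sketch under stated assumptions: an ISS orbital period of about 92 minutes, the one-frame-every-five-seconds interval and eight-gig cards mentioned in the talk, and a hypothetical file size of about 25 MB per 21-megapixel raw frame (real sizes depend on the camera and compression). The function name is hypothetical, and gigabytes are counted as 1,000 MB.

```python
import math
from typing import Tuple


def orbit_capture_budget(period_min: float, interval_s: float,
                         card_gb: float, frame_mb: float) -> Tuple[int, int, int]:
    """Return (frames needed for one orbit, frames that fit on one card, cards needed)."""
    frames_needed = math.ceil(period_min * 60 / interval_s)
    frames_per_card = int(card_gb * 1000 // frame_mb)  # decimal GB, whole frames only
    cards_needed = math.ceil(frames_needed / frames_per_card)
    return frames_needed, frames_per_card, cards_needed


if __name__ == "__main__":
    # Assumed inputs: ~92-minute orbit, 5 s interval, 8 GB cards, ~25 MB per raw frame.
    needed, per_card, cards = orbit_capture_budget(92, 5, 8, 25)
    print(f"{needed} frames per orbit, {per_card} per card, {cards} cards ({cards - 1} swaps)")
```

Under these assumptions one orbit takes 1,104 frames, but only 320 fit on a card, so the sequence spans four cards and the camera gets handled three times mid-orbit. Each swap is a chance to bump it out of registration.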
>> Tony Butterfield: The other problem, which is a big stress -- I guess it's an internal stress, because when I brought it up everybody was like, why can't you just write to the laptop hard drive? Well, NASA, like a lot of places, is divided into departments, and that's another department, so that whole concept is out of our hands. So the quest for a nice image sequence is still to come. And the problem now -- being from Houston, and living on the side of town where NASA is -- is that most everybody's been laid off. In fact, the people we worked with, the photo TV group, are no longer there. I tried to call whoever is next in command to be our contact person, and it's really hard to get somebody on the phone. So it's a little sad, in the sense that we've lost a heavy-lift vehicle, and a lot of people in Houston who thought they had a really cool job they would have forever now don't. Now they're having to look for jobs. It's really kind of put a halt to things. In the museum field, we are informal educators: it's education that's not in a classroom. We're trying to teach the kids why to take math classes, why to take science classes, and what kinds of careers there are. We're trying to give the kids a glimpse of what might yet come, and from the NASA point of view, we're kind of in limbo right now. That's a hard story to tell kids. The future of commercial space flight is promising, but they have a long learning curve, and telling that story is going to take a while. This is one of my favorite shots from the Cupola, and I'll just leave it at that. If anybody has any questions... I'm going to need a few more minutes to do some alignment, so if you want to come back and look at some of the WorldWide Telescope content in the dome, that's fine as well.
>>: Will you have a preview of the show as well that you can do for -- >> Tony Butterfield: Yeah, I have to transfer this laptop out to the portable, so it's a little bit of unplugging and plugging things in, but we can show you some really cool stuff. >>: About how long do we have? >> Tony Butterfield: Well, a long coffee break. >>: Okay, what, 15 minutes? >>: Yeah. >> Tony Butterfield: That's it. >>: Thank you. >> Jonathan Fay: Well, thank you very much, Tony. We appreciate you coming all the way out here, and we look forward to seeing the show. For those of you watching the recorded version, we're going to try to get some of these videos from the dome demos captured so they can be edited and added in. If that works out, you'll see more video after this. Thank you very much, and we'll see you guys later. [applause]