COMING OF AGE OF JOINT EVALUATIONS? ALNAP Presentation at OECD-DAC 21

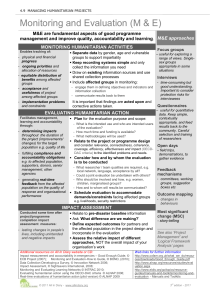

COMING OF AGE OF JOINT EVALUATIONS?
ALNAP Presentation at OECD-DAC, 21st January 2008

Agenda
- Background
- Findings
- Future Agenda for Humanitarian JEs
- Other relevant ALNAP work

Background
- ALNAP is a network of all the major humanitarian actors
- Now in its tenth year
- Works to improve humanitarian performance through learning and accountability
- Range of products and outputs, including a meta-evaluation of humanitarian evaluations: a periodic review of a sample of evaluation reports against a Quality Proforma, which has been developed according to accepted evaluation good practice
- Systematic use of the Proforma over a number of years has made it possible to identify trends in evaluation quality over time

The ALNAP meta-evaluation series
- Meta-evaluation is defined as “meta-analysis and other forms of systematic synthesis of evaluations providing the information resources for a continuous improvement of evaluation practice”
- The overall aim of the ALNAP meta-evaluation is “...to improve evaluation practice, by identifying areas of weakness that deserve attention, and examples of good practice that can be built upon...”
- There is qualitative evidence that this aim is being met
  - E.g. the use of DAC criteria in EHA has gradually strengthened in the last few years; consultation with primary stakeholders has improved in evaluation methodology (despite still being poorly rated overall)
- In other areas there has been little or no improvement, for example attention to cross-cutting issues such as gender equality, protection and advocacy
- Where improvement in quality has been noted, it has usually happened quite gradually

Humanitarian Joint Evaluations
- In the humanitarian sector, the first significant joint evaluation was the seminal multi-agency Rwanda evaluation in 1996
  - A shorter history than in the development sector
- Subsequent JEs have usually been collaborative efforts of donor governments, but involvement is broadening to include the UN and NGOs
- The 6th ALNAP Meta Evaluation focuses on the growing number of Joint Evaluations
  - Currently being finalised; findings presented here for discussion and debate

6th ALNAP Meta Evaluation: specific objectives
- To review the quality of joint evaluation exercises, where possible comparing these with the quality of past single agency evaluations reviewed in previous ALNAP meta-evaluations
- To document in an accessible way the lessons from the growing experience of humanitarian JEs, especially examples of good practice, to feed into future joint endeavours
- Thus, to make a significant contribution to the emerging body of knowledge about humanitarian JEs

Meta Evaluation Methodology
- The methodology used was comparable to previous meta-evaluations, based on a sample of 18 evaluation reports
- The quality of the evaluation reports has been assessed against the ALNAP Quality Proforma (slightly adapted to be appropriate to JEs): http://www.alnap.org/resources/quality_proforma.htm
- The evaluation processes have been reviewed through individual and group interviews with those involved in the JEs, iterating between these two methods
- Interviews have been carried out with representatives from 15 different organisations as well as lead / central evaluators
- The data from the assessment against the Proforma was analysed and compared with the results from previous ALNAP meta-evaluations, which have covered a total of 138 evaluations

Typology of JEs (adapted from DAC, 2005)
- ‘Like-minded agencies’ (or qualified): agencies with similar characteristics coming together
  - WFP/ UNHCR pilot food distribution (UN agencies operating to an MOU)
  - All ECB evaluations (group of NGOs); DEC evaluations (group of NGOs); IASC RTEs (UN agencies)
  - Most common
- ‘Partnership’: donor and recipient agencies evaluate together as equal partners
  - ECHO/ WHO/ DFID JE: WHO emergency response, Pakistan
- ‘Hybrid multi-partner’: disparate actors coming together playing variable roles (e.g. active/ passive)
  - IHE evaluations (comprising UN agencies, NGOs, academics, recipient government etc.)
- ‘System-wide’: open to all actors in the system
  - TEC

Agenda
- Background
- Findings
- A Future Agenda for Humanitarian JEs
- Other relevant ALNAP work

Ten Hypotheses to be tested
Humanitarian Joint Evaluations...
1. help to build trust and social capital within the sector
2. tend to be driven more from the centre (i.e. headquarters) than the field
3. do not sufficiently involve the government of the area affected by the humanitarian crisis
4. offer greater opportunity for beneficiaries to be consulted/ surveyed than in single agency evaluations
5. have more rigorous methodologies than single agency evaluations
6. pay more attention to international standards and guidelines than single agency evaluations
7. are stronger on cross-cutting issues such as gender and protection than single agency evaluations
8. tend to be of higher overall quality than single agency evaluations
9. are more likely to address both policy issues and programme performance than single agency evaluations
10. pay attention to wider debates within the humanitarian sector, and situate their findings accordingly

Findings in relation to hypotheses (1)
- JEs are no longer solely the domain of donor governments, the early champions of JEs
  - UN agencies and some NGOs are now fully engaged
  - It is still early days, and some efforts to promote and institutionalise a JE approach have come and gone, despite evidence that JEs help to build trust and social capital amongst the participating organisations (hypothesis 1)
- JEs have so far been northern and headquarters-driven (hypothesis 2)
  - Reflecting the set-up of international humanitarian agencies
- Real progress is needed in fully involving stakeholders in-country: national NGOs, other organisations and governments
  - Generally poorly represented (hypothesis 3)
  - Involving the latter will be easier in natural disasters than in conflict-related humanitarian crises, especially if government is an active party in the conflict
- There may be important lessons for the humanitarian sector from JEs on the development side, from work done by DAC to strengthen developing country participation and from the Paris Declaration on Aid Effectiveness

Findings in relation to hypotheses (2)
- There is conclusive evidence that JEs are overall of higher quality than single agency evaluations (hypothesis 8)
  - their terms of reference are generally clearer and more useable
  - consultation with local populations and beneficiaries is stronger (hypothesis 4)
  - more attention is paid to international standards (hypothesis 6), and the OECD-DAC EHA criteria are more rigorously used
- The hypothesis that JEs have more rigorous methodologies than single agency evaluations is proven, but not across the board (hypothesis 5)
- There are striking gaps and weaknesses in the JEs reviewed, especially their attention to cross-cutting issues such as gender equality, protection and advocacy (hypothesis 7)
- The hypotheses that JEs are more likely to address policy issues and locate their findings within wider debates within the sector met with a mixed response (hypotheses 9 and 10)
  - There is some evidence of this (e.g. UN-led humanitarian reform processes), but also missed opportunities: a number of JEs in our sample had not fulfilled this potential, despite their generally high quality

Broader findings: most JEs of humanitarian action are multi-sectoral and focus on a particular humanitarian crisis
Focus or scope of evaluation:
- Program focus
- Institutional
- Sectoral or thematic focus
- Multi-sectoral focus, related to a particular humanitarian crisis (usually bounded geographically)
- Global (e.g. global policy)
How actors work together:
- ‘Partnership’: donor & recipient agencies evaluate together as equal partners
  - ECHO/ WHO/ DFID JE: WHO emergency response, Pakistan
- ‘Like-minded agencies’ (or qualified): agencies with similar characteristics coming together
  - WFP/ UNHCR pilot food distribution (UN agencies operating to an MOU)
  - All ECB evaluations (group of NGOs); DEC evaluations (group of NGOs); IASC RTEs (UN agencies)
- ‘Hybrid multi-partner’: disparate actors coming together playing variable roles (e.g. active/ passive)
  - IHE evaluations (comprising UN agencies, NGOs, academics, recipient government etc.)
- ‘System-wide’: open to all actors in the system
  - TEC evaluation

Broader findings: Purpose of JEs
- The ToR for most JEs in the sample emphasise both accountability and learning as their purpose
  - In practice learning has dominated, ranging from learning about partners’ approaches, to sharing good practice, to learning about the programme or initiative being evaluated
  - Both the TEC and ECB clearly identified another learning purpose: to learn from the process of implementing a joint evaluation, and build evaluation capacity
- Accountability is partially met by JE reports ending up in the public domain
- Peer accountability is also strong in JEs, which usually demand a higher level of transparency than single agency evaluations
- Other purposes of JEs include: building evaluation capacity, learning about the process of doing JEs, and relationship-building between participating agencies

Broader findings: JE skill set
- JEs require a different skill set than single agency evaluations
  - technical, political and inter-personal skills
- Getting the right evaluation team in place is key
  - the pool of sufficiently skilled evaluators for JEs is small compared with demand, implying a need to invest in evaluator capacity
- For policy-focussed evaluations, there are benefits in hiring policy analysts to lead or be part of the team

Broader findings: Follow-up & utilisation
- JE reports are generally more accessible than single agency evaluation reports
  - Possibly because of the higher skill set of evaluation team leaders
  - Use of professional report editors may play a role
- Conclusions are slightly stronger than in evaluations in previous years, but there is little difference in the quality of recommendations
  - the strongest sets of recommendations were those targeted to individual agencies or where responsibility was clearly indicated
  - the weakest were those where there were too many recommendations and/ or they were inadequately focussed
- Utilisation-focus is more challenging for JEs because of the range of stakeholders involved with different needs
  - a weak link in the chain
  - there are examples of good practice in terms of how the process is designed at the outset and how the evaluation team engages with stakeholders, especially in-country
- When JEs are part of a wider institutional framework/ relationship, there tend to be better-established mechanisms for discussion and follow-up to recommendations

Broader findings: coherence with single agency evaluations
- “Should JEs replace or reduce the need for single agency evaluations?”
  - An active but distracting debate: “they are very different animals” with different purposes
- JEs can fulfil accountability purposes, but at a different level from the needs of a single agency
  - Accountability to peers, and to some extent to beneficiaries through stronger consultation, are features of a number of the JEs in our set
  - But if individual agencies need to be accountable to their funders in any detail, a JE may not fulfil this need
- JEs clearly complement single agency evaluations by placing the response in the bigger picture/ wider context, exploring how agencies work together, and addressing wider policy issues
- When a “club” group of like-minded agencies come together to evaluate their work in a particular area (e.g. ECB / UN), reducing the number of evaluation teams on the ground asking very similar questions of local communities, government officers and others is clearly a good thing
- Fewer but more considered JEs of this type may facilitate follow-up by reducing the overload on the humanitarian sector

Agenda
- Background
- Findings
- A future agenda
- Follow up ALNAP work

Where next for humanitarian JEs?
- A project that analyses and describes the pros and cons of different collaborative management structures, to guide decision-makers in their future choices
- An action research project exploring different and creative ways of consulting beneficiaries
- JEs in some thematic and policy areas in the humanitarian sector that are relatively new and/ or challenging to the international system, e.g. protection as part of humanitarian action, or livelihood support in the midst of a humanitarian crisis
- JEs should play a more active role in agency evaluation policies, based on a clear understanding of the relative costs and benefits of different types of JEs

Where next for humanitarian JEs? A planned system-wide JE
- A 3rd system-wide humanitarian evaluation should be considered in the next 18 months, focussed on a significant but relatively forgotten/ under-evaluated humanitarian crisis, for example in eastern DRC
- The sector has much to learn from such an exercise, yet there would be less pressure to act fast at the expense of process, and it would provide an opportunity to apply the learnings from the TEC whilst they are still fresh
- This proposal should be discussed by the ALNAP membership and the wider aid community

Agenda
- Background
- Findings
- Future Agenda for Humanitarian JEs
- Follow up ALNAP work

ALNAP follow-up work of relevance to the OECD-DAC Evaluation Network
- Workshop on Humanitarian JEs (2nd half 2008)
- ALNAP Guide to Real Time Evaluation: end of March 2008
- Humanitarian Evaluation Trends and Issues: initiating case-study-based research of humanitarian evaluation; background study nearing completion
- 7th ALNAP Meta Evaluation (2009) on RTEs

Thank you!
- 6th ALNAP Meta Evaluation: available in March / April
- Please get in touch for copies
- Ben Ramalingam, b.ramalingam@alnap.org
- www.alnap.org