Intro of digital image processing Lecture 5a


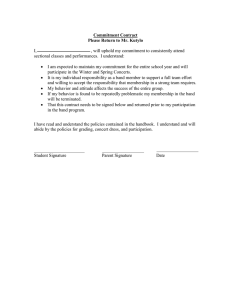

Remote Sensing Raster (Matrix) Data Format

The digital number (brightness value) of column 5, row 4 in band 2 is expressed as BV5,4,2 = 105.

Example image (4 bands, 5 columns × 4 rows; each cell lists columns 1 to 5):

| Row | Band 1 | Band 2 | Band 3 | Band 4 |
|---|---|---|---|---|
| 1 | 10 15 17 20 21 | 20 50 50 90 90 | 120 150 100 120 103 | 210 250 250 190 245 |
| 2 | 15 16 18 21 23 | 76 66 55 45 120 | 176 166 155 85 150 | 156 166 155 415 220 |
| 3 | 17 18 20 22 22 | 80 80 60 70 150 | 85 80 70 77 135 | 180 180 160 170 200 |
| 4 | 18 20 22 24 25 | 100 93 97 101 105 | 103 90 70 120 133 | 200 0 123 222 215 |

Matrix notation for band 2: BV1,1,2 (column 1, row 1) through BV5,4,2 (column 5, row 4).

Image file formats

BSQ (Band Sequential Format): each line of the data is followed immediately by the next line in the same spectral band. This format is optimal for spatial (X, Y) access of any part of a single spectral band. Good for multispectral images.

BIP (Band Interleaved by Pixel Format): the first pixel for all bands in sequential order, followed by the second pixel for all bands, followed by the third pixel for all bands, etc., interleaved up to the number of pixels. This format provides optimum performance for spectral (Z) access of the image data. Good for hyperspectral images.

BIL (Band Interleaved by Line Format): the first line of the first band, followed by the first line of the second band, followed by the first line of the third band, interleaved up to the number of bands. Subsequent lines for each band are interleaved in similar fashion. This format provides a compromise in performance between spatial and spectral processing and is the recommended file format for most ENVI processing tasks. Good for images with 20-60 bands.

Written out in each format, the example image begins:
- BSQ: 10 15 17 20 21 15 16 18 21 23 ... (all of band 1, then all of band 2, and so on)
- BIL: 10 15 17 20 21 20 50 50 90 90 120 150 100 120 103 210 250 250 190 245 15 16 18 21 23 ... (row 1 of each band, then row 2 of each band, and so on)
- BIP: 10 20 120 210 15 50 150 250 17 50 100 250 ... (all four bands of each pixel in turn)

Band sequential (BSQ) format stores information for the image one band at a time. In other words, data for all pixels for band 1 are stored first, then data for all pixels for band 2, and so on. In the index notation used here (c = column, r = row, b = band), the first index varies fastest in the file. Value = image(c, r, b).

Band interleaved by pixel (BIP) data is similar to BIL data, except that the data for each pixel are written band by band. For example, with a three-band image, the data for bands 1, 2, and 3 are written for the first pixel in column 1; the data for bands 1, 2, and 3 are written for the first pixel in column 2; and so on. Value = image(b, c, r).

Band interleaved by line (BIL) data stores pixel information band by band for each line, or row, of the image.
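The three interleaving schemes can be checked on the lecture's 4-band, 5 × 4 example image. The Python sketch below is illustrative only: it assumes the image is held in memory as nested lists with one value per element and no file header, and the names `to_bsq`, `to_bil`, `to_bip`, and `offset_*` are hypothetical helpers, not ENVI or library functions.

```python
# Example image from this lecture: 4 bands x 4 rows x 5 columns.
# BANDS[b][r][c] holds the brightness value, with 0-based indices.
BANDS = [
    [[10, 15, 17, 20, 21], [15, 16, 18, 21, 23],
     [17, 18, 20, 22, 22], [18, 20, 22, 24, 25]],
    [[20, 50, 50, 90, 90], [76, 66, 55, 45, 120],
     [80, 80, 60, 70, 150], [100, 93, 97, 101, 105]],
    [[120, 150, 100, 120, 103], [176, 166, 155, 85, 150],
     [85, 80, 70, 77, 135], [103, 90, 70, 120, 133]],
    [[210, 250, 250, 190, 245], [156, 166, 155, 415, 220],
     [180, 180, 160, 170, 200], [200, 0, 123, 222, 215]],
]
NB, NR, NC = len(BANDS), len(BANDS[0]), len(BANDS[0][0])  # bands, rows, columns


def to_bsq(bands):
    """BSQ: every row of band 1, then every row of band 2, and so on."""
    return [v for band in bands for row in band for v in row]


def to_bil(bands):
    """BIL: row 1 of each band in turn, then row 2 of each band, and so on."""
    return [v for r in range(NR) for band in bands for v in band[r]]


def to_bip(bands):
    """BIP: all band values of pixel (1, 1), then of pixel (2, 1), and so on."""
    return [band[r][c] for r in range(NR) for c in range(NC) for band in bands]


# Position of BV(c, r, b) in each flat stream; c, r, b are 1-based like BV_{c,r,b}.
def offset_bsq(c, r, b):
    return ((b - 1) * NR + (r - 1)) * NC + (c - 1)


def offset_bil(c, r, b):
    return ((r - 1) * NB + (b - 1)) * NC + (c - 1)


def offset_bip(c, r, b):
    return ((r - 1) * NC + (c - 1)) * NB + (b - 1)


for name, stream, offset in [("BSQ", to_bsq(BANDS), offset_bsq),
                             ("BIL", to_bil(BANDS), offset_bil),
                             ("BIP", to_bip(BANDS), offset_bip)]:
    # Same pixel, different position in the stream for each layout.
    print(name, stream[:8], "BV(5,4,2) =", stream[offset(5, 4, 2)])
```

Each offset formula mirrors the Value = image(...) notation: in BSQ the column index varies fastest and the band index slowest, BIL places the band index between column and row, and BIP makes the band index vary fastest. All three layouts locate the same value, BV5,4,2 = 105, at different positions in the stream.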
In BIL format, for example, given a three-band image, all three bands of data are written for row 1, all three bands of data are written for row 2, and so on, until the total number of rows in the image is reached. Value = image(c, b, r).

What is image processing

Image processing is enhancing an image or extracting information or features from an image: computerized routines for information extraction (e.g., pattern recognition, classification) from remotely sensed images to obtain categories of information about specific features, and many more.

Image processing includes:
- Image quality and statistical evaluation
- Radiometric correction
- Geometric correction
- Image enhancement and sharpening
- Image classification (pixel-based and object-oriented)
- Accuracy assessment of classification
- Post-classification and GIS
- Change detection

GEO5083: Remote Sensing Image Processing and Analysis, spring 2012

Image Quality

Many remote sensing datasets contain high-quality, accurate data. Unfortunately, error (or noise) is sometimes introduced into the remote sensor data by:
- the environment (e.g., atmospheric scattering, clouds),
- random or systematic malfunction of the remote sensing system (e.g., an uncalibrated detector creates striping), or
- improper pre-processing of the remote sensor data prior to actual data analysis (e.g., inaccurate analog-to-digital conversion).

[Figures: clouds in a MODIS true-color image; clouds in ETM+; striping noise and its removal with CPCA (Combined Principal Component Analysis), Xie et al. 2004; speckle noise (blurred objects and boundaries) and its removal with the G-MAP (Gamma Maximum A Posteriori) filter.]

Univariate descriptive image statistics

The mode is the value that occurs most frequently in a distribution and is usually the highest point on the curve (histogram). It is common, however, to encounter more than one mode in a remote sensing dataset. The median is the value midway in the frequency distribution.
One-half of the area below the distribution curve is to the right of the median, and one-half is to the left. The mean is the arithmetic average and is defined as the sum of all brightness value observations divided by the number of observations:

$$\mu_k = \frac{\sum_{i=1}^{n} BV_{ik}}{n}$$

Other univariate statistics: n, minimum, maximum, variance, standard deviation, coefficient of variation (CV), skewness, and kurtosis (moment statistics).

$$\text{var}_k = \frac{\sum_{i=1}^{n} \left(BV_{ik} - \mu_k\right)^2}{n-1}, \qquad s_k = \sqrt{\text{var}_k}, \qquad CV_k = \frac{s_k}{\mu_k}$$

Multivariate Image Statistics

Remote sensing research is often concerned with measuring how much radiant flux is reflected or emitted from an object in more than one band. It is useful to compute multivariate statistical measures such as covariance and correlation among the several bands to determine how the measurements covary. Variance-covariance and correlation matrices are used in remote sensing principal components analysis (PCA), feature selection, classification, and accuracy assessment.

Covariance

The different remote-sensing-derived spectral measurements for each pixel often change together in some predictable fashion. If there is no relationship between the brightness value in one band and that of another for a given pixel, the values are mutually independent; that is, an increase or decrease in one band's brightness value is not accompanied by a predictable change in another band's brightness value. Because spectral measurements of individual pixels may not be independent, some measure of their mutual interaction is needed. This measure, called the covariance, is the joint variation of two variables about their common mean. It is computed from the sum of products $SP_{kl}$:

$$SP_{kl} = \sum_{i=1}^{n} BV_{ik} BV_{il} - \frac{\left(\sum_{i=1}^{n} BV_{ik}\right)\left(\sum_{i=1}^{n} BV_{il}\right)}{n}, \qquad \text{cov}_{kl} = \frac{SP_{kl}}{n-1}$$

Correlation

To estimate the degree of interrelation between variables in a manner not influenced by measurement units, the correlation coefficient is commonly used.
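The univariate and covariance formulas can be checked numerically on the five-pixel, four-band example used in this lecture (pixels (1,1) to (1,5)). This is a minimal pure-Python sketch; the function names are hypothetical, and `correlation` applies the ratio $r_{kl} = \text{cov}_{kl} / (s_k s_l)$ defined in the correlation discussion.

```python
import math

# Five example pixels from this lecture, one list of brightness values per band.
BAND = {
    1: [130, 165, 100, 135, 145],  # green
    2: [57, 35, 25, 50, 65],       # red
    3: [180, 215, 135, 200, 205],  # near-infrared
    4: [205, 255, 195, 220, 235],  # near-infrared
}


def mean(bv):
    """mu_k: sum of brightness values divided by n."""
    return sum(bv) / len(bv)


def variance(bv):
    """var_k: squared deviations from the mean, divided by n - 1."""
    mu = mean(bv)
    return sum((x - mu) ** 2 for x in bv) / (len(bv) - 1)


def std_dev(bv):
    """s_k: square root of the sample variance."""
    return math.sqrt(variance(bv))


def coeff_of_variation(bv):
    """CV_k = s_k / mu_k."""
    return std_dev(bv) / mean(bv)


def sum_of_products(bk, bl):
    """SP_kl = sum(BV_ik * BV_il) - sum(BV_ik) * sum(BV_il) / n."""
    n = len(bk)
    return sum(x * y for x, y in zip(bk, bl)) - sum(bk) * sum(bl) / n


def covariance(bk, bl):
    """cov_kl = SP_kl / (n - 1)."""
    return sum_of_products(bk, bl) / (len(bk) - 1)


def correlation(bk, bl):
    """r_kl = cov_kl / (s_k * s_l)."""
    return covariance(bk, bl) / (std_dev(bk) * std_dev(bl))


print("SP12  =", sum_of_products(BAND[1], BAND[2]))        # 540.0
print("cov12 =", covariance(BAND[1], BAND[2]))             # 135.0
print("r12   =", round(correlation(BAND[1], BAND[2]), 2))  # 0.35
```

Calling `covariance` and `correlation` on the other band pairs gives the remaining entries of the variance-covariance and correlation matrices.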
The correlation between two bands of remotely sensed data, $r_{kl}$, is the ratio of their covariance ($\text{cov}_{kl}$) to the product of their standard deviations ($s_k s_l$); thus:

$$r_{kl} = \frac{\text{cov}_{kl}}{s_k s_l}$$

If we square the correlation coefficient ($r_{kl}$), we obtain the sample coefficient of determination ($r^2$), which expresses the proportion of the total variation in the values of "band l" that can be accounted for or explained by a linear relationship with the values of the random variable "band k". Thus a correlation coefficient ($r_{kl}$) of 0.70 results in an $r^2$ value of 0.49, meaning that 49% of the total variation of the values of "band l" in the sample is accounted for by a linear relationship with values of "band k".

Example

| Pixel | Band 1 (green) | Band 2 (red) | Band 3 (NIR) | Band 4 (NIR) |
|---|---|---|---|---|
| (1,1) | 130 | 57 | 180 | 205 |
| (1,2) | 165 | 35 | 215 | 255 |
| (1,3) | 100 | 25 | 135 | 195 |
| (1,4) | 135 | 50 | 200 | 220 |
| (1,5) | 145 | 65 | 205 | 235 |

Computing the covariance between bands 1 and 2:

| Band 1 | Band 1 × Band 2 | Band 2 |
|---|---|---|
| 130 | 7,410 | 57 |
| 165 | 5,775 | 35 |
| 100 | 2,500 | 25 |
| 135 | 6,750 | 50 |
| 145 | 9,425 | 65 |
| Σ = 675 | Σ = 31,860 | Σ = 232 |

$$SP_{12} = 31{,}860 - \frac{(675)(232)}{5} = 540, \qquad \text{cov}_{12} = \frac{540}{4} = 135$$

Univariate statistics

| Statistic | Band 1 | Band 2 | Band 3 | Band 4 |
|---|---|---|---|---|
| Mean ($\mu_k$) | 135 | 46.40 | 187 | 222 |
| Variance ($\text{var}_k$) | 562.50 | 264.80 | 1007.50 | 570 |
| Standard deviation ($s_k$) | 23.72 | 16.27 | 31.74 | 23.87 |
| Minimum ($\min_k$) | 100 | 25 | 135 | 195 |
| Maximum ($\max_k$) | 165 | 65 | 215 | 255 |
| Range ($BV_r$) | 65 | 40 | 80 | 60 |

Variance-covariance matrix

| Covariance | Band 1 | Band 2 | Band 3 | Band 4 |
|---|---|---|---|---|
| Band 1 | 562.50 | | | |
| Band 2 | 135 | 264.80 | | |
| Band 3 | 718.75 | 275.25 | 1007.50 | |
| Band 4 | 537.50 | 64 | 663.75 | 570 |

Correlation coefficient matrix

| Correlation | Band 1 | Band 2 | Band 3 | Band 4 |
|---|---|---|---|---|
| Band 1 | - | | | |
| Band 2 | 0.35 | - | | |
| Band 3 | 0.95 | 0.53 | - | |
| Band 4 | 0.95 | 0.16 | 0.88 | - |

Types of radiometric correction
- Detector error or sensor error (internal error)
- Atmospheric error (external error)
- Topographic error (external error)

Atmospheric correction

[Figure: Various paths of satellite-received radiance.]

There are several ways to atmospherically correct remotely sensed data. Some are relatively straightforward, while others are complex, being founded on physical principles and requiring a significant amount of information to function properly.
This discussion will focus on two major types of atmospheric correction: absolute atmospheric correction and relative atmospheric correction.

[Figure: radiance paths through the atmosphere (scattering, absorption, refraction, reflection), showing solar irradiance E0, diffuse sky irradiance Ed, path radiance Lp, reflectance from the study area and from a neighboring area, and total radiance LS reaching the remote sensor detector; the atmosphere is drawn as about 60 miles (100 km) thick.]

Absolute atmospheric correction

Solar radiation is largely unaffected as it travels through the vacuum of space. When it interacts with the Earth's atmosphere, however, it is selectively scattered and absorbed. The sum of these two forms of energy loss is called atmospheric attenuation. Atmospheric attenuation may (1) make it difficult to relate handheld in situ spectroradiometer measurements to remote measurements, (2) make it difficult to extend spectral signatures through space and time, and (3) affect classification accuracy within a scene if atmospheric attenuation varies significantly throughout the image.

The general goal of absolute radiometric correction is to turn the digital brightness values (DNs) recorded by a remote sensing system into scaled surface reflectance values. These values can then be compared or used in conjunction with scaled surface reflectance values obtained anywhere else on the planet.

[Figure: (a) image containing substantial haze prior to atmospheric correction; (b) image after atmospheric correction using ATCOR (courtesy Leica Geosystems and DLR, the German Aerospace Centre).]
Relative radiometric correction

When the data required for absolute radiometric correction are not available, we can perform relative radiometric correction instead. Relative radiometric correction may take the form of:
- single-image normalization using histogram adjustment, or
- multiple-date image normalization using regression.

Single-image normalization using histogram adjustment

The method is based on the fact that infrared data (>0.7 μm) are largely free of atmospheric scattering effects, whereas the visible region (0.4-0.7 μm) is strongly influenced by them. Use Dark Subtract (ENVI) to apply atmospheric scattering corrections to the image data. The digital number to subtract from each band can be the band minimum, an average based on a user-defined region of interest, or a specific value.

[Figure: Dark Subtract using band minimum.]

Topographic correction

Topographic slope and aspect also introduce radiometric distortion (for example, areas in shadow). The goal of a slope-aspect correction is to remove topographically induced illumination variation so that two objects having the same reflectance properties show the same brightness value (DN) in the image despite their different orientations to the Sun. The correction is based on a DEM and the sun elevation.

Concepts of geometric correction
- Geocoding: geographical referencing.
- Registration: geographical or non-geographical (no coordinate system).

Image-to-map (or ground) geocorrection: the correction of digital images to ground coordinates using ground control points (GCPs) collected from maps (topographic maps, DLG) or ground GPS points.

Image-to-image geocorrection: matching the coordinate systems, or the column and row systems, of two digital images, with one image acting as the reference image and the other as the image to be rectified.

Spatial interpolation: from input position to output position or coordinates.
Spatial interpolation methods: RST (rotation, scale, and translation), polynomial, and triangulation.

Root mean square (RMS) error: the error term used to determine the accuracy of the transformation from one system to another. It is the difference between the desired output coordinate for a GCP and the actual output coordinate.

Intensity (pixel value) interpolation, also called resampling: the process of interpolating data values to a new grid. It is the step in rectifying an image that calculates pixel values for the rectified grid from the original data grid. Methods: nearest neighbor, bilinear, and cubic.

Image enhancement: image reduction, image magnification, transect extraction, contrast adjustments (linear and non-linear), band ratioing, spatial filtering, Fourier transformations, principal components analysis, texture transformations, and image sharpening.

Purposes of image classification: land use and land cover (LULC), vegetation types, geologic terrains, mineral exploration, alteration mapping, etc.

What is image classification or pattern recognition

Image classification is a process of classifying multispectral (hyperspectral) images into patterns of varying gray or assigned colors that represent either:
- clusters of statistically different sets of multiband data, some of which can be correlated with separable classes/features/materials (this is the result of unsupervised classification), or
- numerical discriminators composed of these sets of data that have been grouped and specified by associating each with a particular class whose identity is known independently and which has representative areas (training sites) within the image where that class is located (this is the result of supervised classification).

Spectral classes are those that are inherent in the remote sensor data and must be identified and then labeled by the analyst. Information classes are those that human beings define.
Unsupervised classification: the computer or algorithm automatically groups pixels with similar spectral characteristics (means, standard deviations, covariance matrices, correlation matrices, etc.) into unique clusters according to some statistically determined criteria. The analyst then relabels and combines the spectral clusters into information classes.

Supervised classification: areas known a priori through a combination of fieldwork, map analysis, and personal experience are identified as training sites; the spectral characteristics of these sites are used to train the classification algorithm for eventual land-cover mapping of the remainder of the image. Every pixel both within and outside the training sites is then evaluated and assigned to the class of which it has the highest likelihood of being a member.

Hard vs. fuzzy classification

Supervised and unsupervised classification algorithms typically use hard classification logic to produce a classification map that consists of hard, discrete categories (e.g., forest, agriculture). Conversely, it is also possible to use fuzzy set classification logic, which takes into account the heterogeneous and imprecise nature of the real world (mixed pixels). The output is the proportion of each of the m classes within a pixel (e.g., 10% bare soil, 10% shrub, 80% forest). Fuzzy classification schemes are not currently standardized.

Pixel-based vs. object-oriented classification

In the past, most digital image classification was based on processing the entire scene pixel by pixel. This is commonly referred to as per-pixel (pixel-based) classification. Object-oriented classification techniques allow the analyst to decompose the scene into many relatively homogeneous image objects (referred to as patches or segments) using a multi-resolution image segmentation process. The various statistical characteristics of these homogeneous image objects in the scene are then subjected to traditional statistical or fuzzy logic classification.
Object-oriented classification based on image segmentation is often used for the analysis of high-spatial-resolution imagery (e.g., 1 × 1 m Space Imaging IKONOS and 0.61 × 0.61 m DigitalGlobe QuickBird).

Unsupervised classification

Unsupervised classification uses statistical techniques and iterative procedures to group n-dimensional data into their natural spectral clusters; certain clusters are then labeled as specific information classes.

K-means and ISODATA

For the first iteration, arbitrary starting values (i.e., the cluster properties) have to be selected. These initial values can influence the outcome of the classification. In general, both methods proceed as follows:
1. Assign arbitrary initial cluster values.
2. Classify each pixel to the closest cluster.
3. Calculate the new cluster mean vectors based on all the pixels in each cluster.

Steps 2 and 3 are repeated until the "change" between iterations is small. The "change" can be defined in several ways: either by measuring how far the mean cluster vectors have moved from one iteration to the next, or by the percentage of pixels that have changed clusters between iterations.

The ISODATA algorithm adds further refinements by splitting and merging clusters. Clusters are merged if either the number of members (pixels) in a cluster is less than a certain threshold or the centers of two clusters are closer than a certain threshold. A cluster is split into two clusters if its standard deviation exceeds a predefined value and its number of members (pixels) is twice the threshold for the minimum number of members.

Supervised classification: training site selection

Training sites are selected based on areas known a priori through a combination of fieldwork, map analysis, and personal experience, by on-screen selection of polygonal training data (ROIs) and/or on-screen seeding of training data (ENVI does not have this; ERDAS IMAGINE does).
The seed program begins at a single x, y location and evaluates neighboring pixel values in all bands of interest. Using criteria specified by the analyst, the seed algorithm expands outward like an amoeba as long as it finds pixels with spectral characteristics similar to the original seed pixel. This is a very effective way of collecting homogeneous training information. Training data can also come from a spectral library of field measurements.

[Figure: selecting ROIs for alfalfa, cotton, grass, and fallow.]

Supervised classification methods

Various supervised classification algorithms may be used to assign an unknown pixel to one of m possible classes. The choice of a particular classifier or decision rule depends on the nature of the input data and the desired output. Parametric classification algorithms assume that the observed measurement vectors Xc obtained for each class in each spectral band during the training phase of the supervised classification are Gaussian; that is, they are normally distributed. Nonparametric classification algorithms make no such assumption. Several widely adopted nonparametric classification algorithms include one-dimensional density slicing, parallelepiped, minimum distance, nearest neighbor, neural network, and expert system analysis. The most widely adopted parametric classification algorithm is maximum likelihood.

Hyperspectral classification methods: Binary Encoding, Spectral Angle Mapper, Matched Filtering, Spectral Feature Fitting, and Linear Spectral Unmixing.

[Figure: supervised classification method: Spectral Feature Fitting. Source: http://popo.jpl.nasa.gov/html/data.html]

Accuracy assessment of classification

Remote-sensing-derived thematic information is becoming increasingly important. Unfortunately, it contains errors.
Errors come from five sources:
1. Geometric error remaining after correction.
2. Imperfect atmospheric correction (no atmospheric correction is perfect).
3. Clusters incorrectly labeled after unsupervised classification.
4. Training sites incorrectly labeled before supervised classification.
5. Imperfect classification methods (no classification method is perfect).

We should identify the sources of error, minimize them, perform an accuracy assessment, and create metadata before the results are used in scientific investigations and policy decisions. We usually need GIS layers to assist our classification.

Post-classification and GIS

Tools for cleaning up salt-and-pepper classification results and moving them into GIS: Majority/Minority Analysis, Clump Classes, Morphology Filters, Sieve Classes, Combine Classes, and Classification to Vector (GIS).

Change detection

Change detection involves the use of multi-temporal datasets to discriminate areas of land cover change between dates of imaging. Ideally, it requires:
- the same or a similar sensor, resolution, viewing geometry, spectral bands, radiometric resolution, and acquisition time of day, with anniversary dates;
- accurate spatial registration (less than 0.5 pixel error).

Methods:
- independently classify and register the images, then compare them;
- classification of combined multi-temporal datasets;
- principal components analysis of combined multi-temporal datasets;
- image differencing (subtracting), which needs a change/no-change threshold; change areas will be in the tails of the histogram distribution;
- image ratioing (dividing), which likewise needs a change/no-change threshold; change areas will be in the tails of the histogram distribution;
- change vector analysis;
- delta transformation.

Example: stages of development, Sun City – Hilton Head (images from 1974, 1994, and 1996). Urban area grew from 1,040 hectares in 1974 to 3,263 hectares in 1994, an increase of (3,263 − 1,040) / 1,040 ≈ 214%; the 1994 urban area is about 3.1 times that of 1974.
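Image differencing, one of the change detection methods listed above, can be sketched as follows: subtract the co-registered date-1 image from the date-2 image, then flag pixels that fall in either tail of the difference histogram. The threshold rule used here (mean ± k standard deviations of the difference image) and the parameter `k` are assumptions for illustration; the lecture only says that a change/no-change threshold must be found. The 3 × 3 input values are toy numbers, not data from the lecture, and the function names are hypothetical.

```python
import statistics


def difference_image(date1, date2):
    """Pixel-wise difference date2 - date1 for two co-registered single-band images."""
    return [[b - a for a, b in zip(r1, r2)] for r1, r2 in zip(date1, date2)]


def change_mask(date1, date2, k=1.0):
    """Flag pixels whose difference lies in either tail of the difference histogram.

    Assumed rule: a pixel is "change" if |diff - mean| > k * std, where k is a
    hypothetical, analyst-chosen parameter.
    """
    diff = difference_image(date1, date2)
    values = [v for row in diff for v in row]
    mu = statistics.mean(values)
    sigma = statistics.stdev(values)
    return [[abs(v - mu) > k * sigma for v in row] for row in diff]


# Toy 3 x 3 images (hypothetical values): only the center pixel brightens strongly.
t1 = [[50, 52, 51], [49, 50, 53], [51, 50, 52]]
t2 = [[51, 53, 50], [50, 120, 52], [52, 51, 51]]
for row in change_mask(t1, t2):
    print(row)
```

Small differences of ±1 stay below the threshold, while the center pixel lands in the upper tail and is flagged. Image ratioing would follow the same pattern with `b / a` in place of `b - a`, again with an analyst-chosen threshold.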