CAP6938 Evolving Adaptive Neural Networks Dr. Kenneth Stanley

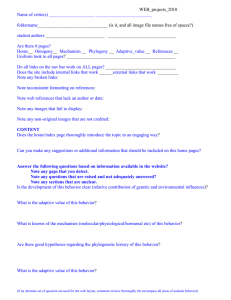

CAP6938 Neuroevolution and Artificial Embryogeny
Evolving Adaptive Neural Networks
Dr. Kenneth Stanley
March 1, 2006

Remember This Thing?
What’s missing from current neural models?

An ANN Link is a Synapse
(from Dr. George Johnson at http://www.txtwriter.com/Backgrounders/Drugaddiction/drugs1.html)

What Happens at Synapses?
• Weighted signal transmission
• But also:
  – Strengthening
  – Weakening
  – Sensitization
  – Habituation
  – Hebbian learning
  – None of these weight changes during a lifetime are happening in static models!

Why Should Weights Change?
• The world changes
• Evolution cannot predict all future possibilities
• Evolution can succeed with less accuracy
• The Baldwin Effect
  – Learning smooths the fitness landscape
  – Traits that initially require learning eventually become instinct if the environment is consistent
• If the mind is static, you can’t learn!

How Should Weights Change?
• Remember Hebbian Learning? (lecture 3)
  – Weight update based on correlation: $\Delta w_i = \alpha x_i y$
  – Incremental version: $w_i(\text{new}) = w_i(\text{old}) + \alpha x_i y$
• How can this be made to evolve?
  – Which weights should be adaptive?
    • Which rule should they follow if there is more than one?
  – Which weights should be fixed?
  – To what degree should they adapt (evolve alpha)
• Evolve alpha parameter on each link

Floreano’s Weight Update Equations
• Plain Hebb rule
• Postsynaptic rule
  – Weakens synapse if postsynaptic node fires alone
• Presynaptic rule
• Covariance rule: strengthens when correlated, weakens when not

Floreano’s Genetic Encoding

Experiment: Light-switching
Fully Recurrent Network
• Task: Go to black area to turn on light, then go to area under light
• Requires a policy change in mid-task: reconfigure weights for new policy
Blynel, J. and Floreano, D. (2002) Levels of Dynamics and Adaptive Behavior in Evolutionary Neural Controllers. In B. Hallam, D. Floreano, J. Hallam, G. Hayes, and J.-A. Meyer, editors.
From Animals to Animats 7: Proceedings of the Seventh International Conference on Simulation of Adaptive Behavior, MIT Press.

Results
• Adaptive synapse networks evolved straighter and faster trajectories
• Rapid and appropriate weight modifications occur at the moment of change

However, It’s Not That Simple
• A recurrent network with fixed synapses can change its policy too
• The activation levels cycling through the network are a kind of memory that can affect its functioning
• Do we need synaptic adaptation at all?
• Experiment in paper: Kenneth O. Stanley, Bobby D. Bryant, and Risto Miikkulainen (2003). Evolving Adaptive Neural Networks with and without Adaptive Synapses, Proceedings of the 2003 IEEE Congress on Evolutionary Computation (CEC-2003).

Experimental Domain: Dangerous Food Foraging
• Food may be poisonous or may not
• No way to tell at birth
• Only way to tell is to try one
• Then policy should depend on “pain” or not

Condensed Floreano Rules
• Two adaptation rules: one for excitatory connections, the other for inhibitory
• First term is Hebbian, second term is a decay term

NEAT Trick: Use “Traits” to Prevent Dimensionality Multiplication
• One set of rules/traits
• Each connection gene points to one of the rules
• Rules evolve in parallel with network
• Weights evolve as usual

Robot NNs

Surprising Result
• Fixed-weight recurrent networks could evolve a solution more efficiently!
• Adaptive networks found solutions, but more slowly and less reliably

Explanation
• Fixed networks evolved a “trick”: a strong inhibitory recurrent connection on the left-turn output causes it to stay on until it experiences pain.
Then it turns off and the robot spins (from the right-turn output) until it no longer sees food, and it runs to the wall
• In the adaptive network, 22% of connections diverge after pain, causing the network to spin in place: a holistic change

Discussion
• Adaptive neurons are not for everything, not even all adaptive tasks
• In non-adaptive tasks, they only add unnecessary dimensions to the search space
• In adaptive tasks, they may be best for tasks requiring holistic solutions
• What are those?
• Don’t underestimate the power of recurrence

Next Topic: Leaky Integrator Neurons, CTRNNs, and Pattern Generators
• Real neurons encode information as spikes and spike trains with differing rates
• Dendrite may integrate spike train at different rates
• Rate differences can create central pattern generators without a clock!
Levels of dynamics and adaptive behavior in evolutionary neural controllers by Blynel, J., and Floreano, D. (2002)
Evolution of Central Pattern Generators for Bipedal Walking in a Real-Time Physics Environment by Torsten Reil and Phil Husbands (2002)
Optional: Evolution and analysis of model CPGs for walking I. Dynamical modules by Chiel, H.J., Beer, R.D. and Gallagher, J.C. (1999)
Homework due 3/8/06 (see next slide)

Homework Due 3/8/06
Genetic operators all working:
• Mating two genomes: mate_multipoint, mate_multipoint_avg, others
• Compatibility measuring: return distance of two genomes from each other based on coefficients in compatibility equation and historical markings
• Structural mutations: mutate_add_link, mutate_add_node, others
• Weight/parameter mutations: mutate_link_weights, mutating other parameters
• Special mutations: mutate_link_enable_toggle (toggle enable flag), etc.
• Special restrictions: control probability of certain types of mutations such as adding a recurrent connection vs. a feedforward connection
Turn in summary, code, and examples demonstrating that all functions work.
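For the compatibility-measuring item, here is a minimal sketch, assuming the standard NEAT distance δ = c1·E/N + c2·D/N + c3·W̄ (E excess genes, D disjoint genes, W̄ mean weight difference of matching genes, N size of the larger genome). The slide names no formula beyond "compatibility equation", so the function name `compatibility`, the dict-based genome representation, and the coefficient defaults are all illustrative assumptions, not the course's reference code.

```python
def compatibility(genome_a, genome_b, c1=1.0, c2=1.0, c3=0.4):
    """Distance between two genomes (hypothetical representation).

    Each genome is a dict mapping innovation number -> connection weight.
    Coefficient defaults are placeholders, not values from the lecture.
    """
    innovs_a, innovs_b = set(genome_a), set(genome_b)
    matching = innovs_a & innovs_b
    non_matching = innovs_a ^ innovs_b

    # Excess genes lie beyond the highest innovation number of the other
    # genome; the remaining non-matching genes are disjoint.
    cutoff = min(max(innovs_a, default=0), max(innovs_b, default=0))
    excess = sum(1 for i in non_matching if i > cutoff)
    disjoint = len(non_matching) - excess

    # Mean absolute weight difference over genes shared by both genomes.
    if matching:
        w_bar = sum(abs(genome_a[i] - genome_b[i]) for i in matching) / len(matching)
    else:
        w_bar = 0.0

    n = max(len(genome_a), len(genome_b), 1)  # avoid division by zero
    return c1 * excess / n + c2 * disjoint / n + c3 * w_bar


if __name__ == "__main__":
    a = {1: 0.5, 2: -0.3, 3: 0.8}
    b = {1: 0.7, 2: -0.3, 4: 0.1, 6: 0.2, 7: 0.9}
    print(compatibility(a, b))
```

In this example genes 4, 6, and 7 are excess, gene 3 is disjoint, and the matching genes differ by 0.1 on average, so the distance comes to about 0.84 with the placeholder coefficients. Checks like these (identical genomes give 0, the result is symmetric) are the kind of evidence the homework asks for.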
Must include checks that phenotypes from genotypes that are new or altered are created properly and work.

Project Milestones (25% of grade)
• 2/6: Initial proposal and project description
• 2/15: Domain and phenotype code and examples
• 2/27: Genes and Genotype to Phenotype mapping
• 3/8: Genetic operators all working
• 3/27: Population level and main loop working
• 4/10: Final project and presentation due (75% of grade)
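Looking back at the lecture content, the incremental Hebbian rule w(new) = w(old) + αxy and the "traits" idea can be sketched together. This is a rough illustration only: it uses the plain Hebbian update rather than Floreano's rules or the condensed rules from the paper, and the names `Link`, `traits`, and `hebbian_step` are hypothetical. Each link points into a shared table of alpha values, so an alpha of 0 gives a fixed weight and a nonzero alpha gives an adaptive one.

```python
from dataclasses import dataclass


@dataclass
class Link:
    weight: float
    trait: int  # index into the shared trait table (hypothetical encoding)


def hebbian_step(links, traits, pre, post):
    """Apply w(new) = w(old) + alpha * x * y once to each link, in place.

    links:  incoming links of a single postsynaptic node
    traits: shared list of alpha values; links reference it by index
    pre:    presynaptic activations x_i, one per link
    post:   postsynaptic activation y
    """
    for link, x in zip(links, pre):
        alpha = traits[link.trait]
        link.weight += alpha * x * post


if __name__ == "__main__":
    traits = [0.0, 0.1]                    # trait 0: fixed, trait 1: adaptive
    links = [Link(0.5, 0), Link(0.5, 1)]
    hebbian_step(links, traits, pre=[1.0, 1.0], post=1.0)
    print([link.weight for link in links])
```

Because the alpha values live in one small table instead of on every connection, evolution searches over a few rule parameters plus one index per link. Plain Hebbian updates grow without bound when activity stays correlated, which is one motivation for decay terms like the one mentioned on the Condensed Floreano Rules slide.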