The role of learning in punishment, prosociality, and human uniqueness

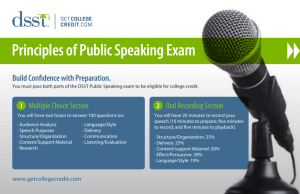
