Updates on Backward Congestion Notification

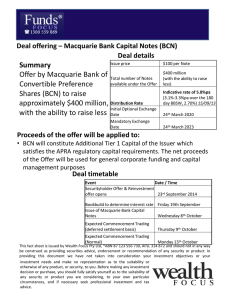

Davide Bergamasco (davide@cisco.com)
Cisco Systems, Inc.
IEEE 802 Plenary Meeting, San Francisco, USA
July 20, 2005

Agenda

• Previous presentation
  • May 2005 IEEE 802.1 Interim Meeting in Berlin, Germany
  • http://www.ieee802.org/1/files/public/docs2005/newbergamasco-backward-congestion-notification-0505.pdf
• Updates
  • Algorithm
    • Derivative to improve stability
    • Solicit Bit to accelerate recovery
    • AQM in rate limiter queues to reduce blocking
  • Simulations

Queue Stability

• ISSUE: Overshoots and undershoots accumulate over time
• SOLUTION: Signal only when
  • Q > Qeq && dQ/dt > 0
  • Q < Qeq && dQ/dt < 0
• Easy to implement in hardware: just an Up/Down counter
  • Increment @ every enqueue
  • Decrement @ every dequeue
• Reduces signaling rate by 50%!!

[Figure: queue length Q versus time t around the equilibrium level Qeq, with + and - feedback regions and the label "Stop Generation of BCN Messages"]

Solicit Bit

• ISSUE: When the rate is very low, recovery may take too long because of sampling.
• SOLUTION: Solicit Bit in RL tag
  • if R < Rsolicit, Solicit bit is set
  • if R >= Rsolicit, Solicit bit is cleared
• If possible, CP will generate a BCN+ for every frame with Solicit bit on, regardless of sampling

[Figure: rate R versus time t with the levels Rsolicit and Rmin; labels: BCN0, Random Time, BCN+1, BCN+2, BCN+2, BCN+4, Force Bit On, Force Bit Off]

Changes to Detection & Signaling

[Figure: flowchart. A queue diagram (IN, OUT) with thresholds T-4 to T+4 between FULL QUEUE and EMPTY QUEUE and EQUILIBRIUM marked. Decision boxes: "Sample Frame with Probability P", "Sampled Frame?", "RL Tagged Frame?", "RL Tag && Solicit Bit Set?", "BCN type" (-, 0, +), "dQ/dt > 0", "dQ/dt < 0?". MESSAGE TO GENERATE tables: BCN 0, BCN-4 to BCN-1, BCN+1 to BCN+4, No Message. Outcomes: Send BCN, NOP.]

Rate Limiter Queue AQM

• ISSUE: Blocking @ RL queues due to buffer exhaustion
• SOLUTION: add an AQM mechanism to control buffer usage

[Figure: EDGE NODE attached to the NETWORK CORE. Labels: F1/R1, F2/R2, Fn/Rn, No Match, Flow Control, Data IN, Tail Drop, Packets Marked with RATE_LIMITED_TAG, Data OUT, Control IN, BCN Messages from congested point]

Rate Limiter Queue AQM

• Traditional AQM schemes such as RED (mark/drop) don't work well for RL queues:
  • Buffer too small
  • Very few flows
  • Traffic statistics very different from Internet traffic
• A novel and very simple solution based on:
  • Threshold on the RL queue QAQM (e.g., 10 pkts)
  • Fixed drop or mark probability P (e.g., 1%)
  • Two counters:
    • CTCP: Number of TCP packets in the RL queue
    • CUDP: Number of UDP packets in the RL queue
• Drop or mark TCP packets with probability P when CTCP > QAQM
• Drop UDP packets when CUDP > QAQM

[Figure: RL queue with the threshold QAQM marked]

Simulation Environment (1)

[Figure: simulation topology. Labels: Core Switch, ES1-ES6, STb1-STb4, STo1-STo4, SR1, SR2, SJ, DTb, DTo, DR1, DR2, TCP Bulk, TCP On/Off, TCP Ref1, TCP Ref2, Congestion]

Simulation Environment (2)

• Short Range, High Speed DC Network
  • Link Capacity = 10 Gbps
  • Switch latency = 1 µs
  • Link Length = 100 m (0.5 µs propagation delay)
  • Control loop delay ~ 3 µs
• Workload
  1) TCP only
    • STb1-STb4: 3 parallel connections transferring 1 MB each continuously
    • STi1-STi4: 3 parallel connections transferring 1 MB then waiting 10 ms
    • SR1: 1 connection transferring 10 KB (avg 16 µs wait)
    • SR2: 1 connection transferring 10 KB (1 µs wait)
  2) 80% TCP + 20% UDP
    • STb1-STb4: same as above
    • STi1-STi4: same as above
    • SR1-SR2: same as above
    • SU1-SU4: variable length bursts with average offered load of 2 Gbps

Simulation Goals

• Study the performance of BCN with various congestion management techniques at the RL
  • No Link-level Flow Control
  • Link-level Flow Control
  • Link-level Flow Control + RL simple AQM (drop/mark)
• Metrics:
  • Throughput and Latency of TCP bulk and on/off connections
  • Throughput and Latency of Reference Flows
  • Bottleneck Link Utilization
  • Buffer Utilization

Bulk & On/Off Application Throughput & Latency (Workload 1: TCP Only)

| RL Congestion Management Mechanism | Bulk TCP Throughput (Tps) | Bulk TCP Latency (µs) | On/Off TCP Throughput (Tps) | On/Off TCP Latency (µs) | Throughput on Bottleneck Link (Gbps) |
|---|---|---|---|---|---|
| No Flow Control | 67.17 | 15,220 | 25.92 | 27,880 | 9.85 |
| Flow Control | 63.00 | 15,970 | 32.92 | 20,337 | 10.00 |
| Flow Control + RL AQM (drop) | 61.83 | 16,249 | 33.42 | 19,570 | 10.00 |
| Flow Control + RL AQM (mark) | 59.17 | 17,043 | 36.67 | 16,873 | 10.00 |

[Legend: Best, Worst]

Reference Applications Throughput & Latency (Workload 1: TCP Only)

| RL Congestion Management Mechanism | Ref1 TCP Throughput (Tps) | Ref1 TCP Latency (µs) | Ref2 TCP Throughput (Tps) | Ref2 TCP Latency (µs) |
|---|---|---|---|---|
| No Flow Control | 6702 | 132.8 | 30334 | 31.97 |
| Flow Control | 7108 | 124.30 | 31038 | 31.22 |
| Flow Control + AQM (drop) | 7210 | 122.33 | 31307 | 30.94 |
| Flow Control + AQM (mark) | 7419 | 119.21 | 31362 | 30.89 |

[Legend: Best, Worst]

Buffer Utilization: No FC

Buffer Utilization: FC

Buffer Utilization: FC + RL AQM (drop)

Buffer Utilization: FC + RL AQM (mark)

[Figures: one buffer utilization plot per slide above]

Summary & Next Steps

• A number of improvements have been made to BCN
  • Derivative to improve stability
  • Solicit Bit to speed up recovery
  • AQM in RL queues to reduce blocking
• Future Steps
  • Build a Prototype???
  • …
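
The derivative rule (Queue Stability) and the Solicit bit could fit together roughly as in the Python sketch below. It is one possible reading of the slides, not the presented implementation. Assumptions: the names `UpDownCounter`, `solicit_bit` and `bcn_sign` are hypothetical; only the sign of the feedback is modelled (the BCN-4 to BCN+4 levels and BCN 0 are left out); positive feedback goes only to RL-tagged frames; the counter is cleared each time it is read; and the Solicit bit bypasses sampling but not the derivative test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UpDownCounter:
    """Net queue change since the last reset; its sign stands in for dQ/dt."""
    value: int = 0

    def enqueue(self) -> None:
        self.value += 1      # increment at every enqueue

    def dequeue(self) -> None:
        self.value -= 1      # decrement at every dequeue

    def read_and_reset(self) -> int:
        # Assumption: the counter is cleared each time it is read.
        v, self.value = self.value, 0
        return v


def solicit_bit(rate: float, r_solicit: float) -> bool:
    """Rate limiter side: the Solicit bit is set while R < Rsolicit."""
    return rate < r_solicit


def bcn_sign(q: int, q_eq: int, dq: int, sampled: bool,
             rl_tagged: bool = False, solicit: bool = False) -> Optional[str]:
    """Congestion point side: return '-', '+', or None (no message)."""
    if q > q_eq and dq > 0:
        # Above equilibrium and still growing: negative feedback,
        # but only for frames picked by the sampler.
        return "-" if sampled else None
    if q < q_eq and dq < 0:
        # Below equilibrium and still draining: positive feedback.
        # Assumption: only RL-tagged frames can receive it, and the
        # Solicit bit lets a frame skip the sampling step.
        eligible = rl_tagged and (sampled or solicit)
        return "+" if eligible else None
    # Queue already moving back toward Qeq (or at Qeq): stay silent.
    return None
```

With this gating, a sampled frame that arrives while Q > Qeq but the queue is already draining produces no message, which is where the reduction in signaling would come from.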
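
The simple RL queue AQM (threshold QAQM, fixed probability P, counters CTCP and CUDP) can be sketched in Python as follows. This is an illustration under stated assumptions, not the simulated code: the class `RateLimiterQueue` and its method names are hypothetical, the threshold test is applied on arrival before the arriving packet is counted, and "mark" is modelled as a boolean flag carried with the packet.

```python
import random
from collections import deque
from typing import Optional, Tuple


class RateLimiterQueue:
    """Rate limiter queue with per-protocol counters and a fixed threshold."""

    def __init__(self, q_aqm: int = 10, p: float = 0.01,
                 mark: bool = False, rng: Optional[random.Random] = None):
        self.q_aqm = q_aqm          # QAQM threshold, in packets
        self.p = p                  # fixed drop/mark probability P
        self.mark = mark            # True: mark TCP packets, False: drop them
        self.rng = rng or random.Random()
        self.queue = deque()        # entries are (protocol, marked)
        self.c_tcp = 0              # CTCP: TCP packets currently queued
        self.c_udp = 0              # CUDP: UDP packets currently queued

    def enqueue(self, proto: str) -> str:
        """Return 'queued', 'marked', or 'dropped' for the arriving packet."""
        if proto == "udp":
            if self.c_udp > self.q_aqm:
                return "dropped"    # UDP is always dropped above the threshold
            self.c_udp += 1
            self.queue.append((proto, False))
            return "queued"
        if proto != "tcp":
            raise ValueError(f"unsupported protocol: {proto}")
        marked = False
        if self.c_tcp > self.q_aqm and self.rng.random() < self.p:
            if not self.mark:
                return "dropped"    # drop variant
            marked = True           # mark variant: packet is still queued
        self.c_tcp += 1
        self.queue.append((proto, marked))
        return "marked" if marked else "queued"

    def dequeue(self) -> Optional[Tuple[str, bool]]:
        """Remove the head packet and update its protocol counter."""
        if not self.queue:
            return None
        proto, marked = self.queue.popleft()
        if proto == "udp":
            self.c_udp -= 1
        else:
            self.c_tcp -= 1
        return proto, marked
```

Keeping separate counters means a UDP burst that fills its share of the RL queue does not by itself trigger drops or marks for TCP packets, and vice versa.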