An Efficient Topology-Adaptive Membership Protocol for Large- Scale Cluster-Based Services Jingyu Zhou *

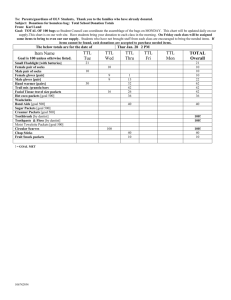

advertisement

An Efficient Topology-Adaptive Membership Protocol for LargeScale Cluster-Based Services § Jingyu Zhou * , Lingkun Chu*, Tao Yang* * Ask Jeeves §University of California at Santa Barbara § Outline Background & motivation Membership protocol design Implementation Evaluation Related work Conclusion Background Large-scale 24x7 Internet services Thousands of machines connected by many level-2 and level-3 switches (e.g. 10,000 at Ask Jeeves) Multi-tiered architecture with data partitioning and replication Some of machines are unavailable frequently due to failures, operational errors, and scheduled service update. Network Topology in Service Clusters Multiple hosting centers across Internet In a hosting center Thousands of nodes Many level-2 and level-3 switches Complex switch topology Data Center Asia Asian user NY user CA user VP N N 3DNS -WAN Load Balancer P V Data Center California VPN Internet Level-3 Switch Level-2 Switch Data Center New York Level-2 Switch Level-3 Switch Level-2 Switch ... Level-2 Switch Motivation Membership protocol Yellow page directory – discovery of services and their attributes Server aliveness – quick fault detection Challenges Efficiency Scalability Fast detection Fast Failure Detection is crucial Online auction service even with Replica 1 replication Failure of one replica 7s - 12s Service unavailable 10s - 13s Auction Service Replica 2 Replica 3 Communication Cost for Fast Detection Communication requirement Propagate to all nodes Fast detection needs higher packet rate High bandwidth Higher hardware cost More chances of failures. Design Requirements of Membership Protocol for Large-scale Clusters Efficient: bandwidth, # of packets Topology-adaptive: localize traffic within switches Scalable: scale to tens of thousands of nodes Fast failure detection and information propagation. Approaches Centralized Easy to implement Single point of failure, not scalable, extra delay Distributed All-to-all broadcast [Shen’01]: doesn’t scale well Gossip [Renesse’98]: probabilistic guarantee Ring: slow to handle multi-failures Don’t consider network topology TAMP: Topology-Adaptive Membership Protocol Topology-awareness Form a hierarchical tree according to network topology Topology-adaptiveness Network changes: add/remove/move switches Service changes: add/remove/move nodes Exploit TTL field in IP packet Hierarchical Tree Formation Algorithm 1. Form small multicast groups with low TTL values; 2. Each multicast group performs elections; 3. Group leaders form higher level groups with larger TTL values; 4. Stop when max. TTL value is reached; otherwise, goto Step 2. An Example 3 Level-3 switches with 9 nodes Group 3a 239.255.0.23 TTL=4 B Group 2a Group 2b 239.255.0.22239.255.0.22 TTL=3 TTL=3 B A A B C C Group 1a 239.255.0.21 TTL=2 Group 1b 239.255.0.21 TTL=2 Group 1c 239.255.0.21 TTL=2 A B C Group 0a 239.255.0.20 TTL=1 Group 0b 239.255.0.20 TTL=1 Group 0c 239.255.0.20 TTL=1 A B C Node Joining Procedure Purpose Find/elect a leader Exchange membership information Process 1. Join a channel and listen; 2. If a leader exists, stop and bootstrap with the leader; 3. Otherwise, elects a leader (bully algorithm); 4. If is leader, increase channel ID & TTL, goto 1. Properties of TAMP Upward propagation guarantee A node is always aware of its leader Messages can always be propagated to nodes in the higher levels Downward propagation guarantee A node at level i must leaders of level i-1, i-2, …, 0 Messages can always be propagated to lower level nodes Eventual convergence View of every node converges Update protocol when cluster structure changes Heartbeat for failure detection Leader receive an update - multicast up & down 3 Level 2 E Level 1 2 2 B E 2 3 Level 0 A ABC DEF GHI H B 1 C AB DEF GHI D DEF ABC GHI 3 E F 4 DEF AB GHI G GHI ABC DEF H I 4 GHI AB DEF Fault Tolerance Techniques Leader failure: backup leader or election Network partition failure Timeout all nodes managed by a failed leader Hierarchical timeout: longer timeout for higher levels Packet loss Leaders exchanges deltas since last update Piggyback last three changes Scalability Analysis Protocols: all-to-all, gossip, and TAMP Basic performance factors Failure detection time (Tfail_detect) View convergence time (Tconverge) Communication cost in terms of bandwidth (B) Scalability Analysis (Cont.) Two metrics BDP = B * Tfail_detect , lower failure detection time with low bandwidth is desired BCP = B * Tconverge , lower convergence time with low bandwidth is desired BDP All-to-all Gossip TAMP BCP O(n2) O(n2) O(n2logn) O(n2logn) O(n) O(n)+O(B*logkn) n: total # of nodes k: each group size, a constant Implementation Inside Neptune middleware [Shen’01] – programming and runtime support for building cluster-based Internet services Can be easily coupled into others clustering frameworks Hierarchical Membership Service Client Code SHM External Receiv er SHM Local Status Tracker Service Status Data Structure Inform er Conten der Annou cer Multicast Channels Service Code /proc File System Evaluation: Objectives & Settings Metrics Bandwidth failure detection time View convergence time Hardware settings 100 dual PIII 1.4GHz nodes 2 switches connected by a Gigabit switch Protocol related settings Frequency: 1 packet/s A node is deemed dead after 5 consecutive loss Gossip mistake probability 0.1% # of nodes: 20 – 100 in step of 20 Bandwidth Consumption All-to-All & Gossip: quadratic increase TAMP: close to linear Failure Detection Time Gossip: log(N) increase All-to-All & TAMP: constant View Convergence Time Gossip: log(N) increase All-to-All & TAMP: constant Related Work Membership & failure detection [Chandra’96], [Fetzer’99], [Fetzer’01], [Neiger’96], and [Stok’94] Gossip-style protocols SCAMP, [Kempe’01], and [Renesse’98] High-availability system (e.g., HA-Linux, Linux Heartbeat) Cluster-based network services TACC, Porcupine, Neptune, Ninja Resource monitoring: Ganglia, NWS, MDS2 Contributions & Conclusions TAMP is a highly efficient and scalable protocol for giant clusters Exploiting TTL count in IP packet for topology-adaptive design. Verified through property analysis and experimentation. Deployed at Ask Jeeves clusters with thousands of machines. Questions?