21st Century Computer Architecture
Mark D. Hill, Univ. of Wisconsin-Madison


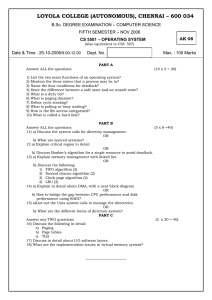

2/2014 @ HPCA, PPoPP, & CGO

1. Whitepaper Briefing
2. Impact & Computing Community Consortium (CCC)
3. E.g., Efficient Virtual Memory for Big Memory Servers

21st Century Computer Architecture
A CCC community white paper
http://cra.org/ccc/docs/init/21stcenturyarchitecturewhitepaper.pdf
• Participants & Process
• Information & Commun. Tech’s Impact
• Semiconductor Technology’s Challenges
• Computer Architecture’s Future
• Pre-Competitive Research Justified

White Paper Participants
Sarita Adve, U Illinois *
David H. Albonesi, Cornell U
David Brooks, Harvard U
Luis Ceze, U Washington *
Sandhya Dwarkadas, U Rochester
Joel Emer, Intel/MIT
Babak Falsafi, EPFL
Antonio Gonzalez, Intel/UPC
Mark D. Hill, U Wisconsin *,**
Mary Jane Irwin, Penn State U *
David Kaeli, Northeastern U *
Stephen W. Keckler, NVIDIA/U Texas
Christos Kozyrakis, Stanford U
Alvin Lebeck, Duke U
Milo Martin, U Pennsylvania
José F. Martínez, Cornell U
Margaret Martonosi, Princeton U *
Kunle Olukotun, Stanford U
Mark Oskin, U Washington
Li-Shiuan Peh, M.I.T.
Milos Prvulovic, Georgia Tech
Steven K. Reinhardt, AMD
Michael Schulte, AMD/U Wisconsin
Simha Sethumadhavan, Columbia U
Guri Sohi, U Wisconsin
Daniel Sorin, Duke U
Josep Torrellas, U Illinois *
Thomas F. Wenisch, U Michigan *
David Wood, U Wisconsin *
Katherine Yelick, UC Berkeley/LBNL *
“*” contributed prose; “**” effort coordinator
Thanks to CCC, Erwin Gianchandani & Ed Lazowska for guidance and Jim Larus & Jeannette Wing for feedback

White Paper Process
• Late March 2012
  o CCC contacts coordinator & forms group
• April 2012
  o Brainstorm (meetings/online doc)
  o Read related docs (PCAST, NRC Game Over, ACAR1/2, …)
  o Use online doc for intro & outline then parallel sections
  o Rotated authors to revise sections
• May 2012
  o Brainstorm list of researchers in/out of comp. architecture
  o Solicit researcher feedback/endorsement
  o Do distributed revision & redo of intro
  o Release May 25 to CCC & via email
Kudos to participants on executing on a tight timetable

20th Century ICT Set Up
• Information & Communication Technology (ICT) Has Changed Our World
  o (long list omitted)
• Required innovations in algorithms, applications, programming languages, … , & system software
• Key (invisible) enablers of (cost-)performance gains
  o Semiconductor technology (“Moore’s Law”)
  o Computer architecture (~80x per Danowitz et al.)

Enablers: Technology + Architecture
[Figure: Architecture and Technology contributions. Danowitz et al., CACM 04/2012, Figure 1]

21st Century ICT Promises More
• Data-centric personalized health care
• Human network analysis
• Computation-driven scientific discovery
• Much more: known & unknown

21st Century App Characteristics
• BIG DATA
• SECURE/PRIVATE
• ALWAYS ONLINE
Whither enablers of future (cost-)performance gains?

Technology’s Challenges 1/2
| Late 20th Century | The New Reality |
|---|---|
| Moore’s Law: 2× transistors/chip | Transistor count still 2× BUT… |
| Dennard Scaling: ~constant power/chip | Gone. Can’t repeatedly double power/chip |

Classic CMOS Dennard Scaling: the Science behind Moore’s Law
| | Classic CMOS |
|---|---|
| Scaling: Voltage | $V/a$ |
| Scaling: Oxide | $t_{OX}/a$ |
| Results: Power/ckt | $1/a^2$ |
| Results: Power Density | ~Constant |
Source: Future of Computing Performance: Game Over or Next Level?, National Academy Press, 2011 (Finding 2)
National Research Council (NRC) – Computer Science and Telecommunications Board (CSTB.org)

Post-classic CMOS Dennard Scaling
Post Dennard CMOS Scaling Rule
| | Classic CMOS | Post Dennard |
|---|---|---|
| Scaling: Voltage | $V/a$ | $V$ |
| Scaling: Oxide | $t_{OX}/a$ | $t_{OX}/a$ |
| Results: Power/ckt | $1/a^2$ | $1$ |
| Results: Power Density | ~Constant | $a^2$ |
TODO: Chips w/ higher power (no), smaller (), dark silicon (), or other (?)
National Research Council (NRC) – Computer Science and Telecommunications Board (CSTB.org)

Technology’s Challenges 2/2
| Late 20th Century | The New Reality |
|---|---|
| Moore’s Law: 2× transistors/chip | Transistor count still 2× BUT… |
| Dennard Scaling: ~constant power/chip | Gone. Can’t repeatedly double power/chip |
| Modest (hidden) transistor unreliability | Increasing transistor unreliability can’t be hidden |
| Focus on computation over communication | Communication (energy) more expensive than computation |
| 1-time costs amortized via mass market | One-time cost much worse & want specialized platforms |
How should architects step up as technology falters?

“Timeline” from DARPA ISAT
[Figure: System Capability (log) by decade, 80s through 50s, with a “Fallow Period” marked]
Source: Advancing Computer Systems without Technology Progress, ISAT Outbrief (http://www.cs.wisc.edu/~markhill/papers/isat2012_ACSWTP.pdf), Mark D. Hill and Christos Kozyrakis, DARPA/ISAT Workshop, March 26-27, 2012.
Approved for Public Release, Distribution Unlimited. The views expressed are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. Government.

21st Century Comp Architecture
| 20th Century | 21st Century |
|---|---|
| Single-chip in generic computer | Architecture as Infrastructure: Spanning sensors to clouds. Performance + security, privacy, availability, programmability, … |
| Performance via invisible instr.-level parallelism | Energy First: ● Parallelism ● Specialization ● Cross-layer design |
| Predictable technologies: CMOS, DRAM, & disks | New technologies (non-volatile memory, near-threshold, 3D, photonics, …). Rethink: memory & storage, reliability, communication |
| | Cross-Cutting: Break current layers with new interfaces |

Available for Free: Search on “Synthesis Lectures on Computer Architecture”

What Research Exactly?
• Research areas in white paper (& backup slides)
  1. Architecture as Infrastructure: Spanning Sensors to Clouds
  2. Energy First
  3. Technology Impacts on Architecture
  4. Cross-Cutting Issues & Interfaces
• Much more research developed by future PIs!
• Example from our work in last part of talk [ISCA 2013]
  o Cloud workloads (memcached) use vast memory (100 GB to TB), wasting up to 50% of execution time
  o A cross-cutting OS-HW change eliminates this waste

Pre-Competitive Research Justified
• Retain (cost-)performance enabler to ICT revolution
• Successful companies cannot do this by themselves
  o Lack needed long-term focus
  o Don’t want to pay for what benefits all
  o Resist transcending interfaces that define their products
• Corroborates
  o Future of Computing Performance: Game Over or Next Level?, National Academy Press, 2011
  o DARPA/ISAT Workshop Advancing Computer Systems without Technology Progress with outbrief http://www.cs.wisc.edu/~markhill/papers/isat2012_ACSWTP.pdf

2. Impact & Computing Community Consortium (CCC)

$15M NSF XPS 2/2013 + $15M for 2/2014

Computing Community Consortium
[Diagram: CS Research Community, CCC, Research Funders, Research Beneficiaries]
http://cra.org/ccc

The CCC Council
Leadership
  Susan Graham, UC Berkeley (Chair)
  Greg Hager, Johns Hopkins (Vice Chair)
  Ed Lazowska, U. Washington (Past Chair)
  Ann Drobnis, Director
  Kenneth Hines, Program Associate
  Andy Bernat, CRA Executive Director
Terms ending 6/2016
  Randy Bryant, CMU; Limor Fix, Intel; Mark Hill, U. Wisconsin, Madison; Tal Rabin, IBM Research; Daniela Rus, MIT; Ross Whitaker, Univ. Utah; Stephanie Forrest, Univ. New Mexico; Robin Murphy, Texas A&M; John King, Univ. Michigan; Dave Waltz, Columbia; Karen Sutherland, Augsburg College
Terms ending 6/2015
  Liz Bradley, Univ. Colorado; Sue Davidson, Univ. Pennsylvania; Joe Evans, Univ. Kansas; Ran Libeskind-Hadas, Harvey Mudd; Elizabeth Mynatt, Georgia Tech; Shashi Shekhar, Univ. Minnesota
Terms ending 6/2014
  Deborah Crawford, Drexel; Anita Jones, Univ. Virginia; Fred Schneider, Cornell; Bob Sproull, Sun Labs Oracle (ret.); Josep Torrellas, Univ. Illinois; Chris Johnson, Univ. Utah; Bill Feiereisen, LANL; Dick Karp, UC Berkeley; Greg Andrews, Univ. Arizona; Frans Kaashoek, MIT; Dave Kaeli, Northeastern; Andrew McCallum, UMass; Peter Lee, Carnegie Mellon

A Multitude of Activities
• Community-initiated visioning:
  o Workshops to discuss “out-of-the-box” ideas
  o Challenges & Visions tracks at conferences
• Outreach to White House, funding agencies:
  o Outputs of visioning activities
  o Short reports to inform policy makers
  o Task Forces – Health IT, Sustainability IT, Data Analytics
• Public relations efforts:
  o Library of Congress symposia
  o Research “Highlight of the Week”
  o CCC Blog [http://cccblog.org/]
• Nurturing the next generation of leaders:
  o Computing Innovation Fellows Project
  o “Landmark Contributions by Students”
  o Leadership in Science Policy Institute

Catalyzing: Visioning Activities
• Over 20 Workshops to date
• More than 1,500 participants
• Free & Open Source Software

Catalyzing and Enabling: Architecture
• 2010: Josep Torrellas, UIUC
• 2010: Mark Oskin, Washington
• 2012: Mark Hill, Wisconsin
• 2013

CCC & You
CCC
• Chair Susan Graham
• ~20 Council Members
• Standing committee of Computing Research Association (CRA)
• Established via NSF cooperative agreement
You
• Be a rain-maker & give forward
• White papers
• Visioning
• And more

3. E.g., Efficient Virtual Memory for Big Memory Servers

A View of Computer Layers
Problem
Algorithm
Application
Middleware / Compiler
Operating System
Instrn Set Architecture
Microarchitecture
Logic Design
Transistors, etc.
Punch Thru (small)
7/26/2016

Efficient Virtual Memory for Big Memory Servers
Arkaprava Basu, Jayneel Gandhi, Jichuan Chang*, Mark D. Hill, Michael M. Swift
* HP Labs
Q: “Virtual Memory was invented in a time of scarcity. Is it still good idea?” --- Charles Thacker, 2010 Turing Award Lecture
A: As we see it, OFTEN but not ALWAYS.

Executive Summary
• Big memory workloads important
  – graph analysis, memcached, databases
• Our analysis:
  – TLB misses burn up to 51% of execution cycles
  – Paging not needed for almost all of their memory
• Our proposal: Direct Segments
  – Paged virtual memory where needed
  – Segmentation (No TLB miss) where possible
• Direct Segment often eliminates 99% of DTLB misses
ISCA 2013

Virtual Memory Refresher
[Figure: Virtual address spaces of Process 1 and Process 2, Page Table, Physical Memory; Core with Cache and TLB (Translation Lookaside Buffer)]
Challenge: TLB misses waste execution time

[Figure: Memory capacity for $10,000*. Memory size (log scale, MB to TB) vs. year, 1980 to 2010. Annotations: “Commercial servers with 4TB memory”; “Big data needs to access terabytes of data at low latency”. *Inflation-adjusted 2011 USD, from: jcmit.com]

TLB is Less Effective
• TLB sizes hardly scaled

| Year | L1-DTLB entries |
|---|---|
| 1999 | 72 (Pent. III) |
| 2001 | 64 (Pent. 4) |
| 2008 | 96 (Nehalem) |
| 2012 | 100 (Ivy Bridge) |

• Low access locality of server workloads [Ramcloud’10, Nanostore’11]
Memory size + TLB size + low locality => TLB miss latency overhead

Experimental Setup
• Experiments on Intel Xeon (Sandy Bridge) x86-64
  – Page sizes: 4KB (Default), 2MB, 1GB

| | 4 KB | 2 MB | 1 GB |
|---|---|---|---|
| L1 DTLB | 64 entry, 4-way | 32 entry, 4-way | 4 entry, fully assoc. |
| L2 DTLB | 512 entry, 4-way | | |

• 96GB installed physical memory
• Methodology: Use hardware performance counters

[Figure: Big Memory Workloads. Percentage of execution cycles spent on servicing DTLB misses (y-axis 0 to 35) for graph500, memcached, MySQL, NPB:BT, NPB:CG, and GUPS, with 4KB, 2MB, and 1GB pages and Direct Segment. Off-scale bars labeled 51.1 and 83.1.]

[Figure: Execution Time Overhead: TLB Misses. Percentage of execution cycles wasted, same workloads and configurations. Off-scale bars labeled 51.1, 83.1, and 51.3; Direct Segment bars labeled between ~0 and 0.49.]
Significant overhead of paged virtual memory
Worse with TBs of memory now or in future?

Roadmap
• Introduction and Motivation
• Analysis: Big memory workloads
• Design: Direct Segment
• Evaluation
• Summary

How is Paged Virtual Memory used?
An example: memcached servers
[Figure: Client sends Key X to memcached server # n and receives Value Y; the server holds an in-memory hash table and network state]

Big Memory Workloads’ Use of Paging

| Paged VM Feature | Our Analysis | Implication |
|---|---|---|
| Swapping | ~0 swapping | Not essential |
| Per-page protection | ~99% pages read-write | Overkill |
| Fragmentation reduction | Little OS-visible fragmentation (next slide) | Per-page (re)allocation less important |

[Figure: Memory Allocation Over Time. Allocated memory (in GB, 0 to 90) vs. time (in seconds, 0 to 1500, i.e. 25 minutes) for graph500, memcached, MySQL, NPB:BT, NPB:CG, and GUPS, with the warm-up phase marked]
Most of the memory allocated early

Where Paged Virtual Memory Needed?
• Paging Valuable: Code, Constants, Shared Memory, Mapped Files, Stack, Guard Pages
• Paging Not Needed *: Dynamically allocated Heap region
Paged VM not needed for MOST memory
* Not to scale

Roadmap
• Introduction and Motivation
• Analysis: Big Memory Workloads
• Design: Direct Segment
  – Idea
  – Hardware
  – Software
• Evaluation
• Summary

Idea: Two Types of Address Translation
A. Conventional paging
  • All features of paging
  • All cost of address translation
B. Simple address translation
  • Protection but NO (easy) swapping
  • NO TLB miss
• OS/Application decides where to use which [=> Paging features where needed]

Hardware: Direct Segment
[Figure: Virtual address space (VA) with (1) Conventional Paging and (2) Direct Segment; BASE and LIMIT delimit the Direct Segment, and OFFSET maps it to physical memory (PA)]
Why Direct Segment?
• Matches big memory workload needs
• NO TLB lookups => NO TLB Misses

H/W: Translation with Direct Segment
Virtual address: [V47 V46 … V13 V12] [V11 … V0]; physical address: [P40 P39 … P13 P12] [P11 … P0]
• Check: VA ≥ BASE and VA < LIMIT?
  – Y: translate with OFFSET; paging ignored
  – N: Direct Segment ignored; DTLB Lookup (HIT, or MISS => Page-Table Walker)

S/W: 1 Setup Direct Segment Registers
• Calculate register values for processes
  – BASE = Start VA of Direct Segment
  – LIMIT = End VA of Direct Segment
  – OFFSET = BASE – Start PA of Direct Segment
• Save and restore register values
[Figure: BASE and LIMIT bound the Direct Segment in the virtual address spaces (VA1, VA2); OFFSET maps it to PA]

S/W: 2 Provision Physical Memory
• Create contiguous physical memory
  – Reserve at startup
    • Big memory workloads cognizant of memory needs
    • e.g., memcached’s object cache size
  – Memory compaction
    • Latency insignificant for long running jobs
      – 10GB of contiguous memory in < 3 sec
      – 1% speedup => 25 mins break even for 50GB compaction (at the rate above, 50GB takes about 15 sec; 15 sec / 1% = 1500 sec = 25 mins)

S/W: 3 Abstraction for Direct Segment
• Primary Region
  – Contiguous VIRTUAL address not needing paging
  – Hopefully backed by Direct Segment
  – But all/part can use base/large/huge pages
• What allocated in primary region?
  – All anonymous read-write memory allocations
  – Or only on explicit request (e.g., mmap flag)

Segmentation Not New

| ISA/Machine | Address Translation |
|---|---|
| Multics | Segmentation on top of Paging |
| Burroughs B5000 | Segmentation without Paging |
| UltraSPARC | Paging |
| X86 (32 bit) | Segmentation on top of Paging |
| ARM | Paging |
| PowerPC | Segmentation on top of Paging |
| Alpha | Paging |
| X86-64 | Paging only (mostly) |

Direct Segment: NOT on top of paging. NOT to replace paging. NO two-dimensional address space: keeps linear address space.
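The BASE/LIMIT/OFFSET check from the hardware and software slides above can be sketched in a few lines. This is a minimal illustrative model, not the authors' hardware or kernel code: the names `DirectSegment`, `make_segment`, and `translate` are hypothetical, a dictionary stands in for the DTLB lookup and page-table walker, LIMIT is assumed to be exclusive, and the sign convention follows the slide's definition OFFSET = BASE – Start PA, so the physical address is VA minus OFFSET.

```python
from dataclasses import dataclass

PAGE_SHIFT = 12  # 4 KB base pages: bits V11..V0 are the page offset


@dataclass(frozen=True)
class DirectSegment:
    """Hypothetical model of the three per-process registers."""
    base: int    # start VA of the direct segment
    limit: int   # end VA of the direct segment (assumed exclusive)
    offset: int  # BASE - start PA, following the slide's definition


def make_segment(start_va: int, end_va: int, start_pa: int) -> DirectSegment:
    """Compute register values the way the S/W setup slide lists them."""
    return DirectSegment(base=start_va, limit=end_va, offset=start_va - start_pa)


def translate(va: int, seg: DirectSegment, page_table: dict) -> tuple:
    """Return (physical address, path taken) for one virtual address."""
    if seg.base <= va < seg.limit:
        # Inside the direct segment: no TLB lookup, so no TLB miss.
        return va - seg.offset, "direct segment"
    # Outside: conventional paging. The dict stands in for the DTLB lookup
    # plus page-table walk; a missing entry raises KeyError (a page fault).
    vpn = va >> PAGE_SHIFT
    page_offset = va & ((1 << PAGE_SHIFT) - 1)
    return (page_table[vpn] << PAGE_SHIFT) | page_offset, "paging"


if __name__ == "__main__":
    # Illustrative addresses only; they are not taken from the talk.
    seg = make_segment(start_va=0x1000_0000, end_va=0x2000_0000,
                       start_pa=0x4000_0000)
    print(translate(0x1000_0010, seg, {}))          # inside the segment
    print(translate(0x0000_3004, seg, {0x3: 0x7}))  # falls back to paging
```

Keeping the segment check separate from the paging path mirrors the slides' point that the Direct Segment sits beside paging rather than on top of it: addresses outside BASE..LIMIT are translated exactly as before.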
Roadmap
• Introduction and Motivation
• Analysis: Big Memory Workloads
• Design: Direct Segment
• Evaluation
  – Methodology
  – Results
• Summary

Methodology
• Primary region implemented in Linux 2.6.32
• Estimate performance of non-existent direct-segment hardware
  – Get fraction of TLB misses to direct-segment memory
  – Estimate performance gain with linear model
• Prototype simplifications (design more general)
  – One process uses direct segment
  – Reserve physical memory at start up
  – Allocate r/w anonymous memory to primary region

[Figure: Execution Time Overhead: TLB Misses (lower is better). Percentage of execution cycles wasted (y-axis 0 to 35) for graph500, memcached, MySQL, NPB:BT, NPB:CG, and GUPS, with 4KB, 2MB, and 1GB pages and Direct Segment. Off-scale bars labeled 51.1, 83.1, and 51.3; Direct Segment bars labeled between ~0 and 0.49.]
“Misses” in Direct Segment: 99.9% for five of the workloads, 92.4% for one

(Some) Limitations
• Does not (yet) work with Virtual Machines
  – Can be extended but memory overcommit challenging
• Less suitable for sparse virtual address space
• One direct segment
  – Our workloads did not justify more

Summary
• Big memory workloads
  – Incur high TLB miss cost
  – Paging not needed for almost all memory
• Our proposal: Direct Segment
  – Paged virtual memory where needed
  – Segmentation (NO TLB miss) where possible
• Bonus: Whither Memory Referencing Energy?
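The Methodology slide says Direct Segment performance was estimated with a linear model from the fraction of TLB misses that fall in direct-segment memory. The slides do not give the model itself, so the following is only a sketch under one assumption: cycles lost to TLB misses scale linearly with the number of misses that remain. The function name and the example inputs are hypothetical, not measurements from the talk.

```python
def estimated_tlb_overhead(baseline_overhead_pct: float,
                           fraction_in_direct_segment: float) -> float:
    """Scale a measured TLB-miss overhead by the share of misses that remain.

    Assumes (our assumption, not stated on the slides) that every TLB miss
    costs the same, so removing a fraction f of the misses removes a
    fraction f of the overhead.
    """
    if not 0.0 <= fraction_in_direct_segment <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return baseline_overhead_pct * (1.0 - fraction_in_direct_segment)


if __name__ == "__main__":
    # Hypothetical inputs: 20% of cycles lost to TLB misses with paging,
    # 99% of those misses landing in direct-segment memory.
    print(round(estimated_tlb_overhead(20.0, 0.99), 3))
```

A model this simple ignores differences in miss latency between pages, which is one reason to read its output as an estimate rather than a measurement.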
A 2nd Virtual Memory Problem: Energy
• 13%: TLB is energy hungry *
* From Sodani’s/Intel’s MICRO 2011 Keynote
To save it we ask: Where is the energy spent?

Standard Physical Cache
[Figure: Core, Cache, TLB (Translation Lookaside Buffer)]
TLB access on every memory reference in parallel w/ L1 cache
• Enables physical address hit/miss check
• Hides TLB latency
BUT DOES NOT HIDE TLB ENERGY!

Old Idea: Virtual Cache
[Figure: Core, Cache, TLB (Translation Lookaside Buffer)]
• TLB access after L1 cache miss (1-5%)
• Greatly reduces TLB access energy
• But breaks compatibility
  • e.g., read-write synonyms
• OUR ANALYSIS: Read-write synonyms rare

Opportunistic Virtual Cache
• A New Hardware Cache [ISCA 2012]
  – Caches w/ virtual addresses for almost-all blocks
  – Caches w/ physical addresses only for compatibility
• OS decides (cross-layer design)
• Saves energy
  – Avoids most TLB lookups
  – L1 cache can use lower associativity (subtle)

Dynamic Energy Savings?
[Figure: % of on-chip memory subsystem's dynamic energy (0 to 100), split into TLB Energy, L1 Energy, and L2+L3 Energy, for Physical Caching (VIPT) and Opportunistic Virtual Caching]
On average ~20% of the on-chip memory subsystem’s dynamic energy is reduced
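To make the contrast between the Standard Physical Cache and Old Idea: Virtual Cache slides concrete, the sketch below counts TLB lookups under each design. It is a rough illustration with a hypothetical function name and a hypothetical reference count; it counts lookups only and does not model energy, and it treats the L1 as purely virtual rather than modeling the opportunistic mix of virtual and physical blocks.

```python
def tlb_lookups(memory_refs: int, l1_miss_rate: float, virtual_l1: bool) -> int:
    """Count TLB lookups for a stream of memory references.

    Physical L1: the TLB is accessed on every reference, in parallel
    with the cache. Virtual L1: the TLB is accessed only after an
    L1 miss.
    """
    if not 0.0 <= l1_miss_rate <= 1.0:
        raise ValueError("miss rate must be between 0 and 1")
    if virtual_l1:
        return round(memory_refs * l1_miss_rate)
    return memory_refs


if __name__ == "__main__":
    refs = 1_000_000  # hypothetical number of memory references
    for miss_rate in (0.01, 0.05):  # the 1-5% range quoted on the slide
        physical = tlb_lookups(refs, miss_rate, virtual_l1=False)
        virtual = tlb_lookups(refs, miss_rate, virtual_l1=True)
        print(miss_rate, physical, virtual)
```

Under these assumptions the lookup count drops in proportion to the L1 hit rate, which is the intuition behind "avoids most TLB lookups"; the ~20% figure on the final slide is for the whole on-chip memory subsystem and is not derived from this sketch.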