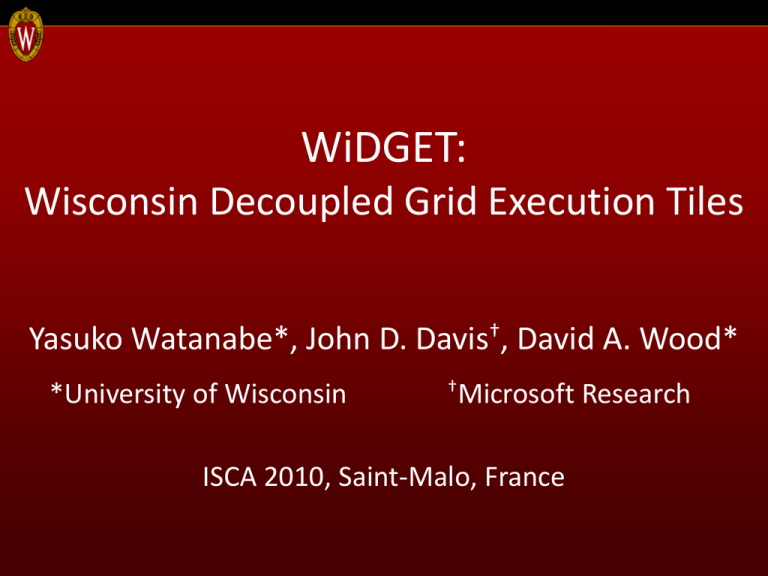



WiDGET: Wisconsin Decoupled Grid Execution Tiles
Yasuko Watanabe*, John D. Davis†, David A. Wood*
*University of Wisconsin  †Microsoft Research
ISCA 2010, Saint-Malo, France

Power vs. Performance
[Figure: normalized chip power vs. normalized performance, with Xeon-like and Atom-like reference points and the operating range of a single core]
A full range of operating points on a single chip

Executive Summary
• WiDGET framework
  – Sea of resources
  – In-order Execution Units (EUs)
    • ALU & FIFO instruction buffers
    • Distributed in-order buffers → OoO execution
  – Simple instruction steering
  – Core scaling through resource allocation
    • ↓ EUs → Slower with less power
    • ↑ EUs → Turbo speed with more power

Intel CPU Power Trend
[Figure: thermal design power (W, log scale from 0.1 to 100) of Intel CPUs, 1971 to 2006: 4004, 8008, 8080, 8085, 8086, 286, 386, 486, Pentium, Pentium MMX, Pentium II, Pentium III, Pentium 4, Pentium D, Core 2, Core i7]

Conflicting Goal: Low Power & High Performance
• Need for high single-thread performance
  – Amdahl’s Law [Hill08]
  – Service-level agreements [Reddi10]
• Challenge
  – Energy proportional computing [Barroso07]
• Prior approach
  – Dynamic voltage and frequency scaling (DVFS)

Diminishing Returns of DVFS
[Figure: operating voltage (V), 1997 to 2009, for IBM PowerPC 405LP, Intel XScale 80200, Transmeta Crusoe TM5800, Intel Itanium Montecito, and Atom Silverthorne]
• Near saturation in voltage scaling
• Innovations in microarchitecture needed

Outline
• High-level design overview
• Microarchitecture
• Single-thread evaluation
• Conclusions

High-Level Design
• Sea of resources
[Figure: chip floorplan with L1I and L1D caches around a shared L2. Legend: thread context management or Instruction Engine (front-end + back-end); in-order Execution Unit (EU)]

WiDGET Vision
[Figure: five example chip configurations trading off TLP, ILP, and power]
Just 5 examples. Much more can be done.
In-Order EU
[Figure: EU block diagram with routers, in-order instruction buffers, and an operand buffer]
• Executes 1 instruction/cycle
• EU aggregation for OoO-like performance
  – Increases both issue BW & buffering
  – Prevents stalled instructions from blocking ready instructions
  – Extracts MLP & ILP

EU Cluster
[Figure: two Instruction Engines (IE 0, IE 1) with L1I 0/1 and L1D 0/1, each attached to a cluster of in-order EUs. Labels: full bypass within a cluster; 1-cycle inter-cluster link]

Instruction Engine (IE)
[Figure: IE pipeline with BR Pred, Fetch, Decode, Rename, Steering, RF, ROB, and Commit, divided into a front-end and a back-end]
• Thread-specific structures
• Front-end + back-end
• Similar to a conventional OoO pipe
• Steering logic for distributed EUs
• Achieve OoO performance with in-order EUs
• Expose independent instr chains

Coarse-Grain OoO Execution
[Figure: an 8-instruction example comparing OoO issue with WiDGET’s in-order buffers]

Methodology
• Goal: Power proportionality
  – Wide performance & power ranges
• Full-system execution-driven simulator
  – Based on GEMS
  – Integrated Wattch and CACTI
• SPEC CPU2006 benchmark suite
• 2 comparison points
  – Neon: Aggressive proc for high ILP
  – Mite: Simple, low-power proc
• Config: 1 - 8 EUs to 1 IE
  – 1 - 4 instruction buffers / EU

Power Proportionality
[Figure: normalized chip power vs. normalized performance for Neon, Mite, and WiDGET with 1 to 8 EUs; WiDGET spans from 1EU+1IB (near Mite) to 8EUs+4IBs (beyond Neon)]
• 21% power savings to match Neon’s performance
• 8% power savings for 26% better performance than Neon
• Power scaling of 54% to approximate Mite
• Covers both Neon and Mite on a single chip

Power Breakdown
[Figure: normalized power broken down into L3, L2, L1D, L1I, Fetch/Decode/Rename, Backend, ALU, and Execution, for Neon, Mite, and WiDGET with 1 to 8 EUs and 1 to 4 instruction buffers each]
• Less than ⅓ of Neon’s execution power
  – Due to no OoO scheduler and limited bypass
• Increase in WiDGET’s power caused by:
  – Increased EUs and instruction buffers
  – Higher utilization of other resources

Conclusions
• WiDGET
  – EU provisioning for power-performance target
  – Trade-off complexity for power
  – OoO approximation using in-order EUs
  – Distributed buffering to extract MLP & ILP
• Single-thread performance
  – Scale from close to Mite to better than Neon
  – Power proportional computing

How is this different from the next talk?
WiDGET [watanabe10] vs. Forwardflow [Gibson10]
• Vision: Scalable Cores (both)
• Mechanism: In-order, Steering In-Order Approximation

Thank you! Questions?

Backup Slides
• DVFS
• Design choices of WiDGET
• Steering heuristic
• Memory disambiguation
• Comparison to related work
• Vs. Clustered architectures
• Vs. Complexity-effective superscalars
• Steering cost model
• Steering mechanism
• Machine configuration
• Area model
• Power efficiency
• Vs. Dynamic HW resizing
• Vs. Heterogeneous CMPs
• Vs. Dynamic multi-cores
• Vs. Thread-level speculation
• Vs. Braid Architecture
• Vs. ILDP

Dynamic Voltage/Freq Scaling (DVFS)
• Dynamically trade-off power for performance
  – Change voltage and freq at runtime
  – Often regulated by OS
    • Slow response time
• Linear reduction of V & F
  – Cubic in dynamic power
  – Linear in performance
  – Quadratic in dynamic energy
• Effective for thermal management
• Challenges
  – Controlling DVFS
  – Diminishing returns of DVFS

Service-Level Agreements (SLAs)
• Expectations b/w consumer and provider
  – QoS, boundaries, conditions, penalties
• EX: Web server SLA
  – Combination of latency, throughput, and QoS (min % performed successfully)
• Guaranteeing SLAs on WiDGET
  1. Set deadline & throughput goals
     • Adjust EU provisioning
  2. Set target machine specs
     • X processor-like with X GB memory

Design Choice of WiDGET (1/2)
[Figure: Nehalem-like CMP (cores around a shared L2) and one core’s pipeline: BR Pred, I$, Fetch, Decode, Rename, OoO IQ, RF, D$, ROB, Commit]
• OoO issue queue + full bypass = 35% of processor power

Design Choice of WiDGET (2/2)
[Figure: the same Nehalem-like CMP and pipeline, with the OoO IQ replaced by In-Order Exec]
• OoO issue queue + full bypass = 35% of processor power
• Replace with simple building blocks
• Decouple from the rest

Steering Heuristic
• Based on dependence-based steering [Palacharla97]
  – Expose independent instr chains
  – Consumer directly behind the producer
  – Stall steering when no empty buffer is found
• WiDGET: Power-performance goal
  – Emphasize locality & scalability
[Figure: steering decision flow over Cluster 0 and Cluster 1, keyed on the number of outstanding operands. 0: any empty buffer. 1: the producer’s buffer if the slot behind the producer is available, otherwise an empty buffer within the cluster. 2: a producer’s buffer if the slot behind either producer is available, otherwise an empty buffer in either of the clusters.]
• Consumer-push operand transfers
  – Send steered EU ID to the producer EU
  – Multi-cast result to all consumers

Opportunities / Challenges
• Decoupled design + modularity = Reconfig by turning on/off components
  – Core scaling by EU provisioning
    • Rather than fusing cores
  – Core customization for ILP, TLP, DLP, MLP & power
• Challenges
  – Parallelism-communication trade-off
  – Varying communication demands

Memory Disambiguation on WiDGET
• Challenges arising from modularity
  – Less communication between modules
  – Less centralized structures
• Benefits of NoSQ
  – Mem dependency -> register dependency
  – Reduced communication
  – No centralized structure
  – Only register dependency relation b/w EUs
    • Faster execution of loads

Memory Instructions?
• No LSQ thanks to NoSQ [Sha06]
• Instead,
  – Exploit in-window ST-LD forwarding
  – LDs: Predict if dependent ST is in-flight @ Rename
    • If so, read from ST’s source register, not from cache
    • Else, read from cache
    • @ Commit, re-execute if necessary
  – STs: Write @ Commit
  – Prediction
    • Dynamic distance in stores
    • Path-sensitive

Comparison to Related Work

| Design | Scale Up & Down? | Symmetric? | Decoupled Exec? | In-Order? | Wire Delays? | Data Driven? | ISA Compatibility? |
|---|---|---|---|---|---|---|---|
| WiDGET | √ | √ | √ | √ | √ | √ | √ |
| Adaptive Cores | X | - | √/X | √/X | - | - | √ |
| Heterogeneous CMPs | X | X | X | √/X | - | - | √ |
| Core Fusion | √ | √ | X | X | √ | - | √ |
| CLP | √ | √ | √ | √ | √ | √ | X |
| TLS | X | √ | X | √/X | - | X | √ |
| Multiscalar | X | √ | X | X | - | X | X |
| Complexity-Effective | X | √ | √ | √ | X | √ | √ |
| Salverda & Zilles | √ | √ | X | √ | X | √ | √ |
| ILDP & Braid | X | √ | √ | √ | - | √ | X |
| Quad-Cluster | X | √ | √ | X | √ | √/X | √ |
| Access/Execute | X | X | X | √ | - | √ | X |

Vs. OoO Clusters
• OoO Clusters
  – Goal: Superscalar ILP without impacting cycle time
  – Decentralize deep and wide structures in superscalars
    • Cluster: Smaller OoO design
  – Steering goal
    • High performance through communication hiding & load balance
• WiDGET
  – Goal: Power & Performance
  – Designed to scale cores up & down
    • Cluster: 4 in-order EUs
  – Steering goal
    • Localization to reduce communication latency & power

EX: Steering for Locality
[Figure: a 6-instruction example steered onto Cluster 0 and Cluster 1, with the inter-cluster delay marked. Clustered architectures (e.g., Advanced RMBS, Modulo) maintain load balance; WiDGET exploits locality]

Vs. Complexity-Effective Superscalars
• Palacharla et al.
  – Goal: Performance
  – Consider all buffers for steering and issuing
    • More buffers -> More options
• WiDGET
  – Goal: Power-performance
  – Requirements
    • Shorter wires, power gating, core scaling
  – Differences: Localization & scalability
    • Cluster-affined steering
    • Keep dependent chains nearby
    • Issuing selection only from a subset of buffers
  – New question: Which empty buffer to steer to?
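
One way to read "cluster-affined steering" together with the steering heuristic slide is the toy model below. It is a minimal sketch, not the hardware from the talk: the class name `Steerer`, the register names, the 8-buffer / 4-per-cluster sizing, and the choice to pick the lowest-numbered empty buffer are all assumptions made for illustration. Producers are never retired here, so every earlier writer is treated as still in flight.

```python
from typing import Dict, Iterable, List, Optional


class Steerer:
    """Toy cluster-affined, dependence-based steering (illustrative only)."""

    def __init__(self, num_bufs: int = 8, bufs_per_cluster: int = 4) -> None:
        self.num_bufs = num_bufs
        self.bufs_per_cluster = bufs_per_cluster
        self.empty: List[bool] = [True] * num_bufs  # empty bit vector
        # Last Producer Table: register -> [buffer id, has-a-consumer flag]
        self.lpt: Dict[str, list] = {}

    def _cluster_of(self, buf: int) -> range:
        """Buffer ids that share a cluster with `buf`."""
        start = (buf // self.bufs_per_cluster) * self.bufs_per_cluster
        return range(start, start + self.bufs_per_cluster)

    def _first_empty(self, candidates: Iterable[int]) -> Optional[int]:
        return next((b for b in candidates if self.empty[b]), None)

    def steer(self, dest: str, srcs: List[str]) -> Optional[int]:
        """Return the chosen buffer id, or None to signal a steering stall."""
        producers = [r for r in srcs if r in self.lpt]
        target = None
        # 1) Prefer the slot directly behind a producer with no consumer yet.
        for r in producers:
            buf, has_consumer = self.lpt[r]
            if not has_consumer:
                self.lpt[r][1] = True
                target = buf
                break
        # 2) Otherwise take an empty buffer: in the producers' clusters if
        #    there are outstanding operands, anywhere if there are none.
        if target is None:
            if producers:
                candidates = [b for r in producers
                              for b in self._cluster_of(self.lpt[r][0])]
            else:
                candidates = range(self.num_bufs)
            target = self._first_empty(candidates)
            if target is None:
                return None  # assumption: stall when no empty buffer is found
        self.empty[target] = False
        self.lpt[dest] = [target, False]
        return target


s = Steerer()
program = [("r1", []), ("r2", ["r1"]), ("r3", ["r1"]),
           ("r4", []), ("r5", ["r2", "r3"])]
placements = [s.steer(dest, srcs) for dest, srcs in program]
print(placements)  # [0, 0, 1, 2, 0]
```

In this run `r2` lands directly behind its producer `r1`, `r3` finds that slot taken and falls back to an empty buffer in the same cluster, and `r5` follows `r2`, so the dependent chain stays within cluster 0.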

Steering Cost Model [Salverda08]
• Steer to distributed IQs
• Steering policy determines issue time
  – Constrained by dependency, structural hazards, issue policy
• Ideal steering will issue an instr:
  – As soon as it becomes ready (horizon)
  – Without blocking others (frontier) (constraints of in-order)
• Steering Cost = horizon − frontier
  – Good steering: Min absolute (Steering Cost)

EX: Application of Cost Model
• Steer instr 3 to in-order IQs
[Figure: four in-order IQs (IQ 0 to IQ 3) over time, marking each queue’s frontier (F), the horizon of instr 3, and other instrs; the resulting costs are C = -1, C = -1, C = 0, and C = 1, where Cost = H − F]
• Challenges
  – Check all IQs to find an optimal steering
  – Actual exec time is unknown in advance
• Argument of Salverda & Zilles
  – Too complex to build or
  – Too many execution resources needed to match OoO

Impact of Comm Delays
[Figure: two schedules over IQ 0 to IQ 3. "If 1-cycle comm latency is added…": exec latency goes from 3 to 5 cycles. "What should happen instead": exec latency of 4 cycles]
• Trade off parallelism for comm

Observation Under Comm Delays
• Not beneficial to spread instrs
  – Reduced pressure for more execution resources
• Not much need to consider distant IQs
  – Reduced problem space
  – Simplified steering

Instruction Steering Mechanism
• Goals
  – Expose independent instruction chains
  – Achieve OoO performance with multiple in-order EUs
  – Keep dependent instrs nearby
• 3 things to keep track of
  – Producer’s location
  – Whether producer has another consumer
  – Empty buffers
• Structures: Last Producer Table & Full bit vector; Empty bit vector

Steering Example
[Figure: an 8-instruction example steered with the Last Producer Table (register, buffer ID, "has a consumer?" bit) and the empty / full bit vectors]

Instruction Buffers
• Small FIFO buffer
  – Config: 16 entries
• 1 straight instr chain per buffer
• Entry
  [Figure: entry fields: Instr, Op 1, Op 2, Consumer EU bit vector]
  – Consumer EU field
    • Set if a consumer is steered to different EU
    • Read after computation
      – Multi-cast the result to consumers

Machine Configurations
Atom [Gerosa08], Xeon [Tam06], and WiDGET
• L1 I / D* (all): 32 KB, 4-way, 1 cycle
• BR Predictor† (all): Tage predictor; 16-entry RAS; 64-entry, 4-way BTB
• Instr Engine
  – Atom: 2-way front-end & back-end
  – Xeon and WiDGET: 4-way front-end & back-end; 128-entry ROB
• Exec Core
  – Atom: 16-entry unified instr queue; 2 INT, 2 FP, 2 AG
  – Xeon: 32-entry unified instr queue; 3 INT, 3 FP, 2 AG; 0-cycle operand bypass to anywhere in core
  – WiDGET: 16-entry instr buffer per EU; 1 INT, 1 FP, 1 AG per EU; 0-cycle operand bypass within a cluster
• Disambiguation† (all): No Store Queue (NoSQ) [Sha06]
• L2 / L3 / DRAM* (all): 1 MB, 8-way, 12 cycles / 4 MB, 16-way, 24 cycles / ~300 cycles
• Process: 45 nm
* Based on Xeon
† Configuration choice

Area Model (45nm)
• Assumptions
  – Single-threaded uniprocessor
  – On-chip 1MB L2
  – Atom chip ≈ WiDGET (2 EUs, 1 buffer per EU)
• WiDGET: > Mite by 10%, < Neon by 19%
[Figure: area (mm²) of Mite, WiDGET, and Neon]

Harmonic Mean IPCs
[Figure: normalized IPC vs. number of instruction buffers (1 to 4) for Neon, Mite, and WiDGET with 1 to 8 EUs]
• Best-case: 26% better than Neon
• Dynamic performance range: 3.8

Harmonic Mean Power
[Figure: normalized power vs. number of instruction buffers (1 to 4) for Neon, Mite, and WiDGET with 1 to 8 EUs]
• 8 - 58% power savings compared to Neon
• 21% power savings to match Neon’s performance
• Dynamic power range: 2.2

Geometric Mean Power Efficiency (BIPS³/W)
[Figure: power efficiency of WiDGET configurations relative to Neon and Mite]
• Best-case: 2x of Neon, 21x of Mite
• 1.5x the efficiency of Xeon for the same performance

Energy-Proportional Computing for Servers [Barroso07]
• Servers
  – 10-50% utilization most of the time
    • Yet, availability is crucial
  – Common energy-saving techs inapplicable
  – 50% of full power even during low utilization
• Solution: Energy proportionality
  – Energy consumption in proportion to work done
• Key features
  – Wide dynamic power range
  – Active low-power modes
    • Better than sleep states with wake-up penalties

PowerNap [Meisner09]
• Goals
  – Reduction of server idle power
  – Exploitation of frequent idle periods
• Mechanisms
  – System level
  – Reduce transition time into & out of nap state
  – Ease power-performance trade-offs
  – Modify hardware subsystems with high idle power
    • e.g., DRAM (self-refresh), fans (variable speed)

Thread Motion [Rangan09]
• Goals
  – Fine-grained power management for CMPs
  – Alternative to per-core DVFS
  – High system throughput within power budget
• Mechanisms
  – Migrate threads rather than adjusting voltage
  – Homogeneous cores in multiple, static voltage/freq domains
  – 2 migration policies
    • Time-driven & miss-driven

Dynamic HW Resizing
• Resize if under-utilized for power savings
  – Elimination of transistor switching
  – e.g., IQs, LSQs, ROBs, caches
• Mechanisms
  – Physical: Enable/disable segments (or associativity)
  – Logical: Limit usable space
  – Wire partitioning with tri-state buffers
• Policies
  – Performance (e.g., IPC, ILP)
  – Occupancy
  – Usefulness

Vs. Heterogeneous CMPs
• Their way
  – Equip with small and powerful cores
  – Migrate thread to a powerful core for higher ILP
• Shortcomings
  – More design and verification time
  – Bound to static design choices
  – Poor performance for non-targeted apps
  – Difficult resource scheduling
• My way
  – Get high ILP by aggregating many in-order EUs

Vs. Dynamic Multi-Cores
• Their way
  – Deploy small cores for TLP
  – Dynamically fuse cores for higher ILP
• Shortcomings
  – Large centralized structures [Ipek07]
  – Non-traditional ISA [Kim07]
• My way
  – Only “fuse” EUs
  – No recompilation or binary translation

Vs. Thread-Level Speculation
• Their way
  – SW: Divides into contiguous segments
  – HW: Runs speculative threads in parallel
• Shortcomings
  – Only successful for regular program structures
  – Load imbalance
  – Squash propagation
• My way
  – No SW reliance
  – Support a wider range of programs

Vs. Braid Architecture [Tseng08]
• Their way
  – ISA extension
  – SW: Re-orders instrs based on dependency
  – HW: Sends a group of instrs to FIFO issue queues
• Shortcomings
  – Re-ordering limited to basic blocks
• My way
  – No SW reliance
  – Exploit dynamic dependency

Vs. Instruction Level Distributed Processing (ILDP) [Kim02]
• Their way
  – New ISA or binary translation
  – SW: Identifies instr dependency
  – HW: Sends a group of instrs to FIFO issue queues
• Shortcomings
  – Lose binary compatibility
• My way
  – No SW reliance
  – Exploit dynamic dependency

Vs. Multiscalar
• Similarity
  – ILP extraction from sequential threads
  – Parallel execution resources
• Differences
  – Divide by data dependency, not control dependency
    • Less communication b/w resources
    • No imposed resource ordering
  – Communication via multi-cast rather than ring
  – Higher resource utilization
  – No load balancing issue

Approach
• Simple building blocks for low power
  – In-order EUs
• Sea of resources
  – Power-Performance tradeoff through resource allocation
• Distributed buffering for:
  – Latency tolerance
  – Coarse-grain out-of-order (OoO)
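
The claim that distributed in-order buffers give latency tolerance and coarse-grain OoO can be illustrated with a toy cycle-level model. This is only a sketch under stated assumptions: the function name `run`, the four-instruction program, and the latencies (a 4-cycle load-like instruction `A`, 1-cycle everything else) are hypothetical and are not taken from the talk's simulator. Each FIFO issues at most its head instruction per cycle, and only once that instruction's sources have completed.

```python
from typing import Dict, List


def run(buffers: List[List[str]],
        latency: Dict[str, int],
        deps: Dict[str, List[str]]) -> int:
    """Return the cycle at which the last result becomes available."""
    done_at: Dict[str, int] = {}          # instr -> cycle its result is ready
    queues = [list(q) for q in buffers]   # copy so the caller's lists survive
    cycle = 0
    while any(queues):
        for q in queues:
            if not q:
                continue
            head = q[0]  # in-order: only the head of each FIFO may issue
            ready = all(done_at.get(s, float("inf")) <= cycle
                        for s in deps[head])
            if ready:
                done_at[head] = cycle + latency[head]
                q.pop(0)
        cycle += 1
        if cycle > 10_000:  # guard against a mis-steered (deadlocked) input
            raise RuntimeError("no forward progress")
    return max(done_at.values())


latency = {"A": 4, "B": 1, "C": 1, "D": 1}            # assumed latencies
deps = {"A": [], "B": ["A"], "C": [], "D": ["C"]}     # B needs A, D needs C

one_fifo = run([["A", "B", "C", "D"]], latency, deps)
two_fifos = run([["A", "B"], ["C", "D"]], latency, deps)
print(one_fifo, two_fifos)  # 7 5
```

With a single FIFO, `B` waits on the slow `A` and blocks the independent chain `C`, `D` behind it. With one dependence chain per buffer, `C` and `D` proceed while `B` is stalled, which is the coarse-grain reordering the slides describe.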