Dynamic Verification of Sequential Consistency
Albert Meixner, Daniel J. Sorin

advertisement

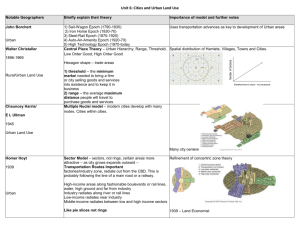

Albert Meixner, Dept. of Computer Science, Duke University
Daniel J. Sorin, Dept. of Electrical and Computer Engineering, Duke University

Introduction
• Multithreaded systems are becoming ubiquitous
• Commercial workloads rely heavily on parallel machines
• Reliability and availability are crucial
• Backward Error Recovery can provide high availability
  • Recover to a known good state upon error
  • But can only recover from errors that are detected in time
• The memory system is of special interest
  • Complex: many components, large transistor count
  • Numerous error hazards

Memory System Error Detection
• Must cover all memory system components
  • DRAMs, caches, controllers, interconnect, and write buffers
• Mechanisms for individual components exist
  • Storage structures: ECC
  • Interconnect: checksums, sequence numbering
  • Cache and memory controllers: replication
• Adding detection to all components is hard
  • Complicates the design of every component
  • Requires good intuition about component interactions and possible errors
Want comprehensive, end-to-end error detection

Dynamic Verification
• Dynamic verification
  • Correct system operation is constantly monitored at runtime
  • End-to-end scheme
  • Detects transient errors, design bugs, and manufacturing errors
  • Differs from statically verifying that a design is bug-free
• High-level invariants are checked instead of individual components
  • Simplified design of system components
  • Can detect any low-level error that violates an invariant

Memory Consistency
• Memory consistency model
  • Formal specification of memory system behavior in a multithreaded system
  • Defines the order in which memory accesses from different CPUs can become globally visible
  • Many consistency models exist; we focus on one
• Verifying memory consistency = verifying correctness of the memory system
  • Ideal invariant for dynamic verification

Sequential Consistency (SC)
• Requires the appearance of a total global order of all loads and stores in the system
  • Each load must receive the value of the most recent store to the same address in the total order
  • The program order of every processor is preserved in the total order
• SC is the most intuitive consistency model
  • Good for programmers
  • Speculation can make SC almost as fast as more relaxed models
• Our contribution: Dynamic Verification of Sequential Consistency (DVSC)

Outline
• Introduction
• DVSC-Direct
• DVSC-Indirect
• Results
• Conclusion

DVSC-Direct
[Figure: each CPU sends its loads and stores, in program order, to a verifier that reconstructs a single global order]
• CPU 1 program order: t=1.1 LD A→1, t=2.1 ST B←2, t=3.1 LD A→2
• CPU 2 program order: t=1.2 LD C→1, t=2.2 ST A←2, t=3.2 LD C→1

DVSC-Indirect: Idea
• Verify conditions sufficient for Sequential Consistency
  • In-order performance of memory operations
  • Cache coherence
  • Conditions formally defined and proven by Plakal et al. [SPAA 1998]
• Two mechanisms
  • On-chip checker for in-order performance
  • Distributed checker for cache coherence

In-Order Performance Verification
• A load of block B receives the value of either the most recent local store to B or the most recent global store to B performed after all local stores
• Trivially observed on an in-order processor with coherent caches
• Modern processors execute out of order
  • Results of out-of-order execution are considered speculative until in-order re-execution and verification
• DVSC-Indirect uses the DIVA checker core by Austin [Micro 1999]
  • Could substitute other mechanisms

Cache Coherence
• All processors observe the same order of stores to a given memory location
• Difficult because the same memory location can exist in different caches
• Maintained by a coherence protocol
  • Different protocols: MOSI, MSI, MOESI, Token Coherence, …
  • Different maintenance mechanisms: directory, snooping
• Verification uses "divide and conquer"
  • Verify conditions provably sufficient for cache coherence
  • Initially defined for a proof of sequential consistency by Plakal et al. [SPAA 1998]

Cache Coherence Verification
Epoch: the time interval between obtaining and losing permissions on a block
• Coherence conditions
  1. Cache accesses are contained in an epoch
     • Stores in read-write epochs
     • Loads in read-write or read-only epochs
  2. Read-write epochs do not overlap other epochs
  3. Block data at the beginning of an epoch equals block data at the end of the last read-write epoch
• Verification
  • Check whether accesses are in an appropriate epoch during DIVA replay
  • Collect epoch information at every node and send it to the verifier
  • Verifier checks the epoch history for overlaps and data propagation

Implementation Overview
[Figure: three nodes, each pairing a CPU core with a DIVA checker and a cache that records epochs; the caches connect over the interconnect to memory controllers, each of which collects epochs, verifies them, and keeps an epoch history]

At the Cache Controller
• All caches keep track of active epochs in the Cache Epoch Table (CET)
• Every DIVA cache access checks the CET for an active epoch
  • Ensures the access is contained in an epoch
• An Epoch Inform is sent to the memory controller when an epoch ends
  • Fields: type (read-write or read-only), begin time, begin data, end time, end data
  • Begin and end data are hashed
• Verification is off the critical path
  • Second-order performance effect from bandwidth usage

At the Memory Controller
• Check for epoch overlaps and correct value propagation
• Generally requires the entire block history → O(N) space
• If epoch informs are processed in order…
  • Need the end value of the last read-write epoch for the propagation check
  • Need the end time of the last read-write and last read-only epoch for the overlap check
  • O(1) space
• Epochs arrive almost in order
  • Fix remaining reorderings in a priority queue before verification
• Epoch state is kept in the Memory Epoch Table (MET)
  • Last end time of read-only and read-write epochs, last value

Experimental Evaluation
• Empirically determine error detection capability
  • Error injection into caches, controllers, interconnect, switches, etc.
• Quantify error-free overhead
  • Increase in interconnect bandwidth consumption
  • Potential decrease in application performance

Simulation Methodology
• Full-system simulation of an 8-CPU UltraSPARC SMP
  • Simics functional simulation
  • GEMS-based timing simulation
• 2 GB RAM, 4-way 32 KB I+D L1, 4-way 1 MB L2
• SafetyNet for backward error recovery
• MOSI-Directory and MOSI-Snooping

Benchmarks
• Apache 2: static web server
• SPECjbb: 3-tier Java system
• OLTP: online transaction system with DB2
• Slashcode: dynamic website with Perl and MySQL
• Barnes: Barnes-Hut from SPLASH-2

Bottleneck Link Bandwidth
[Figure: Bottleneck Link Bandwidth; chart annotation: "Directory slower"]

Error-Free Runtime - Directory
[Figure: Error-Free Runtime - Directory]

Conclusions
• DVSC-Direct and DVSC-Indirect enable end-to-end verification of the memory system
• DVSC-Indirect imposes acceptable hardware and performance overhead
• An extension of DVSC-Indirect to relaxed consistency is currently under development

Questions?
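Backup sketch for the DVSC-Direct slide. That slide shows a verifier merging per-CPU operation streams into one global order. The minimal Python sketch below illustrates the idea. It rests on several assumptions:
• Every operation carries a unique logical timestamp, and that timestamp defines its place in the total order.
• A and C start with value 1, because the figure's first loads of A and C return 1 before any store.
• The names `MemOp` and `verify_sc` are hypothetical.

The sketch checks the two SC rules from the Sequential Consistency slide. It is not the authors' hardware design.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class MemOp:
    time: float  # logical timestamp; assumed unique and to define the global order
    kind: str    # "LD" or "ST"
    addr: str
    value: int   # value loaded, or value stored


def verify_sc(per_cpu: Dict[int, List[MemOp]], initial: Dict[str, int]) -> Optional[str]:
    """Return None if the trace is sequentially consistent, else the first violation found."""
    # Rule 1: each CPU's program order must be preserved in the total order.
    for cpu, ops in per_cpu.items():
        for earlier, later in zip(ops, ops[1:]):
            if earlier.time >= later.time:
                return f"CPU {cpu}: program order violated at t={later.time}"

    # Merge all CPUs' operations into one total order by timestamp.
    global_order = sorted((op for ops in per_cpu.values() for op in ops), key=lambda op: op.time)

    # Rule 2: each load returns the value of the most recent store to the same address.
    memory = dict(initial)
    for op in global_order:
        if op.kind == "ST":
            memory[op.addr] = op.value
        elif memory.get(op.addr) != op.value:
            return f"t={op.time}: LD {op.addr} returned {op.value}, expected {memory.get(op.addr)}"
    return None


# The two program orders from the DVSC-Direct figure (initial values are assumed).
trace = {
    1: [MemOp(1.1, "LD", "A", 1), MemOp(2.1, "ST", "B", 2), MemOp(3.1, "LD", "A", 2)],
    2: [MemOp(1.2, "LD", "C", 1), MemOp(2.2, "ST", "A", 2), MemOp(3.2, "LD", "C", 1)],
}
print(verify_sc(trace, initial={"A": 1, "B": 0, "C": 1}))  # None
```

Suppose CPU 1's last load is changed to `LD A→1`. The checker then reports a violation. In the merged order, `ST A←2` at t=2.2 precedes that load at t=3.1.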
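Backup sketch for the At the Cache Controller slide. The Python sketch below models one cache's Cache Epoch Table. It makes these assumptions:
• Each block has at most one active epoch.
• Timestamps are integers.
• CRC-32 (`zlib.crc32`) stands in for the unspecified data hash.
• `CacheEpochTable`, `EpochInform`, and the method names are hypothetical.

The sketch shows three things: how a DIVA replay access is checked against the active epoch, and how an Epoch Inform carrying hashed begin and end data is produced when the epoch ends.

```python
import zlib
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class EpochInform:
    block: int
    kind: str         # "RW" (read-write) or "RO" (read-only)
    begin_time: int
    begin_hash: int   # hash of block data when the epoch began
    end_time: int
    end_hash: int     # hash of block data when the epoch ended


def block_hash(data: bytes) -> int:
    # Stand-in hash; the slides only say that begin and end data are hashed.
    return zlib.crc32(data)


class CacheEpochTable:
    """Hypothetical model of one cache's CET: at most one active epoch per block."""

    def __init__(self) -> None:
        # block -> (kind, begin_time, begin_hash)
        self._active: Dict[int, Tuple[str, int, int]] = {}

    def begin_epoch(self, block: int, kind: str, now: int, data: bytes) -> None:
        # Called when the cache obtains read-only ("RO") or read-write ("RW") permission.
        # An RO-to-RW upgrade is modeled as end_epoch followed by begin_epoch.
        if block in self._active:
            raise ValueError(f"block {block:#x} already has an active epoch")
        self._active[block] = (kind, now, block_hash(data))

    def check_access(self, block: int, is_store: bool) -> bool:
        # Called during DIVA replay: stores need an RW epoch, loads need an RW or RO epoch.
        entry = self._active.get(block)
        if entry is None:
            return False
        return entry[0] == "RW" or not is_store

    def end_epoch(self, block: int, now: int, data: bytes) -> EpochInform:
        # Called when permission is lost; the inform goes to the block's memory controller.
        kind, begin_time, begin_hash = self._active.pop(block)
        return EpochInform(block, kind, begin_time, begin_hash, now, block_hash(data))


cet = CacheEpochTable()
cet.begin_epoch(block=0x40, kind="RO", now=10, data=bytes(64))
assert cet.check_access(0x40, is_store=False)      # load inside a read-only epoch: allowed
assert not cet.check_access(0x40, is_store=True)   # store inside a read-only epoch: error
inform = cet.end_epoch(0x40, now=25, data=bytes(64))
```

Only the hashes leave the cache. This matches the slide's point that verification stays off the critical path and costs mainly interconnect bandwidth.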
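Backup sketch for the At the Memory Controller slide. The slide keeps O(1) state per block by processing Epoch Informs in begin-time order. The Python sketch below illustrates that check under two assumptions:
• Informs are held in a priority queue keyed on begin time.
• Informs are released once a caller-supplied `safe_time` guarantees that no earlier-beginning inform is still in flight. This release policy is hypothetical; the slides do not say how the reorder window is bounded.

`MemoryEpochVerifier` and `METEntry` are illustrative names. Overlapping read-only epochs can end in any order, so the sketch keeps the latest read-only end time rather than the end time of whichever read-only epoch happened to be processed last.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class EpochInform:
    block: int
    kind: str         # "RW" or "RO"
    begin_time: int
    begin_hash: int
    end_time: int
    end_hash: int


@dataclass
class METEntry:
    last_rw_end: int = 0  # end time of the last read-write epoch
    last_ro_end: int = 0  # latest end time of any read-only epoch seen so far
    last_value: int = 0   # data hash at the end of the last read-write epoch


class MemoryEpochVerifier:
    """Hypothetical per-memory-controller checker with O(1) state per block."""

    def __init__(self, initial_hashes: Dict[int, int]) -> None:
        self._initial = initial_hashes  # block -> hash of its initial memory contents
        self._met: Dict[int, METEntry] = {}
        self._queue: List[Tuple[int, int, EpochInform]] = []
        self._seq = itertools.count()  # tie-breaker so informs are never compared directly

    def receive(self, inform: EpochInform) -> None:
        heapq.heappush(self._queue, (inform.begin_time, next(self._seq), inform))

    def drain(self, safe_time: int) -> List[str]:
        # Verify, in begin-time order, every queued inform that began at or before safe_time.
        errors = []
        while self._queue and self._queue[0][0] <= safe_time:
            _, _, inform = heapq.heappop(self._queue)
            error = self._verify(inform)
            if error is not None:
                errors.append(error)
        return errors

    def _verify(self, e: EpochInform) -> Optional[str]:
        m = self._met.setdefault(e.block, METEntry(last_value=self._initial.get(e.block, 0)))
        # Condition 2: no epoch may start before the last RW epoch ended, and an RW epoch
        # may not start before every earlier RO epoch has ended.
        if e.begin_time < m.last_rw_end or (e.kind == "RW" and e.begin_time < m.last_ro_end):
            return f"block {e.block:#x}: overlap at t={e.begin_time}"
        # Condition 3: data at epoch begin must equal data at the end of the last RW epoch.
        if e.begin_hash != m.last_value:
            return f"block {e.block:#x}: stale data at t={e.begin_time}"
        if e.kind == "RW":
            m.last_rw_end, m.last_value = e.end_time, e.end_hash
        else:
            m.last_ro_end = max(m.last_ro_end, e.end_time)
        return None


v = MemoryEpochVerifier(initial_hashes={0x40: 111})
v.receive(EpochInform(0x40, "RO", 5, 111, 20, 111))
v.receive(EpochInform(0x40, "RO", 0, 111, 10, 111))   # arrives out of order
v.receive(EpochInform(0x40, "RW", 20, 111, 30, 222))
print(v.drain(safe_time=100))  # []
```

Now submit a read-only epoch that begins at t=25, before the read-write epoch's end at t=30. A later `drain` would then report `block 0x40: overlap at t=25`.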