Chapter 6: Step 4, Knowing What the Learner Knows

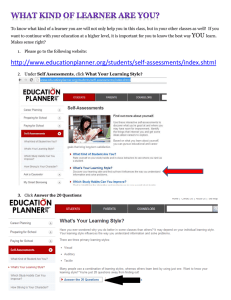


Keep These Things in Mind While Reading
- What is the difference between assessment and evaluation?
- What is the purpose of learner assessment?
- What is the difference between norm- and criterion-referenced assessment?
- What are some techniques of learner assessment for measuring select, organize, and integrate objectives?

Chapter Introduction
You now see the puppeteer with the "4" marionette highlighted. This marionette represents the fourth and final step in the 4-Step process. This is the step where you focus on assessing what the learner knows as a result of all your efforts to educate or train. In the image you see audience thought bubbles with various reactions to the puppet show. Step 4, Knowing What the Learner Knows, attempts to see into the learners’ minds to check whether they have achieved your objective.

Although the first three steps of our process are important, it is this last step, Knowing What the Learner Knows, that tells us how well we’ve done our jobs as instructional designers. This information not only provides a measure of the learner’s progress but also helps guide the designer in revising the instruction and developing additional materials.

This chapter presents a simple approach to the most basic types of assessment: true/false, short answer, essay, multiple-choice, and rubrics. As always, our focus is on giving you a clear picture of designing a unit of instruction. To keep your cognitive load optimal, we focus this chapter on the types of assessment you are most likely to use for a single unit of instruction. Assessment is a very large and important topic, so we encourage you to explore the assessment literature specifically related to the instructional strategies you favor.

Chapter Outline
1. The purpose of learner assessment
2. Norm- and criterion-referenced assessment
3. Select, organize, and integrate levels of assessment
4. Assessment techniques
   a. Assessing selection
      i. Multiple-choice
      ii. True/false
      iii. Fill-in-the-blank
   b. Assessing organization
      i. Short essays
      ii. Demonstrations
      iii. Rubrics
   c. Assessing integration
      i. Self-evaluation

The Purpose of Learner Assessment
The first question to ask yourself is why you are interested in knowing what the learner knows. When you are first developing instructional materials, you usually assess learners to see whether the materials need to be changed. Later, once you have greater confidence in the materials, you will want to find out whether the learner has attended to them properly. Although the testing may be similar, the two purposes differ. In the first case, you need as much information as possible to help you revise the materials; in the second case, the testing may result in a decision to give the learner remedial instruction or to prevent them from moving forward in a program until they can demonstrate proficiency.

At this point, it’s important to define two terms: evaluation and assessment. Evaluation is the systematic process of comparing progress against stated outcomes. Individuals can be evaluated, but so can programs, projects, and organizations. In addition, evaluation can occur during instructional development (i.e., formative evaluation) or when instructional development is essentially complete (i.e., summative evaluation). In contrast with evaluation, assessment is a summative or final measure of an individual against stated outcomes. In the context of this book, we are interested primarily in assessing a learner against the learning outcomes once they have completed the educational materials or intervention. We’ll refer to this as the learner assessment.

Norm- and Criterion-Referenced Assessment
The purpose of a learner assessment depends upon the type of assessment you conduct. Two common types of assessment are norm-referenced and criterion-referenced.
Norm-referenced assessment compares a student’s learning or ability with that of similar individuals. The goal of norm-referenced testing is a ranked list of individuals on a given test or measure. The bell curve, which shows a statistical distribution of scores, is commonly used to interpret norm-referenced results. Criterion-referenced assessment compares a student’s learning against a predetermined list of learning outcomes. The goal of criterion-referenced testing is a determination of mastery, or successful completion, of those outcomes. It is this type of assessment we cover in this chapter.

Designers are advised to write an assessment measure at the same time they write their objective. Why? Because the behavior they want the learner to achieve should be the behavior on which the learner is assessed. Here are some examples:
- For the objective “TLW eat a healthy diet”, the “d” or degree might be stated as: by selecting a diet that consists of 60% fruits or vegetables.
- For the objective “TLW practice healthy activities”, the “d” or degree might be stated as: by exercising 50 minutes three times a week.
- For the objective “TLW value healthy consumption of dairy products”, the “d” or degree might be stated as: by choosing low-fat milk or ice cream.

Select, Organize, and Integrate Levels of Assessment
The way you write the “d” (degree) depends upon the level of learning desired. For example, a select-level objective requires only recognize-level assessment. Labeling, circling, or multiple-choice items would be appropriate for a recognize-level behavioral goal. Why? Because these types of activities allow you to assess whether the learner has in fact noticed or “recognized” something. When writing recognition-level assessment items, you are addressing selection: did the learner see, recognize, or focus on a particular aspect of instruction?
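Recognition-level items such as multiple-choice questions produce raw scores that are easy to compute, and the same scores can be read in the two ways described earlier in this chapter. The Python sketch below is one illustration, not a method prescribed by this book. The learner names, the scores, and the 80-point mastery cutoff are all hypothetical, and "percent of the group scoring below" is only one of several common definitions of percentile rank.

```python
# A minimal sketch contrasting norm-referenced and criterion-referenced
# interpretation of the same scores. Names, scores, and cutoff are hypothetical.


def percentile_rank(score: float, group: list[float]) -> float:
    """Norm-referenced view: percent of the comparison group scoring below `score`."""
    below = sum(1 for s in group if s < score)
    return 100.0 * below / len(group)


def has_mastered(score: float, cutoff: float) -> bool:
    """Criterion-referenced view: compare the score with a fixed mastery cutoff."""
    return score >= cutoff


scores = {"Ana": 92, "Ben": 78, "Cho": 85, "Dev": 61}  # hypothetical learners
MASTERY_CUTOFF = 80  # hypothetical predetermined criterion

group = list(scores.values())
# Sorting by score produces the ranked list that norm-referenced testing aims for.
for name in sorted(scores, key=scores.get, reverse=True):
    s = scores[name]
    print(f"{name}: percentile {percentile_rank(s, group):.0f}, "
          f"mastered={has_mastered(s, MASTERY_CUTOFF)}")
```

The norm-referenced view ranks learners relative to one another even if every one of them cleared the cutoff, whereas the criterion-referenced view asks only whether each learner reached the predetermined outcome.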
An organize-level objective requires assessment that indicates higher-level thinking than recognition. Short and long essays, paraphrasing, journaling, drawings, mind mapping, and any type of personalized practice (mental and physical) are considered organize-level assessment measures. When you are writing an organize-level assessment for cognitive skills (as opposed to psychomotor skills), you may need to provide the learner with cues to stimulate recall. By cuing recall, you give the learner something to work with. For example, if you ask students to describe their last vacation with as many adjectives as possible, you might cue their memory by asking them to work with a photograph from the vacation. If you want a student to describe an event from a particular era, you might stimulate their memory by having them play music from that time in history.

Integrate-level objectives require assessment of the learner’s ability to transfer learning to a novel situation. You ask the learner to apply what they learned to increasingly different and ultimately new situations. Projects, analysis papers, poems, drawings, presentations, and any other strategies that require the learner to create new or different interpretations of the content usually require integrate-level understanding. Below you see ideas for select, organize, and integrate levels of assessment.

Assessment Techniques
Below are suggestions for writing multiple-choice questions, true/false questions, fill-in-the-blank questions, rubrics, demonstrations, short essays, and self-evaluations. The organization of these techniques into select, organize, and integrate categories is a suggestion only. There may be times when you use any of these techniques for any level of learning.

Assessing Selection

How to Write Multiple-Choice Questions
A multiple-choice question consists of three parts:
1. The question,
2. The correct (or most correct) answer, and
3. A list of “distracter” (incorrect) answers.
Each of these parts has one or more guidelines associated with it.

1. Rules of thumb for writing the question
- Write the question clearly. Do not try to “trick” the learner with misleading information or ambiguous prose.
- The question can contain information that is not relevant to the correct answer, but make sure you do not use information that is obviously misleading.
- Make the question the longest part of the multiple-choice item.

2. Rules of thumb for writing the correct answer
- Do not make the correct answer too obvious or too subtle.
- Avoid “All of the above” or “None of the above” choices, or any other option that requires logical reasoning in addition to the learning being examined.

3. Rules of thumb for writing the distracters
- Do not make the distracters obviously wrong.
- Make the distracters plausible alternatives.
- Ideally, each distracter would be selected by an equal number of learners who do not know the correct answer.
- It is a common mistake to make the correct answer the longest of the available answers. Avoid this by varying the length of the distracters in relation to the correct answer.

How to Write True/False Questions
- Use true/false questions only when the information is clearly true or clearly false.
- Use simply constructed sentences for true/false statements.
- Provide a clear, unambiguous way for learners to respond.

How to Write Fill-in-the-Blank/Short Answer Questions
- Use only simple sentences.
- Make sure that the missing word or phrase is a specific term (e.g., “pressure”) or qualifying word (e.g., “increase”).
- Make it clear how many words or what length of response is required.
- When these items are used in addition to recognition items, confirm that the recognition items are not cuing performance on these items.
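The distracter guideline above (each distracter ideally chosen by an equal number of learners who do not know the answer) can be checked once an item has been tried out with learners. The following Python sketch is a hypothetical illustration, not a procedure from this chapter: it counts how often each distracter was chosen by learners who missed the item and flags any distracter that draws well under an equal share. The response data and the 0.5 tolerance are assumptions chosen only for demonstration.

```python
from collections import Counter


def distracter_counts(responses: list[str], key: str, options: list[str]) -> dict[str, int]:
    """Count how many learners who missed the item chose each distracter."""
    chosen = Counter(r for r in responses if r != key)
    return {opt: chosen[opt] for opt in options if opt != key}


def weak_distracters(counts: dict[str, int], tolerance: float = 0.5) -> list[str]:
    """Flag distracters drawing far fewer wrong answers than an equal share.

    `tolerance` is a hypothetical threshold: a distracter is flagged when it
    draws fewer than tolerance * (equal share) of the incorrect responses.
    """
    total_wrong = sum(counts.values())
    if total_wrong == 0:
        return []  # nobody missed the item, so there is nothing to compare
    equal_share = total_wrong / len(counts)
    return [opt for opt, n in counts.items() if n < tolerance * equal_share]


# Hypothetical answers from 12 learners to one item whose key is "B".
responses = list("BBCACBDBCABC")
counts = distracter_counts(responses, key="B", options=["A", "B", "C", "D"])
print(counts)                    # {'A': 2, 'C': 4, 'D': 1}
print(weak_distracters(counts))  # ['D']
```

In this made-up data, distracter D drew only one of the seven incorrect responses, so it is flagged as possibly too obviously wrong and a candidate for rewriting.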
Assessing Organization

How to Design Demonstration Assessments
- Prior to assessment (during instruction), make it clear to students what performance is expected and what cues will be provided when they are assessed.
- Ensure that students are not asked to demonstrate skills or mastery using methods unrelated to mastery or in conflict with their developmental readiness. For example, asking young children to draw diagrams to show mastery of verbal skills such as saying "please" or "thank you" would not measure the desired skills. What would be measured instead is their ability to draw diagrams, a skill most young children have not yet developed.
- Prepare a scoring guide or rubric that will be used to evaluate responses. It is usually a good idea to share it with learners prior to the actual assessment.

How to Write Short Essay Questions (Applies to Integration as Well)
- Describe the approximate length of an expected answer (e.g., 1/2 page, single spaced, 12-point Times font).
- For lower levels of learning, provide examples of good answers prior to the assessment.
- For higher levels of learning, do NOT provide examples of good answers. Students will likely attempt to memorize prior examples rather than think of unique responses.
- For transfer questions, provide criteria of good answers for learners to use in self-evaluation practice.
- Clearly specify what is required in an acceptable answer.

Creating Effective Rubrics (Applies to Integration as Well)
- Criteria should be as unambiguous as possible.
- Specify levels for distinguishing between minimal and higher-level performance as needed.
- Use checkmarks for easily identifiable criteria.
- Allow room for the rater’s comments when the reason a criterion was or was not met is not obvious.
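The rubric guidelines above (unambiguous criteria, levels that separate minimal from higher performance, checkmarks for easily identifiable criteria, and room for rater comments) map naturally onto a small data structure. The Python sketch below is a hypothetical illustration only. The class names, the example short-essay criteria, the default "lowest acceptable level" of 1, and the convention that a checkmark criterion is a two-level "not met / met" row are all assumptions, not part of this chapter.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One rubric row; levels are ordered from lowest to highest."""
    description: str
    levels: list[str]
    minimum: int = 1  # index of the lowest acceptable level (hypothetical default)


# Hypothetical convention: a checkmark criterion is a two-level row.
CHECK = ["not met", "met"]


@dataclass
class Rating:
    """A rater's judgment on one criterion for one learner."""
    criterion: Criterion
    level: int  # index into criterion.levels
    comment: str = ""  # rater's note when the judgment is not obvious

    def meets_minimum(self) -> bool:
        return self.level >= self.criterion.minimum


def report(ratings: list[Rating]) -> str:
    """Render one line per criterion plus an overall minimum-performance check."""
    lines = []
    for r in ratings:
        mark = "x" if r.meets_minimum() else " "
        line = f"[{mark}] {r.criterion.description}: {r.criterion.levels[r.level]}"
        if r.comment:
            line += f" ({r.comment})"
        lines.append(line)
    if all(r.meets_minimum() for r in ratings):
        lines.append("Overall: meets minimum on every criterion")
    else:
        lines.append("Overall: below minimum on at least one criterion")
    return "\n".join(lines)


# Hypothetical rubric for a short essay.
cites_sources = Criterion("Cites at least two sources", CHECK)
organization = Criterion("Organization", ["unclear", "adequate", "clear", "exemplary"])

ratings = [
    Rating(cites_sources, 1),
    Rating(organization, 0, comment="main point not stated until the end"),
]
print(report(ratings))
```

Keeping the levels as ordered lists makes the boundary between minimal and higher-level performance explicit, and the optional comment field gives the rater room to justify a judgment that is not obvious.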
Assessing Integration

How to Create High-Level Self-Evaluations (Transfer)
- Require learners to respond concretely and completely before they begin evaluating the response.
- Record responses for learner review (via text, recorded audio, or recorded video).
- After learners respond, display learner and expert performance in like media if possible.
- Provide prompting questions or self-evaluation items after performance is recorded.
- Require learners to suggest ways in which their performance can be improved before the next cycle of evaluation.
- Allow repetition of the performance/evaluation cycle until performance is proficient (individuals may vary greatly in the time and effort needed to attain proficiency).
- Do not allow unlimited access to evaluation. Student performance will often plateau in any specific area, making additional practice increasingly counterproductive. Also, students tend to practice the skills they do well more than those they don’t, in order to achieve success.

Know These Terms!
Assessment – a summative or final measure of an individual against stated outcomes.
Criterion-Referenced assessment – assessment of a student’s learning or ability against a predetermined list of learning outcomes.
Evaluation – the systematic process of comparing progress against stated outcomes.
Norm-Referenced assessment – assessment of a student’s learning or ability against that of similar individuals.

Summary
In the previous chapters, you’ve learned the first three steps in the 4-Step process: Sizing up the learners, Stating the Outcome, and Making it Happen. This chapter covers the fourth step: Knowing What the Learner Knows. Knowing What the Learner Knows provides a measure of the learner’s progress and guides the designer in revising the instruction. It is important to distinguish between two terms, evaluation and assessment.
Evaluation is the systematic process of comparing progress against stated outcomes, while assessment is a summative or final measure of an individual against stated outcomes. It is also important to distinguish two common types of assessment: norm-referenced and criterion-referenced. Norm-referenced assessment compares a student’s learning with that of similar individuals. Criterion-referenced assessment compares a student’s learning against a predetermined list of learning outcomes. The chapter also introduced techniques for writing items at the recognition, recall, and transfer levels, including multiple-choice questions, true/false questions, fill-in-the-blank/short answer questions, and short essay questions, as well as creating rubrics and high-level self-evaluations.

Discussion Question
The words assessment and evaluation have different meanings. Create a lesson or example to teach instructional designers how to use the terms correctly.

For More Information
Bloom, B. S., Hastings, J. T., & Madaus, G. F. (1971). Handbook on formative and summative evaluation of student learning. New York: McGraw-Hill.
George, J., & Cowan, J. (1999). A handbook of techniques for formative evaluation: Mapping the student’s learning experience. London: Kogan Page.
Tessmer, M. (1993). Planning and conducting formative evaluation: Improving the quality of education and training. London: Kogan Page.

End of Chapter 6