CAS EXAMINATION PROCESS

CAS Exam Committee presents:

CAS EXAMINATION PROCESS

Steve Armstrong, FCAS

Daniel Roth, FCAS

Manalur Sandilya, FCAS

Tom Struppeck, FCAS

2005 CAS Annual Meeting – Session C8

Baltimore

CAS Examination Process Update

Fall 2000 – CAS Issues RFP for External Review of Admissions Processes

The Chauncey Group (Subsidiary of ETS) Selected

Spring 2001 – Chauncey Group Conducts Audit of CAS Admissions Processes

Audit Findings

The CAS Does Many Things Well:

• Good Communication with Candidates

• Sound Procedures for Maintaining Confidential Information

• Exams are Administered with Appropriate Controls and Standardized Procedures

Audit Findings

Several Areas for Improvement:

• Need Better Link Between Learning Objectives and Exams/Readings

• Learning Objectives and Exam Blueprints Should be Published

• Need Better Training of Item Writers

• Need to Consider Alternative Processes for Selecting Pass Marks

Major Objectives

The Chauncey Group Engaged to Help CAS With Three Issues:

• Write Better Learning Objectives and Establish Links to Readings/Exams

• Develop a Process for Training Item Writers

• Pilot an Alternative Process for Selecting Pass Marks

Major Milestones

2001 – Chauncey Began Facilitating Meetings to Write Learning Objectives

2001 – Pass Mark Panels Pilot

2002 – Item Writer Training Pilot

2003 – Executive Council Agrees to Fund Item Writer Training and Pass Mark Panels as Ongoing Processes

2003 – Executive Council Approves New Learning Objectives

Learning Objectives

The way things were:

– What topics should successful candidates understand?

– What readings should they know?

The way things are now:

– What should successful candidates be able to DO?

Learning Objectives

The way things were:

– Individual topics and readings were the basis for assigning the writing of exam questions

The way things are now:

– Learning Objectives are the basis for assigning the writing of exam questions

Learning Objectives

The Syllabus Committee has developed Learning Objective Documents for CAS Exams 3, 5, 6, 7-US, 7-Canada, 8 and 9 and also the VEE exams

There are simpler and more direct Learning Objectives for the Joint Exams 1, 2, & 4

Learning Objective Documents

Five Elements

• Overview Statement for a Group of Learning Objectives

• Learning Objectives

• Knowledge Statements

• Syllabus Readings

• Weights

Learning Objective Documents

Overview Statements

• Certain Syllabus Sections Can Have Multiple Learning Objectives (e.g., Ratemaking)

Learning Objective Documents

Learning Objectives

• What successful candidates should be able to do

• Learning Objectives Should:

– Clearly state a main intent

– Reflect a measurable outcome

– Support an attainable behavior

– Relate to the learner’s needs or job function

– Have a definitive time frame

Learning Objective Documents

Knowledge Statements

• Support Learning Objectives

• In order to accomplish the objective, what does the learner need to know?

Learning Objective Documents

Readings

• An individual reading may be listed under more than one learning objective

• Readings listed under multiple objectives may facilitate more synthesis/reasoning/cross-topic Exam questions

Learning Objective Documents

Weights (by Learning Objective)

• Will be shown as ranges

• The ranges are guidelines and are not intended to be absolute

• Advantages of old-style “blueprints” without disadvantages

• Will (perhaps) end practice of candidates calculating de facto weights by reading or topic from past Exams
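As an illustration of how range-style weights might be used, the sketch below checks whether the share of exam points assigned to each Learning Objective falls inside its published range. The objective labels, ranges, and point totals are hypothetical and are not taken from any CAS Learning Objective Document. Because the ranges are guidelines, the function only flags deviations and does not reject an exam.

```python
from typing import Dict, List, Tuple


def flag_out_of_range(
    points: Dict[str, float],
    ranges: Dict[str, Tuple[float, float]],
) -> List[str]:
    """Return objectives whose share of total exam points (in percent)
    falls outside the guideline range for that objective."""
    total = sum(points.values())
    flagged = []
    for objective, (low, high) in ranges.items():
        share = 100.0 * points.get(objective, 0.0) / total
        if not (low <= share <= high):
            flagged.append(objective)
    return flagged


# Hypothetical weight ranges (percent of exam) and draft point allocation.
ranges = {"A": (0, 10), "B": (20, 30), "C": (60, 80)}
points = {"A": 6, "B": 34, "C": 60}

flagged = flag_out_of_range(points, ranges)
print(flagged)  # objective "B" has 34% of the points, above its 20-30 range
```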


Learning Objectives and the Syllabus

Learning Objective Documents Provide High Level Guidance

– Review of Current Syllabus Material

– Identification of Topics Requiring New Syllabus Material

Weights help Syllabus Committee Target Specific Objectives

Future Changes to Learning Objective Documents

These are Living Documents

– Never Perfect

– Subject to Change

Updates – When and How Often?

– Not Yet Determined

– Once a Year Per Exam Seems Reasonable

– At Least Disruptive Time for Candidates

Future Changes to Learning Objective Documents

CAS Executive Council (VP-Admissions) Will Perform Oversight and Final Approval of Any Changes

– Just as it does with changes to the Syllabus

– Just as it has with the initial Learning Objective Documents

Learning Objective Summary

Transition to Published Learning Objectives Should Help the CAS Achieve:

– Better Syllabus Content and Exam Questions

– More Transparent Basic Education Process

– Better Model for Evaluating Future Changes to the Syllabus

– Better Model for Evaluating Future Changes to the Desired Education of Casualty Actuaries

Integrated Syllabus Material

Ratemaking for Catastrophes

Multiple issues

Multiple papers

Feedback from candidates

Need for Integrated Study Material

RFP process


Learning Objectives related to Ratemaking for Catastrophes

Two Learning Objectives

Operational Issues

Ratemaking Issues

0 – 10 weight spread

Two or more readings; Candidate feedback

Exam Committee feedback

Can we create an integrated study note?

Learning Objectives to Integrated Study Notes

Outline begins with Learning Objectives

Expand the outline based on Knowledge Statements

Add new Knowledge Statements where appropriate

Review the flow of ideas

Finalize the Study Notes

Writing Exam Questions

What makes a good exam question?

1) Should be easy to grade.

2) Should be answerable in a reasonable amount of time.

3) Should measure the student’s mastery of the material, ideally by doing something.

Not easy to achieve all of these.


Question 1

According to the errata, the “4.2” on page 93, line 15 should read “7.9”.

This satisfies two of the criteria.


Question 2

Struppeck gives six example test questions. List them.

This is better than Question 1, but it still isn’t giving the student a chance to show mastery of the material, only recall.


Question 3

Struppeck gives six example test questions. List them and describe them.

This is better than Question 2.


Question 4

Struppeck claims that Question 3 is better than Question 2. Explain why.

This would actually be a pretty good question.


Question 5

Write Question 7 and use Struppeck’s three criteria to evaluate it.

This might be a bit too open-ended to be graded easily.



Question 6

Use Struppeck’s three criteria to evaluate Question 6.

1) Easy to grade. OK

2) Can be done quickly. OK

3) Illustrates that we can use the three criteria to do something. OK

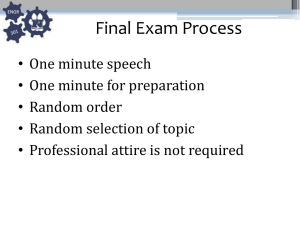

Grading Exam Questions

Grading assignments, identifying the question(s) you are assigned to grade, will be distributed immediately after the exam.

Questions are graded in pairs, just as exam questions are written in pairs.

A sample solution and a copy of the exam will be sent to the grading pair for review prior to getting the actual papers.

The actual papers come to the grading pair in the week or two following the examination.


Grading Exam Questions

Graders are encouraged to develop a grading key that accounts for the different combinations and permutations of answers that can be provided.

Graders are encouraged to apply this grading key to a random set of questions (between 20 and 40) to ensure the key is applied consistently.

The graders should meet after grading this set of random questions to reconcile any differences and change the answer key if necessary.


Grading Exam Questions

In the subsequent weeks, individual graders will grade every candidate’s response and log the points in a Grading Program.

Graders are encouraged to reconcile scores along the way so that this work does not monopolize the time spent at the on-site Grading Session.

The on-site Grading Session is now being conducted in Las Vegas and lasts for two days.


Grading Exam Questions

On-Site Grading Session

The on-site Grading Session allows the following:

Committee members to meet with one another.

A small allowance of time to reconcile all scores between graders to no more than a ¼ point difference (differs by Part Chair).

Establishment by the committee of an appropriate passing score.

Re-grading of the questions of those candidates who are within a range of the passing score.

Discussion of how to make the exam even better for next year.
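The reconciliation step can be pictured with a short sketch: given the points each grader in a pair logged for one question, list the candidates whose two scores differ by more than the tolerance. This is only an illustration; the candidate IDs and scores are made up, the function is not part of the Grading Program mentioned earlier, and the ¼ point tolerance is a default because the slide notes it differs by Part Chair.

```python
from typing import Dict, List

QUARTER_POINT = 0.25  # tolerance from the slide; may differ by Part Chair


def needs_reconciliation(
    grader_a: Dict[str, float],
    grader_b: Dict[str, float],
    tolerance: float = QUARTER_POINT,
) -> List[str]:
    """Return candidate IDs whose two logged scores differ by more than
    the tolerance, so the grading pair can discuss them."""
    return sorted(
        candidate
        for candidate in grader_a
        if abs(grader_a[candidate] - grader_b[candidate]) > tolerance
    )


# Hypothetical scores for one question from the two graders in a pair.
scores_a = {"c001": 2.00, "c002": 1.50, "c003": 0.75}
scores_b = {"c001": 2.25, "c002": 0.75, "c003": 0.75}

to_review = needs_reconciliation(scores_a, scores_b)
print(to_review)  # only "c002" differs by more than a quarter point
```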


Grading Exam Questions

On-Site Grading Session

After the first day, a group activity occurs at night to allow those on the committee to interact with one another in a less formal manner.

The on-site Grading Session is concluded when the group has established a mutually agreed upon passing score by inspecting all the relevant statistics and grading/re-grading those candidates around the passing score to ensure that their score is correct.

All final scores by candidate, including grading keys and model solutions, are left with the Part Chair to create the report to be sent to the Exam Officers.

What Have We Learned From The Chauncey Initiatives?

Questions should be focused on learning objectives, rather than individual papers

Triple True/False is not the only kind of multiple choice question

Art of selecting good “wrong” multiple choice answers


What Changes Should The Candidates See On The Exams?

Better questions

Questions with many possible full-credit answers

Fewer “according to” and “based on” questions

Heavy “list” papers may become open-book

Setting the Pass Mark

Identify Purpose of the Pass Mark

Convene Pass Mark Panel

Analyze Exam Statistics

Prepare Recommendation

Proceed through Approval Process


Purpose of the Pass Mark

Establish Objective Pass/Fail Criterion

Pass Minimally Qualified (or better) Candidates

Fail Others

Ensure Consistency between Sittings

Purpose of the Pass Mark

Passers

Failures

Minimally Qualified Candidate


Pass Mark Panel

Panel includes:

New Fellows (1-3 years)

Fellows experienced in practice area

Officers of exam committee

Recommends a pass mark independent of the normal exam committee procedures


Pass Mark Panel

Defines Minimally Qualified Candidate

What he or she should know

What he or she will not know

What he or she will be able to demonstrate on the exam

Relates Criteria to Learning Objectives for defining the minimally qualified candidate.


Pass Mark Panel

Each panelist independently estimates how 100 minimally qualified candidates will score on each question (and sub-part of each question).

Scores are assembled and shared in a group format.

Group discusses ratings and may change estimates

Facilitator compiles ratings and shares results with exam committee officers
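The slides do not say how the facilitator compiles the ratings. One plausible approach, assumed here purely for illustration, is to average the panelists' estimates for each question and sum those averages across the exam. In the sketch below each estimate is a panelist's expected average points for minimally qualified candidates on a question; the question labels and numbers are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def panel_pass_mark(estimates: Dict[str, List[float]]) -> float:
    """Average the panelists' estimates for each question, then sum the
    per-question averages to get a panel-recommended pass mark."""
    return sum(mean(per_panelist) for per_panelist in estimates.values())


# Hypothetical estimates from three panelists: expected average points
# earned by minimally qualified candidates on each question.
estimates = {
    "Q1": [1.5, 2.0, 1.0],
    "Q2": [2.0, 2.5, 3.0],
    "Q3": [0.5, 0.5, 0.5],
}

recommended = panel_pass_mark(estimates)
print(recommended)
```

If the group discussion leads panelists to change their estimates, the same function can simply be re-run on the revised numbers.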


Analyze Exam Statistics (back at the Grading Session in June)

Collect Initial Scores for All Candidates

Review/Discuss Key Measures

High, Low, Mean

Percentiles, Percentile Relationships

Pass Mark Panel Recommendation

Prior statistics from previous exams

CAS Board goal that 40% or more of the candidates should get a score of 70% or more on any given exam; and all candidates that get such a score should pass

Pick an initial pass mark and re-grade candidates within certain range of pass mark (+/- 3 points, for example)
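A minimal sketch of three of the calculations on this slide follows: the high, low, and mean; the share of candidates scoring 70% or more (for comparison with the Board goal); and the list of candidates inside the re-grade band around an initial pass mark. The candidate IDs, scores, 100-point exam total, and initial pass mark of 70 are all hypothetical; the ±3 point band is the example width given above.

```python
from statistics import mean
from typing import Dict, List


def key_measures(scores: Dict[str, float]) -> Dict[str, float]:
    """High, low, and mean of the initial scores."""
    values = list(scores.values())
    return {"high": max(values), "low": min(values), "mean": mean(values)}


def share_at_or_above(scores: Dict[str, float], max_points: float,
                      fraction: float = 0.70) -> float:
    """Share of candidates scoring at least `fraction` of the available points."""
    cutoff = fraction * max_points
    return sum(1 for s in scores.values() if s >= cutoff) / len(scores)


def regrade_band(scores: Dict[str, float], pass_mark: float,
                 width: float = 3.0) -> List[str]:
    """Candidates within +/- `width` points of the initial pass mark."""
    return sorted(c for c, s in scores.items() if abs(s - pass_mark) <= width)


# Hypothetical initial scores on a 100-point exam.
scores = {"c01": 82, "c02": 71, "c03": 68, "c04": 66, "c05": 55,
          "c06": 73, "c07": 40, "c08": 90, "c09": 69, "c10": 61}

stats = key_measures(scores)
share = share_at_or_above(scores, max_points=100)
band = regrade_band(scores, pass_mark=70)
print(stats, share, band)
```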


Prepare Recommendation

Re-collect scores if any have changed and review all relevant statistics again.

Review borderline candidates (tighter threshold now) and re-grade/review for consistency with answer key.

Repeat the process until only the 5 exams above and the 5 exams below the recommended pass mark are under review.

Justify Recommended Pass Score
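The final narrowing step, looking only at the exams closest to the recommended pass mark on each side, could be sketched as follows. The scores are hypothetical, and treating a score exactly equal to the pass mark as "above" is an assumption made for this example. The slide uses 5 exams on each side; the usage line passes k=2 only to keep the sample data short.

```python
from typing import Dict, List, Tuple


def nearest_to_pass_mark(
    scores: Dict[str, float], pass_mark: float, k: int = 5
) -> Tuple[List[str], List[str]]:
    """Return the k candidates just below and the k candidates at or just
    above the pass mark, each list ordered from closest to farthest."""
    below = sorted(
        (c for c in scores if scores[c] < pass_mark),
        key=lambda c: scores[c],
        reverse=True,
    )
    above = sorted(
        (c for c in scores if scores[c] >= pass_mark),
        key=lambda c: scores[c],
    )
    return below[:k], above[:k]


# Hypothetical final scores keyed by candidate ID.
scores = {"a": 60, "b": 64, "c": 66, "d": 69,
          "e": 70, "f": 72, "g": 75, "h": 88}

below, above = nearest_to_pass_mark(scores, pass_mark=70, k=2)
print(below, above)
```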


Approval Process

Part Chair

General Officer (Spring / Fall)

Exam Committee Chair (Arlie Proctor)

VP-Admissions (Jim Christie)
