General Iteration Algorithms


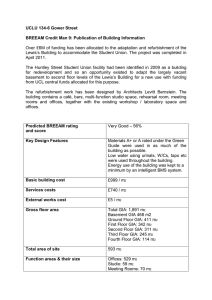

General Iteration Algorithms

by Luyang Fu, Ph.D., State Auto Insurance Company
Cheng-sheng Peter Wu, FCAS, ASA, MAAA, Deloitte Consulting LLP

2007 CAS Annual Meeting, Chicago, Nov. 11-14, 2007

Agenda

- History and Overview of Minimum Bias Method
- General Iteration Algorithms (GIA)
- Demonstration of a GIA Tool
- GIA Statistics
- Conclusions
- Q&A

History on Minimum Bias

- A technique with a long history for actuaries:
  - Bailey and Simon (1960)
  - Bailey (1963)
  - Brown (1988)
  - Feldblum and Brosius (2002)
- A topic in CAS Exam 9
- Concepts:
  - Derive multivariate class plan parameters by minimizing a specified “bias” function
  - Use an “iterative” method to find the parameters

History on Minimum Bias

- Various bias functions have been proposed in the past for minimization
- Examples of multiplicative bias functions proposed in the past:

Balanced bias:

$$\sum_{i,j} w_{i,j}\,(r_{i,j} - x_i y_j)$$

Squared bias:

$$\sum_{i,j} w_{i,j}\,(r_{i,j} - x_i y_j)^2$$

Chi-squared bias:

$$\sum_{i,j} \frac{\left[w_{i,j}\,(r_{i,j} - x_i y_j)\right]^2}{w_{i,j}\, x_i y_j}$$

History on Minimum Bias

Then, how to determine the class plan parameters by minimizing the bias function?
One simple way is the commonly used “iterative” methodology for root finding:

- Start with a random guess for the values of $x_i$ and $y_j$
- Calculate the next set of values for $x_i$ and $y_j$ using the root-finding formula for the bias function
- Repeat the steps until the values converge

Easy to understand and can be programmed in almost any tool.

History on Minimum Bias

For example, using the balanced bias function for the multiplicative model:

$$\sum_{i,j} w_{i,j}\,(r_{i,j} - x_i y_j) = 0$$

Then,

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}\, r_{i,j}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}}, \qquad \hat{y}_{j,t} = \frac{\sum_i w_{i,j}\, r_{i,j}}{\sum_i w_{i,j}\, \hat{x}_{i,t-1}}$$

History on Minimum Bias

Past minimum bias models with the iterative method:

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}\, r_{i,j}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}}$$

$$\hat{x}_{i,t} = \left(\frac{\sum_j w_{i,j}\, r_{i,j}^2\, \hat{y}_{j,t-1}^{-1}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}}\right)^{1/2}$$

$$\hat{x}_{i,t} = \frac{1}{n}\sum_j \frac{r_{i,j}}{\hat{y}_{j,t-1}}$$

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}^2\, r_{i,j}\, \hat{y}_{j,t-1}}{\sum_j w_{i,j}^2\, \hat{y}_{j,t-1}^2}$$

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}\, r_{i,j}\, \hat{y}_{j,t-1}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}^2}$$

Iteration Algorithm for Minimum Bias

- Theoretically, $\sum_{i,j} w_{i,j}\,(r_{i,j} - x_i y_j)$ is not “bias”.
- Bias is defined as the difference between an estimator and the true value. For example, $x_i - \hat{x}_i$ is bias. If $x_i - \hat{x}_i = 0$, then $\hat{x}_i$ is an unbiased estimator of $x_i$.
- To be consistent with statistical terminology, we name our approach the General Iteration Algorithm.

Issues with the Iterative Method

Two questions regarding the “iterative” method:

- How do we know that it will converge?
- How fast and how efficiently will it converge?

Answers:

- Numerical analysis or optimization textbooks
- Mildenhall (1999)
- Efficiency is a less important issue because of modern computing power

Other Issues with Minimum Bias

- What is the statistical meaning behind these models?
- More models to try?
- Which models to choose?
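The balanced-bias iteration for the multiplicative model described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' tool: the function name `balanced_bias_fit`, the starting values of 1, the stopping tolerance, and the small data set are all hypothetical. The sketch also updates $y$ with the $x$ values just computed in the same pass rather than with the previous step's values, which is a choice made here so that both factors settle on one common scale.

```python
def balanced_bias_fit(r, w, tol=1e-10, max_iter=1000):
    """Fit r[i][j] ~ x[i] * y[j] by iterating the balanced-bias formulas.

    r: observed values (m x n nested list); w: exposures of the same shape.
    Starting values, tolerance and iteration cap are assumptions of this sketch.
    """
    m, n = len(r), len(r[0])
    x = [1.0] * m
    y = [1.0] * n
    for _ in range(max_iter):
        # x_i = sum_j w_ij r_ij / sum_j w_ij y_j
        x_new = [
            sum(w[i][j] * r[i][j] for j in range(n))
            / sum(w[i][j] * y[j] for j in range(n))
            for i in range(m)
        ]
        # y_j = sum_i w_ij r_ij / sum_i w_ij x_i, using the x just computed
        y_new = [
            sum(w[i][j] * r[i][j] for i in range(m))
            / sum(w[i][j] * x_new[i] for i in range(m))
            for j in range(n)
        ]
        change = max(
            max(abs(a - b) for a, b in zip(x_new, x)),
            max(abs(a - b) for a, b in zip(y_new, y)),
        )
        x, y = x_new, y_new
        if change < tol:
            break
    return x, y


# Hypothetical 3 x 2 example in which r is exactly multiplicative.
true_x = [1.0, 1.5, 2.0]
true_y = [100.0, 120.0]
r = [[xi * yj for yj in true_y] for xi in true_x]
w = [[10.0, 20.0], [30.0, 5.0], [8.0, 12.0]]
x, y = balanced_bias_fit(r, w)
fitted = [[xi * yj for yj in y] for xi in x]
```

Because the multiplicative model is only determined up to a common scale (multiplying every $x_i$ by a constant and dividing every $y_j$ by it gives the same fit), the sketch compares fitted products $x_i y_j$ with $r_{i,j}$ rather than comparing the factors themselves.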
Summary on Historical Minimum Bias

- A numerical method, not a statistical approach
- Best answers when bias functions are minimized
- Use of an “iterative” methodology for root finding in determining parameters
- Easy to understand and can be programmed in many tools

Connection Between Minimum Bias and Statistical Models

- Brown (1988)
  - Shows that some minimum bias functions can be derived by maximizing the likelihood functions of corresponding distributions
  - Proposes several more minimum bias models
- Mildenhall (1999)
  - Proves that minimum bias models with linear bias functions are essentially the same as those from Generalized Linear Models (GLM)
  - Proposes two more minimum bias models

Connection Between Minimum Bias and Statistical Models

Past minimum bias models and their corresponding statistical models:

Poisson:

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}\, r_{i,j}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}}$$

Chi-squared (no distribution label is given for this formula):

$$\hat{x}_{i,t} = \left(\frac{\sum_j w_{i,j}\, r_{i,j}^2\, \hat{y}_{j,t-1}^{-1}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}}\right)^{1/2}$$

Exponential:

$$\hat{x}_{i,t} = \frac{1}{n}\sum_j \frac{r_{i,j}}{\hat{y}_{j,t-1}}$$

Normal:

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}^2\, r_{i,j}\, \hat{y}_{j,t-1}}{\sum_j w_{i,j}^2\, \hat{y}_{j,t-1}^2}$$

Least squares:

$$\hat{x}_{i,t} = \frac{\sum_j w_{i,j}\, r_{i,j}\, \hat{y}_{j,t-1}}{\sum_j w_{i,j}\, \hat{y}_{j,t-1}^2}$$

Statistical Models - GLM

Advantages include:

- Commercial software and built-in procedures are available
- Characteristics are well determined, such as confidence level
- Computational efficiency compared to the iterative procedure

Statistical Models - GLM

Issues include:

- Requires more advanced knowledge of statistics for GLM models
- Lack of flexibility:
  - Reliance on commercial software / built-in procedures.
  - Cannot do the mixed model.
  - Assumes a pre-determined distribution from the exponential family.
  - Limited distribution selections in popular statistical software.
  - Difficult to program from scratch.

Motivations for GIA

- Can we unify all the past minimum bias models?
- Can we completely represent the wide range of GLM and statistical models using minimum bias models?
- Can we expand the model selection options beyond all the currently used GLM and minimum bias models?
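- Can we fit mixed models or constraint models?

General Iteration Algorithm

Starting with the basic multiplicative formula

$$r_{i,j} = x_i\, y_j$$

The alternative estimates of $x$ and $y$:

$$\hat{x}_{i,j} = r_{i,j} / y_j, \quad j = 1, 2, \ldots, n$$

$$\hat{y}_{j,i} = r_{i,j} / x_i, \quad i = 1, 2, \ldots, m$$

The next question is: how to roll up $\hat{x}_{i,j}$ to $\hat{x}_i$, and $\hat{y}_{j,i}$ to $\hat{y}_j$?

Possible Weighting Functions

The first and obvious option is the straight average:

$$\hat{x}_i = \frac{1}{n}\sum_j \hat{x}_{i,j} = \frac{1}{n}\sum_j \frac{r_{i,j}}{\hat{y}_j}, \qquad \hat{y}_j = \frac{1}{m}\sum_i \hat{y}_{j,i} = \frac{1}{m}\sum_i \frac{r_{i,j}}{\hat{x}_i}$$

Using the straight average results in the Exponential model by Brown (1988).

Possible Weighting Functions

Another option is to use the relativity-adjusted exposure as the weight function:

$$\hat{x}_i = \sum_j \frac{w_{i,j}\,\hat{y}_j}{\sum_j w_{i,j}\,\hat{y}_j}\,\hat{x}_{i,j} = \sum_j \frac{w_{i,j}\,\hat{y}_j}{\sum_j w_{i,j}\,\hat{y}_j}\cdot\frac{r_{i,j}}{\hat{y}_j} = \frac{\sum_j w_{i,j}\, r_{i,j}}{\sum_j w_{i,j}\,\hat{y}_j}$$

$$\hat{y}_j = \sum_i \frac{w_{i,j}\,\hat{x}_i}{\sum_i w_{i,j}\,\hat{x}_i}\,\hat{y}_{j,i} = \sum_i \frac{w_{i,j}\,\hat{x}_i}{\sum_i w_{i,j}\,\hat{x}_i}\cdot\frac{r_{i,j}}{\hat{x}_i} = \frac{\sum_i w_{i,j}\, r_{i,j}}{\sum_i w_{i,j}\,\hat{x}_i}$$

This is the Bailey (1963) model, or the Poisson model by Brown (1988).

Possible Weighting Functions

Another option: using the square of the relativity-adjusted exposure:

$$\hat{x}_i = \sum_j \frac{w_{i,j}^2\,\hat{y}_j^2}{\sum_j w_{i,j}^2\,\hat{y}_j^2}\,\hat{x}_{i,j} = \frac{\sum_j w_{i,j}^2\, r_{i,j}\,\hat{y}_j}{\sum_j w_{i,j}^2\,\hat{y}_j^2}$$

$$\hat{y}_j = \sum_i \frac{w_{i,j}^2\,\hat{x}_i^2}{\sum_i w_{i,j}^2\,\hat{x}_i^2}\,\hat{y}_{j,i} = \frac{\sum_i w_{i,j}^2\, r_{i,j}\,\hat{x}_i}{\sum_i w_{i,j}^2\,\hat{x}_i^2}$$

This is the normal model by Brown (1988).

Possible Weighting Functions

Another option: using the relativity-square-adjusted exposure:

$$\hat{x}_i = \sum_j \frac{w_{i,j}\,\hat{y}_j^2}{\sum_j w_{i,j}\,\hat{y}_j^2}\,\hat{x}_{i,j} = \frac{\sum_j w_{i,j}\, r_{i,j}\,\hat{y}_j}{\sum_j w_{i,j}\,\hat{y}_j^2}$$

$$\hat{y}_j = \sum_i \frac{w_{i,j}\,\hat{x}_i^2}{\sum_i w_{i,j}\,\hat{x}_i^2}\,\hat{y}_{j,i} = \frac{\sum_i w_{i,j}\, r_{i,j}\,\hat{x}_i}{\sum_i w_{i,j}\,\hat{x}_i^2}$$

This is the least-squares model by Brown (1988).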
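The four weighting options above differ only in the weights applied to the alternative estimates $r_{i,j} / \hat{y}_j$. The following Python sketch makes that explicit for a single class $i$; the function name `roll_up_x`, the scheme labels, and the numbers are hypothetical and chosen only for illustration.

```python
def roll_up_x(r_row, w_row, y, scheme):
    """Roll up the alternative estimates r_ij / y_j of one class i into x_i.

    scheme selects the weight given to each estimate (labels are hypothetical):
      "straight"  -> 1              (Exponential model)
      "w*y"       -> w_ij * y_j     (Bailey 1963 / Poisson model)
      "(w*y)^2"   -> (w_ij * y_j)^2 (normal model)
      "w*y^2"     -> w_ij * y_j^2   (least-squares model)
    """
    estimates = [rij / yj for rij, yj in zip(r_row, y)]
    if scheme == "straight":
        weights = [1.0 for _ in y]
    elif scheme == "w*y":
        weights = [wij * yj for wij, yj in zip(w_row, y)]
    elif scheme == "(w*y)^2":
        weights = [(wij * yj) ** 2 for wij, yj in zip(w_row, y)]
    elif scheme == "w*y^2":
        weights = [wij * yj ** 2 for wij, yj in zip(w_row, y)]
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    # Weighted average of the alternative estimates
    return sum(wt * est for wt, est in zip(weights, estimates)) / sum(weights)


# Hypothetical data for one class i across three levels j.
r_row = [120.0, 150.0, 90.0]
w_row = [10.0, 40.0, 25.0]
y = [1.0, 1.2, 0.8]
results = {s: roll_up_x(r_row, w_row, y, s)
           for s in ("straight", "w*y", "(w*y)^2", "w*y^2")}
```

Each entry of `results` should agree with the corresponding closed-form expression on the right-hand side of the formulas above, since the weighted average and the closed form are algebraically the same quantity.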
General Iteration Algorithms

- So, the key for generalization is to apply different “weighting functions” to roll up $\hat{x}_{i,j}$ to $\hat{x}_i$ and $\hat{y}_{j,i}$ to $\hat{y}_j$
- Propose a general weighting function of two factors, exposure and relativity: $W^p X^q$ and $W^p Y^q$
- Almost all minimum bias models published to date are special cases of GIA(p,q)
- Also, there are more modeling options to choose from, since there is no limitation, in theory, on the (p,q) values to try in fitting data: comprehensive and flexible

2-parameter GIA

The 2-parameter GIA with an exposure- and relativity-adjusted weighting function is:

$$\hat{x}_i = \sum_j \frac{w_{i,j}^p\,\hat{y}_j^q}{\sum_j w_{i,j}^p\,\hat{y}_j^q}\,\hat{x}_{i,j} = \frac{\sum_j w_{i,j}^p\, r_{i,j}\,\hat{y}_j^{\,q-1}}{\sum_j w_{i,j}^p\,\hat{y}_j^q}$$

$$\hat{y}_j = \sum_i \frac{w_{i,j}^p\,\hat{x}_i^q}{\sum_i w_{i,j}^p\,\hat{x}_i^q}\,\hat{y}_{j,i} = \frac{\sum_i w_{i,j}^p\, r_{i,j}\,\hat{x}_i^{\,q-1}}{\sum_i w_{i,j}^p\,\hat{x}_i^q}$$

2-parameter GIA vs. GLM

| p | q | GLM |
|---|---|---|
| 1 | -1 | Inverse Gaussian |
| 1 | 0 | Gamma |
| 1 | 1 | Poisson |
| 1 | 2 | Normal |

2-parameter GIA and GLM

- GIA with p=1 is the same as a GLM with the variance function $V(\mu) = \mu^{2-q}$
- Additional special models:
  - 0<q<1: the distribution is Tweedie, for pure premium models
  - 1<q<2: not exponential family
  - -1<q<0: the distribution is between gamma and inverse Gaussian
- After years of technical development in GLM and minimum bias, at the end of the day, all of these models are connected through the game of “weighted average”.
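The 2-parameter GIA update above can be turned into a short fixed-point loop. The Python sketch below is an illustration under assumptions rather than the authors' implementation: `gia_fit`, its defaults, the starting values of 1, the stopping rule, and the example data are hypothetical, and $y$ is updated with the $x$ values just computed in the same pass.

```python
def gia_fit(r, w, p=1.0, q=1.0, tol=1e-10, max_iter=1000):
    """Iterate the GIA(p, q) formulas for the model r[i][j] ~ x[i] * y[j].

    Assumes strictly positive r and w. Starting values, tolerance and the
    iteration cap are assumptions of this sketch.
    """
    m, n = len(r), len(r[0])
    wp = [[w[i][j] ** p for j in range(n)] for i in range(m)]
    x = [1.0] * m
    y = [1.0] * n
    for _ in range(max_iter):
        # x_i = sum_j w^p r y^(q-1) / sum_j w^p y^q
        x_new = [
            sum(wp[i][j] * r[i][j] * y[j] ** (q - 1) for j in range(n))
            / sum(wp[i][j] * y[j] ** q for j in range(n))
            for i in range(m)
        ]
        # y_j = sum_i w^p r x^(q-1) / sum_i w^p x^q, using the x just computed
        y_new = [
            sum(wp[i][j] * r[i][j] * x_new[i] ** (q - 1) for i in range(m))
            / sum(wp[i][j] * x_new[i] ** q for i in range(m))
            for j in range(n)
        ]
        change = max(
            max(abs(a - b) for a, b in zip(x_new, x)),
            max(abs(a - b) for a, b in zip(y_new, y)),
        )
        x, y = x_new, y_new
        if change < tol:
            break
    return x, y


# Hypothetical exactly multiplicative data, fitted with two (p, q) choices.
r = [[xi * yj for yj in (100.0, 120.0)] for xi in (1.0, 1.5, 2.0)]
w = [[10.0, 20.0], [30.0, 5.0], [8.0, 12.0]]
x_pois, y_pois = gia_fit(r, w, p=1, q=1)    # Poisson row of the table
x_gamma, y_gamma = gia_fit(r, w, p=1, q=0)  # Gamma row of the table
```

On data that is exactly multiplicative, both choices should reproduce $r_{i,j}$ through the products $x_i y_j$; on real data, different (p,q) values generally give different relativities, which is what makes them distinct modeling options.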
3-parameter GIA

- One model published to date is not covered by the 2-parameter GIA: the Chi-squared model by Bailey and Simon (1960)
- Further generalization uses a concept similar to the link function in GLM, $f(x)$ and $f(y)$:
  - Estimate $f(x)$ and $f(y)$ through the iterative method
  - Calculate $x$ and $y$ by inverting $f(x)$ and $f(y)$

3-parameter GIA

$$f(\hat{x}_i) = \sum_j \frac{w_{i,j}^p\,\hat{y}_j^q}{\sum_j w_{i,j}^p\,\hat{y}_j^q}\, f(\hat{x}_{i,j}) = \sum_j \frac{w_{i,j}^p\,\hat{y}_j^q}{\sum_j w_{i,j}^p\,\hat{y}_j^q}\, f\!\left(\frac{r_{i,j}}{\hat{y}_j}\right)$$

$$f(\hat{y}_j) = \sum_i \frac{w_{i,j}^p\,\hat{x}_i^q}{\sum_i w_{i,j}^p\,\hat{x}_i^q}\, f(\hat{y}_{j,i}) = \sum_i \frac{w_{i,j}^p\,\hat{x}_i^q}{\sum_i w_{i,j}^p\,\hat{x}_i^q}\, f\!\left(\frac{r_{i,j}}{\hat{x}_i}\right)$$

3-parameter GIA

Propose the 3-parameter GIA by using the power link function $f(x) = x^k$:

$$\hat{x}_i = \left(\frac{\sum_j w_{i,j}^p\, r_{i,j}^k\,\hat{y}_j^{\,q-k}}{\sum_j w_{i,j}^p\,\hat{y}_j^q}\right)^{1/k}, \qquad \hat{y}_j = \left(\frac{\sum_i w_{i,j}^p\, r_{i,j}^k\,\hat{x}_i^{\,q-k}}{\sum_i w_{i,j}^p\,\hat{x}_i^q}\right)^{1/k}$$

3-parameter GIA

When k=2, p=1 and q=1:

$$\hat{x}_i = \left(\frac{\sum_j w_{i,j}\, r_{i,j}^2\,\hat{y}_j^{-1}}{\sum_j w_{i,j}\,\hat{y}_j}\right)^{1/2}, \qquad \hat{y}_j = \left(\frac{\sum_i w_{i,j}\, r_{i,j}^2\,\hat{x}_i^{-1}}{\sum_i w_{i,j}\,\hat{x}_i}\right)^{1/2}$$

This is the Chi-squared model by Bailey and Simon (1960).

Additive GIA

$$\hat{x}_i = \frac{\sum_j w_{i,j}^p\,(r_{i,j} - y_j)}{\sum_j w_{i,j}^p}, \qquad \hat{y}_j = \frac{\sum_i w_{i,j}^p\,(r_{i,j} - x_i)}{\sum_i w_{i,j}^p}$$

Mixed GIA

For commonly used personal lines rating structures, the formula is typically a mixed multiplicative and additive model:

Price = Base * (X + Y) * Z

$$\hat{x}_{i,j,h} = \frac{r_{i,j,h}}{z_h} - y_j, \qquad \hat{y}_{i,j,h} = \frac{r_{i,j,h}}{z_h} - x_i, \qquad \hat{z}_{i,j,h} = \frac{r_{i,j,h}}{x_i + y_j}$$

Constraint GIA

In the real world, the range of values for most pricing factors is capped because of market and regulatory constraints. For example:

$$\hat{x}_1 = \left(\frac{\sum_j w_{1,j}^p\, r_{1,j}^k\, y_j^{\,q-k}}{\sum_j w_{1,j}^p\, y_j^q}\right)^{1/k}$$

$$\hat{x}_2 = \max\!\left(0.75\,\hat{x}_1,\ \min\!\left(0.95\,\hat{x}_1,\ \left(\frac{\sum_j w_{2,j}^p\, r_{2,j}^k\, y_j^{\,q-k}}{\sum_j w_{2,j}^p\, y_j^q}\right)^{1/k}\right)\right)$$

Numerical Methodology for GIA

For all algorithms:

- Use the mean of the response variable as the base
- Starting points: 1 for multiplicative factors; 0 for additive factors
- Use the latest relativities in the iterations
- All the reported GIAs converge within 8 steps for our test examples

For mixed models:

- In each step, adjust multiplicative factors from one rating variable proportionally so that its weighted average
is one.
- For the last multiplicative variable, adjust its factors so that the weighted average of the product of all multiplicative variables is one.

Demonstration of a GIA Tool

- Written in VB.NET and runs on Windows PCs
- Approximately 200 hours for tool development
- Efficiency statistics:

Efficiency for different test cases

| # of Records | # of Variables | Excel Data: Loading Time | Excel Data: Model Time | CSV Data: Loading Time | CSV Data: Model Time |
|---|---|---|---|---|---|
| 508 | 3 | 0.7 sec | 0.5 sec | 0.1 sec | 0.5 sec |
| 25,904 | 6 | 2.8 sec | 6 sec | 1 sec | 5 sec |
| 48,517 | 13 | 9 sec | 50 sec | 1.5 sec | 50 sec |

642,128 records, 49 variables: N/A; 40 sec (the source does not show which columns these two values belong to).

GIA Statistics: Bias Function

GLM bias function, Mildenhall (1999):

$$Z'\,W\,(r - \mu) = 0$$

- $Z$: the design matrix
- $h(x)$: the inverse of the link function
- $v(x)$: the variance function of the GLM
- $W$: the diagonal matrix of weights, with $k$th diagonal element $w_k\, h'(z_k) / V(\mu_k)$

GIA Statistics: Bias Function

For a multiplicative GLM:

- The link function is log, so $h(x) = \exp(x)$
- Variance function $v(x) = x^c$
- The bias function of the multiplicative GLM:

$$\sum_{j=1}^{n} w_{i,j}\,\mu_{i,j}^{\,1-c}\,(r_{i,j} - \mu_{i,j}) = 0 \quad \text{for } i = 1 \text{ to } m$$

$$\sum_{i=1}^{m} w_{i,j}\,\mu_{i,j}^{\,1-c}\,(r_{i,j} - \mu_{i,j}) = 0 \quad \text{for } j = 1 \text{ to } n$$

GIA Statistics

The bias function of GIA:

$$\sum_j w_{i,j}^p\,\mu_{i,j}^{\,q-k}\,(r_{i,j}^k - \mu_{i,j}^k) = 0 \quad \text{for } i = 1 \text{ to } m$$

$$\sum_i w_{i,j}^p\,\mu_{i,j}^{\,q-k}\,(r_{i,j}^k - \mu_{i,j}^k) = 0 \quad \text{for } j = 1 \text{ to } n$$

Substituting $\mu_{i,j} = x_i y_j$ into the first equation and dividing out the common factor $x_i^{\,q-k}$:

$$\sum_j w_{i,j}^p\,\mu_{i,j}^{\,q-k}\,(r_{i,j}^k - \mu_{i,j}^k) = 0$$

$$\sum_j w_{i,j}^p\, y_j^{\,q-k}\,(r_{i,j}^k - x_i^k y_j^k) = 0$$

$$\sum_j w_{i,j}^p\, y_j^{\,q-k}\, r_{i,j}^k - x_i^k \sum_j w_{i,j}^p\, y_j^{\,q} = 0$$

$$\hat{x}_i = \left(\frac{\sum_j w_{i,j}^p\, r_{i,j}^k\, y_j^{\,q-k}}{\sum_j w_{i,j}^p\, y_j^{\,q}}\right)^{1/k}$$

GIA Statistics

- The 3-parameter GIA is equivalent to a GLM assuming the response variable $r^k$ follows a distribution with variance function $V(\mu) = \mu^{2-q/k}$
- GIA theory reveals that the underlying assumption of the Chi-squared model is that $r^2$ follows a Tweedie distribution with variance function $V(\mu) = \mu^{1.5}$.

GIA Statistics: Residual Diagnosis

Scaled Pearson residual of GIA:

$$e_{i,j} = \frac{r_{i,j}^k - \hat{r}_{i,j}^k}{\sqrt{V(\mu)}} = \frac{r_{i,j}^k - \hat{x}_i^k \hat{y}_j^k}{\sqrt{(\hat{x}_i^k \hat{y}_j^k)^{2-q/k}}} = \frac{r_{i,j}^k - \hat{x}_i^k \hat{y}_j^k}{\sqrt{\hat{x}_i^{\,2k-q}\,\hat{y}_j^{\,2k-q}}}$$

$$\mathrm{Var}(e_{i,j}) = \mathrm{Var}\!\left(\frac{r_{i,j}^k - \hat{x}_i^k \hat{y}_j^k}{\sqrt{(\hat{x}_i^k \hat{y}_j^k)^{2-q/k}}}\right) = \frac{\mathrm{Var}(r_{i,j}^k)}{(\hat{x}_i^k \hat{y}_j^k)^{2-q/k}} = 1$$

GIA Statistics: Residual Diagnosis

[Figure: Scatter plot of scaled Pearson residuals against observations for GIA with k = 1, p = 1, and q = -0.5]

GIA Statistics: Residual Diagnosis

[Figure: Scatter plot of scaled Pearson residuals by Driver Age (17-20, 25-29, 35-39, 50-59, 60+) for GIA with k = 1, p = 1, and q = -0.5]

GIA Statistics: Residual Diagnosis

[Figure: Scatter plot of scaled Pearson residuals by Vehicle Use (Business, DriveLong, DriveShort, Pleasure) for GIA with k = 1, p = 1, and q = -0.5]

GIA Statistics: Residual Diagnosis

[Figure: Q-Q plot of scaled Pearson residuals against quantiles of the standard normal for GIA with k = 1, p = 1, and q = -0.5]

GIA Statistics: Calculation Efficiency

- GLM: iteratively reweighted least-squares algorithm

$$\beta = (Z'WZ)^{-1}\, Z'W r$$

- In each iteration, GLM coefficients are calculated using the weight matrix from the last iteration.
- GIA: fixed-point iteration
- GLM is faster, but GIA is not slow.

Conclusions

- 2- and 3-parameter GIA can completely represent GLM and minimum bias models
- Can fit mixed models and models with constraints
- Provides additional model options for data fitting
- Easy to understand and does not require advanced statistical knowledge
- Can be programmed in many different tools
- Calculation efficiency is not an issue because of modern computing power.

Q&A