QUANTITATIVE ASSESSMENT METHODS: A USER-FRIENDLY PRIMER


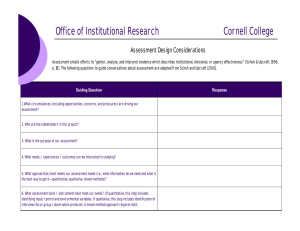

R. Michael Haynes, PhD
Executive Director
Office of Institutional Research and Effectiveness
Tarleton State University

What is assessment?
“Assessment is any effort to gather, analyze, and interpret evidence which describes institutional, divisional, or agency effectiveness…” (Upcraft & Schuh, 1996, p. 18).

Today’s learning outcomes
• Understanding the difference between quantitative and qualitative data collection methods
• Types of quantitative assessment
• Determining what you are attempting to measure
• Differences between commercial and homegrown instruments
• Developing an appropriate survey instrument

But before we start… Why is assessment important?
• Strategic planning
• Accreditation (NSSE/CIRP and SACS!)
• To demonstrate program/departmental effectiveness (resource management, customer service, efficiencies, best practices…)
• To identify “gaps” in these same areas!
• To identify the need for additional resources (financial, human, equipment, etc.)
AND POSSIBLY THE MOST IMPORTANT REASON…
• To JUSTIFY the need for additional resources!
(Upcraft & Schuh, 2001)

Qualitative vs. Quantitative
• Qualitative assessment: “…detailed description of the situations, events, people, interactions, and observed behaviors…” (Upcraft & Schuh, 2001, p. 27).
• Quantitative assessment: “…data in a form that can be represented by numbers, so that quantities and magnitudes can be measured, assessed, and interpreted with the use of mathematical or statistical manipulation…” (Alreck & Settle, 2004, p. 446).

Types of quantitative assessment in higher education
• Satisfaction (enrollment processes, orientation, student programming, parent services)
• Needs (new health center, football field, parking!)
• Tracking/Usage (swipe cards, recreational sport center, career centers)
• Campus Environment (quality of campus life, how inviting is the campus community)
• Outcomes (indirect learning outcomes, impact on constructs such as efficacy, maturation, etc.)
• Peer Comparison (comparison of services, IPEDS data)
• National Standards (Council for the Advancement of Standards [CAS] in Higher Education)
(Upcraft & Schuh, 2001)

What are you attempting to measure?
• Very few things can be directly measured
• Student demographics… gender, classification, ethnicity… good for classification/comparing groups
• SAT/ACT/GPA… have absolute values, but really, how good are they at measuring “deeper” traits?
• How do you measure an indirect attribute/trait?
• Construct: “…a concept that is inferred through observed phenomenon.” (Gall, Gall, & Borg, 2003).

What are you attempting to measure?
• For example, “health”… can’t be directly measured, but things associated with “health” can be measured
  • Weight to height ratio
  • Blood pressure
  • Cholesterol
• “Satisfaction” is too general to measure… but what can be measured?
  • Job satisfaction
  • Income
  • Marital status

What are you attempting to measure?
OK, let’s do a construct together… how can we measure “Student Satisfaction”? What various activities/services could be associated with “satisfaction”? Aspects of…
• Admissions process
• Financial aid
• Orientation
• Billing services
• Move-in process
• Class availability (OIRE is composing a survey as we speak!)
• How about instruction evaluations?
• Campus environment… still a bit broad, huh?

Identifying an appropriate assessment instrument
Commercial instruments (NSSE, CIRP, Noel Levitz, et al.)
• Pros
  • Already been pilot tested
  • Reliability/validity already checked and generally ensured by experts
  • Established as good instruments
  • Outside entity may assist in the administration process; purchaser may not have to participate in protocol whatsoever!
  • Vendor will most likely provide delivered reports
• Cons
  • Cost
  • May not ask questions specific to your area of interest
  • May require collaboration with outside entity in the administration process!!
  • Allows outside entities access to information about your target population/sample!

Identifying an appropriate assessment instrument
Homegrown instruments
• Pros
  • Can be narrowly tailored to meet your specific needs
  • Can be relatively inexpensive to construct/administer
  • Privacy of your dataset
  • Can specify reports to focus on key findings
• Cons
  • Identifying what you are trying to measure
  • Validity… making sure your items are measuring what you want to measure!
  • Reliability… do your questions have internal consistency?
  • Conducting analysis and interpreting findings via reports

What to consider when building your instrument
Questions/Items
• Items/questions should have 3 key attributes…
  • Focus on a construct
  • Clarity to ensure everyone interprets the question in the same manner! (reliability!!)
  • Brevity… shorter questions are less subject to error/bias
• Use the vocabulary of your sample
• Grammar… simple questions (subject and predicate) are the most effective!
• Avoid the following types of questions…
  • Double-barreled: 2 questions in 1 (question about action, then question about reason for action)
  • Leading: pulls the respondent to answer toward a bias… “Don’t you think driving too fast results in vehicular death?”
  • Loaded: less obvious than leading questions; often appeals to an emotion… “Do you believe the death penalty is a viable method of saving innocent lives?”
(Alreck & Settle, 2004)

What to consider when building your instrument
Response options
• Scale data types
  • Nominal (ethnicity, gender, hair/eye color, group membership)
  • Ordinal (indicates order but not magnitude… such as class rank)
  • Interval (equal differences between observation points… Fahrenheit/Celsius)
  • Ratio (same as interval, but there is an absolute “zero” value!)
• Multiple/single response items
  • Multiple: “check all that apply”
  • Single: “choose the one most often…”
• Likert scale
  • Arguably the most popular in quantitative assessment
  • Measures degree of agreement/disagreement, satisfaction/dissatisfaction, etc.
  • 1=Strongly Agree, 2=Agree, 3=Neutral, 4=Disagree, 5=Strongly Disagree
  • Can be used to obtain a numeric/summated value
• Verbal frequency scale
  • Similar to Likert, but contains words that measure “how often”
  • 1=Always, 2=Often, 3=Sometimes, 4=Rarely, 5=Never
• Semantic differential scale
  • Uses a series of adjectives to describe the object of interest
  • For example, when rating the quality of service at a restaurant, rate on a scale of 1-6 with 1=Terrible and 6=Excellent
(Alreck & Settle, 2004)

Pilot testing your survey
• Let someone else with expertise in assessment review your instrument
• Let someone else with expertise in the field being measured review your instrument!
• If possible, ask 10-15 friends/colleagues to take your survey and provide feedback! Can help you identify…
  • Confusing questions
  • Leading questions
  • Loaded questions
  • Questions you SHOULD have asked but didn’t!

Was this workshop helpful?
• Please take our follow-up survey… link will be delivered via email…
• Things we want to know…
  • Were the learning outcomes addressed?
  • Were the learning outcomes accomplished?
  • Was the workshop helpful for use in your work?
  • What other topics might be of interest?

References
• Alreck, P.L. & Settle, R.B. (2004). The survey research handbook (3rd ed.). Boston: McGraw-Hill Irwin.
• Gall, M.D., Gall, J.P., & Borg, W.R. (2003). Educational research: An introduction (7th ed.). Boston: Allyn & Bacon.
• Schuh, J.H. & Upcraft, M.L. (2001). Assessment practice in student affairs: An applications manual. San Francisco: Jossey-Bass.
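
Worked example: summated Likert scores and internal consistency
This is a minimal sketch, not part of the original workshop, of two ideas from the slides: the Likert scale bullets say responses "can be used to obtain a numeric/summated value," and the homegrown-instrument slide asks whether your questions have internal consistency. The Python below sums each respondent's item scores and then computes Cronbach's alpha, a common internal-consistency statistic that the slides do not name, so treat it as an assumed choice. The response data and function names are hypothetical, and all items are assumed to be worded in the same direction (no reverse coding).

```python
from statistics import variance

# Hypothetical data: one row per respondent, one column per Likert item,
# coded as on the slide (1=Strongly Agree ... 5=Strongly Disagree).
responses = [
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 4, 5, 5],
]


def summated_scores(rows):
    """Return each respondent's summated (total) score across all items."""
    return [sum(row) for row in rows]


def cronbach_alpha(rows):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(rows[0])                      # number of items
    items = list(zip(*rows))              # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)  # sample variances
    total_var = variance(summated_scores(rows))
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


scores = summated_scores(responses)
alpha = cronbach_alpha(responses)
print(scores)           # [6, 9, 17, 11, 19]
print(round(alpha, 3))
```

An alpha closer to 1 suggests the items vary together. What counts as acceptable depends on the instrument and its purpose, so treat any cutoff as a judgment call, and remember that a high alpha says nothing about validity.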