MPEG-4 & Wireless Multimedia Streaming CIS 642 Dimosthenis Anthomelidis


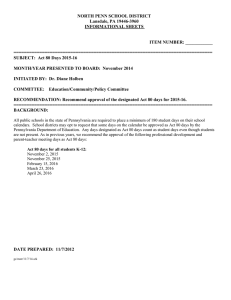

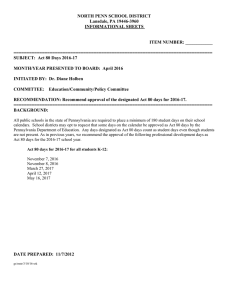

University of Pennsylvania
MPEG-4 & Wireless Multimedia Streaming
CIS 642
Dimosthenis Anthomelidis
7/26/2016

Overview
Math Background
MPEG Family
MPEG-4 Overview
Packet-Video Technology

Math Background - DCT
The Discrete Cosine Transform (DCT) is the key method of the MPEG compression standards
The DCT helps separate the image into parts of differing importance
Similar to the DFT: it transforms an image from the spatial domain to the frequency domain
2-dimensional DCT on 8x8-pixel blocks of the source picture (four luminance blocks per 16x16-pixel macroblock)

Math Background – DCT (2)
A is the input image; A(i,j) is the intensity of pixel (i,j)

Math Background – DCT (3)
Coefficients for the output “image” B: B(k1,k2) is the DCT coefficient
Most of the signal energy lies at low frequencies
These appear in the upper left corner of the DCT
[Figure: DCT coefficient matrix, axis label "Increasing Horizontal Frequency"]

MPEG Family
Moving Picture Experts Group (MPEG): experts dedicated to standards for digital audio and video
History: MPEG-1 and MPEG-2 have given rise to:
  o DVD
  o Digital TV
  o Digital Audio Broadcasting
  o MP3 codecs (coder-decoder)
MPEG-4
More to come: MPEG-7 (Content Description)

MPEG-4 Overview
Formally ISO/IEC international standard 14496
Audio-visual coding standard
Versions 1 & 2
Builds on the success of:
  o Digital TV, interactive graphics
Adopts an object-based audiovisual representation model
Satisfies:
  o Authors (reusability, owner rights)
  o End-users (interaction with content, multimedia for mobile users)

MPEG-4 Parts
Part 1: Systems
Part 2: Visual
Part 3: Audio
Part 4: Conformance Testing
Part 5: Reference Software
Part 6: DMIF (Delivery Multimedia Integration Framework)
Part 7: Optimised software for MPEG-4 tools

Major Forces
Coding: units of audio and visual content as media objects
Object-oriented paradigm
Integration: natural and
synthetic AV objects
Scene is modeled as a composition of objects
Multiplexing and synchronization of data associated with media objects
Interactivity: locally at the receiver or via a back channel
High compression
Mobility (low bit-rate) & real-time data
Identification and protection of intellectual property

Convergence of 3 worlds
[Figure: Convergence]

Functionalities
Content-based interactivity
  o User is able to select one object in the scene
  o Hybrid natural and synthetic data coding
Compression
  o Improved coding efficiency
  o Multiple concurrent data streams
    – 3D natural ‘objects’, virtual reality
Universal access
  o Robustness in error-prone environments
  o Content-based scalability
    – Fine granularity in content

Part 1: Systems
Framework for integrating natural and synthetic components of complex multimedia scenes.

Part 1: Systems (2)
[Figure: Systems block diagram. Labels: Network, TransMux (ex: MPEG-2 Transport), FlexMux, DAI, Elementary Streams, Decoding, Primitive AV Objects, Object Descriptor, Scene Description Information, Composition and Rendering, Audiovisual Interactive Scene, Display & local user interaction]

Systems Structure
[Figure: layer stack. Labels: TransMux Layer, FlexMux Tool, DAI, Sync. Layer, ESI, Compression Layer, Composition]

Media Objects
Content-based AV representation
  o AVO (AV objects)
    – VOC (Video Object Component), AOC (Audio Object Component)
    – User may access it
AV scene:
  o Composition of several media objects organized in hierarchical fashion
    – Leaves: primitive media objects
      • Still images, video objects, etc.
Objects are placed in elementary streams (ESs)
VOP (Video Object Plane): 2D VOC time sample with arbitrary shape.
Contains motion parameters, shape info, texture data

Media Objects (2)
Sprites: used to code unchanging backgrounds
A scalable object can have an ES for basic quality info plus one or more enhancement layers (Video Object Layers)
Visual objects in a scene are described mathematically and given a position in 2D or 3D space
Object descriptor: identifies all streams associated with one media object, informing the system which ESs belong to an object
  o It has its own ES
BIFS (Binary Format for Scenes): language for describing and dynamically changing the scene. Borrows concepts from VRML.

MPEG-4 scene
[Figure]

Composition
Task of combining all of the separate entities that make up the scene.
Multimedia scenes are conceived as hierarchical structures represented as a graph. Each leaf is a media object.
The graph structure isn’t necessarily static.
Composition info is delivered in one elementary stream

Multiplex (1)
3-layer multiplex:
  o Sync layer: adds info for timing and synchronization
  o FlexMux layer: multiplexes streams with different characteristics
  o TransMux layer: adapts the multiplexed stream to the particular network characteristics
Elementary streams are packetized, adding headers with timing info (clock references) and synchronization data (timestamps). These packets make up the synchronization layer

Multiplex (2)
Flexible multiplex layer: intermediate multiplex layer. Groups together several low-bit-rate streams (with similar QoS requirements).
Transport multiplex layer: specific to the characteristics of the transport network.
No specific transport mechanism is defined:
  o Existing transport formats (ATM, RTP) suffice

Multiplex (3)
[Figure]

Multiplex (4)
[Figure]

Synchronization layer
Associates timing and synchronization information with the streams
Elementary streams (ESs) consist of access units: portions of the stream with a specific decoding and composition time.
ESs are split into SL packets, not necessarily matching the size of the access units. An attached header contains:
  o Sequence number
  o Object clock reference: a time stamp used to reconstruct the time base for the object (speed of the encoder clock)
  o Decoding time stamp: identifies the correct time to decode the access unit
  o Composition time stamp: identifies the correct time to render a decoded access unit

MP4 File Format
Reliable way for users to exchange complete files of MPEG-4 content

MPEG-J
MPEG-4 specific subset of Java
Defines interfaces to elements in the scene, network resources, terminal resources
Personal Profile: lightweight package for personal devices
  o Network
  o Scene
  o Resource

Part 2 – Visual
“Rectangular” video objects
Arbitrarily shaped objects
  o Binary shape: an encoded pixel either is or is not part of the object in question (on/off). Useful for low-bit-rate environments
  o Alpha shape: for higher-quality content, each pixel is assigned a value for its transparency

Visual (Cont’d)
Like MPEG-2, MPEG-4 defines only the decoding process. Encoding processes are left to the marketplace.
Provides users a new level of interaction with visual content
Manipulate objects
Error robustness
Scalability: the minimum subset of the bitstream that can be decoded is the base layer.
Each of the other bitstreams is called an enhancement layer
Optimized for 3 bitrate ranges:
  o < 64 kbps (wireless scenario)
  o 64-384 kbps
  o 384 kbps-4 Mbps

Error Resilience
Very important for mobile communications because of error burstiness
Resynchronization
  o Errors are localized through the use of resynchronization markers inserted in the bitstream. On an error, the decoder skips data until the next marker and restarts from that point. Markers are inserted after a constant number of coded bits (“video packets”).
Data partitioning: motion info is separated from texture info. If an error occurs in the texture bits, the decoded motion info is still used.
Header extension code: redundant info that is vital for correct decoding of the video
Reversible variable-length code: codewords can be decoded in both the forward and backward directions. After an error, it is possible to decode portions of the corrupted bitstream in reverse order.

Scalability
Use of multiple VOLs (base layer, enhancement layer)
Spatial scalability
  o Enhancement layer improves spatial resolution
Temporal scalability
  o Offers higher frame rate. Improves smoothness of motion (temporal resolution)
Generalized framework: a scalability preprocessor implements the desired scalability. For spatial scalability, it downsamples the input VOPs to produce the base layer, which is encoded by the base-layer encoder. The reconstructed base layer is up-sampled by a mid-processor. The difference from the original VOP is the input to the enhancement encoder.

Hold that smile
Map images onto computer-generated shapes.
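
The generalized spatial-scalability framework from the Scalability slide (downsample, encode the base layer, up-sample the reconstruction, encode the difference) can be sketched roughly as follows. This is only an illustration under stated assumptions: the function names, the 2x2 averaging, the pixel-replication up-sampling, and the uniform quantizer standing in for the real base-layer and enhancement encoders are all hypothetical and are not taken from the MPEG-4 specification.

```python
def downsample(img):
    """Scalability preprocessor (assumed): halve resolution by 2x2 averaging.
    Expects an image (list of rows) with even width and height."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def upsample(img):
    """Mid-processor (assumed): double resolution by pixel replication."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def quantize(img, step):
    """Toy stand-in for an encoder/decoder pair: uniform quantization."""
    return [[round(v / step) * step for v in row] for row in img]


def encode_spatial(vop, base_step=16, enh_step=4):
    """Return (reconstructed base layer, enhancement layer) for one VOP."""
    base = quantize(downsample(vop), base_step)
    predicted = upsample(base)
    # The enhancement encoder sees the difference from the original VOP.
    residual = [[o - p for o, p in zip(orow, prow)]
                for orow, prow in zip(vop, predicted)]
    return base, quantize(residual, enh_step)


def decode_spatial(base, enhancement=None):
    """Decode the base layer alone, or base plus enhancement if available."""
    up = upsample(base)
    if enhancement is None:
        return up
    return [[p + e for p, e in zip(prow, erow)]
            for prow, erow in zip(up, enhancement)]


def mean_abs_error(a, b):
    """Average absolute pixel difference between two equally sized images."""
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# A 4x4 test "VOP"; a low-capability decoder uses only the base layer.
vop = [[float(16 * y + 3 * x) for x in range(4)] for y in range(4)]
base, enh = encode_spatial(vop)
print("base only:", mean_abs_error(vop, decode_spatial(base)))
print("base + enhancement:", mean_abs_error(vop, decode_spatial(base, enh)))
```

A receiver that gets only the base layer still obtains a usable low-resolution picture, while one that also gets the enhancement layer reduces the reconstruction error; the clip is encoded once in both cases.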

Applications
Criteria
  o Timing constraints
    – Real-time or non real-time
  o Symmetry of transmission facilities
  o Interactivity
Non real-time, non-symmetric, non-interactive
  o Multimedia broadcasting for mobile devices
Manufacturers of mobile equipment and providers of mobile services have been adopting MPEG-4

Mobile Interactive Multimedia
Mobile computing = portable computer + wireless communication
Limitations:
  o Limited computation capacity
  o Narrow bandwidth
  o Unreliable channel
Requirements
  o High compression
  o Error resilience

Thinking small
Moving video is possible at very low bit-rates for mobile devices, even at 10 kb/s (GSM’s data rate)
Use of scalable objects: providers need to encode clips only once. A base layer conveys all the info in some basic quality
MPEG-4 hardware decoders and encoders already exist to bring video to mobile devices (e.g. Toshiba)

Packet Video Technology
Visual communication “anywhere – anytime”
Compliant with the MPEG-4 visual spec.
Optimized for single rectangular objects, based on motion compensation and DCT coding of macroblocks
Scalability: allows subsets of a single bitstream to go to a receiver.
You encode once and deliver to multiple decoders with different capabilities

Video Encoding
[Figure]

Rate Control
Rate control: multiple-layer bitstreams
  o Temporal scalability – adding enhancement to a base layer
  o Spatial scalability – adding enhancement with differential images

Video Decoding
[Figure]

PV error-resilient decoding
[Figure]

Products
Software-based solutions
PVPlayer: decoder application for rendering
PVServer: server application
PVAuthor: encoder; creates an MP4 file format bit stream

Conclusion
Extensive tests show that MPEG-4 achieves better or similar image quality at all targeted bitrates, with the bonus of added functionalities.

References
http://www.cselt.it/mpeg/
http://www.packetvideo.com
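
Supplement to the Math Background slides: the sketch below computes a 2-dimensional DCT of a small block and measures how much of the signal energy ends up in the upper left (low-frequency) corner. It reuses the slides' notation, with A as the input block and B(k1,k2) as the coefficients, but the orthonormal DCT-II scaling, the block size of 8, and the function names are assumptions chosen for illustration; the slides' own formula is not reproduced in the text, so its normalization may differ.

```python
import math


def dct_2d(A):
    """Orthonormal 2-D DCT-II of a square block A (list of rows).
    Returns B, where B[k1][k2] is the coefficient for vertical
    frequency k1 and horizontal frequency k2."""
    n = len(A)

    def alpha(k):
        # Scaling that makes the transform orthonormal (an assumed convention).
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    B = [[0.0] * n for _ in range(n)]
    for k1 in range(n):
        for k2 in range(n):
            s = 0.0
            for i in range(n):
                for j in range(n):
                    s += (A[i][j]
                          * math.cos(math.pi * (2 * i + 1) * k1 / (2 * n))
                          * math.cos(math.pi * (2 * j + 1) * k2 / (2 * n)))
            B[k1][k2] = alpha(k1) * alpha(k2) * s
    return B


def low_frequency_fraction(B, corner=2):
    """Fraction of total energy held by the corner x corner upper-left block."""
    total = sum(v * v for row in B for v in row)
    low = sum(B[k1][k2] ** 2 for k1 in range(corner) for k2 in range(corner))
    return low / total


# A smooth 8x8 intensity ramp, typical of slowly varying image content.
A = [[float(i + j) for j in range(8)] for i in range(8)]
B = dct_2d(A)
print("energy fraction in upper-left 2x2:", low_frequency_fraction(B))
```

For smooth content like this ramp, nearly all of the energy lands in a handful of low-frequency coefficients, which is why a coder can quantize the remaining coefficients coarsely or drop them.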