Integrating Student Learning into Program Review
Barbara Wright, Associate Director, WASC

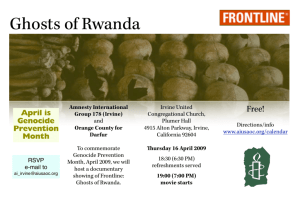


bwright@wascsenior.org
February 1, 2008
Retreat on Student Learning and Assessment, Irvine

Assessment & Program Review: related but different
Program review typically emphasizes inputs, e.g.
- Mission statement, program goals
- Faculty, their qualifications
- Students, enrollment levels, qualifications
- Library, labs, technology, other resources
- Financial support

Assessment & Program Review: related but different, cont.
Program review typically emphasizes processes, e.g.
- Faculty governance
- Curriculum review
- Planning
- Follow-up on graduates
- Budgeting
- And yes, assessment may be one of these

Assessment & Program Review: related but different, cont.
Program review typically emphasizes indirect indicators of student learning and academic quality, e.g.
- Descriptive data
- Surveys of various constituencies
- Existence of relationships, e.g. with area businesses, professional community
Program review has traditionally neglected actual student learning outcomes.

Assessment & Program Review: related but different, cont.
PR is typically conceived as
- Data-gathering
- Looking at the past 5-8 years
- Reporting after the fact where the program has been
- Using PR to garner resources, or at least protect what the program has
- Projecting needs into the future
- Expressing "quality" & "improvement" in terms of a case for additional inputs

Capacity vs. Educational Effectiveness for Programs
Capacity questions:
- What does the program have in the way of inputs, processes, and evidence of outputs or outcomes?
- What does it need, and how will it get what it needs?
EE questions:
- How effectively do the inputs and processes contribute to desired outcomes?
- How good are the outputs? The student learning?

Assessment & Program Review: related but different
Assessment is all about
- Student learning & improvement at individual, program & institutional levels
- Articulation of specific learning goals (as opposed to program goals, e.g. "We will place 90% of graduates in their field.")
- Gathering of direct, authentic evidence of learning (as opposed to indirect evidence, descriptive data)

Assessment & Program Review: related but different, cont.
Assessment is all about
- Interpretation & use of findings to improve learning & thus strengthen programs (as opposed to reporting of data to improve inputs)
- A future orientation: here's where we are, and here's where we want to go in student learning over the next 3-5 years
- Understanding the learning "problem" before reaching for a "solution"

Assessment & Program Review: related but different, cont.
Assessment of student learning and program review are not the same thing. However, there is a place for assessment as a necessary and significant input in program review. We should look for
- A well-functioning process
- Key learning goals
- Standards for student performance
- A critical mass of faculty (and students) involved
- Verifiable results, and
- Institutional support

The Assessment Loop
1. Goals, questions
2. Gathering evidence
3. Interpretation
4. Use

The Assessment Loop: Capacity Questions
1. Does the program have student learning goals, questions?
2. Do they have methods, processes for gathering evidence? Do they have evidence?
3. Do they have a process for systematic, collective analysis and interpretation of evidence?
4. Is there a process for use of findings for improvement? Is there admin. support, planning, budgeting? Rewards for faculty?

The Assessment Loop: Effectiveness Questions
1. How well do they achieve their student learning goals, answer questions?
2. How aligned are the methods? How effective are the processes? How complete is the evidence?
3. How well do processes for systematic, collective analysis and interpretation of evidence work? What have they found?
4. What is the quality of follow-through on findings for improvement? Is there improvement? How adequate, effective are admin. support, planning, budgeting? Rewards for faculty?

Don't confuse program-level assessment and program review
- Program-level assessment means we look at learning on the program level (not just individual student or course level) and ask what all the learning experiences of a program add up to, at what standard of performance (results).
- Program review looks for program-level assessment of student learning but goes beyond it, examining other components of the program (mission, faculty, facilities, demand, etc.)

What does WASC want? Both!
- Systematic, periodic program review, including a focus on student learning results as well as other areas (inputs, processes, products, relationships)
- An improvement-oriented student learning assessment process as a routine part of the program's functioning

Institutionalizing Assessment: 2 aspects
- The PLAN for assessment (i.e. shared definition of the process, purpose, values, vocabulary, communication, use of findings)
- The STRUCTURES and RESOURCES that make the plan doable

How to institutionalize
- Make assessment a freestanding function
- Attach to an existing function, e.g.
  - Accreditation
  - Academic program review
  - Annual reporting process
  - Center for Teaching Excellence
  - Institutional Research

Make assessment freestanding: positives and negatives
Positives:
- Maximum flexibility
- Minimum threat, upset
- A way to start
Negatives:
- Little impact
- Little sustainability
- Requires formalization eventually, e.g. Office of Assessment

Attach to Office of Institutional Research: positives and negatives
Positives:
- Strong data gathering and analysis capabilities
- Responds to external expectations
- Clear responsibility
- IR has resources
- Faculty not "burdened"
Negatives:
- Perception: assessment = data gathering
- Faculty see little or no responsibility
- Faculty uninterested in reports
- Little or no use of findings

Attach to Center for Teaching Excellence: positives and negatives
Positives:
- Strong impact possible
- Ongoing, supported
- Direct connection to faculty, classroom, learning
- Chance for maximum responsiveness to "use" phase
Negatives:
- Impact depends on how broadly assessment is done
- No enforcement
- Little/no reporting, communicating
- Rewards, recognition vary, may be lip service

Attach to annual report: positives and negatives
Positives:
- Some impact (depending on stakes)
- Ongoing
- Some compliance
- Habit, expectation
- Closer connection to classroom, learning
- Cause/effect possible
- Allows flexibility
Negatives:
- Impact depends on how seriously, how well AR is done
- No resources
- Reporting, not improving, unless specified
- Chair writes; faculty involvement varies

Attach to accreditation: positives and negatives
Positives:
- Maximum motivation
- Likely compliance
- Resources available
- Staff, faculty assigned
- Clear cause/effect
Negatives:
- Resentment of external pressure
- Us/them dynamic
- Episodic, not ongoing
- Reporting, gaming, not improving
- Little faculty involvement
- Little connection to the classroom, learning
- Main focus: inputs, process

Attach to program review: positives and negatives
Positives:
- Some impact (depending on stakes)
- Some compliance
- Some resources available
- Staff, faculty assigned
- Cause/effect varies
Negatives:
- Impact depends on how seriously, how well PR is done
- Episodic, not ongoing
- Inputs, not outcomes
- Reporting, not improving
- Generally low faculty involvement
- Anxiety, risk-aversion
- Weak connection to the classroom, learning

How can we deal with the disadvantages?
- Strong message from administration: PR is serious, has consequences (bad and good)
- Provide attentive, supportive oversight
- Redesign PR to be continuous
- Increase weighting of assessment in overall PR process
- Involve more faculty, stay close to classroom, program
- Focus on outcomes, reflection, USE
- Focus on improvement (not just "good news") and REWARD IT

How can we increase weighting of learning & assessment in PR?
E.g.,
- From: Optional part
  To: Required
- From: One small part of total PR process
  To: Core of the process (so defined in instructions)
- From: "Assessment" vague, left to program
  To: Assessment expectations defined
- From: Various PR elements of equal value (or no value indicated)
  To: Points assigned to PR elements; student learning gets 50% or more
- From: Little faculty involvement
  To: Broad involvement

Assessment serves improvement and accountability
- A well-functioning assessment effort systematically improves curriculum, pedagogy, and student learning; this effect is documented.
At the same time,
- The presence of an assessment effort is an important input & indicator of quality,
- The report on beneficial effects of assessment serves accountability; and
- Assessment findings support $ requests

New approaches to PR/assessment
- Create a program portfolio
- Keep program data continuously updated
- Do assessment on annual cycle
- Enter assessment findings, uses, by semester or annually
- For periodic PR, review portfolio and write reflective essay on student AND faculty learning