On Speaker-Specific Prosodic Models for Automatic Dialog Act Segmentation of Multi-Party Meetings


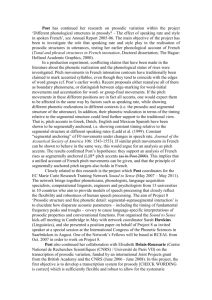

Jáchym Kolář (1,2), Elizabeth Shriberg (1,3), Yang Liu (1,4)

(1) International Computer Science Institute, Berkeley, USA; (2) University of West Bohemia in Pilsen, Czech Republic; (3) SRI International, USA; (4) University of Texas at Dallas, USA

09/20/2006 Kolář et al.: On Speaker-Specific Prosodic Models for ...

Why automatic DA segmentation?

• Standard STT systems output a raw stream of words, leaving out structural information such as sentence and Dialog Act (DA) boundaries
• This causes problems for human readability
• It also causes problems for downstream natural language processing techniques that require formatted input

Goal and Task Definition

• Goal: Dialog Act (DA) segmentation of meetings
• Task definition: 2-way classification in which each inter-word boundary is labeled as either a within-DA boundary or a boundary between DAs
• Example: “no jobs are still running ok” contains 3 DAs: “No.” + “Jobs are still running.” + “OK.”
• Evaluation metric: “boundary error rate”, $E = \frac{\#\,\text{incorrectly classified word boundaries}}{\#\,\text{words}}$

Approach: Explore Speaker-Specific Prosody

• Past work has used both lexical and prosodic features, but collapsed over speakers
• Speakers appear to differ, however, in both feature types, especially in spontaneous speech
• Meeting applications: the speaker is often known, or at least recorded on one channel, and often participates in ongoing meetings, which gives a good opportunity for modeling
• Speaker adaptation has been used successfully in the cepstral domain for ASR
• This study takes a first look specifically at prosodic features for the DA boundary task

Three Questions

1) Do individual speakers benefit from modeling more than simply pause information?
2) Do individual speakers differ enough from the overall speaker model to benefit from a prosodic model trained on only their speech?
3) How do speakers differ in terms of prosody usage in marking DA boundaries?

Data and Experimental Setup

• ICSI meeting corpus: multichannel conversational speech annotated for DAs
• Baseline speaker-independent model trained on 567k words
• Speaker-specific experiments use the 20 most frequent speakers in terms of total words (7.5k to 165k words each)
• 17 males, 3 females
• 12 natives, 8 nonnatives

Data and Experimental Setup II.

• Each speaker’s data: ~70% training, ~30% testing
• Jackknife instead of a separate development set: the 1st half of the test data is used to tune weights for the 2nd half, and vice versa
• Tested on forced alignments rather than on ASR hypotheses

Prosodic Features and Classifiers

• Features: 32 for each inter-word boundary
  • Pause: after the current, previous, and following word
  • Duration: phone-normalized durations of vowels, final rhymes, and words; no raw durations
  • Pitch: F0 min, max, mean, slopes, and differences and ratios across word boundaries; raw values plus piecewise-linear (PWL) stylized contour
  • Energy: max, min, and mean frame-level RMS values, both raw and normalized
• Classifiers: CART-style decision trees with ensemble bagging

Pause-only vs. Richer Set of Prosodic Features

• Compare the speaker-independent (SI) model using pause features only (SI-Pau) against the SI model using all 32 prosodic features (SI-All)
• SI-All is significantly better for 19 of 20 speakers
• The relative error rate reduction from prosody is not correlated with the amount of training data

Pause-only vs. Rich Prosody: Relative Error Reduction

[Figure: relative error reduction [%] by speaker (speakers 1 to 20); legend: SI-All, SI-Pau; nonnative speakers marked.]

Speaker-Independent (SI) vs. Speaker-Dependent (SD) Models

• We compare SI, SD, and interpolated SI+SD models
• SI+SD is defined as $P_{SI+SD}(X) = \lambda\, P_{SI}(X) + (1 - \lambda)\, P_{SD}(X)$, where $\lambda$ is the interpolation weight
• A significantly improved result would suggest that a speaker’s prosodic marking of boundaries differs from the baseline SI model

Effects of Adding SD Information

• SD models are much smaller than the SI model; as expected, SI is better than SD alone for most subjects (though for some, SD is better!)
• For many subjects, there is no gain from adding SD information (no SD information, or not enough data?)
• For 7 of 20 speakers, however, SD or SI+SD is better than SI; 5 of these improvements are statistically significant
• Improvement by SD is not correlated with amount of data, error rate, chance error, proficiency in English, or gender
• SD often helps in “unusual” prosody situations: hesitation, lip smack, long pause, emotions
• SD helps more in preventing false alarms than misses

Audio Examples: SD Helps

Example of preventing a FALSE ALARM: “and another thing that we did also is that |FA| we have all this training data … ”
SD does not false alarm after the 2nd “that” because it ‘knows’ this nonnative speaker has a limited F0 range and often falls in pitch before hesitations.

Example of preventing a MISS: “this is one |.| and I think that's just fine |.|”
SD finds the DA boundary after “one”, despite the short pause, probably based on the speaker’s prototypical pitch reset.

Feature Usage, Natives vs. Nonnatives

• Feature usage: how many times a feature is queried in the tree, weighted by the number of samples it affects
• 5 groups of features:
  • Pause at boundary
  • Near pause
  • Duration
  • Pitch
  • Energy
• Compare the SD feature usage of improved speakers with the SI distribution

Feature Usage: Natives vs. Nonnatives

[Figure: two panels of feature usage (%) by feature group (pause, duration, pitch, energy, near pause). Natives panel: SI, me011, fe016. Nonnatives panel: SI, mn015, mn007, mn005, fn002.]

Summary

• Prosodic features beyond pause provide improvement for 19 of 20 frequent speakers
• For ~30% of the speakers studied, simply interpolating the large SI prosodic model with a small SD model yielded improvement
• Amount of data, error rate, chance error, proficiency in English, and gender are not correlated with improvement by SD
• Some interesting observations: nonnative speakers differ from natives in feature usage patterns, and SD information helps in “unusual” prosody situations and in preventing false alarms

Conclusions and Future Work

• Results are interesting and suggestive, but as yet inconclusive
• SD prosody modeling significantly benefits some speakers, but predicting who they will be is still an open question
• Many issues remain to be addressed, especially joint modeling with lexical features and a better integration approach
• The approach is interesting to explore for other domains such as broadcast news, where segmentation is important and some speakers occur repeatedly
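As an illustration of how the slides' evaluation pieces fit together, here is a minimal Python sketch of the boundary error rate, the SI+SD interpolation, and the jackknife weight tuning (tune on one half of the test data, apply to the other half, and vice versa). It is not the authors' implementation. The function names, the weight name `lam`, the 0.5 decision threshold, and the candidate weight grid are all assumptions made for the example, and the posteriors in the usage note are made up.

```python
from typing import List, Sequence


def boundary_error_rate(predicted: Sequence[int], reference: Sequence[int]) -> float:
    """Incorrectly classified word boundaries divided by the number of words."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    errors = sum(p != r for p, r in zip(predicted, reference))
    return errors / len(reference)


def interpolate(p_si: Sequence[float], p_sd: Sequence[float], lam: float) -> List[float]:
    """Per-boundary interpolation: lam * P_SI + (1 - lam) * P_SD."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(p_si, p_sd)]


def classify(posteriors: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Label 1 (DA boundary) when the posterior reaches the threshold (assumed 0.5)."""
    return [1 if p >= threshold else 0 for p in posteriors]


def tune_lambda(p_si, p_sd, reference, grid) -> float:
    """Return the grid weight with the lowest error; ties go to the earliest value."""
    return min(
        grid,
        key=lambda lam: boundary_error_rate(
            classify(interpolate(p_si, p_sd, lam)), reference
        ),
    )


def jackknife_error(p_si, p_sd, reference, grid) -> float:
    """Tune the weight on each half of the test data and score the other half."""
    mid = len(reference) // 2
    first, second = slice(0, mid), slice(mid, None)
    errors = 0
    for tune, test in ((first, second), (second, first)):
        lam = tune_lambda(p_si[tune], p_sd[tune], reference[tune], grid)
        hyp = classify(interpolate(p_si[test], p_sd[test], lam))
        errors += sum(h != r for h, r in zip(hyp, reference[test]))
    return errors / len(reference)
```

For the slide's example “no jobs are still running ok”, the reference labels after each word would be `[1, 0, 0, 0, 1, 1]`. Calling `jackknife_error(p_si, p_sd, reference, grid=[0.0, 0.5, 1.0])` with two lists of per-boundary posteriors then gives the interpolated model's error, and `boundary_error_rate(classify(p_si), reference)` gives the SI-only error to compare it against.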
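The feature usage measure from the slides (how many times a feature is queried in the tree, weighted by the number of samples it affects) can be sketched for a single tree as follows. This is a hedged illustration only: the `Node` class, the feature names, the mapping from features to groups, and the normalization of usage to sum to 1 are assumptions. How the authors aggregated usage over bagged trees is not stated in the slides.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Node:
    """Hypothetical decision-tree node; a leaf has feature=None."""
    n_samples: int
    feature: Optional[str] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def feature_usage(root: Node) -> Dict[str, float]:
    """Add n_samples for every node that queries a feature, then normalize to 1."""
    counts: Dict[str, float] = defaultdict(float)
    stack = [root]
    while stack:
        node = stack.pop()
        if node.feature is None:  # leaves query no feature
            continue
        counts[node.feature] += node.n_samples
        stack.extend(c for c in (node.left, node.right) if c is not None)
    total = sum(counts.values())
    return {name: value / total for name, value in counts.items()} if total else {}


def group_usage(usage: Dict[str, float], groups: Dict[str, str]) -> Dict[str, float]:
    """Sum per-feature usage into feature groups (e.g. pause, pitch, duration)."""
    grouped: Dict[str, float] = defaultdict(float)
    for name, share in usage.items():
        grouped[groups[name]] += share
    return dict(grouped)
```

With a toy tree whose root queries a pause feature on 100 samples, a child queries a pitch feature on 40 samples, and a grandchild queries a duration feature on 10 samples, the usage shares are 100/150, 40/150, and 10/150. Comparing such a grouped distribution for an SD tree against the one for the SI tree is the kind of comparison the feature usage slides describe.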